Abstract

Dutertre and de Moura developed a simplex-based solver for linear rational arithmetic that has an incremental interface and provides unsatisfiable cores. We present a verification of their algorithm in Isabelle/HOL that significantly extends previous work by Spasić and Marić. Based on the simplex algorithm we further formalize Farkas’ Lemma. With this result we verify that linear rational constraints are satisfiable over \(\mathbb {Q}\) if and only they are satisfiable over \(\mathbb {R}\). Hence, our verified simplex algorithm is also able to decide satisfiability in linear real arithmetic.

This research was supported by the Austrian Science Fund (FWF) project Y757. The authors are listed in alphabetical order regardless of individual contributions or seniority.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

CeTA [7] is a verified certifier for checking untrusted safety and termination proofs from external tools such as AProVE [12] and T2 [6]. To this end, CeTA also contains a verified SAT-modulo-theories (SMT) solver, since these untrusted proofs contain claims of validity of formulas. It is formalized as a deep embedding and is generated via code generation.

The ultimate aim of this work is the optimization of the existing verified SMT solver, as it is quite basic: The current solver takes as input a quantifier free formula in the theory of linear rational arithmetic, translates it into disjunctive normal form (DNF), and then tries to prove unsatisfiability for each conjunction of literals with the verified simplex implementation of Spasić and Marić [16]. This basic solver has at least two limitations: It only works on small formulas, since the conversion to DNF often leads to an exponential blowup in the formula size; and the procedure is restricted to linear rational arithmetic, i.e., the existing formalization only contain results on satisfiability over \(\mathbb {Q}\), but not over \(\mathbb {R}\).

Clearly, instead of the expensive DNF conversion, the better approach is to verify an SMT solver that is based on DPLL(T) or similar algorithms [4, 11]. Although there has been recent success in verifying a DPLL-based SAT solver [2], for DPLL(T), a core component is missing, namely a powerful theory solver.

Therefore, in this paper we will extend the formalization of the simplex algorithm due to Spasić and Marić [16]. This will be an important milestone on the way to obtain a fully verified DPLL(T)-based SMT solver. To this end, we change the verified implementation and the existing soundness proofs in such a way that minimal unsatisfiable cores are computed instead of the algorithm merely indicating unsatisfiability. Moreover, we provide an incremental interface to the simplex method, as required by a DPLL(T) solver, which permits the incremental assertion of constraints, backtracking, etc. Finally, we formalize Farkas’ Lemma, an important result that is related to duality in linear programming. In our setting, we utilize this lemma to formally verify that unsatisfiability of linear rational constraints over \(\mathbb {Q}\) implies unsatisfiability over \(\mathbb {R}\). In total, we provide a verified simplex implementation with an incremental interface, that generates minimal unsatisfiable cores over \(\mathbb {Q}\) and \(\mathbb {R}\).

We base our formalization entirely on the incremental simplex algorithm described by Dutertre and de Moura [10]. This paper was also the basis of the existing implementation by Spasić and Marić, of which the correctness has been formalized in Isabelle/HOL [14].

Although the sizes of the existing simplex formalization and of our new one differ only by a relatively small amount (8143 versus 11167 lines), the amount of modifications is quite significant: 2940 lines have been replaced by 5964 new ones. The verification of Farkas’ Lemma and derived lemmas required another 1647 lines. It mainly utilizes facts that are proved in the existing simplex formalization, but it does not require significant modifications thereof.

The remainder of our paper is structured as follows. In Sect. 2 we describe the key parts of the simplex algorithm of Dutertre and de Moura and its formalization by Spasić and Marić. We present the development of the extended simplex algorithm with minimal unsatisfiable cores and incremental interfaces in Sect. 3. We formalize Farkas’ Lemma and related results in Sect. 4. Finally, we conclude with Sect. 5.

Our formalization is available in the Archive of Formal Proofs (AFP) for Isabelle 2019 under the entries Simplex [13] and Farkas [5]. The Simplex entry contains the formalization of Spasić and Marić with our modifications and extensions. Our Isabelle formalization can be accessed by downloading the AFP, or by following the hyperlink at the beginning of each Isabelle code listing in Sects. 3 and 4.

Related Work. Allamigeon and Katz [1] formalized and verified an implementation of the simplex algorithm in Coq. Since their goal was to verify theoretical results about convex polyhedra, their formalization is considerably different from ours, as we aim at obtaining a practically efficient algorithm. For instance, we also integrate and verify an optimization of the simplex algorithm, namely the elimination of unused variables, cf. Dutertre and de Moura [10, end of Section 3]. This optimization also has not been covered by Spasić and Marić.

Chaieb and Nipkow verified quantifier elimination procedures (QEP) for dense linear orders and integer arithmetic [9], which are more widely applicable than the simplex algorithm. Spasić and Marić compared the QEPs with their implementation on a set of random quantifier-free formulas [16]. In these tests, their (and therefore our) simplex implementation outperforms the QEPs significantly. Hence, neither of the formalizations subsumes the other.

There is also work on verified certification of SMT proofs, where an untrusted SMT solver outputs a certificate that is checked by a verified certifier. This is an alternative to the development of a verified SMT prover, but the corresponding Isabelle implementation of Böhme and Weber [3] is not usable in our setting, as it relies on internal Isabelle tactics, such as linarith, which are not accessible in Isabelle-generated code such as CeTA.

2 The Simplex Algorithm and the Existing Formalization

The simplex algorithm as described by Dutertre and de Moura is a decision procedure for the question whether a set of linear constraints is satisfiable over \(\mathbb {Q}\). We briefly recall the main steps.

For the sake of the formalization, it is useful to divide the work of the algorithm into phases, and to think of the data available at the beginning and end of each phase as a layer (see Fig. 1). Thus, Layer 1 consists of the set of input constraints, which are (in)equalities of the form \(p \sim c\), for some linear polynomial p, constant \(c \in \mathbb {Q}\), and \({\sim } \in \{{<},{\le },{=},{\ge },{>}\}\). Phase 1, the first preprocessing phase, transforms all constraints of Layer 1 into non-strict inequalities involving \(\delta \)-rationals, i.e. rationals in combination with a symbolic value \(\delta \), representing some small positive rational number.Footnote 1 In Phase 2, each constraint with exactly one variable is normalized; in all other constraints the linear polynomial is replaced by a new variable (a slack variable). Thus, Phase 2 produces a set of inequalities of the form \(x \le c\) or \(x \ge c\), where x is a variable (such constraints are called atoms). Finally, the equations defining the newly introduced slack variables constitute a tableau, and a valuation (a function assigning a value to each variable) is taken initially to be the all-zero function.

At this point, the preprocessing phases have been completed. At the end of Phase 2, on Layer 3, we have a tableau of equations of the form \(s_j=\sum a_i x_i\), where the \(s_j\) are slack variables, together with a set of atoms bounding both original and slack variables. The task now is to find a valuation that satisfies both the tableau and the atoms. This will be done by means of two operations, assert and check, that provide an incremental interface: assert adds an atom to the set of atoms that should be considered, and check decides the satisfiability of the tableau and currently asserted atoms. Both operations preserve the following invariant: Each variable occurs only on the left-hand or only on the right-hand side of tableau equations, and the valuation satisfies the tableau and the asserted atoms whose variables occur on the right-hand side of tableau equations.

In order to satisfy the invariant, the assert operation has to update the valuation whenever an atom is added whose variable is the right-hand side of the tableau. If this update conflicts with previously asserted atoms in an easily detectable way, assert itself can detect unsatisfiability at this point. Otherwise, it additionally recomputes the valuation of the left-hand side variables according to the equations in the tableau.

The main operation of Phase 3 is check, where the algorithm repeatedly modifies the tableau and valuation, aiming to satisfy all asserted atoms or detect unsatisfiability. The procedure by which the algorithm actually manipulates the tableau and valuation is called pivoting, and works as follows: First, it finds a tableau equation where the current valuation does not satisfy an asserted atom, A, involving the left-hand side variable, x. If no such x can be found, the current valuation satisfies the tableau and all asserted atoms. Otherwise, the procedure looks, in the same equation, for a right-hand side variable y for which the valuation can be modified so that the resulting value of x, as given by the equation, exactly matches the bound in A. If no such y can be found, the pivoting procedure concludes unsatisfiability. Otherwise, it updates the valuation for both x and y, and flips the sides of the two variables in the equation, resulting in an equation that defines y. The right-hand side of the new equation replaces all appearances of y on the right-hand side of other equations, ensuring that the invariant is maintained. Since y’s updated value may no longer satisfy the asserted atoms involving y, it is not at all clear that repeated applications of pivoting eventually terminate. However, if the choice of variables during pivoting is done correctly, it can be shown that this is indeed the case.

Consider the example in Fig. 2. The input constraints A–D are given in step 1 and converted into non-strict inequalities with \(\delta \)-rationals in the step 2. In step 3, the constraint \(2y \ge 6\) is normalized to the atom \(y \ge 3\), two slack variables \(s=2x+y\) and \(t=x - 3y\) are created, and the constraints \(2x+y \le 12\) and \(x-3y \le 2\) are simplified accordingly. The equations defining s and t then form the initial tableau, and the initial valuation \(v_0\) is the all-zero function. In step 4, the three atoms A, B and D are asserted (indicated by boldface font) and the valuation is updated accordingly. Next, the algorithm invokes check and performs pivoting to find the valuation \(v_2\) that satisfies A, B, D and the tableau. This valuation on Layer 3 assigns \(\delta \)-rationals to all variables x, y, s, t and can then be translated to a satisfying valuation over \(\mathbb {Q}\) for constraints A, B, D on Layer 1. If the incremental interface is then used to also assert the atom C (step 6), unsatisfiability is detected via check after two further pivoting operations (step 7). Hence, the constraints A–D on Layer 1 are also unsatisfiable.

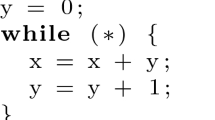

Spasić and Marić use Isabelle/HOL for the formalization, as do we for the extension. Isabelle/HOL is an interactive theorem prover for higher-order logic. Its syntax conforms to mathematical notation, and Isabelle supports keywords such as

,

,

and

and

, allowing us to state theorems in Isabelle in a way which is close to mathematical language. Furthermore, all terms in Isabelle have a well-defined type, specified with a double-colon:

, allowing us to state theorems in Isabelle in a way which is close to mathematical language. Furthermore, all terms in Isabelle have a well-defined type, specified with a double-colon:

We use Greek letters for arbitrary types. Isabelle has built-in support for the types of rational numbers (

We use Greek letters for arbitrary types. Isabelle has built-in support for the types of rational numbers (

) and real numbers (

) and real numbers (

). The type of a function

). The type of a function

from type \(\alpha \) to type \(\beta \) is specified as

from type \(\alpha \) to type \(\beta \) is specified as

. There is a set type (

. There is a set type (

), a list type (

), a list type (

), an option type (

), an option type (

with constructors

with constructors

and

and

) and a sum type (

) and a sum type (

with constructors

with constructors

and

and

). The syntax for function application is

). The syntax for function application is

. In this paper we use the terms Isabelle and Isabelle/HOL interchangeably.

. In this paper we use the terms Isabelle and Isabelle/HOL interchangeably.

Spasić and Marić proved the following main theorem about their simplex implementation

.

.

The lemma states that if

returns no valuation, then the constraints

returns no valuation, then the constraints

are unsatisfiable. If

are unsatisfiable. If

returns a valuation

returns a valuation

, then

, then

satisfies

satisfies

.

.

To prove the correctness of their algorithm they used a modular approach: Each subalgorithm (e.g. pivoting, incremental assertions) and its properties were specified in a locale, a special feature of Isabelle. Locales parameterize definitions and theorems over operations and assumptions. The overall algorithm is then implemented by combining several locales and their verified implementations. Soundness of the whole algorithm is then easily obtained via the locale structure. The modular structure of the formalization allows us to reuse, adapt and extend several parts of their formalization.

3 The New Simplex Formalization

In the following we describe our extension of the formalization of Spasić and Marić through the integration of minimal unsatisfiable cores (Sect. 3.1), the integration of an optimization during Phase 2 (Sect. 3.2) and the development of an incremental interface to the simplex algorithm (Sect. 3.3).

3.1 Minimal Unsatisfiable Cores

Our first extension is the integration of the functionality for producing unsatisfiable cores, i.e., given a set of unsatisfiable constraints, we seek a subset of the constraints which is still unsatisfiable. Small unsatisfiable cores are crucial for a DPLL(T)-based SMT solver in order to derive small conflict clauses, hence it is desirable to obtain minimal unsatisfiable cores, of which each proper subset is satisfiable. For example, in Fig. 2, \(\{A,B,C\}\) is a minimal unsatisfiable core. We will refer to this example throughout this section.

Internally, the formalized simplex algorithm represents the data available on Layer 3 in a data structure called a state, which contains the current tableau, valuation, the set of asserted atoms,Footnote 2 and an unsatisfiability flag. Unsatisfiability is detected by the check operation in Phase 3, namely if the current valuation of a state does not satisfy the atoms, and pivoting is not possible.Footnote 3 For instance, in step 7 unsatisfiability is detected as follows: The valuation \(v_3\) does not satisfy the atom \(x \ge 5 + \delta \) since \(v_3(x) = \frac{9}{2}\). The pivoting procedure looks at the tableau equation for x,

and checks whether it is possible to increase the value of x. This is only possible if the valuation of s in increased (since s occurs with positive coefficient in (1)), or if y is decreased (since y occurs with a negative coefficient). Neither is possible, because \(v_3(s)\) is already at its maximum (\(s \le 12\)) and \(v_3(y)\) at its minimum (\(y \ge 3\)). Hence, in order prove unsatisfiability on Layer 3, it suffices to consider the tableau and the atoms \(\{x \ge 5 + \delta , s \le 12, y \ge 3\}\).

We formally verify that this kind of reasoning works in general: Given the fact that some valuation v of a state does not satisfy an atom \(x \ge c\) for some left-hand side variable x, we can obtain the corresponding equation \(x = p\) of the tableau T, and take the unsatisfiable core as the set of atoms formed of: \(x \ge c\), all atoms \(y \ge v(y)\) for variables y of p with coefficient \(<0\), and all atoms \(s \le v(s)\) for variables s of p with coefficient \(>0\). The symmetric case \(x \le c\) is handled similarly by flipping signs.

We further prove that the generated cores are minimal w.r.t. the subset relation: Let A be a proper subset of an unsatisfiable core. There are two cases. If A does not contain the atom of the left-hand side variable x, then all atoms in A only contain right-hand side variables. Then by the invariant of the simplex algorithm, the current valuation satisfies both the tableau T and A. In the other case, some atom with a variable z of p is dropped. But then it is possible to apply pivoting for x and z. Let \(T'\) be the new tableau and v be the new valuation after pivoting. At this point we use the formalized fact that pivoting maintains the invariant. In particular, \(v \models T'\) and \(v \models A\), where the latter follows from the fact that A only contains right-hand side variables of the new tableau \(T'\) (note that x and z switched sides in the equation following pivoting). Since T and \(T'\) are equivalent, we conclude that v satisfies both T and A.

In the formalization, the corresponding lemma looks as follows:

The assumptions in the lemma express precisely the invariant of the simplex algorithm, and the lemma states that whenever the check operation sets the unsatisfiability flag \(\mathcal U\), then indeed a minimal unsatisfiable core is stored in the new state

. Whereas the assumptions have been taken unmodified from the existing simplex formalization, we needed to modify the formalized definition of the check operation and the datatype of states, so that

. Whereas the assumptions have been taken unmodified from the existing simplex formalization, we needed to modify the formalized definition of the check operation and the datatype of states, so that

can compute and store the unsatisfiable core in the resulting state.

can compute and store the unsatisfiable core in the resulting state.

At this point, we have assembled a verified simplex algorithm for Layer 3 that will either return satisfying valuations or minimal unsatisfiable cores. The next task is to propagate the minimal unsatisfiable cores upwards to Layer 2 and 1, since, initially, the unsatisfiable cores are defined in terms of the data available at Layer 3, which is not meaningful when speaking about the first two layers. A question that arises here is how to represent unsatisfiable cores. Taking the constraints literally is usually not a desirable solution, as then we would have to convert the atoms \(\{x \ge 5 + \delta , s \le 12,y \ge 3\}\) back to the non-strict constraints \(\{x \ge 5 \,+\, \delta , 2x \,+\, y \le 12, 2y \ge 6\}\) and further into \(\{x > 5, 2x \,+\, y \le 12, 2y \ge 6\}\), i.e., we would have to compute the inverses of the transformations in Phases 2 and 1. A far more efficient and simple solution is to use indexed constraints in the same way, as they already occur in the running example. Hence, the unsatisfiable core is just a set of indices (\(\{A,B,C\}\) in our example). These indices are then valid for all layers and do not need any conversion.

Since the formalization of Spasić and Marić does not contain indices at all, we modify large parts of the source code so that it now refers to indexed constraints, i.e., we integrate indices into algorithms, data structures, definitions, locales, properties and proofs. For instance, indexed constraints ics are just sets of pairs, where each pair consists of an index and a constraint, and satisfiability of indexed constraints is defined as

where I is an arbitrary set of indices.

In order to be able to lift the unsatisfiable core from Layer 3 to the upper layers, we have to prove that the two transformations (elimination of strict inequalities and introduction of slack variables) maintain minimal unsatisfiable cores. To this end, we modify existing proofs for these transformation, since they are not general enough initially. For instance, the soundness statement for the introduction of slack variables in Phase 2 states that if the transformation on non-strict constraints N produces the tableau T and atoms A, then N and the combination of T and A are equisatisfiable, i.e.,

However, for lifting minimal unsatisfiable cores we need a stronger property, namely that the transformation is also sound for arbitrary indexed subsets I:Footnote 4

Here, the indexed subsets in (2) are needed for both directions: given a minimal unsatisfiable core I of T and A, by the left-to-right implication of (2) we conclude that I is an unsatisfiable core of N, and it is minimal because of the right-to-left implication of (2). Note that tableau satisfiability (\(v \models T\)) is not indexed, since the tableau equations are global.

Our formalization therefore contains several new generalizations, e.g., the following lemma is the formal analogue to (2), where

is the function that introduces slack variables. In addition to the tableau

is the function that introduces slack variables. In addition to the tableau

and the indexed atoms

and the indexed atoms

, it also provides a computable function

, it also provides a computable function

to convert satisfying valuations for

to convert satisfying valuations for

and

and

into satisfying valuations for

into satisfying valuations for

.

.

After all these modifications we obtain a simplex implementation that indeed provides minimal unsatisfiable cores. The corresponding function

returns a sum type, which is either a satisfying valuation or an unsatisfiable core represented by a set of indices.

returns a sum type, which is either a satisfying valuation or an unsatisfiable core represented by a set of indices.

Here, the minimality of the unsatisfiable cores can only be ensured if the indices in the input constraints are distinct. That distinctness is essential can easily be seen: Consider the following indexed constraints \(\{(E, x \le 3), (F, x \le 5), (F, x \ge 10)\}\) where index F refers to two different constraints. If we invoke the verified simplex algorithm on these constraints, it detects that \(x \le 3\) is in conflict with \(x \ge 10\) and hence produces \(\{E,F\}\) as an unsatisfiable core. This core is clearly not minimal, however, since \(\{F\}\) by itself is already unsatisfiable.

Some technical problems arise, regarding distinctness in combination with constraints involving equality. For example, the Layer 1-constraint \((G, p = c)\) will be translated into the two constraints \((G, p \ge c)\) and \((G, p \le c)\) on Layer 2,Footnote 5 violating distinctness. These problems are solved by weakening the notion of distinct constraints on Layers 2 and 3, and strengthening the notion of a minimal unsatisfiable core for these layers: For each proper subset J of the unsatisfiable subset, each inequality has to be satisfied as if it were an equality, i.e., whenever there is some constraint \((j, p \le c)\) or \((j, p \ge c)\) with \(j \in J\), the satisfying valuation must fulfill \(p = c\).

3.2 Elimination of Unused Variables in Phase 2

Directly after creating the tableau and the set of atoms from non-strict constraints in Phase 2, it can happen that there are unused variables, i.e., variables in the tableau for which no atoms exist.

Dutertre and de Moura propose to eliminate unused variables by Gaussian elimination [10, end of Section 3] in order to reduce the size of the tableau. We integrate this elimination of variables into our formalization. However, instead of using Gaussian elimination, we implement the elimination via pivoting. To be more precise, for each unused variable x we perform the following steps.

-

If x is not already a left-hand side variable of the tableau, find any equation \(y = p\) in the tableau that contains x, and perform pivoting of x and y, so that afterwards x is a left-hand side variable of the tableau.

-

Drop the unique equation from the tableau that has x on its left-hand side, but remember the equation for reconstructing satisfying valuations.

Example 1

Consider the non-strict constraints \(\{x + y \ge 5, x + 2y \le 7, y \ge 2\}\) on Layer 2. These are translated to the atoms \(\{s \ge 5, t \le 7, y \ge 2\}\) in combination with the tableau \(\{s = x + y, t = x + 2y\}\), so x becomes an unused variable. Since x is not a left-hand side variable, we perform pivoting of x and s and obtain the new tableau \(\{x = s - y, t = s + y\}\). Then we drop the equation \(x = s - y\) resulting in the smaller tableau \(\{t = s + y\}\). Moreover, any satisfying valuation v for the variables \(\{y,s,t\}\) will be extended to \(\{x,y,s,t\}\) by defining \(v(x) := v(s) - v(y)\).

In the formalization, the elimination has been integrated into the

function of Sect. 3.1. In fact,

function of Sect. 3.1. In fact,

just executes both preprocessing steps sequentially: first, the conversion of non-strict constraints into tableau and atoms, and afterwards the elimination of unused variables as described in this section. Interestingly, we had to modify the locale-structure of Spasić and Marić at this point, since preprocessing now depends on pivoting.

just executes both preprocessing steps sequentially: first, the conversion of non-strict constraints into tableau and atoms, and afterwards the elimination of unused variables as described in this section. Interestingly, we had to modify the locale-structure of Spasić and Marić at this point, since preprocessing now depends on pivoting.

3.3 Incremental Simplex

The previous specifications of the simplex algorithm are monolithic: even if two (consecutive) inputs differ only in a single constraint, the functions

(in Sect. 2) and

(in Sect. 2) and

(in Sect. 3.1) will start the computation from scratch. Hence, they do not specify an incremental simplex algorithm, despite the fact that an incremental interface is provided on Layer 3 via assert and check.

(in Sect. 3.1) will start the computation from scratch. Hence, they do not specify an incremental simplex algorithm, despite the fact that an incremental interface is provided on Layer 3 via assert and check.

Since the incrementality of a theory solver is a crucial requirement for developing a DPLL(T)-based SMT solver, we will provide a formalization of the simplex algorithm that provides an incremental interface at each layer. Our design closely follows Dutertre and de Moura, who propose the following operations.

-

Initialize the solver by providing the set of all possible constraints. This will return a state where none of these constraints have been asserted.

-

Assert a constraint. This invokes a computationally inexpensive deduction algorithm and returns an unsatisfiable core or a new state.

-

Check a state. Performs an expensive computation that decides satisfiability of the set of asserted constraints; returns an unsat core or a checked state.

-

Extract a solution of a checked state.

-

Compute some checkpoint information for a checked state.

-

Backtrack to a state with the help of some checkpoint information.

Since a DPLL(T)-based SMT solver basically performs an exhaustive search, its performance can be improved considerably by having it keep track of checked states from which the search can be restarted in a different direction. This is why the checkpointing and backtracking functionality is necessary.

In Isabelle/HOL we specify this informal interface for each layer as a locale, which fixes the operations and the properties of that layer. For instance, the locale

is for Layer 1, where the type-variable \(\sigma \) represents the internal state for the layer, and \(\gamma \) is the checkpoint information.

is for Layer 1, where the type-variable \(\sigma \) represents the internal state for the layer, and \(\gamma \) is the checkpoint information.

The interface consists of the six operations

to invoke the algorithm, and the two invariants

to invoke the algorithm, and the two invariants

and

and

, the latter of which entails the former.

, the latter of which entails the former.

Both invariants

and

and

take the three arguments

take the three arguments

,

,

and

and

. Here,

. Here,

is the global set of indexed constraints that is encoded in the state

is the global set of indexed constraints that is encoded in the state

. It can only be set by invoking

. It can only be set by invoking

and is kept constant otherwise.

and is kept constant otherwise.

indicates the set of all constraints that have been asserted in the state

indicates the set of all constraints that have been asserted in the state

.

.

We briefly explain the specification of

and

and

and leave the usage of the remaining functionality to the reader.

and leave the usage of the remaining functionality to the reader.

For the

operation there are two possible outcomes. If the assertion of index

operation there are two possible outcomes. If the assertion of index

was successful, it returns a new state

was successful, it returns a new state

which satisfies the same invariant as

which satisfies the same invariant as

, and whose set of indices of asserted constraints contains

, and whose set of indices of asserted constraints contains

, and is otherwise the same as the corresponding set in

, and is otherwise the same as the corresponding set in

. Otherwise, the operation returns a set of indices

. Otherwise, the operation returns a set of indices

, which is a subset of the set of indices of asserted constraints (including

, which is a subset of the set of indices of asserted constraints (including

), such that the set of all

), such that the set of all

-indexed constraints is a minimal unsatisfiable core.

-indexed constraints is a minimal unsatisfiable core.

The backtracking facility works as follows. Assume that one has computed the checkpoint information

in a state

in a state

, which is only permitted if

, which is only permitted if

satisfies the stronger invariant for some set of indices

satisfies the stronger invariant for some set of indices

. Afterwards, one may have performed arbitrary operations and transitioned to a state

. Afterwards, one may have performed arbitrary operations and transitioned to a state

corresponding to a superset of indices

corresponding to a superset of indices

. Then, solely from

. Then, solely from

and

and

, one can compute via

, one can compute via

a new state

a new state

that corresponds to the old set of indices

that corresponds to the old set of indices

. Of course, the implementation should be done in such a way that the size of

. Of course, the implementation should be done in such a way that the size of

is small in comparison to the size of

is small in comparison to the size of

; in particular,

; in particular,

should not be

should not be

itself. And, indeed, our implementation behaves in the same way as the informally described algorithm by Dutertre and de Moura: for a checkpoint

itself. And, indeed, our implementation behaves in the same way as the informally described algorithm by Dutertre and de Moura: for a checkpoint

of state

of state

we store the asserted atoms of the state

we store the asserted atoms of the state

, but neither the valuation nor the tableau. These are taken from the state

, but neither the valuation nor the tableau. These are taken from the state

when invoking

when invoking

.

.

In order to implement the incremental interface, we take the same modular approach as Spasić and Marić, namely that for each layer and its corresponding Isabelle locale, we rely upon the existing functionality of the various phases, together with the interface of the lower layers, to implement the locale.

In our case, a significant part of the work has already been done via the results described in Sect. 3.1: most of the generalizations that have been performed in order to support indexed constraints, play a role in proving the soundness of the incremental simplex implementation. In particular, the generalizations for Phases 1 and 2 are vital. For instance, the set of indices

in lemma

in lemma

on page 9 can not only be interpreted as an unsatisfiable core, but also as the set of currently asserted constraints. Therefore,

on page 9 can not only be interpreted as an unsatisfiable core, but also as the set of currently asserted constraints. Therefore,

allows us to convert a satisfying valuation on Layer 2 into a satisfying valuation on Layer 1 for the currently asserted constraints that are indexed by

allows us to convert a satisfying valuation on Layer 2 into a satisfying valuation on Layer 1 for the currently asserted constraints that are indexed by

. Consequently, the internal state of the simplex algorithm on Layer 1 not only stores the state of Layer 3 as it is described at the beginning of Sect. 3.1, but additionally stores the function

. Consequently, the internal state of the simplex algorithm on Layer 1 not only stores the state of Layer 3 as it is described at the beginning of Sect. 3.1, but additionally stores the function

, in order to compute satisfying valuations on Layer 1.

, in order to compute satisfying valuations on Layer 1.

We further integrate and prove the correctness of the functionality of checkpointing and backtracking on all layers, since these features have not been formalized by Spasić and Marić. For instance, when invoking

on Layer 3 with

on Layer 3 with

, we obtain a new state that contains the tableau

, we obtain a new state that contains the tableau

and valuation

and valuation

of state

of state

, but the asserted atoms

, but the asserted atoms

of state

of state

. Hence, we need to show that

. Hence, we need to show that

satisfies those asserted atoms of

satisfies those asserted atoms of

that correspond to right-hand side variables of

that correspond to right-hand side variables of

. To this end, we define the invariant on Layer 3 in a way that permits us to conclude that the tableaux

. To this end, we define the invariant on Layer 3 in a way that permits us to conclude that the tableaux

and

and

are equivalent. Using this equivalence, we then formalize the desired result for Layer 3. Checkpointing and backtracking on the other layers is just propagated to the next-lower layers, i.e., no further checkpointing information is required on Layers 1 and 2.

are equivalent. Using this equivalence, we then formalize the desired result for Layer 3. Checkpointing and backtracking on the other layers is just propagated to the next-lower layers, i.e., no further checkpointing information is required on Layers 1 and 2.

Finally, we combine the implementations of all phases and layers to obtain a fully verified implementation of the simplex algorithm w.r.t. the specification defined in the locale

.

.

Note that the incremental interface does not provide a function to assert constraints negatively. However, this limitation is easily circumvented by passing both the positive and the negative constraint with different indices to the

function. For example, instead of using \((A, x > 5)\) as in Fig. 2, one can use the two constraints \((+A, x > 5)\) and \((-A, x \le 5)\). Then one can assert both the original and the negated constraint via indices \(+A\) and \(-A\), respectively. Only the negation of equations is not possible in this way, since this would lead to disjunctions. However, each equation can easily be translated into the conjunction of two inequalities on the formula-level, i.e., they can be eliminated within a preprocessing step of the SMT-solver.

function. For example, instead of using \((A, x > 5)\) as in Fig. 2, one can use the two constraints \((+A, x > 5)\) and \((-A, x \le 5)\). Then one can assert both the original and the negated constraint via indices \(+A\) and \(-A\), respectively. Only the negation of equations is not possible in this way, since this would lead to disjunctions. However, each equation can easily be translated into the conjunction of two inequalities on the formula-level, i.e., they can be eliminated within a preprocessing step of the SMT-solver.

4 A Formalized Proof of Farkas’ Lemma

Farkas’ Lemma states that a system of linear constraints is unsatisfiable if and only if there is a linear combination of the constraints that evaluates to a trivially unsatisfiable inequality (e.g. \(0 \le d\) for a constant \(d<0\)). The non-zero coefficients in such a linear combination are referred to as Farkas coefficients, and can be thought of as an easy-to-check certificate for the unsatisfiability of a set of linear constraints (given the coefficients, one can simply evaluate the corresponding linear combination and check that the result is indeed unsatisfiable.)

One way to prove Farkas’ Lemma is by using the Fundamental Theorem of Linear Inequalities; this theorem can in turn be proved in the same way as the fact that the simplex algorithm terminates (see [15, Chapter 7]). Although Spasić and Marić have formalized a proof of termination for their simplex implementation [16], this is not sufficient to immediately prove Farkas’ Lemma. Instead, our formalization of the result begins at the point where the simplex algorithm detects unsatisfiability in Phase 3, because this is the only point in the execution of the algorithm where Farkas coefficients can be computed directly from the available data.Footnote 6 Then, these coefficients need to be transferred up to Layer 1. In the following we illustrate how Farkas coefficients are computed and propagated through the various phases of the algorithm, by giving examples and explaining, informally, intermediate statements that have been formalized.

To illustrate how Farkas coefficients are determined at the point where the check-operation detects unsatisfiability in Phase 3, let us return once more to the example in Fig. 2. In step 7, the algorithm detects unsatisfiability via the equation \(x = \frac{s - y}{2}\), and generates the unsatisfiable core based on this equation. This equality can also be used to obtain Farkas coefficients. To this end, we rewrite the equation as \(-x + \frac{1}{2}s - \frac{1}{2}y = 0\), and use the coefficients in this equation (\(-1\) for x, \(\frac{1}{2}\) for s, and \(-\frac{1}{2}\) for y) to form a linear combination of the corresponding atoms involving the variables:

where \(p = 0\) is a reformulation of an equation of the tableau and d is a negative constant. Consequently, we show in the formalization that whenever unsatisfiability is detected for a given tableau T and set of atoms A, there exist Farkas coefficients \(r_i\), i.e., that there is a linear combination \((\sum r_i a_i) = (p \le d)\), where \(d < 0\), \(a_i \in A\) for all i, each \(r_ia_i\) is a \(\le \)-inequality, and \(T \models p = 0\). The second-to-last condition ensures that only inequalities are added which are oriented in the same direction, so that the summation is well-defined. The condition \(T \models p = 0\) means that for every valuation that satisfies T, p evaluates to 0.

Recall that before detecting unsatisfiability, several pivoting steps may have been applied, e.g., when going from step 3 to step 7. Hence, it is important to verify that Farkas coefficients are preserved by pivoting. This is easily achieved by using our notion of Farkas coefficients: Spasić and Marić formally proved that pivoting changes the tableau \(T'\) into an equivalent tableau T, and, hence, the condition \(T \models p = 0\) immediately implies \(T' \models p = 0\). In the example, we conclude that \(T' \models -x + \frac{1}{2}s - \frac{1}{2} y = 0\) for any tableau \(T'\) in steps 3–7. Thus, (FC3) provides Farkas coefficients for the atoms and tableau mentioned in any of these steps.

Layer 2 requires a new definition of Farkas coefficients, since there is no tableau T and set of atoms A at this point, but a set N of non-strict constraints. The new definition is similar to the one on Layer 3, except that the condition \(T \models p = 0\) is dropped, and instead we require that \(p = 0\). To be precise, \(r_i\) are Farkas coefficients for N if there is a linear combination \((\sum r_i c_i) = (0 \le d)\) where \(d < 0\), \(c_i \in N\) for all i, and each \(r_ic_i\) is a \(\le \)-inequality.

We prove that the preprocessing done in Phase 2 allows for the transformation of Farkas coefficients for Layer 3 to Farkas coefficients for Layer 2. In essence, the same coefficients \(r_i\) can be used, one just has to replace each atom \(a_i\) by the corresponding constraint \(c_i\). The only exception is that if a constraint \(c_i\) has been normalized, then one has to multiply the corresponding \(r_i\) by the same factor. However, this will not change the constant d, and we formally verify that the polynomial resulting from the summation will indeed be 0.

In the example, we would obtain (FC2) for Layer 2. Here, the third coefficient has been changed from \(-\frac{1}{2}\) to \(-\frac{1}{2} \cdot \frac{1}{2} = -\frac{1}{4}\), where the latter \(\frac{1}{2}\) is the factor used when normalizing the constraint \(2y \ge 6\) to obtain the atom \(y \ge 3\).

Finally, for Layer 1 the notion of Farkas coefficients must once again be redefined so as to work with a more general constraint type that also allows strict constraints. In particular, we have that either the sum of inequalities is strict and the constant d is non-positive, or the sum of inequalities is non-strict and d is negative. In the example we obtain (again with the same coefficients, but using the original, possibly strict inequalities in the linear combination):

Farkas coefficients \(r_i\) on Layer 2 are easily translated to Layer 1, since no change is required, i.e., the same coefficients \(r_i\) can be used. We just prove that whenever the resulting inequality in Layer 2 is \(0 \le d\) for \(d = a + b\delta \) with \(a,b \in \mathbb {Q}\), then the sum of inequalities on Layer 1 will be \(0 \le a\) (and \(b = 0\)), or it will be \(0 < a\). In both cases we use the property that \(a + b\delta = d\) is negative, to show that the \(r_i\) are Farkas coefficients for Layer 1.

We illustrate the results of our formalization of Farkas coefficients by providing the formal statements for two layers. In both lemmas,

is a set of linear constraints of the form \(p \sim d\) for a linear polynomial p, constant d and \({\sim } \in \{{\le }, {<}\}\). Here, the first theorem is an Isabelle statement of [8, Lemma 2], i.e., Farkas’ Lemma over \(\delta \)-rationals. The second theorem is a more general version of Farkas’ Lemma which also permits strict inequalities, i.e., our statement on Layer 1. It is known as Motzkin’s Transposition Theorem [15, Cor. 7.1k] or the Kuhn–Fourier Theorem [17, Thm. 1.1.9].

is a set of linear constraints of the form \(p \sim d\) for a linear polynomial p, constant d and \({\sim } \in \{{\le }, {<}\}\). Here, the first theorem is an Isabelle statement of [8, Lemma 2], i.e., Farkas’ Lemma over \(\delta \)-rationals. The second theorem is a more general version of Farkas’ Lemma which also permits strict inequalities, i.e., our statement on Layer 1. It is known as Motzkin’s Transposition Theorem [15, Cor. 7.1k] or the Kuhn–Fourier Theorem [17, Thm. 1.1.9].

The existence of Farkas coefficients not only implies unsatisfiability over \(\mathbb {Q}\), but also unsatisfiability over \(\mathbb {R}\): lifting the summation of linear inequalities from \(\mathbb {Q}\) to \(\mathbb {R}\) yields the same conflict \(0 \le d\), with d negative, over the reals. Hence, we formalize the property that satisfiability of linear rational constraints over \(\mathbb {Q}\) and over \(\mathbb {R}\) are the same. Consequently, the (incremental) simplex algorithm is also able to prove unsatisfiability over \(\mathbb {R}\).

Note that the finiteness condition of the set of constraints in the previous three statements mainly arose from the usage of the simplex algorithm for doing the underlying proofs, since the simplex algorithm only takes finite sets of constraints as input. However, the finiteness of the constraint set is actually a necessary condition, regardless of how the statements are proved: none of the three properties hold for infinite sets of constraints. For instance, the constraint set \(\{x \ge c \mid c \in \mathbb {N}\}\) is unsatisfiable over \(\mathbb {Q}\), but there are no Farkas coefficients for these constraints. Moreover, the rational constraints \(\{x \ge c \mid c \le \pi , c \in \mathbb {Q}\} \cup \{ x \le c \mid c \ge \pi , c \in \mathbb {Q}\}\) have precisely one real solution, \(v(x) = \pi \), but there is no rational solution since \(\pi \notin \mathbb {Q}\).

5 Conclusion

We have presented our development of an Isabelle/HOL formalization of a simplex algorithm with minimal unsatisfiable core generation and an incremental interface. Furthermore, we gave a verified proof of Farkas’ Lemma, one of the central results in the theory of linear inequalities. Both of these contributions are related to the simplex formalization of Spasić and Marić [16]: the incremental simplex formalization is an extension built on top of their work, and the formal proof of Farkas’ Lemma follows their simplex implementation through the phases of the algorithm.

In our formalization we use locales as the main structuring technique for obtaining modular proofs – as was done by Spasić and Marić. Our formal proofs were mainly written interactively, with frequent use of

rather than

rather than

(which only provided a few externally generated proofs).

(which only provided a few externally generated proofs).

Both of our contributions form a crucial stepping stone towards our initial goal, the development of a verified SMT solver that is based on the DPLL(T) approach and supports linear arithmetic over \(\mathbb {Q}\) and \(\mathbb {R}\). The connection of the theory solver and the verified DPLL-based SAT solver [2] remains as future work. Here, we already got in contact with Mathias Fleury to initiate some collaboration. However, he immediately informed us that the connection itself will be a non-trivial task on its own. One issue is that his SAT solver is expressed in the imperative monad, but in our use case we need to apply it outside this monad, i.e., it should have a purely functional type such as

.

.

Notes

- 1.

Arithmetic on \(\delta \)-rationals is defined pointwise, e.g., \((a+b\delta ) + (c + d\delta ) := (a+c) + (b+d)\delta \), and \(a+b\delta< c + d\delta := a< c \vee (a = c \wedge b < d)\) for any \(a,b,c,d \in \mathbb {Q}\).

- 2.

In the simplex algorithm [10] and the formalization, the asserted atoms are stored via bounds, but this additional data structure is omitted in the presentation here.

- 3.

Asserting an atom can also detect unsatisfiability, but this gives rise to trivial unsatisfiable cores of the form \(\{x \le c, x \ge d\}\) for constants \(d > c\).

- 4.

This stronger property is also required, if the preprocessing is performed on the global formula, i.e., including the Boolean structure. The reason is that also there one needs soundness of the preprocessing for arbitrary subsets of the constraints.

- 5.

Note that it is not possible to directly add equality constraints on Layer 1 to the tableau: First, this would invalidate the incremental interface, since the tableau constraints are global; second, the tableau forms a homogeneous system of equations, so it does not permit equations such as \(x - y = 1\) which have a non-zero constant.

- 6.

Again, we here consider only the check operation, since obtaining Farkas coefficients for a conflict detected by assert is trivial, cf. footnote 3.

References

Allamigeon, X., Katz, R.D.: A formalization of convex polyhedra based on the simplex method. In: Ayala-Rincón, M., Muñoz, C.A. (eds.) ITP 2017. LNCS, vol. 10499, pp. 28–45. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-66107-0_3

Blanchette, J.C., Fleury, M., Lammich, P., Weidenbach, C.: A verified SATsolver framework with learn, forget, restart, and incrementality. J. Autom. Reasoning 61(1–4), 333–365 (2018). https://doi.org/10.1007/s10817-018-9455-7

Böhme, S., Weber, T.: Fast LCF-style proof reconstruction for Z3. In: Kaufmann, M., Paulson, L.C. (eds.) ITP 2010. LNCS, vol. 6172, pp. 179–194. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-14052-5_14

Bonacina, M.P., Graham-Lengrand, S., Shankar, N.: Proofs in conflict-driven theory combination. In: 7th ACM SIGPLAN International Conference Certified Programs and Proofs, CPP 2018, pp. 186–200. ACM (2018). https://doi.org/10.1145/3167096

Bottesch, R., Haslbeck, M.W., Thiemann, R.: Farkas’ Lemma and Motzkin’s Transposition Theorem. Archive of Formal Proofs, January 2019. http://isa-afp.org/entries/Farkas.html. Formal proof development

Brockschmidt, M., Cook, B., Ishtiaq, S., Khlaaf, H., Piterman, N.: T2: temporal property verification. In: Chechik, M., Raskin, J.-F. (eds.) TACAS 2016. LNCS, vol. 9636, pp. 387–393. Springer, Heidelberg (2016). https://doi.org/10.1007/978-3-662-49674-9_22

Brockschmidt, M., Joosten, S.J.C., Thiemann, R., Yamada, A.: Certifying safety and termination proofs for integer transition systems. In: de Moura, L. (ed.) CADE 2017. LNCS (LNAI), vol. 10395, pp. 454–471. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-63046-5_28

Bromberger, M., Weidenbach, C.: New techniques for linear arithmetic: cubes and equalities. Formal Methods Syst. Des. 51(3), 433–461 (2017). https://doi.org/10.1007/s10703-017-0278-7

Chaieb, A., Nipkow, T.: Proof synthesis and reflection for linear arithmetic. J. Autom. Reasoning 41(1), 33 (2008). https://doi.org/10.1007/s10817-008-9101-x

Dutertre, B., de Moura, L.: A fast linear-arithmetic solver for DPLL(T). In: Ball, T., Jones, R.B. (eds.) CAV 2006. LNCS, vol. 4144, pp. 81–94. Springer, Heidelberg (2006). https://doi.org/10.1007/11817963_11

Ganzinger, H., Hagen, G., Nieuwenhuis, R., Oliveras, A., Tinelli, C.: DPLL(T): fast decision procedures. In: Alur, R., Peled, D.A. (eds.) CAV 2004. LNCS, vol. 3114, pp. 175–188. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-27813-9_14

Giesl, J., et al.: Analyzing program termination and complexity automatically with AProVE. J. Autom. Reasoning 58, 3–31 (2017). https://doi.org/10.1007/s10817-016-9388-y

Marić, F., Spasić, M., Thiemann, R.: An incremental simplex algorithm with unsatisfiable core generation. Archive of Formal Proofs, August 2018. http://isa-afp.org/entries/Simplex.html. Formal proof development

Nipkow, T., Wenzel, M., Paulson, L.C. (eds.): Isabelle/HOL. LNCS, vol. 2283. Springer, Heidelberg (2002). https://doi.org/10.1007/3-540-45949-9

Schrijver, A.: Theory of Linear and Integer Programming. Wiley, Hoboken (1999)

Spasić, M., Marić, F.: Formalization of incremental simplex algorithm by stepwise refinement. In: Giannakopoulou, D., Méry, D. (eds.) FM 2012. LNCS, vol. 7436, pp. 434–449. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-32759-9_35

Stoer, J., Witzgall, C.: Convexity and Optimization in Finite Dimensions I. Die Grundlehren der mathematischen Wissenschaften, vol. 163 (1970). https://www.springer.com/gp/book/9783642462184

Acknowledgments

We thank the reviewers and Mathias Fleury for constructive feedback.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2019 The Author(s)

About this paper

Cite this paper

Bottesch, R., Haslbeck, M.W., Thiemann, R. (2019). Verifying an Incremental Theory Solver for Linear Arithmetic in Isabelle/HOL. In: Herzig, A., Popescu, A. (eds) Frontiers of Combining Systems. FroCoS 2019. Lecture Notes in Computer Science(), vol 11715. Springer, Cham. https://doi.org/10.1007/978-3-030-29007-8_13

Download citation

DOI: https://doi.org/10.1007/978-3-030-29007-8_13

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-29006-1

Online ISBN: 978-3-030-29007-8

eBook Packages: Computer ScienceComputer Science (R0)