Abstract

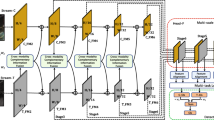

Because multispectral data are complementary, they can significantly improve pedestrian detection performance, and multispectral pedestrian detection has therefore received considerable attention from the research community. However, existing pedestrian detection algorithms still suffer from problems such as insufficient information exchange between the two streams and a lack of network designs tailored to the characteristics of the image source. In practical application scenarios, different dedicated network models are generally used for day and night, and the day model and night model can simply be switched during inference. Therefore, we propose two subnetworks, FTHd (Fusion Transformer Histogram day) and FTn (Fusion Transformer night), tailored to the characteristics of daytime and nighttime images, respectively. During the day, the texture features of RGB images are more pronounced, so in FTHd we first add a histogram layer to the input branch of the detection network. We then add the Cross-Modal Fusion Transformer (CFT) module to fuse the features of the two streams and let them interact. By leveraging the Transformer’s self-attention, the network can naturally perform intra-modal and inter-modal fusion. At night, the light is very weak and thermal images play the key role. Because texture information is weak, a complex network structure is not required, so in FTn we merge the two streams into one to reduce the amount of computation, and then add a CFT module to fuse the features and let them interact. Compared with baseline methods, the proposed FTHd and FTn achieve improved pedestrian detection accuracy.
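To make the claim about intra-modal and inter-modal fusion concrete, the following is a minimal sketch of single-head self-attention applied to the concatenation of RGB and thermal tokens. It is an illustration under stated assumptions, not the CFT module itself: the function name `fuse_tokens`, the token counts, the feature size, and the random projection matrices are all hypothetical, and positional embeddings, multiple heads, and the surrounding detection network are omitted.

```python
import math
import random


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def fuse_tokens(rgb_tokens, thermal_tokens, wq, wk, wv):
    """Single-head self-attention over concatenated RGB and thermal tokens.

    Hypothetical helper for illustration only. Because both modalities share
    one token sequence, every attention row mixes same-modality tokens
    (intra-modal) and other-modality tokens (inter-modal).
    """
    tokens = rgb_tokens + thermal_tokens          # (n_rgb + n_thermal) x d
    d_k = len(wk[0])
    q, k, v = matmul(tokens, wq), matmul(tokens, wk), matmul(tokens, wv)
    scores = matmul(q, transpose(k))
    attn = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    fused = matmul(attn, v)
    n_rgb = len(rgb_tokens)
    return fused[:n_rgb], fused[n_rgb:], attn


def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
d = 4                                             # assumed feature size
rgb = rand_matrix(3, d)                           # 3 assumed RGB tokens
thermal = rand_matrix(3, d)                       # 3 assumed thermal tokens
wq = rand_matrix(d, d)                            # random stand-ins for learned weights
wk = rand_matrix(d, d)
wv = rand_matrix(d, d)

fused_rgb, fused_thermal, attn = fuse_tokens(rgb, thermal, wq, wk, wv)

# Attention mass that RGB token 0 places on RGB tokens versus thermal tokens.
intra = sum(attn[0][:3])
inter = sum(attn[0][3:])
print(f"intra-modal weight: {intra:.3f}, inter-modal weight: {inter:.3f}")
```

The attention matrix splits into four blocks (RGB to RGB, RGB to thermal, thermal to RGB, thermal to thermal), so a single attention operation covers both kinds of fusion without a separate cross-attention branch.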

Data availability

The KAIST dataset can be accessed via https://github.com/SoonminHwang/rgbt-ped-detection, and the CVC-14 dataset can be accessed via http://adas.cvc.uab.es/elektra/enigma-portfolio/cvc-14-visible-fir-day-night-pedestrian-sequence-dataset/.

Funding

Not applicable.

Author information

Contributions

DY and YZ conceived the idea. YZ and CF realized the idea and wrote the main manuscript text. CF, HL, and CD prepared all figures and tables. DY and QL provided supervision. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest.

About this article

Cite this article

Zang, Y., Fu, C., Yang, D. et al. Transformer fusion and histogram layer multispectral pedestrian detection network. SIViP 17, 3545–3553 (2023). https://doi.org/10.1007/s11760-023-02579-y

DOI: https://doi.org/10.1007/s11760-023-02579-y