Abstract

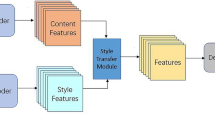

Most arbitrary style transfer methods consider only the transfer of features between the style and content images. Although they achieve pixel-wise style transfer, they preserve the content structure poorly: the model tends to favor the style features, and image information is lost during the transfer process. As a result, the generated images exhibit artifacts and patterns copied from the style images. In this paper, we propose an attention feature distribution matching method for arbitrary style transfer. In the network architecture, the self-attention mechanism is combined with second-order statistics to perform style transfer, and a style strengthen block enhances the style features of the generated images. In the loss function, the traditional content loss is not used; instead, we integrate the attention mechanism and feature distribution matching to construct the loss, which strengthens the constraints and helps avoid artifacts in the generated image. Qualitative and quantitative experiments demonstrate that our method improves arbitrary style transfer quality compared with state-of-the-art arbitrary style transfer methods.
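To make two of the terms in the abstract concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a feature map of shape (C, H, W), takes "second-order statistics" to mean the channel covariance, and uses a sort-based comparison as one common way to match feature distributions. The function names `channel_stats` and `sorted_matching_loss` are hypothetical, and the attention weighting described in the abstract is deliberately left out.

```python
import numpy as np


def channel_stats(feat):
    """Per-channel mean (C,) and channel covariance (C, C) of a (C, H, W) map."""
    c = feat.shape[0]
    flat = feat.reshape(c, -1)            # one row of H*W activations per channel
    mean = flat.mean(axis=1)
    centered = flat - mean[:, None]
    cov = centered @ centered.T / flat.shape[1]
    return mean, cov


def sorted_matching_loss(generated, style):
    """Mean squared gap between per-channel sorted activations.

    Assumption: both inputs have the same (C, H, W) shape. Sorting discards
    spatial layout, so only the per-channel value distributions are compared.
    """
    c = generated.shape[0]
    g = np.sort(generated.reshape(c, -1), axis=1)
    s = np.sort(style.reshape(c, -1), axis=1)
    return float(np.mean((g - s) ** 2))


# Toy features standing in for encoder activations (random, illustrative only).
rng = np.random.default_rng(0)
style = rng.normal(size=(4, 8, 8))
generated = rng.normal(loc=1.0, size=(4, 8, 8))

mean, cov = channel_stats(style)
print(mean.shape, cov.shape)
print(sorted_matching_loss(generated, style))
print(sorted_matching_loss(style, style))  # identical inputs give 0.0
```

Because the comparison is made on sorted values, rearranging the spatial positions of the style features leaves the loss unchanged. This illustrates why a distribution-matching term on its own does not constrain content structure.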

Acknowledgements

This work was supported by the Natural Science Foundation of Anhui Province (2108085QF258).

Author information

Contributions

BG and ZSH were mainly responsible for writing the full article and preparing all the figures. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Communicated by B. Bao.

About this article

Cite this article

Ge, B., Hu, Z., Xia, C. et al. Arbitrary style transfer method with attentional feature distribution matching. Multimedia Systems 30, 96 (2024). https://doi.org/10.1007/s00530-024-01300-4

DOI: https://doi.org/10.1007/s00530-024-01300-4