Abstract

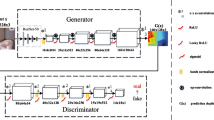

Owing to their improved representation ability, recent deep learning-based methods can estimate scene depths accurately. However, these methods still struggle to estimate consistent scene depths in real-world environments containing severe illumination changes, occlusions, and texture-less regions. To solve this problem, we propose a novel depth-estimation method for unstructured multi-view images. Specifically, we present a plane sweep generative adversarial network, in which the proposed adversarial loss significantly improves depth-estimation accuracy in real-world settings, and the consistency loss makes the depth-estimation results insensitive to changes in viewpoint and in the number of input images. In addition, 3D convolution layers are inserted into the network to enrich the feature representation. Experimental results indicate that the proposed plane sweep generative adversarial network outperforms state-of-the-art methods both quantitatively and qualitatively.
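The abstract names an adversarial loss and a consistency loss but does not give their formulas. The NumPy sketch below shows one hypothetical way to write them: a standard GAN discriminator loss with the non-saturating generator loss, and a consistency term taken here as the mean L1 gap between depth maps predicted for the same reference view from different sets of input images. The L1 supervision term, the weights `lam_adv` and `lam_cons`, and all function names are assumptions for illustration, not the paper's definitions.

```python
import numpy as np

def bce(prob, target):
    """Binary cross-entropy averaged over all elements."""
    eps = 1e-7
    prob = np.clip(prob, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(target * np.log(prob) + (1 - target) * np.log(1 - prob)))

def discriminator_loss(d_real, d_fake):
    # D should output 1 for ground-truth depth maps and 0 for generated ones.
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_adversarial_loss(d_fake):
    # Non-saturating form: the generator pushes D's output on its maps toward 1.
    return bce(d_fake, np.ones_like(d_fake))

def consistency_loss(depth_a, depth_b, mask=None):
    """Hypothetical consistency term: mean L1 gap between two depth maps of the
    same reference view, e.g. predicted from different source-view subsets."""
    diff = np.abs(depth_a - depth_b)
    if mask is not None:
        diff = diff[mask]  # only compare pixels marked valid
    return float(diff.mean())

def generator_total_loss(pred, gt, d_fake, pred_other, lam_adv=0.01, lam_cons=0.1):
    # Assumed combination: L1 supervision + weighted adversarial + consistency.
    # The weights are placeholders; the abstract does not state the paper's values.
    l1 = float(np.mean(np.abs(pred - gt)))
    return (l1
            + lam_adv * generator_adversarial_loss(d_fake)
            + lam_cons * consistency_loss(pred, pred_other))
```

In this sketch the consistency term is zero only when the network returns the same depth map regardless of which input views it received, which is the property the abstract attributes to the consistency loss.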


Notes

W and H denote the width and height of cost volumes, respectively, and D in (1) denotes the number of depth ranges.
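To illustrate what a W × H × D cost volume holds, the following NumPy sketch builds one (stored as D × H × W) for the simplest plane-sweep case. It assumes a rectified image pair with shared intrinsics, where the fronto-parallel plane at depth z reduces to a horizontal shift of focal·baseline/z pixels, and uses absolute intensity difference as the matching cost. The function names and parameters are hypothetical and not taken from the paper; a general multi-view sweep would instead warp each source view with a plane-induced homography.

```python
import numpy as np

def plane_sweep_cost_volume(ref, src, depths, focal, baseline):
    """Build a D x H x W cost volume for a rectified grayscale pair.

    Assumption: both views share intrinsics and are rectified, so the
    fronto-parallel plane at depth z maps src onto ref by a pure
    horizontal shift of disparity = focal * baseline / z pixels.
    """
    H, W = ref.shape
    D = len(depths)
    cost = np.full((D, H, W), np.inf, dtype=np.float64)
    cols = np.arange(W)
    for i, z in enumerate(depths):
        disp = focal * baseline / z
        # A reference pixel at column x sees the source pixel at x - disp.
        src_cols = np.rint(cols - disp).astype(int)
        valid = (src_cols >= 0) & (src_cols < W)
        # Nearest-neighbour warp; out-of-bounds columns keep cost = inf.
        cost[i][:, valid] = np.abs(ref[:, valid] - src[:, src_cols[valid]])
    return cost

def winner_take_all_depth(cost, depths):
    """Pick, per pixel, the depth plane with the lowest matching cost."""
    return np.asarray(depths)[np.argmin(cost, axis=0)]
```

The winner-take-all step is only a baseline; learned methods typically regularize the volume (for example with 3D convolutions) before reading out a depth per pixel.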


Acknowledgements

This work was supported in part by the Chung-Ang University Graduate Research Scholarship Grants in 2018 and in part by ‘The Cross-Ministry Giga KOREA Project’ of The Ministry of Science, ICT and Future Planning, Korea (GK19C0200, Development of full-3D mobile display terminal and its contents).


About this article

Cite this article

Shu, D.W., Jang, W., Yoo, H. et al. Deep-plane sweep generative adversarial network for consistent multi-view depth estimation. Machine Vision and Applications 33, 5 (2022). https://doi.org/10.1007/s00138-021-01258-7


DOI: https://doi.org/10.1007/s00138-021-01258-7