Abstract

While reservoir computing is drawing attention, its applications are limited to small tasks. This chapter proposes a solution to this issue by introducing the Hidden-Fold Network, a recursive model with fixed random weights that resembles reservoir computing. The model is constructed based on recent discoveries in neural networks, namely the Strong Lottery Ticket Hypothesis and folding. By pruning an overparameterized model that is randomly initialized, it is possible to find accurate neural networks without the need for weight optimization. It is conjectured that residual networks may contain better subnetwork candidates for inference time when transformed into recurrent architectures, as they may be approximating unrolled shallow recurrent neural networks. This hypothesis is tested in image classification tasks, where subnetworks within the recurrent models are found to be more accurate and parameter-efficient than those within feedforward models, as well as the full models with learned weights.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

1 Introduction

Due to their complex structure, deep neural networks are capable of various types of learning. However, when it comes to time-series problems, recurrent neural networks (RNNs) are commonly used, but as their size increases, the cost of training becomes an issue. Reservoir computing (RC) is a special type of RNNs that offer a solution to this problem. In RC, the weights of the intermediate layer are fixed, and only the output weights from the intermediate layer to the output layer are trained using low-cost learners such as linear learners, which reduces the overall training cost. Physical reservoir computing takes this a step further by implementing the intermediate layer nodes of reservoir computing as physical phenomena on hardware. This approach reduces the need for design and can achieve even greater energy efficiency by selecting appropriate physical phenomena for the intermediate layer. There are various approaches for implementing physical reservoir computing [1].

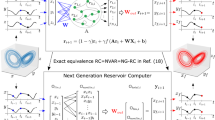

Currently, all RC models are single-layered and limited to processing small datasets like MNIST. However, although there is a desire to scale up RC to larger models capable of handling bigger datasets like CIFAR100, there are no methods to achieve this. Increasing the size of the reservoir is not feasible since it makes the output too complex for the simple classifier, and backpropagation cannot be utilized. Figure 1 illustrates our approach toward achieving deep reservoir computing (DRC). We begin by developing a method to make convolutional neural networks (CNNs) similar to RC and replacing CNN layers with reservoirs to construct a DRC.

This chapter explores the compatibility between CNN and RC using two recent concepts: the Strong Lottery Ticket Hypothesis [2, 3] and folding [4]. According to the Strong Lottery Ticket Hypothesis, high-performing neural networks can be obtained by pruning overparameterized dense models since they contain already available high-performing subnetworks. These subnetworks are sparse, random, and tiny, and can achieve competitive performance in vision tasks, making them suitable for efficient hardware implementation [5]. However, finding optimal connectivity patterns using current training methods can be challenging [6]. Folding, on the other hand, is based on the observation that a specific type of shallow RNN is equivalent to a deep residual neural network (ResNet) with weight sharing among layers [4], where the original ResNet is proposed in [7] and remains the backbone of many SOTA models. Implementing a folded RNN with significantly fewer parameters than the corresponding ResNet leads to similar performance.

Figure 2 shows our baseline idea. We fold a ResNet to then find a strong lottery ticket within the network with random fixed weights. We have found that the obtained recursive model, which is called Hidden-Fold Network (HFN), is similar to reservoir computing.

We have observed that ResNets have an inherent ability to learn ensembles of all possible unrollings of a shallow recurrent neural network. This restriction on the hypothesis space actually increases the number of potential subnetworks available at initialization time [8]. These resulting subnetworks are efficient in terms of parameters and demonstrate competitive performance on image classification tasks. Additionally, these subnetworks can be compressed to small memory sizes and have a high degree of parameter reusability, making them ideal candidates for inference acceleration.

2 Background

Driven by the increasingly powerful computational power promised by Moore’s Law, artificial neural networks have grown in size, leading to the field of deep learning [9]. This trend was initially led by image classification models [10] and has continued as researchers enhance neural networks at the cost of larger models. Natural language processing and generative models have also joined the trend, offering impressive capabilities at the expense of immense size [11, 12]. However, while this is a convenient approach for cutting-edge research, the high computational cost of these models makes them impractical for real-world applications. Therefore, researchers are also exploring small and efficient models as an alternative trend of research.

Strong lottery tickets refer to a set of efficient neural networks obtained through a training process that combines learning, pruning, and weight quantization. The development of this approach, depicted in Fig. 3, is described in detail in Sect. 2.1. In this study, we introduce a method that converts a ResNet into a recurrent architecture to enhance the quality of the strong lottery tickets that can be extracted from it, where ResNet is introduced in Sect. 2.2.

2.1 Lottery Ticket Hypotheses

Pruning is a widely used technique to compress trained neural networks into much smaller models by removing unnecessary weights [13,14,15,16,17]. By iteratively applying training and pruning, large portions of trained models can be removed without affecting accuracy, revealing that the original models are overparameterized. The sparsity of the resulting network can be leveraged for additional compression using entropy coding and for arithmetic optimization. This approach, combined with weight quantization, has resulted in highly efficient model compression schemes [18, 19] and specialized hardware neural accelerators [20].

In the past, it was observed that pruning did not separate the connectivity patterns from the pre-trained weights, making it impossible to reinitialize and train from scratch. However, a recent paper [21] introduced the concept of the Lottery Ticket Hypothesis (LTH), which states that overparameterized neural networks contain a subnetwork that can match the original model if trained in isolation. These subnetworks, known as winning tickets, are discovered by iteratively training, pruning, and resetting the remaining weights to their original value.

In an unexpected development during the analysis of the LTH, [2] discovered that learning weights is not essential: high-performing subnetworks exist within overparameterized neural networks at their randomly initialized state, which can be identified through pruning. Moreover, they proposed an algorithm for discovering these subnetworks by training a binary mask. Building upon this, [3] introduced a training algorithm and weight initialization method that produces sparse random subnetworks with competitive performance in image classification tasks.

Following a series of studies that explored the theoretical limits of necessary overparameterization to obtain these subnetworks [22,23,24], the notion of subnetworks obtained exclusively by pruning has been referred to as the Strong Lottery Ticket Hypothesis (SLTH). However, some researchers also refer to it as the multi-prize lottery ticket hypothesis [25], or simply as “hidden network.”

Although the discovery of strong tickets is surprising, it has some related precedents. For example, extreme learning machines use fixed random weights in their hidden units, only learning the output layer. Reservoir computers also use random recurrent architectures in an analogous manner. Perturbative neural networks propose substituting convolutional layers with fixed additive random noise and a learned linear combination. Training the batch normalization parameters of a fixed random network can achieve non-trivial accuracy. The binary neural network training method has been adapted to learn binary masks that, when applied to a trained model, extract subnetworks that perform well on untrained tasks.

2.2 Residual Neural Networks

The residual neural network (ResNet) [7], as illustrated in Fig. 4, is a popular architecture among the continuously expanding variety of neural network architectures. It serves as the backbone of many state-of-the-art (SOTA) models. ResNetis a deep convolutional neural network that follows a pyramidal feedforward structure. It comprises a convolutional pre-net, four stages of residual blocks, and a fully-connected post-net classifier. The first residual block of each stage downsamples the feature map and doubles the number of channels, adjusting the representation space size. The remaining blocks in a stage have the same size and shape and maintain the same representation space size.

Residual blocks in the ResNet architecture consist of batch normalization, ReLU activation, and convolutional layers concatenated together. In this chapter, we focus on the bottleneck block variant [7], which consists of three convolutional layers applied in sequence with kernel sizes of \(1\!\times \!1\), \(3\!\times \!3\), and \(1\!\times \!1\), respectively. Each residual block has a skip-connection, which is an identity function running parallel to the block that adds the block’s input to its output. In order to accommodate different representation space sizes, the downsampling blocks have a learnable layer in the skip-connection called a projection shortcut.

The original motivation for the ResNet architecture was to provide a clear path for backpropagation to reach a layer directly, solving the vanishing gradient problem. However, studies have shown that shuffling or removing residual blocks does not severely impact its performance, but rather there is a gradual degradation proportional to the amount of corruption introduced [26]. This phenomenon is not observed in other feedforward models where similar lesions result in critical performance loss. As a result, two alternative views of ResNet have been proposed: one suggests that it is an ensemble of shallow networks, while the other argues that it is an approximation of an unrolled shallow recurrent neural network. This work aims to reconcile these two views into a single coherent explanation.

Each residual block in ResNet can be thought of as a path divergence point, which leads to the interpretation of ResNet as an ensemble of all possible paths within it [26], resulting in \(2^n\) possible paths for a model with n residual blocks. Several improvements to ResNet have been proposed based on this perspective. For example, during training, removing random subsets of blocks makes the ensembled networks shallower and acts as regularization [27]. Furthermore, increasing the number of skip-connections per block increases the number of ensembled paths, thereby improving performance [28]. Another approach is to reduce network depth by increasing width, enabling the training of larger models for improved performance in less time [29].

An alternative explanation for the lesion and shuffling results is that all the blocks within a stage approximate the same function, as they have the same shape and partially receive the same inputs and gradients through the identity shortcuts. This implies that ResNet may naturally converge to the approximation of an unrolled shallow recurrent neural network, with each stage corresponding to a different hierarchical level of representation, including the downsampling block for feature map size adjustment. Meanwhile, the remaining blocks perform iterative refinement of features, according to proponents of this view [30].

The ensemble of unrollings in ResNet, which combines the two views mentioned above, the ensemble view and the unrolled iteration view, offers a vast search space to discover effective tickets within a model with limited parameters. Therefore, it is reasonable to assume that a ResNet with more recurrent approximations at its initial state may contain more potent tickets.

3 Hidden-Fold Networks

In this section, we present a technique for discovering a strong lottery ticket in a ResNet by first folding it. The approach, described in [8], yields Hidden-Fold Networks (HFNs), which outperform the strong tickets present in feedforward ResNets. Here, we provide details on the network’s architecture and training. Refer to [8] for information on weight initialization and batch normalization.

A ResNet stage folded into a recurrent residual stage through the opposite of time unrolling. The downsampling block is left untouched, whereas the rest of the stage is folded into a single recurrent block. That is, a stage of n blocks is folded into 2 blocks, the second of which is applied \(n-1\) iterations

3.1 Folded ResNet Architecture

In line with the unrolled iteration view, the chains of residual blocks with identical shapes in each stage approximate an iterative function. To achieve this, folding [4] is used, which converts these chains into recurrent blocks through weight sharing. In other words, \(h\!\,\approx \!\,g\!\,\approx \!\,f\) in Fig. 4 is explicitly transformed into \(h\!\,=\!\,g\!\,=\!\,f\). Since applying the same functions in succession is equivalent to repeatedly applying one of them, the feedforward chain can be transformed into a single recurrent block, in a process opposite to time unrolling.

The downsampling block in ResNet has a different shape than the rest of the blocks, and therefore cannot be folded with them. Strategies to address this issue have been explored in previous works, such as removing the block to create an isotropic architecture or substituting it with a simpler block, as discussed in [4, 31]. However, this work does not modify the downsampling blocks based on the view that different stages correspond to different hierarchical levels of features, which are composed of downsampling blocks [30]. Instead, the rest of the blocks within a stage are folded into a single recurrent residual block that is iterated the same number of times as the original number of blocks, as illustrated in Fig. 5.

Folding has a dual effect on the search space. It restricts the hypothesis space of the model to iterative functions and reduces the number of parameters. Despite the exponential reduction in available subnetwork candidates, we argue that folded residual networks contain better performing strong tickets than their feedforward counterparts. If the weights of a ResNet naturally converge to approximations of unrolled iterative functions, then the strong tickets within it are likely approximations of recurrent networks. This restricts the number of relevant subnetworks to a small subset that includes consecutive blocks with similar random weights. By folding, all candidate subnetworks become recurrent, which increases the number of relevant subnetworks and their likelihood of containing stronger tickets.

Moreover, the parameter reduction occurs not only at inference time but also during training. By reducing the search space for strong tickets during training, folded tickets become easier to find. At inference time, the found subnetworks are even smaller and benefit from parameter reusability, making them ideal for efficient hardware implementation.

3.2 Supermask Training

Rather than optimizing the model’s weights, the model is pruned to identify a high-performing subnetwork that is hidden within the randomly initialized folded model, which is referred to as an HFN. This connectivity pattern is learned by training a supermask [2], which is a pruning mask containing a binary element for each weight. During inference, the ticket is discovered by applying an element-wise product of the random weight tensor and the trained supermask.

This study adopts the edge-popup algorithm [3] for training the supermasks, as shown in Fig. 6a. The algorithm assigns a score to each weight, which is updated during backpropagation using straight-through estimation for the supermask (i.e., the supermask is not applied in the backward pass). The weights are sorted based on their scores, and the supermask is updated to include the weights with the highest top-k% scores and prune the rest. Although the value of top-k% is determined globally, the sparsity is enforced at the layer level. Folding does not impact this process; supermasks and scores are shared similarly to their corresponding weights, and backpropagation gradients are propagated through the unrolled model just like a feedforward model. Therefore, folded parameters receive distinct gradients from each iteration, as demonstrated in Fig. 6b.

4 Experiments and Results

In this section, we explore how to effectively integrate supermask training with a recurrent residual network and compare the outcomes with the baseline approaches outlined in Table 1.

4.1 Experimental Settings

All experiments were implemented in PyTorch [32], using the original code of [3] available in their public repository [33]. The baseline model for all experiments was ResNet-50 [7] and its variations, as folding only applies to deep networks. The experiments were conducted on the CIFAR100 [34] dataset, which is relatively complex.

Unless stated otherwise, the experiments were conducted using the following methodology. We split the CIFAR100 dataset, consisting of 60, 000 images, into 45, 000 for training, 5, 000 for validation, and 10, 000 for the test set. Image pre-processing and augmentation were carried out as in [3]. We trained the models on CIFAR100 using stochastic gradient descent (SGD) with weight decay of 0.0005, momentum of 0.9, and batch size of 128 for 200 epochs. For models deeper than 100 layers or double width, we trained for an additional 100 epochs. The learning rate started at 0.1 and was reduced using cosine annealing with no warmup. We report the average of three runs of top-1 test accuracy scores measured at the highest scoring validation epoch. The standard deviation is shown with shaded areas on plots.

A vanilla ResNet-50 trained on CIFAR100 using an NVIDIA GeForce RTX 3090 requires 2.4 h. In comparison, the folded, HNN, and HFN versions of the same model require 2.2, 4.4, and 4.2 h, respectively.

4.2 Results

The comparison of accuracy and parameter count of various ResNet sizes trained with different methods on CIFAR100 is shown in Fig. 7a. HFN models are found to be the most parameter-efficient among the compared methods, with fewer parameters than equally performing models, and more accurate than models with similar parameter counts. Additionally, deeper and wider HFNs achieve the highest accuracies overall.

In addition, the superiority of HFNs is more pronounced when examining the model memory sizes under the compression scheme presented in [8], as shown in Fig. 7b. The memory size of HFNs is approximately half that of their feedforward counterparts, with ResNet-50 fitting into less than 2 MB. Moreover, the wider HFN models outperform the dense models that are more than \(30\times \) larger in size.

5 Summary

We explored the similarity between the Hidden-Fold Network, a recursive model with fixed random weights, and reservoir computing, as depicted in Fig. 8. In this chapter, we presented a method for folding and training ResNet with supermasks, as a first step toward deep reservoir computing. We also tested the conjecture that recurrent residual networks have stronger tickets than their feedforward counterparts, which can be leveraged to significantly reduce the memory footprint while achieving comparable or superior accuracy to dense models. Since HFN’s blocks are recurrent and unlearned, leaving all the learning load to a simple mask, the model bears strong resemblance to a deep reservoir computer. However, the second step of substituting convolutional layers with actual reservoir layers remains a future direction. Once this is achieved, we will bridge the gap between reservoirs and neural networks and approach deep reservoir computing that can solve complex tasks.

References

G. Tanaka, T. Yamane, J.B. Héroux, R. Nakane, N. Kanazawa, S. Takeda, H. Numata, D. Nakano, A. Hirose, Recent advances in physical reservoir computing: a review. Neural Netw. 115, 100–123 (2019)

H. Zhou, J. Lan, R. Liu, J. Yosinski, Deconstructing lottery tickets: zeros, signs, and the supermask, in Advances in Neural Information Processing Systems, pp. 3597–3607

V. Ramanujan, M. Wortsman, A. Kembhavi, A. Farhadi, M. Rastegari, What’s hidden in a randomly weighted neural network? in, IEEE Computer Society Conference on Computer Vision and Pattern Recognition (2020), pp. 11893–11902

Q. Liao, T. Poggio, Bridging the gaps between residual learning, recurrent neural networks and visual cortex. arXiv:1604.03640

K. Hirose, J. Yu, K. Ando, Y. Okoshi, Á. López García-Arias, J. Suzuki, T.V. Chu, K. Kawamura, M. Motomura, Hiddenite: 4K-PE hidden network inference 4D-tensor engine exploiting on-chip model construction achieving 34.8-to-16.0TOPS/W for CIFAR-100 and ImageNet, in IEEE International Solid-State Circuits Conference, vol. 65 (2022), pp. 1–3

J. Fischer, R. Burkholz, Plant’n’seek: Can you find the winning ticket? arXiv:2111.11153

K. He, X. Zhang, S. Ren, J. Sun, Deep residual learning for image recognition, in IEEE Computer Society Conference on Computer Vision and Pattern Recognition (2016), pp. 770–778

Á. López García-Arias, Y. Okoshi, M. Hashimoto, M. Motomura, J. Yu, Recurrent residual networks contain stronger lottery tickets. IEEE Access 11, 16588–16604 (2023)

Y. LeCun, Y. Bengio, G. Hinton, Deep learning. Nature 521(7553), 436–444 (2015)

A. Krizhevsky, I. Sutskever, G.E. Hinton, Imagenet classification with deep convolutional neural networks. Commun. ACM 60(6), 84–90 (2017)

A. Ramesh, M. Pavlov, G. Goh, S. Gray, C. Voss, A. Radford, M. Chen, I. Sutskever, Zero-shot text-to-image generation, in International Conference on Machine Learning (2021), pp. 8821–8831

E. Strubell, A. Ganesh, A. McCallum, Energy and policy considerations for deep learning in NLP. arXiv:1906.02243

S. Han, J. Pool, J. Tran, W. Dally, Learning both weights and connections for efficient neural network, in Advances in Neural Information Processing Systems (2015), pp. 1135–1143

J. Liu, Z. Xu, R. Shi, R.C. Cheung, H.K. So, Dynamic sparse training: Find efficient sparse network from scratch with trainable masked layers. arXiv:2005.06870

M. Zhu, S. Gupta, To prune, or not to prune: exploring the efficacy of pruning for model compression. arXiv:1710.01878

T. Gale, E. Elsen, S. Hooker, The state of sparsity in deep neural networks. arXiv:1902.09574

D. Blalock, J.J.G. Ortiz, J. Frankle, J. Guttag, What is the state of neural network pruning? arXiv:2003.03033

S. Han, H. Mao, W.J. Dally, Deep compression: Compressing deep neural networks with pruning, trained quantization and Huffman coding, in The International Conference on Learning Representations

A. Dubey, M. Chatterjee, N. Ahuja, Coreset-based neural network compression, in European Conference on Computer Vision (2018), pp. 454–470

S. Han, X. Liu, H. Mao, J. Pu, A. Pedram, M.A. Horowitz, W.J. Dally, EIE: Efficient inference engine on compressed deep neural network, in International Symposium on Computer Architecture

J. Frankle, M. Carbin, The lottery ticket hypothesis: Finding sparse, trainable neural networks, in The International Conference on Learning Representations (2019)

E. Malach, G. Yehudai, S. Shalev-Schwartz, O. Shamir, Proving the lottery ticket hypothesis: Pruning is all you need, in International Conference on Machine Learning (2020), pp. 6682–6691

A. Pensia, S. Rajput, A. Nagle, H. Vishwakarma, D.S. Papailiopoulos, Optimal lottery tickets via subset sum: Logarithmic over-parameterization is sufficient, in Advances in Neural Information Processing Systems (2020)

L. Orseau, M. Hutter, O. Rivasplata, Logarithmic pruning is all you need, in Advances in Neural Information Processing Systems (2020), pp. 2925–2934

J. Diffenderfer, B. Kailkhura, Multi-prize lottery ticket hypothesis: Finding accurate binary neural networks by pruning a randomly weighted network, in The International Conference on Learning Representations (2021)

A. Veit, M.J. Wilber, S. Belongie, Residual networks behave like ensembles of relatively shallow networks, in Advances in Neural Information Processing Systems, p. 29

G. Huang, Y. Sun, Z. Liu, D. Sedra, K.Q. Weinberger, Deep networks with stochastic depth, in European Conference on Computer Vision (Springer, 2016), pp. 646–661

M. Abdi, S. Nahavandi, Multi-residual networks: Improving the speed and accuracy of residual networks. arXiv:1609.05672

S. Zagoruyko, N. Komodakis, Wide residual networks, in British Machine Vision Conference (2016)

K. Greff, R.K. Srivastava, J. Schmidhuber, Highway and residual networks learn unrolled iterative estimation. arXiv:1612.07771

S. Jastrzębski, D. Arpit, N. Ballas, V. Verma, T. Che, Y. Bengio, Residual connections encourage iterative inference. arXiv:1710.04773

A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, A. Desmaison, A. Kopf, E. Yang, Z. DeVito, M. Raison, A. Tejani, S. Chilamkurthy, B. Steiner, L. Fang, J. Bai, S. Chintala, PyTorch: An imperative style, high-performance deep learning library, in Advances in Neural Information Processing Systems (2019), pp. 8024–8035

V. Ramanujan, M. Wortsman, A. Kembhavi, A. Farhadi, M. Rastegari, What’s hidden in a randomly weighted neural network? (2020) https://github.com/allenai/hidden-networks

A. Krizhevsky, Learning multiple layers of features from tiny images, Master’s thesis, Department of Computer Science, University of Toronto, Toronto (2009)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2024 The Author(s)

About this chapter

Cite this chapter

Hashimoto, M., García-Arias, Á.L., Yu, J. (2024). Bridging the Gap Between Reservoirs and Neural Networks. In: Suzuki, H., Tanida, J., Hashimoto, M. (eds) Photonic Neural Networks with Spatiotemporal Dynamics. Springer, Singapore. https://doi.org/10.1007/978-981-99-5072-0_12

Download citation

DOI: https://doi.org/10.1007/978-981-99-5072-0_12

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-5071-3

Online ISBN: 978-981-99-5072-0

eBook Packages: Computer ScienceComputer Science (R0)