Abstract

When considering computation using physical phenomena beyond established digital circuits, the variability of the device must be addressed. In this chapter, we focus on random sampling as an algorithmic approach to this problem. In particular, we discuss a nonlinear dynamical system that exhibits sampling behavior. This system, called herding, was proposed as an algorithm that can be used in the same manner as Monte Carlo integration. The algorithm incorporates an optimization step into the dynamical system and does not depend on random number generators. In this chapter, we review this algorithm from the viewpoint of nonlinear dynamics, together with related studies, including the author’s previous results. We then discuss the prospects for applying herding to computation with physical phenomena.


As mentioned previously, the use of physical phenomena in computation, not limited to digital electronics, has recently been explored with the expectation of achieving breakthroughs. A central problem in computing with such physical phenomena is device variability. Physical devices generally have limited accuracy in both fabrication and operation, and this is particularly problematic when physical quantities are used as analog variables in the computation, as opposed to digital electronics. Physical reservoir computing, discussed in earlier chapters, can be regarded as one way to deal with this problem. Owing to the physical limitations mentioned above, it may be difficult to tune the parameters of each physical reservoir individually. This difficulty can be avoided by training only a simple single-layer neural network inserted between the reservoir and the output. Similarly, in the spatial photonic Ising machine (SPIM) [1], the spatial light modulator (SLM) takes digital inputs, but the computation itself is analog, and the output can be degraded during light propagation. When considering computation beyond established digital circuits, this variability must be dealt with; in particular, an algorithmic solution is required.

In this chapter, we focus on random sampling for this purpose. In statistics and machine learning, Monte Carlo (MC) integration is often used to compute expectations. The problem is to determine the expected value of a function f(x) over a probability distribution p, denoted by

\[\mathbb {E}_{p}[f(x)]=\int f(x)\,p(x)\,\mathrm {d}x,\]

where the integral is replaced by a sum when x is discrete.

In MC integration, a sequence of samples \(x^{(1)}, x^{(2)}, \ldots , x^{({T})}\) that follow the probability distribution p is generated. The expectation is then approximated by the sample average:

\[\mathbb {E}_{p}[f(x)]\approx \frac{1}{{T}}\sum _{{t}=1}^{{T}}f(x^{({t})}).\]
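As a minimal illustration of the sample-average approximation, the sketch below assumes a standard normal p and f(x) = x², so the exact expectation is 1. The helper name `mc_expectation` is hypothetical, and the samples come from NumPy's pseudo-RNG.

```python
import numpy as np

def mc_expectation(f, sampler, T, seed=0):
    """Approximate E_p[f(x)] by the average of f over T samples drawn from p."""
    rng = np.random.default_rng(seed)
    x = sampler(rng, T)            # samples x^(1), ..., x^(T) from p
    return float(np.mean(f(x)))    # (1/T) * sum_t f(x^(t))

# p = standard normal, f(x) = x^2, so the exact value is 1.
estimate = mc_expectation(lambda x: x**2, lambda rng, T: rng.standard_normal(T), T=100_000)
print(estimate)
```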

For example, MC integration is important for Bayesian inference. When inferring the parameters \(\theta \) of a probabilistic model \(p(x; \theta )\) from the data \(\mathcal {D}\), we often use the maximum likelihood method. This method takes as the estimate the \(\theta \) that maximizes the likelihood \(P(\mathcal {D};\theta )\) of the data. Bayesian inference instead introduces a prior distribution \(\pi (\theta )\) for the parameters. It then applies Bayes’ rule to obtain the posterior distribution \(P(\theta \mid \mathcal {D})\propto \pi (\theta )P(\mathcal {D};\theta )\) as the inference result. The advantage of assuming a distribution over the parameters is that estimates with uncertainty can be obtained. Quantities such as the posterior mean are expectations over the posterior and are typically computed by MC integration.

Because the variability in physical phenomena is often understood as stochastic behavior, sampling and MC integration are promising applications of physical phenomena in computation. Usually, a random number generator (RNG) is used for sampling. Typically, a pseudo-RNG, which generates a sequence of numbers by deterministic computation, is used; however, physical random numbers can also be used. In either case, the RNG must be carefully designed to guarantee the quality of its output. Exploiting the stochastic behavior of physical phenomena is promising, but precisely controlling its probabilistic properties is also difficult.

Herding, proposed in [2, 3], is an algorithm that can be used in the same manner as MC integration. However, it does not use RNGs, so it can be expected to provide a way to avoid such difficulties. In this chapter, we review the herding algorithm and related studies, including the author’s previous results. We then discuss the prospects of studying herding from perspectives that include the use of physical phenomena in computation.

The remainder of this chapter is organized as follows. In Sects. 1 and 2, we introduce herding, focusing on its aspects as a method of MC integration. In particular, we introduce the herding algorithm in Sect. 1. In Sect. 2, the herded Gibbs algorithm is introduced as an application of herding. We discuss the improvement of the estimation error and its convergence and review the relevant literature. In Sect. 3, we consider herding from a different perspective, the maximum entropy principle. We explore the relationship between herding and entropy, including the role of high-dimensional nonlinear dynamics in herding. In Sect. 4, based on the discussion in the previous sections, we discuss the prospects of the study of herding, including the aspect of computation with the variability of physical phenomena, and finally, we provide concluding remarks in Sect. 5.

1 Herding: Sample Generation Using Nonlinear Dynamics and Monte Carlo Integration

In this section, we introduce the herding algorithm, taking a different route from that of the original work [2, 3].

1.1 Deterministic Tossup

We begin with a simple example. Consider a sequence of \({T}\) random variables \(x^{(1)},\ldots ,x^{({T})}\in \mathbb {R}\). Let \(\mathbb {E}_{p}[\cdot ]\) and \(\text {Var}_p[\cdot ]\) denote the expectation and variance, respectively, of a function over a probability distribution p. Assume that each marginal distribution is equal to the distribution p, whose expected value is \(\mathbb {E}_{p}[x]=\mu \) and whose variance is \(\text {Var}_p[x ]=V\). Then the sample mean

\[\hat{\mu }=\frac{1}{{T}}\sum _{{t}=1}^{{T}}x^{({t})}\]

satisfies \(\mathbb {E}[{\hat{\mu }}]=\mu \). If the samples are, in addition, mutually independent, its variance is

\[\text {Var}[\hat{\mu }]=\frac{V}{{T}}.\]

Assume instead that \(x^{(1)},\ldots ,x^{({T})}\) are not independently and identically distributed (i.i.d.) but have a stationary autocorrelation

\[\rho _k=\frac{\text {Cov}[x^{({t})},x^{({t}+k)}]}{V}\quad \text {for all }{t};\]

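then the variance becomes

\[\text {Var}[\hat{\mu }]=\frac{V}{{T}}\left( 1+2\sum _{k=1}^{{T}-1}\left( 1-\frac{k}{{T}}\right) \rho _k\right) .\]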

Considering these \(x^{({t})}\) as the samples in MC integration, this suggests that the variance of the estimate decreases as the number of samples T increases. It can be reduced further when the autocorrelations \(\rho _k\) are small or, especially, negative.
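The effect of the autocorrelations on the variance of \(\hat{\mu }\) can be checked numerically. The sketch below evaluates the variance formula for a stationary sequence. For illustration, it assumes a geometric autocorrelation \(\rho _k=a^k\), as for an AR(1) process. `var_sample_mean` is a hypothetical helper.

```python
import numpy as np

def var_sample_mean(V, rho, T):
    """Variance of the mean of T stationary samples with marginal variance V.

    rho[k-1] is the lag-k autocorrelation rho_k for k = 1, ..., T-1.
    Implements V/T * (1 + 2 * sum_k (1 - k/T) * rho_k).
    """
    k = np.arange(1, T)
    return V / T * (1.0 + 2.0 * np.sum((1.0 - k / T) * rho[: T - 1]))

T, V = 100, 1.0
lags = np.arange(1, T)
for a in (0.5, 0.0, -0.5):
    # Positive a inflates the variance; negative a reduces it below V/T.
    print(a, var_sample_mean(V, a ** lags, T))
```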

Consider the distribution \(p_\pi \) of a binary random variable x with parameter \(\pi \). It represents a tossup that returns 1 with probability \(\pi \) and 0 with probability \(1-\pi \). This is the well-known Bernoulli distribution with parameter \(\pi \). Instead of generating a sequence of i.i.d. samples from \(p_\pi \), we consider generating a sequence by the deterministic process presented in Algorithm 1 and Fig. 1. According to the update rule (line 3) of the algorithm, \(w^{({t})}\) decreases after the step if \(x^{({t})}=1\) and increases if \(x^{({t})}=0\). Therefore, given the output rule in line 2, we expect this sequence to have negative autocorrelation.

Although the above argument concerns only \(\rho _1\), the sample mean \(\hat{\mu }\) in fact converges very quickly, as \(O(1/{T})\). Let \(C=[-1+\pi ,\pi )\) and assume that the initial value \(w^{(0)}\) is taken from C. Then one can easily check that \(w^{({t})}\in C\) holds for all \({t}\). For example, changing the variable to \({w'}^{({t})}=w^{({t})}+1-\pi \in [0,1)\) yields an equivalent system, namely a rotation of the circle:

\[{w'}^{({t})}=\left( {w'}^{({t}-1)}+\pi \right) \bmod 1.\]

Summing the update formula (line 3) over \({t}=1,\ldots ,{T}\), we obtain

\[w^{({T})}=w^{(0)}+{T}\pi -\sum _{{t}=1}^{{T}}x^{({t})}.\]

We then evaluate the difference between the sample mean and \(\pi \) as

\[\left| \frac{1}{{T}}\sum _{{t}=1}^{{T}}x^{({t})}-\pi \right| =\frac{\left| w^{(0)}-w^{({T})}\right| }{{T}}<\frac{1}{{T}},\]

which converges as \(O(1/{T})\) because \(w^{(0)},w^{({T})}\in C\) and C has length 1.
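Algorithm 1 itself is not reproduced here, so the following is only a minimal sketch of the deterministic tossup. It assumes an output rule consistent with the invariant set C: \(x^{({t})}=1\) if \(w^{({t}-1)}\ge 0\) and 0 otherwise, followed by \(w^{({t})}=w^{({t}-1)}+\pi -x^{({t})}\). The function name and the values of \(\pi \) and \(w^{(0)}\) are illustrative. The sketch checks that w stays in C and that the error of the sample mean is below 1/T.

```python
def deterministic_tossup(pi, T, w0=0.0):
    """Deterministic tossup (herding for a Bernoulli(pi) distribution).

    Assumed output rule: x = 1 if w >= 0, else 0.
    Update rule: w <- w + pi - x.
    Returns the samples x^(1..T) and the weights w^(0..T).
    """
    w = w0
    xs, ws = [], [w]
    for _ in range(T):
        x = 1 if w >= 0 else 0   # output rule
        w = w + pi - x           # update rule
        xs.append(x)
        ws.append(w)
    return xs, ws

pi, T = 0.3, 1000
xs, ws = deterministic_tossup(pi, T, w0=0.05)
print(sum(xs) / T)                          # sample mean, close to pi
print(all(pi - 1 <= w < pi for w in ws))    # True: w stays in C = [pi - 1, pi)
print(abs(sum(xs) / T - pi) < 1 / T)        # True: error below 1/T
```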

Fig. 1 An example trajectory of the deterministic tossup (herding for the Bernoulli distribution). In the left panel, the off-diagonal dashed lines represent the maps used to update \(w^{({t})}\), and the points on the diagonal dashed line indicate \(w^{({t})}\) for each \({t}\). Circles represent \(x^{({t}+1)}=1\) and crosses represent \(x^{({t}+1)}=0\). The gray area represents the condition on \(w^{({t})}\) for outputting \(x^{({t}+1)}=1\). The right panel shows the trajectory as a function of \({t}\).

1.2 Herding

We now generalize the deterministic tossup above to introduce herding. Let \(\mathcal {X}\) be the sample space and \(\varphi _m:\mathcal {X}\rightarrow \mathbb {R}\) for \(m\in \mathcal {M}\) be feature functions defined on it, where \(\mathcal {M}\) is the set of their indices. Suppose that we have parameters \(\mu _m\in \mathbb {R}\) that specify the target moment values of \(\varphi _m\) over the output samples. If a dataset is available, the empirical moment values can be used as these parameters. We can also consider situations in which only aggregated observations are available. This occurs, e.g., for privacy reasons or because the subject is microscopic and individual observation is impossible. The problem considered here is to reconstruct a distribution such that the expected value of each feature \(\mathbb {E}_{\pi }[\varphi _m(x)]\) equals the given moment \(\mu _m\). Herding, proposed by Welling [2], is an algorithm that generates a sequence of samples \(x^{(1)}, x^{(2)}, \ldots \) that are diverse while satisfying the moment condition.

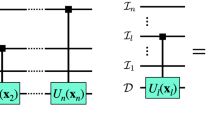

For ease of notation, we denote by \({\boldsymbol{\varphi }}(x)\) and \({\boldsymbol{\mu }}\) the vectors of features and moments, respectively. We denote by \(\langle \cdot ,\cdot \rangle \) the inner product of vectors and by \(\Vert \boldsymbol{x}\Vert \equiv \sqrt{\langle \boldsymbol{x},\boldsymbol{x}\rangle }\) the Euclidean norm. The overall herding algorithm is presented in Algorithm 2, and we refer to \({\boldsymbol{w}}^{({t})}\) in the algorithm as the weight vector. As discussed below, herding is an extension of the deterministic tossup above in which the sample mean of \({\boldsymbol{\varphi }}\) converges to \({\boldsymbol{\mu }}\). The algorithm can also be viewed as a nonlinear dynamical system with discrete time \({t}\). As shown in Algorithm 2, the system includes an optimization problem as a component. If the optimization problem has a unique solution, the update of the weight vector is deterministic. Nevertheless, the system can behave in a random-like or chaotic manner. This random-like behavior generates a pseudo-random sequence of samples that satisfies the moment conditions. Thus, the generated sequence, which reflects the given information, can be used as samples drawn from the background distribution. We discuss the importance of the behavior of the weight vector later.
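A minimal sketch of the herding loop for a finite sample space follows. It assumes the optimization step \(x^{({t})}=\mathop {\text {arg}\,\text {max}}_x\langle {\boldsymbol{w}}^{({t}-1)},{\boldsymbol{\varphi }}(x)\rangle \), solved here by enumeration. This step is followed by the weight update \({\boldsymbol{w}}^{({t})}={\boldsymbol{w}}^{({t}-1)}+{\boldsymbol{\mu }}-{\boldsymbol{\varphi }}(x^{({t})})\) discussed in Sect. 1.3. The feature matrix `Phi` (one row per state), zero initial weights, tie-breaking by the first maximizer, and the function name are illustrative assumptions rather than details of Algorithm 2.

```python
import numpy as np

def herding(Phi, mu, T, w0=None):
    """Herding on a finite sample space {0, ..., K-1}.

    Phi: array of shape (K, M); row x holds the feature vector phi(x).
    mu:  array of shape (M,); target moments.
    Returns the sample indices x^(1..T) and the final weight vector w^(T).
    """
    Phi = np.asarray(Phi, dtype=float)
    mu = np.asarray(mu, dtype=float)
    w = np.zeros_like(mu) if w0 is None else np.array(w0, dtype=float)
    xs = np.empty(T, dtype=int)
    for t in range(T):
        x = int(np.argmax(Phi @ w))   # optimization step by enumeration (first maximizer on ties)
        w += mu - Phi[x]              # weight update
        xs[t] = x
    return xs, w

# Example: one-hot features on three states, so the moments are the state probabilities.
Phi = np.eye(3)
mu = np.array([0.2, 0.5, 0.3])
xs, w = herding(Phi, mu, T=1000)
freq = np.bincount(xs, minlength=3) / len(xs)
print(freq, np.abs(freq - mu).max())
```

With one-hot features, the empirical frequencies differ from \({\boldsymbol{\mu }}\) by \(({\boldsymbol{w}}^{(0)}-{\boldsymbol{w}}^{({T})})/{T}\). Because the weights stay bounded, this difference shrinks as O(1/T).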

There are many studies related to herding. For example, it has been extended to include hidden variables through Markov random fields and applied to data compression by learning the rate coefficients of the herding dynamical system [4]. It has also been combined with the kernel trick to sample from continuous distributions [5]. These extensions are not discussed in detail here.

1.3 Convergence of Herding

Let us consider the herding algorithm for the Bernoulli distribution. In this situation, the sample space is \(\mathcal {X}=\{0, 1\}\) and the features are \({\boldsymbol{\varphi }}(x)=(\varphi _0(x), \varphi _1(x))^{\top }=(1-x, x)^{\top }\), whose moments are \({\boldsymbol{\mu }}=(1-\pi , \pi )^{\top }\). The herding algorithm in this situation is equivalent to

\[x^{({t})}=\mathop {{\text {arg}}\,{\text {max}}}_{x\in \{0,1\}}f^{({t}-1)}(x),\qquad {\boldsymbol{w}}^{({t})}={\boldsymbol{w}}^{({t}-1)}+{\boldsymbol{\mu }}-{\boldsymbol{\varphi }}(x^{({t})}),\]

where \(f^{({t}-1)}(x)\equiv \langle {\boldsymbol{w}}^{({t}-1)}, {\boldsymbol{\varphi }}(x) \rangle =w^{({t}-1)}_0+(w^{({t}-1)}_1-w^{({t}-1)}_0)x\). This update rule corresponds to the deterministic tossup (Algorithm 1) under the relation \((w_0^{({t})}, w_1^{({t})})=(-w^{({t})}, w^{({t})})\). Because the update preserves \(w_0^{({t})}+w_1^{({t})}\), the two algorithms become equivalent if the initial value satisfies \(w_0^{(0)}=-w_1^{(0)}\).
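The equivalence can be checked numerically with a self-contained sketch. It assumes that the tossup outputs x = 1 if and only if w ≥ 0, and the herding version breaks ties in favor of x = 1. The initial weights \((w_0, w_1)=(-w^{(0)}, w^{(0)})\) with \(w^{(0)}=0.05\) and \(\pi =0.3\) are chosen only for illustration. This choice also keeps w away from the tie at 0, so floating-point rounding cannot flip a decision.

```python
import numpy as np

def tossup(pi, T, w):
    """Deterministic tossup: x = 1 if w >= 0 else 0; then w <- w + pi - x."""
    xs = []
    for _ in range(T):
        x = 1 if w >= 0 else 0
        w += pi - x
        xs.append(x)
    return xs

def bernoulli_herding(pi, T, w_vec):
    """Herding with features phi(x) = (1 - x, x) and moments mu = (1 - pi, pi)."""
    mu = np.array([1.0 - pi, pi])
    w = np.array(w_vec, dtype=float)
    xs = []
    for _ in range(T):
        f = [w @ np.array([1.0 - x, float(x)]) for x in (0, 1)]  # f(x) = <w, phi(x)>
        x = 1 if f[1] >= f[0] else 0                               # ties go to x = 1
        w += mu - np.array([1.0 - x, float(x)])                    # herding weight update
        xs.append(x)
    return xs

pi, T, w_init = 0.3, 500, 0.05
same = tossup(pi, T, w_init) == bernoulli_herding(pi, T, [-w_init, w_init])
print(same)   # True: the two sample sequences coincide
```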

Conversely, using this relation, we can analyze the general case in the same way as the deterministic tossup. Suppose that at step \({t}\), \(x^{({t})}\) is chosen such that \(\varphi _m(x^{({t})})\) is small, i.e., below its target value: \(\varphi _m(x^{({t})})< \mu _m\). In this case, the corresponding weight \(w_m^{({t})}\) increases as

\[w_m^{({t})}=w_m^{({t}-1)}+\mu _m-\varphi _m(x^{({t})}),\tag{14}\]

which corresponds to line 3 of Algorithm 2. Therefore, \(\varphi _m\) becomes more important in the optimization step and \(\varphi _m(x^{({t}+1)})\) is expected to be larger in the next step. Similarly, \(\varphi _m(x^{({t}+1)})\) is expected to be smaller when \(\varphi _m(x^{({t})})\) is large. In other words, the sequence \(\varphi _m(x^{({t})})\) is expected to have negative autocorrelation, which makes its average converge to \(\mu _m\) faster.

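In addition, we obtain a convergence result for herding similar to that of the deterministic tossup. In particular, the average of the features over the generated sequence converges as follows:

\[\frac{1}{{T}}\sum _{{t}=1}^{{T}}{\boldsymbol{\varphi }}(x^{({t})})\rightarrow {\boldsymbol{\mu }}\quad ({T}\rightarrow \infty ),\]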

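where the convergence rate is \(O(1/{T})\). This convergence follows from the boundedness of \({\boldsymbol{w}}^{({T})}\) and the equation

\[{\boldsymbol{\mu }}-\frac{1}{{T}}\sum _{{t}=1}^{{T}}{\boldsymbol{\varphi }}(x^{({t})})=\frac{{\boldsymbol{w}}^{({T})}-{\boldsymbol{w}}^{(0)}}{{T}}.\]

This equation is obtained by summing both sides of (14) over \({t}=1,\ldots ,{T}\) and dividing by \({T}\).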

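Using the optimality of the solution to the optimization problem

\[x^{({t})}=\mathop {{\text {arg}}\,{\text {max}}}_{x\in \mathcal {X}}\langle {\boldsymbol{w}}^{({t}-1)},{\boldsymbol{\varphi }}(x)\rangle ,\tag{17}\]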

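we can guarantee the boundedness of \({\boldsymbol{w}}^{({T})}\), as in the following summarized version of the proof in [2]. Let \(R^{({t})}=\Vert {\boldsymbol{w}}^{({t})}\Vert \). The change in R is calculated as follows:

\[\left( R^{({t})}\right) ^2=\left( R^{({t}-1)}\right) ^2-2\langle {\boldsymbol{w}}^{({t}-1)},{\boldsymbol{\varphi }}(x^{({t})})-{\boldsymbol{\mu }}\rangle +\Vert {\boldsymbol{\varphi }}(x^{({t})})-{\boldsymbol{\mu }}\Vert ^2.\tag{18}\]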

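Recall that \(x^{({t})}\) is the solution of the optimization problem in (17). We define the following quantity:

\[A\equiv \min _{\tilde{{\boldsymbol{w}}}:\Vert \tilde{{\boldsymbol{w}}}\Vert =1}\langle \tilde{{\boldsymbol{w}}},{\boldsymbol{\varphi }}(\hat{x})-{\boldsymbol{\mu }}\rangle ,\]

where \(\hat{x}\) is defined as \(\hat{x}= \displaystyle \mathop {{\text {arg}}\,{\text {max}}}_x \langle \tilde{{\boldsymbol{w}}},{\boldsymbol{\varphi }}(x)\rangle \). From the optimality of \(\hat{x}\), we obtain \(A>0\) under a mild assumption on \({\boldsymbol{\mu }}\). Taking \(\tilde{{\boldsymbol{w}}}={\boldsymbol{w}}^{({t}-1)}/R^{({t}-1)}\), for which \(\hat{x}=x^{({t})}\), gives the bound \(\langle {\boldsymbol{w}}^{({t}-1)},{\boldsymbol{\varphi }}(x^{({t})})-{\boldsymbol{\mu }}\rangle \ge AR^{({t}-1)}\). This controls the second term in (18). We also assume that the third term in (18) is bounded; that is, \(\Vert {\boldsymbol{\varphi }}(x^{({t})})-{\boldsymbol{\mu }}\Vert ^2\le B\) holds for some \(B\in \mathbb {R}\). This holds if the functions \({\boldsymbol{\varphi }}\) are bounded. Then, we obtain

\[\left( R^{({t})}\right) ^2\le \left( R^{({t}-1)}\right) ^2-2AR^{({t}-1)}+B.\]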

In other words, if \(R^{({t}-1)}>R\) holds for \(R\equiv B/(2A)\), then \(R^{({t})}\) decreases: \(R^{({t})}< R^{({t}-1)}\). On the other hand, if \(R^{({t}-1)}\le R\), then \(\left( R^{({t})}\right) ^2\le R^2+B\), because the term \(-2AR^{({t}-1)}\) is nonpositive. Thus, by induction, \(R^{({t})}\le R'\) holds for all \({t}\) with \(R'\equiv \max \left( R^{(0)},\sqrt{R^2+B}\right) \).

2 Herded Gibbs: Model-Based Herding on Spin System

Herding typically requires the feature moments \({\boldsymbol{\mu }}\) as the inputs. However, in sampling applications, the probability model \(\pi \) is often available instead. In this section, we describe herded Gibbs (HG), an extension of herding that can be applied to such model-based situations.

2.1 Markov Chain Monte Carlo Method

Sampling independently from a general probability distribution \(\pi \) is generally difficult; thus, the Markov chain Monte Carlo (MCMC) method is often used. In MCMC, the state variable \(x^{({t})}\) is repeatedly updated to the next state \(x^{({t}+1)}\), and this transition is represented as a Markov chain. By appropriately designing the transition, the probability distribution of \(x^{({t})}\) can be made to converge to \(\pi \). In addition, two samples \(x^{({t})}\) and \(x^{({t}+T)}\) become nearly independent for sufficiently large T; the time difference T required for this is called the mixing time. If the transitions are designed such that the mixing time is short, MCMC becomes more effective for MC integration.

MCMC transitions are often designed to be local, i.e., the state \(x^{({t})}\) moves only in its neighborhood at each step. In such a case, the mixing time strongly depends on the energy landscape of probability distribution \(\pi \) to be sampled. The energy landscape is represented as the graph of \(E(x)\), where the probability distribution is represented by the Gibbs-Boltzmann distribution, \(\pi (x)\propto \exp (-E(x))\). In particular, if there are large energy barriers in the phase space, the mixing time increases because it takes time for \(x^{({t})}\) to pass over them.

A commonly used method for designing MCMC is the Metropolis-Hastings method [6, 7], and it is a good example of a local transition. In the Metropolis-Hastings method, given the current state x, the state y is generated according to the proposal distribution \(q_x(y)\). Typically, it is designed so that y is in the neighborhood of x. The proposed state y is accepted as the next state with an acceptance probability \(\alpha \in [0, 1]\) and is rejected with probability \(1-\alpha \). If rejected, state x is kept as the next state. If the proposal distribution is symmetric, i.e., \(q_x(y)=q_y(x)\; \forall x,y\), and the acceptance probability is defined as \(\alpha =\min (1, \exp (-E(y))/\exp (-E(x)))\), the chain has \(\pi (x)\propto \exp (-E(x))\) as its stationary distribution. Transition probabilities are defined such that a transition to y with higher energy \(E(y)\) has a lower acceptance probability.
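A minimal sketch of the Metropolis method with a symmetric Gaussian random-walk proposal follows. For illustration, it assumes the energy \(E(x)=x^2/2\), so \(\pi \) is the standard normal distribution. The step size, seed, and function names are hypothetical choices.

```python
import numpy as np

def metropolis(energy, x0, n_steps, step=1.0, seed=0):
    """Random-walk Metropolis with the symmetric proposal q_x(y) = N(y; x, step^2)."""
    rng = np.random.default_rng(seed)
    x, e_x = x0, energy(x0)
    chain = np.empty(n_steps)
    for t in range(n_steps):
        y = x + step * rng.standard_normal()   # proposal in the neighborhood of x
        e_y = energy(y)
        # Accept with probability min(1, exp(-E(y)) / exp(-E(x))).
        if rng.random() < np.exp(min(0.0, e_x - e_y)):
            x, e_x = y, e_y
        chain[t] = x                           # on rejection, x is kept
    return chain

chain = metropolis(lambda x: 0.5 * x**2, x0=0.0, n_steps=100_000)
print(chain.mean(), chain.var())   # should approach 0 and 1
```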

Gibbs sampling [8] is another type of MCMC method. Let us denote by \(p(\cdot \mid \cdot )\) the conditional distribution for a distribution p. Suppose \(\pi \) is a probability distribution of N variables \(x_1,\ldots ,x_N\), and we can compute the conditional distribution \(\pi (x_i\mid {\boldsymbol{x}}_{-i})\) for each i, where \({\boldsymbol{x}}_{-i}\) is the vector of all variables except \(x_i\). Then, we can construct a Markov chain as presented in Algorithm 3.

In particular, in this chapter, we consider the following spin system. Let us consider N binary random variables \(x_1,\ldots ,x_N\in \{0, 1\}\). Suppose that the random variables \(\mathcal {V}=\{x_1,\ldots , x_N\}\) form a network \(G=(\mathcal {V},\mathcal {E})\), where \(\mathcal {E}\) denotes the set of edges. Suppose also that the joint distribution \(\pi \) is represented as

\[\pi ({\boldsymbol{x}})=\frac{1}{Z}\exp \left( \sum _{(i,j)\in \mathcal {E}}W_{ij}x_ix_j+\sum _{i=1}^{N}b_ix_i\right) ,\tag{21}\]

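where \(W_{ij}=W_{ji}\) are the coupling coefficients, \(b_i\) are the bias terms, and Z is the normalization factor that makes the probabilities sum to one:

\[Z=\sum _{{\boldsymbol{x}}\in \{0,1\}^N}\exp \left( \sum _{(i,j)\in \mathcal {E}}W_{ij}x_ix_j+\sum _{i=1}^{N}b_ix_i\right) .\]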

This probability model is widely known as the Boltzmann machine (BM), which is also discussed in Chap. 2.

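For simplicity, let us suppose that there is no bias term, such that

\[\pi ({\boldsymbol{x}})=\frac{1}{Z}\exp \left( \sum _{(i,j)\in \mathcal {E}}W_{ij}x_ix_j\right) .\]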

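Let \(\mathcal {N}(i)\) be the index set of neighboring variables of \(x_i\) on G and \({\boldsymbol{x}}_{\mathcal {N}(i)}\) be the vector of the corresponding variables. For the BM, the conditional distribution can be easily obtained as

\[\pi (x_i\mid {\boldsymbol{x}}_{-i})=\pi (x_i\mid {\boldsymbol{x}}_{\mathcal {N}(i)})=\frac{1}{Z_i}\exp \left( x_i\sum _{j\in \mathcal {N}(i)}W_{ij}x_j\right) ,\]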

where \(Z_i\) is also the normalization factor. Therefore, sampling from this distribution can be easily performed using Gibbs sampling.
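Gibbs sampling for the bias-free BM can be sketched as a systematic-scan instance of Algorithm 3. It uses \(\pi (x_i=1\mid {\boldsymbol{x}}_{\mathcal {N}(i)})=1/\left( 1+\exp (-\sum _{j\in \mathcal {N}(i)}W_{ij}x_j)\right) \). The coupling matrix W is an arbitrary illustrative choice on a complete graph of three spins. The function names are hypothetical. The estimated marginals are compared with exact enumeration.

```python
import itertools
import numpy as np

def exact_marginals(W):
    """P(x_i = 1) for the bias-free BM pi(x) ∝ exp(sum_{i<j} W_ij x_i x_j), by enumeration."""
    N = W.shape[0]
    states = np.array(list(itertools.product([0, 1], repeat=N)), dtype=float)
    log_w = 0.5 * np.einsum("si,ij,sj->s", states, W, states)  # W symmetric, zero diagonal
    p = np.exp(log_w - log_w.max())
    p /= p.sum()
    return p @ states

def gibbs_bm(W, n_sweeps, seed=0):
    """Systematic-scan Gibbs sampling; returns the sample mean of each spin."""
    rng = np.random.default_rng(seed)
    N = W.shape[0]
    x = rng.integers(0, 2, size=N).astype(float)
    total = np.zeros(N)
    for _ in range(n_sweeps):
        for i in range(N):
            p1 = 1.0 / (1.0 + np.exp(-W[i] @ x))   # pi(x_i = 1 | neighbors); W[i, i] = 0
            x[i] = float(rng.random() < p1)
        total += x
    return total / n_sweeps

W = np.array([[0.0, 1.0, -0.5],
              [1.0, 0.0, 0.8],
              [-0.5, 0.8, 0.0]])
est, exact = gibbs_bm(W, 20_000), exact_marginals(W)
print(est, exact)
```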

2.2 Herded Gibbs

Herding can be used as a deterministic sampling algorithm, but it cannot be applied directly to the BM because the input parameter \({\boldsymbol{\mu }}\) is typically unavailable. Combining Gibbs sampling with herding yields a deterministic sampling method for BMs called the herded Gibbs (HG) algorithm [9]. The structure of the algorithm is the same as that of Gibbs sampling, but the random update of each variable \(x_i\) is replaced by herding, as presented in Algorithm 4. The neighborhood \({\boldsymbol{x}}_{\mathcal {N}(i)}\) of \(x_i\) has \(2^{|\mathcal {N}(i)|}\) configurations, which we index by j. HG uses weight variables \(w_{i,j}\), one for each variable \(x_i\) and each configuration j of its neighborhood \({\boldsymbol{x}}_{\mathcal {N}(i)}\). We denote the corresponding conditional probability by \(\pi _{i,j}\equiv \pi (x_i=1\mid {\boldsymbol{x}}_{\mathcal {N}(i)} = j)\). It is the probability of \(x_i=1\) given that \({\boldsymbol{x}}_{\mathcal {N}(i)}\) takes the j-th configuration, and it can be computed directly from the model.
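A minimal sketch of HG for the bias-free BM follows, under stated assumptions. Each variable \(x_i\) keeps one weight per neighborhood configuration j, indexed here by reading the neighbor values as a binary number. The per-variable update is the deterministic tossup: \(x_i=1\) if \(w_{i,j}\ge 0\), then \(w_{i,j}\leftarrow w_{i,j}+\pi _{i,j}-x_i\). The scan order is fixed, and initial weights are drawn uniformly from \([\pi _{i,j}-1,\pi _{i,j})\). These details are illustrative and may differ from Algorithm 4 and [9]. On a small complete graph, where HG is reported to be consistent, the marginals can be compared with exact enumeration.

```python
import itertools
import numpy as np

def exact_marginals(W):
    """P(x_i = 1) for the bias-free BM pi(x) ∝ exp(sum_{i<j} W_ij x_i x_j), by enumeration."""
    states = np.array(list(itertools.product([0, 1], repeat=W.shape[0])), dtype=float)
    log_w = 0.5 * np.einsum("si,ij,sj->s", states, W, states)
    p = np.exp(log_w - log_w.max())
    return (p / p.sum()) @ states

def herded_gibbs(W, n_sweeps, seed=0):
    """Herded Gibbs with one weight per (variable, neighborhood configuration)."""
    rng = np.random.default_rng(seed)   # randomness is used only for initialization
    N = W.shape[0]
    nbrs = [np.flatnonzero(W[i]) for i in range(N)]
    cond, w = [], []
    for i in range(N):
        # pi_{i,j} for every neighborhood configuration j, listed in binary order.
        configs = np.array(list(itertools.product([0, 1], repeat=len(nbrs[i]))), dtype=float)
        c = 1.0 / (1.0 + np.exp(-configs @ W[i, nbrs[i]]))
        cond.append(c)
        w.append(c - 1.0 + rng.random(len(c)))   # w_{i,j} in [pi_{i,j} - 1, pi_{i,j})
    x = rng.integers(0, 2, size=N)
    total = np.zeros(N)
    for _ in range(n_sweeps):
        for i in range(N):
            j = int("".join(str(v) for v in x[nbrs[i]]) or "0", 2)  # configuration index
            x[i] = 1 if w[i][j] >= 0 else 0                         # tossup output rule
            w[i][j] += cond[i][j] - x[i]                            # herding weight update
        total += x
    return total / n_sweeps

W = np.array([[0.0, 1.0, -0.5],
              [1.0, 0.0, 0.8],
              [-0.5, 0.8, 0.0]])
est, exact = herded_gibbs(W, 20_000), exact_marginals(W)
print(est, exact)
```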

HG is not only a deterministic variant of Gibbs sampling but is reported to have better performance than Gibbs sampling [9]. For a function f of the spins \({\boldsymbol{x}}\) in the BM, let us consider estimating the expected value \(\mathbb {E}_{\pi }[f({\boldsymbol{x}})]\). Theoretically, for the BM on the complete graph, the error of the estimate by HG decreases at a rate of \(O(1/{T})\), whereas it decreases at a rate of \(O(1/\sqrt{{T}})\) for random sampling. Experimental results show that HG outperforms Gibbs sampling for image processing and natural language processing tasks.

When a BM is used as a probabilistic model, its parameters must be learned from data. The learning procedure is expressed as

\[W_{ij}\leftarrow W_{ij}+\gamma \left( \mathbb {E}_{{\text {data}}}[x_ix_j]-\mathbb {E}_{{\text {model}}}[x_ix_j]\right) ,\]

where \(\gamma >0\) is the learning rate and \(\mathbb {E}_{{\text {data}}}[\cdot ]\) is the average over the training data. \(\mathbb {E}_{{\text {model}}}[\cdot ]=\mathbb {E}_{\pi }[\cdot ]\) is the expected value under the model (21) with the current parameters \(W_{ij}\). The exact calculation of the third term is typically difficult because the number of terms grows exponentially with N, but MCMC can be used to estimate it [10,11,12]. HG can also be used in the learning process to provide this estimate. The variance reduction by HG can have a positive effect on learning, as demonstrated for BM learning on handwritten digit images [13].

2.3 Sharing Weight Variables

The original literature on HG [9] also introduces the idea of “weight sharing.” For a variable \(x_i\), let \(j,j'\) be indices of different configurations with equal conditional probabilities \(\pi _{i,j}=\pi _{i,j'}\). For example, this can occur when the coupling coefficients are restricted to \(W_{i,j}\in \{0,\pm c\}\) for \(c\in \mathbb {R}\); thus the conditional probability is determined only by counting neighboring variable values. In weight sharing, such configurations are classified into groups, and those in the same group, indexed by y, share a weight variable \(w_{i,y}\). We will also refer to these versions, which are based on general graphs and use weight sharing, as HG.

Eskelinen [14] proposed a variant of this algorithm called discretized herded Gibbs (DHG), presented in Algorithm 5. It partitions the unit interval [0, 1] of conditional probability values into B disjoint intervals, which we call bins; \(C_y\) denotes the y-th bin. At each update, the conditional probability \(\pi (x_i=1 \mid {\boldsymbol{x}}_{\mathcal {N}(i)})\) is approximated by a representative value \(\tilde{\pi }_{i,y} \in C_y\), where y is the index of the bin into which it falls. Thus, the weight variable used for the update is shared among the spin configurations with similar conditional probability values. Replacing the probability by its representative value introduces an error in the distribution of the output samples. There is therefore a trade-off between the computational complexity, which is proportional to B, and the magnitude of this error.
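The discretization step of DHG can be sketched with helpers that map a conditional probability to a bin index y and a representative value \(\tilde{\pi }_{i,y}\). B equal-width bins with their midpoints as representatives are assumptions made for illustration; Algorithm 5 may choose bins and representatives differently. In this sketch, the weight is indexed by (i, y) instead of (i, j), so all neighborhood configurations that fall into the same bin share one weight. The class `SharedWeights` is hypothetical.

```python
import numpy as np

def bin_index(p, B):
    """Index y of the equal-width bin C_y = [y/B, (y+1)/B) containing p; p = 1 goes to the last bin."""
    return min(int(p * B), B - 1)

def representative(y, B):
    """Representative value of bin C_y, taken here as its midpoint."""
    return (y + 0.5) / B

class SharedWeights:
    """Hypothetical container for DHG weights w_{i,y}, shared within each bin."""

    def __init__(self, n_vars, B):
        self.B = B
        self.w = np.zeros((n_vars, B))

    def draw(self, i, p):
        """Deterministic tossup on the shared weight of variable i for conditional probability p."""
        y = bin_index(p, self.B)
        pi_tilde = representative(y, self.B)
        x = 1 if self.w[i, y] >= 0 else 0   # tossup output rule
        self.w[i, y] += pi_tilde - x        # update uses the representative value
        return x

# Three different conditional probabilities that fall into the same bin share one weight.
shared = SharedWeights(n_vars=1, B=10)
xs = [shared.draw(0, p) for p in [0.31, 0.33, 0.36] * 100]
print(np.mean(xs))   # close to the representative value 0.35 of bin C_3
```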

These ideas were introduced to reduce computational complexity by reducing the number of weights, but they have also been reported to improve accuracy. Bornn et al. [9] reported improved performance in image restoration using the BM. Eskelinen [14] reported a reduced estimation error in the early iterations for the BM on a small complete graph.

2.4 Monte Carlo Integration Using HG

On complete graphs, HG has been shown to be consistent; the estimates converge to the true value associated with the target distribution \(\pi \). On general graphs, however, this is not always the case. In particular, the error decay for HG in the general case has two characteristics: faster convergence in the early iterations and convergence bias in the later iterations. These are explained by the “weight sharing” and the temporal correlation of the weights.

Specifically, the accuracy of the sample approximation of the function f with HG was evaluated as follows [13]: For HG with a target BM \(\pi \) (21), let P be the empirical distribution of the obtained samples. Let the error be defined as

The magnitude of D is evaluated as

This is obtained by decomposing the estimation error with respect to a single variable; the terms have the following meanings. Fix i, let \(x=x_i\), and let \({\boldsymbol{z}}={\boldsymbol{x}}_{-i}\) be all the variables except \(x_i\). Let y be the index of the weight used to generate \(x_i\) at each step (line 3 of Algorithm 4 or line 4 of Algorithm 5). \(D^{{\text {approx}}}\) is the error term resulting from replacing the conditional probability \(\pi (x=1\mid {\boldsymbol{z}})\) by the representative value \(\tilde{\pi }_{i,y}\), which occurs in DHG. \(D^{\text {z}}\) is the error in the distribution of \({\boldsymbol{z}}\), namely, the joint distribution of the variables other than \(x_i\). It is expected to depend largely on the mixing time of the original Gibbs sampling.

The other two terms, \(D^{{\text {cor}}}\) and \(D^{{\text {herding}}}\), are the most relevant to the herding dynamics. \(D^{{\text {herding}}}\) corresponds to the herding algorithm for the Bernoulli distribution, i.e., the deterministic tossup, and decays as \(O(1/{T})\). \(D^{{\text {cor}}}\) is a non-vanishing term. Thus, the error is characterized by fast decay in the early iterations, dominated by \(D^{{\text {herding}}}\), and stagnation in the later iterations, dominated by \(D^{{\text {cor}}}\). \(D^{{\text {cor}}}\) arises from the temporal correlation of the weight variables in HG and is represented by the following equation:

Let us consider the ideal case in which the weight index y and the conditional distribution \(\pi (x\mid {\boldsymbol{z}})\) are in one-to-one correspondence. For DHG, this means that there are sufficiently many, sufficiently small bins. Then, under the target distribution, x and \({\boldsymbol{z}}\) are conditionally independent given y: \(\pi (x,{\boldsymbol{z}}\mid y)=\pi (x\mid y)\pi ({\boldsymbol{z}}\mid y)\). This is because \(\pi (x\mid {\boldsymbol{z}})\) takes the same value for all \({\boldsymbol{z}}\) that share the same index y. For the output distribution, however, this independence does not hold even under this assumption. The internal state \(w_{i,y}\) determines the value of x and is also temporally correlated with \({\boldsymbol{z}}\). \(D^{{\text {cor}}}\) evaluates the correlation between the conditional distributions of x and \({\boldsymbol{z}}\).

This temporal correlation is mainly caused by the deterministic nature of the herding dynamics. To mitigate it, the algorithm can be modified by introducing some stochasticity into the transitions. This has been shown to solve the bias problem [13], but at the cost of degraded estimates for small \({T}\).

2.5 Rao-Blackwellization

Let us further discuss this analysis with a more concrete example. Let us fix i as before. Let \(f({\boldsymbol{x}})=x_i\) and consider the accuracy of estimating the expected value \(\mathbb {E}_{\pi }[f({\boldsymbol{x}})]=\mathbb {E}_{\pi }[x_i]\). The estimation error is bounded as

Under some assumptions, the first term corresponds to \(D^{{\text {herding}}}\) and the second term to \(D^{\text {z}}\). Therefore, the error initially decays as \(O(1/{T})\) through the first term, as in herding. For large \({T}\), it is eventually dominated by the second term.

These results were compared with those of a related estimator, constructed as follows. First, an i.i.d. sample sequence \({\boldsymbol{x}}^{({t})}\) is generated by random sampling. Then, the expected value of \(f({\boldsymbol{x}})=x_i\) is approximated by the sample mean of the conditional probability \(\pi (x=1 \mid {\boldsymbol{z}})\) over these samples. In this case, the approximation error is bounded above as

which is equal to the dominant term in the HG case. Substituting the conditional mean (or distribution) into an estimator is sometimes referred to as Rao-Blackwellization [15, 16]. The name comes from the Rao-Blackwell theorem, which guarantees that this substitution does not increase the estimation error. In other words, herding can be viewed as a sample-based estimation method that reduces error through an implicit form of Rao-Blackwellization.
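The variance reduction from Rao-Blackwellization can be illustrated with i.i.d. samples from a small bias-free BM, drawn exactly by enumerating all states. The sketch compares the plain sample mean of \(x_i\) with the sample mean of \(\pi (x_i=1\mid {\boldsymbol{z}})\). The couplings, sample sizes, number of repetitions, and function names are illustrative assumptions.

```python
import itertools
import numpy as np

# A small bias-free BM on three spins (illustrative couplings, zero diagonal).
W = np.array([[0.0, 1.5, -1.0],
              [1.5, 0.0, 1.0],
              [-1.0, 1.0, 0.0]])
states = np.array(list(itertools.product([0, 1], repeat=3)), dtype=float)
log_w = 0.5 * np.einsum("si,ij,sj->s", states, W, states)
probs = np.exp(log_w - log_w.max())
probs /= probs.sum()

def estimators(T, rng, i=0):
    """Plain and Rao-Blackwellized estimates of E[x_i] from T i.i.d. samples."""
    x = states[rng.choice(len(states), size=T, p=probs)]
    plain = x[:, i].mean()
    # pi(x_i = 1 | z) depends only on the other spins z, because W[i, i] = 0.
    cond = 1.0 / (1.0 + np.exp(-x @ W[i]))
    return plain, cond.mean()

rng = np.random.default_rng(0)
results = np.array([estimators(100, rng) for _ in range(2000)])
truth = probs @ states[:, 0]
print("true:", truth)
print("variance (plain, Rao-Blackwellized):", results.var(axis=0))
```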

However, HG is not equivalent to this; the evaluation of the decaying error simultaneously holds for any other \(x_j\) (\(j\ne i\)) without changing the algorithm. If the conditional probability \(\pi (x=1\mid {\boldsymbol{z}})\) is considered as a function of \({\boldsymbol{z}}\), this function itself can have an approximation similar to that of (28). That is, we can decompose this function as

and evaluate each term in the summation by (28) for \(f({\boldsymbol{x}})=x_j\). Then we find that the first term in (32) is dominant, which leads to the improvement of the error evaluation in HG.

This formula can also be used to improve estimation with i.i.d. random sampling. In that case, however, appropriate \(\beta _j\) must be estimated from the random samples obtained. For herding, by contrast, the evaluation holds for any \(\beta _j\), so \(\beta _j\) need not be selected. Extending this discussion may allow the estimation accuracy of herding to be analyzed in general; this is left for future study.

3 Entropic Herding: Regularizer for Dynamical Sampling

In the previous section, we discussed the characteristics of herding as an MC integration algorithm. In particular, the negative autocorrelation in the weight variables and samples is important for numerical integration. However, this does not cover all the characteristics of herding as a high-dimensional nonlinear dynamical system. This section discusses the connection between herding and the maximum entropy principle.

3.1 Maximum Entropy Principle

The maximum entropy principle [17] is a common approach to statistical inference. It states that, among the distributions consistent with the information at hand, the one with the greatest uncertainty should be used. As the name implies, entropy is typically used to measure uncertainty. Specifically, for a distribution with probability mass (or density) function p, the (differential) entropy is defined as

\[H(p)=-\sum _{x\in \mathcal {X}}p(x)\log p(x)\qquad \left( \text {or }H(p)=-\int _{\mathcal {X}}p(x)\log p(x)\,\mathrm {d}x\right) .\]

Furthermore, we assume that information is collected on features \(\varphi _m:\mathcal {X}\rightarrow \mathbb {R}\) indexed by \(m\in \mathcal {M}\), where \(\mathcal {X}\) is the sample space and \(\mathcal {M}\) is the set of feature indices. Assume further that the collected data are available as the mean \(\mu _m\) of each feature. According to the maximum entropy principle, the estimated distribution p should satisfy the condition that the expected value \(\mathbb {E}_{p}[\varphi _m(x)]\) of each feature equals the given value \(\mu _m\). Therefore, the maximum entropy principle can be explicitly expressed as the following optimization problem:

\[\max _{p}\;H(p)\quad \text {subject to}\quad \mathbb {E}_{p}[\varphi _m(x)]=\mu _m\quad \forall m\in \mathcal {M}.\]

The condition that the solution must satisfy is obtained as follows. For simplicity, let \(\mathcal {X}=\{x_1,\ldots ,x_N\}\) be a discrete set and, for each i, let \(p_i\) denote the probability of the i-th state \(x_i\). The gradient of \(H\) is

\[\frac{\partial H}{\partial p_i}=-\log p_i-1.\]

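Using the Lagrange multiplier method, including the normalization constraint \(\sum _ip_i=1\), we obtain the condition

\[p_i=\frac{1}{Z}\exp \left( -\sum _{m\in \mathcal {M}}\theta _m\varphi _m(x_i)\right) ,\qquad Z=\sum _{i=1}^{N}\exp \left( -\sum _{m\in \mathcal {M}}\theta _m\varphi _m(x_i)\right) ,\tag{36}\]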

where \(\theta _m\) corresponds to the Lagrange multiplier for the moment condition \(\mathbb {E}_{p}[\varphi _m({\boldsymbol{x}})]=\mu _m\). For continuous \(\mathcal {X}\), we can make a similar argument using the functional derivative.

In general, a family of distributions of the form (36) with unconstrained parameters \(\theta _m\) is called an exponential family, and a distribution of this form is also called a Gibbs-Boltzmann distribution. Optimizing the parameters \(\theta _m\) requires a learning algorithm similar to that of the Boltzmann machine (21), which is generally computationally difficult.
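As a small worked example of fitting \(\theta \) in the form (36), consider a single feature \(\varphi (x)=x\) on \(\mathcal {X}=\{1,\ldots ,6\}\) with target mean 4.5; the numbers are only illustrative. With one feature, the mean \(\mathbb {E}_p[x]\) decreases monotonically in \(\theta \), since its derivative is \(-\text {Var}_p[x]\). Bisection therefore suffices. The multivariate case requires the harder learning procedure mentioned above. The function names are hypothetical.

```python
import numpy as np

def maxent_probs(theta, values):
    """Gibbs-Boltzmann form p(x) ∝ exp(-theta * phi(x)) with phi(x) = x."""
    a = -theta * values
    p = np.exp(a - a.max())   # subtract the maximum for numerical stability
    return p / p.sum()

def fit_theta(values, mu, lo=-50.0, hi=50.0, iters=200):
    """Find theta such that E_p[x] = mu by bisection (E_p[x] decreases in theta)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if maxent_probs(mid, values) @ values > mu:
            lo = mid          # mean too large: increase theta
        else:
            hi = mid          # mean too small: decrease theta
    return 0.5 * (lo + hi)

values = np.arange(1, 7, dtype=float)
theta = fit_theta(values, 4.5)
p = maxent_probs(theta, values)
print(theta, p, p @ values)
```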

For example, if \(\mathcal {X}=\mathbb {R}\) and \((\varphi _1(x),\varphi _2(x))=(x,x^2)\), the maximum entropy principle gives the normal distribution

\[p(x)=\frac{1}{\sqrt{2\pi }\,\sigma }\exp \left( -\frac{(x-m)^2}{2\sigma ^2}\right) ,\]

where \((m, \sigma )=\left( -\theta _1/(2\theta _2), \sqrt{1/(2\theta _2)}\right) \), which requires \(\theta _2>0\).
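The correspondence between \((\theta _1,\theta _2)\) and \((m,\sigma )\) can be checked numerically. The sketch normalizes \(\exp (-\theta _1x-\theta _2x^2)\) on a fine grid and compares its mean and standard deviation with the closed-form expressions. The grid and the parameter values are arbitrary assumptions.

```python
import numpy as np

def normal_params(theta1, theta2):
    """(m, sigma) of p(x) ∝ exp(-theta1 x - theta2 x^2), valid for theta2 > 0."""
    return -theta1 / (2 * theta2), np.sqrt(1 / (2 * theta2))

theta1, theta2 = -1.2, 0.8
x = np.linspace(-20, 20, 200_001)
dx = x[1] - x[0]
density = np.exp(-theta1 * x - theta2 * x**2)
density /= density.sum() * dx                    # Riemann-sum normalization
mean = (x * density).sum() * dx
std = np.sqrt(((x - mean) ** 2 * density).sum() * dx)
m, sigma = normal_params(theta1, theta2)
print((mean, std), (m, sigma))
```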

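If we use \(\mathcal {X}=\{0, 1\}^N\) and \(\varphi _m({\boldsymbol{x}})=x_ix_j\) for all pairs \(i< j\), where \(m=(i,j)\), we obtain

\[p({\boldsymbol{x}})=\frac{1}{Z}\exp \left( -\sum _{i<j}\theta _{(i,j)}x_ix_j\right) ,\]

which is identical to the Boltzmann machine (21) without bias on the fully connected graph, with \(W_{ij}=-\theta _{(i,j)}\).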

The output sequence of the herding algorithm also satisfies the moment condition \(\mathbb {E}_{p}[\varphi _m(x)] = \mu _m\; \forall m\in \mathcal {M}\) in the limit \(T\rightarrow +\infty \), where p is the empirical distribution of the output points, and the points are expected to be diverse owing to the complexity of the herding dynamics. Therefore, we can expect the output sequence to follow the maximum entropy principle, at least partially. The original literature on herding [2, 3] also motivates the algorithm in the context of the maximum entropy principle.
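The moment-matching property can be checked on the pairwise features of the Boltzmann-machine example. The Python sketch below uses the standard herding update of [2] (choose \(x_t=\arg\max_x\langle\boldsymbol{w},\boldsymbol{\varphi}(x)\rangle\), then add \(\boldsymbol{\mu}-\boldsymbol{\varphi}(x_t)\) to the weights) with brute-force maximization over \(\{0,1\}^3\). The target distribution `q`, the initialization \(\boldsymbol{w}_0=\boldsymbol{\mu}\), and the helper names are illustrative assumptions. Since \(\boldsymbol{w}_T=\boldsymbol{w}_0+T\boldsymbol{\mu}-\sum_t\boldsymbol{\varphi}(x_t)\), the moment error equals \((\boldsymbol{w}_0-\boldsymbol{w}_T)/T\) exactly, so it decays as \(O(1/T)\) whenever the weights stay bounded.

```python
import itertools
import numpy as np


def pairwise_features(n):
    """All states of {0,1}^n and their features phi_m(x) = x_i * x_j, m = (i, j), i < j."""
    states = np.array(list(itertools.product([0, 1], repeat=n)), dtype=float)
    pairs = list(itertools.combinations(range(n), 2))
    i_idx = [i for i, _ in pairs]
    j_idx = [j for _, j in pairs]
    return states, states[:, i_idx] * states[:, j_idx]


def herding(phi, mu, T):
    """Deterministic herding on a finite space; returns visited state indices and weights."""
    w0 = mu.copy()                       # illustrative initialization w_0 = mu
    w = w0.copy()
    visited = np.empty(T, dtype=int)
    for t in range(T):
        k = int(np.argmax(phi @ w))      # optimization step (brute force over all states)
        visited[t] = k
        w += mu - phi[k]                 # weight update
    return visited, w0, w


states, phi = pairwise_features(3)
q = np.array([0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.65])  # full support
mu = q @ phi                             # target pairwise moments (0.7, 0.7, 0.7)
T = 5000
visited, w0, wT = herding(phi, mu, T)
sample_mean = phi[visited].mean(axis=0)
print(sample_mean - mu, np.linalg.norm(wT))
```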

3.2 Entropic Herding

As we have seen, herding is closely related to the maximum entropy principle. Entropic herding, which is described in this section, is an algorithm that incorporates this principle more explicitly.

Let us consider the same features \(\varphi _m\) and target means \(\mu _m\) as above. Pseudocode for entropic herding is provided in Algorithm 6. Here, we introduce scale parameters \(\Lambda _m\ge 0\) for each condition \(m\in \mathcal {M}\), which are used to control the penalty for the condition \(\mathbb {E}_{p}[\varphi _m]=\mu _m\) as described below. In addition, we introduce step-size parameters \(\varepsilon ^{({t})}\ge 0\) to the algorithm.

Like the original herding algorithm, entropic herding is an iterative process that maintains a time-varying weight \(a_m\) for each feature. Each iteration of the algorithm, indexed by \({t}\), consists of two steps: the first step solves an optimization problem, and the second step updates the weights based on the solution. Unlike the original herding algorithm, entropic herding outputs a sequence of distributions \(r^{(1)}, r^{(2)}, \ldots \) instead of points. The two steps of each iteration, which are derived in Sect. 3.3, are as follows:

where \(\mathcal {Q}\) is the (sometimes parameterized) family of distributions and \(\eta _m(q)\equiv \mathbb {E}_{q}[\varphi _m(x)]\) is the feature mean for the distribution q.

Obtaining the exact solution to the optimization problem (39) is often computationally intractable. We can restrict the candidate distributions \(\mathcal {Q}\) or allow suboptimal solutions of (39) to reduce the computational cost.
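Because the two steps are not reproduced here, the following Python sketch rests on assumed forms of them, chosen to agree with the discussion in Sect. 3.3 rather than copied from Algorithm 6: the optimization step \(r^{(t)}=\arg\min_{q\in\mathcal Q}\{\sum_m a_m^{(t-1)}\eta_m(q)-H(q)\}\) and the weight update \(a_m^{(t)}=(1-\varepsilon^{(t)})a_m^{(t-1)}+\varepsilon^{(t)}\Lambda_m(\eta_m(r^{(t)})-\mu_m)\) with \(\varepsilon^{(t)}=1/t\). Taking \(\mathcal Q\) to be all distributions on a small finite space makes the first step exact, since its minimizer is \(r^{(t)}\propto\exp(-\sum_m a_m^{(t-1)}\varphi_m)\). The names `entropic_step` and `entropic_herding` and the target moments are hypothetical.

```python
import itertools
import numpy as np


def entropic_step(phi, a):
    """Assumed optimization step with Q = all distributions on a finite space.

    The minimizer of sum_m a_m E_q[phi_m] - H(q) is q ∝ exp(-sum_m a_m phi_m).
    """
    s = -phi @ a
    w = np.exp(s - s.max())
    return w / w.sum()


def entropy(p):
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())


def entropic_herding(phi, mu, lam, T):
    """Hypothetical sketch; returns the equal-weight mixture of r^(1..T) and r^(T)."""
    a = np.zeros_like(mu)                  # weights a_m
    mixture = np.zeros(phi.shape[0])
    for t in range(1, T + 1):
        r = entropic_step(phi, a)          # step 1: optimization with a^(t-1)
        eps = 1.0 / t                      # assumed step size epsilon^(t)
        a = (1 - eps) * a + eps * lam * (r @ phi - mu)   # step 2: assumed weight update
        mixture = (1 - eps) * mixture + eps * r          # running equal-weight mixture
    return mixture, r


# Pairwise features x_i * x_j on {0, 1}^3, as in the Boltzmann-machine example.
states = np.array(list(itertools.product([0, 1], repeat=3)), dtype=float)
pairs = list(itertools.combinations(range(3), 2))
phi = np.stack([states[:, i] * states[:, j] for i, j in pairs], axis=1)
mu = np.array([0.05] * 7 + [0.65]) @ phi   # target moments (0.7, 0.7, 0.7)

for lam in (1.0, 50.0):                    # Lambda_m = lam for every m
    P, r_last = entropic_herding(phi, mu, lam, T=5000)
    print(lam, np.abs(P @ phi - mu).max(), entropy(r_last))
```

In this sketch, the larger scale parameter gives a smaller moment error but lower-entropy components, which mirrors the trade-off in the choice of \(\Lambda_m\) discussed in Sect. 3.3.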

3.3 Dynamical Entropic Maximization in Herding

Entropic herding is derived from the minimization problem \(\min _p \mathcal {L}(p)\) with the following objective function:

The optimal solution of (41) is expressed with parameter \({{\boldsymbol{\theta }}^*}\) as

This has the same form as the distribution obtained using the maximum entropy principle (36). However, the parameter \({{\boldsymbol{\theta }}^*}\) generally does not coincide with the parameter \({\boldsymbol{\theta }}\) of the maximum entropy solution (36); instead, it satisfies the following equation:

Roughly speaking, this implies that the moment error becomes smaller as \(\Lambda _m\) becomes larger.

Entropic herding can be interpreted as an approximate optimization algorithm for this problem over a restricted search space. Suppose that it considers only distributions P of the following form:

where \(\rho _{t}\) are the fixed component weights and each component \(r^{({t})}\) is determined sequentially using the results of the previous steps \(r^{(0)},\ldots ,r^{({t}-1)}\).

Let \(\tilde{\mathcal {L}}\) be the function obtained by replacing the entropy term \(H(p)\) of \(\mathcal {L}\) with the weighted average of the entropies of each component \(\tilde{H}\equiv \sum _{{t}=0}^{{T}}\rho _{t}H(r^{({t})})\):

Since \(H\ge \tilde{H}\) holds owing to the concavity of the entropy, \(\tilde{\mathcal {L}}\) is an upper bound on \(\mathcal {L}\). Equation (39) is obtained by minimizing \(\tilde{\mathcal {L}}(P^{({t})})\) with respect to \(r^{({t})}\) while keeping the components \(r^{(0)},\ldots ,r^{({t}-1)}\) fixed, where \(P^{({t})}\propto \sum _{{t'}=0}^{{t}} \rho _{t'}r^{({t'})}\) is the tentative solution. The optimality condition is equivalent to (39), where the parameters are represented using the tentative solution \(P^{({t})}\) as

If \(\rho _{t}\) is chosen appropriately, the update formula (40) can be derived from (46) and a recursive relation between the tentative solutions \(P^{({t}-1)}\) and \(P^{({t})}\) at consecutive steps.
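As an illustration of the kind of recursion meant here, suppose (hypothetically) that (46) reads \(a_m^{(t)}=\Lambda_m(\eta_m(P^{(t)})-\mu_m)\). Normalizing \(P^{(t)}\propto\sum_{t'=0}^{t}\rho_{t'}r^{(t')}\) gives \(P^{(t)}=(1-\varepsilon^{(t)})P^{(t-1)}+\varepsilon^{(t)}r^{(t)}\) with \(\varepsilon^{(t)}=\rho_t/\sum_{t'=0}^{t}\rho_{t'}\), and because \(\eta_m\) is linear in the distribution, a weight update follows in one line:

```latex
\[
a_m^{(t)}
= \Lambda_m\Bigl((1-\varepsilon^{(t)})\,\eta_m(P^{(t-1)})+\varepsilon^{(t)}\eta_m(r^{(t)})-\mu_m\Bigr)
= (1-\varepsilon^{(t)})\,a_m^{(t-1)}+\varepsilon^{(t)}\Lambda_m\bigl(\eta_m(r^{(t)})-\mu_m\bigr).
\]
```

For example, equal weights \(\rho_t\) give \(\varepsilon^{(t)}=1/(t+1)\) when the components are indexed from \(t=0\).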

We expect that this formalization of entropic herding will support theoretical studies of the herding algorithm. The objective (41) contains the entropy term \(H(p)\), which should be made large. Entropic herding increases it through the following two mechanisms:

(a) Explicit optimization: greedy minimization of \(\tilde{\mathcal {L}}\), an upper bound of \(\mathcal {L}\), by solving (39), which includes the entropy term.

(b) Implicit diversification: an additional reduction of \(\mathcal {L}\) owing to the diversity of \(r^{({t})}\), which is caused by the complicated joint dynamics of the optimization steps and the weight update steps.
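The inequality \(H\ge\tilde H\) behind mechanism (a), and the extra entropy that diverse components can contribute beyond it, can be checked numerically. The Python sketch below draws random component distributions and weights (arbitrary test data) and verifies both the concavity bound \(H(\sum_t\rho_t r^{(t)})\ge\sum_t\rho_t H(r^{(t)})\) and the standard companion bound \(H(\sum_t\rho_t r^{(t)})\le\sum_t\rho_t H(r^{(t)})+H(\rho)\). Identical components close the gap entirely, so any entropy gained beyond \(\tilde H\) must come from the diversity of the components.

```python
import numpy as np


def entropy(p):
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())


rng = np.random.default_rng(0)
n_states, n_components = 10, 5
r = rng.dirichlet(np.ones(n_states), size=n_components)   # components r^(t), one per row
rho = rng.dirichlet(np.ones(n_components))               # component weights rho_t

mixture = rho @ r                                         # P = sum_t rho_t r^(t)
h_mix = entropy(mixture)                                  # H(P)
h_tilde = float(rho @ np.array([entropy(row) for row in r]))  # tilde{H}
print(h_mix, h_tilde, h_tilde + entropy(rho))

# With identical components, the mixture is that component and the gap vanishes.
same = np.tile(r[0], (n_components, 1))
print(entropy(rho @ same), entropy(r[0]))
```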

We rely on entropy maximization to reconstruct the target distribution, especially to reproduce distributional characteristics that are not directly included in the inputs \({\boldsymbol{\varphi }}(x)\) and \({\boldsymbol{\mu }}\). In the original (non-entropic) herding algorithm, this depends entirely on the complexity of the dynamics, which is difficult to analyze accurately. Entropic herding incorporates entropy maximization explicitly.

The added entropy term regularizes the output sample distribution. From (41), the error of \(\eta _m\) is small when \(\Lambda _m\) is large; however, the absolute value of \(a_m\) defined in (46) then becomes large, so the entropy of the output components \(r^{({t})}\) is expected to be small. Hence, there is a trade-off in the choice of \(\Lambda _m\).

Moreover, letting \(\Lambda _m\rightarrow +\infty \) amounts to ignoring the entropy term in (39), which makes entropic herding equivalent to the original herding algorithm. In other words, the original herding algorithm is the extreme case of entropic herding in which only mechanism (b), implicit diversification, is used for entropy maximization.
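This limit can be seen directly when \(\mathcal Q\) contains all distributions on a finite space, assuming the optimization step minimizes \(\sum_m a_m\eta_m(q)-H(q)\) as the discussion of its entropy term suggests; its minimizer is then \(q\propto\exp(-\sum_m a_m\varphi_m)\). The Python sketch below uses random features and a hypothetical parameterization \(\boldsymbol{a}=-\Lambda\boldsymbol{w}\) for a fixed direction \(\boldsymbol{w}\). As \(\Lambda\) grows, the entropy of the solution falls and its mass concentrates on \(\arg\max_x\langle\boldsymbol{w},\boldsymbol{\varphi}(x)\rangle\), the state that the original herding step selects.

```python
import numpy as np


def entropic_step(phi, a):
    """Minimizer over all distributions q of sum_m a_m E_q[phi_m] - H(q): q ∝ exp(-phi @ a)."""
    s = -phi @ a
    w = np.exp(s - s.max())
    return w / w.sum()


def entropy(p):
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())


rng = np.random.default_rng(1)
phi = rng.normal(size=(20, 4))             # arbitrary features on a 20-state space
w = rng.normal(size=4)                     # a fixed herding weight vector
herding_choice = int(np.argmax(phi @ w))   # state chosen by the original herding step

scales = [1.0, 10.0, 100.0, 1000.0]
dists = [entropic_step(phi, -lam * w) for lam in scales]   # weights a = -lam * w
for lam, r in zip(scales, dists):
    print(lam, entropy(r), int(np.argmax(r)) == herding_choice, r[herding_choice])
```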

3.4 Other Notes on Entropic Herding

Entropic herding yields the mixture distribution expressed in (44). The required number of components \({T}\) of the mixture distribution is large when the target distribution is complex. In this case, the usual parameter fitting requires the simultaneous optimization of \(O({T})\) parameters, which causes computational difficulties. On the other hand, entropic herding can determine \(r^{({t})}\) sequentially. Therefore, the number of parameters to be optimized in each step can be kept small, which simplifies the implementation and improves its numerical stability.

In general, the optimization problem (39) is non-convex, so a local improvement strategy alone may get stuck at a local optimum. One possible remedy is to prepare several candidate initial values. Unlike typical optimization settings, herding repeatedly solves optimization problems that differ only in their weights. As a result, a point that once served as a good initial value may serve as a good initial value again in later steps. Furthermore, the candidate initial values themselves can be improved while the algorithm runs. This gives the candidates their own dynamics, which have been shown to exhibit interesting behavior [18].

4 Discussion

We reviewed the herding algorithm that exploits negative autocorrelation and the complexity of nonlinear dynamical systems. In this section, we present two perspectives on herding with a review of related studies.

4.1 Application of Physical Combinatorial Optimization Using Herding

To use physical phenomena in devices, we must precisely control them, particularly for computation. However, a certain amount of variability usually remains in both fabrication and operation, which hinders their practical use in computation. For example, quantum mechanics inherently involves probabilistic behavior, and current devices for quantum computing are also susceptible to thermal noise. Given that information processing in the human brain occurs through neurons that are energy efficient but noisy, perfect control may not be an essential element of information processing.

Sampling is a promising direction for applying physical systems to computation, because such variability can be used as a source of complexity. However, completely disorganized behavior is not useful, and the system should be balanced with appropriate control; herding can serve as the basis for such control. Specifically, an imperfectly controlled, noisy device can be used in the optimization step of the herding algorithm. Although the output may deviate from the optimal solution owing to the variability, this is compatible with the formulation of entropic herding, in which the output is not expected to be a point distribution. The deviation of the output from the ideal solution may increase R, the upper bound of the weight norm \(\Vert {\boldsymbol{w}}\Vert \) used in Sect. 1.3. However, a larger R may be acceptable as long as it remains finite: because the error bound of the sample average scales as \(O(R/T)\), a larger R can be compensated for by increasing the number of samples T, at the cost of more computation.
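To illustrate how an imperfect optimizer affects herding, the Python sketch below replaces the exact maximization with a noisy one: before the maximum is taken, each state's score \(\langle\boldsymbol{w},\boldsymbol{\varphi}(x)\rangle\) is perturbed by independent uniform noise in \([-\sigma,\sigma]\) (the parameter `noise`), a hypothetical stand-in for device variability. The features, target, and noise model are illustrative assumptions. The identity \(\frac{1}{T}\sum_t\boldsymbol{\varphi}(x_t)-\boldsymbol{\mu}=(\boldsymbol{w}_0-\boldsymbol{w}_T)/T\) holds regardless of how \(x_t\) is chosen, so the error keeps decaying as long as the weights stay bounded.

```python
import itertools
import numpy as np


def herding_noisy(phi, mu, T, noise, seed=0):
    """Herding whose optimization step sees scores corrupted by uniform noise in [-noise, noise]."""
    rng = np.random.default_rng(seed)
    w0 = mu.copy()
    w = w0.copy()
    total = np.zeros_like(mu)
    max_norm = np.linalg.norm(w)         # empirical R: largest weight norm observed
    for _ in range(T):
        scores = phi @ w + rng.uniform(-noise, noise, size=len(phi))
        k = int(np.argmax(scores))       # possibly suboptimal state
        total += phi[k]
        w += mu - phi[k]
        max_norm = max(max_norm, np.linalg.norm(w))
    return total / T, w0, w, max_norm


states = np.array(list(itertools.product([0, 1], repeat=3)), dtype=float)
pairs = list(itertools.combinations(range(3), 2))
phi = np.stack([states[:, i] * states[:, j] for i, j in pairs], axis=1)
mu = np.array([0.05] * 7 + [0.65]) @ phi  # pairwise moments (0.7, 0.7, 0.7)
T = 5000
for noise in (0.0, 0.5):
    mean, w0, wT, R = herding_noisy(phi, mu, T, noise)
    print(noise, np.abs(mean - mu).max(), R)
```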

Conventional computer systems have important advantages, such as the versatility of the von Neumann architecture, established communication standards, and the efficiency gained from a long history of performance improvements. Therefore, even if a novel device achieves a breakthrough in computation, it is expected to be used eventually in a hybrid system coupled with conventional computers, and we expect that herding can serve as the interface between them. For example, herding may combine well with optimization on quantum computers, such as quantum annealing (QA) [19, 20] or the quantum approximate optimization algorithm (QAOA) [21]. As shown in Sect. 1, herding reduces the variance of the estimate through negative autocorrelation. However, this does not remove the difficulty that slows the mixing of MCMC: when the objective function in the optimization step is multimodal and steep, finding its optimal solution is difficult for herding as well. If an optimization method that can overcome this difficulty, such as quantum annealing, is realized, combining it with herding is expected to lead to further improvements.

4.2 Generative Models and Herding

Among the latest machine learning technologies, deep generative models are one of the areas that have attracted the most attention in recent years. In machine learning, a generative model is a mathematical model of the process that generates points in the sample space, that is, the set of possible targets such as images or sentences. For example, flow-based models [22] and diffusion models [23] are among the best-known deep generative models, and other popular methods such as variational autoencoders (VAE) [24] and generative adversarial networks (GAN) [25] use generative models as components. These models are known for their remarkable performance, but because they have a massive number of tunable parameters, their behavior is generally a black box.

A distinctive approach in machine learning, and in other recent mathematical techniques that take full advantage of computer technology, is to represent probability distributions implicitly through black-box generative processes. For example, the stochastic gradient method, which is the basis of deep learning, requires only a way to generate samples from the distribution, not its density function in analytic form. When a large amount of data is available, selecting a data point at random can be regarded as generating a point from the true generative process. Therefore, an objective function defined in terms of the true probability distribution can be minimized even though that distribution itself is inaccessible. This is also exemplified by the well-known reparameterization trick for VAE [24], in which the variational model to be optimized is represented as a generative model instead of an explicit density function. Deep reinforcement learning is another good example: the probability distribution over the possible time series of agent and environment states is represented by records of past trials, and the agent is trained using randomly sampled records of past experience [26]. This way of using models increases their affinity with evolving computing technologies and with the big data accumulated on the growing Internet.
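The reparameterization trick can be illustrated with a Gaussian. Writing \(z=m+s\epsilon\) with \(\epsilon\sim\mathcal N(0,1)\) turns the gradient of \(\mathbb E[f(z)]\) with respect to \((m,s)\) into an average of \(\nabla_{m,s}f(m+s\epsilon)\) over samples, so only a sampler is needed, not the density of z. The Python sketch below uses \(f(z)=z^2\), for which the exact gradients of \(\mathbb E[z^2]=m^2+s^2\) are \(2m\) and \(2s\); the values of m, s, and the sample size are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
m, s = 1.5, 0.5                         # parameters of z ~ N(m, s^2)
eps = rng.standard_normal(200_000)      # the only randomness: a sampler for N(0, 1)
z = m + s * eps                         # reparameterized samples

# f(z) = z^2, so df/dz = 2z; the chain rule gives dz/dm = 1 and dz/ds = eps.
grad_m = np.mean(2 * z)                 # Monte Carlo estimate of dE[f]/dm
grad_s = np.mean(2 * z * eps)           # Monte Carlo estimate of dE[f]/ds
print(grad_m, 2 * m)                    # estimate vs exact value 2m
print(grad_s, 2 * s)                    # estimate vs exact value 2s
```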

In contrast, one of the main purposes of the more traditional approach, which models density functions explicitly using parameterized analytic forms, is to associate model parameters with knowledge. The simplest example is regression analysis, as expressed by \(Y\sim \beta X\). Here, the probability density function representing the model is fitted to the data, and whether the model parameter \(\beta \) is 0 is interpreted as an indication of whether the variables X and Y are related. We can also use a mathematical model to represent knowledge or hypotheses about a subject and use it to formalize arguments based on that knowledge or those hypotheses mathematically.

From this perspective, herding has a unique feature. Herding is a process that generates a sequence of points, and it can thus be regarded as a generative model that represents a probability distribution implicitly. At the same time, its algorithmic parameters \({\boldsymbol{\varphi }}(x)\) and \({\boldsymbol{\mu }}\) can be directly associated with knowledge as parameters of a mathematical model. Herding therefore combines implicit representation with explicit modeling. Random sampling methods such as MCMC can also generate a set of points in the same manner as herding, but their generating process is derived from an explicit model of the distribution, whereas herding defines the probability distribution implicitly.

5 Conclusion

In this chapter, we reviewed the herding algorithm, which can be used in the same manner as random sampling even though it is implemented as a deterministic dynamical system. Herding is expected to play an important role in the application of physical phenomena to computation because of the two aspects discussed in this chapter: its use as a Monte Carlo numerical integration algorithm (Sects. 1 and 2), which relates to its mathematical advantages, and its connection to the maximum entropy principle (Sect. 3), which relates to applying physical phenomena with herding. In addition, as discussed in Sect. 4, herding is of interest beyond these perspectives. We expect that theoretical studies of herding and its applications will continue in both directions.

References

[1] D. Pierangeli, G. Marcucci, C. Conti, Large-scale photonic Ising machine by spatial light modulation. Phys. Rev. Lett. 122(21), 213902 (2019)

[2] M. Welling, Herding dynamical weights to learn, in Proceedings of the 26th Annual International Conference on Machine Learning (2009), pp. 1121–1128

[3] M. Welling, Y. Chen, Statistical inference using weak chaos and infinite memory. J. Phys.: Conf. Ser. 233, 012005 (2010)

[4] M. Welling, Herding dynamic weights for partially observed random field models, in Proceedings of the Twenty-Fifth Conference on Uncertainty in Artificial Intelligence (2009), pp. 599–606

[5] Y. Chen, M. Welling, A. Smola, Super-samples from kernel herding, in Proceedings of the Twenty-Sixth Conference on Uncertainty in Artificial Intelligence (2010), pp. 109–116

[6] N. Metropolis, A.W. Rosenbluth, M.N. Rosenbluth, A.H. Teller, E. Teller, Equation of state calculations by fast computing machines. J. Chem. Phys. 21(6), 1087–1092 (1953)

[7] W. Hastings, Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57, 97–109 (1970)

[8] S. Geman, D. Geman, Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. 6(6), 721–741 (1984)

[9] Y. Chen, L. Bornn, N.D. Freitas, M. Eskelinen, J. Fang, M. Welling, Herded Gibbs sampling. J. Mach. Learn. Res. 17(10), 1–29 (2016)

[10] G.E. Hinton, Training products of experts by minimizing contrastive divergence. Neural Comput. 14(8), 1771–1800 (2002)

[11] T. Tieleman, Training restricted Boltzmann machines using approximations to the likelihood gradient, in Proceedings of the 25th International Conference on Machine Learning (2008), pp. 1064–1071

[12] T. Tieleman, G. Hinton, Using fast weights to improve persistent contrastive divergence, in Proceedings of the 26th Annual International Conference on Machine Learning (2009), pp. 1033–1040

[13] H. Yamashita, H. Suzuki, Convergence analysis of herded-Gibbs-type sampling algorithms: effects of weight sharing. Stat. Comput. 29(5), 1035–1053 (2019)

[14] M. Eskelinen, Herded Gibbs and discretized herded Gibbs sampling, Master’s thesis, The University of British Columbia (2013)

[15] A.E. Gelfand, A.F.M. Smith, Sampling-based approaches to calculating marginal densities. J. Am. Stat. Assoc. 85(410), 398–409 (1990)

[16] C.P. Robert, G. Roberts, Rao-Blackwellisation in the Markov chain Monte Carlo era. Int. Stat. Rev. 89(2), 237–249 (2021)

[17] E.T. Jaynes, Information theory and statistical mechanics. Phys. Rev. 106(4), 620–630 (1957)

[18] H. Yamashita, H. Suzuki, K. Aihara, Herding with self-organizing multiple starting point optimization, in Proceedings of the 2022 International Symposium on Nonlinear Theory and its Applications (2022), pp. 33–36

[19] T. Kadowaki, H. Nishimori, Quantum annealing in the transverse Ising model. Phys. Rev. E 58, 5355–5363 (1998)

[20] M.W. Johnson, M.H.S. Amin, S. Gildert, T. Lanting, F. Hamze, N. Dickson, R. Harris, A.J. Berkley, J. Johansson, P. Bunyk, E.M. Chapple, C. Enderud, J.P. Hilton, K. Karimi, E. Ladizinsky, N. Ladizinsky, T. Oh, I. Perminov, C. Rich, M.C. Thom, E. Tolkacheva, C.J.S. Truncik, S. Uchaikin, J. Wang, B. Wilson, G. Rose, Quantum annealing with manufactured spins. Nature 473, 194–198 (2011)

[21] E. Farhi, J. Goldstone, S. Gutmann, A quantum approximate optimization algorithm. arXiv:1411.4028 [quant-ph] (2014)

[22] I. Kobyzev, S.J. Prince, M.A. Brubaker, Normalizing flows: an introduction and review of current methods. IEEE Trans. Pattern Anal. Mach. Intell. 43(11), 3964–3979 (2021)

[23] J. Sohl-Dickstein, E. Weiss, N. Maheswaranathan, S. Ganguli, Deep unsupervised learning using nonequilibrium thermodynamics, in Proceedings of the 32nd International Conference on Machine Learning (2015), pp. 2256–2265

[24] D.P. Kingma, M. Welling, Auto-encoding variational Bayes, in 2nd International Conference on Learning Representations (ICLR, 2014)

[25] I.J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, Y. Bengio, Generative adversarial nets, in Advances in Neural Information Processing Systems, vol. 27 (NIPS 2014) (2014), pp. 2672–2680

[26] V. Mnih, K. Kavukcuoglu, D. Silver, A.A. Rusu, J. Veness, M.G. Bellemare, A. Graves, M. Riedmiller, A.K. Fidjeland, G. Ostrovski, S. Petersen, C. Beattie, A. Sadik, I. Antonoglou, H. King, D. Kumaran, D. Wierstra, S. Legg, D. Hassabis, Human-level control through deep reinforcement learning. Nature 518, 529–533 (2015)

Acknowledgements

The author would like to thank Hideyuki Suzuki and Naoki Watamura for their valuable discussions and comments on the manuscript.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2024 The Author(s)

About this chapter

Cite this chapter

Yamashita, H. (2024). Sampling-Like Dynamics of the Nonlinear Dynamical System Combined with Optimization. In: Suzuki, H., Tanida, J., Hashimoto, M. (eds) Photonic Neural Networks with Spatiotemporal Dynamics. Springer, Singapore. https://doi.org/10.1007/978-981-99-5072-0_10

DOI: https://doi.org/10.1007/978-981-99-5072-0_10

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-5071-3

Online ISBN: 978-981-99-5072-0

eBook Packages: Computer Science, Computer Science (R0)