Abstract

Optical computing is a general term for high-performance computing technologies that effectively use the physical properties of light. With the rapid development of electronics, its superiority as a high-performance computing technology has diminished; however, there is momentum for research on new optical computing. This study reviews the history of optical computing, clarifies its diversity, and provides suggestions for new developments. Among the methods proposed thus far, those considered useful for utilizing optical technology in information systems are introduced. Subsequently, the significance of optical computing in the modern context is considered and directions for future development are presented.

1 Introduction

Optical computing is a general term for high-performance computing technologies that make effective use of the physical properties of light; it is also used as the name of a research area that attracted attention from the 1980s to the 1990s. It was expected to be a solution for image processing and large-capacity information processing problems that could not be solved by the electronics of that time, and a wide range of research was conducted, from computing principles to device development and architecture design. Unfortunately, with the rapid development of electronics, its superiority as a high-performance computing technology was lost, and the boom subsided. However, as seen in the recent boom in artificial intelligence (AI), technological development repeats itself. In the case of AI, the success of deep learning has triggered another boom. It should be noted that technologies with comparable potential for further development have been developed in the field of optical computing. Consequently, the momentum for research on new optical computing is increasing.

Optical computing is not a technology that emerged suddenly. Figure 1 shows the relationship among the research areas related to optical computing. Its roots are optical information processing, represented by a Fourier transform using lenses, and technologies such as spatial filtering and linear transform processing. They are based on the physical properties of light waves propagating in free space, where the superposition of light waves is a fundamental principle. Light waves emitted from different sources in space propagate independently and reach different locations. In addition, light waves of different wavelengths propagate independently and can be separated. Thus, the light-wave propagation phenomenon has inherent parallelism along both the spatial and wavelength axes. Utilizing this property, large-capacity and high-speed signal processing can be realized. In particular, optical information processing has been researched as a suitable method for image processing. From this perspective, optical information processing can be considered an information technology specialized for image-related processing.

Fig. 1 Relationship between research areas related to optical computing. Fourier optical processing is a root of optical computing in the 1980s and the 1990s. Computer science and neuroscience accelerated the research fields of optical digital computing and optical neurocomputing. Currently, both research fields have been extended as optical special purpose processing and nanophotonic computing

Optical computing in the 1980s and the 1990s was characterized by the pursuit of general-purpose processing, represented by digital optical computing. The development of the Tse computer [1], which, unlike earlier computers, processes two-dimensional signals such as images in parallel, was a turning point. The Tse computer presented the concept of parallelizing the logic gates that compose a computer to process images at high speed. Inspired by this study, various parallel logic gates based on nonlinear optical phenomena were developed. The development of optical computers composed of parallel logic gates became a major challenge, and several computational principles and system architectures were proposed. Two international conferences, Optical Computing (OC) and Photonic Switching (PS), were started and held simultaneously. Notably, the proposal of optical neurocomputing based on neural networks was one of the major achievements in optical computing research during this period.

At that time, optical computing showed considerable potential; however, the development boom eventually waned because the peripheral technologies needed to support these ideas were immature and because computational performance kept improving with the continuous development of electronics. Nevertheless, the importance of light in information technology has increased with the rapid development of optical communication. In addition, optical functional elements and spatial light modulators, which were proposed as devices for optical computing, continue to be developed and applied in various fields. Consequently, the development of optical computing technology in a wider range of information fields has progressed, and a research area called information photonics has been formed. The first international conference on Information Photonics (IP) was held in Charlotte, North Carolina, in June 2005 [2] and has continued to date.

Currently, optical computing is in the spotlight again. Optical computing is expected to play a major role in artificial intelligence (AI) technology, which is rapidly gaining popularity owing to the success of deep learning. Interesting methods, such as AI processors using nanophotonic circuits [3] and optical deep networks using multilayer optical interconnections [4], have been proposed. In addition, as a variation of neural networks, a reservoir model suitable for physical implementation was proposed [5], which increased the feasibility of optical computing. Reservoir implementation by Förster resonance energy transfer (FRET) between quantum dots was developed as a low-energy information processor [6]. In addition to AI computation, optical technology is considered a promising implementation of quantum computation. Owing to the potential capabilities of optics and photonics technologies, optical computing is a promising solution for a wide range of high-performance computations.

This study reviews the history of optical computing, clarifies its diversity, and provides suggestions for future developments. Many methods have been developed for optical computing; however, only a few have survived to the present day, for various reasons. Here, the methods proposed thus far that are considered useful for utilizing optical technology in information technology are introduced. In addition, the significance of optical computing in the modern context is clarified, and directions for future development are indicated. This study covers only a selection of works, chosen according to my personal view, and is not a complete review of optical computing as a whole.

2 Fourier Optical Processing

Shortly after the invention of lasers in the 1960s, a series of optical information-processing technologies was proposed. Light, which is a type of electromagnetic wave, has wave properties. Interference and diffraction are theoretically explained as basic phenomena of light; however, these explanations are based on the picture of light as an ideal sinusoidal wave. Lasers achieved stable light-wave generation and made it possible to perform various types of signal processing operations by superposing the amplitudes of light waves. For example, Fraunhofer diffraction, which describes the diffraction pattern observed far from the aperture, is equivalent to a two-dimensional Fourier transform. Because a point at infinity is equivalent to the focal plane of a lens, a Fourier transform using a lens can be implemented with a simple optical setup.

Figure 2 shows a spatial filtering system as a typical example of optical information processing based on the optical Fourier transform. This optical system is known as a telecentric or double diffraction system. The input function f(x, y) is given as a transmittance distribution, and the Fourier transform \(H(\mu ,\nu )\) of the point spread function h(x, y) is embodied as a filter with a transmittance distribution at the filter plane. The convolution of f(x, y) with h(x, y) is then obtained as the amplitude distribution g(x, y) as follows:

\[ g(x, y) = \iint f(x', y')\, h(x - x', y - y')\, dx'\, dy' \tag{1} \]

Taking the Fourier transform of Eq. (1), which the optical system performs at the filter plane, gives the following relationship:

\[ G(\mu ,\nu ) = F(\mu ,\nu )\, H(\mu ,\nu ) \tag{2} \]

where \(F(\mu ,\nu )\) and \(G(\mu , \nu )\) are the spectra of the input and output signals, respectively. The filter function \(H(\mu ,\nu )\) is called the optical transfer function. The signal processing and filter operations can be flexibly designed and are performed in parallel at the speed of light propagation. The Fourier-transforming property of a lens is used effectively in this optical system.

Fig. 2 Spatial filtering in optical systems. The input image f(x, y) is Fourier transformed by the first lens, and the spectrum is obtained at the filter plane. The spatial filter \(H(\mu ,\nu )\) modulates the spectrum and the modulated signal is Fourier transformed by the second lens. The output image g(x, y) is observed as an inverted image because the Fourier transform is performed instead of an inverse Fourier transform
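The behavior of the double diffraction system can be checked numerically. The following is a minimal sketch, assuming that each lens is modeled as an ideal, centered two-dimensional discrete Fourier transform on a periodic sampling grid; the function names `lens_ft`, `spatial_filter_4f`, and `inverted` are illustrative and do not come from the original work. It shows that an all-pass filter returns the inverted input, and that a pinhole passing only the zero-frequency component returns a uniform field equal to the mean of the input.

```python
import numpy as np

def lens_ft(field):
    """Model one Fourier-transforming lens as a centered 2-D forward FFT."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(field)))

def spatial_filter_4f(f, H):
    """Propagate input f through lens, filter H, and lens (two forward transforms)."""
    F = lens_ft(f)               # spectrum F(mu, nu) at the filter plane
    G = F * H                    # filter transmittance multiplies the spectrum
    return lens_ft(G) / f.size   # second forward transform; remove the DFT gain

def inverted(image):
    """Point reflection about the optical axis on a periodic sampling grid."""
    return np.roll(image[::-1, ::-1], 1, axis=(0, 1))

n = 64
f = np.zeros((n, n))
f[10:20, 30:50] = 1.0            # off-center bright rectangle as the input image

all_pass = np.ones((n, n))       # H = 1: no filtering
g_all = spatial_filter_4f(f, all_pass)

pinhole = np.zeros((n, n))       # H passes only the zero-frequency component
pinhole[n // 2, n // 2] = 1.0
g_dc = spatial_filter_4f(f, pinhole)

print(np.allclose(g_all, inverted(f)))   # output is the inverted input
print(np.allclose(g_dc, f.mean()))       # output is uniform at the mean level
```

Any other transmittance array of the same shape can be passed as `H` to emulate low-pass, high-pass, or matched filtering under the same idealized assumptions.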

In this optical system, because the input and filter functions are given as transmittance distributions, spatial light modulators that can dynamically modulate light signals on a two-dimensional plane, such as liquid crystal light valves (LCLV) [7] and liquid crystal on silicon devices [8], are introduced. In the spatial light modulator, the amplitude or phase of the incident light over a two-dimensional plane is modulated by various nonlinear optical phenomena. Spatial light modulators are key devices not only in Fourier optical processing but also in general optical computing. Additionally, they are currently being researched and developed as universal devices for a wide range of optical applications.

Pulse shaping has been proposed as a promising optical information technology [9]. As shown in Fig. 3, the optical setup comprises symmetrically arranged gratings and Fourier transform lenses. Because the temporal Fourier spectrum \(F(\nu )\) of the incident light f(t) is obtained at the spatial filter plane, the filter function located there determines the modulation properties. The shaped output pulse g(t) is then obtained after the light passes through the optical system. Note that the grating and cylindrical lens perform a one-dimensional Fourier transform by dispersion, and that the series of processes is executed at the propagation speed of light.
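The action of the filter on the temporal spectrum can be illustrated with a short numerical sketch. It assumes an idealized shaper in which the grating and lens pairs act as an exact discrete Fourier transform and its inverse; the function `shape_pulse`, the sampling parameters, and the choice of a linear spectral phase as the filter are hypothetical illustrations, not the setup of [9]. A linear phase across the spectrum delays the pulse without changing its shape.

```python
import numpy as np

def shape_pulse(f_t, H_nu):
    """Apply a spectral filter H(nu) to a sampled pulse f(t)."""
    F_nu = np.fft.fft(f_t)       # first grating and lens: spectrum at the filter plane
    G_nu = F_nu * H_nu           # mask modulates each frequency component
    return np.fft.ifft(G_nu)     # second lens and grating recombine the components

n, dt = 256, 1.0                 # number of samples and sampling interval (arbitrary units)
t = np.arange(n) * dt
f_t = np.exp(-0.5 * ((t - 60.0) / 5.0) ** 2)    # Gaussian input pulse centered at t = 60

nu = np.fft.fftfreq(n, d=dt)     # frequency axis matching np.fft.fft ordering
delay_samples = 40
H_delay = np.exp(-2j * np.pi * nu * delay_samples * dt)   # linear spectral phase

g_t = shape_pulse(f_t, H_delay)
print(int(np.argmax(np.abs(g_t))))   # peak position of the shaped pulse
```

Amplitude masks, or phase masks with other profiles, can be substituted for `H_delay` to broaden, split, or chirp the pulse in the same idealized model.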

Fourier optical processing is also used in current information technologies such as image encryption [10] and biometric authentication [11]. In addition, owing to its capability to process ultrahigh-speed optical pulses, signal processing in photonic networks is a promising application. Furthermore, in combination with holography, which fixes the phase information of light waves through interference, high-precision measurements and light-wave control can be achieved [12].

3 Digital Optical Computing

The significance of the Tse computer [1] is that it introduced the concept of digital arithmetic into optical computing. Optical information processing based on the optical Fourier transform is a typical example of optical analog arithmetic. Compared with analog operations, digital operations are characterized by the elimination of noise accumulation, arbitrary computational precision, and moderate device accuracy requirements [13]. Although analog optical computing is suitable for specific processing, many researchers have proposed digital optical computing for general-purpose processing. The goal is to achieve computational processing of two-dimensional signals, such as figures and images, with high speed and efficiency.

A Tse computer consists of Tse devices that process two-dimensional information in parallel. Several prototype examples of the Tse devices were presented. In the prototype system, the parallel logic gate is composed of logic gate elements implemented in electronics, and the parallel logic gates are connected by fiber plates or optical fiber bundles. In contrast, two-dimensional information can be transmitted more easily by using the free-space propagation of light. Various optical logic gate devices have been developed as free-space optical devices. AT&T Bell Labs developed a series of optical logic gate devices called self-electro-optic effect devices (SEED) [14] to implement an optical switching system. These developments directly contributed to the current optoelectronic devices and formed the foundation of photonic technologies for optical communication.

Digital operations are designed as combinations of logical operations. Logical operations on binary signals include logical AND, logical OR, exclusive OR, etc., and higher-level functions such as flip-flops and adder circuits are realized by combining them. Logical operations are nonlinear, and some type of nonlinear processing is required for their implementation. Optoelectronic devices directly utilize nonlinear optical phenomena. Alternatively, by replacing nonlinear operations with a type of procedural process called spatial coding, a linear optical system can be used to perform logical operations.

The authors proposed a method for performing parallel logical operations using optical shadow casting [15]. As shown in Fig. 4, parallel logical operations between two-dimensional signals were demonstrated by the spatial coding of the input signals and multiple projections with an array of point light sources. Using two binary images as inputs, an encoded image was generated by replacing the combination of the corresponding pixel values with a spatial code, and the encoded image was set in an optical projection system. Subsequently, the optical signal provides the result of a logical operation through the decoding mask on the screen. The switching pattern of the array of point light sources enables all 16 possible two-input logical operations, including logical AND and OR operations, as well as operations between adjacent pixels.

Fig. 4 Optical shadow casting for parallel logical operations. Two binary images are encoded pixel by pixel with spatial codes, and then, the coded image is placed in the optical projection system. Each point light source projects the coded image onto the screen and the optical signals provide the result of the logical operation through the decoding mask
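The logic behind shadow casting can be sketched as an abstract model that ignores the projection geometry. It assumes that each input pixel pair opens exactly one of four sub-cells of its spatial code, and that each of four point light sources carries one sub-cell onto the decoding window; the function names and the one-hot representation are illustrative assumptions rather than the optical layout of [15]. Under these assumptions, the on/off pattern of the four sources acts as the truth table of the operation.

```python
import numpy as np

def encode(a, b):
    """Spatial coding: each pixel pair (a, b) opens exactly one of four sub-cells."""
    index = 2 * a + b                       # 0..3 identifies the input combination
    return np.eye(4, dtype=int)[index]      # shape (H, W, 4), one-hot per pixel

def shadow_cast(coded, sources):
    """Superpose the projections of the switched-on sources behind the decoding mask.

    sources[k] = 1 means the source that images sub-cell k onto the
    decoding window is on (an abstract stand-in for the real geometry).
    """
    return (coded @ np.asarray(sources) > 0).astype(int)

a = np.array([[0, 0], [1, 1]])              # first binary input image
b = np.array([[0, 1], [0, 1]])              # second binary input image
coded = encode(a, b)

result_and = shadow_cast(coded, [0, 0, 0, 1])
result_or = shadow_cast(coded, [0, 1, 1, 1])
result_xor = shadow_cast(coded, [0, 1, 1, 0])

# Enumerating every source pattern yields every two-input logic function.
all_tables = {
    tuple(shadow_cast(coded, [(p >> k) & 1 for k in range(4)]).ravel())
    for p in range(16)
}
print(len(all_tables))
```

The same operation is applied to every pixel at once, which mirrors the parallelism of the optical projection.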

Parallel operations based on spatial codes have been extended to parallel-computing paradigms. Symbolic substitution [16, 17], shown in Fig. 5, is a process in which a set of specific spatial codes is substituted with another set of spatial codes. Arbitrary parallel logical operations can be implemented using a set of substitution rules. In addition, a framework called optical array logic was proposed that extends parallel logic operations using optical shadow casting to arbitrary parallel logic operations [18, 19]. In these methods, data fragments are distributed on a two-dimensional image, and a parallel operation on the image enables flexible processing of the distributed data in parallel. Similarly, cellular automata [20] and the Game of Life [21] can be mentioned as methods of processing data deployed on a two-dimensional plane. The parallel operations assumed in these paradigms can easily be implemented optically, resulting in the extension of gate-level parallelism to advanced data processing.

Fig. 5 Symbolic substitution. A specific spatial pattern is substituted with another spatial pattern by the process of pattern recognition and substitution. A set of substitution rules enables the system to perform arbitrary parallel logical operations such as binary addition. Various optical systems can be used to implement the procedure
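Binary addition by symbolic substitution can be sketched in software as follows. This is a minimal illustration, assuming that the two operands are written as two rows of bits and that every column is rewritten simultaneously by a four-entry rule table; the names `RULES`, `substitute_once`, and `add` are hypothetical, and the spatial patterns of the optical system are replaced by plain bit pairs.

```python
# Each column pattern (top bit, bottom bit) is replaced by (sum bit, carry bit).
RULES = {(0, 0): (0, 0), (0, 1): (1, 0), (1, 0): (1, 0), (1, 1): (0, 1)}

def to_bits(value, width):
    """Least-significant bit first."""
    return [(value >> k) & 1 for k in range(width)]

def from_bits(bits):
    return sum(bit << k for k, bit in enumerate(bits))

def substitute_once(top, bottom):
    """Apply the rule table to all columns in parallel.

    The sum bit stays in its column of the top row; the carry bit is
    written one column toward the most significant side of the bottom row.
    """
    replaced = [RULES[pair] for pair in zip(top, bottom)]
    new_top = [s for s, _ in replaced]
    new_bottom = [0] + [c for _, c in replaced][:-1]
    return new_top, new_bottom

def add(x, y, width=8):
    """Add two non-negative integers whose sum fits in `width` bits."""
    top, bottom = to_bits(x, width), to_bits(y, width)
    while any(bottom):                      # repeat until no carries remain
        top, bottom = substitute_once(top, bottom)
    return from_bits(top)

print(add(5, 3))
```

Each pass corresponds to one recognition and substitution cycle of the whole plane, so the number of passes is bounded by the word width rather than by the number of words processed side by side.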

Construction of an optical computer is the ultimate goal of optical computing. The Tse computer is a clear target for the development of digital optical logical operations, and other interesting architectures have been proposed for optical computers. These architectures are categorized into gate-oriented architectures, in which parallel logic gates are connected by optical interconnections; register-oriented architectures, which combine processing on an image-by-image basis; and interconnection-oriented architectures, in which electronic processors are connected by optical interconnections. The gate-oriented architecture includes an optical sequence circuit processor [22] and a multistage interconnect processor [23]. The register-oriented architecture is exemplified by the Tse computer and a parallel optical array logic system [24]. The interconnection-oriented architecture includes the optoelectronic computer using laser arrays with reconfiguration (OCULAR) system [25] and a three-dimensional optoelectronic stacked processor [26]. These systems were developed as small-scale proof-of-principle systems, but unfortunately, few have been extended to practical applications.

4 Optical Neurocomputing

Digital optical logic operations were a distinctive research trend that distinguished optical computing in the 1980s from optical information processing. However, other important movements were underway during the same period. Neurocomputing was inspired by the neural networks of living organisms. As shown in Fig. 6, the neural network connects processing nodes modeled on neurons and realizes a computational task by signal processing over the network. Each node receives input signals from other processing nodes and sends an output signal to the successive processing nodes according to the response function. For example, when processing node i receives signals \(x_j\) from processing nodes j \((1\le j \le N)\), the following operations are performed at the node:

\[ u_i = \sum_{j=1}^{N} w_{i,j}\, x_j \tag{3} \]

\[ x'_i = f(u_i) \tag{4} \]

where \(u_i\) is the internal state signal of processing node i, \(w_{i,j}\) is the connection weight from processing node j to node i, \(f (\cdot )\) is the response function, and \(x'_i\) is the output signal of processing node i. All processing nodes perform the same operation, and the processing achieved by the entire network is determined by the connection topology of the processing nodes, the connection weights of the links, and the response function.

Fig. 6 Neural network. Processing nodes modeled on neurons of living organisms are connected to form a network structure. Each processing node performs a nonlinear operation for the weighted sum of the input signals and sends the output signal to the next processing node. Various forms of networks can be made, and a wide range of functionalities can be realized

Neural networks are therefore well suited to optical implementation. Many processing nodes of the same type must be operated in parallel and connected effectively, which can be achieved using parallel computing devices and free-space optical interconnection technology. Even if the characteristics of the individual processing nodes vary, this is not a serious problem because the connection weights compensate for the variation. In addition, if a free-space optical interconnection is adopted, physical wiring is not required, and the connection weights can be changed dynamically.

Anamorphic optics was proposed as an early optical neural network system to perform vector-matrix multiplication [27, 28]. The sum of the input signals for each processing node given by Eq. (3) was calculated using vector-matrix multiplication. As shown in Fig. 7, the input signals arranged in a straight line were stretched vertically and projected onto a two-dimensional image that expresses the connection weights as its transmittance. The transmitted signals were then collected horizontally and detected by a one-dimensional image sensor. Using this optical system, the weighted sums of the input signals to multiple processing nodes can be calculated simultaneously. A response function was then applied to the obtained signals to produce the output signal sequence. If the output signals are fed back directly as the input signals of successive processing nodes, a Hopfield-type neural network is constructed. In this system, the connection weights are assumed to have been trained and are set as a two-dimensional image before optical computation.

Fig. 7 Anamorphic optical processor. Optical signals emitted from the LED array express vector \(\textbf{x}\), and the transmittance of the two-dimensional filter corresponds to matrix \(\textrm{H}\). The result of vector-matrix multiplication, \(\textbf{y}=\textrm{H}\textbf{x}\), is obtained on the one-dimensional image sensor. Cylindrical lenses are employed to stretch and collect the optical signals along one-dimensional direction
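The three optical steps of the anamorphic processor (stretch, transmit, collect) can be mirrored in a short numerical sketch. It assumes signed values for simplicity, although optical intensities and transmittances are non-negative and a real system needs an extra encoding to represent negative weights; the function names, the Hebbian weight rule, and the sign threshold used as the response function are illustrative assumptions, not details taken from [27, 28].

```python
import numpy as np

def anamorphic_multiply(x, H):
    """Vector-matrix multiplication y = H x in three optical-style steps."""
    stretched = np.tile(x, (H.shape[0], 1))   # each source is spread over a full column
    transmitted = stretched * H               # mask transmittance weights every ray
    return transmitted.sum(axis=1)            # rays of each row are collected on one detector

def hopfield_step(x, W):
    """One feedback cycle: weighted sums followed by a sign response function."""
    u = anamorphic_multiply(x, W)
    return np.where(u >= 0, 1, -1)

# Store one bipolar pattern with the Hebbian outer-product rule (assumed here).
pattern = np.array([1, -1, 1, 1, -1, -1, 1, -1])
W = np.outer(pattern, pattern) - np.eye(pattern.size, dtype=int)

corrupted = pattern.copy()
corrupted[2] *= -1                            # flip one element of the input
recalled = hopfield_step(corrupted, W)

rng = np.random.default_rng(0)
H = rng.random((4, 6))
x = rng.random(6)
print(np.allclose(anamorphic_multiply(x, H), H @ x))
print(np.array_equal(recalled, pattern))
```

Changing the mask `W` changes the stored associations without any rewiring, which is the point made above about free-space interconnection.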

To implement a neural network, it is important to construct the required network effectively. An optical implementation based on volumetric holograms was proposed to address this problem. For example, a dynamic volumetric hologram using a nonlinear optical crystal, such as lithium niobate, is an interesting method in which the properties of materials are effectively utilized for computation [29]. The application of nonlinear optical phenomena is a typical approach in optical computing, which is still an efficient method of natural computation.

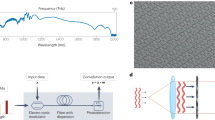

Because of the recent success of deep neural networks, novel optical neuroprocessors have been proposed. One is the diffractive deep neural network (D\(^2\)NN) [4], which implements a multilayer neural network by stacking diffractive optical elements in multiple stages. The other is the optical integrated circuit processor [3], which is composed of beamsplitters and phase shifters implemented with optical waveguides and efficiently performs vector-matrix multiplications. This is a dedicated processor specialized for AI calculations. These achievements are positioned as important movements in optical computing linked to the current AI boom.

Reservoir computing has been proposed as an approach opposite to multi-layered and complex networks [30]. As shown in Fig. 8, this is a neural network model composed of a reservoir layer, in which processing nodes are randomly connected, and input and output nodes connected to the reservoir layer. Computational performance equivalent to that of a recurrent neural network (RNN) can be achieved by learning only the connection weights from the reservoir layer to the output node. Because the other connection weights do not need to be changed, the model is suitable for physical implementation. Optical fiber rings [31], lasers with optical feedback and injection [32], and optical iterative function systems [33] have been proposed for the implementation.

Fig. 8 A schematic diagram of reservoir computing. A neural network consisting of input, reservoir, and output layers is employed. The reservoir layer contains multiple processing nodes connected randomly, and the input and output layers are connected to the reservoir layer. By training only the connection weight between the reservoir and the output layers, \(W_{\textrm{out}}\), performance equivalent to that of an RNN can be achieved
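The training scheme can be made concrete with a small software reservoir. The sketch below assumes an echo state network with tanh nodes, a random fixed reservoir scaled to a spectral radius of 0.9, and a ridge-regression readout; these choices, the node count, and the sine-wave prediction task are illustrative assumptions and are not taken from [30]. Only the output weights `w_out` are fitted.

```python
import numpy as np

def make_reservoir(n_nodes, spectral_radius=0.9, seed=0):
    """Random, fixed reservoir and input weights (never trained)."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, (n_nodes, n_nodes))
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    w_in = rng.uniform(-1.0, 1.0, n_nodes)
    return W, w_in

def run_reservoir(W, w_in, u):
    """Drive the reservoir with the input sequence u and record all node states."""
    x = np.zeros(W.shape[0])
    states = np.empty((len(u), W.shape[0]))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + w_in * u_t)
        states[t] = x
    return states

def with_bias(states):
    return np.hstack([states, np.ones((len(states), 1))])

def train_readout(states, target, ridge=1e-6):
    """Ridge regression for the output weights, the only trained parameters."""
    X = with_bias(states)
    return np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ target)

# Task: predict the next sample of a sine wave.
signal = np.sin(0.2 * np.arange(601))
u, target = signal[:-1], signal[1:]

W, w_in = make_reservoir(n_nodes=50)
states = run_reservoir(W, w_in, u)

washout, split = 100, 400                     # discard the initial transient
w_out = train_readout(states[washout:split], target[washout:split])
prediction = with_bias(states[split:]) @ w_out
test_mse = float(np.mean((prediction - target[split:]) ** 2))
print(test_mse)
```

In a physical implementation, `run_reservoir` would be replaced by the optical system itself, and only the readout step would remain in software or in a simple linear output stage.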

5 Optical Special Purpose Processing

Electronics continued to develop far beyond what was expected when optical computing was proposed in the 1980s and the 1990s. It is therefore impractical to construct an information processing system using only optical technology, and a system configuration that uses each technology where it is superior is reasonable. Although electronic processors provide sufficient computational performance, the superiority of optical technology in signal transmission between processors is clear. This technology, known as optical interconnection or optical wiring, has been proposed and developed along with optical computing. In particular, it has been shown that the higher the signal bandwidth and the longer the transmission distance, the greater the advantage of optical interconnection over electrical interconnection.

System architectures based on optical interconnection have encouraged the development of smart pixels with optical input and output functions for semiconductor processors. In addition, under the more generalized systemization concept called VLSI photonics, various kinds of systems-on-chips were considered in a DARPA research program of the US Department of Defense, and the deployment of various systems was studied [34]. In Japan, the element processor developed for the optoelectronic computer using laser arrays with reconfiguration (OCULAR) system [25] was extended to an image sensor with a processing function called VisionChip [35]. Until now, semiconductor materials other than silicon, such as GaAs, have been used for light-emitting devices. However, advances in silicon photonics have made it possible to realize integrated devices that include light-emitting elements [36]. This is expected to lead to a new stage of optical interconnection.

Visual cryptography is an optical computing technology that exploits the visibility of light to the human eye [37], which is an important feature of light. This method applies logical operations using spatial codes and effectively encrypts image information. As shown in Fig. 9, the method divides each pixel into 2\(\times \)2 sub-pixels and assigns the image information and a decoding mask to a pair of spatial codes in the sub-pixels. Owing to the arbitrariness of this combination, efficient image encryption can be achieved. Another method for realizing secure optical communication was proposed by dividing logical operations based on spatial codes between the transmitting and receiving ends of a communication system [38]. This method can be applied to information transmission from Internet of Things (IoT) edge devices.

Fig. 9 Procedure of visual cryptography. Each pixel to be encrypted is divided into 2\(\times \)2 sub-pixels selected randomly. To decode the pixel data, 2\(\times \)2 sub-pixels of the corresponding decoding mask must be overlapped correctly. Exactly arranged decoding masks are required to retrieve the original image
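The encoding and decoding steps can be sketched with a common two-share construction, which may differ in detail from the scheme in [37]. The sketch assumes that each pixel is expanded into a randomly chosen 2×2 code with two opaque sub-pixels, that the decoding mask carries the same code for a white pixel and the complementary code for a black pixel, and that stacking the two transparencies acts as a logical OR of opaque sub-pixels; all names are illustrative.

```python
import numpy as np

# The six 2x2 codes with exactly two opaque sub-pixels (1 = opaque), flattened.
PATTERNS = np.array([
    [1, 1, 0, 0], [0, 0, 1, 1], [1, 0, 1, 0],
    [0, 1, 0, 1], [1, 0, 0, 1], [0, 1, 1, 0],
])

def to_image(codes):
    """Lay out per-pixel codes of shape (h, w, 4) as a (2h, 2w) image."""
    h, w, _ = codes.shape
    return codes.reshape(h, w, 2, 2).transpose(0, 2, 1, 3).reshape(2 * h, 2 * w)

def make_shares(secret, seed=None):
    """Split a binary secret image (1 = black) into an encrypted image and a mask."""
    rng = np.random.default_rng(seed)
    choice = rng.integers(0, len(PATTERNS), size=secret.shape)
    code = PATTERNS[choice]                               # random code per pixel
    mask = np.where(secret[..., None] == 1, 1 - code, code)
    return to_image(code), to_image(mask)

def overlay(share_a, share_b):
    """Stack two transparencies: a sub-pixel is opaque if either layer is opaque."""
    return np.maximum(share_a, share_b)

def opaque_count(image):
    """Number of opaque sub-pixels in each 2x2 block."""
    h, w = image.shape[0] // 2, image.shape[1] // 2
    return image.reshape(h, 2, w, 2).sum(axis=(1, 3))

secret = np.array([[0, 1, 1],
                   [1, 0, 1]])
encrypted, mask = make_shares(secret, seed=1)
stacked = overlay(encrypted, mask)
print(opaque_count(stacked))
```

Every block of either share alone contains exactly two opaque sub-pixels, so a single share shows uniform gray; only the correctly aligned stack separates half-dark (white) from fully dark (black) pixels.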

The authors proposed a compound-eye imaging system called thin observation module by bound optics (TOMBO), which is inspired by the compound eye found in insects [39]. As shown in Fig. 10, it is an imaging system in which a microlens array is arranged in front of the image sensor, and multiple images are captured at once. This is a kind of multi-aperture optical system and provides various interesting properties. The system can be made very thin, and by appropriately setting the optical characteristics of each ommatidium and combining it with post-processing using a computer, the object parallax, light beam information, spectral information, etc. can be acquired. The presentation of TOMBO was one of the triggers for the organization of the OSA Topical Meeting on Computational Optical Sensing and Imaging (COSI) [40].

Fig. 10 The compound-eye imaging system TOMBO. The system is composed of a microlens array, a signal separator, and an image sensor. The individual microlens and the corresponding area of the image sensor form an imaging system called a unit. This system is a kind of multi-aperture optical system and provides various functionalities associated with the post processing

The imaging technique used in COSI is called computational imaging. In conventional imaging, the signal captured by the imaging device through an optical system is required to reproduce the subject information faithfully. However, when considering signal acquisition for information systems such as machine vision, such requirements are not necessarily important. Instead, by jointly optimizing the optical and computational systems, new imaging technologies beyond the functions and performance of conventional imaging can be developed. This is the fundamental concept of computational imaging, which combines optical encoding and computational decoding. This combination enables various imaging modalities, and several imaging techniques beyond conventional frameworks have been proposed [41, 42].
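The pairing of optical encoding and computational decoding can be illustrated with a toy linear model. The sketch assumes that the optics apply a known linear code, here a set of random binary masks with more measurements than unknowns, and that the decoder solves the resulting system by least squares; the names `optical_encode` and `computational_decode`, the mask design, and the noise-free setting are illustrative assumptions and do not describe a specific system from [41, 42].

```python
import numpy as np

def optical_encode(x, A):
    """Optics side: each detector reading is a coded sum of the object values."""
    return A @ x

def computational_decode(y, A):
    """Computer side: recover the object from the coded measurements."""
    x_hat, *_ = np.linalg.lstsq(A, y, rcond=None)
    return x_hat

rng = np.random.default_rng(0)
n_pixels, n_measurements = 64, 96

x = rng.random(n_pixels)                                   # unknown object (flattened)
A = rng.integers(0, 2, size=(n_measurements, n_pixels)).astype(float)   # binary coding masks

y = optical_encode(x, A)            # raw data need not look like the object at all
x_hat = computational_decode(y, A)
print(np.allclose(x_hat, x))
```

The raw measurement `y` is not an image of the object, yet the object is recovered after decoding, which is why faithful image formation in the optics is no longer a strict requirement.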

The task of optical coding in computational imaging is the same as that in optical computing: converting object information into a form that is easy for the processing system to handle. Computational imaging can thus be regarded as a modern form of optical computing dedicated to information visualization [43]. In imaging and object recognition using scattering media, new achievements have been made using deep learning [44, 45]. In addition, the concept of a photonic accelerator was proposed for universal arithmetic processing [46]. This concept is not limited to imaging and also incorporates optical computing as a processing engine in high-performance computing systems. This strategy is more realistic than that of the optical computers of the 1980s, which were aimed at general-purpose computation. Furthermore, when considering a modern computing system connected to a cloud environment, IoT edge devices, as the interface to the real world, will be the main arena of optical computing. If this happens, the boundary between optical computing and optical coding in computational imaging will become blurred, and a new picture of optical computing is expected to emerge.

6 Nanophotonic Computing

A major limitation of optical computing is the spatial resolution limit due to the diffraction of light. In the case of visible light, the sub-\(\mu \)m spatial resolution is much coarser than the cell pitch of a semiconductor integrated circuit, which is several nanometers. Near-field probes can be used to overcome this limitation, and an interesting concept has been proposed that uses the restriction imposed by the diffraction of light [47]. This method utilizes the hierarchy in optical near-field interaction to hide information under the concept of a hierarchical nanophotonic system, where multiple functions are associated with the physical scales involved. Unfortunately, a problem is associated with the near-field probe: the loss of the parallelism provided by the free-space propagation of light. To solve this problem, a method that combines optical and molecular computing has been proposed [48]. The computational scheme is called photonic DNA computing, which combines autonomous computation by molecules and arithmetic control by light.

DNA molecules have a structure in which four bases, adenine, cytosine, guanine, and thymine, are linked in chains. Because adenine specifically forms hydrogen bonds with thymine and cytosine with guanine, DNA molecules with complementary base sequences are synthesized in vivo, resulting in the formation of a stable state called a double-stranded structure. This is the mechanism by which DNA molecules store genetic information, and DNA can be referred to as the blueprint of living organisms. The principle of DNA computing is to apply this property to matching operations on information encoded as base sequences. Single-stranded DNA molecules with various base sequences are mixed, and the presence of specific information is detected by the presence or absence of double-strand formation. This series of processes proceeds autonomously; however, the processing content must be encoded in the DNA molecules as base sequences. Therefore, it is difficult to perform large-scale complex calculations using this naive principle alone.
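The matching operation can be sketched as string processing. The sketch assumes an idealized model in which a probe strand hybridizes only with a perfectly complementary, antiparallel stretch of a target strand, and it ignores thermodynamics, partial matches, and secondary structure; the function names and the example sequences are hypothetical.

```python
COMPLEMENT = {"A": "T", "T": "A", "C": "G", "G": "C"}

def reverse_complement(sequence):
    """Sequence of the strand that pairs with `sequence` in a double helix."""
    return "".join(COMPLEMENT[base] for base in reversed(sequence))

def hybridizes(probe, target):
    """True if the probe can form a perfect double strand somewhere on the target."""
    return reverse_complement(probe) in target

def detect(pool, encoded_word):
    """Check whether any single strand in the pool carries the encoded word."""
    probe = reverse_complement(encoded_word)    # probe designed to bind the word
    return any(hybridizes(probe, strand) for strand in pool)

# A pool of single strands, each carrying some encoded information.
pool = ["ATGCCGTA", "GGATTCAC", "TTAGGCAT"]

print(reverse_complement("ATGC"))
print(detect(pool, "ATTC"))     # word present in the second strand
print(detect(pool, "CCCC"))     # word absent from the pool
```

In a test tube all strands are examined simultaneously by diffusion and binding, whereas this sketch scans the pool sequentially; the answer is the same, only the parallelism is lost.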

To solve this problem, the author’s group conceived photonic DNA computing [48], which combines optical technology and DNA computing, and conducted a series of studies. It exploits the minuteness, autonomous reactivity, and large-scale parallelism of DNA molecules, as shown in Fig. 11. Photonic DNA computing realizes flexible and efficient massively parallel processing by collectively manipulating the DNA molecules using optical signals. In this system, DNA molecules act as nanoscale computational elements and optical technology provides an interface. Because it is based on molecular reactions, it has the disadvantage of slow calculation speed; however, it is expected to be used as a nanoprocessor that can operate in vivo.

Fig. 11 A schematic diagram of photonic DNA computing. As in conventional DNA computing, DNA molecules serve as both the information medium and an autonomous computing engine. In this system, several separate reaction spaces are prepared so that multiple sets of DNA reactions can proceed concurrently. Laser-trapped microbeads are employed to transfer information-encoded DNA between the reaction spaces. DNA denaturation induced by light illumination is used to control the local reaction

To overcome the limit on computational speed imposed by molecular reactions, an arithmetic circuit using Förster resonance energy transfer (FRET) has been developed [49]. FRET is an energy-transfer phenomenon that occurs between particles separated by several nanometers, and it enables high-speed information transmission. Using DNA molecules as scaffolds, fluorescent molecules can be arranged with nanoscale precision to generate FRET. As shown in Fig. 12, AND, OR, and NOT gates have been implemented. By combining these gates, nanoscale arithmetic circuits can be constructed. This technology is characterized by its minuteness and low energy consumption. In contrast to a system configuration with precisely arranged fluorescent molecules, a random arrangement method is also promising. Based on this concept, our group is conducting research to construct a FRET network with quantum dots and to use its output signals in the space, time, and spectrum domains as a physical reservoir [6]. For more details, please refer to the other chapters of this book.

Fig. 12 Logic gates implemented by FRET. a DNA scaffold logic using FRET signal cascades. b A fluorescent dye corresponding to site i of a DNA scaffold switches between the ON and OFF states according to the presence of an input molecule (input a). c Configuration for the AND logic operation. Fluorescent molecules of a FRET pair are assigned to neighboring sites, i and j. d Configuration for the OR logic operation. Multiple input molecules can deliver a fluorescent molecule to a single site [49]
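The gate configurations described above can be illustrated with a small Boolean abstraction. The sketch below is an assumption-laden simplification and not the circuit of [49]: a site is treated as ON when any of its input molecules is present, and the cascade produces an output only when every site along it is ON. The standard distance dependence of FRET efficiency, \(E = 1/(1 + (r/R_0)^6)\), is included to show why nanometer-scale placement matters; the Förster radius of 5 nm is an illustrative value, and the NOT gate is omitted because its mechanism is not described here.

```python
# Boolean abstraction of FRET cascade logic (illustrative, not the design of [49]).

def fret_efficiency(distance_nm: float, forster_radius_nm: float = 5.0) -> float:
    """Standard FRET efficiency E = 1 / (1 + (r / R0)^6).

    The default R0 of 5 nm is an assumed, illustrative value.
    """
    return 1.0 / (1.0 + (distance_nm / forster_radius_nm) ** 6)


def site_on(inputs_present: list[bool]) -> bool:
    """A site holds a dye if any of its input molecules delivered one (OR)."""
    return any(inputs_present)


def cascade_output(sites: list[bool]) -> bool:
    """Energy reaches the output only if every site in the chain is ON (AND)."""
    return all(sites)


def and_gate(a: bool, b: bool) -> bool:
    # Inputs a and b control neighboring sites i and j of one cascade.
    return cascade_output([site_on([a]), site_on([b])])


def or_gate(a: bool, b: bool) -> bool:
    # Inputs a and b can both deliver a dye to the same single site.
    return cascade_output([site_on([a, b])])


if __name__ == "__main__":
    for r in (2.0, 5.0, 10.0):
        print(f"r = {r} nm, E = {fret_efficiency(r):.3f}")
    for a in (False, True):
        for b in (False, True):
            print(a, b, and_gate(a, b), or_gate(a, b))
```

The sixth-power dependence means the efficiency falls from one half at \(r = R_0\) to below 2% at \(r = 2R_0\), which is why a scaffold that fixes dye positions at the nanometer scale is needed.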

7 Toward Further Extension

First, the significance of optical computing technology in the 1980s and 1990s is considered. Although this chapter has introduced many technologies, several of them never led to explicit computing systems. However, some have come to fruition as optical devices and technologies that support current information and communication technologies. The exploration of nonlinear optical phenomena has promoted the development of optoelectronics, and numerous results have been obtained, including those for quantum dot optical devices. They are used in current optical communications, and their importance is evident. Many researchers and engineers involved in optical computing have contributed to the development of general optical technologies, and their fields have evolved into digital holography [50] and information photonics [51]. From this perspective, it can be said that the previous optical computing boom laid the foundation for current optical information technology.

However, as far as computing tasks are concerned, the research of that time was not necessarily successful. One reason is that researchers were, for better or worse, too caught up in digital arithmetic methods. The diffraction limit of light is on the order of submicrometers, which is too large compared with the information density realized using semiconductor integration technology. Therefore, a simple imitation or partial application of digital arithmetic methods cannot demonstrate the uniqueness of computation using light. In addition, the peripheral technologies needed to constitute a system were immature. Systematization requires not only elemental technologies but also design theories and implementation technologies to bring them together, as well as applications that motivate their development. Unfortunately, the technical level at that time did not provide sufficient groundwork for development.

What about neural networks, which are based on analog arithmetic rather than digital arithmetic? As mentioned above, optical neural networks are architectures well suited to optical computing and can avoid these problems. However, the elemental devices were similarly immature, and it was difficult to develop them into practical systems, which require interconnections among a very large number of nodes. In addition, studies on neural networks themselves were insufficient, and progress had to wait for the current development of deep neural networks. Thus, the ingenious architectures of that time were difficult to develop owing to the immaturity of peripheral technologies, and they did not achieve superiority over competitors. Furthermore, the problems that required high-performance computing were not clear, and it can be said that the appeal of optical computing as a proposition, including the social demand for it, was insufficient.

Based on these circumstances, we now consider the current status of optical computing. Now that practical applications of quantum computing are on the horizon, expectations for optical quantum computing are increasing [52]. Although not discussed in this chapter, quantum computing continues to be a promising application of optical computing. In addition, optical coding in computational imaging can be considered a modern form of optical computing with limited use and purpose [43]. Computational imaging realizes highly functional and/or high-performance imaging by combining optical coding with arithmetic decoding. In this framework, the optical coding that takes place during image formation determines the function of the entire process and enables high throughput. Various optical coding methods, such as point spread function modulation, called PSF engineering [53], object modulation by projected illumination [54], and multiplexed imaging with multiple apertures [55], form the field of modern optical computing.

A form of optical computing that is closer to practical application is neuromorphic computing [56]. The superior connection capability of optical technology makes it suitable for implementing neural networks that require many interconnections, and many studies are underway. However, the human brain contains approximately 100 billion neurons, each connected through approximately 10 thousand synapses; therefore, a breakthrough is required to realize networks of this scale optically. Deep learning models employ advanced computational algorithms; however, their implementation relies on existing computers. The photonic accelerator is a realistic solution: it is dedicated to the processing in which light has an advantage [46]. A specific example is an optical processor that performs the multiply-accumulate operations necessary for learning [3].
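Both the scale of the interconnection problem and the operation a photonic accelerator would take over can be made concrete with a short sketch. The neuron and synapse counts are the approximate figures quoted above; the function names and the tiny weight matrix are hypothetical, and the code runs on an ordinary computer rather than modeling any optical hardware.

```python
# Connection count of the brain-scale network quoted in the text,
# and the multiply-accumulate (MAC) operation at the core of neural networks.

NEURONS = 100_000_000_000      # approximately 100 billion
SYNAPSES_PER_NEURON = 10_000   # approximately 10 thousand

total_connections = NEURONS * SYNAPSES_PER_NEURON  # 10**15


def multiply_accumulate(weights: list[float], inputs: list[float]) -> float:
    """Weighted sum of the inputs: one MAC per (weight, input) pair."""
    acc = 0.0
    for w, x in zip(weights, inputs):
        acc += w * x
    return acc


def layer(weight_matrix: list[list[float]], inputs: list[float]) -> list[float]:
    """One fully connected layer: a MAC chain per output node."""
    return [multiply_accumulate(row, inputs) for row in weight_matrix]


if __name__ == "__main__":
    print(f"{total_connections:.1e} connections")   # 1.0e+15 connections
    print(layer([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0]))  # [3.0, 7.0]
```

A layer with N inputs and M outputs needs N × M multiply-accumulate operations, which is the workload that dense optical interconnection is expected to handle in parallel.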

Furthermore, nanophotonic computing, based on optical phenomena at the molecular level, has significant potential. Reservoir computing can alleviate connectivity problems and enable the configuration of more realistic optical computing systems [57]. Our group is developing a reservoir computing system that utilizes a FRET network, which relies on energy transfer between adjacent quantum dots [6]. FRET networks are expected to perform computational processing with extremely low energy consumption. The background of this research is the explosive increase in the amount of information and arithmetic processing, which requires enormous computational power, as represented by deep learning. Computing technologies that consume less energy are expected to become increasingly important in the future. In addition, cooperation between the real and virtual worlds, as in the metaverse [58] and cyber-physical systems [59], is becoming increasingly important. As an interface between them, optical computing is expected to be applied in IoT edge devices that exploit the characteristics of optical technologies.
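The reason reservoir computing eases the connectivity problem is that the internal connections are fixed and random; only a simple readout is trained. The sketch below is a generic software reservoir, not a model of the FRET network in [6]. It illustrates the echo state property, in which the reservoir state comes to depend on the input history rather than on the initial state. The reservoir size, the row gain of 0.9, and the sinusoidal input are all illustrative assumptions, and readout training is omitted.

```python
# Generic software reservoir illustrating the echo state property.
# Assumption: each row of the recurrent weights has absolute sum 0.9 (< 1),
# which makes the tanh update a contraction, so initial states are forgotten.
import math
import random


def make_reservoir(n: int, seed: int = 0, row_gain: float = 0.9):
    """Create fixed random recurrent weights and input weights."""
    rng = random.Random(seed)
    raw = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    w = [[row_gain * v / sum(abs(u) for u in row) for v in row] for row in raw]
    w_in = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return w, w_in


def step(state, u, w, w_in):
    """One reservoir update: x <- tanh(W x + w_in * u)."""
    return [
        math.tanh(sum(wij * sj for wij, sj in zip(row, state)) + wi * u)
        for row, wi in zip(w, w_in)
    ]


def run(state, inputs, w, w_in):
    """Drive the reservoir with an input sequence and return the final state."""
    for u in inputs:
        state = step(state, u, w, w_in)
    return state


if __name__ == "__main__":
    w, w_in = make_reservoir(8)
    inputs = [math.sin(0.3 * t) for t in range(60)]
    a = run([0.9] * 8, inputs, w, w_in)
    b = run([-0.9] * 8, inputs, w, w_in)
    gap = max(abs(x - y) for x, y in zip(a, b))
    print(f"state gap after 60 steps: {gap:.2e}")
```

Because the two runs receive the same input, their states converge even though they start far apart. In a full system, a linear readout would then be trained on such states, leaving the reservoir itself untouched.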

In conclusion, optical computing is by no means a technology of the past and is being revived as a new computing technology. In addition, optical information technology research areas that are not aimed at computing, such as digital holography and information photonics, are being developed. By combining the latest information technology with various optical technologies cultivated thus far, a new frontier in information science is being opened.

References

D. Schaefer, J. Strong, Tse computers. Proc. IEEE 65(1), 129–138 (1977). https://doi.org/10.1109/PROC.1977.10437

Adaptive optics: Analysis and methods/computational optical sensing and imaging/information photonics/signal recovery and synthesis topical meetings on CD-ROM (2005)

Y. Shen, N.C. Harris, S. Skirlo, M. Prabhu, T. Baehr-Jones, M. Hochberg, X. Sun, S. Zhao, H. Larochelle, D. Englund, M. Soljačić, Deep learning with coherent nanophotonic circuits. Nat. Photonics 11(7), 441–446 (2017). https://doi.org/10.1038/nphoton.2017.93

X. Lin, Y. Rivenson, N.T. Yardimci, M. Veli, Y. Luo, M. Jarrahi, A. Ozcan, All-optical machine learning using diffractive deep neural networks. Science 361(6406), 1004–1008 (2018). https://doi.org/10.1126/science.aat8084

G. Tanaka, T. Yamane, J.B. Héroux, R. Nakane, N. Kanazawa, S. Takeda, H. Numata, D. Nakano, A. Hirose, Recent advances in physical reservoir computing: a review. Neural Netw. 115, 100–123 (2019). https://doi.org/10.1016/j.neunet.2019.03.005

N. Tate, Y. Miyata, S. Sakai, A. Nakamura, S. Shimomura, T. Nishimura, J. Kozuka, Y. Ogura, J. Tanida, Quantitative analysis of nonlinear optical input/output of a quantum-dot network based on the echo state property. Opt. Express 30(9), 14669–14676 (2022). https://doi.org/10.1364/OE.450132, https://opg.optica.org/oe/abstract.cfm?URI=oe-30-9-14669

W.P. Bleha, L.T. Lipton, E. Wiener-Avnear, J. Grinberg, P.G. Reif, D. Casasent, H.B. Brown, B.V. Markevitch, Application of the liquid crystal light valve to real-time optical data processing. Opt. Eng. 17(4), 174371 (1978). https://doi.org/10.1117/12.7972245

G. Lazarev, A. Hermerschmidt, S. Krüger, S. Osten, LCOS Spatial Light Modulators: Trends and Applications, Optical Imaging and Metrology: Advanced Technologies (2012), pp.1–29

A.M. Weiner, J.P. Heritage, E.M. Kirschner, High-resolution femtosecond pulse shaping. J. Opt. Soc. Am. B 5(8), 1563–1572 (1988). https://doi.org/10.1364/JOSAB.5.001563, https://opg.optica.org/josab/abstract.cfm?URI=josab-5-8-1563

P. Refregier, B. Javidi, Optical image encryption based on input plane and Fourier plane random encoding. Opt. Lett. 20(7), 767–769 (1995). https://doi.org/10.1364/OL.20.000767, https://opg.optica.org/ol/abstract.cfm?URI=ol-20-7-767

O. Matoba, T. Nomura, E. Perez-Cabre, M.S. Millan, B. Javidi, Optical techniques for information security. Proc. IEEE 97(6), 1128–1148 (2009). https://doi.org/10.1109/JPROC.2009.2018367

X. Quan, M. Kumar, O. Matoba, Y. Awatsuji, Y. Hayasaki, S. Hasegawa, H. Wake, Three-dimensional stimulation and imaging-based functional optical microscopy of biological cells. Opt. Lett. 43(21), 5447–5450 (2018). https://doi.org/10.1364/OL.43.005447, https://opg.optica.org/ol/abstract.cfm?URI=ol-43-21-5447

B.K. Jenkins, A.A. Sawchuk, T.C. Strand, R. Forchheimer, B.H. Soffer, Sequential optical logic implementation. Appl. Opt. 23(19), 3455–3464 (1984). https://doi.org/10.1364/AO.23.003455, https://opg.optica.org/ao/abstract.cfm?URI=ao-23-19-3455

D.A.B. Miller, D.S. Chemla, T.C. Damen, A.C. Gossard, W. Wiegmann, T.H. Wood, C.A. Burrus, Novel hybrid optically bistable switch: the quantum well self-electro-optic effect device. Appl. Phys. Lett. 45(1), 13–15 (1984). https://doi.org/10.1063/1.94985

J. Tanida, Y. Ichioka, Optical logic array processor using shadowgrams. J. Opt. Soc. Am. 73(6), 800–809 (1983). https://doi.org/10.1364/JOSA.73.000800, https://opg.optica.org/abstract.cfm?URI=josa-73-6-800

A. Huang, Parallel algorithms for optical digital computers, in 10th International Optical Computing Conference, vol. 0422, ed. by S. Horvitz (International Society for Optics and Photonics, SPIE, 1983), pp. 13–17. https://doi.org/10.1117/12.936118

K.-H. Brenner, A. Huang, N. Streibl, Digital optical computing with symbolic substitution. Appl. Opt. 25(18), 3054–3060 (1986). https://doi.org/10.1364/AO.25.003054, https://opg.optica.org/ao/abstract.cfm?URI=ao-25-18-3054

J. Tanida, Y. Ichioka, Programming of optical array logic. 1: Image data processing. Appl. Opt. 27(14), 2926–2930 (1988). https://doi.org/10.1364/AO.27.002926, https://opg.optica.org/ao/abstract.cfm?URI=ao-27-14-2926

J. Tanida, M. Fukui, Y. Ichioka, Programming of optical array logic. 2: Numerical data processing based on pattern logic. Appl. Opt. 27(14), 2931–2939 (1988). https://doi.org/10.1364/AO.27.002931, https://opg.optica.org/ao/abstract.cfm?URI=ao-27-14-2931

B. Chopard, M. Droz, Cellular Automata Modeling of Physical Systems (Cambridge University Press, Cambridge, 1998). https://doi.org/10.1007/978-0-387-30440-3_57

B. Durand, Z. Róka, The Game of Life: Universality Revisited (Springer Netherlands, Dordrecht, 1999), pp. 51–74. https://doi.org/10.1007/978-94-015-9153-9_2

K.-S. Huang, A.A. Sawchuk, B.K. Jenkins, P. Chavel, J.-M. Wang, A.G. Weber, C.-H. Wang, I. Glaser, Digital optical cellular image processor (DOCIP): experimental implementation. Appl. Opt. 32(2), 166–173 (1993). https://doi.org/10.1364/AO.32.000166, https://opg.optica.org/ao/abstract.cfm?URI=ao-32-2-166

M.J. Murdocca, A Digital Design Methodology for Optical Computing (MIT Press, Cambridge, MA, USA, 1991)

J. Tanida, Y. Ichioka, OPALS: optical parallel array logic system. Appl. Opt. 25(10), 1565–1570 (1986). https://doi.org/10.1364/AO.25.001565, https://opg.optica.org/ao/abstract.cfm?URI=ao-25-10-1565

N. McArdle, M. Naruse, M. Ishikawa, Optoelectronic parallel computing using optically interconnected pipelined processing arrays. IEEE J. Sel. Top. Quantum Electron. 5(2), 250–260 (1999)

G. Li, D. Huang, E. Yuceturk, P.J. Marchand, S.C. Esener, V.H. Ozguz, Y. Liu, Three-dimensional optoelectronic stacked processor by use of free-space optical interconnection and three-dimensional VLSI chip stacks. Appl. Opt. 41(2), 348–360 (2002). https://doi.org/10.1364/AO.41.000348, https://opg.optica.org/ao/abstract.cfm?URI=ao-41-2-348

J.W. Goodman, A.R. Dias, L.M. Woody, Fully parallel, high-speed incoherent optical method for performing discrete Fourier transforms. Opt. Lett. 2(1), 1–3 (1978). https://doi.org/10.1364/OL.2.000001, https://opg.optica.org/ol/abstract.cfm?URI=ol-2-1-1

D. Psaltis, N. Farhat, Optical information processing based on an associative-memory model of neural nets with thresholding and feedback. Opt. Lett. 10(2), 98–100 (1985). https://doi.org/10.1364/OL.10.000098, https://opg.optica.org/ol/abstract.cfm?URI=ol-10-2-98

D. Psaltis, D. Brady, K. Wagner, Adaptive optical networks using photorefractive crystals. Appl. Opt. 27(9), 1752–1759 (1988). https://doi.org/10.1364/AO.27.001752, https://opg.optica.org/ao/abstract.cfm?URI=ao-27-9-1752

M. Lukoševičius, H. Jaeger, Reservoir computing approaches to recurrent neural network training. Comput. Sci. Rev. 3(3), 127–149 (2009). https://doi.org/10.1016/j.cosrev.2009.03.005, https://www.sciencedirect.com/science/article/pii/S1574013709000173

F. Duport, B. Schneider, A. Smerieri, M. Haelterman, S. Massar, All-optical reservoir computing. Opt. Express 20(20), 22783–22795 (2012). https://doi.org/10.1364/OE.20.022783, https://opg.optica.org/oe/abstract.cfm?URI=oe-20-20-22783

J. Nakayama, K. Kanno, A. Uchida, Laser dynamical reservoir computing with consistency: an approach of a chaos mask signal. Opt. Express 24(8), 8679–8692 (2016). https://doi.org/10.1364/OE.24.008679, https://opg.optica.org/oe/abstract.cfm?URI=oe-24-8-8679

N. Segawa, S. Shimomura, Y. Ogura, J. Tanida, Tunable reservoir computing based on iterative function systems. Opt. Express 29(26), 43164–43173 (2021). https://doi.org/10.1364/OE.441236, https://opg.optica.org/oe/abstract.cfm?URI=oe-29-26-43164

E.-H. Lee, VLSI photonics: a story from the early studies of optical microcavity microspheres and microrings to present day and its future outlook, in Optical Processes in Microparticles and Nanostructures: A Festschrift Dedicated to Richard Kounai Chang on His Retirement from Yale University (World Scientific, 2011), pp. 325–341

T. Komuro, I. Ishii, M. Ishikawa, A. Yoshida, A digital vision chip specialized for high-speed target tracking. IEEE Trans. Electron Devices 50(1), 191–199 (2003). https://doi.org/10.1109/TED.2002.807255

B. Jalali, S. Fathpour, Silicon photonics. J. Light. Technol. 24(12), 4600–4615 (2006). https://doi.org/10.1109/JLT.2006.885782

H. Yamamoto, Y. Hayasaki, N. Nishida, Securing information display by use of visual cryptography. Opt. Lett. 28(17), 1564–1566 (2003). https://doi.org/10.1364/OL.28.001564, https://opg.optica.org/ol/abstract.cfm?URI=ol-28-17-1564

J. Tanida, K. Tsuchida, R. Watanabe, Digital-optical computational imaging capable of end-point logic operations. Opt. Express 30(1), 210–221 (2022). https://doi.org/10.1364/OE.442985, https://opg.optica.org/oe/abstract.cfm?URI=oe-30-1-210

J. Tanida, T. Kumagai, K. Yamada, S. Miyatake, K. Ishida, T. Morimoto, N. Kondou, D. Miyazaki, Y. Ichioka, Thin observation module by bound optics (TOMBO): concept and experimental verification. Appl. Opt. 40(11), 1806–1813 (2001). https://doi.org/10.1364/AO.40.001806, https://opg.optica.org/ao/abstract.cfm?URI=ao-40-11-1806

D.J. Brady, A. Dogariu, M.A. Fiddy, A. Mahalanobis, Computational optical sensing and imaging: introduction to the feature issue. Appl. Opt. 47(10), COSI1–COSI2 (2008). https://doi.org/10.1364/AO.47.0COSI1, https://opg.optica.org/ao/abstract.cfm?URI=ao-47-10-COSI1

E.R. Dowski, W.T. Cathey, Extended depth of field through wave-front coding. Appl. Opt. 34(11), 1859–1866 (1995). https://doi.org/10.1364/AO.34.001859, https://opg.optica.org/ao/abstract.cfm?URI=ao-34-11-1859

J.N. Mait, G.W. Euliss, R.A. Athale, Computational imaging. Adv. Opt. Photon. 10(2), 409–483 (2018). https://doi.org/10.1364/AOP.10.000409, https://opg.optica.org/aop/abstract.cfm?URI=aop-10-2-409

J. Tanida, Computational imaging demands a redefinition of optical computing. Jpn. J. Appl. Phys. 57(9S1), 09SA01 (2018). https://doi.org/10.7567/JJAP.57.09SA01

T. Ando, R. Horisaki, J. Tanida, Speckle-learning-based object recognition through scattering media. Opt. Express 23(26), 33902–33910 (2015). https://doi.org/10.1364/OE.23.033902, https://opg.optica.org/oe/abstract.cfm?URI=oe-23-26-33902

R. Horisaki, R. Takagi, J. Tanida, Learning-based imaging through scattering media. Opt. Express 24(13), 13738–13743 (2016). https://doi.org/10.1364/OE.24.013738, https://opg.optica.org/oe/abstract.cfm?URI=oe-24-13-13738

K. Kitayama, M. Notomi, M. Naruse, K. Inoue, S. Kawakami, A. Uchida, Novel frontier of photonics for data processing—Photonic accelerator. APL Photonics 4(9), 090901 (2019). https://doi.org/10.1063/1.5108912

M. Naruse, N. Tate, M. Ohtsu, Optical security based on near-field processes at the nanoscale. J. Opt. 14(9), 094002 (2012). https://doi.org/10.1088/2040-8978/14/9/094002

J. Tanida, Y. Ogura, S. Saito, Photonic DNA computing: concept and implementation, in ICO20: Optical Information Processing, vol. 6027, eds. by Y. Sheng, S. Zhuang, Y. Zhang (International Society for Optics and Photonics, SPIE, 2006), p. 602724. https://doi.org/10.1117/12.668196

T. Nishimura, Y. Ogura, J. Tanida, Fluorescence resonance energy transfer-based molecular logic circuit using a DNA scaffold. Appl. Phys. Lett. 101(23), 233703 (2012). https://doi.org/10.1063/1.4769812

B. Javidi, A. Carnicer, A. Anand, G. Barbastathis, W. Chen, P. Ferraro, J.W. Goodman, R. Horisaki, K. Khare, M. Kujawinska, R.A. Leitgeb, P. Marquet, T. Nomura, A. Ozcan, Y. Park, G. Pedrini, P. Picart, J. Rosen, G. Saavedra, N.T. Shaked, A. Stern, E. Tajahuerce, L. Tian, G. Wetzstein, M. Yamaguchi, Roadmap on digital holography [invited]. Opt. Express 29(22), 35078–35118 (2021). https://doi.org/10.1364/OE.435915, https://opg.optica.org/oe/abstract.cfm?URI=oe-29-22-35078

G. Barbastathis, A. Krishnamoorthy, S.C. Esener, Information photonics: introduction. Appl. Opt. 45(25), 6315–6317 (2006). https://doi.org/10.1364/AO.45.006315, https://opg.optica.org/ao/abstract.cfm?URI=ao-45-25-6315

P. Kok, W.J. Munro, K. Nemoto, T.C. Ralph, J.P. Dowling, G.J. Milburn, Linear optical quantum computing with photonic qubits. Rev. Mod. Phys. 79, 135–174 (2007). https://doi.org/10.1103/RevModPhys.79.135, https://link.aps.org/doi/10.1103/RevModPhys.79.135

P.T.C. So, H.-S. Kwon, C.Y. Dong, Resolution enhancement in standing-wave total internal reflection microscopy: a point-spread-function engineering approach. J. Opt. Soc. Am. A 18(11), 2833–2845 (2001). https://doi.org/10.1364/JOSAA.18.002833, https://opg.optica.org/josaa/abstract.cfm?URI=josaa-18-11-2833

T. Ando, R. Horisaki, J. Tanida, Three-dimensional imaging through scattering media using three-dimensionally coded pattern projection. Appl. Opt. 54(24), 7316–7322 (2015). https://doi.org/10.1364/AO.54.007316, https://opg.optica.org/ao/abstract.cfm?URI=ao-54-24-7316

J. Tanida, Multi-aperture optics as a universal platform for computational imaging. Opt. Rev. 23(5), 859–864 (2016). https://doi.org/10.1007/s10043-016-0256-0

N. Zheng, P. Mazumder, Learning in Energy-Efficient Neuromorphic Computing: Algorithm and Architecture Co-Design (Wiley, New York, 2019)

D. Brunner, M.C. Soriano, G. Van der Sande (eds.), Optical Recurrent Neural Networks (De Gruyter, Berlin, Boston, 2019). https://doi.org/10.1515/9783110583496

S. Mystakidis, Metaverse. Encyclopedia 2(1), 486–497 (2022). https://doi.org/10.3390/encyclopedia2010031, https://www.mdpi.com/2673-8392/2/1/31

E.A. Lee, Cyber physical systems: design challenges, in 2008 11th IEEE International Symposium on Object and Component-Oriented Real-Time Distributed Computing (ISORC) (2008), pp. 363–369. https://doi.org/10.1109/ISORC.2008.25

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2024 The Author(s)

About this chapter

Cite this chapter

Tanida, J. (2024). Revival of Optical Computing. In: Suzuki, H., Tanida, J., Hashimoto, M. (eds) Photonic Neural Networks with Spatiotemporal Dynamics. Springer, Singapore. https://doi.org/10.1007/978-981-99-5072-0_1

DOI: https://doi.org/10.1007/978-981-99-5072-0_1

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-5071-3

Online ISBN: 978-981-99-5072-0

eBook Packages: Computer Science, Computer Science (R0)