Abstract

Sea surface height anomaly (SSHA) is an essential parameter for monitoring the oceans. Because of global warming and glacial melting, sea-level rise is expected to accelerate, eventually causing ecosystem imbalance and threatening coastal cities. In this chapter, we introduce a multilayer fusion recurrent neural network (MLFrnn) that produces a precise and holistic estimate of the SSHA field using only a series of previous SSHA observations. The MLFrnn model captures and fuses the spatiotemporal features of the SSHA time series in both neighboring and remote areas. The network was trained end to end, and its prediction performance was evaluated on daily average satellite altimeter SSHA data of the South China Sea from January 1, 2001, to May 13, 2019. Experiments showed that the MLFrnn model yields reliable results, with a root mean square error (RMSE) of 0.027 m for the 21-day average prediction. Compared with currently available deep learning networks, MLFrnn offers better prediction performance and stability, particularly for large-scale and long-term prediction.



1 Significance of Sea Surface Height Anomaly Prediction

Sea surface height anomaly (SSHA) is one of the essential parameters for investigating ocean dynamics and climate change [4, 6, 32]; it reflects mesoscale ocean dynamic features such as currents, tides, ocean fronts, and water masses. It is also an important parameter for marine disaster emergency response [10, 30, 37, 39]. Historically, sea-level changes were computed from tide gauge data [15, 40]. Unlike sparsely distributed tide gauge stations, satellite altimetry provides continuous sea-level measurements that cover entire sea areas. Because altimetry measures sea-level change relative to a terrestrial reference frame, it offers high-precision data for studying sea level [1, 8, 13, 25, 34].

2 Review of SSHA Predicting Methods

Numerous approaches based on satellite altimetry data have been proposed for predicting sea-level changes. By the type of model used, they can be categorized into physics-based models [7, 16, 27] and data-driven models [3, 31].

Physics-based models estimate sea-level changes from the relevant physical and dynamical equations. Reference [7] predicted annual and semi-annual global mean sea-level changes with a hydrological model. Reference [16] compared future global and regional sea-level anomalies caused by greenhouse gas emissions in the 21st century, based on the Hadley Centre climate simulations (HadCM2 and HadCM3). Reference [27] attempted to predict global seasonal sea-level anomalies up to 7 months ahead using a dynamical coupled atmosphere-ocean model.

Data-driven models use statistical methods to learn mappings between sea-level anomaly records and are comparatively more accurate in predicting sea-level changes. Reference [3] forecast seasonal sea-level anomalies in the North Atlantic using an autoregressive integrated moving average (ARIMA) model. Reference [31] fitted a polynomial-harmonic model by the least-squares (LS) method to forecast the global mean sea-level anomaly and gridded sea-level anomalies in the eastern equatorial Pacific. To assess sea-level changes on the mid-Atlantic coast of the United States, [12] developed a technique based on empirical mode decomposition (EMD). References [33] and [28] used semi-empirical methods to study sea-level anomalies based on changes in Earth's temperature and in ice-sheet flow, respectively. Reference [14] proposed a hybrid model combining EMD, LS, and singular spectrum analysis to predict long-term sea-level anomalies in the South China Sea (SCS). Reference [17] employed evolutionary support vector regression (SVR) and gene expression programming to predict sea-level anomalies in the Caspian Sea from previous sea-level records. In [18], the same authors combined SVR with empirical orthogonal functions (EOFs): EOF analysis extracted the main components to reduce the data dimensionality, and SVR predicted sea-level anomalies in the tropical Pacific.

Deep learning (DL) is a data-driven approach that is well suited to modeling nonlinear relationships, and it has recently been used to forecast time-series data [9, 19, 22, 36, 42]. Because SSHA pattern prediction is similar to time-series prediction, researchers have proposed a variety of DL-based data-driven models for predicting SSHAs. Reference [5] adopted a recurrent neural network (RNN) to predict and analyze sea-level anomalies; RNNs outperform simple regression models by extracting and fusing features along the time dimension [11]. Reference [23] proposed a DL model that integrates a long short-term memory (LSTM) network with an attention mechanism [2] to predict SSHAs reliably. Reference [38] developed the merged-LSTM model and showed that it outperforms several advanced machine learning approaches in predicting SSHAs.

The RNN/LSTM forecasting strategies above focus on modeling temporal change, with each LSTM unit continually updating its state over time. They estimate the SSHA from the previous SSHA series either at a single site or at its immediately adjacent sites and therefore ignore the latent information in the SSHA series at associated remote locations. However, the sea level of a region is influenced by both nearby and distant areas, and spatial deformations and temporal dynamics are equally important for predicting future SSHA fields.

Moreover, these approaches can predict the SSHA only at a single grid point, not over the entire region, and a separate model must be trained for each grid point. Forecasting all grid points in an area therefore requires training the network many times to obtain a different set of parameters for each grid point, and the resulting storage and retraining costs are unacceptable.

In the following section, we introduce an SSHA prediction method named the multilayer fusion recurrent neural network (MLFrnn). MLFrnn models both the temporal and the spatial domains and can accurately predict the SSHA map for the entire region.

3 Multi-Layer Fusion Recurrent Neural Network for SSHA Field Prediction

Section 3.1 introduces a classical spatiotemporal forecasting architecture, Sect. 3.2 presents the network architecture of MLFrnn, and Sect. 3.3 discusses the multilayer fusion cell, the fundamental building block of MLFrnn.

3.1 A Classical Spatiotemporal Forecasting Architecture: ConvLSTM

Predicting SSHA fields requires spatiotemporal modeling, which the RNN/LSTM forecasting strategies cannot provide. To extract spatiotemporal information from time-series data, [35] developed ConvLSTM, a variant of LSTM in which all inputs, gates, cell states, and hidden states are represented as three-dimensional (3D) tensors. ConvLSTM uses convolution operators to capture spatial characteristics by explicitly encoding spatial information in these tensors. The key equations of ConvLSTM are as follows:
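For reference, a commonly used form of the ConvLSTM update is given below. It uses the gate notation \(i_{t}\), \(f_{t}\), \(g_{t}\), \(o_{t}\) of this section; the weight symbols \(W\) and biases \(b\) are illustrative, and the original formulation in [35] additionally includes peephole terms that are omitted here.

\[
\begin{aligned}
i_{t} &= \sigma \left( W_{xi} * X_{t} + W_{hi} * H_{t - 1} + b_{i} \right)\\
f_{t} &= \sigma \left( W_{xf} * X_{t} + W_{hf} * H_{t - 1} + b_{f} \right)\\
g_{t} &= \tanh \left( W_{xg} * X_{t} + W_{hg} * H_{t - 1} + b_{g} \right)\\
o_{t} &= \sigma \left( W_{xo} * X_{t} + W_{ho} * H_{t - 1} + b_{o} \right)\\
C_{t} &= f_{t} \odot C_{t - 1} + i_{t} \odot g_{t}\\
H_{t} &= o_{t} \odot \tanh \left( C_{t} \right)
\end{aligned}
\]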

Here, \(*\) denotes the convolution operator, \(\odot \) denotes the Hadamard product, and \(\sigma \) is the sigmoid activation function, whose output in [0, 1] determines how much information about the state is passed on. The information flow through the cell is governed by \(i_{t}\), \(g_{t}\), \(f_{t}\), and \(o_{t}\); because the state is kept in memory, gradients do not vanish quickly, which alleviates the long-term dependency problem.
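The gating arithmetic can be made concrete with a small, dependency-free Python sketch of one ConvLSTM step on a single-channel grid. It assumes 3×3 kernels with zero padding, scalar biases, no peephole terms, and random weights; the function names are hypothetical, and the sketch is an illustration rather than a reference implementation.

```python
import math
import random


def conv2d_same(x, kernel):
    """3x3 cross-correlation (the 'convolution' of deep-learning libraries)
    with zero padding, so the output grid has the same size as the input."""
    rows, cols = len(x), len(x[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        total += kernel[dr + 1][dc + 1] * x[rr][cc]
            out[r][c] = total
    return out


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def convlstm_step(x, h_prev, c_prev, params):
    """One single-channel ConvLSTM step without peephole terms.

    params maps each gate name ('i', 'f', 'g', 'o') to (W_x, W_h, b),
    where W_x and W_h are 3x3 kernels and b is a scalar bias.
    """
    rows, cols = len(x), len(x[0])
    pre = {}
    for gate, (w_x, w_h, b) in params.items():
        cx, ch = conv2d_same(x, w_x), conv2d_same(h_prev, w_h)
        pre[gate] = [[cx[r][c] + ch[r][c] + b for c in range(cols)]
                     for r in range(rows)]
    h_new = [[0.0] * cols for _ in range(rows)]
    c_new = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            i = sigmoid(pre["i"][r][c])     # input gate
            f = sigmoid(pre["f"][r][c])     # forget gate
            g = math.tanh(pre["g"][r][c])   # input-modulation gate
            o = sigmoid(pre["o"][r][c])     # output gate
            # Element-wise (Hadamard) products update the cell state.
            c_new[r][c] = f * c_prev[r][c] + i * g
            h_new[r][c] = o * math.tanh(c_new[r][c])
    return h_new, c_new


random.seed(0)


def rand_kernel():
    return [[random.uniform(-0.1, 0.1) for _ in range(3)] for _ in range(3)]


params = {gate: (rand_kernel(), rand_kernel(), 0.0) for gate in "ifgo"}
size = 5
h = [[0.0] * size for _ in range(size)]   # zero initial hidden state
c = [[0.0] * size for _ in range(size)]   # zero initial cell state
for _ in range(3):                        # three input time steps
    x = [[random.random() for _ in range(size)] for _ in range(size)]
    h, c = convlstm_step(x, h, c, params)
```

Starting from zero states, the hidden state stays strictly inside (-1, 1) because it is the product of a sigmoid output and a tanh output. A practical implementation would use a deep-learning library with multi-channel tensors instead of nested lists.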

Stacking ConvLSTM layers yields an encoder-decoder network, as shown in Fig. 1. The network takes \(X_{t}\) as the input to the first layer and outputs the prediction \({\widetilde{X}}_{t}\) from the n-th layer. Hidden states are passed both vertically and horizontally, but the cell state is updated only within each layer and passed only horizontally. The cell states of different layers are therefore independent: each ConvLSTM layer ignores the cell states of the layers below it, and the first (lowest) layer does not receive the memory contents of the deepest (n-th) layer from the previous time step.

3.2 Architecture of MLFrnn

The states of different ConvLSTM layers are mutually independent, and the connections between layers are not sufficiently exploited. To address this problem, we developed MLFrnn for estimating SSHA fields. As its fundamental building block, a new multilayer fusion cell was designed that captures spatiotemporal features of the SSHA fields at both nearby and remote positions. These features are passed both horizontally and vertically.

MLFrnn model for forecasting SSHA fields. The red and purple arrows indicate the flow of inputs and forecasts, respectively; the green arrows denote the flow of cell-state and hidden-state information, which is transmitted both horizontally and vertically; and the blue arrows show the flow of relevance-state information, which connects the first and deepest layers

Let \(X_{t}\) denote the SSHA field at time step \(t\). The SSHA field at the next time step can then be predicted from the previous \(t\) days of observations as follows:
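Assuming the maximum-likelihood formulation used for ConvLSTM in [35], this prediction can be written as

\[
{X^{'}}_{t + 1} = \mathop{\arg\max}\limits_{X_{t + 1}} \, p\left( X_{t + 1} \mid X_{1}, X_{2}, \ldots, X_{t} \right)
\]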

where \(p( \cdot )\) represents a conditional probability and \({X^{'}}_{t + 1}\) is the forecast SSHA field at time step \(t + 1\).

Figure 2 presents the architecture of the MLFrnn model. The model extracts a cell state \(C_{t}^{n}\) and a hidden state \(H_{t}^{n}\) (where \(t\) denotes the time step and \(n\) the layer index), which store long-term and short-term memories, respectively. Unlike in ConvLSTM, where the cell state is passed only horizontally, the cell state in MLFrnn is passed both vertically and horizontally. To learn SSHA variations, MLFrnn fuses the cell and hidden states from different layers.

In addition, in ConvLSTM the first layer receives nothing from the deepest layer at the previous time step. To overcome this limitation, an additional relevance state \(R_{t}^{n}\) is introduced, which stores the spatial features of the SSHA fields and is updated directly from layer to layer. At the next time step, the relevance state of the deepest layer, \(R_{t}^{n = 4}\), is fed into the first layer to provide richer spatial features.

The forecast SSHA field \({\widetilde{X}}_{t}\) is obtained by applying a \(1 \times 1\) convolution to the hidden state of the deepest layer, \(H_{t}^{n = 4}\).
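A \(1 \times 1\) convolution does not mix neighboring grid cells; at each cell it forms a weighted sum of the hidden state's feature channels. The minimal Python sketch below assumes a hypothetical 2-channel hidden state on a 2×2 grid and made-up weights; the actual number of channels of \(H_{t}^{n = 4}\) and the learned weights are not given in this section.

```python
def conv1x1(hidden, weights, bias=0.0):
    """Apply a 1x1 convolution that maps C channels to one output channel.

    hidden: list of C channels, each an H x W grid (list of lists).
    weights: list of C floats, one per channel.
    At every grid cell the output is bias + sum_k weights[k] * hidden[k][r][c].
    """
    if len(hidden) != len(weights):
        raise ValueError("need exactly one weight per channel")
    rows, cols = len(hidden[0]), len(hidden[0][0])
    return [[bias + sum(w * ch[r][c] for w, ch in zip(weights, hidden))
             for c in range(cols)]
            for r in range(rows)]


# Hypothetical 2-channel hidden state on a 2 x 2 grid.
hidden_state = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[10.0, 20.0], [30.0, 40.0]],
]
forecast = conv1x1(hidden_state, weights=[0.5, 0.25])
print(forecast)  # [[3.0, 6.0], [9.0, 12.0]]
```

Because the kernel covers a single cell, all spatial context in the forecast must already be encoded in the hidden state's channels by the recurrent layers below.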

3.3 Multi-layer Fusion Cell

A multilayer fusion cell consists of a recurrent forecasting module and a feature fusion module, which together fuse the cell state, hidden state, and relevance state to learn the variations of SSHA fields (Fig. 3a). The structure of the recurrent forecasting module is presented first, followed by the feature fusion module.

Multilayer Fusion Cell. a Structure of multilayer fusion cell: The multilayer fusion cell consists of a recurrent forecasting module and a feature fusion module. Concentric circles denote concatenation. b Recurrent forecasting module: The extraction of relevance states is shown in the red box. The extraction of cell states and hidden states is presented in the blue box

3.3.1 Recurrent Forecasting Module

The recurrent forecasting module encodes the relevance state and the cell state simultaneously. As shown in Fig. 3b, the structures in the red box encode the relevance state, and those in the blue box encode the cell state. The module then uses the output gate to extract the hidden state, which is passed to the cell at the next time step.

(1) Encoding relevance states: The inputs are the time-series data \(X_{t}\) and the relevance state \(R_{t}^{n - 1}\). The input gate \({i^{'}}_{t}\), forget gate \({f^{'}}_{t}\), and input-modulation gate \({g^{'}}_{t}\) control the update of the relevance state. The equations for encoding relevance states are:
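Assuming the relevance-state update takes the same gated form as the ConvLSTM cell update in Sect. 3.1, step (1) would read as follows. This is a reconstruction from the description above, with illustrative weight symbols \(W\) and biases \(b\), not the chapter's verified equations. For the first layer, \(R_{t}^{n - 1}\) would be the relevance state of the deepest layer from the previous time step, as described in Sect. 3.2.

\[
\begin{aligned}
{i^{'}}_{t} &= \sigma \left( W_{xi'} * X_{t} + W_{ri'} * R_{t}^{n - 1} + b_{i'} \right)\\
{f^{'}}_{t} &= \sigma \left( W_{xf'} * X_{t} + W_{rf'} * R_{t}^{n - 1} + b_{f'} \right)\\
{g^{'}}_{t} &= \tanh \left( W_{xg'} * X_{t} + W_{rg'} * R_{t}^{n - 1} + b_{g'} \right)\\
R_{t}^{n} &= {f^{'}}_{t} \odot R_{t}^{n - 1} + {i^{'}}_{t} \odot {g^{'}}_{t}
\end{aligned}
\]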

(2) Encoding cell states: The inputs are the time-series data \(X_{t}\) and the hidden state \(H_{t - 1}^{n}\). The input gate \(i_{t}\), forget gate \(f_{t}\), and input-modulation gate \(g_{t}\) control the update of the cell state. The equations for encoding cell states are:

(3) Extracting hidden states: The output gate of the recurrent forecasting module relies on \({\widehat{C}}_{t}^{n}\), \(H_{t - 1}^{n}\), and \(R_{t}^{n}\). The output gate extracts the hidden state from the cell and relevance states as the output. The equations for extracting hidden states are:

where \(*\) is the convolution operator, \(\odot \) denotes the Hadamard product, and \(\sigma \) is the sigmoid activation function, whose output in [0, 1] determines how much of each piece of state information is passed on. By stacking a series of convolution operators, the recurrent forecasting module naturally acquires large receptive fields, so that the evolution at both adjacent and distant sites of the SSHA domain can be captured [21].

3.3.2 Feature Fusion Module

Figure 4 shows the feature fusion module. In this study, \({\widehat{H}}_{t - 1}^{n}\) was concatenated with the hidden state from the previous layer, \(H_{t}^{n - 1}\), to form one input of the feature fusion module, and \({\widehat{C}}_{t - 1}^{n}\) was concatenated with the cell state from the previous layer, \(C_{t}^{n - 1}\), to form the other input. The feature fusion module can be defined as

The feature fusion module integrates the cell and hidden states from different layers. States from shallow layers carry local-scale information about nearby locations, whereas states from deep layers carry global-scale information about remote locations. The feature fusion module combines this local and global information to generate the subsequent SSHA fields.

4 Experimental Results and Discussion

This section presents the experimental results of the MLFrnn model. First, the study area, dataset, and implementation details are described. Next, MLFrnn is compared with currently available approaches, and its prediction results in different seasons are presented. Finally, the impact of the fusion module and the number of layers on SSHA prediction is discussed.

4.1 Study Area and Dataset

The SCS is a semi-enclosed basin that connects the Pacific Ocean with the Indian Ocean. It has complex seafloor topography, mesoscale eddies, and frequent storm surges, and oceanic and atmospheric modes strongly influence its sea level [26, 29, 41, 43]. The strongly fluctuating SSHA in the SCS therefore makes it well suited for validating the proposed network model. A subarea of the SCS, spanning 4.875\(^{\circ }\)N-19.625\(^{\circ }\)N and 109.875\(^{\circ }\)E-119.625\(^{\circ }\)E (red box in Fig. 5), was chosen as the study area.

The satellite altimeter data were derived from multiple missions, including Envisat, ERS-1/2, GFO, Jason-1/2/3, and T/P. The data were produced by AVISO (Archiving, Validation and Interpretation of Satellite Oceanographic data) and distributed by the Copernicus Marine Environment Monitoring Service (CMEMS). Daily mean data covering 0.125\(^{\circ }\)N-25.125\(^{\circ }\)N and 100.125\(^{\circ }\)E-125.125\(^{\circ }\)E from January 1, 2001 to May 13, 2019, with a spatial resolution of 1/4\(^{\circ }\) latitude \(\times \) 1/4\(^{\circ }\) longitude, were used in the experiments. The training set comprised the SSHA fields recorded from January 1, 2001 to May 1, 2016, and the test set comprised those recorded from May 2, 2016 to May 13, 2019. Of the 6647 sequences, 5570 belonged to the training set and 1077 to the test set. Each sequence contained 31 tensors at 1-day intervals; the first 10 tensors served as the input, and the subsequent 21 tensors served as the prediction reference. Before being fed to the model, the data were augmented by horizontal mirroring.
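The sequence counts above can be checked with a short Python sketch. It assumes that 31-day windows are cut with a 1-day stride separately within each split, so that no window straddles the split date; under that assumption the arithmetic reproduces the stated 5570, 1077, and 6647 sequences. The helper names are hypothetical, and the row-reversal used for horizontal mirroring is one plausible reading of that augmentation (the same flip would be applied to every field of a sequence).

```python
from datetime import date

N_INPUT = 10                    # input fields per sequence
N_TARGET = 21                   # fields to predict per sequence
SEQ_LEN = N_INPUT + N_TARGET    # 31 daily fields per sequence


def n_days(start, end):
    """Number of daily fields from start to end, both dates inclusive."""
    return (end - start).days + 1


def n_windows(num_days, seq_len=SEQ_LEN, stride=1):
    """Number of sliding windows of seq_len consecutive days."""
    if num_days < seq_len:
        return 0
    return (num_days - seq_len) // stride + 1


def split_window(window):
    """Split one 31-day window into (inputs, targets)."""
    return window[:N_INPUT], window[N_INPUT:]


def mirror(field):
    """Horizontal mirroring: reverse each row of a 2D field (list of rows)."""
    return [row[::-1] for row in field]


train_days = n_days(date(2001, 1, 1), date(2016, 5, 1))
test_days = n_days(date(2016, 5, 2), date(2019, 5, 13))
n_train, n_test = n_windows(train_days), n_windows(test_days)
print(train_days, test_days)              # 5600 1107
print(n_train, n_test, n_train + n_test)  # 5570 1077 6647
```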

4.2 Implementation Detail

The spatial domain of the monitored SSHA field was represented as an \(H \times W\) grid with \(L\) measurements per grid cell. Accordingly, the daily SSHA data were denoted by a 3D tensor \(X_{t}\) of dimensions \(L \times H \times W\), and the observations over \(t\) time steps form the tensor sequence \(X_{1},X_{2},...,X_{t}\). Before being fed to MLFrnn, these tensors were normalized to the range [0, 1], which puts the data on a common scale and speeds up training and convergence. In the following experiments, \(H\) and \(W\) were set to 100, \(L\) to 1, and \(t\) to 10.
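The chapter does not state which normalization maps the tensors to [0, 1]; min-max scaling is one common choice and is assumed in the Python sketch below. It further assumes that the minimum and maximum are taken from the training data only (so test values may fall slightly outside [0, 1]) and that each field has been flattened into a list; the function names and sample values are hypothetical.

```python
def fit_minmax(values):
    """Return (min, max) of the training values."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("constant data cannot be min-max normalized")
    return lo, hi


def normalize(values, lo, hi):
    """Map values linearly so that lo -> 0 and hi -> 1."""
    span = hi - lo
    return [(v - lo) / span for v in values]


def denormalize(values, lo, hi):
    """Invert normalize(), e.g. to report forecast errors in metres."""
    span = hi - lo
    return [v * span + lo for v in values]


train_ssha = [-0.25, 0.0, 0.5, 0.75]   # hypothetical SSHA values in metres
lo, hi = fit_minmax(train_ssha)
scaled = normalize([-0.25, 0.25, 0.75], lo, hi)
print(scaled)  # [0.0, 0.5, 1.0]
restored = denormalize(scaled, lo, hi)
```

Forecasts produced in normalized units would be passed through the inverse transform before computing errors in metres.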

During training, the initial hidden states \(H_{t = 0}^{n}\), cell states \(C_{t = 0}^{n}\), and relevance states \(R_{t = 0}^{n}\) were all set to zero. Training was stopped after 80,000 iterations with a mini-batch size of 8. The initial learning rate was 0.003 and was multiplied by 0.9 every 2500 iterations [24]. The MSE was used as the loss function, together with the Adam optimizer [20]. The loss decreased rapidly and converged to a small value, and repeated training runs produced only slightly different results.
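The step-decay schedule fits in one line. The Python sketch below assumes that "decreased by a factor of 0.9" means the rate is multiplied by 0.9, and that iterations are counted from 0; the function name is hypothetical.

```python
def learning_rate(iteration, base_lr=0.003, decay=0.9, step=2500):
    """Step decay: multiply base_lr by `decay` once every `step` iterations."""
    return base_lr * decay ** (iteration // step)


print(learning_rate(0))        # 0.003
print(learning_rate(79_999))   # roughly 1.1e-4 after 31 decays
```

If the model were trained in PyTorch (the chapter does not name its framework), the same schedule would correspond to `torch.optim.lr_scheduler.StepLR(optimizer, step_size=2500, gamma=0.9)` with `scheduler.step()` called once per iteration rather than once per epoch.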

For assessing the performance of the MLFrnn model, the following three metrics were adopted: the mean absolute error (MAE), the root mean square error (RMSE), and Pearson’s correlation coefficient (r).
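The three metrics can be computed as in the plain-Python sketch below. It assumes the forecast and observed SSHA values have already been flattened into equal-length lists (for example, all grid cells of all test samples at a given lead time); how the chapter aggregates over grid cells and samples is not specified here. RMSE is the square root of the MSE used as the training loss.

```python
import math


def _check(pred, obs):
    if len(pred) != len(obs) or not obs:
        raise ValueError("pred and obs must be non-empty and equally long")


def mae(pred, obs):
    """Mean absolute error."""
    _check(pred, obs)
    return sum(abs(p - o) for p, o in zip(pred, obs)) / len(obs)


def rmse(pred, obs):
    """Root mean square error."""
    _check(pred, obs)
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs))


def pearson_r(pred, obs):
    """Pearson's correlation coefficient between forecasts and observations."""
    _check(pred, obs)
    n = len(obs)
    mp, mo = sum(pred) / n, sum(obs) / n
    cov = sum((p - mp) * (o - mo) for p, o in zip(pred, obs))
    sp = math.sqrt(sum((p - mp) ** 2 for p in pred))
    so = math.sqrt(sum((o - mo) ** 2 for o in obs))
    if sp == 0 or so == 0:
        raise ValueError("r is undefined for constant inputs")
    return cov / (sp * so)


pred = [1.0, 2.0, 3.0, 4.0]   # hypothetical forecasts
obs = [1.0, 2.0, 3.0, 6.0]    # hypothetical observations
print(mae(pred, obs), rmse(pred, obs))  # 0.5 1.0
```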

4.3 Experiment Results and Discussion

We first evaluated MLFrnn on 21-day-ahead SSHA field prediction against ConvLSTM, a strong recent baseline. MLFrnn was then compared with currently available DL approaches, namely merged LSTM and attention-based LSTM in the space and time dimensions (LSTM + STA).

Table 1 compares the RMSE values of MLFrnn and ConvLSTM with different numbers of layers. MLFrnn outperformed ConvLSTM, whose performance degraded severely as the lead time increased. MLFrnn's better predictability of SSHA fields can be attributed to its fusion of local and global spatiotemporal features.

Table 2 compares the predictive performance of MLFrnn with that of LSTM + STA and merged LSTM. SSHA predictions for the latter two models were available only 1 and 5 d ahead, respectively, so MLFrnn was compared with them at the same lead times. Interestingly, although MLFrnn was designed to forecast SSHAs up to 21 days ahead, it also performed better in short-term prediction. This is because MLFrnn overcomes the limitation of LSTM's vector inputs and models the spatial and temporal structure of the SSHA field jointly.

To characterize MLFrnn's performance further, we examined its predictions in different seasons. The summer and winter predictions are displayed in Figs. 6, 7, 8, 9, 10, and 11, where the rows show, from top to bottom, the SSHA observations, the MLFrnn forecasts, and their differences. The differences between observations and forecasts are small and acceptable, which suggests that MLFrnn predicts SSHA well across seasons.

4.4 Ablation Study

To explore the contributions of the feature fusion module and the number of layers, an ablation study was conducted with the following models:

(1) The two-layer MLFrnn with the feature fusion module (MLF(F)-2).

(2) The three-layer MLFrnn with the feature fusion module (MLF(F)-3).

(3) The four-layer MLFrnn with the feature fusion module (MLF(F)-4).

(4) The four-layer MLFrnn in which the feature fusion module is replaced by a \(3 \times 3\) convolution (MLF(Conv)-4).

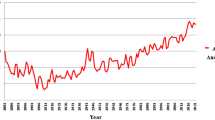

Fig. 12 shows the RMSE values of the 1-21-day forecasts obtained with the different models. Comparing MLF(F)-4 with MLF(Conv)-4 shows that MLFrnn with the feature fusion module forecasts the evolution of SSHA fields more accurately, probably because the feature fusion module allows MLFrnn to better model long-term SSHA dependencies.

We further compared the SSHA field predictions of models with different numbers of layers. MLF(F)-4 achieved the best predictability: with more layers, the model draws on SSHAs from a broader surrounding area, which improves forecast accuracy. Fig. 13 compares the MAE of the 1-21-day SSHA field forecasts obtained with the different models; the MAE follows the same trend as the RMSE in Fig. 12.
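A back-of-the-envelope calculation illustrates why more layers widen the area the model can draw on. The Python sketch below assumes one \(3 \times 3\), stride-1 convolution per layer along the path from the input up through the stack (the kernel size is not stated in this chapter), and it ignores the further growth contributed by recurrence across time steps and by any convolutions in the fusion module. At the data's 1/4\(^{\circ }\) resolution, each grid cell spans 0.25\(^{\circ }\).

```python
def receptive_field(num_layers, kernel=3, stride=1):
    """Side length (in grid cells) of the input patch seen by one output cell
    after `num_layers` stacked convolutions."""
    rf, jump = 1, 1
    for _ in range(num_layers):
        rf += (kernel - 1) * jump   # each layer widens the patch
        jump *= stride              # distance between adjacent outputs
    return rf


for layers in (2, 3, 4):
    cells = receptive_field(layers)
    # layers, patch size in cells, patch size in degrees at 1/4-degree spacing
    print(layers, cells, cells * 0.25)  # 2 5 1.25 / 3 7 1.75 / 4 9 2.25
```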

The correlation coefficient measures the strength of the linear relationship between two variables. Pearson's correlation coefficients (r) between the SSHA forecasts and observations over all samples were computed and compared (Fig. 14). MLF(F)-4, which has the feature fusion module, consistently achieved higher r values than the other three models, and its r decreased more slowly with lead time. This confirms the strong positive linear relationship between the SSHA fields forecast by MLF(F)-4 and the true values.

5 Conclusion

In this chapter, we first introduced the significance of SSHA prediction and then presented a review of currently available SSHA prediction methods. Next, an SSHA forecasting model named MLFrnn was proposed. The main advantages of MLFrnn can be summarized as follows:

(1) The proposed MLFrnn model can learn spatiotemporal features from both nearby and distant sites. Existing RNN/LSTM-based studies emphasize temporal modeling while disregarding spatial information. In contrast, MLFrnn significantly improves SSHA predictability by modeling both the temporal variations and the spatial evolution of the SSHA fields.

(2) MLFrnn predicts the entire SSHA map rather than the SSHA at a single site. Prior approaches could estimate the SSHA for only one grid point and required repeated model training to cover the entire region. In contrast, MLFrnn predicts the entire SSHA map accurately.

(3) In this study, a multilayer fusion cell was developed for MLFrnn to fuse local and global spatiotemporal characteristics, enabling reliable modeling of the evolution of SSHA fields from SSHAs at both nearby and remote locations.

Finally, we selected the SCS as the study area and presented the experimental results of the MLFrnn model on more than 18 years of daily average satellite altimeter SSHA data. The results demonstrate that the MLFrnn model is effective and outperforms currently available DL networks in predicting the SSHA field.

References

Ablain M, Legeais JF, Prandi P, Marcos M, Fenoglio-Marc L, Dieng HB, Benveniste J, Cazenave A (2017) Satellite altimetry-based sea level at global and regional scales. Surv Geophys 38(1):7–31

Bahdanau D, Cho K, Bengio Y (2014) Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473

Barbosa SM, Silva ME, Fernandes MJ (2006) Multivariate autoregressive modelling of sea level time series from TOPEX/Poseidon satellite altimetry. Nonlinear Process Geophys 13(2):177–184

Bonaduce A, Pinardi N, Oddo P, Spada G, Larnicol G (2016) Sea-level variability in the Mediterranean Sea from altimetry and tide gauges. Clim Dyn 47(9):2851–2866

Braakmann-Folgmann A, Roscher R, Wenzel S, Uebbing B, Kusche J (2017) Sea level anomaly prediction using recurrent neural networks. arXiv preprint arXiv:1710.07099

Carton JA, Giese BS, Grodsky SA (2005) Sea level rise and the warming of the oceans in the Simple Ocean Data Assimilation (SODA) ocean reanalysis. J Geophys Res: Oceans 110(C9)

Chen JL, Wilson CR, Chambers DP, Nerem RS, Tapley BD (1998) Seasonal global water mass budget and mean sea level variations. Geophys Res Lett 25(19):3555–3558

Cheng Y, Xu Q, Li X (2018) Spatio-temporal variability of annual sea level cycle in the Baltic Sea. Remote Sensing 10(4):528

Chien JT, Ku YC (2015) Bayesian recurrent neural network for language modeling. IEEE Trans Neural Netw Learn Syst 27(2):361–374

Church JA, White NJ, Aarup T, Wilson WS, Woodworth PL, Domingues CM, Hunter JR, Lambeck K (2008) Understanding global sea levels: past, present and future. Sustain Sci 3(1):9–22

Elman JL (1990) Finding structure in time. Cogn Sci 14(2):179–211

Ezer T, Atkinson LP, Corlett WB, Blanco JL (2013) Gulf Stream’s induced sea level rise and variability along the U.S. mid-Atlantic coast. J Geophys Res: Oceans 118(2):685–697

Fu LL, Chelton DB, Zlotnicki V (1988) Satellite altimetry: observing ocean variability from space. Oceanography 1(2):4–58

Fu Y, Zhou X, Sun W, Tang Q (2019) Hybrid model combining empirical mode decomposition, singular spectrum analysis, and least squares for satellite-derived sea-level anomaly prediction. Int J Remote Sens 40(20):7817–7829

Gornitz V, Lebedeff S (1987) Global sea-level changes during the past century

Gregory JM, Lowe JA (2000) Predictions of global and regional sea-level rise using AOGCMs with and without flux adjustment. Geophys Res Lett 27(19):3069–3072

Imani M, You RJ, Kuo CY (2014) Forecasting Caspian Sea level changes using satellite altimetry data (June 1992-December 2013) based on evolutionary support vector regression algorithms and gene expression programming. Global Planet Change 121:53–63

Imani M, Chen YC, You RJ, Lan WH, Kuo CY, Chang JC, Rateb A (2017) Spatiotemporal prediction of satellite altimetry sea level anomalies in the Tropical Pacific Ocean. IEEE Geosci Remote Sens Lett 14(7):1126–1130

Karevan Z, Suykens JAK (2020) Transductive LSTM for time-series prediction: an application to weather forecasting. Neural Netw 125:1–9

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp 1097–1105

Li X, Liu B, Zheng G, Ren Y, Zhang S, Liu Y, Gao L, Liu Y, Zhang B, Wang F (2020) Deep-learning-based information mining from ocean remote-sensing imagery. Natl Sci Rev 7(10):1584–1605

Liu J, Jin B, Wang L, Xu L (2020) Sea surface height prediction with deep learning based on attention mechanism. IEEE Geosci Remote Sens Lett

Loshchilov I, Hutter F (2017) Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101

McGoogan JT (1975) Satellite altimetry applications. IEEE Trans Microw Theory Tech 23(12):970–978

Metzger EJ, Hurlburt HE (2001) The nondeterministic nature of Kuroshio penetration and eddy shedding in the South China Sea. J Phys Oceanogr 31(7):1712–1732

Miles ER, Spillman CM, Church JA, McIntosh PC (2014) Seasonal prediction of global sea level anomalies using an ocean-atmosphere dynamical model. Clim Dyn 43(7–8):2131–2145

Moore JC, Grinsted A, Zwinger T, Jevrejeva S (2013) Semiempirical and process-based global sea level projections. Rev Geophys 51(3):484–522

Nan F, He Z, Zhou H, Wang D (2011) Three long-lived anticyclonic eddies in the northern South China Sea. J Geophys Res: Oceans 116(C5)

Nicholls RJ, Cazenave A (2010) Sea-level rise and its impact on coastal zones. Science 328(5985):1517–1520

Niedzielski T, Kosek W (2009) Forecasting sea level anomalies from TOPEX/Poseidon and Jason-1 satellite altimetry. J Geodesy 83(5):469–476

Norris RD, Turner SK, Hull PM, Ridgwell A (2013) Marine ecosystem responses to cenozoic global change. Science 341(6145):492–498

Rahmstorf S (2007) A semi-empirical approach to projecting future sea-level rise. Science 315(5810):368–370

Rudenko S, Neumayer KH, Dettmering D, Esselborn S, Schöne T, Raimondo JC (2017) Improvements in precise orbits of altimetry satellites and their impact on mean sea level monitoring. IEEE Trans Geosci Remote Sens 55(6):3382–3395

Shi X, Chen Z, Wang H, Yeung DY, Wong WK, Woo WC (2015) Convolutional LSTM network: a machine learning approach for precipitation nowcasting. In: Advances in Neural Information Processing Systems, pp 802–810

Sivakumar S, Sivakumar S (2017) Marginally stable triangular recurrent neural network architecture for time series prediction. IEEE Trans Cybern 48(10):2836–2850

Slangen ABA, Katsman CA, Van de Wal RSW, Vermeersen LLA, Riva REM (2012) Towards regional projections of twenty-first century sea-level change based on IPCC SRES scenarios. Clim Dyn 38(5):1191–1209

Song T, Jiang J, Li W, Xu D (2020) A deep learning method with merged LSTM neural networks for SSHA prediction. IEEE J Sel Topics Appl Earth Obs Remote Sens 13:2853–2860

Tandeo P, Chapron B, Ba S, Autret E, Fablet R (2013) Segmentation of mesoscale ocean surface dynamics using satellite SST and SSH observations. IEEE Trans Geosci Remote Sens 52(7):4227–4235

Unal YS, Ghil M (1995) Interannual and interdecadal oscillation patterns in sea level. Clim Dyn 11(5):255–278

Zhao Z, Liu B, Li X (2014) Internal solitary waves in the China seas observed using satellite remote-sensing techniques: a review and perspectives. Int J Remote Sens 35(11–12):3926–3946

Zheng G, Li X, Zhang RH, Liu B (2020) Purely satellite data–driven deep learning forecast of complicated tropical instability waves. Sci Adv 6(29):eaba1482

Zheng Q, Hu J, Zhu B, Feng Y, Jo YH, Sun Z, Zhu J, Lin H, Li J, Xu Y (2014) Standing wave modes observed in the South China Sea deep basin. J Geophys Res: Oceans 119(7):4185–4199


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (http://creativecommons.org/licenses/by-nc-nd/4.0/), which permits any noncommercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if you modified the licensed material. You do not have permission under this license to share adapted material derived from this chapter or parts of it.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Zhou, Y., Lu, C., Chen, K., Li, X. (2023). Sea Surface Height Anomaly Prediction Based on Artificial Intelligence. In: Li, X., Wang, F. (eds) Artificial Intelligence Oceanography. Springer, Singapore. https://doi.org/10.1007/978-981-19-6375-9_3


DOI: https://doi.org/10.1007/978-981-19-6375-9_3


Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-6374-2

Online ISBN: 978-981-19-6375-9

eBook Packages: Earth and Environmental Science, Earth and Environmental Science (R0)