Abstract

In this paper, a new structure for an instruction prefetching unit is proposed. Prefetching is achieved by recording the relationship between a branch source and its branch target, and the relationship between the branch target and the first branch in the instruction sequence that follows it. With the proposed structure, the recorded branch information makes it easy to determine whether the instruction block of a branch target is present in the instruction cache. Two-level depth target prefetching can then be performed to eliminate or reduce the instruction cache miss penalty. Experimental results demonstrate that the proposed instruction prefetching scheme achieves a lower cache miss rate and miss penalty than the traditional next-line prefetching technique.

1 Introduction

Digital Signal Processors (DSPs) are widely used in communication, high-performance computing, the Internet of Things, artificial intelligence and other fields. To achieve high data processing throughput, VLIW and SIMD are the most common techniques: the former exploits instruction-level parallelism and the latter data-level parallelism. A VLIW instruction package contains several instructions (e.g., 4 instructions), which are issued in the same clock cycle [1]. On the one hand, to exploit the locality of the executed instructions, the cache block should be at least 4 times the size of the instruction package [2]. On the other hand, application programs running on a DSP usually have a small code size, so the instruction cache capacity is not very large.

Given these two factors, the number of instruction cache blocks is relatively small, especially when a set-associative organization is used. If the program executes sequentially, there will be no instruction cache misses with the help of the next-line prefetching scheme [3]. According to statistics, however, about one in every seven instructions is a branch [4]. Once a branch is taken, the instruction block of the branch target may well not be in the cache, which causes an instruction cache miss and a severe miss penalty.

The branch target buffer (BTB) is a structure that improves performance by recording branch target addresses [5]. With a BTB, it is easy to fill the instruction block of the branch target into the cache in advance. Before filling, it is advisable to check whether the instruction block is already in the cache, to avoid unnecessary fills. Tag matching is the simplest way to check for its presence, but it results in higher power consumption [6]. In terms of power consumption and implementation cost, using an indication bit to mark the presence of the instruction block may be a better solution.

The other cache miss problem caused by branches is that branch target prefetching may not begin early enough [7]. In a 5-stage pipeline architecture, the branch decision is generally made in the second or third stage [8]. If target prefetching starts when the branch instruction is in the first stage, it begins only one or two cycles before the decision. How to prefetch the target instruction block much earlier is therefore a problem that affects processor performance.

2 Proposed Structure of the Prefetching Unit

To realize the proposed architecture and eliminate the cache miss penalty, one primary problem needs to be solved: how to obtain the branch target instruction early enough. A traditional branch predictor can provide the branch target address, and hence the branch target instruction. An improved prefetching unit may fetch the branch target instruction from external memory and store it in the cache before the processor core executes the branch instruction (hereinafter referred to as the ‘1st level branch’ or ‘1st branch’), so that the cache miss penalty is reduced if the branch is taken. However, if there is another branch instruction (hereinafter referred to as the ‘2nd level branch’ or ‘2nd branch’) in the instruction flow that follows the target, the prefetching unit cannot fetch the target instruction of the 2nd branch before the 1st branch is executed, because the target information of the 2nd branch is difficult to obtain at that stage. The question is therefore how to perform two-level depth target prefetching.

The proposed prefetching unit solves this problem with a novel structure that builds the relationship between the 1st branch and the 2nd branch. It is based mainly on the classic N-bit branch predictor [9], as shown in Fig. 1. In the diagram, the columns ‘Source’ and ‘N-bit’ form the N-bit branch predictor. Taking the most widely used 2-bit branch predictor as an example [10], the source addresses of the branch instructions are recorded in the column ‘Source’. Without loss of generality, the lower part of the source address can be used as the row index and the upper part recorded as the content of the first column, forming a direct-mapped structure. That is, each row of this column corresponds to a branch instruction. The column ‘N-bit’ in the same row then holds the prediction value of that branch instruction. In the 2-bit branch prediction scheme, ‘11’ and ‘10’ indicate that the branch is likely to be taken, while ‘01’ and ‘00’ indicate that the branch is likely to be not taken.
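
The direct-mapped 2-bit predictor described above can be sketched as follows. This is only an illustrative model, not the authors' implementation: the class and field names, the 4-byte instruction alignment, and the initial counter value given to a newly recorded branch are all assumptions.

```python
from dataclasses import dataclass

INDEX_BITS = 6  # 64 rows (assumption, chosen to match a 64-entry predictor)


@dataclass
class PredictorRow:
    tag: int = -1        # upper part of the branch source address (-1: empty row)
    counter: int = 0b01  # 2-bit saturating counter, 0b00..0b11


class TwoBitPredictor:
    def __init__(self, index_bits: int = INDEX_BITS):
        self.index_bits = index_bits
        self.rows = [PredictorRow() for _ in range(1 << index_bits)]

    def _split(self, addr: int):
        word = addr >> 2  # assumption: 4-byte aligned instructions
        index = word & ((1 << self.index_bits) - 1)  # lower part: row index
        tag = word >> self.index_bits                # upper part: stored content
        return index, tag

    def predict(self, addr: int):
        """True/False for a recorded branch, None if the row holds another address."""
        index, tag = self._split(addr)
        row = self.rows[index]
        if row.tag != tag:
            return None
        return row.counter >= 0b10  # '10' and '11' mean likely taken

    def update(self, addr: int, taken: bool):
        index, tag = self._split(addr)
        row = self.rows[index]
        if row.tag != tag:
            # Direct-mapped: a new branch simply replaces the old occupant.
            # Starting at a weak state is an assumption of this sketch.
            self.rows[index] = PredictorRow(tag=tag, counter=0b10 if taken else 0b01)
            return
        if taken:
            row.counter = min(row.counter + 1, 0b11)
        else:
            row.counter = max(row.counter - 1, 0b00)
```

A row is matched by comparing the stored upper address bits with those of the looked-up address, so two branches that share the same lower bits compete for one row.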

Now let us build the relationship between the 1st branch and its branch target. A simple way to achieve this is to add a new entry to each row that saves the address of the branch target instruction. In this case, once a branch instruction is processed by the prefetching unit, the branch prediction value and the target address can both be reached directly by the same row index. Further, it is easy to add an indication bit to each row to record whether the branch target instruction is already in the instruction cache. Thus, when processing a branch instruction, if the indication bit is ‘0’, the prefetching unit may start to fill the instruction block addressed by the target address, to guarantee that the target instruction is in the cache, or is being filled into the cache, when the branch is taken. The cache miss penalty is then eliminated or reduced. However, this scheme has a problem. Because the target address is stored as content rather than used as an index, it is difficult to maintain the value of the indication bit when the target instruction block is moved into or out of the instruction cache.
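
The maintenance problem can be made concrete with a small sketch. It assumes a hypothetical row layout (`source_tag`, `counter`, `target_addr`, `in_cache`) and a 64-byte block size; none of these names come from the paper. Because the target address is only stored as content, an eviction has to examine every row to find the indication bits that must be cleared.

```python
from dataclasses import dataclass

BLOCK_BYTES = 64  # assumed instruction cache block size


@dataclass
class Row:
    source_tag: int        # upper part of the branch source address
    counter: int           # 2-bit prediction value
    target_addr: int       # branch target address, stored as content
    in_cache: bool = False  # indication bit


def on_block_evicted(rows, block_addr):
    """Clear the indication bit of every row whose target lies in the evicted block.

    Returns the number of rows that had to be examined.
    """
    examined = 0
    for row in rows:  # no index is available, so all rows are searched
        examined += 1
        if row.target_addr // BLOCK_BYTES == block_addr // BLOCK_BYTES:
            row.in_cache = False
    return examined


rows = [
    Row(source_tag=1, counter=2, target_addr=0x2040, in_cache=True),
    Row(source_tag=2, counter=2, target_addr=0x3000, in_cache=True),
    Row(source_tag=3, counter=1, target_addr=0x2078, in_cache=True),
]
examined = on_block_evicted(rows, 0x2040)
print(examined, [r.in_cache for r in rows])
```

In hardware, the loop would correspond to a fully associative comparison against all stored target addresses on every fill and eviction, which is the cost the simple scheme cannot avoid.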

To solve this problem, the target addresses are also organized in a direct-mapped structure similar to that of the source addresses, as shown in the column ‘Target’ in the right part of Fig. 2. A pair of pointers connects the branch source and the corresponding branch target. On the branch source side, ‘Pointer_A’ contains the row number of the branch target and two valid bits, which indicate whether the branch target address is valid in the prefetching unit and whether the branch target instruction is valid in the instruction cache, respectively. On the branch target side, ‘Pointer_B’ contains the row number of the corresponding branch source. Based on the branch address, it is therefore easy to find the target address and fill the target instruction in advance if necessary, while the valid bits in ‘Pointer_A’ can easily be modified as soon as the target address is updated or the target instruction is filled into or moved out of the instruction cache.
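
A minimal sketch of the paired-pointer organization is given below. It is a simplified model under several assumptions that are not stated in the paper: the target table is indexed by the lower bits of the block address, instructions are 4 bytes, each target row links to exactly one source row, and all class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

ROWS = 64          # rows in each table
BLOCK_BYTES = 64   # instruction cache block size
INSTR_BYTES = 4    # assumption: 4-byte instructions


@dataclass
class SourceRow:
    tag: Optional[int] = None   # upper part of the branch source address
    pointer_a: int = 0          # row number of the branch target
    target_valid: bool = False  # valid bit: target address recorded in the unit
    in_cache: bool = False      # valid bit: target block present in the cache


@dataclass
class TargetRow:
    tag: Optional[int] = None   # upper part of the target block address
    pointer_b: int = 0          # row number of the branch source


class PrefetchTables:
    def __init__(self):
        self.sources = [SourceRow() for _ in range(ROWS)]
        self.targets = [TargetRow() for _ in range(ROWS)]

    @staticmethod
    def _split(value):
        return value % ROWS, value // ROWS  # (row index, tag)

    def record(self, source_addr, target_addr, target_in_cache=False):
        s_idx, s_tag = self._split(source_addr // INSTR_BYTES)
        t_idx, t_tag = self._split(target_addr // BLOCK_BYTES)
        old_src = self.sources[s_idx]
        if old_src.target_valid:
            # The replaced source releases its target row.
            self.targets[old_src.pointer_a] = TargetRow()
        old_tgt = self.targets[t_idx]
        if old_tgt.tag is not None:
            # The displaced target's source no longer has a valid target address.
            self.sources[old_tgt.pointer_b].target_valid = False
        self.targets[t_idx] = TargetRow(tag=t_tag, pointer_b=s_idx)
        self.sources[s_idx] = SourceRow(s_tag, t_idx, True, target_in_cache)

    def block_to_fill(self, source_addr):
        """Block address to prefetch for this branch, or None if nothing to do."""
        s_idx, s_tag = self._split(source_addr // INSTR_BYTES)
        row = self.sources[s_idx]
        if row.tag != s_tag or not row.target_valid or row.in_cache:
            return None
        return (self.targets[row.pointer_a].tag * ROWS + row.pointer_a) * BLOCK_BYTES

    def on_cache_event(self, block_addr, present):
        """Called when a block is filled into (True) or moved out of (False) the cache."""
        t_idx, t_tag = self._split(block_addr // BLOCK_BYTES)
        tgt = self.targets[t_idx]
        if tgt.tag == t_tag:
            # Pointer_B leads straight to the source row; no search is needed.
            self.sources[tgt.pointer_b].in_cache = present
```

For example, after `record(0x1000, 0x2040)`, `block_to_fill(0x1000)` returns `0x2040` until `on_cache_event(0x2040, True)` is called. The point of the organization is that a fill or eviction updates the valid bit through a single indexed lookup followed by one pointer dereference.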

Let us then build the relationship between the 1st branch and the 2nd branch. The 2nd branch is itself an ordinary branch source, so it is also recorded in another row of the column ‘Source’. As shown in Fig. 3, ‘Pointer_C’ saves the row number of the 2nd branch. With this structure, when a branch instruction (i.e., the 1st branch) is processed, the target address and the source address of the 2nd branch can be obtained in turn. The corresponding instruction blocks can then be filled into the instruction cache much earlier, to eliminate or reduce the cache miss penalty.
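
The two-level lookup can be sketched as a short pointer walk. The sketch assumes that ‘Pointer_C’ is held in the target row and points to the source row of the first branch after that target, which is how the abstract describes the relationship; the row layouts and the function name are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SourceRow:
    pointer_a: Optional[int] = None  # row number of this branch's target
    in_cache: bool = False           # target block already in the cache


@dataclass
class TargetRow:
    block_addr: int = 0
    pointer_c: Optional[int] = None  # row number of the first branch after this target


def blocks_to_prefetch(sources: List[SourceRow], targets: List[TargetRow],
                       first_branch_row: int, depth: int = 2) -> List[int]:
    """Follow Pointer_A, then Pointer_C, collecting target blocks not yet in the cache."""
    blocks = []
    row = first_branch_row
    for _ in range(depth):
        if row is None:
            break
        src = sources[row]
        if src.pointer_a is None:
            break
        tgt = targets[src.pointer_a]
        if not src.in_cache:
            blocks.append(tgt.block_addr)
        row = tgt.pointer_c  # move on to the 2nd branch
    return blocks


sources = [SourceRow(pointer_a=0), SourceRow(pointer_a=1)]
targets = [TargetRow(0x2000, pointer_c=1), TargetRow(0x3000)]
print([hex(b) for b in blocks_to_prefetch(sources, targets, 0)])
```

With `depth=2` the walk visits the 1st branch target and then the 2nd branch target, which is the two-level depth the structure is designed for.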

3 Simulation Model and Experimental Results

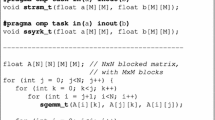

To estimate the performance of the proposed prefetching unit, a simulation model is built on a 5-stage pipelined RISC processor with the PISA instruction set architecture (PISA-ISA) in the SimpleScalar simulator [11]. In the original simulator, the first stage is instruction fetch, the second is instruction decode, the third is execution and branch decision, the fourth is memory access and the last is register write-back. The proposed prefetching unit is placed in the first stage. Once the program counter (PC) is updated, it is used to fetch an instruction from the instruction cache and is also sent to the prefetching unit. If the content read from the column ‘Source’, indexed by the lower part of the PC, equals the upper part of the PC, the instruction at the PC is a branch instruction recorded in the prefetching unit, and the valid bits in the same row are checked to determine whether to fill its target instruction block. In the next clock cycle, the target address is obtained through ‘Pointer_A’, so the filling of the 1st branch target can start, if necessary, while the 1st branch instruction is in the second stage. Two clock cycles later, the target address of the 2nd branch can be obtained through ‘Pointer_C’ and a new ‘Pointer_A’. That is, the filling of the 2nd branch target can start, if necessary, while the 1st branch instruction is in the fourth stage, one clock cycle after its branch decision is made.

In PISA-ISA, there is a branch delay slot after each branch instruction, so the worst case is one branch instruction in every two instructions, with the target instruction of the 1st branch happening to be the 2nd branch. In this case, the target instruction of the 1st branch is filled when the 1st branch is in the second stage and fetched when the 1st branch is in the third stage; the target instruction of the 2nd branch is filled when the 1st branch is in the fourth stage and fetched when the 1st branch is in the fifth stage. The timing diagram is illustrated in Fig. 4. In all other cases, the prefetching timing requirements are more relaxed.
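
The worst-case timing can be checked with a few lines of arithmetic. The sketch assumes a stall-free pipeline in which the 1st branch occupies stage k during cycle k; the function and key names are hypothetical.

```python
DELAY_SLOTS = 1  # PISA-ISA: one branch delay slot after each branch


def worst_case_schedule(first_branch_fetch_cycle: int = 1) -> dict:
    """Cycles at which fills start and targets are fetched in the worst case."""
    c1 = first_branch_fetch_cycle
    fill_1 = c1 + 1                      # Pointer_A is read the cycle after the lookup
    fetch_1 = c1 + 1 + DELAY_SLOTS       # target follows the branch and its delay slot
    fill_2 = fill_1 + 2                  # Pointer_C and the new Pointer_A: two more cycles
    fetch_2 = fetch_1 + 1 + DELAY_SLOTS  # the 1st target is itself the 2nd branch
    return {
        "fill_1st_target": fill_1,
        "fetch_1st_target": fetch_1,
        "fill_2nd_target": fill_2,
        "fetch_2nd_target": fetch_2,
        "lead_1st": fetch_1 - fill_1,
        "lead_2nd": fetch_2 - fill_2,
    }


schedule = worst_case_schedule()
for name, cycle in schedule.items():
    print(f"{name}: {cycle}")
```

Under these assumptions each fill starts exactly one cycle before the corresponding fetch, so the worst case leaves a lead of a single cycle for both levels.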

Several sets of simulation results are obtained with different experimental configurations. The instruction cache capacity ranges from 16 KB to 64 KB. The instruction cache organization is direct-mapped, 2-way set-associative or 4-way set-associative. Since VLIW is widely used in DSP architectures, the block size should be large enough to contain at least 4 VLIW instruction packages; the block size is therefore set to 64 bytes. Assuming a level 2 cache of reasonable capacity, the instruction cache miss penalty varies from 2 cycles to 6 cycles. Further, the branch prediction unit has 64 entries in a direct-mapped organization, so the prefetching unit also has 64 rows in total.
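
The number of blocks and sets implied by each configuration follows directly from the capacity, the 64-byte block size and the associativity. The helper name below is hypothetical.

```python
BLOCK_BYTES = 64


def cache_geometry(capacity_bytes: int, ways: int):
    """Return (number of blocks, number of sets) for a given capacity and associativity."""
    blocks = capacity_bytes // BLOCK_BYTES
    sets = blocks // ways
    return blocks, sets


for kb in (16, 32, 64):
    for ways in (1, 2, 4):  # 1 way corresponds to direct-mapped
        blocks, sets = cache_geometry(kb * 1024, ways)
        print(f"{kb} KB, {ways}-way: {blocks} blocks, {sets} sets")
```

The smallest configuration, 16 KB with 4-way set-associativity, has 256 blocks arranged in only 64 sets.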

Table 1 shows the instruction cache miss rates of traditional next-line prefetching with 2-bit branch prediction and of the proposed structure. Each data row corresponds to one instruction cache configuration. From top to bottom, the configurations are 16 KB direct-mapped, 16 KB 2-way set-associative, 16 KB 4-way set-associative, 32 KB direct-mapped, 32 KB 2-way set-associative, 32 KB 4-way set-associative, 64 KB direct-mapped, 64 KB 2-way set-associative and 64 KB 4-way set-associative, respectively. In the traditional structure, the miss rate does not depend on the miss penalty, so only the leftmost data column is needed to represent the miss rate of the traditional structure. The remaining columns correspond to different miss penalties for the proposed structure: from left to right, 2, 3, 4, 5 and 6 cycles, respectively.

The table shows that, whatever the miss penalty, the proposed structure significantly decreases the miss rate. Further, the smaller the miss penalty, the larger the decrease in miss rate. This is because when the miss penalty is small, more cache misses can be completely concealed by two-level depth target prefetching. When the miss penalty becomes larger, some branch targets are accessed before their prefetching has completed. Such an access is still a cache miss, but its actual penalty is reduced.

Table 2 shows the total instruction cache miss penalty reduction of the proposed structure. Comparing Table 1 and Table 2, we can see that the miss rate and the total miss penalty reduction are both relatively high in the larger-penalty cases. This is because, although part of the penalty is covered by the prefetching, an access with even one penalty cycle left is still treated as a cache miss and increases the miss rate. In some cases, although the miss rate is still relatively high, the total miss penalty has already been reduced to a very low level.
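
The difference between counting misses and counting penalty cycles can be illustrated with a toy model. The lead times below are invented for illustration and are not taken from the experiments; the model simply assumes that a prefetch started `lead` cycles before the demand access hides `lead` cycles of the miss penalty.

```python
def summarize(lead_cycles, miss_penalty):
    """Return (misses still counted, remaining penalty cycles, penalty without prefetching)."""
    residuals = [max(0, miss_penalty - lead) for lead in lead_cycles]
    misses = sum(1 for r in residuals if r > 0)  # any leftover cycle counts as a miss
    remaining = sum(residuals)
    baseline = miss_penalty * len(lead_cycles)
    return misses, remaining, baseline


# Hypothetical prefetch lead times (in cycles) for four branch-target accesses.
leads = [5, 5, 4, 8]
for penalty in (2, 6):
    misses, remaining, baseline = summarize(leads, penalty)
    print(f"penalty {penalty}: {misses} misses, {remaining}/{baseline} penalty cycles remain")
```

With a 2-cycle penalty every access in this example is fully hidden. With a 6-cycle penalty three of the four accesses still count as misses, yet only 4 of the original 24 penalty cycles remain, which is the situation where the miss rate looks high while the total penalty is already low.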

4 Conclusion

In this paper, we have described a new structure for a DSP prefetching unit based on the two-level depth target prefetching scheme. The instruction block of the branch source is already in the instruction cache. The instruction blocks of the 1st branch target and the 2nd branch target are filled into the instruction cache before the branch decision is made, so that the possible instructions following the branch source are also in the cache or being filled into it, which eliminates or reduces the total cache miss penalty. The performance of the proposed structure has been demonstrated by experimental results.

References

1. Ren, H., Zhang, Z., Wu, J.: SWIFT: a computationally-intensive DSP architecture for communication applications. Mob. Netw. Appl. 21, 974–982 (2016)

2. Hennessy, J., Patterson, D.: Computer Architecture: A Quantitative Approach, 6th edn. Morgan Kaufmann Publishers, Burlington (2017)

3. Xu, J.: Research on prefetch technique of cache. Popular Science & Technology (2011)

4. Xiong, Z., Lin, Z., Ren, H.: BI-LRU: a replacement strategy based on prediction information for Branch Target Buffer. J. Comput. Inf. Syst. 11(20), 7587–7594 (2015)

5. Monchiero, M.: Low-power branch prediction techniques for VLIW architectures: a compiler-hints based approach. Integr. VLSI J. 38, 515–524 (2005)

6. Sun, Y., Yuan, Y., Li, W., et al.: An aggressive implementation method of branch instruction prefetch. J. Phys. Conf. Ser. 1769(1), 012062 (2021)

7. Rostami-Sani, S., Valinataj, M., Chamazcoti, S.: Parloom: a new low-power set-associative instruction cache architecture utilizing enhanced counting bloom filter and partial tags. J. Circuits Syst. Comput. 28(6) (2018)

8. Patterson, D., Hennessy, J.: Computer Organization and Design: The Hardware/Software Interface, RISC-V Edition. Morgan Kaufmann Publishers (2018)

9. Lee, J., Smith, A.: Branch prediction strategies and branch target buffer design. IEEE Comput. Mag. 17, 6–22 (1984)

10. Zhang, L., Tao, F., Xiang, J.: Researches on design and implementations of two 2-bit predictors. Adv. Eng. Forum 1, 241–246 (2011)

11. Burger, D., Austin, T.: The SimpleScalar Tool Set, Version 2.0. Department Technical Report, University of Wisconsin-Madison (1997)

Acknowledgement

This work was supported by the Key-Area Research and Development Program of Guangdong Province under Grant 2018B010115002 and by the National Natural Science Foundation of China (NSFC) under Grants 61831018 and 61631017.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this paper

Cite this paper

Ji, R., Ren, H. (2022). A New Prefetching Unit for Digital Signal Processor. In: Qian, Z., Jabbar, M., Li, X. (eds) Proceeding of 2021 International Conference on Wireless Communications, Networking and Applications. WCNA 2021. Lecture Notes in Electrical Engineering. Springer, Singapore. https://doi.org/10.1007/978-981-19-2456-9_94

DOI: https://doi.org/10.1007/978-981-19-2456-9_94

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-2455-2

Online ISBN: 978-981-19-2456-9

eBook Packages: Engineering (R0)