Abstract

Gaussian Naive Bayes (GNB) is a popular supervised learning algorithm for classification problems. GNB has a strong theoretical basis; however, its performance tends to be hurt by skewed data distributions. In this study, we present an optimal decision threshold-moving strategy that helps GNB adapt to imbalanced classification data. Specifically, a PSO-based optimization procedure is conducted to tune the posterior probabilities produced by GNB, thereby repairing the bias of the classification boundary. The proposed GNB-ODTM algorithm adapts well to skewed data distributions. Experimental results on eight class-imbalanced data sets also indicate the effectiveness and superiority of the proposed algorithm.

1 Introduction

In recent years, class imbalance learning (CIL) has become one of the hot topics in the field of machine learning [1]. CIL has also been widely applied in various real-world applications, including disease classification [2], software defect detection [3], biological data analysis [4], bankruptcy prediction [5], etc. The so-called class imbalance problem means that, in the training data, the instances belonging to one class far outnumber those belonging to the other classes. The problem tends to highlight the performance of the majority class while ignoring the minority class.

There exist three major techniques to implement CIL: 1) the data-level approach, 2) the algorithmic-level method and 3) the ensemble learning strategy. The data-level approach, also called resampling, addresses the CIL problem by re-balancing the data distribution [6, 7]. It contains oversampling, which generates new minority instances, and undersampling, which removes majority instances. The algorithmic-level method adapts to class imbalance by modifying the original supervised learning algorithm. It mainly contains cost-sensitive learning [8] and the decision threshold-moving strategy [9, 10]. Cost-sensitive learning designates different training costs for the instances belonging to different classes to highlight the minority class, while decision threshold-moving tunes the biased decision boundary from the minority class region towards the majority class region. As for ensemble learning, it integrates either a data-level algorithm or an algorithmic-level method into a popular ensemble learning paradigm to promote the quality of CIL [11, 12]. Among these CIL techniques, decision threshold-moving is relatively flexible and effective; however, it also faces a challenge, i.e., it is difficult to select an appropriate threshold.

In this study, we focus on a popular supervised learning algorithm named Gaussian Naive Bayes (GNB) [13], which also tends to be hurt by skewed data distributions. First, we analyze in theory why GNB tends to be hurt by an imbalanced data distribution. Then, we explain why adopting several popular CIL techniques can repair this bias. Finally, based on the particle swarm optimization (PSO) algorithm, we propose an optimal decision threshold-moving algorithm for GNB named GNB-ODTM. Experimental results on eight class-imbalanced data sets indicate the effectiveness and superiority of the proposed algorithm.

2 Methods

2.1 Gaussian Naive Bayes Classifier

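GNB is a variant of Naive Bayes (NB) [14] that is designed for data in continuous space. Like NB, GNB has a strong theoretical basis. GNB assumes that, in each class, all instances follow a multivariate Gaussian distribution, i.e., for an instance \(x_i\), we have (written here for a single feature; under the naive independence assumption, the class-conditional density is the product of such terms over all features):

\[ P(x_i \mid y) = \frac{1}{\sqrt{2\pi\sigma_y^2}} \exp\left(-\frac{(x_i - \mu_y)^2}{2\sigma_y^2}\right) \]

where \(\mu_y\) and \(\sigma_y^2\) denote the mean and variance of all instances belonging to class \(y\), respectively, and \(P(x_i \mid y)\) represents the conditional probability of \(x_i\) in class \(y\). As the prior probability \(P(y)\) is known, the posterior probabilities \(P(y \mid x_i)\) and \(P(\sim y \mid x_i)\) can be calculated as

\[ P(y \mid x_i) = \frac{P(x_i \mid y)\,P(y)}{P(x_i)}, \qquad P(\sim y \mid x_i) = \frac{P(x_i \mid \sim y)\,P(\sim y)}{P(x_i)} \]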

We expect the classification boundary to correspond to \(P(x_i \mid y) = P(x_i \mid \sim y)\). However, if the data set is imbalanced (supposing \(P(y) \ll P(\sim y)\)), then to guarantee \(P(y \mid x_i) = P(\sim y \mid x_i)\), i.e., \(P(x_i \mid y)P(y) = P(x_i \mid \sim y)P(\sim y)\), the real classification boundary must satisfy \(P(x_i \mid y) \gg P(x_i \mid \sim y)\). That is, the classification boundary is pushed far towards the minority class \(y\), which explains why a skewed data distribution hurts the performance of GNB.

To repair the bias, data-level approaches change \(P(y)\) or \(P(\sim y)\) so that \(P(y) = P(\sim y)\); cost-sensitive learning assigns a high cost \(C_1\) to class \(y\) and a low cost \(C_2\) to class \(\sim y\) so that \(P(y)C_1 = P(\sim y)C_2\); and the decision threshold-moving strategy adds a positive value \(\lambda\) to compensate the posterior probability of class \(y\).
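The effect of the prior on the boundary, and the compensation by λ, can be illustrated with a small one-dimensional sketch. The class means, the shared variance, the function names and the decision rule "predict the minority class when P(y | x) + λ ≥ P(~y | x)" are illustrative assumptions and are not taken from the experiments in this paper.

```python
import math


def gaussian_pdf(x, mean, var):
    """Univariate Gaussian density with the given mean and variance."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def posterior_minority(x, prior_min, mean_min=0.0, mean_maj=2.0, var=1.0):
    """P(y | x) for the minority class y in a two-class problem (Bayes' rule).

    The means and variance are hypothetical values chosen for illustration.
    """
    joint_min = gaussian_pdf(x, mean_min, var) * prior_min
    joint_maj = gaussian_pdf(x, mean_maj, var) * (1.0 - prior_min)
    return joint_min / (joint_min + joint_maj)


def predict_minority(x, prior_min, lam=0.0):
    """Assumed rule: choose the minority class when P(y|x) + lam >= P(~y|x)."""
    p = posterior_minority(x, prior_min)
    return p + lam >= 1.0 - p


# x = 1.0 lies midway between the two class means, so both likelihoods are equal
# and the posterior of the minority class reduces to its prior.
x = 1.0
print(posterior_minority(x, prior_min=0.5))        # balanced priors
print(posterior_minority(x, prior_min=0.05))       # skewed priors
print(predict_minority(x, prior_min=0.05))         # no compensation
print(predict_minority(x, prior_min=0.05, lam=0.95))  # with compensation
```

At the point where the two likelihoods are equal, the skewed prior alone decides the outcome; a suitable λ moves the decision back towards that point.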

2.2 Optimal Decision Threshold-Moving Strategy

As we know, decision threshold-moving is an effective and efficient strategy for addressing the CIL problem. However, it faces the challenge of how to designate an appropriate moving threshold λ. Some previous studies adopt an empirical value [9] or a trial-and-error method [10] to designate λ, but ignore the specific data distribution, causing over-moving or under-moving.

To address the problem above, we present an adaptive strategy for searching for the most appropriate moving threshold. The strategy is based on particle swarm optimization (PSO) [15], a population-based stochastic optimization technique inspired by the social behavior of bird flocking. During the optimization process, each particle dynamically changes its position and velocity by recalling its own historical optimal position (pbest) and observing the position of the optimal particle (gbest). In each round, the velocity and position of each particle are updated by:

\[ v_{id}^{k+1} = v_{id}^{k} + c_1 r_1 \left(\mathit{pbest}_{id}^{k} - x_{id}^{k}\right) + c_2 r_2 \left(\mathit{gbest}_{d}^{k} - x_{id}^{k}\right), \qquad x_{id}^{k+1} = x_{id}^{k} + v_{id}^{k+1} \]

where \(v_{id}^k\) and \(v_{id}^{k + 1}\) represent the velocities of the dth dimension of the ith particle in the kth round and the (k + 1)st round, while \(x_{id}^k\) and \(x_{id}^{k + 1}\) denote the corresponding positions. \(c_1\) and \(c_2\) are two nonnegative constants called acceleration factors, while \(r_1\) and \(r_2\) are two random variables in the range [0, 1]. In this study, the swarm size and the number of search rounds are both set to 50, and \(c_1\) and \(c_2\) are both set to 1. Meanwhile, the position x is restricted to the range [0, 1], considering that the upper limit of a posterior probability is 1, and the velocity v is restricted to the range [–1, 1].
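A single update round with the settings above can be sketched as follows for a one-dimensional swarm, where each position is a candidate value of λ. The function name `pso_step`, its argument layout and the clamping of out-of-range values to the nearest bound are assumptions made for illustration; the text only states the ranges themselves.

```python
import random


def pso_step(positions, velocities, pbest, gbest, c1=1.0, c2=1.0,
             x_bounds=(0.0, 1.0), v_bounds=(-1.0, 1.0), rng=random):
    """Apply one velocity/position update to every particle of a 1-D swarm.

    positions, velocities, pbest: lists with one float per particle.
    gbest: best position found by the whole swarm so far.
    Returns the new positions and velocities as two lists.
    """
    new_positions, new_velocities = [], []
    for x, v, pb in zip(positions, velocities, pbest):
        r1, r2 = rng.random(), rng.random()  # fresh random factors in [0, 1)
        v_next = v + c1 * r1 * (pb - x) + c2 * r2 * (gbest - x)
        v_next = min(max(v_next, v_bounds[0]), v_bounds[1])      # clamp velocity
        x_next = min(max(x + v_next, x_bounds[0]), x_bounds[1])  # clamp position
        new_velocities.append(v_next)
        new_positions.append(x_next)
    return new_positions, new_velocities


# Two particles searching for lambda in [0, 1]; the second starts with a
# velocity outside [-1, 1], which is clamped during the update.
pos, vel = pso_step([0.2, 0.9], [0.5, -2.0], pbest=[0.3, 0.8], gbest=0.6,
                    rng=random.Random(1))
print(pos, vel)
```

A particle that already sits at both pbest and gbest with zero velocity does not move, which is why a full search also relies on the random initial spread of the swarm.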

As for the fitness function, it should be directly associated with the classification performance. In CIL, accuracy is not an appropriate performance evaluation metric; we therefore use a widely used CIL evaluation metric called G-mean as the fitness function, which is defined as

\[ \text{G-mean} = \sqrt{TPR \times TNR} \]

where TPR and TNR indicate the accuracy on the positive and negative classes, respectively.
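To make the metric concrete, G-mean can be computed from true and predicted labels as in the sketch below. The function name `g_mean`, the label encoding (1 for the positive class) and the convention of returning 0 when a class is absent are assumptions for illustration.

```python
import math


def g_mean(y_true, y_pred, positive=1):
    """Geometric mean of the per-class accuracies TPR and TNR."""
    tp = fn = tn = fp = 0
    for t, p in zip(y_true, y_pred):
        if t == positive:
            if p == positive:
                tp += 1
            else:
                fn += 1
        else:
            if p == positive:
                fp += 1
            else:
                tn += 1
    # Assumed convention: a class with no instances contributes a rate of 0.
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    tnr = tn / (tn + fp) if (tn + fp) else 0.0
    return math.sqrt(tpr * tnr)


# Predicting the majority class everywhere gives 80% accuracy here,
# but TPR is 0, so the G-mean is 0 as well.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [0] * 10
print(g_mean(y_true, y_pred))
```

The example shows why accuracy is misleading on skewed data: a classifier that never predicts the minority class still looks accurate, whereas G-mean penalizes it fully.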

2.3 Description About GNB-ODTM Algorithm

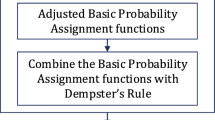

Combining GNB with the optimization strategy presented above, we propose an optimal decision threshold-moving algorithm for GNB named GNB-ODTM. The procedure of the GNB-ODTM algorithm is described as follows:

Algorithm: GNB-ODTM.

Input: A skewed binary-class training set Φ, a binary-class testing set Ψ.

Output: An optimal moving threshold λ*, the G-mean value on the testing set Ψ.

Procedure:

1) Train a GNB classifier on Φ;

2) Calculate the posterior probabilities of each instance in Φ, and from them calculate the original G-mean value on Φ;

3) Call the PSO algorithm and use the training set Φ to find the optimal moving threshold λ*;

4) Adopt the trained GNB classifier to calculate the posterior probabilities of each instance in Ψ;

5) Tune the posterior probabilities in Ψ by the recorded λ*;

6) Calculate the G-mean value on the testing set Ψ by using the tuned posterior probabilities.
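Steps 2) to 6) can be sketched as below, assuming the minority-class posteriors have already been produced by a trained GNB classifier (for example, scikit-learn's `GaussianNB.predict_proba` could supply them). The toy posteriors, the decision rule P(y | x) + λ ≥ P(~y | x), the in-round update of gbest and the seeding of one particle at λ = 0 are illustrative assumptions rather than details given in the paper.

```python
import math
import random


def g_mean(y_true, y_pred):
    """Geometric mean of TPR and TNR; label 1 is the minority class."""
    pos = [p for t, p in zip(y_true, y_pred) if t == 1]
    neg = [p for t, p in zip(y_true, y_pred) if t == 0]
    if not pos or not neg:
        return 0.0
    tpr = sum(1 for p in pos if p == 1) / len(pos)
    tnr = sum(1 for p in neg if p == 0) / len(neg)
    return math.sqrt(tpr * tnr)


def predict_with_threshold(post_min, lam):
    """Assumed rule: predict the minority class when P(y|x) + lam >= P(~y|x)."""
    return [1 if p + lam >= 1.0 - p else 0 for p in post_min]


def search_lambda(post_min, y_true, n_particles=50, n_rounds=50,
                  c1=1.0, c2=1.0, seed=0):
    """PSO search for lam in [0, 1] maximizing the training G-mean."""
    rng = random.Random(seed)

    def fitness(lam):
        return g_mean(y_true, predict_with_threshold(post_min, lam))

    xs = [rng.random() for _ in range(n_particles)]
    xs[0] = 0.0  # assumption: keep the unmoved threshold as one candidate
    vs = [rng.uniform(-1.0, 1.0) for _ in range(n_particles)]
    pbest = list(xs)
    pbest_fit = [fitness(x) for x in xs]
    best = max(range(n_particles), key=pbest_fit.__getitem__)
    gbest, gbest_fit = pbest[best], pbest_fit[best]
    for _ in range(n_rounds):
        for i in range(n_particles):
            r1, r2 = rng.random(), rng.random()
            v = vs[i] + c1 * r1 * (pbest[i] - xs[i]) + c2 * r2 * (gbest - xs[i])
            vs[i] = min(max(v, -1.0), 1.0)
            xs[i] = min(max(xs[i] + vs[i], 0.0), 1.0)
            f = fitness(xs[i])
            if f > pbest_fit[i]:
                pbest[i], pbest_fit[i] = xs[i], f
                if f > gbest_fit:
                    gbest, gbest_fit = xs[i], f
    return gbest, gbest_fit


# Hypothetical minority-class posteriors on a skewed training set (step 2).
train_post = [0.02, 0.10, 0.30, 0.45, 0.05, 0.01, 0.20, 0.03, 0.04, 0.15]
train_y = [0, 0, 1, 1, 0, 0, 1, 0, 0, 0]
baseline = g_mean(train_y, predict_with_threshold(train_post, 0.0))

lam_star, train_fit = search_lambda(train_post, train_y)  # step 3

# Hypothetical test posteriors (step 4), tuned by lam_star (steps 5 and 6).
test_post = [0.03, 0.35, 0.08, 0.25]
test_y = [0, 1, 0, 1]
test_fit = g_mean(test_y, predict_with_threshold(test_post, lam_star))
print(baseline, lam_star, train_fit, test_fit)
```

Because λ = 0 is always among the candidates in this sketch, the G-mean found on the training set cannot fall below the original G-mean computed in step 2); whether the gain carries over to Ψ depends on the data.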

From the procedure described above, it is not difficult to observe that, in comparison with an empirical moving threshold setting, the proposed GNB-ODTM algorithm is more time-consuming, as it needs to conduct an iterative PSO optimization procedure. However, the running time can be decreased by keeping the number of iterations and the population size as small as possible, which also helps reduce the risk of overfitting the classification model. Moreover, the GNB-ODTM algorithm is self-adaptive, which means that it is not restricted by the data distribution and can adapt to any type of data distribution without exploring it in advance.

3 Results and Discussions

3.1 Description About the Used Data Sets

We collected 8 class-imbalanced data sets from the UCI machine learning repository, which is available at: http://archive.ics.uci.edu/ml/datasets.php. Detailed information about these data sets is presented in Table 1. These data sets have also been used in our previous work on class imbalance learning [16].

3.2 Analysis About the Results

We compared our proposed algorithm with GNB [13], GNB-SMOTE [7], CS-GNB [8], GNB-THR [9] and GNB-OTHR [10] in our experiments. All parameters in PSO have been designated in Sect. 2. In addition, to guarantee the impartiality of the experimental comparison, we adopted external 10-fold cross-validation and repeated it 10 times with random splits, reporting the average G-mean as the final result.
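The evaluation protocol can be sketched as a repeated k-fold loop. The helper names `stratified_folds` and `repeated_cv`, the use of stratification and the per-repeat seeding are assumptions made for illustration; the text only specifies 10 folds, 10 random repetitions and averaging of G-mean.

```python
import random


def stratified_folds(labels, k=10, seed=0):
    """Split instance indices into k folds, spreading each class evenly.

    Stratification is an assumption; it keeps minority instances in every
    fold when the data set is skewed.
    """
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)
    return folds


def repeated_cv(labels, evaluate, k=10, repeats=10):
    """Average evaluate(train_idx, test_idx) over `repeats` random k-fold runs.

    `evaluate` is a user-supplied callable that trains on train_idx and
    returns a score (e.g. G-mean) measured on test_idx.
    """
    scores = []
    for rep in range(repeats):
        folds = stratified_folds(labels, k, seed=rep)  # new random split per repeat
        for f in range(k):
            test_idx = folds[f]
            train_idx = [i for g in range(k) if g != f for i in folds[g]]
            scores.append(evaluate(train_idx, test_idx))
    return sum(scores) / len(scores)


# Toy data set with a 1:9 imbalance ratio and a placeholder scoring function
# that simply reports the test fraction of each split.
labels = [1] * 10 + [0] * 90
avg = repeated_cv(labels, lambda tr, te: len(te) / (len(tr) + len(te)))
print(avg)
```

In an actual run, `evaluate` would fit the classifier and search the moving threshold on the training indices only, so that the test fold stays external to the optimization.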

Table 2 shows the comparative results of the various algorithms, where the best result on each data set is highlighted in boldface.

From the results in Table 2, we observe:

1) In comparison with the original GNB, combining it with resampling, cost-sensitive learning or decision threshold-moving techniques promotes classification performance on imbalanced data sets. The results again indicate the necessity of adopting CIL techniques to address imbalanced classification problems.

2) It is difficult to compare the quality of resampling and cost-sensitive learning, as each of them performs better on some of the data sets. GNB-SMOTE performs better on abalone9, pageblocks5, cardiotocographyC5 and cardiotocographyN3, while CS-GNB produces better results on the remaining data sets.

3) Although GNB-THR significantly outperforms the original GNB model, it is clearly worse than several other algorithms. This indicates the unreliability of setting the moving threshold empirically.

4) We believe the proposed GNB-ODTM algorithm is successful, as it has produced the best result on all data sets except pageblocks2345 and cardiotocographyN3. In addition, we observe that on most data sets the performance promotion obtained by adopting the proposed algorithm is remarkable. This can be attributed to its self-adaptation to the data distribution. Although the proposed GNB-ODTM algorithm has a higher time complexity than several other algorithms, it is still an excellent alternative for addressing imbalanced data classification problems.

4 Concluding Remarks

In this study, we focus on a specific class imbalance learning technique named the decision threshold-moving strategy. A common problem with this technique is pointed out, i.e., it generally lacks adaptation to the data distribution, which causes unreliable classification results. Specifically, in the context of the Gaussian Naive Bayes classification model, we presented a robust decision threshold-moving strategy and proposed a novel CIL algorithm called GNB-ODTM. The experimental results have indicated the effectiveness and superiority of the proposed algorithm.

The contribution of this paper is twofold:

1) In the context of the Gaussian Naive Bayes classifier, we analyze in theory the hazard of skewed data distributions, and indicate the rationality of several popular CIL techniques;

2) Based on the particle swarm optimization technique, we propose a robust decision threshold-moving algorithm which can adapt to various data distributions.

This work was supported by the Natural Science Foundation of Jiangsu Province of China under grant No. BK20191457.

References

1. Branco, P., Torgo, L., Ribeiro, R.P.: ACM Comput. Surv. 9 (2016)

2. Dai, H.J., Wang, C.K.: Int. J. Med. Inform. 129 (2019)

3. Malhotra, R., Kamal, S.: Neurocomputing 343 (2019)

4. Qian, Y., Ye, S., Zhang, Y., Zhang, J.: Gene 741 (2020)

5. Veganzones, D., Severin, E.: Decis. Support Syst. 112 (2018)

6. Ng, W.W.Y., Hu, J., Yeung, D.S., Yin, S., Roli, F.: IEEE Trans. Cybern. 45 (2015)

7. Chawla, N., Bowyer, K.W., Hall, L.O.: J. Artif. Intell. Res. 16 (2002)

8. Veropoulos, K., Campbell, C., Cristianini, N.: IJCAI (1999)

9. Lin, W.J., Chen, J.J.: Brief. Bioinform. 14 (2013)

10. Yu, H., Mu, C., Sun, C., Yang, W., Yang, X., Zuo, X.: Knowl.-Based Syst. 76 (2015)

11. Tang, B., He, H.: Pattern Recogn. 71 (2017)

12. Lim, P., Goh, C.K., Tan, K.C.: IEEE Trans. Cybern. 47 (2016)

13. Berrar, D.: Encyclopedia of Bioinformatics and Computational Biology: ABC of Bioinformatics. Elsevier Science Publisher, Amsterdam (2018)

14. Griffis, J.C., Allendorfer, J.B., Szaflarski, J.P.: J. Neurosci. Methods 257 (2016)

15. Shi, Y., Eberhart, R.C.: CEC (1999)

16. Yu, H., Sun, C., Yang, X., Zheng, S., Zou, H.: IEEE Trans. Fuzzy Syst. 27 (2019)

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this paper

Cite this paper

He, Q., Yu, H. (2022). Optimal Decision Threshold-Moving Strategy for Skewed Gaussian Naive Bayes Classifier. In: Qian, Z., Jabbar, M., Li, X. (eds) Proceeding of 2021 International Conference on Wireless Communications, Networking and Applications. WCNA 2021. Lecture Notes in Electrical Engineering. Springer, Singapore. https://doi.org/10.1007/978-981-19-2456-9_85

DOI: https://doi.org/10.1007/978-981-19-2456-9_85

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-2455-2

Online ISBN: 978-981-19-2456-9

eBook Packages: Engineering, Engineering (R0)