Abstract

A human action recognition network (AE-HRNet) based on the high-resolution network (HRNet) and an attention mechanism is proposed to address the insufficient extraction of semantic and location information from human action features by convolutional networks. First, the enhanced channel attention (ECA) module and the enhanced spatial attention (ESA) module are introduced; on this basis, new basic (EABasic) and bottleneck (EANeck) modules are constructed to reduce computational complexity while obtaining more accurate semantic and location information from the feature maps. Experimental results on the MPII and COCO validation sets under the same environment configuration show that, compared with the high-resolution network, AE-HRNet reduces computational complexity and improves action recognition accuracy.


1 Introduction

Human action recognition is an important research topic in the development of artificial intelligence. Its purpose is to predict the type of an action from visual data. It has important applications in security monitoring, intelligent video analysis, group behavior recognition and other fields, such as the detection of abnormal behavior in ship navigation and the identification of dangerous persons in subway station environments. Other researchers have applied action recognition technology to smart homes, where daily behavior detection, fall detection and dangerous behavior recognition are attracting increasing attention.

Literature [1] proposed improved dense trajectories (iDT), which are still widely used. The algorithm is stable and reliable, but its recognition speed is slow. With the development of deep learning, image recognition methods have advanced further. Literature [2] designed a new CNN (Convolutional Neural Network) for action recognition, the 3D convolutional network, which extracts features from both the temporal and the spatial dimensions and performs 3D convolution to capture motion information across multiple adjacent frames. In [3], a two-stream inflated 3D convolutional network (TwoStream-I3D) was used for feature extraction, and [4] combined a two-stream CNN with Long Short-Term Memory (LSTM).

Among works that use the two-stream structure, researchers have further improved the two-stream network. Literature [4] used a two-stream structure based on the temporal segment network (TSN) for human action recognition, [5] used a deep network based on learned weight values to recognize action types, [6] used a ResNet structure as the connection between the two streams, and [7] used the two-stream inflated 3D convolutional network (I3D), obtained by inflating two-dimensional convolutional networks into three dimensions, to recognize human actions. Deep learning methods of this kind have substantially increased action recognition accuracy.

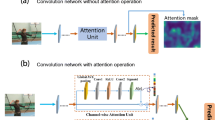

All of the above improvements are based on convolutional neural networks. Literature [8] proposed convolution-free action classification methods based on spatial and temporal self-attention, which learn features directly from sequences of frame-level patches. Such methods assign weights directly through the attention mechanism, which increases the complexity of model processing and ignores the structural information of the image itself during pre-processing and feature extraction.

For human action recognition in either video or image data, the data must first be converted into a sequence of images; recognizing human actions in static images then becomes an image classification problem. The advantage of applying convolution to action classification in images is that it learns hierarchically: what is learned at one level is preserved and reused by subsequent levels, and because feature extraction is performed while the model is trained, it does not need to be repeated. However, on data that has not been pre-processed, convolution cannot cope with images that are rotated or at different scales, and the human features extracted by convolution do not reflect the overall description of the image (e.g., "biking" and "repairing" may be assigned to the same category), so an attention network is needed to recognize local attribute features.

Based on the above research, this paper uses the high-resolution network HRNet as the basic framework for action recognition and improves its basic modules with channel attention and spatial attention, in order to extract more local feature information from the feature maps and to let the feature maps exchange spatial information with each other. We also improve the fused output of HRNet: we designed a fusion module that fuses the output feature maps step by step and finally outputs the feature map obtained after multiple fusions. The main contributions of this paper are as follows:

(1) We design the basic modules EABasic and EANeck, which integrate the attention mechanism. While extracting image features at high resolution, they increase the weight of local key-point information in the image features, reduce the loss caused by key-point localization, and perform better than the HRNet network model.

(2) In place of the original three outputs of HRNet, we design a new fusion output method that fuses the feature maps layer by layer to obtain richer semantic information.

2 Overview of HRNet and Attention Mechanism

2.1 HRNet Network Structure

The HRNet network structure starts with the Stem layer, which consists of two stride-2 3 * 3 convolutions, as shown in Fig. 1. After the Stem layer, the image resolution is reduced from R to R/4, while the number of channels changes from the three RGB channels to C. The main body of the network is divided into four stages containing up to four parallel convolutional streams, whose resolutions are R/4, R/8, R/16 and R/32, respectively; the resolution is kept constant within the same branch. The first stage contains four residual units, each formed by a bottleneck layer of width 64, followed by a 3 * 3 convolution that changes the number of channels of the feature map to C. The second, third and fourth stages contain 1, 4 and 3 multi-resolution modules, respectively.

In each multi-resolution module, each branch contains 4 residual units, and each residual unit contains two 3 * 3 convolutions at the same resolution, each followed by batch normalization and the nonlinear activation function ReLU. The numbers of channels of the parallel convolution streams are C, 2C, 4C and 8C, respectively.
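The resolution and channel arithmetic of the four stages can be tabulated with a short sketch. It assumes a 256 * 256 input (the size used in the experiments) and C = 32 (HRNet-w32); the function name `hrnet_branch_shapes` is hypothetical, and the sketch reproduces only the shape bookkeeping, not the network.

```python
def hrnet_branch_shapes(input_size=256, c=32, num_stages=4):
    """Return {stage: [(channels, height, width), ...]} for an HRNet-style layout.

    Assumption (hypothetical helper): stage s has s parallel branches, and
    branch b (0-based) runs at resolution R/4 / 2**b with c * 2**b channels.
    """
    base = input_size // 4  # the Stem reduces R to R/4
    layout = {}
    for stage in range(1, num_stages + 1):
        layout[stage] = [(c * 2**b, base // 2**b, base // 2**b) for b in range(stage)]
    return layout


if __name__ == "__main__":
    for stage, branches in hrnet_branch_shapes().items():
        print(f"stage {stage}: {branches}")
```

With these assumptions, stage 4 holds four branches of 64 * 64, 32 * 32, 16 * 16 and 8 * 8 pixels with 32, 64, 128 and 256 channels.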

2.2 Attentional Mechanisms

The attention mechanism plays an important role in human perception. The human visual system does not process an entire scene at once but selectively focuses on certain parts, which helps us understand the scene better. Likewise, in machine vision, an attention mechanism helps the computer better understand the content of an image. The channel attention and spatial attention used in this paper are described below.

Channel Attention.

Consider a feature map \(F\in \mathbb{R}^{C\times H\times W}\) with height H, width W and C channels. In a given learning task, not all channels contribute equally: some channels are less important for the task, while others are very important. Therefore, the network needs to assign channel weights according to the learning task.

Literature [9] proposed SENet, a channel attention model, as shown in Fig. 2. Through the squeeze (\({F}_{sq}\)) and excitation (\({F}_{ex}\)) operations, a weight ω is computed for each feature channel to indicate its importance; the learned weights differ between learning tasks. Each channel of the original feature map \(F\) is then weighted by its ω, that is, every element of that channel is multiplied by the weight, giving the channel attention feature map \(\tilde{X}\). In short, channel attention focuses on "what" is meaningful in an input image: the larger the weight ω, the more meaningful the channel; the smaller ω, the less meaningful the channel.
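The squeeze, excitation and reweighting steps can be written compactly. This follows the standard SENet formulation [9]; the matrices \(W_1\in\mathbb{R}^{\frac{C}{r}\times C}\) and \(W_2\in\mathbb{R}^{C\times\frac{C}{r}}\), the reduction ratio \(r\), the ReLU \(\delta\) and the sigmoid \(\sigma\) are notation introduced here for illustration rather than taken from this paper.

```latex
\begin{aligned}
z_c &= F_{sq}(F_c) = \frac{1}{H\,W}\sum_{i=1}^{H}\sum_{j=1}^{W} F_c(i,j), \qquad c = 1,\dots,C,\\
\omega &= F_{ex}(z) = \sigma\bigl(W_2\,\delta(W_1 z)\bigr) \in (0,1)^{C},\\
\tilde{X}_c &= \omega_c \cdot F_c .
\end{aligned}
```

Because the sigmoid keeps every \(\omega_c\) in (0, 1), each channel is scaled down by an amount that reflects its learned importance rather than being switched off outright.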

Spatial Attention.

In [3,4,5,6,7], researchers used models based on the two-stream convolutional network and improved on the original design, using the improved models for image feature extraction. This improved action recognition accuracy, but these models still essentially rely on convolution to extract image features.

When performing convolution, the network divides the whole image into regions of equal size and treats the contribution of each region to the learning task equally. In fact, different regions contribute differently to the task, so they should not be treated equally. Moreover, a convolution kernel captures only local spatial information, not global spatial information. Although stacking convolutions enlarges the receptive field, it does not fundamentally change this situation, so some global information is ignored.

Therefore, the CBAM (Convolutional Block Attention Module) model [10] was proposed, which uses a spatial attention module to focus on the location information of the target: attention is increased for regions that are significant for the task and reduced for less significant regions, as shown in Fig. 3.
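For reference, the spatial attention map of CBAM [10] can be written as below. This follows the CBAM formulation; \(F'\) (the channel-refined feature map), the channel-axis pooling operators, the concatenation \([\cdot;\cdot]\), the 7 * 7 convolution \(f^{7\times 7}\) and the broadcast element-wise product \(\otimes\) are notation introduced here.

```latex
\begin{aligned}
M_s(F') &= \sigma\Bigl(f^{7\times 7}\bigl([\mathrm{AvgPool}(F');\,\mathrm{MaxPool}(F')]\bigr)\Bigr) \in (0,1)^{1\times H\times W},\\
F'' &= M_s(F') \otimes F' .
\end{aligned}
```

Pooling along the channel axis collapses C channels into two H * W maps, so the attention map describes "where" to look and is shared by all channels.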

3 Action Recognition Model Based on Attention Mechanism

Since performance gains obtained only by modifying the convolutional network can no longer meet research needs, and inspired by [10,11,12], we fuse the convolutional neural network with the attention mechanism to improve network performance. The high-resolution network HRNet, used in [13], maintains a high-resolution representation of the image during convolution and reduces the loss of location information. We therefore add attention mechanisms to the HRNet model and propose AE-HRNet (Attention Enhance High Resolution Net), an action recognition model based on channel attention and spatial attention mechanisms.

3.1 AE-HRNet

AE-HRNet inherits the original four-stage structure of HRNet, as shown in Fig. 4. The four stages let the resolution of the feature maps decrease gradually. Aggressive downsampling quickly loses details such as location information and human action information in the feature maps, and it is then difficult to guarantee prediction accuracy even if features learned from the blurred image are restored by upsampling. Therefore, stages 1 to 4 use 1, 2, 3 and 4 parallel branches, respectively, with different resolutions and numbers of channels, maintaining a high-resolution representation while downsampling, which allows location information to be retained.

The specific processing of the AE-HRNet network model is as follows.

(1) In the pre-processing stage, images are resized to 256 * 256 and passed through two standard stride-2 3 * 3 convolutions, so that the resolution becomes 1/4 of the input resolution and the number of channels becomes C.

(2) The pre-processed feature map is taken as the input of stage 1, and features are extracted by 4 EABasic modules.

(3) In the following three stages, EANeck modules extract features from feature maps with different resolutions (1/4, 1/8, 1/16, 1/32) and numbers of channels (C, 2C, 4C, 8C).
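The 1/4 resolution produced by pre-processing follows from the standard convolution output-size formula. The sketch below assumes a padding of 1 for each stride-2 3 * 3 convolution (the text does not state the padding); `stem_output_size` is a hypothetical helper name.

```python
def conv_out_size(size, kernel=3, stride=2, padding=1):
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def stem_output_size(size=256, num_convs=2):
    # Hypothetical helper: apply the stride-2 3x3 convolution num_convs times.
    for _ in range(num_convs):
        size = conv_out_size(size)
    return size


print(stem_output_size(256))  # 256 -> 128 -> 64
```

Each stride-2 convolution halves the side length, so two of them give 256 / 4 = 64.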

The base architecture used in our experiments is HRNet-w32. The resolution and the number of channels are adjusted between stages, and the feature maps at different resolutions are exchanged and merged to form feature maps with richer semantic information.

3.2 ECA (Enhance Channel Attention) Module

The structure of the ECA (Enhance Channel Attention) module is shown in Fig. 5. First, the convolved feature map is pooled by both Max Pooling and Avg Pooling over its spatial dimensions; both are used to retain as much image feature information as possible. Two 1 * 1 convolutions are then applied to each pooled feature map, the two results are added, and the Sigmoid activation function yields the channel attention map \({F}_{c}\) of dimension C * 1 * 1. Finally, \({F}_{c}\) is multiplied with the original feature map \(F\), giving a new feature map whose dimension remains C * H * W.
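A dependency-free sketch of the channel attention steps is given below, operating on a C * H * W map stored as nested lists. It assumes the two 1 * 1 convolutions form a bottleneck (C to C/r, ReLU, C/r to C) shared by both pooled vectors, as in CBAM [10]; the text does not say whether the weights are shared or what ratio r is used, and the names `shared_mlp` and `eca` are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def shared_mlp(vec, w1, w2):
    """Two 1x1 convolutions on a C x 1 x 1 tensor = two matrix-vector products.

    w1: (C/r) x C, w2: C x (C/r); ReLU between them (assumed, as in CBAM).
    """
    hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def eca(feat, w1, w2):
    """Channel attention on a C x H x W feature map given as nested lists.

    Returns the reweighted map (still C x H x W) and the C channel weights.
    """
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]  # Avg Pooling
    mx = [max(map(max, ch)) for ch in feat]  # Max Pooling
    weights = [sigmoid(a + m)
               for a, m in zip(shared_mlp(avg, w1, w2), shared_mlp(mx, w1, w2))]
    # Broadcast each channel weight over that channel's H x W entries.
    out = [[[x * wc for x in row] for row in ch] for ch, wc in zip(feat, weights)]
    return out, weights


feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, -1.0], [1.0, 0.0]]]  # C = 2, H = W = 2
w1 = [[0.5, -0.5]]    # C/r = 1, i.e. r = 2 (illustrative values)
w2 = [[1.0], [-1.0]]
out, weights = eca(feat, w1, w2)
print(weights)
```

Adding the two MLP outputs before the sigmoid lets average and max statistics vote jointly on each channel's weight.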

3.3 ESA (Enhance Spatial Attention) Module

The ESA (Enhance Spatial Attention) module is shown in Fig. 6. The original feature map is likewise processed by Max Pooling and Avg Pooling, here along the channel dimension, and the two resulting maps are concatenated into a tensor of dimension 2 * H * W. A convolution with a kernel size of 7 or 3 reduces the number of channels to 1 while keeping H and W unchanged, and the Sigmoid function then yields a spatial attention map of dimension 1 * H * W. Finally, this map is multiplied element-wise (broadcast over all channels) with the feature map output by the ECA module, so the output dimension is again C * H * W, giving the final feature map.
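The spatial attention steps can be sketched the same way. The sketch assumes zero padding of k // 2 (so that H and W are kept, as the text requires), a single output channel with an optional bias, and an element-wise product broadcast over channels, which is the product that returns a C * H * W map from a 1 * H * W map; `esa` is a hypothetical name.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def esa(feat, kernel, bias=0.0):
    """Spatial attention on a C x H x W map (nested lists).

    kernel: 2 x k x k weights (k odd, e.g. 3 or 7); zero padding k // 2 keeps H, W.
    Returns the reweighted map (C x H x W) and the attention map (H x W).
    """
    C, H, W = len(feat), len(feat[0]), len(feat[0][0])
    # Pool along the channel axis: two H x W maps (max first, then average).
    mx = [[max(feat[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    avg = [[sum(feat[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    desc = [mx, avg]  # the concatenated 2 x H x W tensor
    k = len(kernel[0])
    p = k // 2
    att = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            s = bias
            for d in range(2):
                for u in range(k):
                    for v in range(k):
                        y, x = i + u - p, j + v - p
                        if 0 <= y < H and 0 <= x < W:  # zero padding outside
                            s += kernel[d][u][v] * desc[d][y][x]
            att[i][j] = sigmoid(s)
    # Broadcast the single attention map over all C channels (element-wise).
    out = [[[feat[c][i][j] * att[i][j] for j in range(W)] for i in range(H)]
           for c in range(C)]
    return out, att
```

In EABlock, `feat` would be the ECA output, so the channel and spatial weights multiply together.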

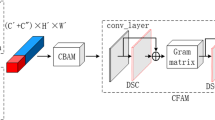

3.4 EABasic and EANeck Modules

The EABlock (Enhance Attention block) module consists of the ECA module and the ESA module. The main modules of the HRNet model are the Bottleneck module and the Basic block module. To integrate the attention mechanism, we add the EABlock to the Bottleneck and Basic block modules, yielding the EANeck (Enhance Attention Neck) and EABasic (Enhance Attention Basic) modules, as shown in Fig. 7.

In EABasic, the input of dimension C * H * W from the Stem layer passes through two consecutive 3 * 3 convolutions to obtain a feature map \(F\) of dimension 2C * H * W: the first convolution increases the number of channels from C to 2C, and the second keeps it unchanged. \(F\) is then fed into the EABlock, where it is reweighted by the ECA and ESA modules to produce the final output feature map.

In EANeck, the input of dimension C * H * W from the Stem layer first passes through a 1 * 1 convolution that changes the number of channels from C to 2C, then through a 3 * 3 convolution with padding 1 that keeps the width and height unchanged, and finally through a 1 * 1 convolution that yields the feature map \(F\) of dimension 2C * H * W. \(F\) is then fed into the EABlock, where it is reweighted by the ECA and ESA modules, and the resulting feature map is output.
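The shape flow through both modules can be traced with a small helper. The sketch assumes stride 1 everywhere and "same" padding (kernel // 2) for the EABasic convolutions, which the text implies by keeping H and W; residual shortcuts are not described in the text and are therefore omitted. All function names are hypothetical.

```python
def conv_shape(shape, out_channels, kernel, stride=1, padding=None):
    """(C, H, W) after a 2-D convolution; padding defaults to kernel // 2."""
    _, h, w = shape
    if padding is None:
        padding = kernel // 2

    def out(n):
        return (n + 2 * padding - kernel) // stride + 1

    return (out_channels, out(h), out(w))


def ea_block_shape(shape):
    # ECA and ESA only reweight the feature map, so the shape is unchanged.
    return shape


def eabasic_shape(shape):
    c = shape[0]
    x = conv_shape(shape, 2 * c, 3)  # 3x3, C -> 2C
    x = conv_shape(x, 2 * c, 3)      # 3x3, 2C -> 2C
    return ea_block_shape(x)


def eaneck_shape(shape):
    c = shape[0]
    x = conv_shape(shape, 2 * c, 1)          # 1x1, C -> 2C
    x = conv_shape(x, 2 * c, 3, padding=1)   # 3x3, padding 1 keeps H and W
    x = conv_shape(x, 2 * c, 1)              # 1x1, keeps 2C channels
    return ea_block_shape(x)


print(eabasic_shape((32, 64, 64)), eaneck_shape((32, 64, 64)))
```

Both paths end at 2C * H * W before the EABlock, which is why the attention stage can be attached to either module without changing its interface.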

3.5 Aggregation Module

When outputting fused features, the aggregation module is redesigned to fuse the extracted feature maps step by step in order to obtain richer semantic information, as shown in Fig. 8. The output of branch 4 is first up-sampled and brought to the same dimensions as the output of branch 3, and the two are fused to form a new output 3; the same procedure is repeated for the higher-resolution branches, and finally the fused features at the highest resolution are output.
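The progressive fusion can be sketched on nested lists. The text does not name the up-sampling method, how dimensions are unified, or the fusion operator, so this sketch assumes nearest-neighbour 2x up-sampling, a 1 * 1 convolution to match channel counts, and element-wise addition; every function name here is hypothetical.

```python
def upsample2x(fmap):
    """Nearest-neighbour 2x up-sampling of a C x H x W map (nested lists)."""
    return [[[row[j // 2] for j in range(2 * len(row))] for row in ch for _ in range(2)]
            for ch in fmap]


def conv1x1(fmap, weight):
    """1x1 convolution: weight is C_out x C_in; used here to unify channel counts."""
    h, w = len(fmap[0]), len(fmap[0][0])
    return [[[sum(wt * fmap[c][i][j] for c, wt in enumerate(row)) for j in range(w)]
             for i in range(h)] for row in weight]


def add(a, b):
    return [[[x + y for x, y in zip(ra, rb)] for ra, rb in zip(ca, cb)]
            for ca, cb in zip(a, b)]


def aggregate(branches, projections):
    """Fuse branch outputs from the lowest to the highest resolution.

    branches: [branch1, ..., branchN]; branch1 has the highest resolution and
    each following branch has half its height and width.
    projections[i]: C_i x C_(i+1) matrix mapping the lower-resolution channels
    onto those of branches[i].
    """
    fused = branches[-1]
    for i in range(len(branches) - 2, -1, -1):
        # Up-sample, unify channels, then fuse by element-wise addition (assumed).
        fused = add(branches[i], conv1x1(upsample2x(fused), projections[i]))
    return fused


b1 = [[[1, 1], [1, 1]]]   # 1 channel, 2 x 2
b2 = [[[1]], [[2]]]       # 2 channels, 1 x 1
print(aggregate([b1, b2], [[[1, 1]]]))
```

For HRNet-w32 the same loop would run three times (branch 4 into 3, then into 2, then into 1), ending at the 1/4-resolution, C-channel map.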

4 Experiment

4.1 MPII Data Set

Description of MPII Data Set.

The MPII data set contains 24,987 images with a total of 40,000 human action instances, of which 28,000 are used as training samples and 11,000 as test samples. The labels contain 16 key points: 0-right ankle, 1-right knee, 2-right hip, 3-left hip, 4-left knee, 5-left ankle, 6-pelvis, 7-chest, 8-upper neck, 9-top of head, 10-right wrist, 11-right elbow, 12-right shoulder, 13-left shoulder, 14-left elbow, 15-left wrist.

Evaluation Criteria.

The models were trained on the MPII training set and evaluated on the MPII validation set. The evaluation metrics are top@1 and top@5 accuracy. We divide the MPII data set into 20 categories by behavior; after training, the model outputs its 1 or 5 highest-scoring labels for each image. A prediction is correct if the output labels contain the true label, and wrong otherwise.

Top@1 accuracy is the percentage of samples in a batch whose single predicted label matches the true label; top@5 accuracy is the percentage of samples in a batch whose 5 predicted labels contain the true label.
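Both metrics can be computed from per-class scores with the sketch below; `topk_accuracy` is a hypothetical helper, and the toy scores and labels are illustrative only.

```python
def topk_accuracy(scores, labels, k):
    """Percentage of samples whose true label is among the k highest scores."""
    hits = 0
    for s, y in zip(scores, labels):
        ranked = sorted(range(len(s)), key=lambda c: s[c], reverse=True)
        hits += y in ranked[:k]
    return 100.0 * hits / len(labels)


scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]  # 3 samples, 3 classes
labels = [1, 1, 0]
print(topk_accuracy(scores, labels, 1))  # 1 of 3 samples correct at k = 1
print(topk_accuracy(scores, labels, 2))  # 2 of 3 samples correct at k = 2
```

Top@5 can never be lower than top@1, since the five highest-scoring labels always include the single highest one.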

Training Details.

The experimental environment is configured as follows: Ubuntu 20.04 64-bit, 3 GeForce RTX 2080 Ti graphics cards, and the PyTorch 1.8.1 deep learning framework for training.

Training was performed on the MPII training set with images uniformly scaled and cropped to 256 * 256. The initial learning rate is 1e-2; it is reduced to 1e-3 at epoch 60, 1e-4 at epoch 120 and 1e-5 at epoch 180. The batch size is 32 per GPU, and the data are augmented with random horizontal flipping (p = 0.5) and random vertical flipping (p = 0.5) during training.
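The learning-rate schedule above is a step decay by a factor of 10 at three milestones. The sketch assumes epochs are counted from 0 and that the new rate applies from the milestone epoch onward; in PyTorch the same behaviour is commonly obtained with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[60, 120, 180], gamma=0.1)`, although the paper does not say which scheduler was used.

```python
def learning_rate(epoch, base_lr=1e-2, milestones=(60, 120, 180), gamma=0.1):
    """Step schedule: multiply base_lr by gamma once for every milestone reached."""
    return base_lr * gamma ** sum(epoch >= m for m in milestones)


for epoch in (0, 59, 60, 120, 180):
    print(epoch, learning_rate(epoch))
```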

Experimental Validation Analysis.

The results on the MPII validation set are shown in Table 1. Compared with the original HRNet, AE-HRNet adds spatial attention and channel attention, so its computation rises from 8.03 GFLOPs to 8.32 GFLOPs, but its number of parameters drops from 41.2 × 10^7 to 40.0 × 10^7, about 3% fewer than HRNet. Compared with HRNet-w32, AE-HRNet improves top@1 accuracy by 0.72% and top@5 accuracy by 0.97%.

Both ResNet50 and ResNet101 in Simple Baseline use pre-trained models, whereas neither HRNet nor our model does. Compared with ResNet50 in Simple Baseline, the top@1 accuracy of HRNet-w32 is 1.34% lower, while that of AE-HRNet is only 0.62% lower.
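The relative changes quoted above can be checked directly from the reported figures. This is only arithmetic on the numbers stated in the text (no new results); it assumes the accuracy differences are absolute percentage points.

```python
# Figures as reported for the MPII validation set (Table 1).
hrnet_params, ae_params = 41.2, 40.0  # same units for both, as printed in the text
hrnet_gflops, ae_gflops = 8.03, 8.32

param_reduction = 100 * (hrnet_params - ae_params) / hrnet_params
flops_increase = 100 * (ae_gflops - hrnet_gflops) / hrnet_gflops

hrnet_gap_to_resnet50 = 1.34  # top@1 points below Simple Baseline ResNet50
ae_gain_over_hrnet = 0.72     # top@1 points above HRNet-w32
ae_gap_to_resnet50 = hrnet_gap_to_resnet50 - ae_gain_over_hrnet

print(f"parameters: -{param_reduction:.2f}%")                 # -2.91%
print(f"GFLOPs: +{flops_increase:.2f}%")                      # +3.61%
print(f"AE-HRNet gap to ResNet50: {ae_gap_to_resnet50:.2f}")  # 0.62
```

The "3% fewer parameters" is 2.91% before rounding, the computation grows by about 3.6%, and the 0.62% gap to ResNet50 is exactly the 1.34% HRNet gap minus the 0.72% top@1 gain.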

4.2 COCO Data Set

Description of COCO Data Set.

The COCO training set contains 118,287 images and the validation set contains 5,000 images. The COCO annotations contain 17 whole-body key points: 0-nose, 1-left eye, 2-right eye, 3-left ear, 4-right ear, 5-left shoulder, 6-right shoulder, 7-left elbow, 8-right elbow, 9-left wrist, 10-right wrist, 11-left hip, 12-right hip, 13-left knee, 14-right knee, 15-left ankle, 16-right ankle.

In this paper, we use part of the COCO data set, the training set contains 93,049 images and the validation set contains 3,846 images. It is divided into 11 action categories according to labels, which are baseball bat, baseball glove, frisbee, kite, person, skateboard, skis, snowboard, sports ball, surfboard and tennis racket.

Evaluation Criteria.

Our evaluation metrics on the COCO data set are top@1 and top@5 accuracy, as described under Evaluation Criteria for the MPII data set.

Experimental Details.

When training on the COCO data set, the images were first uniformly cropped to 256 * 256; all other settings used the same parameter configuration and experimental environment as for the MPII data set (see Training Details in Sect. 4.1).

Experimental Validation Analysis.

The results on the COCO validation set are shown in Table 2. Compared with HRNet, the computation of AE-HRNet rises to 8.32 GFLOPs, while its number of parameters is reduced by 3%. At the same time, AE-HRNet is 0.87% more accurate than HRNet-w32 on top@1 and 0.46% more accurate on top@5.

Compared with ResNet50 and ResNet101 in Simple Baseline, the accuracy of AE-HRNet is higher by 1.09% and 1.03%, respectively.

5 Ablation Experiment

To verify how much the ECA and ESA modules each contribute to the feature extraction ability of AE-HRNet, we constructed two AE-HRNet variants, one containing only the ECA module and one containing only the ESA module.

Both variants were trained and validated on the COCO and MPII data sets without loading pre-trained models; the experimental results are shown in Table 3.

On the MPII data set, AE-HRNet achieves top@1 and top@5 accuracies of 74.62% and 95.03%, respectively. With only the ECA module, top@1 drops by 5.43% and top@5 by 1.32%; with only the ESA module, top@1 drops by 1.18% and top@5 by 0.37%.

On the COCO data set, AE-HRNet achieves a top@1 accuracy of 71.12% and a top@5 accuracy of 98.73%. With only the ECA module, top@1 drops by 1.33% and top@5 by 0.47%; with only the ESA module, top@1 drops by 1.02% and top@5 by 0.13%.
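The accuracies implied for each ablated variant follow from the full-model figures minus the reported drops. The sketch assumes the drops are absolute percentage points; the numbers it prints are derived arithmetic, not additional results, and Table 3 remains the authoritative source.

```python
full = {"MPII": (74.62, 95.03), "COCO": (71.12, 98.73)}  # AE-HRNet (top@1, top@5)
drops = {  # reported decreases, read as absolute percentage points (assumption)
    ("MPII", "ECA only"): (5.43, 1.32),
    ("MPII", "ESA only"): (1.18, 0.37),
    ("COCO", "ECA only"): (1.33, 0.47),
    ("COCO", "ESA only"): (1.02, 0.13),
}


def variant_accuracy(dataset, variant):
    """Implied (top@1, top@5) of an ablated variant, rounded to 2 decimals."""
    top1, top5 = full[dataset]
    d1, d5 = drops[(dataset, variant)]
    return round(top1 - d1, 2), round(top5 - d5, 2)


for dataset, variant in drops:
    print(dataset, variant, variant_accuracy(dataset, variant))
```

On both data sets the ESA-only variant loses less accuracy than the ECA-only variant, i.e. removing ESA hurts more than removing ECA.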

6 Conclusion

In this paper, we introduced the ECA and ESA modules to improve the basic modules of HRNet and built the EABasic and EANeck modules, forming AE-HRNet, an efficient human action recognition network based on a high-resolution network and attention mechanisms. It obtains more accurate semantic feature information from the feature maps while reducing computational complexity and retaining the key spatial location information. This paper improves the accuracy of human action recognition, but the number of model parameters still needs to be reduced further.

In addition, this paper was validated on the MPII and COCO validation sets; larger data sets could be used for action recognition validation if conditions permit. While ensuring the accuracy of the action recognition model, how to perform real-time human action recognition on video data sets is the main direction for future research.

References

Wang, H., Schmid, C.: Action recognition with improved trajectories. In: Proceedings of the IEEE International Conference on Computer Vision (2013)

Ji, S., Xu, W., Yang, M., et al.: 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 35(1), 221–231 (2012)

Liu, L.X., Lin, M.F., Zhong, L.Q., et al.: Two-stream inflated 3D CNN for abnormal behaviour detection. Comput. Syst. Appl. 30(05), 120–127 (2021)

Zeng, M.R., Luo, Z.S., Luo, S.: Human behaviour recognition combining two-stream CNN with LSTM. Mod. Electron. Technol. 42(19), 37–40 (2019)

Wang, L., Xiong, Y., Wang, Z., et al.: Temporal segment networks: towards good practices for deep action recognition. In: European Conference on Computer Vision. Springer, Cham, pp. 20–36 (2016). https://doi.org/10.1007/978-3-319-46484-8_2

Lan, Z., Zhu, Y., Hauptmann, A.G., et al.: Deep local video feature for action recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 1–7 (2017)

Zhao, L., Wang, J., Li, X., et al.: Deep convolutional neural networks with merge-and-run mappings. arXiv preprint arXiv:1611.07718 (2016)

Carreira, J., Zisserman, A.: Quo vadis, action recognition? A new model and the kinetics dataset. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6299–6308 (2017)

Hu, J., Shen, L., Sun, G., et al.: Squeeze-and-excitation networks. IEEE Trans. Pattern Anal. Mach. Intell. PP(99) (2017)

Woo, S., Park, J., Lee, J.Y., et al.: CBAM: Convolutional Block Attention Module. In: European Conference on Computer Vision (ECCV). Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01234-2_1

Guo, H.T., Long, J.J.: High efficient action recognition algorithm based on deep neural network and projection tree. Comput. Appl. Softw. 37(4), 8 (2020)

Li, K., Hou, Q.: Lightweight human pose estimation based on attention mechanism. J. Comput. Appl. 1–9 (2021). http://kns.cnki.net/kcms/detail/51.1307.tp.20211014.1419.016.html

Sun, K., Xiao, B., Liu, D., et al.: Deep High-Resolution Representation Learning for Human Pose Estimation. arXiv e-prints (2019)


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this paper

Cite this paper

Liu, S., Wu, N., Jin, H. (2022). Human Action Recognition Based on Attention Mechanism and HRNet. In: Qian, Z., Jabbar, M., Li, X. (eds) Proceeding of 2021 International Conference on Wireless Communications, Networking and Applications. WCNA 2021. Lecture Notes in Electrical Engineering. Springer, Singapore. https://doi.org/10.1007/978-981-19-2456-9_30


DOI: https://doi.org/10.1007/978-981-19-2456-9_30


Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-2455-2

Online ISBN: 978-981-19-2456-9

eBook Packages: Engineering, Engineering (R0)