Abstract

To address the rising data forwarding performance requirements of security gateways, the growing difficulty of operation and maintenance, and constantly changing physical resource allocation strategies, a multi-level network software defined gateway forwarding system based on Multus is proposed and implemented. Building on Kubernetes' centralized management and control of the service cluster, the system dynamically calls different types of CNI plugins for interface configuration. It supports a multi-level network spanning kernel mode and user mode, separates the control plane and data plane of the forwarding system, and enhances the controllability of system services. In addition, a load balancing module based on a user mode protocol stack is introduced to provide dynamic scaling, smooth upgrade, cluster monitoring, and fault migration without affecting the forwarding performance of the system.

1 Introduction

With the continued build-out of the Internet of Things (IoT), terminal equipment is growing in scale, structural complexity, and diversity of type, and security services face many new problems [1]. First, the number of IoT terminals is increasing exponentially, so the data forwarding performance required of the border security gateway keeps rising. The equipment cluster must be continuously expanded and upgraded, which makes operation and maintenance increasingly difficult. Second, as the number of security services grows, different types of services place different demands on resources, so the resource allocation strategy changes continuously. The original gateway devices of different types cannot adapt to these dynamic changes, leaving some services short of resources while other services hold large amounts of idle resources. Limited physical resources need to be allocated more effectively and reasonably.

The development of Docker technology [2] set off a new wave of change in cloud platform technology, enabling applications to be quickly packaged and seamlessly migrated across different physical devices [3]. Releasing an application no longer involves numerous environmental restrictions and dependencies; it is reduced to a single image that can be used without modification on different types of physical devices. However, a container is only a virtualization technology, and simply installing and deploying containers is far from sufficient for direct use. Tools are also needed to orchestrate the applications and containers across many nodes.

Kubernetes [4], a container cluster management platform based on Docker, has developed rapidly in recent years. It is an open source system for the automatic deployment, scaling, and management of containerized applications, and it greatly simplifies container cluster creation, integration, deployment, operation, and maintenance [5]. When building the container cluster network, Kubernetes realizes interworking between container networks through the container network interface (CNI) [6], so that different container platforms can call different network components through the same interface. CNI connects two components: the container management system (i.e., Kubernetes) and the network plugins (commonly Flannel [7] or Calico [8]). The specific network functions are implemented by the plugins. A CNI plugin usually creates a container network namespace, places a network interface into the corresponding network namespace, and assigns an IP address to that interface [9].
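The division of labor between the container management system and a plugin can be illustrated with a minimal sketch. Under the CNI specification, the runtime passes parameters such as `CNI_COMMAND`, `CNI_NETNS`, and `CNI_IFNAME` as environment variables and the network configuration as JSON on standard input, and the plugin replies with a JSON result. The function name `handle_cni_add`, the example values, and the fixed IP address below are assumptions made for illustration; a real plugin would also create the interface and move it into the namespace, which this sketch omits.

```python
import json


def handle_cni_add(env, stdin_config, assigned_ip):
    """Build the JSON result a plugin would return for an ADD request.

    Hypothetical helper: it only assembles the reply. Creating the
    interface and placing it in the namespace named by CNI_NETNS is
    deliberately left out of this sketch.
    """
    if env.get("CNI_COMMAND") != "ADD":
        raise ValueError("this sketch only handles ADD")
    conf = json.loads(stdin_config)
    return {
        "cniVersion": conf["cniVersion"],
        # The interface the runtime asked for, and the namespace it lives in.
        "interfaces": [{"name": env["CNI_IFNAME"], "sandbox": env["CNI_NETNS"]}],
        # "interface": 0 refers to the first entry of "interfaces".
        "ips": [{"address": assigned_ip, "interface": 0}],
    }


# Illustrative values only; none of these come from the paper.
example_env = {
    "CNI_COMMAND": "ADD",
    "CNI_CONTAINERID": "example-container",
    "CNI_NETNS": "/var/run/netns/example",
    "CNI_IFNAME": "eth0",
}
example_conf = json.dumps(
    {"cniVersion": "1.0.0", "name": "example-net", "type": "example"}
)

result = handle_cni_add(example_env, example_conf, "10.244.1.5/24")
print(json.dumps(result, indent=2))
```

The point of the sketch is that the runtime never needs to know how the plugin wires the network; it only supplies the namespace and interface name and consumes the returned addresses.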

Because a gateway forwarding system handles a large volume of packet forwarding, its underlying logic is mostly implemented on the Intel DPDK (Data Plane Development Kit) [10] forwarding driver. A DPDK application runs in user space and uses its own data plane libraries to send and receive packets, bypassing packet processing in the Linux kernel protocol stack. It obtains high packet processing and forwarding capability at the expense of generality. Consequently, the choice of CNI plugin for the virtualized deployment of gateway forwarding applications is highly specific. The current mainstream CNI plugins are deployed uniformly by the Kubernetes management plane, and they manage network interfaces through the Linux kernel protocol stack, which is not suitable for gateway applications driven by DPDK forwarding. In addition, the software defined gateway forwarding system is composed of a data plane and a control plane. The data plane parses and forwards packets on the DPDK forwarding driver and is performance sensitive. The control plane receives control messages and configures the network system and the various protocols. Because control plane messages carry little data, they can use the Linux kernel protocol stack during cluster deployment, which offers greater generality. In summary, two problems must be solved before such a system can support virtualized cluster deployment: calling different CNI plugins to configure network interfaces according to the usage scenario, and developing a CNI plugin based on the DPDK forwarding driver for the corresponding DPDK forwarding interfaces.

In this paper, a multi-level network software defined gateway forwarding system based on Multus is proposed and implemented, together with a CNI plugin and a load balancing module based on DPDK network interfaces, so that the performance of DPDK-based applications is not affected. For the control plane interface, which uses the kernel protocol stack to communicate with Kubernetes, this paper constructs a multi-level network based on Multus that dynamically calls different types of CNI plugins for interface configuration. The result is a cluster deployment scheme compatible with the Kubernetes kernel protocol stack that enhances the controllability of system services and provides dynamic scaling, smooth upgrade, cluster monitoring, and fault migration.

2 Design of Multi-level Network Gateway Forwarding System Based on Multus

With the development of network function virtualization (NFV) technology, virtual network devices based on x86 and other general-purpose hardware are widely deployed in data center networks. These devices carry out in software many high-speed network functions (including tunnel gateways, switches, firewalls, and load balancers) and can run multiple network services concurrently to meet users' diverse, complex, and customized business needs. OVS (Open vSwitch) [11] and VPP (vector packet processor) [12] are two virtual network devices widely used in industry.

OVS is an open multi-layer virtual switch that supports the automatic deployment of large-scale networks through open APIs. However, its flow table rules are complex to define and can be changed only by modifying its core software code, and its packet processing performance is not as good as that of traditional switches. VPP is an efficient packet processing architecture, and packet processing logic developed on it can run on a general-purpose CPU. VPP builds on the DPDK user space forwarding driver and adopts vector packet processing, which greatly reduces the overhead of processing packets on the data plane, so its overall performance is better than that of OVS. Therefore, we choose VPP as the packet sending and receiving framework for the multi-level network software defined gateway forwarding system proposed in this paper.

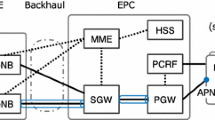

The overall architecture of the system is shown in Fig. 1. Configuration management is divided into the management of the various gateway services and the management of container resources. Gateway service management mainly includes business configuration management, policy management, remote debugging management, and log audit management. The business developer is responsible for packaging the management process into the container image of the service, so that when the service starts it can communicate with the master node to complete its business-related configuration. Container resource management concerns cluster deployment and mainly includes deployment cluster management, resource scheduling management, service scheduling management, and operation monitoring management. This part of management tracks the operating status of the service cluster, is the basis for functions such as dynamic scaling, smooth upgrade, cluster monitoring, and fault migration, and is handled by the Kubernetes cluster management framework. Each security service process is packaged as a service image and loaded onto host machines managed by Kubernetes, where Kubernetes schedules it. To create or expand a certain type of service, several service containers for that service are created on hosts of the existing cluster. Similarly, when a service has surplus resources and needs to shrink, only a few service containers need to be destroyed. Compared with the traditional approach of purchasing customized physical equipment at a high price and manually joining it to the network cluster, this greatly reduces cost and simplifies operation. In the traditional Kubernetes solution, the kube-proxy component provides load balancing for all service pods to select traffic destinations dynamically. In addition, this system needs a load balancing component based on the DPDK user mode protocol stack, which is introduced in Sect. 3.1.

The last module is the hardware network card driver responsible for sending and receiving data packets. By creating memif interfaces, the DPDK-based user space forwarding driver at the bottom of the VPP forwarding framework avoids the two data copies between user space and kernel space required by the traditional protocol stack, as shown in Fig. 2. Therefore, the network card that forwards service data plane traffic must load the DPDK forwarding driver, while the network card that forwards control plane messages can communicate through the kernel protocol stack. In the overall architecture shown in Fig. 1, the data plane and control plane network cards adopt a multi-level network management scheme based on the Multus CNI plugin, which meets both the communication requirements of Kubernetes cluster management and the high-speed forwarding requirements of the gateway services' data packets.

3 Design of Core Components of Software Defined Gateway Forwarding System

3.1 Design and Implementation of Load Balancing Module

This paper proposes DPDK-lb, a user mode load balancer based on DPDK, which uses the DPDK user mode forwarding driver to take over the protocol stack and thereby process data messages more efficiently. The overall architecture of DPDK-lb is shown in Fig. 3. DPDK-lb takes over the network card, bypasses the kernel protocol stack, parses messages with a user mode IP protocol stack, and supports common network functions such as IPv4, routing, ARP, and ICMP. The control plane programs dpip and ipadm are provided to configure the load balancing strategy of DPDK-lb. To optimize performance, DPDK-lb also supports binding processing to CPU cores, processes key data without locks, avoids the overhead of context switching, and supports batch processing of data messages in the TX/RX queues.

3.2 Design and Implementation of Multi-level CNI Plugin

The VPP-based gateway forwarding service handles both control messages and data messages. Data messages run in the user mode protocol stack, whereas all of the CNI plugins mentioned above rely on the kernel protocol stack to parse packets, so they cannot meet the networking requirements of the system's data plane. Control messages are mainly used to update the service flow table and to carry the distributed configuration management of the Kubernetes cluster, which requires cross-host communication between pods in different network segments. For the control plane, therefore, a mainstream CNI plugin that supports overlay mode can be chosen. In a software defined gateway forwarding system that separates the control plane from the data plane, the two planes have different responsibilities and different selection criteria for network plugins, so it is difficult to support the system's network communication with a single CNI plugin. To meet the need to create multiple network interfaces with multiple CNI plugins, Intel implemented a CNI plugin named Multus [13]. Multus can add multiple interfaces to a pod, allowing the pod to connect to multiple networks, and a different CNI plugin can be specified for each interface, which separates the control of network functions, as shown in Fig. 4.

Without Multus, a Kubernetes container cluster deployment can create only a single network interface, eth0, and calls the specified CNI plugin to complete interface creation, network setup, and so on. With Multus, eth0 is created for the pod's control plane to communicate with the Kubernetes master node, while the data plane network interfaces net0 and net1 are created and configured by the userspace CNI plugin, so that CNI plugins are used in cascade across levels. Kubernetes calls Multus for interface management, and Multus calls the self-developed userspace CNI plugin to realize data plane message forwarding. This not only provides the separation of control plane and data plane required by the software defined gateway system, but also ensures that, during data plane forwarding, the VPP-based DPDK forwarding driver forwards data messages without copying them between operating system kernel space and user space. In summary, the Multus multi-level CNI plugin scheme is well suited to the software defined gateway forwarding system.
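The way a pod requests additional interfaces through Multus can be sketched as follows. Multus reads the pod annotation `k8s.v1.cni.cncf.io/networks`, which names `NetworkAttachmentDefinition` objects, and delegates each named attachment to the CNI plugin given in that object's configuration. The helper functions, the attachment name `userspace-memif`, the image name, and the contents of the userspace plugin configuration are hypothetical placeholders; the paper does not publish the configuration schema of its self-developed plugin.

```python
import json

# Annotation key that Multus inspects on each pod.
MULTUS_NETWORKS_ANNOTATION = "k8s.v1.cni.cncf.io/networks"


def network_attachment(name, cni_config):
    """Wrap a CNI configuration in a NetworkAttachmentDefinition manifest."""
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name},
        # Multus expects the delegate's CNI configuration as a JSON string.
        "spec": {"config": json.dumps(cni_config)},
    }


def gateway_pod(name, image, data_plane_networks):
    """Pod manifest whose extra interfaces are requested through Multus.

    eth0 still comes from the cluster's default CNI plugin; each entry in
    data_plane_networks adds one more interface to the pod.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            "annotations": {
                MULTUS_NETWORKS_ANNOTATION: ",".join(data_plane_networks)
            },
        },
        "spec": {"containers": [{"name": name, "image": image}]},
    }


# Hypothetical placeholder configuration for the data plane plugin.
userspace_conf = {"cniVersion": "0.3.1", "name": "userspace-memif", "type": "userspace"}

attachment = network_attachment("userspace-memif", userspace_conf)
# Naming the same attachment twice requests two data plane interfaces.
pod = gateway_pod(
    "gateway-service",
    "example/gateway:latest",
    ["userspace-memif", "userspace-memif"],
)

print(json.dumps(attachment, indent=2))
print(json.dumps(pod, indent=2))
```

Keeping the data plane request in an annotation means the control plane interface needs no special handling: a pod without the annotation behaves exactly like a pod in a cluster without Multus.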

3.3 Design and Implementation of Userspace CNI Plugin

To register a new service pod with the load balancer, using Flannel to complete the control plane network configuration is not enough; the userspace CNI plugin mentioned above is also required. The plugin performs two types of work. First, it creates several service interfaces in the local pod, assigns an IP address to each created interface, and connects them to the specific network on the host so that data plane traffic can reach the pod. Second, because the kernel protocol stack is not used, after an interface is created in the pod the plugin must configure it in the pod's VPP forwarding framework (for example, completing memif port pairing and assigning the memif port address) and connect the newly created interface to the current data plane container network. Memif interfaces created in VPP appear in pairs and communicate through shared hugepage memory. Therefore, the memif interfaces in the pod have two corresponding virtual memif interfaces on the host's VPP. These two pairs of memif interfaces carry data plane traffic from the host to the service pod.

The traffic of the system cluster is shown in Fig. 5. Taking a working node as an example, the service pod creates three network interfaces. The eth0 interface is used for control plane communication with the master node and is created and configured by Flannel; net1 and net2 are the two data plane network interfaces required by the service and are created and configured by the userspace network plugin. All data packets (red in the figure) are taken over by the user space protocol stack, which improves the overall data message processing capacity of the system. Flannel provides network services for the control messages related to configuration and cluster management (blue in the figure), which enables dynamic scaling, smooth upgrade, cluster monitoring, and fault migration.

4 Experimental Scheme and Results

In this paper, we limit the resources of a single service pod to 1 GB of hugepage memory and conduct three groups of comparative experiments. First, we test whether the service capability of a single pod in the software defined gateway system differs from that of a traditional gateway device when both are limited to 1 GB of available memory. Second, we compare a single physical device running the maximum number of pods (16) as a software defined gateway with the same device running the service in the traditional way, to judge whether introducing the Kubernetes cluster management scheme affects the performance of the original system under the same hardware conditions. Finally, we remove the physical resource limits on the cluster and verify the overall performance and feasibility of the system. The experiments simulate connection requests from real customers with a steadily increasing number of access users. The overall resource consumption of the system is observed through the Prometheus monitoring component in the Kubernetes cluster. The schemes of the three experiments are compared in Table 1, and the results are shown in Fig. 6.

The experimental results are shown in Fig. 6. In the first group of experiments, 1 GB of memory can serve about 7500 client terminals, and connection failures begin to occur when the number of clients reaches 7000. The results of the scheme in this paper are almost the same as those of the traditional equipment, so providing services through virtualization has no noticeable impact on the performance of the original service. In the second group of experiments, both the scheme in this paper and the traditional single device begin to fail when the number of users approaches 110000, and at close to 120000 users neither can accept more users because of memory constraints. The overall performance of this scheme is not inferior to, and is even slightly better than, that of the original single device. In the third group of experiments, when the number of users is close to 120000, the memory occupancy of each device in the cluster is about 50%: eight pods are scheduled on each of the two nodes, and each pod serves nearly 7500 users. At this point, nearly 50% of each physical node's resources remain available for deploying other services. As the number of clients continues to increase, Kubernetes continues to allocate new resources evenly across the two nodes and creates new pods to serve more users, until the physical nodes approach saturation at close to 240000 users. The traditional single physical device cannot serve that many users.
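The figures reported above are mutually consistent, which a short calculation makes explicit. The sketch below assumes that capacity scales with the number of pods and that each pod serves the approximate per-pod figure from the first experiment; the function names are introduced here for illustration and do not come from the paper.

```python
import math

USERS_PER_POD = 7500     # approximate capacity of one 1 GB pod (first experiment)
MAX_PODS_PER_NODE = 16   # maximum pods on one physical device (second experiment)


def pods_needed(users):
    """Smallest number of pods that can serve the given number of users."""
    return math.ceil(users / USERS_PER_POD)


def cluster_capacity(nodes):
    """Users a cluster can serve when every node runs its maximum pods."""
    return nodes * MAX_PODS_PER_NODE * USERS_PER_POD


def node_utilization(users, nodes):
    """Fraction of each node's pod slots in use, assuming even scheduling."""
    return pods_needed(users) / nodes / MAX_PODS_PER_NODE


print(pods_needed(120_000))           # 16 pods, i.e. 8 on each of two nodes
print(cluster_capacity(1))            # 120000 users on a single device
print(cluster_capacity(2))            # 240000 users on two nodes
print(node_utilization(120_000, 2))   # 0.5, matching the ~50% memory occupancy
```

Under these assumptions, one device saturates at 16 × 7500 = 120000 users, two nodes at 240000, and 120000 users spread over two nodes occupy half of each node.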

It can be seen that as the number of physical machines in the cluster increases, the service capacity of the whole system increases linearly. When the number of users decreases for a period of time, physical machine resources are released and can be dynamically reallocated to other services. Therefore, the service provider only needs to ensure that the total amount of equipment is sufficient for all services combined. Since the peak usage of each service differs, Kubernetes dynamically adjusts the proportion of physical resources occupied by each service.

5 Conclusion

In this paper, a multi-level network software defined gateway forwarding system based on Multus is proposed and implemented, together with a CNI plugin and a load balancing module based on DPDK network interfaces. The gateway service container is built on the VPP packet processing framework and can create DPDK interfaces associated with the host interfaces, which ensures that the packet processing efficiency of DPDK-based data forwarding applications is not affected. For the control plane interface of the gateway forwarding system, which uses the kernel protocol stack to communicate with Kubernetes, this paper constructs a multi-level network based on Multus that dynamically calls different types of CNI plugins for interface configuration according to the usage scenario and attribute configuration of the relevant interfaces. This realizes a cluster deployment scheme compatible with the Kubernetes kernel protocol stack, enhances the controllability of system services, and provides dynamic scaling, smooth upgrade, cluster monitoring, and fault migration.

This paper was partly supported by the science and technology project of State Grid Corporation of China: “Research on The Security Protection Technology for Internal and External Boundary of State Grid information network Based on Software Defined Security” (No. 5700-202058191A-0–0-00).

References

Huang, Y., Dong, Z., Meng, F.: Research on security risks and countermeasures in the development of internet of things. Inf. Secur. Commu. Priva. 000(005), 78–84 (2020)

Anderson, C.: Docker. IEEE Softw. 32(3), 102–103 (2015)

Yu, Y., Li, B., Liu, S.: Research on the portability of docker. Comp. Eng. Softw. (07), 57–60 (2015)

Li, Z., et al.: Performance overhead comparison between hypervisor and container based virtualization. In: 2017 IEEE 31st International Conference on Advanced Information Networking and Applications (AINA). IEEE (2017). https://doi.org/10.1109/AINA.2017.79

Networking Analysis and Performance Comparison of Kubernetes CNI Plugins: Advances in Computer, Communication and Computational Sciences. In: Proceedings of IC4S 2019 (2020). https://doi.org/10.1007/978-981-15-4409-5_9

https://docs.openshift.com/container-platform/3.4/architecture/additional_concepts/flannel.html

Sriplakich, P., Waignier, G., Meur, A.: CALICO documentation, pp. 1116–1121 (2008)

Kapocius, N.: Performance studies of kubernetes network solutions. In: 2020 IEEE Open Conference of Electrical, Electronic and Information Sciences (eStream). IEEE (2020). https://doi.org/10.1109/eStream50540.2020.9108894

Pfaff, B., et al.: The design and implementation of open vswitch. In: 12th USENIX Symposium on Networked Systems Design and Implementation. USENIX Association, Berkeley, pp. 117–130 (2015)

Barach, D., et al.: High-speed software data plane via vectorized packet processing. IEEE Commun. Mag. 56(12), 97–103 (2018). https://doi.org/10.1109/MCOM.2018.1800069

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this paper

Cite this paper

Wang, Z., Ji, Y., Zheng, W., Li, M. (2022). Multi-level Network Software Defined Gateway Forwarding System Based on Multus. In: Qian, Z., Jabbar, M., Li, X. (eds) Proceeding of 2021 International Conference on Wireless Communications, Networking and Applications. WCNA 2021. Lecture Notes in Electrical Engineering. Springer, Singapore. https://doi.org/10.1007/978-981-19-2456-9_18

DOI: https://doi.org/10.1007/978-981-19-2456-9_18

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-2455-2

Online ISBN: 978-981-19-2456-9

eBook Packages: Engineering, Engineering (R0)