Abstract

Errors are the enemy of classification systems, so minimising the total probability of error is an understandable objective in statistical machine learning classifiers. However, for open-world application in trusted autonomous systems, not all errors are equal in terms of their consequences. The ability for users and designers to define an objective function that distributes errors according to preference criteria might therefore elevate trust. Previous approaches in cost-sensitive classification have focussed on dealing with distribution imbalances by cost weighting the probability of classification. A novel alternative is proposed that learns a ‘confusion objective’ and is suitable for integration with modular Deep Network architectures. The approach demonstrates an ability to control the error distribution when training supervised networks via back-propagation, at the cost of an increase in total errors. Theory is developed for the new confusion objective function and compared with cross-entropy and squared loss objectives. The capacity for error shaping is demonstrated via a range of empirical experiments using a shallow and a deep network. The classification of handwritten digits from up to three independent databases demonstrates that the desired error performance is maintained across unforeseen data distributions. Some significant and unique forms of error control are demonstrated and their limitations investigated.

1 Introduction

Automated systems typically operate on their own only if they are simple, or within a closed and managed environment. In situations where things can go wrong with serious consequences, control is typically introduced through a human-in-the-loop. Trusted Autonomous Systems (TAS) differ in that they will be required to operate in open and unmanaged environments, including when human guidance may not be available. To achieve machine perception in open environments, machine learning classifiers are likely to be critical components in TAS. However, for such systems to be worthy of trust, users and designers will want to ensure that classification errors with high-consequence impact can be managed; in effect, to introduce a bias in the machine analogous to a learned human bias.

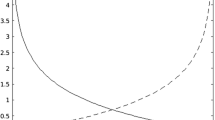

Much recent statistical machine learning [11] involves learning weights in complex, modular non-linear artificial neural networks. These weights are derived from the gradient of the objective (or loss) function. This gradient is back-propagated (Rumelhart et al. 1986) throughout the whole system. In supervised classification, the objective function typically uses some relationship between the machine’s output estimated class and the training set true class. The squared error and cross entropy are popular example functions. The choice of objective function instructs the machine by reward for a correct estimate, and lack thereof for incorrect estimates. These objective functions reward all correct choices equally and punish all incorrect choices equally. The choice of objective function affects the error distribution, and in high-consequence situations some types of errors may be undesirable. When errors are made, a user wants them to be of least consequence: in effect, the lesser of evils. There may be more at stake than simply choosing the ‘best’ overall performance.

Consider, as an illustration, the following humorous exchange from the 20th Century Fox film ‘Master and Commander: The Far Side of the World’, when in the officers’ mess Captain Aubrey points to a plate of bread and asks Dr Maturin,

Do you see those two weevils doctor?

I do.

Which would you choose?

(sighs, annoyed) Neither; there is not a scrap of difference between them. They are the same species of Curculio.

If you had to choose. If you were forced to make a choice. If there was no other response...

(Exasperated) Well then if you are going to push me... (the doctor studies the weevils briefly) ...I would choose the right hand weevil; it has... significant advantage in both length and breadth.

(the captain thumps his fist on the table) There, I have you! You’re completely dished! Do you not know that in the service... one must always choose the lesser of two weevils.

(the officers burst out in laughter)

A deep network incorrectly classifying a pedestrian as a rubbish bin may have dire consequences in a driverless vehicle accident, yet incorrectly classifying a post box as a rubbish bin may be of lesser concern. Further, the nature of those consequences will be application specific and thus empirically driven. In mission and safety critical applications where the consequences of error are high, the semantic coding of errors so that a machine can learn more and less important costs is an important problem. Ideally, the designer and/or user of a system would be able to specify a profile for the acceptability of error consequences for the application at hand; this is critical to trust justifications. Errors will be a reality for deployed network solutions. Despite the performance claims of Deep Networks and the rush to multi-layer engineering with GPU implementations, the vast majority of techniques remain brittle. Small changes can result in magnified errors, undermining trustworthiness in the accuracy and competence of such systems. This brittleness is evident when a network trained on given data sets is exposed to an independently derived dataset. Seewald [12] illustrates an order-of-magnitude degradation in error when a classifier trained on the MNIST and USPS datasets is tested on the independently derived DIGITS handwritten digits dataset. Further, it appears that adversarial examples may be created that have deleterious error effects on practically all forms of network architecture, both deep and shallow [6]. Although adversarial forms appear to be rare in naturally occurring data, they can be easily generated by an adversary in ways that are indistinguishable to humans [15], which may undermine trustworthiness in the integrity of the system.

The literature on ‘cost-sensitive classification’ (CSS) is concerned with class distribution imbalance, typical of situations when the training data is limited or not necessarily statistically representative, and with classifying rare and important classes (e.g. medical diagnosis of rare diseases). Despite this specific focus, the techniques may be applicable to objective functions for more general deliberate error shaping, as they are generally formulated in terms of a cost C of confusing the actual class i with estimated class j, weighted by the probability of class j given training example x.
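One common way to write such a cost-weighted formulation is sketched below, following the expected-cost form used in the cost-sensitive learning literature [5]. The symbol \(L\) and the exact indexing are assumptions made here for illustration, not notation taken from this chapter.

```latex
% C(i,j): cost of confusing actual class i with estimated class j
% P(j | x): probability assigned to class j for example x
L(x, i) = \sum_{j} P(j \mid x)\, C(i, j)
```

Under this reading, \(L(x,i)\) is the expected cost incurred on an example x of actual class i, and choosing C to be zero on the diagonal and one elsewhere recovers the ordinary probability of error.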

Approaches to CSS include reweighting (stratifying) available training data so that more costly errors will incur a larger overall cost [5], cost-sensitive boosting [13], [4] that combines multiple weak or diverse learners, or changing the learning algorithm. Those that alter the learning algorithm appear to focus on either decision tree classifiers, where cost sensitivity is achieved by pruning [10], or Support Vector Machines (SVM), which all use a hinge loss approximation of the cost-sensitive loss function [9]. A cost-sensitive learning algorithm applicable as an augmentation to deep networks appeared only recently in the literature [7] and, as with all of the CSS literature, it does not consider the broader problem of shaping the entire error distribution, but only aims to make up for imbalance in the input class distribution.

The feasibility of a simple augmentation to networks to learn user-defined error profiles is examined next on the basis of a new proposed maximum likelihood objective function. The new technique is developed and explained in the context of binomial and multinomial regression. An implementation using Google’s TensorFlow is then studied. A range of experiments are conducted with several independent data sets to ascertain the degree of control over error distributions where the prior distribution is unknown, on a shallow and a deep network architecture and findings are discussed.

2 Foundations

A derivation of maximum likelihood classifiers to match a target error profile follows. Note that, in this preliminary study, there is not yet any established theory as to how the overlaps in classification can be traded off, so the examination will necessarily be empirical.

In supervised learning, the Error Matrix, or Confusion Matrix, indicates the correct and error classifications across categories from either training or test data. Each row represents the number of instances in an actual (true) class and each column represents the number of instances in an estimated class. The term confusion is used because this representation quickly shows how often one class is confused with another. Type I errors refer to a true class X being incorrectly classified as a different class Y and are indicated by the non-diagonal values in the rows of the confusion matrix. Type II errors refer to a classified class being X when the true class was Y, and are indicated by the non-diagonal column values of the confusion matrix.
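The row and column conventions above can be made concrete with a minimal sketch in plain Python. The function names and the tiny three-class label lists are hypothetical and serve only to illustrate how the row (type I) and column (type II) error counts for a class are read from the matrix.

```python
def confusion_matrix(true_labels, estimated_labels, num_classes):
    """Rows index the actual class, columns index the estimated class."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for actual, estimated in zip(true_labels, estimated_labels):
        matrix[actual][estimated] += 1
    return matrix


def row_errors(matrix, k):
    """Type I errors for class k: off-diagonal entries in row k."""
    return sum(count for j, count in enumerate(matrix[k]) if j != k)


def column_errors(matrix, k):
    """Type II errors for class k: off-diagonal entries in column k."""
    return sum(row[k] for i, row in enumerate(matrix) if i != k)


# Made-up labels for illustration only.
true_labels = [0, 0, 1, 1, 1, 2, 2, 2, 2]
estimated_labels = [0, 1, 1, 1, 2, 2, 2, 0, 1]
cm = confusion_matrix(true_labels, estimated_labels, num_classes=3)
print(cm, row_errors(cm, 1), column_errors(cm, 1))
```

In this toy case class 1 is missed once (row error) but is wrongly reported twice (column errors), which is the kind of asymmetry the later error-trading tests manipulate.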

Logistic Regression for the binomial case (two class) and multinomial [3] have been well studied in the literature. However, in order for the reader to comprehend the gradient function for the pairing of the multi-class logistic function also termed ‘softmax’ [14] function with alternate objective functions, it is necessary to derive these from first principles. Multinomial logistic regression is also extended to the Gaussian case to gain insight and contrast the form of the gradient function with our proposed objective. The following forms a foundation for the proposed technique for multinomial softmax regression on the confusion matrix to follow in the next section.

2.1 Binomial Logistic Regression

Consider a network with input \(\mathbf {x}_n\) for the nth data presentation, weight vector \(\mathbf {w}\), and bias vector \(\mathbf {b}\).

Consider a simple binary decision classifier, with a logistic function non-linearity,

In training the network with N examples, the probability of the true class t given the estimated class y output for training case n is,

To maximise this probability, it is equivalent to minimise the negative log likelihood termed the loss function,

When the gradient of the loss function is zero, this corresponds to the minimum.

Now consider the chain rule,

Differentiating (13.2),

Thus from (13.6),

The logistic function matches the negative log likelihood function to achieve a cancellation in terms and a very simple expression for the gradient and thus the minimum point, which occurs precisely when \(y_n=t_n\).
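The steps of this subsection can be sketched compactly as follows. This is a standard reconstruction rather than a reproduction of the chapter's numbered equations; the pre-activation symbol \(z_n\) is introduced here for illustration, and the bias is written as a scalar b for this single-output case.

```latex
\begin{aligned}
z_n &= \mathbf{w}^{\top}\mathbf{x}_n + b, \qquad
y_n = \sigma(z_n) = \frac{1}{1 + e^{-z_n}} \\
p(\mathbf{t} \mid \mathbf{y}) &= \prod_{n=1}^{N} y_n^{\,t_n}\,(1 - y_n)^{1 - t_n} \\
E &= -\sum_{n=1}^{N} \bigl[\, t_n \ln y_n + (1 - t_n)\ln(1 - y_n) \bigr] \\
\frac{\partial E}{\partial \mathbf{w}} &= \sum_{n=1}^{N}
  \frac{\partial E}{\partial y_n}\,
  \frac{\partial y_n}{\partial z_n}\,
  \frac{\partial z_n}{\partial \mathbf{w}},
\qquad
\frac{\partial y_n}{\partial z_n} = y_n (1 - y_n) \\
\frac{\partial E}{\partial \mathbf{w}} &= \sum_{n=1}^{N}
  \frac{y_n - t_n}{y_n (1 - y_n)}\; y_n (1 - y_n)\; \mathbf{x}_n
  \;=\; \sum_{n=1}^{N} (y_n - t_n)\,\mathbf{x}_n
\end{aligned}
```

The factor \(y_n(1-y_n)\) from the logistic derivative cancels the denominator from the log likelihood, which is the cancellation referred to above.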

2.2 Multinomial Logistic Regression

Consider the network as per (13.1), independently duplicated in structure for each class j,

Consider a multi-class decision classifier, with the generalised logistic or ‘softmax’ function non-linearity, for data presentation n and class j as follows,

Softmax is thus the logistic function extended to one output for each class. This generalises the previous binary case where \(K=2\). Note that each class output is normalised with respect to all other classes, so the outputs are not independent.

As before, in training the network with N examples, the probability of the true class t (a ‘one-hot’ vector) given the estimated output y for training case n and class j might be described with a multinomial probability mass function, normalised such that \(\sum _{j} t_{j}=1\)

To maximise this likelihood, it is equivalent to minimise the negative log likelihood,

This negative log likelihood function is often termed cross entropy and can be derived from the Kullback-Leibler divergence. The minimum corresponds to the point where the gradient of the negative log likelihood function is zero, \({{\partial {E}} \over {\partial {\mathbf {w}}}} = 0\). This is pursued in stages,

Recalling the softmax function normalises with regard to other classes, means that the derivative of the softmax has two cases,

The chain rule must account for K class paths; however, the expression may be simplified according to \(j=k\) and \(j \ne k\) parts only,

Thus,

This is the same form as for the binary case. As Eq. (13.16) makes clear from the cancellation of terms, the gradient of the softmax function perfectly matches the gradient of the log likelihood objective function, conspiring so as to form a significant reduction. Only one element of the training and the output is used, representing the single true (kth) condition.
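The cancellation can be checked numerically. The sketch below, in plain Python, compares the closed-form gradient with respect to the pre-softmax activations (output minus target, a standard result) against a finite-difference estimate. The logit values are arbitrary and the function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of pre-activations."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Negative log likelihood of a one-hot target under softmax outputs."""
    return -sum(t * math.log(y) for t, y in zip(target, softmax(logits)))


def analytic_gradient(logits, target):
    """Gradient with respect to each pre-activation after cancellation: y_j - t_j."""
    return [y - t for y, t in zip(softmax(logits), target)]


def numeric_gradient(logits, target, step=1e-6):
    """Central finite-difference estimate of the same gradient."""
    gradient = []
    for j in range(len(logits)):
        upper = list(logits)
        lower = list(logits)
        upper[j] += step
        lower[j] -= step
        difference = cross_entropy(upper, target) - cross_entropy(lower, target)
        gradient.append(difference / (2 * step))
    return gradient


logits = [0.5, -1.2, 2.0]  # arbitrary illustrative values
target = [0.0, 0.0, 1.0]   # one-hot: the true class is k = 2
analytic = analytic_gradient(logits, target)
numeric = numeric_gradient(logits, target)
max_gap = max(abs(a - n) for a, n in zip(analytic, numeric))
print(max_gap)
```

The two gradients agree to within finite-difference error, and the true-class component is the only negative one, so a descent step raises the true-class output and lowers the others.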

Having established this foundation, alternative objective functions are considered next.

2.3 Multinomial Softmax Regression for Gaussian Case

Consider the case where y are Gaussian distributed. Such a distribution may not be an unreasonable approximation in the case of the limit for very large training sets. This will allow investigation of the less-than-perfect match of softmax function with this new objective function.

To maximise this likelihood, it is equivalent to minimise the negative log likelihood,

Instead of the logarithmic relationship to the outputs y, previously, there is now a square law relationship.

As previously, the minimum corresponds to the point where the gradient of the negative log likelihood function is zero, \({{\partial {E}} \over {\partial {\mathbf {w}}}} = 0 \). This is pursued in stages,

Using Eqs. (13.14) and (13.15) as before,

First, note the sign of the gradient is reversed with respect to (13.19). Second, as the minimum is achieved only when the gradient is zero, this occurs iff \(t_{nj}=y_{nj},\;\forall j\). This is notably different to the previous form (13.19), which showed no dependence on any estimates of y and training values t other than the \(k^{th}\). Indeed, even if \(t_{nk}=y_{nk}\) a gradient remainder is left over.

Notably, if a one-hot vector is used for training (which is usual for multinomial classifiers), then \(t_{nj}=0\) if \(j\ne {k}\) so,

This clearly shows that the incorrect estimate outputs from the right-hand term in (13.25) introduce an irreducible offset into the gradient. However, if the classifier is working well then \(y_{nj}^2 \ll 1\) and thus this term may be small.
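A sketch of how such a remainder arises is given below. It assumes a per-example squared loss \(E_n = \tfrac{1}{2}\sum_j (t_{nj}-y_{nj})^2\) with the Gaussian variance constants dropped, and takes the gradient with respect to a pre-softmax activation \(z_{nk}\) (a symbol introduced here for illustration). The chapter's own equations may group terms or signs differently.

```latex
\begin{aligned}
\frac{\partial y_{nj}}{\partial z_{nk}} &= y_{nj}\,(\delta_{jk} - y_{nk}) \\
\frac{\partial E_n}{\partial z_{nk}}
  &= \sum_{j=1}^{K} (y_{nj} - t_{nj})\, y_{nj}\,(\delta_{jk} - y_{nk}) \\
  &= y_{nk}\Bigl[(y_{nk} - t_{nk}) - \sum_{j=1}^{K} (y_{nj} - t_{nj})\, y_{nj}\Bigr] \\
\text{one-hot } (t_{nk}=1,\ t_{nj}=0,\ j \ne k): \quad
\frac{\partial E_n}{\partial z_{nk}}
  &= -\,y_{nk}\Bigl[(1 - y_{nk})^2 + \sum_{j \ne k} y_{nj}^2\Bigr]
\end{aligned}
```

Unlike the cross-entropy case, the softmax derivative does not cancel, so the squared outputs of the incorrect classes remain in the gradient and become negligible only when each \(y_{nj}^2\) for \(j \ne k\) is small.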

3 Multinomial Softmax Regression on Confusion

A proposal to effect control over the confusion matrix is examined. The confusion matrix summarises the overall distribution of examples from trained classes \(\mathbf {t}\) versus estimated classes \(\mathbf {y}\). Consider the potential to learn a user-defined target confusion matrix distribution \(\mathbf {u}\). Using Bayes’ theorem, this means maximising,

Noting the matrix product of \(\mathbf {t}\) and \(\mathbf {y}\) defines the confusion matrix for some representative data batch size N, writing the likelihood as,

Unlike previous formulations this likelihood directly mixes the estimator output and training classes within the argument of the logarithm. The negative log likelihood is thus,

In this form it resembles the multinomial regression objective, with the important distinction that the logarithm is taken over a training-weighted sum of estimated y values. The minimum corresponds to the point where the gradient of the negative log likelihood function is zero, \({{\partial {E}} \over {\partial {\mathbf {w}}}} = 0 \). As previously, this is pursued in stages,

Equations (13.14) and (13.15) similarly apply,

This does not reduce further. If \(t_{in}=0\) for all but the \(i=k\) training element (one hot training vector) then from (13.31),

The right hand term is zero iff \(y_{nk}=1\). This will only be the case if \(y_{nj}=0, \forall {j \ne k}\). The left hand term is also zero if the latter condition is true, so the left and right hand terms are coupled. An alternative condition that makes the left hand term equal to zero is \(u_{kj}=0, \forall {j \ne k}\), that is, all non-diagonals of the user-defined matrix are zero (i.e. u is the identity matrix). Classification performance cannot be expected to equal that of the optimum multinomial case except in this special case. Otherwise, as in the Gaussian case above, the gradient cannot reach zero. Thus any choice of user-defined error confusions will compromise overall performance. The remaining question is to what degree that performance will be compromised, and under what conditions that compromise might be acceptable.
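Read together, the description in this section suggests the following form for the confusion objective. This is a sketch under two assumptions: that the batch confusion matrix has entries \(C_{ij} = \sum_n t_{ni}\,y_{nj}\) (the symbol C and the index order are introduced here for illustration), and that the user-defined matrix u enters as multinomial exponents.

```latex
\begin{aligned}
C_{ij} &= \sum_{n=1}^{N} t_{ni}\, y_{nj} \\
p(\mathbf{u} \mid \mathbf{t}, \mathbf{y}) &\propto
  \prod_{i=1}^{K}\prod_{j=1}^{K} C_{ij}^{\,u_{ij}} \\
E &= -\sum_{i=1}^{K}\sum_{j=1}^{K} u_{ij}\,
  \ln\Bigl(\sum_{n=1}^{N} t_{ni}\, y_{nj}\Bigr)
\end{aligned}
```

When u is the identity matrix only the diagonal terms survive, giving \(E = -\sum_i \ln \sum_n t_{ni}\,y_{ni}\), which rewards correct classifications alone while still taking the logarithm of a batch sum rather than summing per-example logarithms.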

4 Implementation and Results

An implementation was chosen to make an empirical study of classifier performance. The MNIST digits database [8] was chosen because it requires a multinomial classifier with modest computational requirements. The error objective that a user or designer might want could take a large variety of forms, so instead of attempting some exhaustive approach, a few likely error-shaping scenarios were chosen. The plan for the empirical testing to follow is then:

- Simple Classifier Evaluation
  - ‘Baseline’ to establish whether the confusion objective performs as well as the standard classifier under conditions when a user does not care about the error distribution.
  - ‘Error trading’ to examine the capacity to trade error types I and II related to specific classification classes.
- Deep Network Classifier Evaluation
  - Repeat of ‘error trading’ used for the simple network to examine if a deeper classifier structure provides a greater capacity to trade error types I and II.
  - ‘Adversarial Errors’ examines the potential to thwart classification decisions by producing deliberate errors.

The first candidate was a simple regression network from the TensorFlow tutorial [1]. TensorFlow is an open source software library well suited to fast multidimensional data array (tensor) processing, and allows computation to be deployed on multiple CPUs or GPUs. The simple network uses 784 (\(28 \times 28\) pixels) inputs and n = 10 output nodes (corresponding to MNIST digit categories 0–9), each output employing a softmax non-linear function. As is typical with this dataset, 60,000 training images and 10,000 test images were used. A batch size of m = 200 was maintained throughout. Only the objective function and optimizer were modified, with the \(10 \times 10\) user-defined error distribution tensor u set according to the specific applied test. Given the batch of true labels \(\mathrm{y}\_\)

Using the Adaptive Gradient optimizer,

The following implements the extended square loss,

The following implements the extended cross-entropy loss,

Here, * represents element-wise multiplication of tensors.
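As a minimal sketch of how a confusion-based cross-entropy loss of this kind could be computed, the plain-Python example below forms a batch confusion matrix from one-hot labels and softmax outputs and weights its logarithm element-wise by u. It assumes the objective takes the form \(-\sum_{ij} u_{ij}\ln\sum_n t_{ni}\,y_{nj}\). The function names, the small `eps` guard and the toy batch are hypothetical, and this is not the chapter's TensorFlow code.

```python
import math


def soft_confusion(targets, outputs):
    """Batch confusion matrix: entry (i, j) sums t[n][i] * y[n][j] over the batch."""
    num_classes = len(targets[0])
    matrix = [[0.0] * num_classes for _ in range(num_classes)]
    for t_row, y_row in zip(targets, outputs):
        for i, t in enumerate(t_row):
            for j, y in enumerate(y_row):
                matrix[i][j] += t * y
    return matrix


def confusion_cross_entropy(user, targets, outputs, eps=1e-12):
    """Negative sum of u[i][j] * log(confusion[i][j]); eps (an assumption) guards log(0)."""
    confusion = soft_confusion(targets, outputs)
    return -sum(
        u * math.log(c + eps)
        for u_row, c_row in zip(user, confusion)
        for u, c in zip(u_row, c_row)
    )


# Toy two-class batch of three examples, for illustration only.
targets = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs = [[0.8, 0.2], [0.3, 0.7], [0.6, 0.4]]
identity = [[1.0, 0.0], [0.0, 1.0]]

confusion = soft_confusion(targets, outputs)
loss = confusion_cross_entropy(identity, targets, outputs)
print(confusion, loss)
```

Each row of the soft confusion matrix sums to the number of batch examples of that class, so the relative sizes of the entries of u determine which cells of the matrix the optimiser is rewarded for filling.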

Seewald [12] compared machine learning performance on three independently sourced handwritten digits databases: MNIST [8]; USPS, the US Postal Service ‘zip’ codes reduced to individual digits (see Note 1); and DIGITS, Seewald’s own collection from school students. The value of independent data rests in the fact that the off-line machine training may differ from the real world when a system is deployed. By studying the proposed error redistribution learner on independent data, its true robustness may be studied.

Table 13.1 shows the results of a baseline test comparing the overall performance of the simple classifier for the cross entropy, square loss and confusion objective functions detailed in previous sections. The confusion objective employed a \(10 \times 10\) identity matrix as the user objective. The network was trained on the MNIST 60,000 image set only, and tested on the MNIST 10,000 image test set, the USPS 2,007 image test set and the entire DIGITS set of 4,389 images. Table 13.1 demonstrates the expected degradation in performance for unseen data sets reported by Seewald, and that the performance of the novel objective function is comparable under baseline conditions.

The confusion matrix is shown in Table 13.2 with actual digit categories by row and estimated digit categories by column for MNIST training only on the 10,000 image test data set.

As illustrated in the row totals, not all digits are equally likely in the training data and, of course, not all were equally ‘easy’ for the network to recognise. Correct identification of a digit ‘0’ was highest at p = 0.985 and correct identification of a ‘5’ lowest at p = 0.874. The five most significant error confusions are (2,8), (9,4), (4,9), (5,8), (5,3).

4.1 Error Trading

In the following tests the capacity to trade errors related to a specific class (arbitrarily the digit 4) was examined. Table 13.3 summarises error trading tests with the simple network trained on MNIST data only and tested on the MNIST, USPS and DIGITS data sets. Test 1, the error benchmark, uses the standard cross entropy objective function. For each test data set, Table 13.1 shows the probability of correctly classifying a digit 4 given that a digit 4 was presented, the Type I errors in the row for the digit 4, and the Type II errors in the column for the digit 4. The results of test 1 clearly show that the nature of the errors across independent data sets is highly varied.

In test 2, the relative value of one diagonal cell in the user-defined matrix u with respect to all others was changed. This effect may be achieved by scaling one-hot vector unit values. The result of increasing the weight for digit 4 to be five times higher than any other individual digit is illustrated in Table 13.4. For the MNIST test, the average accuracy was 0.920 down slightly from 0.927. This reduced the errors across the row for the digit 4 from 56 to 23 errors, but in the column for digit 4, errors increased from 88 to 193. A reduction in type I errors is achieved at the expense of an increase in type II errors. This is shown in detail with the confusion matrix for the MNIST test data in Table 13.4.

As an analogy, consider a hunter who has a bias that improves detecting a ‘lion’ in long grass. This bias is better for the survival of the hunter to predation, but comes at the cost of more often perceiving a lion when in fact there was not one, which may have other consequences.

If this test is illustrative of the behaviour to be expected for a classifier, is it possible to exert more shaping control over type I and type II errors in trade for average error performance? Table 13.3 (test 3) summarises the results of raising the ‘floor’ (flr) for diagonals in u as a compensatory allowance. A setting of \(flr=0.05\) was chosen to represent a desire to keep errors low everywhere (on the reasoning that 0.05 is near zero), while expressing the strongest desire to reduce errors in row ‘4’ and column ‘4’, where cells were set to zero. Table 13.4 illustrates the resulting confusion matrix for this test. Test 3 was typical of attempts to change the same major row and column (i = j). The result was an increase in both type I and type II errors compared with manipulating one-hot vector values only. Table 13.3 shows, across test data sets, a relative increase in Type I errors and decrease in Type II errors compared with test 2. For the MNIST test data most errors related to cells (9,4) and/or (4,9). Driving down errors for one of these cells had the direct effect of raising errors in the other: a kind of ‘pivot’ effect. The strong and direct relationship between these errors appeared to be only very weakly controllable. Evidence of these pivots appears in the unshaped confusion matrix in the form of the larger and symmetrical errors in Table 13.2. Pivots were observed at (4,9):(9,4), (5,8):(8,5), (5,3):(3,5) and (7,9):(9,7) for MNIST tests. Notably, these pivots were different for each of the three test data sets.
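One hypothetical way to flag candidate pivots in an unshaped confusion matrix is to look for symmetric pairs of off-diagonal cells that are both large. The sketch below does this with a simple count threshold. The function name, the threshold and the four-class counts are made up for illustration and are not taken from the chapter's tables.

```python
def find_pivots(confusion, min_count):
    """Return (i, j) pairs with i < j where cells (i, j) and (j, i) both reach min_count."""
    pivots = []
    size = len(confusion)
    for i in range(size):
        for j in range(i + 1, size):
            if confusion[i][j] >= min_count and confusion[j][i] >= min_count:
                pivots.append((i, j))
    return pivots


# Made-up four-class counts, rows = actual class, columns = estimated class.
confusion = [
    [95, 1, 3, 1],
    [2, 90, 1, 7],
    [4, 0, 94, 2],
    [1, 9, 2, 88],
]
pivots = find_pivots(confusion, min_count=5)
print(pivots)
```

Cells flagged this way are candidates to leave out of the user-defined matrix when choosing which errors to shape, in line with the observation that pivot pairs are only weakly controllable.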

In test 4, the capacity to target a reduction in type II errors only for a specific classification was examined. Table 13.3 summarises the result which had a strong desired effect, at the expense of an increase in type I errors. Test 5 demonstrates a final level of ‘tuning’ where the type II errors were generally lower than those of test 1, with only a small increase in Type I errors. The confusion matrix for test 5 with MNIST test data is shown in Table 13.5.

Tests 4 and 5 demonstrate a level of control over errors that is not possible by one-hot vector manipulation and could not be achieved without shaping the objective function. The set of tests also demonstrates an ability to target a reduction in Type I or Type II errors at the expense of the other, an ability that was sustained across two unforeseen independent data sets.

These results led to the question ‘if pivots identified in the initial confusion matrix are excluded from consideration, can significant control over errors in other arbitrary rows and columns be achieved?’ For the tests attempted, the answer appears to be yes. Table 13.6 illustrates the results of an attempt to minimise errors in the column for a digit ‘4’ (type II) and the row for a digit ‘7’ (type I) for MNIST test data. In the user-defined matrix u, the column representing a ‘4’ and the row representing a ‘7’ contained zeros, with u(4,4) = 2, u(7,7) = 2, u(i,i) = 1 for the remaining diagonal cells, and a floor of u(i,j) = 0.05 otherwise. The overall test accuracy was 0.88. Notably, the most significant errors at (9,4) and (7,9) were suppressed as desired. Pushing these errors lower would not come without more significant cost to the overall error rate. Type II errors increased in column ‘8’.
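A user-defined matrix of the kind just described can be assembled in a few lines. The sketch below is one plausible construction in plain Python. The helper name and its parameters are hypothetical, and it assumes that the zeroed row and column apply to off-diagonal cells only, with the diagonal weights written last.

```python
def build_user_matrix(num_classes, floor, zero_columns=(), zero_rows=(), diagonal=None):
    """Build u: a floor everywhere, zeros in chosen rows/columns, then diagonal weights."""
    diagonal = diagonal or {}
    u = [[floor] * num_classes for _ in range(num_classes)]
    for k in zero_columns:
        for i in range(num_classes):
            u[i][k] = 0.0
    for k in zero_rows:
        for j in range(num_classes):
            u[k][j] = 0.0
    # Diagonal last, so correct classifications keep their weight (default 1).
    for i in range(num_classes):
        u[i][i] = diagonal.get(i, 1.0)
    return u


u = build_user_matrix(
    num_classes=10,
    floor=0.05,
    zero_columns=[4],   # discourage anything else being called a '4'
    zero_rows=[7],      # discourage a '7' being called anything else
    diagonal={4: 2.0, 7: 2.0},
)
for row in u:
    print(row)
```

Keeping the construction in one helper makes it easy to vary the floor or the emphasised classes between tests while leaving the training loop unchanged.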

In practice, users will likely want to achieve a lower misclassification rate for some arbitrary chosen cell targets relevant to their problem choice. Empirical investigation appears to confirm this is possible providing data pivot points are avoided. This is discussed later.

4.2 Performance Using a Deep Network and Independent Data Sources

The deep convolutional network (Convnet) chosen was from the TensorFlow tutorial (see Note 2). The Convnet uses a hierarchy of three macro-levels; each level comprises a convolutional layer, a rectified linear unit layer, a max pool layer, and a dropout layer. On top of these there is an output processing layer termed ‘softmax’ or normalised exponential, making 13 layers in total. This provides significantly better performance than the simple network. When trained on MNIST data, this network provided a 99.2% average classification accuracy, which is near to the current state of the art of 99.77% [2].

As a comparison, the earlier successful aim to ‘minimise errors in row 7 without excessive impact on column 4 and the overall error average’ (test 6) is revisited. In this case the Convnet was trained on USPS training data only and then tested on USPS, MNIST and DIGITS test data. Only 1280 USPS training images were used. The test data comprised 8192 MNIST test images, 1024 USPS test images, and 4096 DIGITS test images. The results are shown in Tables 13.7 to 13.12 inclusive. Tables 13.7, 13.9, and 13.11 show the confusion matrix achieved using the standard classifier on the MNIST, USPS and DIGITS test databases, with average accuracies of 0.897, 0.957, and 0.692, respectively. These are contrasted with Tables 13.8, 13.10, and 13.12, which show the performance achieved for test 6 using the new objective function on the MNIST, USPS and DIGITS test databases, with average accuracies of 0.784, 0.893 and 0.538 respectively. As expected, test 6 resulted in some increase in the average errors in all cases. Notably, with training on USPS data, the misclassifications for each of these databases show significantly different characteristics from those seen earlier. Pivot points for the MNIST test are different to those found earlier, now with confusions (7,2) and (6,4) as most significant. Pivot points for the DIGITS test have significant confusions (7,2), (9,3), (1,4) and (1,7). The objective of minimising errors in row 7 is demonstrated in all three cases. Confusions at (7,2) were reduced from 117 to 31 and from 220 to 130 in the MNIST and DIGITS tests, and remained the same at 2 for the USPS test. Errors for column 4 increased from 212 to 460 and from 204 to 278 for the MNIST and DIGITS tests, and from 3 to 20 for the USPS test. This largely fulfilled the aim of the test; however, there are several notable ‘surprises’. Table 13.8 shows significant error increases for (0,2), (0,6), and (1,6) compared with Table 13.7. Table 13.12 shows significant error increases for (0,2), (2,6) and (4,9).

4.3 Adversarial Errors

Control over the occurrence of type I errors is most strikingly demonstrated by training a network to create a deliberate confusion. This may be a requirement for a cyber operations system. Table 13.13 illustrates the result of causing a deliberate confusion of a digit ‘7’ for a ‘0’ by means of a user matrix. As expected, the average accuracy drops by 10% to 0.829. Notably, this network learns never to output a ‘7’ and instead classifies a ‘7’ as a ‘0’, precisely as desired. Further, the “normal” detection statistics of other categories are largely unaffected.
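The user matrix for this test is not listed, so the following is only one hypothetical matrix that expresses the same intent: an identity matrix in which the weight for row ‘7’ is moved from the diagonal to column ‘0’. The helper name is an assumption made for illustration.

```python
def redirect_class(num_classes, source, target):
    """Identity-like matrix in which row `source` rewards output `target` instead."""
    u = [[1.0 if i == j else 0.0 for j in range(num_classes)]
         for i in range(num_classes)]
    u[source][source] = 0.0  # no reward for classifying `source` correctly
    u[source][target] = 1.0  # reward confusing `source` with `target`
    return u


u = redirect_class(num_classes=10, source=7, target=0)
column_seven_total = sum(row[7] for row in u)
print(u[7], column_seven_total)
```

Because the whole of column ‘7’ is zero in this matrix, nothing rewards the network for ever outputting a ‘7’, which is consistent with the behaviour reported for this test.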

Producing type II errors in a specific cell of row i and column j proved more difficult. It is necessary to maintain at least some small value for u(j, j) to ensure correct classifications of i are allowed, because reducing u(j, j) to zero has the effect of reducing the entire column to zero, as per the example in Table 13.13. The classification estimates are then redistributed, without user control, into the next most similar categories. Table 13.14 illustrates a best-effort test (average accuracy 0.806) with the aim of ‘creating errors in cell (2,5) and minimising correct classifications in cell (5,5)’. As can be seen in Table 13.14, the desired effect is achievable; however, the desired weakness in correctly classifying (5,5) produces more significant errors at both (5,3) and (5,8), which may be the ‘next weakest’ points.

5 Discussion

The hypothesis that a more expressive deep network would be significantly more capable of supporting an arbitrary redistribution of errors than a shallow network was not demonstrated in these tests. It does appear possible to trade errors under limited conditions towards arbitrarily-chosen errors which, as expected from theoretical study of the gradients, comes at some cost to the total error rate. The degree of control that can be exercised appears limited by pivots in the presented data. These pivots are in turn dependent on training data and the actual data used in practice. As demonstrated by use of three independent data sets, the ‘confusion training’ to meet an objective performed best when the training and live data were drawn from independent sources.

A critical question is ‘what other loss functions might one choose, and what are the implications for that choice?’ Williamson [16] argues that a proper composite loss (objective) needs to control convexity (geometrical properties) and control statistical properties. Considering logistic loss, the deliberate perfect match of the logistic or softmax characteristic and the multinomial log likelihood characteristic, which yields a collapse in complexity of the gradient, was not afforded by our proposed choice of objective function with softmax. Yet perhaps it remains possible to find an alternative functional composition that would allow a similar simplification.

The adversarial generation of confusions was convincingly demonstrated with the ‘user specified confusion’ objective function. The confusion objective function is very good at creating errors where they are wanted.

6 Conclusion

A technique has been demonstrated with the ability to learn to shape errors for both a shallow and a deep network based on a novel maximum likelihood ‘confusion objective’ function. Results were demonstrated for some limited but useful cases in trading type I and type II errors, maintaining error objectives across independent and unforeseen data sets, and creating adversarial confusions. Next steps might include tests on examples with a significantly larger number of classes, and the derivation of bounds on the gradient minima for the technique to provide further insights. The technique appears sufficiently promising to warrant more thorough statistical analysis of the sensitivity of total errors to specific error objectives.

Notes

- 1.

- 2. https://www.tensorflow.org/versions/r0.9/tutorials/mnist/pros/index.html.

References

M. Abadi, A. Agarwal, P. Barham, E. Brevdo, Z. Chen, C. Citro, G. Corrado, A. Davis, J. Dean, M. Devin, et al., TensorFlow: Large-scale machine learning on heterogeneous distributed systems (2016). arXiv preprint arXiv:1603.04467

D. Ciregan, U. Meier, J. Schmidhuber, Multi-column deep neural networks for image classification. 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (IEEE, 2012), pp. 3642–3649

J.N. Darroch, D. Ratcliff, Generalized iterative scaling for log-linear models. The Annals of Mathematical Statistics (1972), pp. 1470–1480

P. Domingos, Metacost: A general method for making classifiers cost-sensitive. Proceedings of the Fifth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (ACM, 1999), pp. 155–164

C. Elkan, The foundations of cost-sensitive learning. International Joint Conference on Artificial Intelligence, vol. 17 (Lawrence Erlbaum Associates Ltd, 2001), pp. 973–978

I.J. Goodfellow, J. Shlens, C. Szegedy, Explaining and harnessing adversarial examples (2014). arXiv preprint arXiv:1412.6572

S.H. Khan, M. Bennamoun, F. Sohel, R. Togneri, Cost sensitive learning of deep feature representations from imbalanced data (2015). arXiv preprint arXiv:1508.03422

Y. LeCun, L. Bottou, Y. Bengio, P. Haffner, Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324 (1998)

Y. Lee, Y. Lin, G. Wahba, Multicategory support vector machines: Theory and application to the classification of microarray data and satellite radiance data. J. Am. Stat. Assoc. 99(465), 67–81 (2004)

S. Lomax, S. Vadera, A survey of cost-sensitive decision tree induction algorithms. ACM Comput. Surv. (CSUR) 45(2), 16 (2013)

J. Schmidhuber, Deep learning in neural networks: An overview. Neural Netw. 61, 85–117 (2015)

A.K. Seewald, On the brittleness of handwritten digit recognition models. ISRN Machine Vision, 2012 (2011)

Y. Sun, M.S. Kamel, A.K.C. Wong, Y. Wang, Cost-sensitive boosting for classification of imbalanced data. Pattern Recogn. 40(12), 3358–3378 (2007)

R.S. Sutton, A.G. Barto, Reinforcement Learning: An Introduction (MIT Press, Cambridge, 1998)

P. Tabacof, E. Valle, Exploring the space of adversarial images (2015). arXiv preprint arXiv:1510.05328

R. Williamson, Loss Functions, vol. 1 (Springer, 2013), pp. 71–80

Acknowledgements

Thanks to: Mr. Darren Williams, Dr. Glenn Moy and Dr. Darryn Reid for reviewing earlier drafts and encouragement; Prof. Hussein Abbass for timely and critical feedback on the draft; and Dr. Alexander Seewald for kindly supplying USPS and DIGITS data sets.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2018 The Author(s)

About this chapter

Cite this chapter

Scholz, J. (2018). Learning to Shape Errors with a Confusion Objective. In: Abbass, H., Scholz, J., Reid, D. (eds) Foundations of Trusted Autonomy. Studies in Systems, Decision and Control, vol 117. Springer, Cham. https://doi.org/10.1007/978-3-319-64816-3_13

DOI: https://doi.org/10.1007/978-3-319-64816-3_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-64815-6

Online ISBN: 978-3-319-64816-3

eBook Packages: Engineering, Engineering (R0)