Abstract

As image gains much wider importance in our society, image processing has found various applications since the 60’s: biomedical imagery, security and many more. A highly common issue in those processes is the presence of an uncontrolled and destructive perturbation generally referred to “noise”. The ability of an algorithm to resist to this noise has been referred to as “robustness”; but this notion has never been clearly defined for image processing techniques. A wide bibliographic study showed that this term “robustness” is largely mixed up with others as efficiency, quality, etc., leading to a disturbing confusion. In this article, we propose a completely new framework to define the robustness of image processing algorithms, by considering multiple scales of additive noise. We show the relevance of our proposition by evaluating and by comparing the robustness of recent and more classic algorithms designed to two tasks: still image denoising and background subtraction in videos.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Background Subtraction

- Image Denoising

- Image Processing Algorithm

- Medical Image Segmentation

- Computer Vision Algorithm

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

As image gains much wider importance in our society, image processing has found various applications since the 60’s [1]: visual inspection systems, remote satellite image interpretation, biomedical imagery, surveillance and security, astronomy, etc. As well established in many fields of research [31, 32], image processing is carried out thanks to a series of algorithms applied on the input image after acquisition, sampling and quantization. It consists of two major steps: improvement by pre-processing treatments, as denoising filtering for example, and segmentation. More complex operations are driven afterwards on the image, such as object recognition, people identification, etc., addressed as computer vision techniques.

A highly common issue in those processes, whatever the field, is the presence of an uncontrolled and destructive perturbation on the image, coming from diverse sources (medical images perturbed by artefacts coming from the acquisition system, videos jittered by the camera movement due to the wind, etc.), which is generally referred to noise.

The ability of an algorithm to resist to this noise (i.e. that the algorithm’s output has been experimentally or theoretically approved to be independent to this noise) has been referred as robustness. This robustness is a key issue in image processing, since it ensures that the developed algorithm satisfies the final user of the application. A wide bibliographic study around “robustness” in image processing demonstrated that it is largely mixed up with other terms as efficiency, quality, performance, persistence, etc., leading to a highly disturbing confusion [3, 14, 23]. This lack of model for the robustness thus blocks its formal integration in algorithms designed for image processing tasks as filtering, segmentation, or even compression.

The formal definition of a robust algorithm in the field of computer vision comes initially from the researches and formulations of Peter Meer [26, 27]. In this work, robust techniques, as RANSAC [13] or the Hough transform [17], are based on robust statistics tools [33]. Noise is considered as an additive perturbation of the input data, under a known or estimated scale. This can be summarized as follows: “Robustness in computer vision cannot be achieved without having access to a reasonably correct value of the scale [of the noise]”. This work has a large impact upon computer vision tasks, which are machine learning algorithms [7] using robust statistical tools [6, 8, 29] and devoted to character recognition, object classification, people tracking, etc. When the targeted noise cannot be represented theoretically or do not fit into a statistical model, the challenge is to gather a sufficient amount of data to test, to evaluate the computer vision algorithms, and to increase their robustness: this data gathering is a key of Big Data [35].

Unfortunately, in the particular field of image processing, this definition of robustness, and also its evaluation, has not been further modelled in such a way. In this article, concurrently with these works in machine learning, we propose to solve the problem of robustness assessment in image processing tasks, thanks to a novel definition introduced in Sect. 2, based on multiple scales of noises. To the best of our knowledge, no formal definition of the robustness has been designed in the literature to evaluate image processing algorithms with such a formulation. We then propose to employ this measure, called \(\alpha \)-robustness, to evaluate the robustness of algorithms devoted to two tasks in Sect. 3.1: image denoising filtering and background subtraction in videos. As a future prospect, we hope that this generic measure will be employed to evaluate the robustness of novel contributions in image processing and that related research communities will seize this scientific bottleneck.

2 A Novel Definition of Robustness

We first consider that an algorithm designed in image processing may be perturbed, because of an input data altered with an additive noise. With similar notations as the work of Peter Meer [26], we have:

which will be shortened by \(\mathbf {\widehat{Y}}=\mathbf {Y^0}+\delta \mathbf {Y}\) when the context allows it, i.e. when the subscripts are not necessary. In this equation, the measurement \(\mathbf {\widehat{Y}}\) is obtained from a true (and generally unknown) value \(\mathbf {Y^0}\), corrupted by the noise \(\delta \mathbf {Y}\). In robust statistics, the objective of an algorithm is to compute and minimize an error between (i) the estimated parameters \(\widehat{\theta }\) of the measurements \(\mathbf {\widehat{Y}}\), e.g. a set of lines constructed with a Hough transform through a cloud of noisy points, (ii) the true parameters of the model, \(\theta \), obtained from \(\mathbf {Y^0}\), e.g. the true line that we should obtain (the underlying line represented by the points). In this case, we may assume that the noise is independent and identically distributed (iid):

where \(\sigma ^2C_y\) is the covariance of the errors at the known scale \(\sigma \). This formulation leads to the definition of robustness by Peter Meer as “An estimator is considered robust only when the estimation error is guaranteed to be less than what can be tolerated in the application”.

As a summary, a statistical algorithm may be considered as robust if the error generated by the constructed parameters \(\theta \) is less than a given threshold, depending on the final application and on the knowledge of the noise (scale \(\sigma \) in Eq. 2). This formulation represents the current challenges of designing robust computer vision algorithms in a large amount of data. We propose now to study further these notions of robustness and noise for the development of robust image processing.

In this article, we assume that the robustness must be assessed in a multi-scale approach, in order to appreciate the limitations of an algorithm, and what would even make it fail. Let A be an algorithm designed for a given image processing application, leading to a set of values \(\mathbf {X}=\{x_i\}_{i=1,n}\) (generally the output image obtained by A). Let N be an additive noise (i.e. respecting Eq. 2) specific to the considered application, and \(\{\sigma _k\}_{k=1,m}\) a set of scales of N. Let \(Q(\mathbb {\mathbf {X_k},\mathbf {Y_k^0}})\) be a measure of the quality of A for the scale k of N (that is, \(\sigma _k\)), for example, the F-measure, combining both positive true and false detections versus negative ones in binary decisions (as in the case of binary segmentation).

The algorithm A may be considered as \(\alpha \) -robust if the difference \(d_Y\) between the result \(\mathbf {X}\) and the ground truth \(\mathbf {Y^0}\) is bounded, w.r.t. the increase of noise scale \(d_X\), by the Lipschitz continuity [5] of Q:

Figure 1 is a synthetic illustration of the use of this definition, where we have indicated the quality score (e.g. the quality of an image segmentation algorithm by calculating a Dice coefficient) of four fictional algorithms for multiple scales of a given noise. In this figure, we can notice that Algorithm 2 has the best quality, at scale \(\sigma _1\). We advocate that using this single scale is not sufficient to assess the robustness of this algorithm, i.e. to guarantee the error tolerated by the application for this algorithm, as P. Meer claimed. After increasing the impact of noise, Algorithm 4 has the best robustness (\(\alpha \)-robust with \(\alpha =0.02\)), and Algorithm 2 the worst (\(\alpha =0.30\)). Finally, Algorithm 1’s behaviour is not linear, implying a high \(\alpha \) value, and Algorithm 3 is the best algorithm of this synthetic test, providing a very good robustness (\(\alpha =0.05\)) and high performance in term of quality. Thanks to this synthetic study, we show the gap between classic quality assessment, as done currently in research in image processing when considering a single scale of noise, and this novel and original notion of robustness. We propose in the next section to compare image denoising and video background subtraction algorithms thanks to this framework.

3 Experimental Study

3.1 Application for Image Denoising Filtering Algorithms

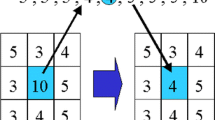

Image denoising has been substantially dealt since 70’s [21, 22], from linear and simple non-linear algorithms such as median filtering [18] to more sophisticated approaches as block-matching based ones for example [25]. In the literature, algorithms are generally compared with some sample images to show their respective efficiency, without any peculiar strategy to evaluate their robustness. In this article, we will focus our attention on some classic algorithms, and others related to shock filters, which modify pixels by performing a dilation near local maxima and erosion near local minima [30]. The Laplacian of the image guides the choice of operations: if it is negative (resp. positive), then the pixel is judged to be located near a maximum (resp. minimum). Using an iterative discretization of a PDE, the shock filter produces local segmentations in inflection zones:

for each pixel \(f^t(p_i,q_j)\) of a 2-D image \(\{f^t(p_i,q_j)\}_{i=1,M;j=1,N}\) at iteration t, where \(\varDelta f^{t}(p_i,q_j)\) is the Laplacian computed at pixel \((p_i,q_j)\), D is a disk-shaped structuring element of radius 1, and \(\oplus \) and \(\ominus \) are the symbols of classic dilation and erosion operators. Please also note that \(f^{0}(p_i,q_j)=f(p_i,q_j)\), the first input image of the algorithm.

In [37], we have proposed to enhance this filter by using local smoothed histograms, which have the property to produce smoothed dilations and smoothed erosions, hence replacing the classic operators depicted in Eq. 4. The smoothed local histogram of the neighborhood \(V_{ij}=\mathcal{V}((p_i,q_j))\) of a pixel \(f(p_i,q_j)\) is modeled as:

where \(k\in \{1,n_b\}\), K, W are generally Gaussian kernels and \(s_n\) is the n-th bin of the histogram. We have also shown that this algorithm is capable of enhancing segmentation further processes in [38].

We propose to show the application of our definition in comparing the robustness of our approach with several other related image denoising filtering algorithms of the literature. As depicted in Fig. 2, our material is a set of 13 classic images well-known in the field of image processing (\(\mathbf {\widehat{Y}}\) in Eq. 1) perturbated by additive white Gaussian noises with increasing standard deviations, i.e. the scales \(\{\sigma _k\}_{k=1,5}=\{5,10,15,20,25\}\). We compare our algorithm with the classic median filter [18]; the bilateral filter [36]; the original shock filter [30]; its complex extension proposed by [15]; the enhanced version from [4]; the coherence filter introduced by [40] and the smoothed median filter proposed by [21]. To do so, we employ the SSIM (Structural Similarity) quality measure [39], ranging between 0 and 1, which assesses the similarity between any output image and the original image without any noise (ground truth, \(\mathbf {Y^0}\) in Eq. 1). Our study of robustness is illustrated in Fig. 3-a, wherein a plot represents the SSIM values obtained for all algorithms (averaged over all images), at each noise scale. The Gaussian noise alteration is also plotted as a reference with a black dotted line. For a clear comparison of numerical values obtained, evaluation of \(\alpha \)-robustness is summarized in Fig. 3-b.

We can first observe that the original shock filtering method, the classic median filter and the coherence filter are not able to filter accurately the noisy images, since it even worsen the alteration originally impacted by the noise. At the first scale \(\sigma _1\), several algorithms compete to achieve the first rank with high values of SSIM, but a further analysis of the \(\alpha \)-robustness shows that our contribution, with smoothed median filtering are the most robust methods, being \(\alpha \)-robust with \(\alpha =0.05\), while most of other algorithms achieve a higher value (\(\alpha > 0.10\)). We also show in Fig. 4 a visual comparison of the results obtained by means of several robust algorithms. This comparison confirms visually that our method enhances efficiently the structures in the image, as measured by the SSIM.

3.2 Application for Background Subtraction in Urban Videos

Background subtraction is a crucial step in many computer vision systems, and consists of detecting moving objects within videos by subtracting the background from the filmed scene, without any a priori knowledge about the foreground [10]. This technique has been widely investigated since the 90’s, mainly for video-surveillance applications, but also for many others as compression, medecine, etc.

In [34], we have proposed a comprehensive review of background subtraction techniques, and compared classic and modern algorithms thanks to real and synthetic urban videos. The comparison of these approaches by averaging quality measures upon a given data-set has shown some limitations, and it is difficult to separate them, when F-measure is always greater than 0.9 for the best methods in particular. To explore robustness evaluation in this article, we have kept the best algorithms of this survey (whose names afterwards are similar to those chosen in [34]): a simple adaptive background learning method explained in [34]; the fuzzy algorithm based on Choquet integral [12]; the single Gaussian distribution background model in [41]; the representation with mixture of Gaussian distributions from [19]; the type-2 fuzzy based approach [9]; the multi-layer algorithm employing color and texture [42]; the pixel based adaptive segmenter [16]; eigen values based background model introduced by [28] and a method that employs self-organizing maps [24].

A still open challenge of background subtraction is to take into account lighting variations in videos. Therefore, to compare those methods, we have generated synthetic videos with variable lighting perturbations by means of a real-time 3-D urban simulator produced by the 4D-Virtualiz companyFootnote 1. From a single scenario of 4500 frames long (i.e. 3 min) we have generated 4 videos, wherein an ambient light noise has been modeled in a multi-scale way, by perturbating lighting during the sequence. We have calculated this data-set by adapting ambient illumination in the rendering equation [20]. The lighting function is first modeled in a cloudy scene as a constant power during the whole sequence (first scale \(\sigma _1\)), which is then perturbed by sunny periods (in the following, such a period is denoted by S), with a higher lighting value. The probability that such periods occur during the sequence P(S) increases, leading to 3 more scales of lighting noise. We also impose that the duration of S decreases and its associated lighting value increases while P(S) increases, which models S as a function converging to a Dirac impulse and simulates fast lighting variations. Figure 5-a is a plot of the lighting value during the time of the 4 videos generated by the set of scales \(\{\sigma _k\}_{k=1,4}\), with sample images obtained by the 3-D simulator. Moving objects in those scenes are automatically calculated, providing the ground truth for our tests as binary images (see Fig. 5-b). To evaluate the similarity between algorithms’ outputs (\(\mathbf {X_k}\) in our formalism) and ground truth (\(\mathbf {Y_k^0}\)) frames, we opt for a calculation of SSIM adapted to binary segmentation, as we proposed in [34].

The graphical evaluation of robustness for all tested algorithms is given in Fig. 6-a, wherein the plot represents the SSIM values obtained for all algorithms (averaged over all video frames), at each noise scale (lighting noise impact is plotted as a reference with a black dotted line). Values of \(\alpha \)-robustness are presented in Fig. 6-b.

This experiment permits to separate the tested algorithms into two sets. A first one is composed of non-robust methods having high \(\alpha \) values (\(\alpha >0.15\)): PBAS, LBAdaptiveSOM and DPEigenBGS. The test failed for the two last methods of this group, considering the plot of Fig. 6-a. The other set contains the rest of the algorithms, the most \(\alpha \)-robust method is MoGV1BGS with a \(\alpha \) value of 0.01. We present in Fig. 7 the outputs obtained by means of the most robust algorithms of our test. The colors correspond to: black and yellow for true and false negative (background) detections, white and red for true and false positive (foreground) detections. Even if these are the best methods of our study, a lot of pixels are still wrongly classified (yellow and red pixels), which shows the complexity to take into account lighting variations in video background subtraction. Moreover, the calculations of those elements lead to the same evaluation with F-measuring each algorithm. Most of the observations we have enumerated previously are confirmed with F-measure instead of SSIM measure (MoGV1BGS evaluated as the most \(\alpha \)-robust methods, and PBAS, LBAdaptiveSOM and DPEigenBGS as the least ones).

4 Discussion

In this article, we have proposed a novel approach to evaluate the robustness of image processing algorithms, based on multiple scales of additive noises, to guarantee their error towards a given application. This first research work opens the doors to numerous investigations.

The example of image denoising filtering shows that the robustness of an image processing approach should be assessed at several noise powers instead of a single one, to ensure that the tested algorithm resists to this perturbation, even in the worst configurations. Moreover, it confirms the importance to build a sufficiently large data-set, as the current challenge of Big Data highlights. We have to propose more complex noises related to other concrete applications (medical image segmentation for example), and to confront image processing algorithms to high perturbances. In Fig. 3, we can remark that our smoothed shock filtering algorithm has still a good behaviour (SSIM greater than 0.8) for the most aggressive noise (\(\sigma _5\)), which invites us to increase its impact.

This first specific example we have proposed is obviously not a restriction for our definition of robustness, which can be used for any other kind of image processing algorithm. For instance, we have proposed another experiment devoted to evaluate background subtraction techniques, for which the impact of an increasing noise is not studied so far and robustness is not specifically addressed. Our \(\alpha \)-robustness measure is a relevant way to compare new methods w.r.t. state-of-the-art, by employing quality measures as the SSIM. Various other fields can benefit from the \(\alpha \)-robustness as medical image segmentation, large satellite image compression, etc.

Equations 1 and 2 refer to an additive Gaussian noise alteration upon data. Several complex noises can be assimilated to Gaussian distributions, as the Rician noise in medical MRI acquisition system for example [2]. But as an extension, various other noise impacts can be addressed, as Poisson noise, multiplicative Gaussian noise and so on. Moreover, it should be noted that noise is generally modeled at the pixel’s scale, without any more global consideration. Here, our framework has been applied with the representation of global illumination changes in videos, which is still an open scientific challenge.

Gathering data to evaluate our measure of robustness implies that we are able to reproduce or to estimate a given noise at several scales. Hence, two strategies can be conducted. The first one consists of generating synthetic data with a variable noise. Still in the example of background subtraction, this means that we should ensure that a realistic simulator is capable of rendering relevant 3-D urban scenarios, incorporating complex noises. The second option is to collect data, and to estimate the target noise inside (\(\delta y_i\) in Eq. 1). In our illustrative example of medical image analysis, Rician noise can be estimated thanks to recent works as [11], which enables sorting MRI volumes by increasing noise order.

Finally, our definition of robustness can be compared with other formulations based on multiple instances of noises. Instead of using Lipschitz continuity (imposing local linearity under a given slope, \(\alpha \)), we could consider an algorithm as robust by considering that the quality function Q is a linear function over the domain of noise \(\sigma \). To compare those definitions, a supervised evaluation should be conducted, to decide which formalism induces the best robustness, regarding a given application (for example, counting people in urban scenes thanks to background subtracted videos).

Notes

References

Acharya, T., Ray, A.: Image Processing - Principles and Applications. Wiley, New Jersey (2006)

Aja-Fernandez, S., Tristan-Vega, A.: A review on statistical noise models for magnetic resonance imaging. Technical report TECH-LPI2013-01, University of Valladolid (2013)

Al-Assaf, A., Vaudrey, T., Klette, R., Woo, Y.: An approach for evaluating robustness of edge operators using real-world driving scenes. In: International Conference on Image and Vision Computing, pp. 1–6 (2008)

Alvarez, L., Mazorra, L.: Signal and image restoration using shock filters and anisotropic diffusion. SIAM J. Numer. Anal. 31(2), 590–605 (1994)

Ambrosio, L., Tilli, P.: Topics on Analysis in Metric Spaces. Oxford University Press, Oxford (2004)

Basiri, S., Ollila, E., Koivunen, V.: Robust, scalable and fast bootstrap method for analyzing large scale data. IEEE Trans. Signal Process. 64, 1007–1017 (2015)

Bishop, C.: Pattern Recognition and Machine Learning. Springer, New York (2006)

Bouveyron, C., Girard, S.: Robust supervised classification with mixture models: learning from data with uncertain labels. Pattern Recogn. 42(11), 2649–2658 (2009)

Bouwmans, T., El Baf, F.: Modeling of dynamic backgrounds by type-2 fuzzy Gaussian mixture models. MASAUM J. Basic Appl. Sci. 1(2), 265–276 (2010)

Bouwmans, T., Porikli, F., Hörferlin, B., Vacavant, A.: Background Modeling and Foreground Detection for Video Surveillance. Chapman and Hall/CRC, Boca Raton (2014). ISBN: 1482205386

Coupé, P., Manjón, J., Gedamu, E., Arnold, D., Robles, M., Collins, D.: Robust Rician noise estimation for MR images. Med. Image Anal. 14(4), 483–493 (2010)

El Baf, F., Bouwmans, T., Vachon, B.: Fuzzy integral for moving object detection. In: IEEE International Conference on Fuzzy Systems, Istanbul (2008)

Fischler, M., Bolles, R.C.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. In: DARPA Image Understanding Workshop, pp. 71–88 (1980)

Fridrich, J., Goljan, M.: Comparing robustness of watermarking techniques. In: SPIE Security and Watermarking of Multimedia Contents (1999)

Gilboa, G., Sochen, N., Zeevi, Y.: Regularized shock filters and complex diffusion. In: European Conference on Computer Vision, pp. 399–313 (2004)

Hofmann, M., Tiefenbacher, P., Rigoll, G.: Background segmentation with feedback: the pixel-based adaptive segmenter. In: IEEE Conference on Computer Vision and Pattern Recognition Work, Providence (2012)

Hough, P.: Method and means for recognizing complex patterns (1962)

Huang, T., Yang, G., Tang, G.: A fast two-dimensional median filtering algorithm. IEEE Trans. Acous. Speech Signal Process. 27(1), 13–18 (1979)

Kaewtrakulpong, P., Bowden, R.: An improved adaptive background mixture model for realtime tracking with shadow detection. In: IEEE European Workshop on Advanced Video Based Surveillance Systems, London (2001)

Kajiya, J.: The rendering equation. ACM SIGGRAPH Comput. Graph. 20(4), 143–150 (1986)

Kass, M., Solomon, J.: Smoothed local histogram filters. ACM Trans. Graph. 29(4), 100:1–100:10 (2010)

Lebrun, M., Colom, M., Buades, A., Morel, J.: Secrets of image denoising cuisine. Acta Num. 21, 475–576 (2012)

Liu, Z., Klette, R.: Performance evaluation of stereo and motion analysis on rectified image sequences. CITR, The University of Auckland, Technical report (2007)

Maddalena, L., Petrosino, A.: A self-organizing approach to background subtraction for visual surveillance applications. IEEE Trans. Image Process. 17(7), 1168–1177 (2008)

Maggioni, M., Boracchi, G., Foi, A., Egiazarian, K.: Video denoising, deblocking and enhancement through separable 4-D nonlocal spatiotemporal transforms. IEEE Trans. Image Process. 21(9), 3952–3966 (2012)

Meer, P.: From a robust hierarchy to a hierarchy of robustness. In: Foundations of Image Analysis, pp. 323–347. Kluwer (2001)

Meer, P.: Robust techniques for computer vision. In: Emerging Topics in Computer Vision, pp. 107–190. Prentice Hall, Boston (2004)

Oliver, N., Rosario, B., Pentland, A.: A Bayesian computer vision system for modeling human interactions. IEEE Trans. Pattern Anal. Mach. Intell. 22(8), 831–843 (2000)

Ordonez, C., Omiecinski, E.: FREM: Fast and robust EM clustering for large data sets. In: International Conference on Systems, Man, and Cybernetics, pp. 590–599 (2012)

Osher, S., Rudin, L.: Feature-oriented image enhancement using shock filters. SIAM J. Numer. Anal. 27, 919–940 (1990)

Pun, G., Ratib, O.: Image analysis and computer vision in medicine. Comput. Med. Imaging Graph. 18(2), 85–96 (1994)

Qureshi, S.: Computer vision acceleration using GPUs. Talk presented at the AMD Fusion Developer Summit, Bellevue (2011)

Rampel, R., Ronchetti, E., Rousseeuw, P., Stahel, W.A.: Robust Statistics. The Approach Based on Influence Function. Wiley, New York (1986)

Sobral, A., Vacavant, A.: A comprehensive review of background subtraction algorithms evaluated with synthetic and real videos. Comput. Vis. Image Underst. 122, 4–21 (2014)

Thomas, R., McSharry, P.: Big Data Revolution: What Farmers, Doctors and Insurance Agents Teach Us About Discovering Big Data Patterns. Wiley, New York (2015)

Tomasi, C., Manduchi, R.: Bilateral filtering for gray and color images. In: International Conference on Computer Vision, Bombay (1998)

Vacavant, A., Albouy-Kissi, A., Menguy, P., Solomon, J.: Fast smoothed shock filtering. In: IEEE International Conference on Pattern Recognition, Tsukuba (2012)

Vacavant, A., Ali, A., Grand-Brochier, M., Albouy-Kissi, A., Boire, J., Alfidja, A., Chabrot, P.: Smoothed shock filtered defuzzification with Zernike moments for liver tumor extraction in MR images. In: IEEE International Conference on Image Processing Tools and Applications, Orléans (2015)

Wang, Z., Bovik, A., Sheikh, H., Simoncelli, E.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Weickert, J.: Coherence-enhancing shock filters. In: Michaelis, B., Krell, G. (eds.) DAGM 2003. LNCS, vol. 2781, pp. 1–8. Springer, Heidelberg (2003). doi:10.1007/978-3-540-45243-0_1

Wren, C., Azarbayejani, A., Darrell, T., Pentland, A.: Pfinder: real-time tracking of the human body. IEEE Trans. Pattern Anal. Mach. Intell. 19(7), 780–785 (1997)

Yao, J., Odobez, J.: Multi-layer background subtraction based on color and texture. In: IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis (2007)

Acknowledgement

The author would like to thank the 4D-Virtualiz company for providing realistic synthetic urban videos for this research work.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Vacavant, A. (2017). A Novel Definition of Robustness for Image Processing Algorithms. In: Kerautret, B., Colom, M., Monasse, P. (eds) Reproducible Research in Pattern Recognition. RRPR 2016. Lecture Notes in Computer Science(), vol 10214. Springer, Cham. https://doi.org/10.1007/978-3-319-56414-2_6

Download citation

DOI: https://doi.org/10.1007/978-3-319-56414-2_6

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-56413-5

Online ISBN: 978-3-319-56414-2

eBook Packages: Computer ScienceComputer Science (R0)