Abstract

With the explosion in the number of digital images taken every day, the demand for more accurate and visually pleasing images is increasing. However, the images captured by modern cameras are inevitably degraded by noise, which leads to deteriorated visual image quality. Therefore, work is required to reduce noise without losing image features (edges, corners, and other sharp structures). So far, researchers have already proposed various methods for decreasing noise. Each method has its own advantages and disadvantages. In this paper, we summarize some important research in the field of image denoising. First, we give the formulation of the image denoising problem, and then we present several image denoising techniques. In addition, we discuss the characteristics of these techniques. Finally, we provide several promising directions for future research.


Introduction

Owing to the influence of environment, transmission channel, and other factors, images are inevitably contaminated by noise during acquisition, compression, and transmission, leading to distortion and loss of image information. With the presence of noise, possible subsequent image processing tasks, such as video processing, image analysis, and tracking, are adversely affected. Therefore, image denoising plays an important role in modern image processing systems.

Image denoising aims to remove noise from a noisy image so as to restore the true image. However, because noise, edges, and textures are all high-frequency components, it is difficult to distinguish them during denoising, and the denoised image inevitably loses some details. Overall, recovering meaningful information from noisy images to obtain high-quality results remains an important problem.

In fact, image denoising is a classic problem and has been studied for a long time. However, it remains a challenging and open task. The main reason for this is that from a mathematical perspective, image denoising is an inverse problem and its solution is not unique. In recent decades, great achievements have been made in the area of image denoising [1,2,3,4], and they are reviewed in the following sections.

The remainder of this paper is organized as follows. In Section “Image denoising problem statement”, we give the formulation of the image denoising problem. Sections “Classical denoising method”, “Transform techniques in image denoising”, and “CNN-based denoising methods” summarize the denoising techniques proposed to date. Section “Experiments” presents extensive experiments and discussion. Conclusions and some possible directions for future study are presented in Section “Conclusions”.

Image denoising problem statement

Mathematically, the problem of image denoising can be modeled as follows:

\[ y = x + n \tag{1} \]

where y is the observed noisy image, x is the unknown clean image, and n represents additive white Gaussian noise (AWGN) with standard deviation \( \sigma_n \), which can be estimated in practical applications by various methods, such as median absolute deviation [5], block-based estimation [6], and principal component analysis (PCA)-based methods [7]. The purpose of noise reduction is to decrease the noise in natural images while minimizing the loss of original features and improving the signal-to-noise ratio (SNR). The major challenges for image denoising are as follows:

- flat areas should be smooth,
- edges should be protected without blurring,
- textures should be preserved, and
- new artifacts should not be generated.

Because recovering the clean image x from Eq. (1) is an ill-posed problem, the noisy image model does not admit a unique solution. To obtain a good estimate \( \hat{x} \), image denoising has been well studied in the field of image processing over the past several years. Generally, image denoising methods can be roughly classified into two groups [3]: spatial domain methods and transform domain methods, which are introduced in more detail in the following sections.
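
The degradation model of Eq. (1) can be simulated directly, which is how synthetic test data for denoising are usually produced. The following is a minimal sketch in Python with NumPy; the helper names `add_awgn` and `snr_db`, the ramp test image, and the fixed random seed are illustrative assumptions, not part of any cited method.

```python
import numpy as np

def add_awgn(x, sigma_n, seed=0):
    """Return y = x + n, with n ~ N(0, sigma_n^2) drawn independently per pixel."""
    rng = np.random.default_rng(seed)
    return x + rng.normal(0.0, sigma_n, size=x.shape)

def snr_db(x, y):
    """Signal-to-noise ratio of y relative to the clean image x, in decibels."""
    noise_power = np.mean((y - x) ** 2)
    return 10.0 * np.log10(np.mean(x ** 2) / noise_power)

# Hypothetical clean image: a horizontal intensity ramp in [0, 255].
x = np.tile(np.linspace(0.0, 255.0, 256), (256, 1))
y = add_awgn(x, sigma_n=30.0)

# The noise level can be read off directly here only because x is known;
# in practice it has to be estimated from y alone.
sigma_hat = np.std(y - x)
```

In a real setting x is unavailable, which is why the estimators cited above (median absolute deviation, block-based, and PCA-based) are needed.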

Classical denoising method

Spatial domain methods aim to remove noise by calculating the gray value of each pixel based on the correlation between pixels/image patches in the original image [8]. In general, spatial domain methods can be divided into two categories: spatial domain filtering and variational denoising methods.

Spatial domain filtering

Since filtering is a major means of image processing, a large number of spatial filters have been applied to image denoising [9,10,11,12,13,14,15,16,17,18,19], which can be further classified into two types: linear filters and non-linear filters.

Originally, linear filters were adopted to remove noise in the spatial domain, but they fail to preserve image textures. Mean filtering [14] has been adopted for Gaussian noise reduction; however, it can over-smooth images with high noise [15]. To overcome this disadvantage, Wiener filtering [16, 17] has been employed, but it also tends to blur sharp edges. With non-linear filters, such as median filtering [14, 18] and weighted median filtering [19], noise can be suppressed without explicitly identifying it. As a non-linear, edge-preserving, and noise-reducing smoothing filter, bilateral filtering [10] is widely used for image denoising. The intensity value of each pixel is replaced with a weighted average of intensity values from nearby pixels. One issue concerning the bilateral filter is its efficiency: the brute-force implementation takes \( O(Nr^2) \) time, where N is the number of pixels, which is prohibitively high when the kernel radius r is large.
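
To make the \( O(Nr^2) \) cost concrete, the following is a minimal brute-force sketch in Python with NumPy. The function name, the default parameters `sigma_s` and `sigma_r`, the Gaussian form of both weights, and the reflective border handling are illustrative assumptions rather than details taken from ref. [10].

```python
import numpy as np

def bilateral_filter(img, radius=3, sigma_s=2.0, sigma_r=25.0):
    """Brute-force bilateral filter for a 2-D grayscale float image."""
    h, w = img.shape
    padded = np.pad(img, radius, mode="reflect")
    num = np.zeros_like(img, dtype=float)
    den = np.zeros_like(img, dtype=float)
    # Loop over the (2r+1)^2 offsets; each iteration touches all N pixels,
    # which gives the O(N r^2) running time.
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = padded[radius + dy : radius + dy + h,
                             radius + dx : radius + dx + w]
            # Spatial weight: depends only on the distance between pixels.
            spatial = np.exp(-(dx * dx + dy * dy) / (2.0 * sigma_s ** 2))
            # Range weight: depends on the intensity difference, so pixels
            # across an edge contribute almost nothing.
            range_w = np.exp(-((shifted - img) ** 2) / (2.0 * sigma_r ** 2))
            weight = spatial * range_w
            num += weight * shifted
            den += weight
    return num / den
```

The range weight is what distinguishes this filter from a plain Gaussian blur: setting `sigma_r` very large recovers ordinary Gaussian smoothing.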

Spatial filters apply low-pass filtering to groups of pixels on the assumption that noise occupies the higher region of the frequency spectrum. Normally, spatial filters eliminate noise to a reasonable extent but at the cost of image blurring, which in turn loses sharp edges.

Variational denoising methods

Existing denoising methods use image priors and minimize an energy function E to calculate the denoised image \( \hat{x} \). First, an energy function E is constructed from the noisy image y such that low energy values correspond to noise-free images. Then, the denoised image \( \hat{x} \) is determined by minimizing E:

\[ \hat{x} = \arg\min_{x} E(x) \tag{2} \]

The motivation for the variational denoising methods of Eq. (2) is the maximum a posteriori (MAP) probability estimate. From a Bayesian perspective, the MAP probability estimate of x is

\[ \hat{x} = \arg\max_{x} P(x \mid y) = \arg\max_{x} \frac{P(y \mid x)\, P(x)}{P(y)} \tag{3} \]

which can be equivalently formulated as

\[ \hat{x} = \arg\max_{x} \; \log P(y \mid x) + \log P(x) \tag{4} \]

where the first term P(y|x) is a likelihood function of x, and the second term P(x) represents the image prior. In the case of AWGN, the objective function can generally be formulated as

\[ \hat{x} = \arg\min_{x} \; \frac{1}{2}{\left\Vert y-x\right\Vert}_2^2 + \lambda R(x) \tag{5} \]

where \( {\left\Vert y-x\right\Vert}_2^2 \) is a data fidelity term that denotes the difference between the original and noisy images, \( R(x) = -\log P(x) \) denotes a regularization term, and λ is the regularization parameter. For the variational denoising methods, the key is to find a suitable image prior (R(x)). Successful prior models include gradient priors, non-local self-similarity (NSS) priors, sparse priors, and low-rank priors.
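
The step from the MAP estimate to the AWGN objective can be written out explicitly. The short derivation below is a sketch under the assumption that the noise samples are independent and Gaussian with variance \( \sigma_n^2 \); the identification \( \lambda = \sigma_n^2 \) follows from that assumption and from the 1/2 scaling of the fidelity term, and is not stated in the cited works.

```latex
\begin{aligned}
P(y \mid x) &\propto \exp\!\left(-\frac{\left\Vert y-x\right\Vert_2^2}{2\sigma_n^2}\right)
  \quad\Longrightarrow\quad
  -\log P(y \mid x) = \frac{1}{2\sigma_n^2}\left\Vert y-x\right\Vert_2^2 + \text{const}, \\
\hat{x} &= \arg\max_{x}\; \log P(y \mid x) + \log P(x)
         = \arg\min_{x}\; \frac{1}{2\sigma_n^2}\left\Vert y-x\right\Vert_2^2 - \log P(x) \\
        &= \arg\min_{x}\; \frac{1}{2}\left\Vert y-x\right\Vert_2^2 + \sigma_n^2\, R(x),
  \qquad R(x) = -\log P(x).
\end{aligned}
```

Under this reading, a stronger noise level calls for a larger regularization parameter, which matches the intuition that noisier observations should be trusted less.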

In the remainder of this subsection, several popular variational denoising methods are summarized.

Total variation regularization

Starting with Tikhonov regularization [20, 21], the advantages of non-quadratic regularizations have been explored for a long time. Although the Tikhonov method [20, 21] is the simplest one, in which R(x) is minimized with the L2 norm, it over-smooths image details [22, 23]. To solve this problem, anisotropic diffusion-based methods [24, 25] have been used to preserve image details; nevertheless, the edges are still blurred [26, 27].

Meanwhile, to solve the issue of smoothness, total variation (TV)-based regularization [28] has been proposed. This is the most influential research in the field of image denoising. TV regularization is based on the statistical fact that natural images are locally smooth and the pixel intensity gradually varies in most regions. It is defined as follows [28]:

\[ R_{TV}(x) = {\left\Vert \nabla x\right\Vert}_1 \tag{6} \]

where ∇x is the gradient of x.

It has achieved great success in image denoising because it can not only effectively calculate the optimal solution but also retain sharp edges. However, it has three major drawbacks: textures tend to be over-smoothed, flat areas are approximated by a piecewise constant surface resulting in a stair-casing effect and the image suffers from losses of contrast [29,30,31,32].
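
As an illustration of how a TV-regularized estimate can be computed, the following is a minimal sketch in Python with NumPy that runs plain gradient descent on \( \frac{1}{2}\Vert y-x\Vert_2^2 + \lambda \sum \sqrt{|\nabla x|^2 + \epsilon^2} \). The smoothing constant `eps`, the step size, the iteration count, and the forward-difference discretization are assumptions chosen for simplicity; this is not the algorithm of ref. [28] or ref. [36].

```python
import numpy as np

def tv_denoise(y, lam=10.0, step=0.02, n_iter=200, eps=1.0):
    """Gradient descent on 0.5*||y - x||^2 + lam * sum(sqrt(|grad x|^2 + eps^2))."""
    y = y.astype(float)
    x = y.copy()
    for _ in range(n_iter):
        # Forward differences; the last row/column is replicated, so the
        # gradient is zero on that boundary.
        gx = np.diff(x, axis=1, append=x[:, -1:])
        gy = np.diff(x, axis=0, append=x[-1:, :])
        mag = np.sqrt(gx ** 2 + gy ** 2 + eps ** 2)
        px, py = gx / mag, gy / mag
        # Divergence via backward differences (negative adjoint of the gradient).
        div = np.diff(px, axis=1, prepend=0.0) + np.diff(py, axis=0, prepend=0.0)
        # Gradient of the energy is (x - y) - lam * div.
        x -= step * ((x - y) - lam * div)
    return x
```

With these defaults the step size stays below \( 2/(1 + 8\lambda/\epsilon) \), so the energy decreases at every iteration; a smaller `eps` approximates TV more closely but requires a smaller step.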

To improve the performance of the TV-based regularization model, extensive studies have been conducted in image smoothing by adopting partial differential equations [33,34,35,36]. For example, Beck et al. [36] proposed a fast gradient-based method for constrained TV, which is a general framework for covering other types of non-smooth regularizers. Although it improves the peak signal-to-noise ratio (PSNR) values, it only accounts for the local characteristics of the image.

Non-local regularization

While local denoising methods have low time complexities, the performances of these methods are limited when the noise level is high. The reason for this is that the correlations of neighborhood pixels are seriously disturbed by high-level noise. Lately, some methods have applied the NSS prior [37], because images contain many similar patches at different locations. A pioneering work on non-local means (NLM) [38] used weighted filtering based on the NSS prior to achieve image denoising, which is the most notable improvement for the problem of image denoising. Its basic idea is to build a pointwise estimation of the image, where each pixel is obtained as a weighted average of pixels centered at regions that are similar to the region centered at the estimated pixel. For a given pixel \( x_i \) in an image x, \( \mathrm{NLM}(x_i) = \sum_j w_{i,j}\, x_j \) indicates the NLM-filtered value. Let \( \mathbf{x}_i \) and \( \mathbf{x}_j \) be image patches centered at \( x_i \) and \( x_j \), respectively. Let \( w_{i,j} \) be the weight of \( x_j \) to \( x_i \), which is computed by

\[ w_{i,j} = \frac{1}{c_i}\exp\left(-\frac{{\left\Vert \mathbf{x}_i-\mathbf{x}_j\right\Vert}_2^2}{h}\right) \tag{7} \]

where \( c_i \) denotes a normalization factor, and h indicates a filter parameter. Different from local denoising methods, NLM can make full use of the information provided by the given images, which makes it robust to noise. Since then, many improved versions have been proposed. Some studies focus on the acceleration of the algorithm [39,40,41,42,43,44], while others focus on how to enhance the performance of the algorithm [45,46,47].
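
The weighted average described above can be sketched directly. The following naive Python/NumPy implementation is for illustration only: the function name, the patch and search-window radii, the default value of `h`, and the reflective padding are assumptions, and the restriction to a local search window is a common practical shortcut rather than part of the definition.

```python
import numpy as np

def nlm_denoise(y, patch_radius=1, search_radius=5, h=400.0):
    """Pixelwise non-local means on a 2-D grayscale image (naive loops)."""
    y = y.astype(float)
    rows, cols = y.shape
    p, s = patch_radius, search_radius
    padded = np.pad(y, p, mode="reflect")
    out = np.zeros_like(y)
    for i in range(rows):
        for j in range(cols):
            # Patch centered at pixel (i, j).
            ref = padded[i : i + 2 * p + 1, j : j + 2 * p + 1]
            weights, values = [], []
            for k in range(max(i - s, 0), min(i + s + 1, rows)):
                for m in range(max(j - s, 0), min(j + s + 1, cols)):
                    cand = padded[k : k + 2 * p + 1, m : m + 2 * p + 1]
                    dist2 = np.sum((ref - cand) ** 2)
                    # Unnormalized weight exp(-||x_i - x_j||^2 / h).
                    weights.append(np.exp(-dist2 / h))
                    values.append(y[k, m])
            weights = np.asarray(weights)
            # Dividing by the sum of weights plays the role of 1 / c_i.
            out[i, j] = np.dot(weights, values) / weights.sum()
    return out
```

The nested loops make the cost grow with the product of image size, search-window area, and patch area, which is the motivation for the acceleration schemes cited above.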

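By considering the first step of NLM [38] (the estimation of pixel similarities), regularization methods have been developed [48]. According to Eq. (5), the NSS prior is defined as [49]

\[ R_{NSS}(x) = \sum_{x_i \in x} {\left\Vert x_i - \mathrm{NLM}(x_i)\right\Vert}_2^2 = \sum_{x_i \in x} {\left\Vert x_i - \mathbf{w}_i^T \boldsymbol{\kappa}_i\right\Vert}_2^2 \tag{8} \]

where \( \boldsymbol{\kappa}_i \) and \( \mathbf{w}_i \) denote column vectors; the former contains the central pixels around \( x_i \), and the latter contains all corresponding weights \( w_{i,j} \).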

At present, most research on image denoising has shifted from local methods to non-local methods [50,51,52,53,54,55]. For instance, extensions of non-local methods to TV regularization have been proposed in refs. [37, 56]. Considering the respective merits of the TV and NLM methods, an adaptive regularization of NLM (R-NL) [56] has been proposed to combine NLM with TV regularization. The results showed that the combination of these two models was successful in removing noise. Nevertheless, structural information is not well preserved by these methods, which degrades the visual image quality. Moreover, further prominent extensions and improvements of NSS methods are based on learning the likelihood of image patches [57] and exploiting the low-rank property using weighted nuclear norm minimization (WNNM) [58, 59].

Sparse representation

Sparse representation merely requires that each image patch can be represented as a linear combination of a few atoms from an over-complete dictionary [12, 60]. Many current image denoising methods exploit the sparsity prior of natural images.

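Sparse representation-based methods encode an image over an over-complete dictionary D with L1-norm sparsity regularization on the coding vector, i.e., \( \underset{\boldsymbol{\upalpha}}{\min }{\left\Vert \boldsymbol{\upalpha} \right\Vert}_1\ \mathrm{s.t.}\ x=\mathbf{D}\boldsymbol{\upalpha } \), resulting in a general model:

\[ \hat{\boldsymbol{\upalpha}} = \arg\min_{\boldsymbol{\upalpha}} {\left\Vert y-\mathbf{D}\boldsymbol{\upalpha}\right\Vert}_2^2 + \lambda {\left\Vert \boldsymbol{\upalpha}\right\Vert}_1 \tag{9} \]

where α is a matrix containing vectors of sparse coefficients. Eq. (9) turns the estimation of x in Eq. (5) into the estimation of α.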
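
One simple way to solve an L1-regularized coding problem of this kind for a fixed dictionary is the iterative shrinkage-thresholding algorithm (ISTA). The sketch below, in Python with NumPy, is an illustrative choice of solver and not the method of any cited reference; the function names are hypothetical, and the fidelity term is scaled by 1/2, which only rescales λ.

```python
import numpy as np

def soft_threshold(v, t):
    """Elementwise soft thresholding: shrink magnitudes by t, clip at zero."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista(D, y, lam=0.1, n_iter=200):
    """Minimize 0.5*||y - D a||_2^2 + lam*||a||_1 for a fixed dictionary D."""
    # Squared spectral norm of D: Lipschitz constant of the quadratic term.
    L = np.linalg.norm(D, 2) ** 2
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        # Gradient step on the fidelity term, then shrinkage for the L1 term.
        a = soft_threshold(a + D.T @ (y - D @ a) / L, lam / L)
    return a
```

Given the code `a` for a vectorized patch `y`, the denoised patch is reconstructed as `D @ a`.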

As a dictionary learning method, the sparse representation model can be learned from a dataset, as well as from the image itself with the K-singular value decomposition (K-SVD) algorithm [61, 62]. The basic idea behind K-SVD denoising is to learn the dictionary D from a noisy image y by solving the following joint optimization problem:

where Ri is the matrix extracting patch xi from image x at location i.

Since learned dictionaries can represent image structures more flexibly [63], sparse representation models with learned dictionaries perform better than those with designed dictionaries. As shown in ref. [61], the K-SVD dictionary achieves gains of up to 1–2 dB for bit rates less than 1.5 bits per pixel (where the sparsity model holds true) compared to all other dictionaries. However, methods in this category are all local, meaning that they ignore the correlation between non-local information of the image. In the case of high noise, local information is seriously disturbed, and the denoising result is poor.

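Coupled with the NSS prior [37], the sparsity from self-similarity properties of natural images, which has received significant attention in the image processing community, is widely applied for image denoising [64,65,66]. One representative work is the non-local centralized sparse representation (NCSR) model [66]:

\[ \hat{\boldsymbol{\upalpha}} = \arg\min_{\boldsymbol{\upalpha}} {\left\Vert y-\mathbf{D}\boldsymbol{\upalpha}\right\Vert}_2^2 + \lambda \sum_i {\left\Vert \boldsymbol{\upalpha}_i-\boldsymbol{\upbeta}_i\right\Vert}_1 \tag{11} \]

where \( \boldsymbol{\upbeta}_i \) is a good estimation of \( \boldsymbol{\upalpha}_i \). Then, for each image patch \( \boldsymbol{x}_i \), \( \boldsymbol{\upbeta}_i \) can be computed as the weighted average of \( \boldsymbol{\upalpha}_{i,q} \):

\[ \boldsymbol{\upbeta}_i = \sum_{q\in S_i} w_{i,q}\, \boldsymbol{\upalpha}_{i,q} \tag{12} \]

where \( w_{i,q}=\frac{1}{c_i}\exp \left(-\frac{{\left\Vert {\hat{\boldsymbol{x}}}_i-{\hat{\boldsymbol{x}}}_{i,q}\right\Vert}_2^2}{h}\right) \), \( {\hat{\boldsymbol{x}}}_i \) is the estimation of \( \boldsymbol{x}_i \), and \( {\hat{\boldsymbol{x}}}_{i,q} \) are the non-local similar patches to \( {\hat{\boldsymbol{x}}}_i \) in a search window \( S_i \).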

The NCSR model naturally integrates NSS into the sparse representation framework, and it is one of the most commonly considered image denoising methods at present. As mentioned in ref. [66], NCSR is very effective in reconstructing both smooth and textured regions. Despite the successful combination of the above two techniques, the iterative dictionary learning and non-local estimates of unknown sparse coefficients make this algorithm computationally demanding, which largely limits its applicability in many applications.

Low-rank minimization

Different from the sparse representation model, the low-rank-based model arranges similar patches as a matrix. Each column of this matrix is a stretched patch vector. By exploiting the low-rank prior of the matrix, this model can effectively reduce the noise in an image [67, 68]. The low-rank method first appeared in the field of matrix completion, and it has made great progress driven by the work of Candès and Ma [69]. In recent years, the low-rank model has achieved good denoising results, and low-rank denoising methods have consequently been studied more often.

Low-rank approaches for the reconstruction of noisy data can be grouped in two categories: methods based on low rank matrix factorization (refs. [70,71,72,73,74,75,76,77,78]) and those based on nuclear norm minimization (NNM, ref. [58, 59, 79, 80]).

Methods in the first category typically approximate a given data matrix as a product of two matrices of fixed low rank. For example, in refs. [70, 71], a video denoising algorithm based on low-rank matrix recovery was proposed. In these methods, similar patches are decomposed by low-rank decomposition to remove noise from videos. Ref. [72] proposed an image denoising algorithm based on low-rank matrix recovery and obtained good results. In ref. [73], a hybrid noise removal algorithm based on low-rank matrix recovery was proposed. Dong et al. [74] proposed a low-rank method based on SVD to model the sparse representation of non-locally similar image patches. In this method, singular value iteration contraction in the BayesShrink framework was used to remove noise. The main limitation of these methods is that the rank must be provided as input, and values that are too low or too high will result in the loss of details or the preservation of noise, respectively.

Low-rank minimization is a non-convex, NP-hard problem [63]. Alternatively, methods based on NNM aim to find the lowest-rank approximation X of an observed matrix Y. Let Y be a matrix of noisy patches. From Y, the low-rank matrix X can be estimated by the following NNM problem [80]:

\[ \hat{\boldsymbol{X}} = \arg\min_{\boldsymbol{X}} \frac{1}{2}{\left\Vert \boldsymbol{Y}-\boldsymbol{X}\right\Vert}_F^2 + \lambda {\left\Vert \boldsymbol{X}\right\Vert}_{\ast} \tag{13} \]

where \( {\left\Vert \cdot \right\Vert}_F^2 \) denotes the squared Frobenius norm, and the nuclear norm \( {\left\Vert \boldsymbol{X}\right\Vert}_{\ast }=\sum \limits_i{\left\Vert {\sigma}_i\left(\boldsymbol{X}\right)\right\Vert}_1 \), where \( \sigma_i(\boldsymbol{X}) \) is the i-th singular value of X. A closed-form solution of Eq. (13) has been proposed in ref. [80] and is shown in Eq. (14):

\[ \hat{\boldsymbol{X}} = \boldsymbol{U} S_{\lambda}(\boldsymbol{\Sigma}) \boldsymbol{V}^T \tag{14} \]

where \( \boldsymbol{Y} = \boldsymbol{U}\boldsymbol{\Sigma}\boldsymbol{V}^T \) is the SVD of Y and \( S_{\lambda}(\boldsymbol{\Sigma}) = \max(\boldsymbol{\Sigma} - \lambda \boldsymbol{I}, 0) \) is the singular value thresholding operator. In NNM [80], all singular values are weighted equally, and the same threshold is applied to each of them; however, different singular values have different levels of importance.

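Hence, on the basis of NNM, Gu et al. [58, 59] proposed the WNNM model, which can adaptively assign weights to singular values of different sizes and denoise them using a soft threshold method. Given a weight vector w, the weighted nuclear norm proximal problem consists of finding an approximation X of Y that minimizes the following cost function:

\[ \hat{\boldsymbol{X}} = \arg\min_{\boldsymbol{X}} \frac{1}{2}{\left\Vert \boldsymbol{Y}-\boldsymbol{X}\right\Vert}_F^2 + {\left\Vert \boldsymbol{X}\right\Vert}_{\boldsymbol{w},\ast} \tag{15} \]

where \( {\left\Vert \boldsymbol{X}\right\Vert}_{\boldsymbol{w},\ast }=\sum \limits_i{\left\Vert {w}_i{\sigma}_i\left(\boldsymbol{X}\right)\right\Vert}_1 \) is the weighted nuclear norm of X. Here, \( w_i \) denotes the weight assigned to singular value \( \sigma_i(\boldsymbol{X}) \). As shown in ref. [58], Eq. (15) has a unique global minimum when the weights satisfy \( 0 \le w_1 \le \cdots \le w_n \):

\[ \hat{\boldsymbol{X}} = \boldsymbol{U} S_{\boldsymbol{w}}(\boldsymbol{\Sigma}) \boldsymbol{V}^T \tag{16} \]

where \( S_{\boldsymbol{w}}(\boldsymbol{\Sigma}) = \max(\boldsymbol{\Sigma} - \mathrm{Diag}(\boldsymbol{w}), 0) \).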
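
Both thresholding operators reduce to a few lines once the SVD of the patch matrix is available. The following Python/NumPy sketch is illustrative; the function names are hypothetical, and how the weights are chosen (which is the core of WNNM) is deliberately left out.

```python
import numpy as np

def svt(Y, lam):
    """Singular value thresholding: U * max(Sigma - lam, 0) * V^T."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * np.maximum(s - lam, 0.0)) @ Vt

def weighted_svt(Y, w):
    """Weighted variant: the i-th singular value is shrunk by w[i].

    Singular values are returned in descending order, so non-descending
    weights shrink the large (signal-dominated) values the least.
    """
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * np.maximum(s - np.asarray(w, dtype=float), 0.0)) @ Vt
```

For a matrix with singular values 10, 5, and 1, `svt(Y, 2.0)` yields singular values 8, 3, and 0, whereas `weighted_svt(Y, [0.0, 1.0, 2.0])` yields 10, 4, and 0, leaving the dominant component untouched.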

From ref. [58], we know that WNNM achieves advanced denoising performance and is more robust to noise strength than other NNMs. Besides, the low-rank theory has been widely used in artificial intelligence, image processing, pattern recognition, computer vision, and other fields [63]. Although most low-rank minimization methods (especially the WNNM method) outperform previous denoising methods, the computational cost of the iterative boosting step is relatively high.

Transform techniques in image denoising

Image denoising methods have gradually developed from the initial spatial domain methods to the present transform domain methods. Initially, transform domain methods were developed from the Fourier transform, but since then, a variety of transform domain methods gradually emerged, such as cosine transform, wavelet domain methods [81,82,83], and block-matching and 3D filtering (BM3D) [55]. Transform domain methods employ the following observation: the characteristics of image information and noise are different in the transform domain.

Transform domain filtering methods

In contrast with spatial domain filtering methods, transform domain filtering methods first transform the given noisy image to another domain, and then they apply a denoising procedure on the transformed image according to the different characteristics of the image and its noise (larger coefficients denote the high frequency part, i.e., the details or edges of the image, smaller coefficients denote the noise). The transform domain filtering methods can be subdivided according to the chosen basis transform functions, which may be data adaptive or non-data adaptive [84].

Data adaptive transform

Independent component analysis (ICA) [85, 86] and PCA [65, 87] functions are adopted as the transform tools on the given noisy images. Among them, the ICA method has been successfully implemented for denoising non-Gaussian data. These two kinds of methods are data adaptive, and the assumptions on the difference between the image and noise still hold. However, their main drawback is high computational cost because they use sliding windows and require a sample of noise-free data or at least two image frames from the same scene. In some applications, such noise-free training data might be difficult to obtain.

Non-data adaptive transform

The non-data adaptive transform domain filtering methods can be further subdivided into two domains, namely spatial-frequency domain and wavelet domain.

Spatial-frequency domain filtering methods use low-pass filtering by designing a frequency domain filter that passes all frequencies lower than a cut-off frequency and attenuates all frequencies higher than it [14, 16]. In general, after an image is transformed to the frequency domain, for example by the Fourier transform, image information is mainly concentrated in the low-frequency range, while noise spreads over the high-frequency range. Thus, we can remove noise by selecting specific transform domain features and transforming them back to the image domain [88]. Nevertheless, these methods are time-consuming and depend on the cut-off frequency and filter function behavior.
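
The cut-off idea can be illustrated with an ideal low-pass filter applied to the 2-D discrete Fourier transform. The sketch below uses Python with NumPy; the function name, the circular pass band, and the unit of the cut-off (cycles per pixel) are assumptions made for the example.

```python
import numpy as np

def ideal_lowpass(y, cutoff):
    """Zero every 2-D DFT coefficient whose radial frequency exceeds `cutoff`.

    `cutoff` is expressed in cycles per pixel, so the useful range is (0, 0.5].
    """
    fy = np.fft.fftfreq(y.shape[0])[:, None]  # vertical frequencies
    fx = np.fft.fftfreq(y.shape[1])[None, :]  # horizontal frequencies
    mask = np.sqrt(fx ** 2 + fy ** 2) <= cutoff
    # The mask is symmetric, so the inverse transform is real up to rounding.
    return np.real(np.fft.ifft2(np.fft.fft2(y) * mask))
```

Because the mask is binary, everything above the cut-off is lost, including edges; this is the dependence on the cut-off frequency and filter shape noted above.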

As the most investigated transform in denoising, the wavelet transform [89] decomposes the input data into a scale-space representation. It has been shown that wavelets can successfully remove noise while preserving the image characteristics, regardless of the frequency content [90,91,92,93,94,95]. Similar to spatial domain filtering, filtering operations in the wavelet domain can also be subdivided into linear and non-linear methods. Since the wavelet transform has many good characteristics, such as sparseness and multi-scale analysis, it is still an active area of research in image denoising [96]. However, the wavelet transform relies heavily on the selection of the wavelet basis. If the selection is inappropriate, the image cannot be well represented in the wavelet domain, which leads to poor denoising results. Therefore, this method is not adaptive.
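
A non-linear wavelet-domain filter can be sketched with a single level of the Haar transform followed by hard thresholding of the detail coefficients. The following Python/NumPy code is a simplified illustration; the choice of the Haar basis, the single decomposition level, and the function names are assumptions, and the cited methods generally use other bases and several levels.

```python
import numpy as np

def haar2d(x):
    """One level of the orthonormal 2-D Haar transform (even height and width)."""
    r = np.sqrt(2.0)
    lo = (x[:, 0::2] + x[:, 1::2]) / r   # horizontal averages
    hi = (x[:, 0::2] - x[:, 1::2]) / r   # horizontal details
    ll = (lo[0::2] + lo[1::2]) / r
    lh = (lo[0::2] - lo[1::2]) / r
    hl = (hi[0::2] + hi[1::2]) / r
    hh = (hi[0::2] - hi[1::2]) / r
    return ll, lh, hl, hh

def ihaar2d(ll, lh, hl, hh):
    """Inverse of haar2d."""
    r = np.sqrt(2.0)
    h2, w2 = ll.shape
    lo = np.empty((2 * h2, w2))
    hi = np.empty((2 * h2, w2))
    lo[0::2], lo[1::2] = (ll + lh) / r, (ll - lh) / r
    hi[0::2], hi[1::2] = (hl + hh) / r, (hl - hh) / r
    x = np.empty((2 * h2, 2 * w2))
    x[:, 0::2], x[:, 1::2] = (lo + hi) / r, (lo - hi) / r
    return x

def haar_hard_threshold(y, thresh):
    """Zero detail coefficients with magnitude below `thresh`; keep the rest."""
    ll, lh, hl, hh = haar2d(y.astype(float))

    def keep(c):
        return np.where(np.abs(c) >= thresh, c, 0.0)

    return ihaar2d(ll, keep(lh), keep(hl), keep(hh))
```

Since the transform is orthonormal, AWGN with standard deviation σ stays at the same level in every sub-band, so a threshold of a few multiples of σ is a natural starting point.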

BM3D

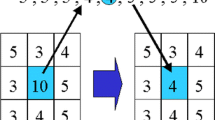

As an effective and powerful extension of the NLM approach, BM3D, which was proposed by Dabov et al. [55], is the most popular denoising method. BM3D is a two-stage non-locally collaborative filtering method in the transform domain. In this method, similar patches are stacked into 3D groups by block matching, and the 3D groups are transformed into the wavelet domain. Then, hard thresholding or Wiener filtering with coefficients is employed in the wavelet domain. Finally, after an inverse transform of coefficients, all estimated patches are aggregated to reconstruct the whole image. However, when the noise increases gradually, the denoising performance of BM3D decreases greatly and artifacts are introduced, especially in flat areas.

To improve denoising performance, many improved versions of BM3D have appeared [97, 98]. For example, Maggioni et al. [98] recently proposed the block-matching and 4D filtering (BM4D) method, which is an extension of BM3D to volumetric data. It utilizes cubes of voxels, which are stacked into a 4-D group. The 4-D transform applied on the group simultaneously exploits the local correlation and non-local correlation of voxels. Thus, the spectrum of the group is highly sparse, leading to very effective separation of signal and noise through coefficient shrinkage.

CNN-based denoising methods

In general, the methods for solving the objective function in Eq. (5) build upon the image degradation process and the image priors, and they can be divided into two main categories: model-based optimization methods and convolutional neural network (CNN)-based methods. The variational denoising methods discussed above belong to model-based optimization schemes, which find optimal solutions to reconstruct the denoised image. However, such methods usually involve time-consuming iterative inference. In contrast, the CNN-based denoising methods attempt to learn a mapping function by optimizing a loss function on a training set that contains degraded-clean image pairs [99, 100].

Recently, CNN-based methods have been developed rapidly and have performed well in many low-level computer vision tasks [101, 102]. The use of a CNN for image denoising can be traced back to ref. [103], where a five-layer network was developed. In recent years, many CNN-based denoising methods have been proposed [99, 104,105,106,107,108]. Compared to that of ref. [103], the performance of these methods has been greatly improved. Furthermore, CNN-based denoising methods can be divided into two categories: multi-layer perceptron (MLP) models and deep learning methods.

MLP models

MLP-based image denoising models include auto-encoders proposed by Vincent et al. [104] and Xie et al. [105]. Chen et al. [99] proposed a feed-forward deep network called the trainable non-linear reaction diffusion (TNRD) model, which achieved a better denoising effect. This category of methods has several advantages. First, these methods work efficiently owing to fewer inference steps. Moreover, because optimization algorithms [77] have the ability to derive the discriminative architecture, these methods have better interpretability. Nevertheless, interpretability can come at the cost of performance; for example, the MAP model [106] restricts the learned priors and inference procedure.

Deep learning-based denoising methods

The state-of-the-art deep learning denoising methods are typically based on CNNs. The general model for deep learning-based denoising methods is formulated as

\[ \min_{\Theta}\ \mathrm{loss}\left(\hat{x},x\right),\quad \mathrm{s.t.}\ \hat{x}=F\left(y;\Theta \right) \tag{17} \]

where F(⋅) denotes a CNN with parameter set Θ, and loss(⋅) denotes the loss function, which measures the proximity between the denoised image \( \hat{x} \) and the ground-truth x. Owing to their outstanding denoising ability, deep learning-based denoising methods have attracted considerable attention.

Zhang et al. [106] introduced residual learning and batch normalization into image denoising for the first time; they also proposed feed-forward denoising CNNs (DnCNNs). The aim of the DnCNN model is to learn a function \( \hat{x}=F\left(y;{\Theta}_{\sigma}\right) \) that maps between y and \( \hat{x} \). The parameters \( \Theta_{\sigma} \) are trained for noisy images under a fixed noise level σ. There are two main characteristics of DnCNNs: the model applies a residual learning formulation to learn a mapping function, and it combines it with batch normalization to accelerate the training procedure while improving the denoising results. Specifically, it turns out that residual learning and batch normalization can benefit each other, and their integration is effective in speeding up the training and boosting denoising performance. Although a trained DnCNN can also handle compression and interpolation errors, a model trained under a given σ is not suitable for other noise levels.

When the noise level σ is unknown, the denoising method should enable the user to adaptively make a trade-off between noise suppression and texture protection. The fast and flexible denoising convolutional neural network (FFDNet) [107] was introduced to satisfy these desirable characteristics. In particular, FFDNet can be modeled as \( \hat{x}=F\left(y,\mathrm{M};\Theta \right) \) (M denotes a noise level map), which is a main contribution. For FFDNet, M is an input, while the parameter set Θ is invariant to the noise level. Another major contribution is that FFDNet acts on down-sampled sub-images, which speeds up the training and testing and also expands the receptive field. Thus, FFDNet is quite flexible with respect to different noise levels.
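
The two input-side ideas, down-sampled sub-images and a noise level map, can be illustrated without any network. The following Python/NumPy sketch prepares such an input for a grayscale image; the function names, the 2 × 2 down-sampling factor, and the uniform noise level map are assumptions made for the example, and the CNN F itself is not shown.

```python
import numpy as np

def ffdnet_input(y, sigma):
    """Split y (H x W, both even) into 4 sub-images and append a noise level map."""
    h, w = y.shape
    # Reversible 2x2 down-sampling: sub-image (a, b) takes pixels y[a::2, b::2].
    subs = [y[a::2, b::2] for a in (0, 1) for b in (0, 1)]
    # Uniform noise level map M at the sub-image resolution.
    noise_map = np.full((h // 2, w // 2), float(sigma))
    return np.stack(subs + [noise_map], axis=0)  # shape (5, H/2, W/2)

def merge_subimages(subs):
    """Inverse of the down-sampling step: reassemble 4 sub-images into H x W."""
    _, h2, w2 = subs.shape
    out = np.empty((2 * h2, 2 * w2), dtype=subs.dtype)
    for idx, (a, b) in enumerate((a, b) for a in (0, 1) for b in (0, 1)):
        out[a::2, b::2] = subs[idx]
    return out
```

Because the noise level enters as data rather than through the weights, the same parameter set can be applied with different values of `sigma`, or with a spatially varying map, at test time.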

Although this method is effective and has a short running time, the time complexity of the learning process is very high. The development of CNN-based denoising methods has enhanced the learning of high-level features by using a hierarchical network.

Experiments

For a comparative study, the existing denoising methods adopt two factors (visual analysis and performance metrics) to analyze the denoising performance.

Currently, there is no mathematical or otherwise specific method for evaluating visual quality. In general, there are three criteria for visual analysis: (1) the degree of visible artifacts, (2) protection of edges, and (3) preservation of textures. For image denoising methods, several performance metrics are adopted to evaluate accuracy, e.g., PSNR and the structural similarity index measure (SSIM) [109].

In this study, all image denoising methods work on noisy images under three different noise levels (standard deviations) σ ∈ {30, 50, 75}. For the test images, we use two datasets for a thorough evaluation: BSD68 [110] and Set12. The BSD68 dataset consists of 68 images from the separate test set of the BSD dataset. The Set12 dataset, which is shown in Fig. 1, is a collection of widely used testing images. The sizes of the first seven images are 256 × 256, and the sizes of the last five images are 512 × 512.

Metrics of denoising performance

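To evaluate the performance of image denoising methods, PSNR and SSIM [109] are used as representative quantitative measurements.

Given a ground truth image x, the PSNR of a denoised image \( \hat{x} \) is defined by

\[ \mathrm{PSNR}\left(x,\hat{x}\right) = 10\cdot {\log}_{10}\left(\frac{255^2}{\frac{1}{N}{\left\Vert x-\hat{x}\right\Vert}_2^2}\right) \tag{18} \]

where N is the number of pixels and 255 is the peak intensity, assuming 8-bit images. In addition, the SSIM index is calculated by

\[ \mathrm{SSIM}\left(x,\hat{x}\right) = \frac{\left(2{\mu}_x{\mu}_{\hat{x}}+{C}_1\right)\left(2{\sigma}_{x\hat{x}}+{C}_2\right)}{\left({\mu}_x^2+{\mu}_{\hat{x}}^2+{C}_1\right)\left({\sigma}_x^2+{\sigma}_{\hat{x}}^2+{C}_2\right)} \tag{19} \]

where \( {\mu}_x \) and \( {\mu}_{\hat{x}} \) are the means and \( {\sigma}_x^2 \) and \( {\sigma}_{\hat{x}}^2 \) are the variances of x and \( \hat{x} \), respectively, \( {\sigma}_{x\hat{x}} \) is the covariance between x and \( \hat{x} \), and \( C_1 \) and \( C_2 \) are constant values used to avoid instability. Because quantitative measurements cannot reflect the visual quality perfectly, visual quality comparisons on a set of images are also necessary. Besides the noise removal effect, edge and texture preservation is vital for evaluating a denoising method.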
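
Both metrics are straightforward to compute. The following Python/NumPy sketch is illustrative: the function names are hypothetical, the peak value 255 assumes 8-bit images, and the constants `k1 = 0.01` and `k2 = 0.03` are commonly used defaults rather than values taken from this paper. The SSIM here is evaluated once over the whole image, whereas standard implementations average it over local windows, so the numbers are not directly comparable to those reported below.

```python
import numpy as np

def psnr(x, x_hat, peak=255.0):
    """Peak signal-to-noise ratio in decibels."""
    mse = np.mean((x.astype(float) - x_hat.astype(float)) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

def global_ssim(x, x_hat, peak=255.0, k1=0.01, k2=0.03):
    """SSIM computed once over the whole image (no sliding window)."""
    x, x_hat = x.astype(float), x_hat.astype(float)
    c1, c2 = (k1 * peak) ** 2, (k2 * peak) ** 2
    mu_x, mu_y = x.mean(), x_hat.mean()
    var_x, var_y = x.var(), x_hat.var()
    cov = np.mean((x - mu_x) * (x_hat - mu_y))
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))
```

A uniform error of one gray level gives a mean squared error of 1 and therefore a PSNR of about 48.13 dB, which is a convenient sanity check.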

Comparison methods

A comprehensive evaluation is conducted on several state-of-the-art methods, including Wiener filtering [16], Bilateral filtering [10], PCA method [87], Wavelet transform method [89], BM3D [55], TV-based regularization [28], NLM [38], R-NL [56], NCSR model [66], LRA_SVD [78], WNNM [58], DnCNN [106], and FFDNet [107]. Among them, the first five are all filtering methods, while the last two are CNN-based methods. The remaining algorithms are variational denoising methods.

In our experiments, the code and implementations provided by the original authors are used. All the source codes are run on an Intel Core i5–4570 CPU 3.20 GHz with 16 GB memory. The core part of the BM3D calculation is implemented with a compiled C++ mex-function and is performed in parallel, while the other methods are all conducted using MATLAB.

Comparison of filtering methods and variational denoising methods

We first present experimental results of image denoising on the 12 test images from the Set12 dataset. Figures 2 and 3 show the denoising comparison results of the filtering methods and the variational denoising methods, respectively.

Fig. 2 Visual comparisons of denoising results on Lena image corrupted by additive white Gaussian noise with standard deviation 30: (a) Wiener filtering [16] (PSNR = 27.81 dB; SSIM = 0.707); (b) Bilateral filtering [10] (PSNR = 27.88 dB; SSIM = 0.712); (c) PCA method [87] (PSNR = 26.68 dB; SSIM = 0.596); (d) Wavelet transform domain method [89] (PSNR = 21.74 dB; SSIM = 0.316); (e) Collaborative filtering: BM3D [55] (PSNR = 31.26 dB; SSIM = 0.845)

Fig. 3 Visual comparisons of denoising results on Boat image corrupted by additive white Gaussian noise with standard deviation 50: (a) TV-based regularization [28] (PSNR = 22.95 dB; SSIM = 0.456); (b) NLM [38] (PSNR = 24.63 dB; SSIM = 0.589); (c) R-NL [56] (PSNR = 25.42 dB; SSIM = 0.647); (d) NCSR model [66] (PSNR = 26.48 dB; SSIM = 0.689); (e) LRA_SVD [78] (PSNR = 26.65 dB; SSIM = 0.684); (f) WNNM [58] (PSNR = 26.97 dB; SSIM = 0.708)

From Fig. 2, one can see that the spatial filters (Wiener filtering [16] and bilateral filtering [10]) denoise the image better than the transform domain filtering methods (PCA method [87] and wavelet transform domain method [89]). However, the spatial filters eliminate high-frequency noise at the expense of blurring fine details and sharp edges. Collaborative filtering (BM3D) [55] shows strong potential for both noise reduction and edge protection.

In Fig. 3, the visual evaluation shows that the denoising result of the TV-based regularization [28] smooths the textures and generates artifacts. Although the R-NL [56] and NLM [38] methods perform better, these two methods have difficulty restoring tiny structures. Meanwhile, we find that the representative low-rank-based methods (WNNM [58], LRA_SVD [78]) and the sparse coding scheme NCSR [66] produce better results in homogeneous regions because the underlying clean patches share similar features, so they can be recovered by solving a low-rank approximation or sparse coding problem.
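The low-rank argument can be sketched as follows: if similar patches are vectorized and stacked as the columns of a matrix, the clean matrix has low rank, and shrinking the singular values of the noisy matrix removes much of the noise. The example below applies uniform singular value soft-thresholding, which is the proximal operator of the nuclear norm (cf. [81]). It is not an implementation of WNNM [58] or LRA_SVD [78], both of which use more elaborate schemes; the function name `svt_denoise`, the synthetic rank-1 patch group, and the threshold rule are assumptions made for illustration.

```python
import numpy as np


def svt_denoise(patch_matrix, tau):
    """Soft-threshold the singular values of `patch_matrix` by `tau`.

    Columns of `patch_matrix` are vectorized patches assumed to be similar.
    The result minimizes 0.5 * ||X - Y||_F^2 + tau * ||X||_* over X.
    """
    u, s, vt = np.linalg.svd(patch_matrix, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)
    return (u * s_shrunk) @ vt


# Hypothetical group of 40 similar 8x8 patches: one base patch with
# slightly different gains, so the clean 64x40 matrix has rank 1.
rng = np.random.default_rng(0)
base = rng.uniform(0.0, 255.0, size=64)
gains = rng.uniform(0.8, 1.2, size=40)
clean = np.outer(base, gains)

sigma = 20.0
noisy = clean + rng.normal(0.0, sigma, size=clean.shape)

# Assumed threshold: roughly the largest singular value of a pure-noise
# matrix of this size, sigma * (sqrt(rows) + sqrt(cols)).
tau = sigma * (np.sqrt(clean.shape[0]) + np.sqrt(clean.shape[1]))
denoised = svt_denoise(noisy, tau)

err_noisy = np.linalg.norm(noisy - clean)
err_denoised = np.linalg.norm(denoised - clean)
print(f"Frobenius error: noisy = {err_noisy:.0f}, denoised = {err_denoised:.0f}")
```

The threshold discards most singular values that are attributable to noise alone while keeping the dominant component shared by the patches. Weighted variants such as WNNM assign different thresholds to different singular values rather than a single `tau`.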

Comparison of CNN-based denoising methods

Here, we compare the denoising results of the CNN-based methods (DnCNN [106] and FFDNet [107]) with those of several current effective image denoising methods, including BM3D [55] and WNNM [58]. To the best of our knowledge, BM3D has been the most popular denoising method over recent years, and WNNM is a successful scheme that has been proposed recently.

Table 1 reports the PSNR results on the BSD68 dataset. From Table 1, the following observations can be made. First, FFDNet [107] outperforms BM3D [55] by a large margin and outperforms WNNM [58] by approximately 0.2 dB for a wide range of noise levels. Secondly, FFDNet is slightly inferior to DnCNN [106] when the noise level is low (e.g., σ ≤ 25), but it gradually outperforms DnCNN as the noise level increases (e.g., σ > 25).

In Fig. 4, we can see that the details of the antennas and contour areas are difficult to recover. BM3D [55] and WNNM [58] blur the fine textures, whereas the other two methods restore more textures. This is because Monarch has many repetitive structures, which can be effectively exploited by NSS. Moreover, the contour edges of these regions are much sharper and look more natural. Overall, FFDNet [107] produces the best perceptual quality of denoised images.

Conclusions

As the complexity and requirements of image denoising have increased, research in this field remains in high demand. In this paper, we have introduced recent developments in several image denoising methods and discussed their merits and drawbacks. The rise of NLM has displaced the traditional local denoising model and created a new theoretical branch, leading to significant advances in image denoising methods, including sparse representation, low-rank, and CNN-based (more specifically, deep learning-based) denoising methods. Although image sparsity and low-rank priors have been widely used in recent years, CNN-based methods, which have proved effective, have grown rapidly over the same period.

Despite the many in-depth studies on removing AWGN, few have considered real image denoising. The major obstacle is the complexity of real noise, which is far more complicated than AWGN. In this situation, the thorough evaluation of a denoiser is a difficult task. The in-camera pipeline contains several components (e.g., white balance, color demosaicing, noise reduction, color transform, and compression). The output image quality is also affected by external and internal conditions, such as illumination, CCD/CMOS sensors, and camera shake.

Although deep learning is developing rapidly, it is not necessarily an effective way to solve the denoising problem. The main reason for this is that real-world denoising processes lack image pairs for training. To the best of our knowledge, the existing denoising methods are all trained by simulated noisy data generated by adding AWGN to clean images. Nevertheless, for the real-world denoising process, we find that the CNNs trained by such simulated data are not sufficiently effective.
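The simulated training data referred to here can be sketched as follows: clean patches are cropped from clean images and paired with copies corrupted by synthetic AWGN. The sketch is a generic illustration and not the data pipeline of DnCNN [106] or FFDNet [107]; the function name `make_training_pairs`, the patch size, the noise level range, and the number of pairs per image are hypothetical.

```python
import numpy as np


def make_training_pairs(clean_images, sigma_range=(0.0, 75.0),
                        patch_size=40, pairs_per_image=4, seed=0):
    """Build (noisy, clean) patch pairs by adding synthetic AWGN.

    `clean_images` is a list of 2-D uint8 arrays. Noise levels are drawn
    uniformly from `sigma_range` (8-bit scale); patches are scaled to [0, 1].
    All default values are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    noisy_patches, clean_patches, sigmas = [], [], []
    for img in clean_images:
        img = img.astype(np.float64) / 255.0
        h, w = img.shape
        for _ in range(pairs_per_image):
            top = rng.integers(0, h - patch_size + 1)
            left = rng.integers(0, w - patch_size + 1)
            clean = img[top:top + patch_size, left:left + patch_size]
            sigma = rng.uniform(*sigma_range)
            noise = rng.normal(0.0, sigma / 255.0, size=clean.shape)
            noisy_patches.append(clean + noise)
            clean_patches.append(clean)
            sigmas.append(sigma)
    return np.stack(noisy_patches), np.stack(clean_patches), np.array(sigmas)


# Two hypothetical "clean" images: a flat gray image and a horizontal ramp.
images = [
    np.full((64, 64), 128, dtype=np.uint8),
    np.tile(np.arange(64, dtype=np.uint8) * 4, (64, 1)),
]
noisy, clean, sigmas = make_training_pairs(images)
print(noisy.shape, clean.shape, sigmas.round(1))
```

Every pair produced this way contains noise that is independent of the signal and spatially uniform. Real camera noise does not generally satisfy these assumptions, which is one reason why networks trained on such pairs may not transfer well to real photographs.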

In summary, this paper aims to offer an overview of the available denoising methods. Since different types of noise require different denoising methods, the analysis of noise can be useful in developing novel denoising schemes. For future work, we must first explore how to deal with other types of noise, especially those existing in real life. Secondly, training deep models without using image pairs is still an open problem. Besides, the methodology of image denoising can also be expanded to other applications [111, 112].

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- AWGN: Additive white Gaussian noise
- BM3D: Block-matching and 3D filtering
- CNN: Convolutional neural network
- DnCNN: Feed-forward denoising convolutional neural network
- FFDNet: Fast and flexible denoising convolutional neural network
- ICA: Independent component analysis
- K-SVD: K-singular value decomposition
- MAP: Maximum a posteriori
- MLP: Multi-layer perceptron model
- NCSR: Non-local centralized sparse representation
- NLM: Non-local means
- NNM: Nuclear norm minimization
- NP: Non-deterministic polynomial
- NSS: Non-local self-similarity
- PCA: Principal component analysis
- PSNR: Peak signal-to-noise ratio
- SNR: Signal-to-noise ratio
- SSIM: Structural similarity index measure
- TV: Total variation
- WNNM: Weighted nuclear norm minimization

References

Motwani MC, Gadiya MC, Motwani RC, Harris FC Jr (2004) Survey of image denoising techniques. In: Abstracts of GSPX. Santa Clara Convention Center, Santa Clara, pp 27–30

Jain P, Tyagi V (2016) A survey of edge-preserving image denoising methods. Inf Syst Front 18(1):159–170. https://doi.org/10.1007/s10796-014-9527-0

Diwakar M, Kumar M (2018) A review on CT image noise and its denoising. Biomed Signal Process Control 42:73–88. https://doi.org/10.1016/j.bspc.2018.01.010

Milanfar P (2013) A tour of modern image filtering: new insights and methods, both practical and theoretical. IEEE Signal Process Mag 30(1):106–128. https://doi.org/10.1109/MSP.2011.2179329

Donoho DL, Johnstone IM (1994) Ideal spatial adaptation by wavelet shrinkage. Biometrika 81(3):425–455. https://doi.org/10.1093/biomet/81.3.425

Shin DH, Park RH, Yang S, Jung JH (2005) Block-based noise estimation using adaptive gaussian filtering. IEEE Trans Consum Electron 51(1):218–226. https://doi.org/10.1109/TCE.2005.1405723

Liu W, Lin WS (2013) Additive white Gaussian noise level estimation in SVD domain for images. IEEE Trans Image Process 22(3):872–883. https://doi.org/10.1109/TIP.2012.2219544

Li XL, Hu YT, Gao XB, Tao DC, Ning BJ (2010) A multi-frame image super-resolution method. Signal Process 90(2):405–414. https://doi.org/10.1016/j.sigpro.2009.05.028

Wiener N (1949) Extrapolation, interpolation, and smoothing of stationary time series: with engineering applications. MIT Press, Cambridge

Tomasi C, Manduchi R (1998) Bilateral filtering for gray and color images. In: Abstracts of the sixth international conference on computer vision IEEE, Bombay, India, pp 839–846. https://doi.org/10.1109/ICCV.1998.710815

Yang GZ, Burger P, Firmin DN, Underwood SR (1996) Structure adaptive anisotropic image filtering. Image Vis Comput 14(2):135–145. https://doi.org/10.1016/0262-8856(95)01047-5

Takeda H, Farsiu S, Milanfar P (2007) Kernel regression for image processing and reconstruction. IEEE Trans Image Process 16(2):349–366. https://doi.org/10.1109/TIP.2006.888330

Bouboulis P, Slavakis K, Theodoridis S (2010) Adaptive kernel-based image denoising employing semi-parametric regularization. IEEE Trans Image Process 19(6):1465–1479. https://doi.org/10.1109/TIP.2010.2042995

Gonzalez RC, Woods RE (2006) Digital image processing, 3rd edn. Prentice-Hall, Inc, Upper Saddle River

Al-Ameen Z, Al Ameen S, Sulong G (2015) Latest methods of image enhancement and restoration for computed tomography: a concise review. Appl Med Inf 36(1):1–12

Jain AK (1989) Fundamentals of digital image processing. Prentice-Hall, Inc, Upper Saddle River

Benesty J, Chen JD, Huang YT (2010) Study of the widely linear wiener filter for noise reduction. In: Abstracts of IEEE international conference on acoustics, speech and signal processing, IEEE, Dallas, TX, USA, pp 205–208. https://doi.org/10.1109/ICASSP.2010.5496033

Pitas I, Venetsanopoulos AN (1990) Nonlinear digital filters: principles and applications. Kluwer, Boston. https://doi.org/10.1007/978-1-4757-6017-0

Yang RK, Yin L, Gabbouj M, Astola J, Neuvo Y (1995) Optimal weighted median filtering under structural constraints. IEEE Trans Signal Process 43(3):591–604. https://doi.org/10.1109/78.370615

Katsaggelos AK (ed) (2012) Digital image restoration. Springer Publishing Company, Berlin

Tikhonov AN, Arsenin VY (1977) Solutions of ill-posed problems. (trans: John F). Wiley, Washington

Dobson DC, Santosa F (1996) Recovery of blocky images from noisy and blurred data. SIAM J Appl Math 56(4):1181–1198. https://doi.org/10.1137/S003613999427560X

Nikolova M (2000) Local strong homogeneity of a regularized estimator. SIAM J Appl Math 61(2):633–658. https://doi.org/10.1137/S0036139997327794

Perona P, Malik J (1990) Scale-space and edge detection using anisotropic diffusion. IEEE Trans Pattern Anal Mach Intell 12(7):629–639. https://doi.org/10.1109/34.56205

Weickert J (1998) Anisotropic diffusion in image processing. Teubner, Stuttgart

Catté F, Lions PL, Morel JM, Coll T (1992) Image selective smoothing and edge detection by nonlinear diffusion. SIAM J Numer Anal 29(1):182–193. https://doi.org/10.1137/0729012

Esedoḡlu S, Osher SJ (2004) Decomposition of images by the anisotropic rudin-osher-fatemi model. Commun Pure Appl Math 57(12):1609–1626. https://doi.org/10.1002/cpa.20045

Rudin LI, Osher S, Fatemi E (1992) Nonlinear total variation based noise removal algorithms. In: Paper presented at the eleventh annual international conference of the center for nonlinear studies on experimental mathematics: computational issues in nonlinear science. Elsevier North-Holland, Inc, New York, pp 259–268. https://doi.org/10.1016/0167-2789(92)90242-F

Chambolle A, Pock T (2011) A first-order primal-dual algorithm for convex problems with applications to imaging. J Math Imaging Vis 40(1):120–145. https://doi.org/10.1007/s10851-010-0251-1

Rudin LI, Osher S (1994) Total variation based image restoration with free local constraints. In: Abstracts of the 1st international conference on image processing. IEEE, Austin, pp 31–35

Bowers KL, Lund J (1995) Computation and control IV, progress in systems and control theory. Birkhäuser, Boston, pp 323–331. https://doi.org/10.1007/978-1-4612-2574-4

Vogel CR, Oman ME (1996) Iterative methods for total variation denoising. SIAM J Sci Comput 17(1):227–238. https://doi.org/10.1137/0917016

Lou YF, Zeng TY, Osher S, Xin J (2015) A weighted difference of anisotropic and isotropic total variation model for image processing. SIAM J Imaging Sci 8(3):1798–1823. https://doi.org/10.1137/14098435X

Zibulevsky M, Elad M (2010) L1-L2 optimization in signal and image processing. IEEE Signal Process Mag 27(3):76–88. https://doi.org/10.1109/MSP.2010.936023

Hu Y, Jacob M (2012) Higher degree total variation (HDTV) regularization for image recovery. IEEE Trans Image Process 21(5):2559–2571. https://doi.org/10.1109/TIP.2012.2183143

Beck A, Teboulle M (2009) Fast gradient-based algorithms for constrained total variation image denoising and deblurring problems. IEEE Trans Image Process 18(11):2419–2434. https://doi.org/10.1109/TIP.2009.2028250

Gilboa G, Osher S (2009) Nonlocal operators with applications to image processing. SIAM J Multiscale Model Simul 7(3):1005–1028. https://doi.org/10.1137/070698592

Buades A, Coll B, Morel JM (2005) A non-local algorithm for image denoising. In: Abstracts of 2005 IEEE computer society conference on computer vision and pattern recognition. IEEE, San Diego, pp 60–65. https://doi.org/10.1109/CVPR.2005.38

Mahmoudi M, Sapiro G (2005) Fast image and video denoising via nonlocal means of similar neighborhoods. IEEE Signal Process Lett 12(12):839–842. https://doi.org/10.1109/LSP.2005.859509

Coupe P, Yger P, Prima S, Hellier P, Kervrann C, Barillot C (2008) An optimized blockwise nonlocal means denoising filter for 3-d magnetic resonance images. IEEE Trans Med Imaging 27(4):425–441. https://doi.org/10.1109/TMI.2007.906087

Thaipanich T, Oh BT, Wu PH, Xu DR, Kuo CCJ (2010) Improved image denoising with adaptive nonlocal means (ANL-means) algorithm. IEEE Trans Consum Electron 56(4):2623–2630. https://doi.org/10.1109/TCE.2010.5681149

Wang J, Guo YW, Ying YT, Liu YL, Peng QS (2006) Fast non-local algorithm for image denoising. In: Abstracts of 2006 international conference on image processing. IEEE, Atlanta, pp 1429–1432. https://doi.org/10.1109/ICIP.2006.312698

Goossens B, Luong H, Pizurica A (2008) An improved non-local denoising algorithm. In: Abstracts of international workshop local and non-local approximation in image processing. TICSP, Lausanne, p 143

Pang C, Au OC, Dai JJ, Yang W, Zou F (2009) A fast NL-means method in image denoising based on the similarity of spatially sampled pixels. In: Abstracts of 2009 IEEE international workshop on multimedia signal processing. IEEE, Rio De Janeiro, pp 1–4

Tschumperlé D, Brun L (2009) Non-local image smoothing by applying anisotropic diffusion PDE's in the space of patches. In: Abstracts of the 16th IEEE international conference on image processing. IEEE, Cairo, pp 2957–2960. https://doi.org/10.1109/ICIP.2009.5413453

Grewenig S, Zimmer S, Weickert J (2011) Rotationally invariant similarity measures for nonlocal image denoising. J Vis Commun Image Represent 22(2):117–130. https://doi.org/10.1016/j.jvcir.2010.11.001

Fan LW, Li XM, Guo Q, Zhang CM (2018) Nonlocal image denoising using edge-based similarity metric and adaptive parameter selection. Sci China Inf Sci 61(4):049101. https://doi.org/10.1007/s11432-017-9207-9

Kheradmand A, Milanfar P (2014) A general framework for regularized, similarity-based image restoration. IEEE Trans Image Process 23(12):5136–5151. https://doi.org/10.1109/TIP.2014.2362059

Fan LW, Li XM, Fan H, Feng YL, Zhang CM (2018) Adaptive texture-preserving denoising method using gradient histogram and nonlocal self-similarity priors. IEEE Trans Circuits Syst Video Technol. https://doi.org/10.1109/TCSVT.2018.2878794 (in press)

Wei J (2005) Lebesgue anisotropic image denoising. Int J Imaging Syst Technol 15(1):64–73. https://doi.org/10.1002/ima.20039

Kervrann C, Boulanger J (2008) Local adaptivity to variable smoothness for exemplar-based image regularization and representation. Int J Comput Vis 79(1):45–69. https://doi.org/10.1007/s11263-007-0096-2

Lou YF, Favaro P, Soatto S, Bertozzi A (2009) Nonlocal similarity image filtering. In: Abstracts of the 15th international conference on image analysis and processing. ACM, Vietri sul Mare, pp 62–71. https://doi.org/10.1007/978-3-642-04146-4_9

Zimmer S, Didas S, Weickert J (2008) A rotationally invariant block matching strategy improving image denoising with non-local means. In: Abstracts of international workshop on local and non-local approximation in image processing. IEEE, Lausanne, pp 103–113

Yan RM, Shao L, Cvetkovic SD, Klijn J (2012) Improved nonlocal means based on pre-classification and invariant block matching. J Disp Technol 8(4):212–218. https://doi.org/10.1109/JDT.2011.2181487

Dabov K, Foi A, Katkovnik V, Egiazarian K (2007) Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans Image Process 16(8):2080–2095. https://doi.org/10.1109/TIP.2007.901238

Sutour C, Deledalle CA, Aujol JF (2014) Adaptive regularization of the nl-means: application to image and video denoising. IEEE Trans Image Process 23(8):3506–3521. https://doi.org/10.1109/TIP.2014.2329448

Zoran D, Weiss Y (2011) From learning models of natural image patches to whole image restoration. In: Abstracts of 2011 international conference on computer vision. IEEE, Barcelona, pp 479–486. https://doi.org/10.1109/ICCV.2011.6126278

Gu SH, Xie Q, Meng DY, Zuo WM, Feng XC, Zhang L (2017) Weighted nuclear norm minimization and its applications to low level vision. Int J Comput Vis 121(2):183–208. https://doi.org/10.1007/s11263-016-0930-5

Gu SH, Zhang L, Zuo WM, Feng XC (2014) Weighted nuclear norm minimization with application to image denoising. In: Abstracts of 2014 IEEE conference on computer vision and pattern recognition. IEEE, Columbus, pp 2862–2869. https://doi.org/10.1109/CVPR.2014.366

Zhang KB, Gao XB, Tao DC, Li XL (2012) Multi-scale dictionary for single image super-resolution. In: Abstracts of 2012 IEEE conference on computer vision and pattern recognition. IEEE, Providence, pp 1114–1121. https://doi.org/10.1109/CVPR.2012.6247791

Aharon M, Elad M, Bruckstein A (2006) K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans Signal Process 54(11):4311–4322. https://doi.org/10.1109/TSP.2006.881199

Elad M, Aharon M (2006) Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans Image Process 15(12):3736–3745. https://doi.org/10.1109/TIP.2006.881969

Zhang L, Zuo WM (2017) Image restoration: from sparse and low-rank priors to deep priors [lecture notes]. IEEE Signal Process Mag 34(5):172–179. https://doi.org/10.1109/MSP.2017.2717489

Mairal J, Bach F, Ponce J, Sapiro G, Zisserman A (2009) Non-local sparse models for image restoration. In: Abstracts of the 12th international conference on computer vision. IEEE, Kyoto, pp 2272–2279. https://doi.org/10.1109/ICCV.2009.5459452

Zhang L, Dong WS, Zhang D, Shi GM (2010) Two-stage image denoising by principal component analysis with local pixel grouping. Pattern Recogn 43(4):1531–1549. https://doi.org/10.1016/j.patcog.2009.09.023

Dong WS, Zhang L, Shi GM, Li X (2013) Nonlocally centralized sparse representation for image restoration. IEEE Trans Image Process 22(4):1620–1630. https://doi.org/10.1109/TIP.2012.2235847

Markovsky I (2011) Low rank approximation: algorithms, implementation, applications. Springer Publishing Company, Berlin

Liu GC, Lin ZC, Yu Y (2010) Robust subspace segmentation by low-rank representation. In: Abstracts of the 27th international conference on machine learning. ACM, Haifa, pp 663–670

Liu T (2010) The nonlocal means denoising research based on wavelet domain. Dissertation, Xidian University

Ji H, Liu CQ, Shen ZW, Xu YH (2010) Robust video denoising using low rank matrix completion. In: Abstracts of 2010 IEEE computer vision and pattern recognition. IEEE, San Francisco, pp 1791–1798. https://doi.org/10.1109/CVPR.2010.5539849

Ji H, Huang SB, Shen ZW, Xu YH (2011) Robust video restoration by joint sparse and low rank matrix approximation. SIAM J Imaging Sci 4(4):1122–1142. https://doi.org/10.1137/100817206

Liu XY, Ma J, Zhang XM, Hu ZZ (2014) Image denoising of low-rank matrix recovery via joint frobenius norm. J Image Graph 19(4):502–511

Yuan Z, Lin XB, Wang XN (2013) The LSE model to denoise mixed noise in images. J Signal Process 29(10):1329–1335

Dong WS, Shi GM, Li X (2013) Nonlocal image restoration with bilateral variance estimation: a low-rank approach. IEEE Trans Image Process 22(2):700–711. https://doi.org/10.1109/TIP.2012.2221729

Eriksson A, van den Hengel A (2012) Efficient computation of robust weighted low-rank matrix approximations using the L1 norm. IEEE Trans Pattern Anal Mach Intell 34(9):1681–1690. https://doi.org/10.1109/TPAMI.2012.116

Liu RS, Lin ZC, De la Torre F (2012) Fixed-rank representation for unsupervised visual learning. In: Abstracts of 2012 IEEE conference on computer vision and pattern recognition. IEEE, Providence, pp 598–605

Bertalmío M (2018) Denoising of photographic images and video: fundamentals, open challenges and new trends. Springer Publishing Company, Berlin. https://doi.org/10.1007/978-3-319-96029-6

Guo Q, Zhang CM, Zhang YF, Liu H (2016) An efficient SVD-based method for image denoising. IEEE Trans Circuits Syst Video Technol 26:868–880. https://doi.org/10.1109/TCSVT.2015.2416631

Liu GC, Lin ZC, Yan SC, Sun J, Yu Y, Ma Y (2013) Robust recovery of subspace structures by low-rank representation. IEEE Trans Pattern Anal Mach Intell 35(1):171–184. https://doi.org/10.1109/TPAMI.2012.88

Cai JF, Candès EJ, Shen ZW (2010) A singular value thresholding algorithm for matrix completion. SIAM J Optim 20(4):1956–1982. https://doi.org/10.1137/080738970

Hou JH (2007) Research on image denoising approach based on wavelet and its statistical characteristics. Dissertation, Huazhong University of Science and Technology

Jiao LC, Hou B, Wang S, Liu F (2008) Image multiscale geometric analysis: theory and applications. Xidian University press, Xi'an

Zhang L, Bao P, Wu XL (2005) Multiscale lmmse-based image denoising with optimal wavelet selection. IEEE Trans Circuits Syst Video Technol 15(4):469–481. https://doi.org/10.1109/TCSVT.2005.844456

Jain P, Tyagi V (2013) Spatial and frequency domain filters for restoration of noisy images. IETE J Educ 54(2):108–116. https://doi.org/10.1080/09747338.2013.10876113

Jung A (2001) An introduction to a new data analysis tool: independent component analysis. In: Proceedings of workshop GK "nonlinearity". IEEE, Regensburg, pp 127–132

Hyvarinen A, Oja E, Hoyer P, Hurri J (1998) Image feature extraction by sparse coding and independent component analysis. In: Abstracts of the 14th international conference on pattern recognition. IEEE, Brisbane, pp 1268–1273. https://doi.org/10.1109/ICPR.1998.711932

Muresan DD, Parks TW (2003) Adaptive principal components and image denoising. In: Abstracts of 2003 international conference on image processing. IEEE, Barcelona, pp I–101

Hamza AB, Luque-Escamilla PL, Martínez-Aroza J, Román-Roldán R (1999) Removing noise and preserving details with relaxed median filters. J Math Imaging Vis 11(2):161–177. https://doi.org/10.1023/A:1008395514426

Mallat SG (1989) A theory for multiresolution signal decomposition: the wavelet representation. IEEE Trans Pattern Anal Mach Intell 11(7):674–693. https://doi.org/10.1109/34.192463

Choi H, Baraniuk R (1998) Analysis of wavelet-domain wiener filters. In: Abstracts of IEEE-SP international symposium on time-frequency and time-scale analysis. IEEE, Pittsburgh, pp 613–616. https://doi.org/10.1109/TFSA.1998.721499

Combettes PL, Pesquet JC (2004) Wavelet-constrained image restoration. Int J Wavelets Multiresolution Inf Process 2(4):371–389. https://doi.org/10.1142/S0219691304000688

da Silva RD, Minetto R, Schwartz WR, Pedrini H (2013) Adaptive edge-preserving image denoising using wavelet transforms. Pattern Anal Applic 16(4):567–580. https://doi.org/10.1007/s10044-012-0266-x

Malfait M, Roose D (1997) Wavelet-based image denoising using a markov random field a priori model. IEEE Trans Image Process 6(4):549–565. https://doi.org/10.1109/83.563320

Portilla J, Strela V, Wainwright MJ, Simoncelli EP (2003) Image denoising using scale mixtures of gaussians in the wavelet domain. IEEE Trans Image Process 12(11):1338–1351. https://doi.org/10.1109/TIP.2003.818640

Strela V (2001) Denoising via block wiener filtering in wavelet domain. In: Abstracts of the 3rd European congress of mathematics. Birkhäuser, Barcelona, pp 619–625. https://doi.org/10.1007/978-3-0348-8266-8_55

Yao XB (2014) Image denoising research based on non-local sparse models with low-rank matrix decomposition. Dissertation, Xidian University

Dabov K, Foi A, Katkovnik V, Egiazarian K (2009) BM3D image denoising with shape-adaptive principal component analysis. In: Abstracts of signal processing with adaptive sparse structured representations. Inria, Saint Malo

Maggioni M, Katkovnik V, Egiazarian K, Foi A (2013) Nonlocal transform-domain filter for volumetric data denoising and reconstruction. IEEE Trans Image Process 22(1):119–133. https://doi.org/10.1109/TIP.2012.2210725

Chen YY, Pock T (2017) Trainable nonlinear reaction diffusion: a flexible framework for fast and effective image restoration. IEEE Trans Pattern Anal Mach Intell 39(6):1256–1272. https://doi.org/10.1109/TPAMI.2016.2596743

Schmidt U, Roth S (2014) Shrinkage fields for effective image restoration. In: Abstracts of 2014 IEEE conference on computer vision and pattern recognition. IEEE, Columbus, pp 2774–2781. https://doi.org/10.1109/CVPR.2014.349

Kim J, Lee JK, Lee KM (2016) Accurate image super-resolution using very deep convolutional networks. In: Abstracts of 2016 IEEE conference on computer vision and pattern recognition. IEEE, Las Vegas, pp 1646–1654. https://doi.org/10.1109/CVPR.2016.182

Nah S, Kim TH, Lee KM (2017) Deep multi-scale convolutional neural network for dynamic scene deblurring. In: Abstracts of 2017 IEEE conference on computer vision and pattern recognition. IEEE, Honolulu, pp 257–265. https://doi.org/10.1109/CVPR.2017.35

Jain V, Seung HS (2008) Natural image denoising with convolutional networks. In: Abstracts of the 21st international conference on neural information processing systems. ACM, Vancouver, pp 769–776

Vincent P, Larochelle H, Bengio Y, Manzagol PA (2008) Extracting and composing robust features with denoising autoencoders. In: Abstracts of the 25th international conference on machine learning. ACM, Helsinki, pp 1096–1103. https://doi.org/10.1145/1390156.1390294

Xie JY, Xu LL, Chen EH (2012) Image denoising and inpainting with deep neural networks. In: Abstracts of the 25th international conference on neural information processing systems - volume 1. ACM, Lake Tahoe, pp 341–349

Zhang K, Zuo WM, Chen YJ, Meng DY, Zhang L (2017) Beyond a Gaussian denoiser: residual learning of deep CNN for image denoising. IEEE Trans Image Process 26(7):3142–3155. https://doi.org/10.1109/TIP.2017.2662206

Zhang K, Zuo WM, Zhang L (2018) FFDNet: toward a fast and flexible solution for CNN-based image denoising. IEEE Trans Image Process 27(9):4608–4622. https://doi.org/10.1109/TIP.2018.2839891

Cruz C, Foi A, Katkovnik V, Egiazarian K (2018) Nonlocality-reinforced convolutional neural networks for image denoising. IEEE Signal Process Lett 25(8):1216–1220. https://doi.org/10.1109/LSP.2018.2850222

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612. https://doi.org/10.1109/TIP.2003.819861

Roth S, Black MJ (2005) Fields of experts: A framework for learning image prior. In: Paper presented at the IEEE computer society conference on computer vision and pattern recognition, IEEE Computer Society, Washington, 20–26 June, 2005. https://doi.org/10.1109/CVPR.2005.160

Liu SG, Wang XJ, Peng QS (2011) Multi-toning image adjustment. Comput Aided Draft Des Manuf 21(2):62–72

Yu WW, He F, Xi P (2009) A method of digitally reconstructed radiographs based on medical CT images. Comput Aided Draft Des Manuf 19(2):49–55

Acknowledgments

This work is supported by the NSFC Joint Fund with Zhejiang Integration of Informatization and Industrialization under Key Project (No. U1609218), the National Natural Science Foundation of China (No. 61602277), and the Shandong Provincial Natural Science Foundation of China (No. ZR2016FQ12).

Funding

Not applicable.

Author information

Contributions

All authors read and approved the final manuscript.

Authors’ information

Linwei Fan is currently a Ph.D. candidate in the School of Computer Science and Technology, Shandong University, and a member of the Shandong Province Key Lab of Digital Media Technology, Shandong University of Finance and Economics. Her research interests include computer graphics and image processing.

Fan Zhang is currently an associate professor at the Shandong Co-Innovation Center of Future Intelligent Computing, Shandong Technology and Business University. His research interests include computer graphics and image processing.

Hui Fan is currently a professor at the Shandong Co-Innovation Center of Future Intelligent Computing, Shandong Technology and Business University. His research interests include computer graphics, image processing, and virtual reality.

Caiming Zhang is currently a professor with the School of Software, Shandong University. His research interests include computer aided geometric design, computer graphics, information visualization, and medical image processing.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Fan, L., Zhang, F., Fan, H. et al. Brief review of image denoising techniques. Vis. Comput. Ind. Biomed. Art 2, 7 (2019). https://doi.org/10.1186/s42492-019-0016-7

DOI: https://doi.org/10.1186/s42492-019-0016-7