Abstract

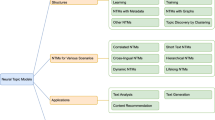

Topic modeling techniques are widely used to uncover the dominant themes hidden in an unstructured document collection. Although these techniques originated in the probabilistic analysis of word distributions, many deep learning approaches have been adopted recently. In this paper, we propose a novel neural network-based architecture that produces distributed representations of topics to capture topical themes in a dataset. Unlike many state-of-the-art techniques for generating distributed representations of words and documents, which train directly on neighboring words, we leverage the output of a sophisticated deep neural network to estimate the topic labels of each document. The two networks, one for topic modeling and one for generating distributed representations, are trained concurrently in a cascaded style, achieving better runtime without sacrificing the quality of the topics. Empirical studies reported in the paper show that the distributed representations of topics capture intuitive themes using fewer dimensions than conventional topic modeling approaches.

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Shamanta, D., Naim, S.M., Saraf, P., Ramakrishnan, N., Hossain, M.S. (2015). Concurrent Inference of Topic Models and Distributed Vector Representations. In: Appice, A., Rodrigues, P., Santos Costa, V., Gama, J., Jorge, A., Soares, C. (eds) Machine Learning and Knowledge Discovery in Databases. ECML PKDD 2015. Lecture Notes in Computer Science, vol 9285. Springer, Cham. https://doi.org/10.1007/978-3-319-23525-7_27

DOI: https://doi.org/10.1007/978-3-319-23525-7_27

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-23524-0

Online ISBN: 978-3-319-23525-7

eBook Packages: Computer Science, Computer Science (R0)