Abstract

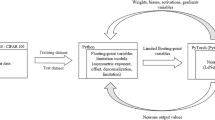

Existing Continual Learning (CL) solutions only partially address the power, memory and computation constraints that deep learning models face when deployed on low-power embedded CPUs. In this paper, we propose a CL solution that combines recent advances in the CL field with the efficiency of Binary Neural Networks (BNNs), which use 1 bit for weights and activations to execute deep learning models efficiently. We propose a hybrid quantization of CWR* (an effective CL approach) that treats the forward and backward passes differently, retaining more precision during the gradient update step while minimizing the latency overhead. The choice of a binary network as the backbone is essential to meet the constraints of low-power devices and, to the best of the authors' knowledge, this is the first attempt to demonstrate on-device learning with BNNs. The experimental validation confirms the validity and suitability of the proposed method.
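To make the "1 bit for weights and activations" claim concrete, the following is a minimal sketch of the common BNN convention, not the authors' implementation and not their hybrid CWR* quantization scheme. It assumes sign binarization (zero maps to +1), packs {-1, +1} vectors into integer words so that a dot product reduces to XOR plus popcount, and, in the backward pass, updates real-valued latent weights through a straight-through estimator (STE). All function names and the clipping threshold of 1.0 are illustrative assumptions.

```python
from typing import List


def binarize(values: List[float]) -> List[int]:
    """Sign binarization: map each real value to +1 or -1 (zero maps to +1)."""
    return [1 if v >= 0 else -1 for v in values]


def pack_bits(signs: List[int]) -> int:
    """Pack a {-1, +1} vector into one integer word: bit i = 1 encodes +1, bit i = 0 encodes -1."""
    word = 0
    for i, s in enumerate(signs):
        if s == 1:
            word |= 1 << i
    return word


def binary_dot(a_word: int, w_word: int, n: int) -> int:
    """Dot product of two packed {-1, +1} vectors of length n.

    Equal bits contribute +1 and differing bits contribute -1, so
    dot = (n - mismatches) - mismatches = n - 2 * popcount(a XOR w).
    """
    mismatches = bin(a_word ^ w_word).count("1")
    return n - 2 * mismatches


def ste_grad(latent_w: List[float], grad_binary: List[float], clip: float = 1.0) -> List[float]:
    """Straight-through estimator: treat d sign(w)/dw as 1 where |w| <= clip, else 0."""
    return [g if abs(w) <= clip else 0.0 for w, g in zip(latent_w, grad_binary)]


def sgd_step(latent_w: List[float], grad_binary: List[float], lr: float = 0.1) -> List[float]:
    """Update the real-valued latent weights; the forward pass only sees binarize(latent_w)."""
    g = ste_grad(latent_w, grad_binary)
    return [w - lr * gi for w, gi in zip(latent_w, g)]


if __name__ == "__main__":
    a = [0.3, -1.2, 0.8, -0.1]  # activations -> [+1, -1, +1, -1]
    w = [0.5, 0.4, -0.7, -2.0]  # latent weights -> [+1, +1, -1, -1]
    n = len(a)
    print(binary_dot(pack_bits(binarize(a)), pack_bits(binarize(w)), n))  # 0
    print(sum(x * y for x, y in zip(binarize(a), binarize(w))))  # 0, same result
```

On real hardware the XOR and popcount operate on 32- or 64-bit words at a time, and storing binary weights takes 32 times less memory than 32-bit floats; the Python integers here only model the arithmetic. Keeping higher-precision latent weights for the update while running the forward pass in 1 bit is one simple way to treat the two passes differently.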

Notes

- 1.

Note that the output \(a_{i+1}\) of level i corresponds to the input of level \(i+1\).

- 2.

- 3.

- 4.

See the https://github.com/liuzechun/Bi-Real-net repository for full details.

- 5.

See the https://github.com/liuzechun/ReActNet repository for full details.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Vorabbi, L., Maltoni, D., Santi, S. (2024). On-Device Learning with Binary Neural Networks. In: Foresti, G.L., Fusiello, A., Hancock, E. (eds) Image Analysis and Processing - ICIAP 2023 Workshops. ICIAP 2023. Lecture Notes in Computer Science, vol 14365. Springer, Cham. https://doi.org/10.1007/978-3-031-51023-6_4

DOI: https://doi.org/10.1007/978-3-031-51023-6_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-51022-9

Online ISBN: 978-3-031-51023-6

eBook Packages: Computer Science, Computer Science (R0)