Abstract

In mining projects, the confidence in an estimate is associated with the quantity and quality of the available information. Thus, the closer the data to the targeted location, the smaller the error associated with the estimated value. In the advanced stages of a project (i.e. the pre-feasibility and feasibility phases), it is usual to take samples derived from drillings. Since sampling and chemical analysis involve high costs, it is essential that these costs contribute to a reduction in the uncertainty of estimation. This paper presents a workflow for a case study of a lateritic nickel deposit and proposes a methodology to address the issue of optimising the drilling grid based on uncertainty derived from Gaussian conditional geostatistical simulations. The usefulness of the proposed workflow is demonstrated in terms of saving time and money when selecting a drill hole grid.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Although infill drilling is mandatory to reduce the uncertainty in estimated figures, the drilling grid is frequently selected without reference to any geostatistical criteria to support or optimise the locations of these additional drill holes. It is also important to determine the point at which these additional drillings become irrelevant in terms of reducing the associated error, since in this case, infill drilling will only result in temporal and financial losses.

The use of geostatistics can assist in the construction of models that have more than one variable, which can be estimated using ordinary kriging [1] or any other technique derived from it. However, this procedure does not make it possible to access the correct uncertainty associated with the estimated value, since the variance in the interpolated values is less than the variance of the original data. The limitations related to the use of kriging variance as a measure of uncertainty have been extensively discussed in the literature [2, 3]. The kriging variance considers only the spatial distribution of the samples, and does not take into account their values and local variability [4, p. 189]. Thus, for a given spatial continuity model, the kriging variance is not affected by the original data variance, and there may be equal kriging variances in situations where the data variance is completely different.

This article proposes a methodology for defining the optimal drilling grid in a lateritic nickel deposit based on the measurement of uncertainty, using the technique of conditional geostatistical simulation [5]. This method aims to reproduce the spatial continuity and intrinsic variability of the original data, and to combine multiple equally probable models in order to determine the associated uncertainty in the variables under study, thus enabling the appropriate sampling pattern for a given mineral deposit to be found.

2 Sequential Gaussian Simulation

The first sequential simulation methodology used in this study is sequential Gaussian simulation (SGS). This is an extension of the sequential conditional simulation algorithm, and is based on a Gaussian random function model. According to Pilger [6], the SGS method proposed by Isaaks [7] is characterised by the application of a sequential algorithm to the local univariate conditional distributions resulting from the decomposition of a particular Gaussian multivariate probability density function, controlled by a Gaussian multivariate model characterised by a covariance function C(h).

In the SGS method, the local conditional probability distribution is determined using simple kriging, which defines the mean and variance of the distribution. This method assumes that the distribution is stationary and follows the form of a normal distribution, that is, with a mean of zero and variance of one. The element of interest (Ni in this case) rarely has values following this type of distribution, with the most common being asymmetric distributions with a few extreme values (positive asymmetry). It is therefore necessary to transform the distribution of the original data to a normal distribution [4] to enable sequential Gaussian simulation to be used. A process called data normalisation is used to assign a corresponding value to each original data item in the normal space. After SGS has been applied, it is then necessary to re-transform the normal values to their corresponding values in the original space.

Normalisation of the distribution of the original data is carried out using a transformation based on an increasing monotonic function. This function is called Gaussian anamorphosis [8], and can be written as follows:

where:

z = original data;

ϕ = transformation function;

y = normalised data for the respective value of z.

As described by Rivoirard [8], for each value of Z, the corresponding value in normal space is obtained from the accumulated distribution function of Z values (F(z)) and the accumulated distribution function of a standard normal variable Y(G(y)). Figure 1 shows this transformation. In addition, the mathematical translation of this methodology can be expressed as follows:

Example of transformation of an original distribution into a normalised equivalent (modified from [4])

where:

y = normalised data for the respective value of Z;

G(y) = accumulated distribution function of a standard normal variable y;

F(z) = accumulated distribution function of Z values;

G−1(F(z)) = standard normalised value whose cumulative probability is equal to F(z).

The reverse transformation process consists of transforming the values obtained from SGS in the normal space into their respective values in the original space. This reverse transformation can be performed by the inverse of normalisation; that is, for each value in the normal space (y), a value in the original space (z) is assigned that has the same accumulated probability (F(z) = G(y)).

3 Sequential Indicator Simulation

The second methodology used in this study is sequential indicator simulation (SIS) [9]. SSI is widely used to model categorical variables, and is based on a nonlinear kriging algorithm called indicator kriging [10]. Unlike SGS, this simulation algorithm is classified as nonparametric. Models of categorical variables are commonly created to represent weathering profiles or rock types, and it is during the modelling stage that important decisions are made such as the definition of the volume to be estimated and the stationarity of the data within the modelled domains for the different categorical variables. The models of these categorical variables are usually constructed explicitly, and depend on the interpretation and judgment of the specialist responsible for modelling the deposit. In many cases, there is insufficient data to allow for reliable (explicit) deterministic modelling, and a stochastic modelling algorithm is therefore used to build multiple realisations.

There are some drawbacks associated with SIS; for example, the variograms of the indicators control only the spatial relationship between two points in the model. SIS can also lead to geologically unrealistic or incorrect transitions between the simulated categories, and the cross-correlation between multiple categories is not explicitly controlled.

Despite these criticisms, there are many good reasons to consider SIS, such as the fact that the necessary statistical parameters are easy to infer from limited data. In addition, the algorithm is robust and provides a simple way to transfer uncertainty into categories using the resulting numerical models.

According to Journel [10], an indicator can be defined as follows:

This model uses K different categories that are mutually exclusive and exhaustive; that is, only one category can exist in a particular location.

4 Optimisation of a Drilling Grid

Geostatistical simulation is an excellent tool for assessing the uncertainty in the values of a given attribute or the probability of these values being above a given limit. This is because geostatistical simulation algorithms allow several possible scenarios to be constructed for the distribution of attribute values, i.e. the distribution of possible values at each simulated grid node.

Using geostatistical simulation, Pilger [11] quantified the uncertainty at each location using different uncertainty indices. In this way, each additional drillhole was located according to the values of the uncertainty indices. Pilger et al. [12] added sampling in regions of high uncertainty, after the insertion of each additional piece of information, a new simulation was performed, and the uncertainty indexes were recalculated.

The reduction of uncertainty to a theoretical and operational limit was observed after the addition of each piece of information related to the resources of a coal deposit. According to the authors, the theoretical limit is that above which the addition of new drilling sites becomes ineffective in terms of reducing uncertainty, and the operational limit is related to the cost of an extra drill hole.

Koppe [13] used geostatistical simulation to analyse the efficiency of different configurations of additional samples in terms of reducing uncertainty about a function and the factors that influence this efficiency. In her thesis, Koppe [13] presented the algorithm for the automatic construction of sample configurations and the computational workflow created to speed up the approximation of the uncertainty value on the transfer function obtained for each tested configuration.

5 Case Study

5.1 Methodology

The geometry of the ore body is defined by a non-continuous axis, approximately 18 km along its main direction (N12° E), and 0.9 to 2.4 km perpendicular to this direction (N102° E). The thickness varies from 0.5 to 34 m, with an average of 3.8 m. Due to this geometry, in which the horizontal dimensions are significantly larger than the vertical, the ore body was treated as being 2D.

The technique involves generating 100 simulations of the grade of the nickel, the thickness and the type of ore at a 90% confidence level, i.e. taking values between the 5th and 95th percentiles to measure the dispersion over the average, and hence finding the error for the block with a volume equivalent to three months of production.

5.2 Geostatistical Simulation with Original Database

Using the original drilling database distribution, 100 simulations were performed for the variables of ore type, thickness and nickel grades. For the ore type indicator simulation, the gslib program blocksis was used, whereas for the other two variables, the sgsim program was used.

The results from the 100 ore type simulations were used to condition the thickness and nickel grade simulations, so that the thickness and nickel grade were only simulated in cases where the presence of ore was predicted by the ore type indicator simulation. The simulations were carried out using grid of dimensions 5 × 5 × 1 m, and were divided into five files due to space limitations.

Figure 2 shows the parameter files for the blocksis and sgsim programs.

In order to guarantee the adherence of these simulations to the original data, it was necessary to validate the variograms, histograms and averages. Figure 3 shows the validation of the variograms for 100 simulations of the nickel grade, thickness and ore type variables.

The results of the simulation for the nickel grade and thickness over a grid of 5 × 5 × 1 m were reblocked to a scale of 350 × 350 × 1 m, representing the mass over three months of production. For this, we used the program modelrescale from GSLib, and at this scale, the uncertainties in the nickel grade and thickness variables were assessed using the rule of 15% of error with 90% confidence.

To obtain the results in terms of the metal content, the results for the nickel and thickness were multiplied directly, since the area and density used in this study were treated as constant and the intention was to assess only the error in the metal content and not its absolute value.

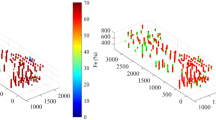

Figure 4 shows the errors for the metal content versus the number of drillholes within each panel for three months of production. The red horizontal line shows an error of 15% with 90% confidence, the blue cross represents the error in each panel for three months versus the number of drill holes, and the red squares show the average error for the panels for the same numbers of holes. The solid black line represents a logarithmic adjustment function.

It can be concluded that the current drilling distribution in the project is not sufficient to determine the optimal drilling grid, since the number of drillholes and the error shown by the blocks for three months of production are not sufficient to reach the ideal value of 15% error with 90% confidence (the red line in Fig. 4).

Hence, a virtual drilling grid was created with additional drillholes in order to test the methodology and to define an appropriate grid.

5.3 Geostatistical Simulation with a Virtual Drilling Grid Database

The virtual drilling grid used for this test was 25 × 25 m. To create this grid, the entire process described above was applied, although only one simulation involving the ore type, nickel and thickness was performed.

5.4 Geostatistical Simulation of 100 Realisations of Thickness, Nickel and Ore Type

Using the results derived for the ore type, thickness and nickel simulations in the previous step, a new database was created for these variables over a grid of 25 × 25 m (a simulated virtual database). This database was then used in a new simulation based on a 5 × 5 m grid with 100 realisations.

As in all stages of this study, it was necessary to validate the simulations with the data for the 25 × 25 m, which is referred to in the following as the original grid. Figure 5 shows the validation of the histograms for the nickel grade and the thickness. Note the small range of variation between the simulation histograms. Figure 6 shows the validation of the averages and proportions, where the average for each realisation is calculated and compared with the average from the database.

Figure 7 shows the validation of the variograms. The 100 realisations variogram ergodic fluctuations for the three variables represent the data in the original 25 × 25 m grid along the main directions.

The grid scale of 5 × 5 × 1 m was reblocked to 350 × 350 × 1 m, using the modelrescale program from gslib.

6 Results and Discussion

Figure 8 shows the panels for three months of production with their respective errors, where the red blocks indicate an error of less than 15% at a confidence level of 90%.

Since panels with less than 50% chance of being ore may be defined as waste, only panels with a probability of greater than 50% of being ore were considered in this study. A graph of the relationship between the error and ore percentage is shown in Fig. 9.

Note that the error is reduced as the proportion of ore in the panel increases. This gives rise to the possibility of optimising the drilling grid in the regions with a higher probability of ore.

7 Conclusions

The applicability of the proposed methodology was evaluated, and the results indicate that the 25 × 25 m grid meets the requirements of an error of less than 15% with 90% confidence in the panels selected as ore.

An important aspect of the methodology proposed in this study is that it does not depend on the existence of a geological model, since the existence or otherwise of a mineralised zone is defined using SIS. This technique can also therefore be recommended for applications involving mineral deposits where there is no detailed knowledge, i.e. during the intermediate stages of exploration.

References

Matheron, G.: Principles of geostatistics. Econ. Geol. 58, 1246–1266 (1963)

David, M.: Geostatistical Ore Reserve Estimation. Developments in Geomathematics, vol. 2, 364p. Elsevier Scientific Publishing Company, Amsterdam (1977)

Isaaks, E.H., Srivastava, M.R.: An Introduction to Applied Geostatistics, 561p. Oxford University Press, New York (1989)

Goovaerts, P.: Geostatistics for Natural Resources Evaluation, 483p. Oxford University Press, New York (1997)

Journel, A.G.: Geostatistics for conditional simulation of ore bodies. Econ. Geol. 69(5), 673–687 (1974)

Pilger, G.G.: Aumento da Eficiência dos Métodos Sequenciais de Simulação Condicional, 229p. Universidade Federal do Rio Grande do Sul, Tese de Doutorado (2005)

Isaaks, E.H.: The application of monte Carlo methods to the analysis of spatially correlated data, 213p. Ph.D. thesis, Stanford University, USA (1990)

Rivoirard, J.: Introduction to Disjunctive Kriging and Nonlinear Geostatistics, 89p. Centre de Géostatistique, Ecole des Mines de Paris (1990)

Alabert, F.G.: Stochastic imaging of spatial distributions using hard and soft information. M.Sc. thesis, Stanford University, California (1987)

Journel, A.G.: The indicator approach to estimation of spatial distributions. In: Proceedings of the 17th APCOM (International Symposium on the Application of Computers and Mathematics in the Mineral Industry), SME-AIME, Golden, Colorado, EUA, pp. 793–806 (1982)

Pilger, G.G.: Critérios para Locação Amostral Baseados em Simulação Estocástica. Dissertação de Mestrado, 127p. Programa de Pós-Graduação em Engenharia de Minas, Metalúrgica e de Materiais (PPGEM). Universidade Federal do Rio Grande do Sul (2000)

Pilger, G.G., Costa, J.F.C.L., Koppe, J.C.: Optimizing the value of a sample. In: Application of Computers and Operations Research in the Mineral Industry, Phoenix, Proceedings of the 30th International Symposium, vol. 1, pp. 85–94. Society for Mining, Metallurgy and Exploration, Inc. (SME), Littleton (2002)

Koppe, V.C.: Metodologia para Comparar a Eficiência de Alternativas para Disposição de Amostras, 236p. Tese de Doutorado. Programa de Pós-Graduação em Engenharia de Minas, Metalúrgica e de Materiais (PPGEM). Universidade Federal do Rio Grande do Sul (2009)

Acknowledgements

The authors would like to thank UFRGS and Anglo American for technical and financial support.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this paper

Cite this paper

Neves, C.M.S., Costa, J.F.C.L., Souza, L., Guimaraes, F., Dias, G. (2023). Methodology for Defining the Optimal Drilling Grid in a Laterite Nickel Deposit Based on a Conditional Simulation. In: Avalos Sotomayor, S.A., Ortiz, J.M., Srivastava, R.M. (eds) Geostatistics Toronto 2021. GEOSTATS 2021. Springer Proceedings in Earth and Environmental Sciences. Springer, Cham. https://doi.org/10.1007/978-3-031-19845-8_13

Download citation

DOI: https://doi.org/10.1007/978-3-031-19845-8_13

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19844-1

Online ISBN: 978-3-031-19845-8

eBook Packages: Earth and Environmental ScienceEarth and Environmental Science (R0)