Abstract

If a technology lacks social acceptance, it cannot spread through society. This chapter therefore examines the ethical, legal, and social implications of robotic assistance in care and daily life. It outlines a conceptual framework and identifies patterns of trust in human–robot interaction. The analysis relates trust in robotic assistance and its anticipated use to a self-image of open-mindedness toward technical innovation, and it reports evidence that this self-image affects the acceptance of robotic assistance through the well-being that people today anticipate when they imagine scenarios of future human–robot interaction. All findings come from the population survey of the Bremen AI Delphi study.

Keywords

- Artificial intelligence

- AI

- Robots

- Robotic assistance

- Trust

- Trustworthiness

- Social acceptance

- Ethics

- Human–robot interaction

- Well-being

- Care

- Everyday life

1.1 Introduction

That artificial intelligence and robots will change life is widely expected. International competition alone will ensure continuing investment in this key technology: no country will be able to maintain its economic competitiveness if it does not invest in the research and development of such a technology. However, this premise becomes problematic if AI applications do not meet with the necessary acceptance in a country’s society, including acceptance by social interest groups and, thus, by the population. It is hard to imagine the populations of democratically constituted, liberal societies making extensive use of technologies that they do not want to use.

This raises the question of AI’s social and ethical acceptance. How should the development of this technology advance to gain and secure this acceptance? The key lies in the perceived trustworthiness of the technology and, consequently, the reasons that lead people and interest groups to attest to this property of AI and its applications. For instance, as the Royal Society (2017) puts it, using the example of machine learning: “Continued public confidence in the systems that deploy machine learning will be central to its ongoing success, and therefore to realizing the benefits that it promises across sectors and applications” (p. 84).

Trustworthiness

The trustworthiness of AI depends upon its consistency with appropriate normative (political and ethical) beliefs and the interests underlying them. Ethical guidelines, such as those developed for the European Commission, represent this approach to trustworthiness very well (European Commission Independent High-Level Expert Group on Artificial Intelligence, 2019). For instance, AI systems should support human autonomy and decision-making, be technically robust and take a preventive approach to risks, protect privacy, and be transparent. They should also ensure diversity, non-discrimination, fairness, and accountability. These guidelines fed into the “ecosystem of trust,” a regulatory framework for AI laid down in the European Commission’s White Paper on Artificial Intelligence, which identifies “lack of trust” as “a main factor holding back a broader uptake of AI” (European Commission, 2020, p. 9). Consequently, the Commission calls for a “human-centric” approach to the development and use of AI technologies and states that “the protection of EU values and fundamental rights such as non-discrimination, privacy and data protection, and the sustainable and efficient use of resources are among the key principles that guide the European approach” (European Commission, 2021, p. 31).

In a broader sense, such an approach to trustworthiness applies to all interest groups in politics, the economy, and society that express normative beliefs in line with their interests. However, the relevant views are not only those of interest groups but also those of a country’s population, whose normative beliefs likewise determine whether a technology like AI appears trustworthy. Ideas of fairness, justice, and transparency matter no less to the public than to interest groups. Here, the issue is less the technology itself than the interests behind its applications and their integrity. An important use case, described in more detail below, is the (pre)selection of job seekers in the labor market.

However, perceived trustworthiness is driven not only by normative beliefs but also by attitudes, expectations, psychological needs, and the hopes and fears that people associate with AI and robots, in a situation where they lack personal experience with a technology that is still very much in development. In such a situation, much depends on whether people are willing to trust a technology with which they have had no first-hand experience.

Trust

The ability to develop trust is one of the most important human skills. Self-confidence, that is, trust in one’s own abilities, is certainly a key factor. Trust also plays a paramount role in people’s lives in many other respects, for example, from a sociological point of view, as trust in fellow humans, social institutions, and technology. Social systems cannot function without trust, which is functional precisely because it helps people live and survive in a world whose complexity always requires more information and skills than any single person can have. I need not be able to build a car to drive it, but I must trust that the engineers designed it correctly. Not everyone is a scientist, but in principle, everyone can develop trust in the expertise of those who have the necessary scientific skills. In everyday life, verifying whether claims correspond to reality is often difficult. The only option, then, is to ask yourself whether you want to believe what you hear and whether criteria exist that justify confidence in the source’s credibility. In short, life in the highly complex modern world does not work without trust. This applies even more to future technologies such as AI and robots.

Malle and Ullman (2021, p. 4) cite dictionary entries that define “trust” as “firm belief in the reliability, truth, or ability of someone or something”; as “confident expectation of something”; as the “firm belief or confidence in the honesty, integrity, reliability, justice, etc. of another person or thing.” In line with these, the authors relate their own concept of trust to persons and agents, “including robots,” and postulate that trust’s underlying expectation can apply to multiple different properties that the other agent might have. They also postulate that these properties make up four major dimensions of trust: “One can trust someone who is reliable, capable, ethical, and sincere” (Malle & Ullman, 2021, p. 4).

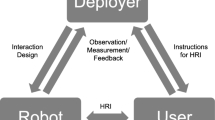

The acceptance of AI and robots requires trust and additional ingredients, a selection of which this chapter highlights. The selection includes the perceived utility and reliability of AI and robots, as well as their closeness to human life. We look at a wide array of application areas, as well as at robotic assistance in people’s everyday lives and care. We ask about the acceptance of each, pay special attention to the role that respondents assign to communication in human–robot interaction, and, using latent variable analysis, relate this acceptance (i.e., the anticipated willingness to use) to patterns of trust in robotic assistance and autonomous AI. As we detail below, this analysis reveals a pattern of trust that builds on the perceived capability, safety, and ethical adequacy of AI and robots.

Well-Being in Human–Robot Interaction

Trust in AI and robots is one key factor; well-being is a second. Both prove decisive wherever AI and robots enter immediate, everyday human life. People have communication needs that they expect their social interactions to meet. People exchange ideas, take part in different types of conversations, express thoughts and feelings, develop empathy, and expect respect and fairness (occasionally also affection and touch); in interpersonal encounters, they also react with gestures and facial expressions to content, to interaction partners, and to the course of the encounter. Interpersonal interaction can be a very complex structure comprising basic and higher needs, mutual expectations, and verbal and nonverbal stimuli and responses. Yet social interaction is not only complex. People generally want to feel comfortable in their encounters with other people and to find recognition and fairness, and sometimes even more, for example, security. Exceptions prove the rule, but for many people, the search for appreciation and social recognition is recognizable as a basic need. At least in general, people tend to seek out pleasant situations and avoid unpleasant ones as much as possible. On the one hand, this describes a situation of interaction between people that can serve as a benchmark for the overwhelmingly difficult task of developing robots that may at least partially substitute for people in such interactions: if people generally expect pleasant interpersonal interactions, they will expect the same when interacting with robots. On the other hand, this situation is highly relevant for attempts to gain the population’s acceptance of interactions with robots. Because such interactions lie in the future, people can evaluate these scenarios of human–robot interaction only through the emotionally tinted ideas that the scenarios trigger in them today.

Since no one can have acquired experience with scenarios that do not yet exist, definitions of trust that relate to human–robot interaction address exactly this uncertainty, as Law and Scheutz (2021, p. 29) put it:

For example, if persons who have never worked with or programmed a robot before coming in contact with one, they will likely experience a high level of uncertainty about how the interaction will unfold. (…) Therefore, people choosing to work with robots despite these uncertainties display a certain level of trust in the robot. If trust is present, people may be willing to alter their own behavior based on advice or information provided by the robot. For robots who work directly and closely with people, this can be an important aspect of a trusting relationship

The Individual’s Self-image

In the present context, we assume that acceptance depends on trust and well-being, and that these factors, in turn, depend on a person’s self-image. In particular, we assume that people who see themselves as open to technical innovation are likely to develop this trust and anticipated well-being, whereas we expect the opposite from people who rely less on technical innovation and more on the tried and tested. Above all, people who always want to be among the first to try out technical innovations (early adopters) are likely to be open-minded toward AI and interaction with robots, at least substantially more often than others.

We also look at people who, regarding questions of life, orient themselves toward science rather than religion. This concept comes from the sociology of religion and refers to a deeper orientation than a merely superficial interest in science (Wohlrab-Sahr & Kaden, 2013). We take it up in the context of AI because the very concept of artificial intelligence invites comparison with a person’s natural intelligence, if only to understand what artificial intelligence could mean. Without knowledge of its technical fundamentals, such as machine learning, AI can take on a wide variety of meanings, including imaginary content with religious connotations. Accordingly, we assume that a religiously shaped self-image can go hand in hand with comparatively greater reserve toward AI.

Chapter Overview

This chapter presents findings from the population survey of the Bremen AI Delphi study. The focus is on trust in robotic assistance and willingness to use it, as well as on the expected personal well-being in human–robot interaction. Chapter 2 extends the analysis to Germany and the EU, using recent data from Eurostat, the European Social Survey, and the Eurobarometer survey. There, we ask whether AI could lead to discrimination and whether the state should act as a regulatory agency in this regard. While that chapter confines itself to statistical analysis, Chap. 5 discusses the legal challenges of AI in detail. Chapter 2 also investigates the worst-case scenario of cutthroat competition for jobs, using expert ratings from the Delphi. Chapter 3 describes the methodological basis of the study and explains the choice of statistical techniques in this chapter. Two further interfaces merit particular mention. Chapter 4 examines what one can learn from research on robots designed for harsh environments, while Chap. 6 addresses the “communication challenge” of human–robot interaction. In addition, Chap. 7 addresses elderly care and the ethical challenges of using assistive robotics in that field.

1.2 Acceptance

1.2.1 Potential for Acceptance Meets Skepticism

In Germany, there is a high potential for AI acceptance, as an analysis of data from three Eurobarometer studies shows (European Commission, 2012; European Commission & European Parliament, 2014, 2017). These studies asked about the image that people have of robots and AI. In Germany, the proportion of those who “all in all” had a “very” or “fairly positive” image of robots was 75% in 2012 and 72% in 2014. In 2017, the question was expanded to cover the image of robots and AI, and 64% reported a “very” or “fairly” positive image in this regard.

A similar picture emerges from our survey in Bremen, where a positive view of robots and artificial intelligence also prevails. Seventy-five percent of respondents report a “fairly positive” or “very positive” image of robots and artificial intelligence, and the same proportion (75%) consider it “quite probable” or “quite certain” that robots and artificial intelligence are “necessary because they can do work that is too heavy or too dangerous for humans.” (Footnote 1) In addition, 61% consider robots and AI to be “good for society because they help people do their work or do their everyday tasks at home.” As described below, the majority even see the expected consequences of AI for the labor market and their own workplace as positive rather than negative. This is in line with the result of an analysis of the comparative perception of 14 risks, which we report in more detail elsewhere (Engel & Dahlhaus, 2022, pp. 353–354). There, we asked respondents to select from a list the five potential risks that worry them most and to rank them. Notably, respondents hardly regard “digitization/artificial intelligence” as such a risk (12th place out of 14); only the specific risk of “abuse/trade of personal data on the Internet” received a top placement in this ranking (fourth place, after “climate change,” “political extremism/assaults,” and “intolerance/hate on the Internet”).

At the same time, however, only 33% consider it “quite probable” or “quite certain” that robots and artificial intelligence are “technologies that are safe for humans.” Only 28% view them as “reliable (error-free) technologies,” and only 24% as “trustworthy technologies.” Other indicators show this very clearly as well, especially when trust and acceptance are asked about for specific areas (see below). Thus, a high potential for acceptance meets considerable skepticism, which leaves correspondingly wide scope for realizing this potential.

1.2.2 The Closer to Humans, the Greater the Skepticism toward Robots

In which areas should robots have a role primarily, and in which areas should robots (if possible) have no role? Table 1.1 shows the list that we gave the respondents to answer these two separately asked questions. To rule out question-order effects (the so-called primacy and recency effects), we re-randomized the area sequence for each interview. The ranking asked for places 1 to 5.

When asked about first place, 28% named industry, 16% search and rescue services, 16% space exploration, 10% manufacturing, and 10% marine/deep-sea research. Four of these five areas also shape the preference for second place. There, 26% named marine/deep-sea research, 15% space exploration, 15% industry, 13% health care, and 10% manufacturing. Industry, space exploration, and deep-sea research also dominate the remaining places, followed by manufacturing and health care.

The preferences at the other pole are also noteworthy. When asked where robots should not be in use at all, four areas dominate: caring for people, private everyday life, education, and leisure.

For a more compact picture, we calculated the probability that an area is part of the respective TOP 5 preference set and plotted the two resulting distributions against each other (Table 1.2 and Fig. 1.1). While industry, space exploration, and marine/deep-sea research are clearly the favorite areas, respondents favor keeping three areas free of robots: care of people, people’s private everyday lives, and education. These areas polarize responses the most (Fig. 1.1), but two further clusters of areas also polarize responses, if less dramatically: search and rescue services, health care, manufacturing, and transport/logistics, on the one hand, and the military, leisure, and service sectors, on the other.
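
Beyond the description above, the chapter does not spell out how the TOP 5 probabilities were computed. A minimal sketch, assuming each respondent’s answer is stored as an ordered list of up to five areas and that the probability is simply the (optionally survey-weighted) share of respondents who place an area anywhere in their TOP 5, might look as follows. The function name `top5_shares`, the weighting option, and the toy answers are hypothetical illustrations, not the study’s data or code.

```python
from collections import Counter


def top5_shares(rankings, weights=None):
    """Share of respondents whose TOP 5 list contains each area.

    rankings: one list of area names per respondent, ordered from place 1 down.
    weights: optional survey weights (a hypothetical option); defaults to 1 each.
    """
    if weights is None:
        weights = [1.0] * len(rankings)
    total = sum(weights)
    counts = Counter()
    for ranking, weight in zip(rankings, weights):
        # set() guards against counting an area twice for the same respondent
        for area in set(ranking[:5]):
            counts[area] += weight
    return {area: count / total for area, count in counts.items()}


# Hypothetical toy answers to the two separately asked questions.
should = [
    ["industry", "space exploration", "marine/deep-sea research"],
    ["industry", "search and rescue services", "health care"],
    ["space exploration", "industry", "manufacturing"],
    ["marine/deep-sea research", "industry", "space exploration"],
]
should_not = [
    ["caring for people", "education", "private everyday life"],
    ["private everyday life", "caring for people", "leisure"],
    ["caring for people", "military", "education"],
    ["education", "private everyday life", "caring for people"],
]

p_should = top5_shares(should)
p_should_not = top5_shares(should_not)
for area in sorted(set(p_should) | set(p_should_not)):
    # Each area becomes one point: (share in "should" TOP 5, share in "should not" TOP 5)
    print(f"{area:28s} {p_should.get(area, 0.0):.2f} {p_should_not.get(area, 0.0):.2f}")
```

Each area then yields one pair of shares; areas with a high share on one list and a low share on the other are the ones that polarize responses.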

For a subset of the areas, an interesting comparison is possible with data for Germany collected some years ago as part of a Eurobarometer study (European Commission, 2012). Figure 1.2 shows the result of this analysis. Even if the percentages in Figs. 1.1 and 1.2 are not directly comparable (because of different calculation bases and partly different question wording), the rough patterns can be compared and reveal remarkable stability over time. As today, the use of robots in space exploration, search and rescue services, and manufacturing already met with comparatively high acceptance in 2012; the lack of acceptance in care, education, and leisure appears similarly stable. Two changes stand out: the use of robots in the military is viewed more negatively today, whereas their use in health care is viewed more positively.

1.2.3 Respondents Find It Particularly Difficult to Imagine Conversations with Robots

We see two challenges here, both arising from the premise that assistance robots for the home or for care will find acceptance in the long term only if they can interact with people in a way that people perceive as pleasant communication. A human–machine interaction that involves frequent, repeated encounters but does not satisfy human communication needs is hard to imagine. This holds especially insofar as the human inclination toward anthropomorphism assigns assistance robots the role of digital companions in daily interaction (Bovenschulte, 2019; Bartneck et al., 2020). Programming assistance robots with the appropriate communicative skills is the first major challenge; the second is that people still find it extremely difficult even to imagine communicating with a robot. This applies to daily life in general, as Fig. 1.3 and the next paragraph outline, and specifically to robotic assistance in care.

Figure 1.3 displays box plots of the interpolated quartiles (see the appendix, Table 1.7, for the underlying survey-weighted distributions). The introductory question of this block asked whether the respondent could imagine conversational situations in which a robot specializing in conversation would later keep him or her company at home. In Fig. 1.3, this item appears in the middle of the chart. Its median of 2.3 indicates a central response slightly above “probably not,” with the middle 50% of responses ranging between 1.5 (a lower bound exactly halfway between 1 = “not at all” and 2 = “probably not”) and 3.2 (an upper bound slightly above 3 = “possibly,” the point of maximum uncertainty). Thus, respondents consider it unlikely that a robot will keep them company at home in the future. They are even less able to imagine specific kinds of conversations, for example, trivial everyday conversations, conversations when they feel lonely, or conversations when they need advice on life issues. Respondents nearly completely rule out convivial family discussions in which a robot participates. The same applies to imagining the use of robots that look and move like a pet (Table 1.8). Only conversations in old age, when one is no longer mobile, were not strictly ruled out, though here, too, the median remains slightly below 3 = “possibly,” and the range of the middle 50% of responses includes 2 = “probably not” while excluding 4 = “quite probable.” This is certainly due to the “human factor” in interpersonal communication: humans are humans, and robots are machines, no matter how excellent their robotic skills are. Convincing people that robots will later be able to communicate with people in the same way that humans communicate with each other today will probably be very difficult.
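
For readers unfamiliar with interpolated quartiles of rating scales, the following sketch shows one common convention for grouped data, which we assume here: each answer category k is treated as the interval from k - 0.5 to k + 0.5, and the quantile is located by linear interpolation within the category that contains it. Under this convention, a lower quartile of exactly 1.5 means that exactly 25% of the (weighted) responses fall into category 1, which matches the reading of 1.5 given above. Whether the study used precisely this formula is an assumption, and the distribution below is hypothetical, not taken from Table 1.7.

```python
def interpolated_quantile(freqs, p):
    """Interpolated quantile of a grouped rating scale with categories 1..K.

    freqs: survey-weighted frequencies or percentages for categories 1..K.
    Category k is treated as the interval [k - 0.5, k + 0.5]; the quantile is
    found by linear interpolation within the category that contains it.
    """
    total = sum(freqs)
    target = p * total
    cumulative = 0.0
    for k, f in enumerate(freqs, start=1):
        if f > 0 and cumulative + f >= target:
            # lower class boundary + share of the category needed to reach target
            return (k - 0.5) + (target - cumulative) / f
        cumulative += f
    return len(freqs) + 0.5  # guard against rounding at the upper end


# Hypothetical distribution over 1 = "not at all" ... 5 = "quite certain" (in %).
dist = [25, 25, 25, 15, 10]
q1, q2, q3 = (interpolated_quantile(dist, p) for p in (0.25, 0.5, 0.75))
print(q1, q2, q3)  # 1.5 2.5 3.5
```

Interpolation turns the five discrete answer codes into a continuous scale, which is why medians such as 2.3 or 3.2 can fall between the labeled answer options.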

1.2.4 Respondents Can Imagine Help with Household Chores and Care More Easily than Talks with Robots

Can the respondents imagine getting help with household chores? The interview question was: “Research is working on developing robots that will later help people with household chores. We think of examples of this kind: setting and clearing the table, loading and unloading the dishwasher, taking crockery out of cupboards and stowing them back in, fetching and taking away items. For the moment, please imagine that such household robots are already available today: And regardless of financial aspects: Could you imagine receiving help in this way at home?” In Fig. 1.3, the second box plot from the right graphs the pertinent data from Table 1.7: a mean value (median) of 3.2 (slightly above “possibly”) and a range from 2.2 to 4.3 that excludes “probably not” and includes “quite probable.” Therefore, respondents more easily imagined getting help around the house this way than having conversations with robots.

1.2.5 Robotic Assistance in Care Is as Imaginable as Robotic Assistance with Household Chores

About the same level of acceptance characterizes robotic assistance in care. The survey asked respondents whether they would consent to the involvement of an assistant robot in the care of a close relative and in their own care. Two box plots in Fig. 1.3 graph the pertinent data from Table 1.7 in the appendix. The medians of the two distributions lie slightly above “possibly”; for the respondent’s own care, the middle 50% of responses clearly excludes “probably not” and includes “quite probable.” Expressed in percentages, about a third of respondents (32.4%) consider it “quite probable” or “quite certain” that they would agree to the involvement of an assistant robot in the care of a close relative. This proportion increases to 39.1% for the respondent’s own care (Table 1.3, rows labeled “all”).

Twenty-seven percent of the respondents indicated that care is a sensitive issue for them. When asked whether the questions about care “may have been perceived as too personal,” 73% answered “not at all,” 19% “a little bit,” 7% “fairly personal,” and 1% “a lot too personal.” Table 1.3 collapses the last three groups into a “sensitive” group and shows how strongly this group agrees with the involvement of a robot in care. In this group, only 22.8% consider it “quite probable” or “quite certain” that they would agree to the involvement of an assistant robot in the care of a close relative, and only 29.3% would agree to its involvement in their own care (Table 1.3, rows labeled “sensitive”). Approval is thus significantly lower when the topic of care is not only of abstract importance: if it is also personally relevant, approval drops by almost 10 percentage points.
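
The arithmetic behind the 27% “sensitive” group and the drop of “almost 10 percentage points” can be checked directly from the percentages reported above. The short sketch below does only that: it works with the published aggregates, not the survey microdata, so it cannot reproduce the significance test. The variable names are illustrative.

```python
# Percentages as reported in the text.
perceived_too_personal = {
    "not at all": 73,
    "a little bit": 19,
    "fairly personal": 7,
    "a lot too personal": 1,
}

# Collapse the last three answer categories into one "sensitive" group.
sensitive_share = sum(
    share for answer, share in perceived_too_personal.items() if answer != "not at all"
)

# Share answering "quite probable" or "quite certain": all respondents vs. sensitive group.
approval = {
    "close relative": {"all": 32.4, "sensitive": 22.8},
    "own care": {"all": 39.1, "sensitive": 29.3},
}

# Differences in percentage points (not percent), rounded to one decimal place.
drop_pp = {
    case: round(values["all"] - values["sensitive"], 1)
    for case, values in approval.items()
}

print(sensitive_share)  # 27
print(drop_pp)  # {'close relative': 9.6, 'own care': 9.8}
```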

Irrespective of these results, a little more than half of the respondents expect assistance robots to be involved in care in the future. In the interview, we introduced the block of questions about care as follows: “The need for care is already a major issue in society, especially for people in need of care and their families themselves. The situation is made even more difficult by a lack of trained specialists. In research, this situation has triggered the development of assistance robots for care. This raises an extremely sensitive question: What would your expectation be: Will it happen within the next ten years that people and robots in care facilities will share the tasks of looking after people in need of care?” Table 1.4 shows that 51.9% expect this.

However, such a development would be far from unanimously approved. Only about a third of the survey participants (35%) would rate it positively. We asked how it would be rated “if robots were used to care for people in need of care”: “very good,” “good,” “not so good,” or “not at all good.” Nine percent answered “very good,” 26% “good,” 37% “not so good,” and 23% “not at all good” (6% did not know).

1.3 Trust in Robotic Assistance and Autonomous AI

Acceptance presupposes trust, and this trust is only available to a limited extent. Figure 1.4 shows this for seven indicators. These concern the use cases “selection of job seekers” (S), “legal advice” (L), “algorithms” (A), and “autonomous driving” (C). Again, the results appear as box plots. We refer to Fig. 1.4 and these indicators in the next sections.

1.3.1 Trust in the Integrity of Applicant Selection

To be perceived as trustworthy, AI as a technology must appear reliable (error-free) and safe for humans. But this is not just about the technology itself. Possible hidden interests on the part of those developing AI or commissioning its development also play a decisive role, so trustworthiness also concerns the interests behind the technology. From a normative (ethical or political) point of view, this is clear, for example, in the recommendations for trustworthy AI developed for the EU Commission. However, to gain acceptance, AI must also comply with ethical standards from the population’s perspective, as the example of applicant selection in the labor market clearly shows.

We asked the respondents four related questions, starting with: “Please imagine, in large companies, the preselection of applications for vacancies would be carried out automatically by intelligent software. Would you trust that such a preselection would only be based on the applicant’s qualifications?” In Fig. 1.4, the second box from the left, labeled “S: qualified,” describes the responses to this survey question, again in terms of the median and the upper/lower bounds of the interquartile range (also reported in Engel & Dahlhaus, 2022, p. 359, Table 20.A3). This box corresponds to a median of 2.6, with the middle 50% of responses ranging between 1.5 and 3.6. Accordingly, the central response tendency lies between “probably not” and “possibly,” while the middle 50% of the answers include “probably not” and exclude “quite probable.”

We relate this trust to the respondents’ preferred selection mode and observe the expected close correlation. “Imagine again, in large companies, the preselection under applications for vacancies would be made automatically by intelligent software. What would you personally prefer: automated or human-made preselection?” The percentages in Table 1.9 show very clearly that the more respondents trust that only qualifications count, the more often they vote for automated preselection of job applicants and the less often they vote for preselection by people.

A related finding concerning the two remaining survey questions of this block is also noteworthy. They explore the belief that automated preselection protects applicants from unfair selection. The first was: “Imagine again, in large companies, the preselection under applications for vacancies would be made automatically by intelligent software. Would you trust that such a preselection would effectively protect applicants from unfair selection or discrimination?” In Fig. 1.4, this question is labeled “S: Fair” (the left-most box plot). With a median of 2.4 and lower/upper bounds of 1.6 and 3.5 for the middle 50% of responses, respondents regard such protection as about as unlikely as a selection based only on the applicant’s qualifications. Although far fewer respondents prefer automated to human preselection of applicants (21% vs. 61.9%; “no matter”: 11.4%; “don’t know”: 5.7%), they consider it possible that automated preselection guards more effectively against discrimination than human preselection. The follow-up question was worded as follows: “Imagine again, in large companies, the preselection under applications for vacancies would be made automatically by intelligent software. Would you trust that such a preselection would protect applicants more effectively from unfair selection and discrimination than a human preselection?” In Fig. 1.4, this question is labeled “S: fairer” (the second box plot from the right). Here, we obtain a median of 3.1 (slightly above “possibly”) and lower/upper bounds of 2.2 and 4.0 for the middle 50% of responses, a range that excludes “probably not” and includes “quite probable.”

1.3.2 Legal Advice

AI will likely transform not only simple routine activities but also highly skilled academic professions. Legal advice is just one example. We wanted to know how much people trust legal advice when it is delivered by a robot: “Please imagine that you need legal advice and that you contact a law firm on the Internet. There a robot takes over the initial consultation. Would you trust that it can advise you competently?” In Fig. 1.4, this item is labeled “L: competent.” The pertaining quartiles are Q1 = 1.9, Q2 = 2.8, and Q3 = 3.7. They indicate a mean response slightly below “possibly” and a middle range of responses that includes “probably not” but excludes “quite probable.”

1.3.3 Algorithms

With respect to algorithms, too, uncertainty and skepticism prevail. Comparison portals are widely used, but do people trust them? We asked: “Please imagine that you are looking for a comparison portal on the Internet to buy a product or service there. Would you trust that the algorithm would show you the best comparison options in each case?” In Fig. 1.4, the item is labeled “A: best options.” Here, the main response tendency is “uncertainty” in a double sense: a median slightly below “possibly,” with the middle 50% of responses excluding both “probably not” and “quite probable” (Q1 = 2.2; Q2 = 2.9; Q3 = 3.5).

1.3.4 Self-Driving Cars

The development of autonomous driving is already very advanced, and self-driving cars will very likely soon be a normal part of the city streetscape. Accidents involving such cars during practical tests typically receive substantial media attention around the world. That may explain why people remain surprisingly skeptical about this technology. The first of two survey questions read: “It is expected that self-driving cars will take part in road traffic in the future. Will you be able to trust that the technology is reliable?” In Fig. 1.4, this item is labeled “C: reliable.” Here, too, we observe a median response below “possibly” and lower/upper bounds of the middle 50% of responses that include “probably not” but exclude “quite probable” (Q1 = 1.8; Q2 = 2.7; Q3 = 3.7). Respondents do place more trust in the ethical programming involved, however, insofar as it concerns putting safety first. We asked: “Will you be able to trust that self-driving cars will be programmed to put the safety of road users first?” In Fig. 1.4, this question is labeled “C: safetyfirst.” Here, the median response lies between “possibly” and “quite probable,” with the middle 50% of responses excluding “probably not” and including “quite probable” (Q1 = 2.4; Q2 = 3.5; Q3 = 4.2).

1.3.5 Patterns of Trust and Anticipated Use of Robotic Assistance

Do the indicator variables of trust in robotic assistance and its anticipated use constitute one single basic orientation toward AI and robots that proves invariant across use cases, functions, and contexts? Or should we assume two more or less correlated basic orientations: on the one hand, trust, and on the other, acceptance? Or do people judge this technology in a more differentiated, context-dependent manner, according to the functions and tasks to be fulfilled?

Latent correlations between the factors described in Table 1.5

The confirmatory factor analysis (CFA) detailed in the appendix was carried out to answer these questions. It shows that the assumption of a more differentiated structure achieves the best fit of model and data (Table 1.10). Figure 1.5 reports the correlations among the seven factors of trust and anticipated use of robotic assistance that correspond to this latter model. The seven factors involved in this correlation matrix rest on 19 indicator variables, most of which this chapter introduced earlier. The appendix details how these variables constitute the factors in Table 1.11, along with a documentation of question wording and factor loadings.

Respondents who can imagine involving robot assistants in their own care or the care of close relatives can also imagine communicating at home with robots that specialize in this. These ideas are closely related; their relationship shows the highest correlation (r = 0.66). Conversely, this suggests that without a willingness to communicate with robot assistants, there is little willingness to involve robots in one’s care. Notably, talk and drive also correlate very strongly (r = 0.64). Pointedly overstated, anyone who can imagine communicating with a robot at home also has confidence in the technology of self-driving cars, and vice versa. This close relationship between the belief that autonomous driving is reliable and safe for humans and the anticipated readiness to communicate with robots at home might indicate not only the particularly important role of communication in both AI use fields but also the expectation that assistant robots at home should be as competent as autonomously acting AI.

A third correlation greater than 0.5 concerns advise and decide (r = 0.57), that is, confidence in competent robotic advice in an important field (e.g., legal advice) and the readiness to get robotic advice in decision-making. At the same time, decide correlates least with choose (r = 0.17), that is, with the belief that automated preselection would protect job applicants from unfair selection and discrimination. This weak relationship is interesting because it concerns technology capabilities on the one hand and, on the other, the interests behind specific technology applications. Stated otherwise, highly capable technologies can also be used in pursuit of interests that people evaluate quite differently in normative (political and ethical) terms. The perceived performance of a technology is one thing; the perceived integrity of its application is another. Here, the two represent largely independent dimensions of assessment that require separate consideration.
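One way to make “largely independent” concrete is the standard reading of a squared correlation as the proportion of variance two variables share. The Python sketch below applies that reading to the latent correlations quoted above; it is an illustrative calculation, not part of the chapter's analysis, and the dictionary layout is an assumption.

```python
# Latent factor correlations quoted in the text (Fig. 1.5).
CORRELATIONS = {
    ("care", "talk"): 0.66,
    ("talk", "drive"): 0.64,
    ("advise", "decide"): 0.57,
    ("decide", "choose"): 0.17,
}


def shared_variance(r: float) -> float:
    """Proportion of variance two variables share, given their correlation r."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("a correlation must lie in [-1, 1]")
    return r * r


if __name__ == "__main__":
    for (a, b), r in CORRELATIONS.items():
        print(f"{a}-{b}: r = {r:.2f}, shared variance = {shared_variance(r):.1%}")
```

Care and talk share roughly 44% of their variance, whereas decide and choose share under 3%, which is why the two assessment dimensions can be treated separately.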

1.4 Accepting Robotic Assistance and Talking with Robots

In addition to the latent factors and their indicator variables, the present confirmatory factor analysis includes one observed variable: whether respondents can imagine getting help with household chores. This observed variable is regressed on the two latent factors talk and care. While talk’s estimate of effect proves statistically significant:

care’s estimate of effect only approaches two-tailed significance:

If this were a linear regression, b would indicate the expected change in the target y for a unit change in x1 (while holding x2 constant). However, each of the model’s observed variables is measured on the ordinal scale used throughout this chapter (1 = not at all, …, 5 = quite certain); thus, probit regressions estimate all relationships between latent factors and observed variables. The estimates of effect then indicate how individuals’ values on talk and care affect the probability of y falling into specified regions of the target scale.
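For reference, a textbook ordered probit with five response categories can be written as follows. The chapter does not print its exact parameterization, so the thresholds τ_j and the assignment of x_1 to talk and x_2 to care are assumptions; estimation software may also use a different sign convention or scaling for the thresholds.

```latex
\begin{aligned}
y^{*} &= b_1 x_1 + b_2 x_2 + \varepsilon, \qquad \varepsilon \sim \mathcal{N}(0,1),\\
y &= j \iff \tau_{j-1} < y^{*} \le \tau_j, \qquad j = 1,\dots,5,\quad \tau_0 = -\infty,\ \tau_5 = +\infty,\\
P(y \le j \mid x_1, x_2) &= \Phi(\tau_j - b_1 x_1 - b_2 x_2),\\
P(y = j \mid x_1, x_2) &= \Phi(\tau_j - b_1 x_1 - b_2 x_2) - \Phi(\tau_{j-1} - b_1 x_1 - b_2 x_2).
\end{aligned}
```

Because Φ, the standard normal distribution function, is nonlinear, a positive b_1 shifts probability mass from lower to higher response categories rather than moving y by a constant amount.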

Figure 1.6 illustrates this for one of the two latent factors, talk. In this figure, the outer (dashed) pair of vertical lines indicates the observed minimum and maximum values [−1.8; 1.8] on the latent talk scale, while the inner (dotted) pair of vertical lines [−0.6; 0.5] indicates the first and third quartiles of this scale of factor scores.

Viewed from left to right, the graphs show the curvilinear course of the probabilities that the answers given on the ordinal y scale are

- less than or equal to 1 (“not at all”),

- in the range of 1 < y ≤ 2 (greater than “not at all,” including “probably not”),

- in the range of 2 < y ≤ 3 (greater than “probably not,” including “possibly”),

- in the range of 3 < y ≤ 4 (greater than “possibly,” including “quite probable”),

- greater than 4 (greater than “quite probable”).

With increasing talk values (i.e., with a stronger belief that one would accept conversations with specially trained robots and their company at home), the probability curves behave as expected: they fall for “not at all” and consistently rise for “quite probable.” The probabilities between these extremes also develop consistently. In this regard, the graphs in Fig. 1.6 show how the turning point from increasing to decreasing probability shifts from left to right, depending on whether the probability is considered for smaller or larger values of observed y.
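The pattern described above follows from the ordered probit form. The Python sketch below computes the five category probabilities across the observed talk range [−1.8; 1.8], assuming a single latent predictor and made-up values for the slope and the four thresholds (the chapter does not report them here). All function and constant names are hypothetical.

```python
import math


def norm_cdf(z: float) -> float:
    """Standard normal CDF via the error function (handles +/- infinity)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def category_probabilities(eta: float, b: float, thresholds: list[float]) -> list[float]:
    """P(y = j) for j = 1..K under an ordered probit with linear predictor b * eta.

    thresholds holds the K - 1 strictly increasing cut points tau_1 < ... < tau_{K-1}.
    """
    if any(t1 >= t2 for t1, t2 in zip(thresholds, thresholds[1:])):
        raise ValueError("thresholds must be strictly increasing")
    cuts = [-math.inf, *thresholds, math.inf]
    xb = b * eta
    return [norm_cdf(cuts[j + 1] - xb) - norm_cdf(cuts[j] - xb) for j in range(len(cuts) - 1)]


def peak_locations(b: float, thresholds: list[float], grid: list[float]) -> list[float]:
    """Grid value at which each category's probability is largest."""
    curves = [category_probabilities(eta, b, thresholds) for eta in grid]
    peaks = []
    for j in range(len(thresholds) + 1):
        best = max(range(len(grid)), key=lambda i: curves[i][j])
        peaks.append(grid[best])
    return peaks


# Hypothetical parameters, chosen only for illustration.
B_TALK = 1.5
THRESHOLDS = [-1.0, -0.3, 0.4, 1.2]
# Observed range of the talk factor scores reported in the text, in steps of 0.01.
GRID = [round(-1.8 + 0.01 * i, 2) for i in range(361)]

if __name__ == "__main__":
    print(peak_locations(B_TALK, THRESHOLDS, GRID))
```

With a positive slope b, the probability of the lowest category falls and that of the highest rises monotonically, while each middle category j peaks where b·η = (τ_{j−1} + τ_j)/2. Because the thresholds increase, these peaks move from left to right as j grows.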

1.5 Technical Innovation, Religion, and Human Values and the Tried and Tested as Elements of the Individual Self-Image

AI and robots represent future technologies. It is therefore reasonable to assume that people who are open-minded toward technical innovations are more likely to accept them than people who tend to rely on the tried and tested. In addition, we assume greater acceptance of robotic assistance among people who, regarding life issues, orient themselves more toward science than religion.

The confirmatory factor analysis reported in Table 1.12 is used to compute factor scores on these three dimensions of self-image for each respondent. As expected, people tend either to be open to technical innovations or to rely on the tried and tested (factor correlation = −0.43). Personal proximity to, or distance from, the market and fashion of technical achievements also plays a role in this contrast.

Conversely, the orientation toward science versus religion in life, as a third dimension, contributes only a partial contrast to the overall picture. On the one hand, this orientation proves to be independent of the openness to technical innovations (0.01, n.s.); on the other hand, it correlates negatively with the orientation toward human values and the tried and tested (−0.39). Accordingly, regardless of their openness to technical innovations, people who orient themselves more toward science than religion in life issues tend to rely less on human values and the tried and tested.

Table 1.6 shows how these dimensions of the individual self-image correlate with the dimensions of trust in AI and robots and their anticipated use. Specifically, it reports the correlations between the respective scales of factor scores, which reveal a clear pattern. Except for the statistically insignificant relation to choose, openness to technical innovation is consistently associated with positive correlations, while the orientation toward human values and the tried and tested is consistently associated with negative correlations (again except for the statistically insignificant relations to choose and, here, also to safe). Therefore, it makes a difference whether someone is open to technical innovations and wants to be among the first to try them out or, on the contrary, relies more on human values and the tried and tested and less on the acquisition of technical achievements.

In terms of statistically significant correlations, the third dimension of self-image plays a smaller role. Those who orient themselves more toward science than religion in life issues place more trust in a robot’s ability to give competent legal advice and would also be more inclined to accept an assistant robot’s participation in their care. Such an orientation also makes it more likely that people imagine feeling comfortable in anticipated situations of human–robot interaction.

1.6 Feeling at Ease with Imagined Situations of Human–Robot Interaction

Whether AI applications will be accepted in the future depends crucially on the feelings they trigger in people today. Because these applications do not yet exist in people’s everyday lives, people lack personal experience from which they could form attitudes toward AI and robots. Instead, judgments today depend on people imagining what they may face in this regard in the future. We therefore asked the respondents how uncomfortable or comfortable they would feel in eight fictitious situations in which humans interact with robots. As a confirmatory factor analysis detailed by Engel and Dahlhaus (2022, p. 360, Table 20.A4) shows, these assessments constitute a single factor. Figure 1.7 plots this feel-good factor against open-mindedness toward technological innovation. The scattergram also distinguishes the respondents’ willingness to get robotic help with household chores, described earlier in this chapter, and reveals two major relationships: first, the stronger this open-mindedness, the higher the feel-good scores; and second, higher willingness scores cluster in the upper-right region of the scatterplot and lower willingness scores in its lower-left region. This reflects strong positive correlations among all three variables and confirms an equivalent result for another target variable, the willingness to seek AI-driven decision support (Note 2).

We regard the AI feel-good factor as a mechanism by which open-mindedness toward technical innovation leads to anticipated AI use. Formally, it is an intervening variable. A simple test can show whether open-mindedness about technological innovation results, via this anticipated comfort with imagined situations of human–robot interaction, in a willingness to accept such robotic assistance at home. Regarding the effect of open-mindedness (x) on accepting this assistance (y), a probit regression yields a statistically significant estimate of the effect:

If the feel-good factor fully mediates this relationship, the direct effect should drop to zero once the factor is included in the model as an intervening variable. This is exactly what happens here: if we extend the model by this factor, the direct effect drops to zero

while at the same time we observe two statistically significant estimates of effect: first, a (linear regression) effect of open-mindedness (x) on feel-good (z)

and second, a (probit regression) effect of feel-good (z) on acceptance (y),

yielding an explained variance of R² = 0.31.
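The logic of this test can be illustrated with simulated data. The Python sketch below is a simplified stand-in, not the chapter's model: it uses ordinary least squares instead of the probit and factor-analytic estimators reported above, made-up path values (a = 0.6 for x on z, b = 0.7 for z on y), and hypothetical helper names. In the simulated data, x affects y only through z, so the total effect of x is about a·b, while the direct effect of x shrinks to about zero once z enters the model.

```python
import random


def slope(x: list[float], y: list[float]) -> float:
    """OLS slope of y on a single predictor x (intercept included)."""
    n = len(y)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (c - my) for a, c in zip(x, y))
    return sxy / sxx


def slopes_two(x1: list[float], x2: list[float], y: list[float]) -> tuple[float, float]:
    """OLS slopes of y on x1 and x2 (intercept included), via the 2x2 normal equations."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


def simulate(n: int = 20_000, a: float = 0.6, b: float = 0.7, seed: int = 1):
    """Hypothetical data in which z fully mediates the effect of x on y."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    z = [a * xi + rng.gauss(0.0, 1.0) for xi in x]  # path a: x -> z
    y = [b * zi + rng.gauss(0.0, 1.0) for zi in z]  # path b: z -> y; no direct x -> y path
    return x, z, y


x, z, y = simulate()
total = slope(x, y)                  # total effect of x on y, close to a * b
a_hat = slope(x, z)                  # estimated path a
direct, b_hat = slopes_two(x, z, y)  # direct effect of x (close to 0) and estimated path b

if __name__ == "__main__":
    print(f"total={total:.3f} direct={direct:.3f} indirect={a_hat * b_hat:.3f}")
```

Linear regression was chosen for the sketch because, with OLS, the total effect decomposes exactly into direct plus indirect effect (c = c′ + a·b). In probit models this identity does not hold exactly, because the scale of the latent outcome changes when predictors are added, so comparing coefficients across nested probit models requires more care.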

1.7 Trustworthiness and Well-Being in the Context of Robotic Assistance

Germans largely have a positive image of artificial intelligence and robots, but they trust this technology to a significantly lower extent. This involves trust in both the technology and the integrity of its applications. The closer AI gets to humans, the more the population questions it. We observe great acceptance of AI in space exploration and deep-sea research and, at the same time, substantial reservations about its use in people’s daily lives. This represents a great challenge for the development of systems of robotic assistance for everyday life and the care of people. However, because large parts of the population have a positive image of AI, there is reasonable potential to convince people, always on well-founded grounds, of the trustworthiness of this technology. Following the patterns of trust described above, such persuasion campaigns could target specific elements of trust, such as trust in the capability, safety, and ethical adequacy of AI and robotic assistance.

In any case, the further development of AI applications should take people’s ideas, needs, hopes, and fears into account. From the analysis above, for example, we learn that the population is critical of communicating with robots in the domestic context. But we also learn that the readiness to let robots assist in one’s care is closely tied to this imagined willingness to talk with robots. At the same time, as Chap. 6 shows, respondents assign the ability to talk with someone in need of care only a very subordinate role in the qualification profile of a care robot.

This requires much persuasion in other respects as well. People judge scenarios of future human–robot interactions based on the emotionally charged ideas that such scenarios trigger in them today. Without first-hand experience, one can only imagine what such a situation would be like. The point is that these beliefs affect the anticipated willingness to use robotic assistance, however well-founded or unfounded they may be. Therefore, conveying a reliable basis of experience and ensuring maximum transparency in all relevant respects of the further development of robotic assistance appear very useful.

Notes

1. The figures in this section were presented in a German-language public talk held at the University of Bremen in early 2020. See the video at https://ml.zmml.uni-bremen.de/video/5e6a5179d42f1c7b078b4569

2. Available at https://github.com/viewsandinsights/AI

References

Bartneck, C., Belpaeme, T., Eyssel, F., Kanda, T., Keijsers, M., & Sabanovic, S. (2020). Human-robot interaction: An introduction. Cambridge University Press.

Bovenschulte, M. (2019). Digitale Lebensgefährten – der Anthropomorphismus sozialer Beziehungen [Digital companions - the anthropomorphism of social relationships]. Büro für Technikfolgenabschätzung beim Deutschen Bundestag (TAB). Themenkurzprofil Nr. 31. Retrieved December 28, 2021, from https://doi.org/10.5445/IR/1000133933

Engel, U., & Dahlhaus, L. (2022). Data quality and privacy concerns in digital trace data. In U. Engel, A. Quan-Haase, S. Liu, & L. Lyberg (Eds.), Handbook of computational social science, Vol. 1 - Theory, case studies and ethics (pp. 343–362). Routledge. https://doi.org/10.4324/9781003024583-23

European Commission. (2012, February–March). Brussels: Eurobarometer 77.1. TNS OPINION & SOCIAL, Brussels [Producer]. GESIS, Cologne [Publisher]: ZA5597, dataset version 3.0.0, 2014. Retrieved from https://doi.org/10.4232/1.12014

European Commission. (2020). White paper on artificial intelligence – A European approach to excellence and trust. Retrieved December 28, 2021, from https://ec.europa.eu/info/sites/default/files/commission-white-paper-artificial-intelligence-feb2020_en.pdf

European Commission. (2021). Annexes to the Communication from the Commission to the European Parliament, the European Council, the Council, the European Economic and Social Committee and the Committee of the Regions. Fostering a European approach to Artificial Intelligence. Retrieved December 28, 2021, from https://op.europa.eu/en/publication-detail/-/publication/01ff45fa-a375-11eb-9585-01aa75ed71a1/language-en

European Commission & European Parliament. (2014, November–December). Brussels: Eurobarometer 82.4. TNS opinion, Brussels [Producer]. GESIS, Cologne [Publisher]: ZA5933, dataset version 6.0.0, 2018. Retrieved from https://doi.org/10.4232/1.13044

European Commission & European Parliament. (2017, March). Brussels: Eurobarometer 87.1. TNS opinion, Brussels [Producer]. GESIS, Cologne [Publisher]: ZA6861, dataset version 1.2.0. Retrieved from https://doi.org/10.4232/1.12922

European Commission Independent High-Level Expert Group on Artificial Intelligence. (2019). Ethics guidelines for trustworthy AI. Retrieved December 28, 2021, from https://ec.europa.eu/futurium/en/ai-alliance-consultation.1.html

Law, T., & Scheutz, M. (2021). Trust: Recent concepts and evaluations in human-robot interaction. In C. S. Nam & J. B. Lyons (Eds.), Trust in human-robot interaction (pp. 27–57). Academic Press. https://doi.org/10.1016/B978-0-12-819472-0.00002-2

Malle, B. F., & Ullman, D. (2021). A multidimensional conception and measure of human-robot trust. In C. S. Nam & J. B. Lyons (Eds.), Trust in human-robot interaction (pp. 3–25). Academic Press. https://doi.org/10.1016/B978-0-12-819472-0.00001-0

The Royal Society. (2017). Machine learning: The power and promise of computers that learn by example. Retrieved December 28, 2021, from https://royalsociety.org/~/media/policy/projects/machine-learning/publications/machine-learning-report.pdf

Wohlrab-Sahr, M., & Kaden, T. (2013). Struktur und Identität des Nicht-Religiösen: Relationen und soziale Normierungen [Structure and identity of the non-religious: Relations and societal norms]. Kölner Zeitschrift für Soziologie und Sozialpsychologie, 65, 183–209. https://doi.org/10.1007/s11577-013-0223-8


Appendix


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Beetz, M. et al. (2023). Trustworthiness and Well-Being: The Ethical, Legal, and Social Challenge of Robotic Assistance. In: Engel, U. (eds) Robots in Care and Everyday Life. SpringerBriefs in Sociology. Springer, Cham. https://doi.org/10.1007/978-3-031-11447-2_1


DOI: https://doi.org/10.1007/978-3-031-11447-2_1


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-11446-5

Online ISBN: 978-3-031-11447-2

eBook Packages: Social Sciences, Social Sciences (R0)