Abstract

Artificial Intelligence (AI) has huge potential to bring accuracy, efficiency, cost savings and speed to a whole range of human activities and to provide entirely new insights into behaviour and cognition. However, the way AI is developed and deployed largely determines how AI will impact our lives and societies. For instance, automated classification systems can deliver prejudiced results and therefore raise questions about privacy and bias, and the autonomy of intelligent systems, such as self-driving vehicles, raises concerns about safety and responsibility. AI’s impact concerns not only the research and development directions for AI, but also how these systems are introduced into society and used in everyday situations. There is an extensive debate concerning how the use of AI will influence labour, well-being, social interactions, health care, income distribution and other social areas. Dealing with these issues requires that ethical, legal, societal and economic implications are taken into account. In this paper, I will discuss how a responsible approach to the development and use of AI can be achieved, and how current approaches to ensure the ethical alignment of decisions made or supported by AI systems can benefit from the social perspective embedded in non-Western philosophies, in particular the Ubuntu philosophy.

Introduction

Nowadays, Artificial Intelligence (AI) is almost ubiquitous. We can hardly open a newspaper or tune in to a news show without encountering some story about AI. But AI means different things to different people.

As AI increasingly impacts many aspects of life, the awareness that it has the potential to impact our lives and our world as no other technology has done before is rightfully raising many questions concerning its ethical, legal, societal and economic effects. However, whereas the dangers and risks of applying AI without due consideration of its societal, ethical or legal impact are increasingly acknowledged, the potential of AI to contribute to human and societal well-being cannot be dismissed. A comprehensive analysis of the role of AI in achieving the Sustainable Development Goals, in which I participated, concluded that AI has the potential to shape the delivery of all 17 goals, contributing positively to 134 targets across all the goals, but that it may also inhibit 59 targets (Vinuesa et al. 2020).

Ensuring the responsible development and use of AI is becoming a main direction in AI research and practice. Governments, corporations and international organisations alike are coming forward with proposals and declarations of their commitment to an accountable, responsible and transparent approach to AI, in which human values and ethical principles lead. This is a much-needed development, one to which I have dedicated my efforts and research in the last few years.

Currently, there are over 600 AI-related policy recommendations, guidelines or strategy reports, released by prominent intergovernmental organisations, professional bodies, national-level committees and other public organisations, non-governmental organisations and private for-profit companies (Footnote 1). A recent study of the global landscape of AI ethics guidelines shows a global convergence around five ethical principles: Transparency, Justice and Fairness, Non-Maleficence, Responsibility and Privacy (Jobin et al. 2019). Nevertheless, even though organisations agree on the need to consider these principles, how they are interpreted and applied in practice varies significantly across the different recommendation documents.

At the same time, the growing hype around ‘AI’ is blurring its definition and lumping together concepts and applications of many different sorts. A much-needed first step in the responsible development and use of AI is to ensure a proper AI narrative, one that demystifies the possibilities and the processes of AI technologies, and that enables all to participate in the discussion on the role of AI in society. Understanding the capabilities and addressing the risks of AI requires a clear understanding of what it is, how it is applied and what opportunities and risks are involved.

The paper is organised as follows. After a brief discussion of the different perspectives on what constitutes an AI system, I present, in section “Ensuring the Responsible Development and Use of AI”, current efforts towards the responsible and trustworthy development and use of AI. In section “From an Individualistic to a Social Conception of AI”, I describe the need to extend current AI research from an individualistic to a social conception of AI.

What Is AI and Why Should We Care?

Technological developments have brought forward many potential benefits, but at the same time the risks and problems posed by AI-driven applications are increasingly being reported. The many guidelines for AI governance and regulation risk becoming void without an understanding of what AI is and what it can, and cannot, do. Current AI narratives bring forward benefits and risks and describe AI in many different ways, from the obvious next step in digitisation to some kind of magic: magic in the sense that it can know all about us, and use that knowledge to decide about us or for us in possibly unexpected ways, either solving all our problems or destroying the world in the process. The reality is, as usual, somewhere in the middle. In the following, I briefly describe some of the ways AI is often misunderstood and conclude with a reflection on their significance for the current efforts towards AI governance.

Currently, AI is mostly associated with Machine Learning (ML). Machine Learning, in particular Neural Networks or Deep Learning, is a subset of AI techniques that uses statistical methods to enable computers to perceive some characteristics of their environment. Current techniques are particularly effective at perceiving images and written or spoken text, and at processing structured data in its many applications. By analysing many thousands of examples (often millions), the system is able to identify commonalities in these examples, which then enable it to interpret data that it has never seen before, a process often referred to as prediction.
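To make the idea of learning from examples and then predicting on unseen data concrete, the following is a minimal sketch in Python. It uses a nearest-centroid classifier, a deliberately simple technique chosen for illustration rather than the neural networks mentioned above, and the toy feature values and labels are invented for the example. The ‘model’ is nothing more than the average of the examples for each label; a new input is assigned whichever label’s average it lies closest to.

```python
from statistics import mean


def train_centroids(examples):
    """Learn one centroid (the average feature vector) per label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    # zip(*rows) groups the i-th feature of every example so each column can be averaged
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Assign an unseen example to the label whose centroid is closest."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: squared_distance(centroids[label], features))


# Hypothetical toy data: (weight in kg, ear length in cm) -> label
training = [
    ((4.0, 7.0), "cat"), ((5.0, 6.5), "cat"), ((3.5, 7.5), "cat"),
    ((30.0, 12.0), "dog"), ((25.0, 11.0), "dog"), ((35.0, 13.0), "dog"),
]
model = train_centroids(training)
print(predict(model, (4.5, 7.2)))    # cat
print(predict(model, (28.0, 10.0)))  # dog
```

Nothing in the learned model encodes what a cat or a dog is: it only stores averaged numbers, and its predictions are only as good as the examples it was given.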

AI Is Not Intelligent

John McCarthy, who originally coined the term Artificial Intelligence, defined it as “the study and design of intelligent agents”. In this definition, which is still one of the most common definitions of AI, the concept of intelligence refers to the ability of computers to perform tasks commonly associated with intelligent beings, i.e. humans or non-human animals. The question remains of what human (or animal) intelligence is. Although intelligence is commonly associated with the ability of the mind to reach correct conclusions about what is true and what is false, and about how to solve problems (Colman 2015), there is no single accepted definition of it. Moreover, intelligence is a multifaceted concept. Psychologists debate issues such as types of intelligence, the role of nature versus nurture in intelligence, how intelligence is represented in the brain, and the meaning of group differences in intelligence. Major theories include Sternberg’s triarchic theory (Sternberg 1984), Gardner’s theory of multiple intelligences (Gardner 2011) and Piaget’s theory of development (Piaget 1964). Many characterise human intelligence as more than an analytical process, encompassing creative, practical and other abilities. These abilities, largely associated with socio-cultural background and context, are far from being replicable by AI systems, even if these may approach analytical intelligence on some (simple) tasks.

AI Is Not Artificial

AI is not magic. It will not solve all our problems, nor can it exist without the use of natural resources and the work of legions of people. In a recent book, ‘The Atlas of AI’ (Crawford 2021), Kate Crawford describes the field as a collection of maps that enable the reader to traverse places, their relations and their impact on AI as an infrastructure. From the mines where the core components of hardware originate, to the warehouses where human labourers are mere servants to the automated structure (an uneasy reminder of Chaplin’s Modern Times), to the hardship of data classification by low-paid workers in data-labelling farms, Crawford exposes the hard reality of the hidden side of AI’s success. The book concludes with the powerful reminder that AI is not an objective, neutral and universal computational technique, but is deeply embedded in the social, political, cultural and economic reality of those who build, use and, mostly, control it (Dignum 2021).

AI Is Not the Algorithm

AI is based on algorithms. The concept of ‘algorithm’ is achieving magical proportions, used left and right to signify many things and de facto seen as a synonym for AI. But, even though AI uses algorithms, as does any other computer program or engineering process, AI is not the algorithm.

The easiest way to understand an algorithm is as a recipe: a set of precise rules to achieve a certain result. Every time you add two numbers you are using an algorithm, just as you are when baking an apple pie. However, by itself, a recipe has never turned into an apple pie; the end result has as much to do with your baking skills and your choice of ingredients as with the choice of a specific recipe. The same applies to AI algorithms: the behaviour and results of the system depend to a large extent on its input data, and on the choices made by those who developed, trained and selected the algorithm. In the same way as we can choose to use organic apples to make our pie, in AI we can choose to use data that respects and ensures fairness, privacy, transparency and all other values we hold dear. This is what Responsible AI is about, and it includes demanding the same of those who develop the systems that affect us.
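The recipe analogy can be illustrated with a small, hypothetical example. In the sketch below, `learn_threshold` is an invented helper (not an existing library function) that picks the score cut-off best reproducing a set of past approve/reject decisions. The recipe is identical in both runs; only the historical data differs, and with it the rule the system ends up applying to the same applicant. The scores and decisions are made-up illustrative values.

```python
def learn_threshold(history):
    """Return the score cut-off that agrees with the most past decisions.

    history: list of (score, approved) pairs. The procedure is fixed;
    the rule it yields depends entirely on the data it is given.
    """
    candidates = sorted({score for score, _ in history})

    def agreement(threshold):
        # Count past cases where "score >= threshold" matches the recorded decision
        return sum((score >= threshold) == approved for score, approved in history)

    # max() returns the first (lowest) candidate among ties
    return max(candidates, key=agreement)


# Two hypothetical histories: same recipe, different ingredients
lenient_history = [(40, False), (50, False), (60, True), (70, True)]
strict_history = [(40, False), (50, False), (60, False), (70, True)]

applicant_score = 65
for name, history in [("lenient", lenient_history), ("strict", strict_history)]:
    threshold = learn_threshold(history)
    print(name, threshold, applicant_score >= threshold)
# lenient 60 True
# strict 70 False
```

If the historical decisions themselves were unfair, the learned rule inherits that unfairness, which is why the choice of data is as consequential as the choice of algorithm.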

Responsible AI

AI is first and foremost a technology that can automate simple, lower-level tasks. At present, AI systems are largely incapable of understanding meaning. An AI system can correctly identify cats in pictures or cancer cells in scan images, but it has no idea of what a cat or a cancer cell is. Moreover, an AI system can only do this if there are enough people performing the tasks (classification, collection, maintenance…) that are needed for the system to function in what only misleadingly appears to be an autonomous manner.

But AI is much more, both in terms of the techniques used and in terms of societal impact and human participation. As such, AI can best be understood as a socio-technical ecosystem. Understanding AI requires recognising the interaction between people and technology, and how complex infrastructures affect and are affected by society and by human behaviour.

As such, AI is not just about the automation of decisions and actions, the adaptability to learn from changes effected in the environment, and the interactivity required to be sensitive to the actions and aims of other agents in that environment and to decide when to cooperate or to compete. It is mostly about the structures of power, participation and access to technology that determine who can influence which decisions or actions are automated, which data, knowledge and resources are used to learn from, and how interactions between those who decide and those who are impacted are defined and maintained.

A responsible, ethical approach to AI will ensure transparency about how adaptation is done, responsibility for the level of automation on which the system is able to reason, and accountability for the results and the principles that guide its interactions with others, most importantly with people. In addition, and above all, a responsible approach to AI makes clear that AI systems are artefacts manufactured by people for some purpose, and that those who make them have the power to decide on the use of AI. It is time to discuss how power structures determine AI and how AI establishes and maintains power structures, and to discuss the balance between those who benefit from and those who are harmed by the use of AI (Crawford 2021).

Ensuring the Responsible Development and Use of AI

Ethical AI is not, as some may claim, a way to give machines some kind of ‘responsibility’ for their actions and decisions and, in the process, to discharge people and organisations of their responsibility. On the contrary, ethical AI gives the people and organisations involved more responsibility and more accountability: for the decisions and actions of the AI applications, and for their own decision to use AI in a given application context. When considering effects and their governance, the technology, or the artefact that embeds that technology, cannot be separated from the socio-technical ecosystem of which it is a component. Guidelines, principles and strategies to ensure trust and responsibility in AI must be directed towards the socio-technical ecosystem in which AI is developed and used. It is not the AI artefact or application that needs to be ethical, trustworthy or responsible. Rather, it is the social component of this ecosystem that can and should take responsibility and act in consideration of an ethical framework, such that the overall system can be trusted by society. Having said this, governance can be achieved by several means, softer or harder. Several directions are currently being explored; the main ones are highlighted in the remainder of this section. Future research and experience will identify which approaches are most suitable, but given the complexity of the problem, a combination of approaches will very likely be needed.

Regulation

AI regulation is a hot topic, with many proponents and opponents. The recent proposal by the European Commission envisions a risk-based approach to regulation that ensures that people can trust that AI technology is used in a way that is safe and compliant with the law, including respect for fundamental human rights.

The proposal translates most of the seven requirements of the Ethics Guidelines for Trustworthy AI into specific requirements for ‘high-risk’ AI. However, it does not deal explicitly with issues of inclusion, non-discrimination and fairness. Minimising or eliminating discriminatory bias or unfair outcomes requires more than excluding the use of low-quality data. The design of any artefact, such as an AI system, is in itself an accumulation of choices, and choices are biased by nature, as they involve selecting one option over another. Technical solutions at dataset level must be complemented by socio-technical processes that help avoid any discriminatory or unfair outcomes of AI.

Moreover, successful regulation demands clear choices about what is being regulated: is it the technology itself, or the impact or results of its application? By focusing on technologies or methods, i.e. by regulating systems that are based on “machine learning, logic, or statistical approaches”, as described in the AI definition used in the European Commission’s proposal, we run the risk of seeing organisations evade the regulation simply by classifying their applications differently. Conversely, there is a plethora of applications based on, e.g., statistics that are not AI, yet could fall under such a definition.

A future-proof regulation should focus on the outcomes of systems, whether or not these systems fall within the current understanding of what is ‘AI’. If someone is wrongly identified, is denied human rights or access to resources, or is conditioned to believe or act in a certain way, it does not matter whether the system is ‘AI’ or not. It is simply wrong. Moreover, regulation must also address the inputs, processes and conditions under which AI is developed and used, which are at least as important. Much has been said about the dangers of biased data and discriminating applications, and attention to the societal, environmental and climate costs of AI systems is increasing. All these must be included in any effort to ensure the responsible development and use of AI.

At the same time, AI systems are computer applications, i.e. artefacts, and as such are subject to existing constraints and legislation, including due diligence obligations and liabilities. That is, even now, AI does not operate in a lawless space. Before defining extra regulations, we need to start by understanding what is already covered by existing legislation.

A risk-based approach to regulation, as proposed by the European Commission, is the right direction to take, but it needs to be informed by a clear understanding of the sources of those risks. Moreover, it requires focusing not merely on technical solutions at the level of the algorithms or the datasets, but on developing socio-technical processes, and corporate responsibility, to ensure that any discriminatory or unfair outcomes are avoided and mitigated, independently of whether we call the system ‘AI’ or not.

Standardisation

Standards are consensus-based, agreed-upon ways of doing things, setting out what is considered to be the minimum universally acknowledged specifications. Industry standards have proven to be beneficial to organisations and individuals. Standards can help reduce costs and improve the efficiency of organisations by providing consistency and quality metrics, a common vocabulary, good-design methodologies and architectural frameworks. At the same time, standards provide consumers with confidence in the quality and safety of products and services.

Most standards are considered soft governance, i.e. they are not mandatory. Yet it is often in the best interest of companies to follow them to demonstrate due diligence and, therefore, limit their legal liability in case of an incident. Moreover, standards can ensure user-friendly integration between products (Theodorou and Dignum 2020).

Work on AI standards to support the governance of AI development and use is ongoing at ISO and IEEE, the two leading standards bodies. Such standards can support AI policy goals, in particular where they concern the safety, security and robustness of AI, guarantees of explainability, and means to reduce bias in algorithmic decisions (Cihon 2019).

Jointly with IEC, ISO has established a subcommittee on Artificial Intelligence (ISO/IEC JTC 1/SC 42). Ongoing SC 42 efforts are, so far, limited and preliminary (Cihon 2019). On the other hand, the IEEE Standards Association’s global initiative on Ethically Aligned Design is actively working on a vision and recommendations that address the values and intentions, as well as the legal and technical implementations, of autonomous and intelligent systems, in order to prioritise human well-being (IEEE 2016). This is the joint work of over 700 international researchers and practitioners. In particular, the P7000 series (Footnote 2) aims to develop standards that will eventually underpin and scaffold future norms and standards within a new framework of ethical governance for AI/AS design. Currently, the P7000 working groups are developing candidate standard recommendations to address issues as diverse as system design, transparency in autonomous systems, algorithmic bias, personal, children’s, student and employer data governance, nudging, and the identification and rating of the trustworthiness of news sources. Notably, the work on assessing the impact of autonomous and intelligent systems on human well-being is now available as an IEEE standard (Footnote 3).

Assessment

Responsible AI is more than the ticking of some ethical ‘boxes’ or the development of some add-on features in AI systems. Nevertheless, developers and users can benefit from support and concrete steps to understand the relevant legal and ethical standards and considerations when making decisions on the use of AI applications. Impact assessment tools provide a step-by-step evaluation of the impact of systems, methods or tools on aspects such as privacy, transparency, explanation, bias or liability (Taddeo and Floridi 2018).

It is important to realise, as Taddeo and Floridi (2018) note, that even though these approaches “can never map the entire spectrum of opportunities, risks, and unintended consequences of AI systems, they may identify preferable alternatives, valuable courses of action, likely risks, and mitigating strategies. This has a dual advantage. As an opportunity strategy, foresight methodologies can help leverage ethical solutions. As a form of risk management, they can help prevent or mitigate costly mistakes, by avoiding decisions or actions that are ethically unacceptable”.

Currently, much effort is being put into the development of assessment tools (Footnote 4). The EU Ethics Guidelines for Trustworthy AI are accompanied by a comprehensive assessment framework, which was developed based on a public consultation process.

Finally, it is important to realise that any requirements for trustworthy AI are necessary but not sufficient to develop human-centred AI. Such requirements need to be understood and implemented from a contextual perspective: requirements such as transparency should not have one fixed definition for all AI systems, but should be defined, and their implementation adjusted, based on the context in which the AI system is used. At the same time, any AI technique used in the design and implementation should be amenable to explicit consideration of all ethical requirements. For example, it should be possible to explain (or to show) how the system arrived at a certain decision or behaviour.

Assessment tools need to be able to account for this contextualisation, as well as to ensure alignment with existing frameworks and requirements for other types of assessment, such that evaluating the trust and responsibility of AI systems provides added value to those developing and using them, rather than adding yet another bureaucratic burden.

Codes of Conduct and Advisory Boards

A professional code of conduct is a public statement developed for and by a professional group to reflect shared principles about practice, conduct and ethics of those exercising the profession; describe the quality of behaviour that reflects the expectations of the profession and the community; provide a clear statement to the society about these expectations, and enable professionals to reflect on their own ethical decisions.

A code of conduct supports professionals in assessing and resolving difficult professional and ethical dilemmas. While ethical dilemmas have no single correct solution, professionals can give account of their actions by referring to the code. As in other socially sensitive professions, such as medicine or law, a code of conduct, together with the attendant certification of ‘ethical AI’, can support trust. Several organisations are working on the development of codes of conduct for data and AI-related professions, with specific ethical duties. Recently, ACM, the Association for Computing Machinery, the largest international association of computing professionals, updated its code of conduct (Footnote 5). This voluntary code is “a collection of principles and guidelines designed to help computing professionals make ethically responsible decisions in professional practice. It translates broad ethical principles into concrete statements about professional conduct”. This code explicitly addresses issues associated with the development of AI systems, namely emergent properties, discrimination and privacy. Specifically, it calls out the responsibility of technologists to ensure that systems are inclusive and accessible to all, and requires that they be knowledgeable about privacy issues.

At the same time, the role of an AI Ethicist is becoming a hot topic, as large businesses are increasingly dependent on AI and as the impact of these systems on people and society becomes increasingly evident, and not always for the best. Recent scandals, both about the role of AI in bias and discrimination and about the way businesses deal with their own responsibility, specifically the role and treatment of whistle-blowers, have increased the demand for clear and explicit organisational structures to deal with the impact of AI.

Many organisations have since established the role of chief AI ethics officer, or similar. Others, recognising that the societal and ethical issues that arise from AI are complex and multi-dimensional, and therefore require insights and expertise from many different disciplines and an open participation of different stakeholders, have established AI ethics boards or advisory panels.

Awareness and Participation

Inclusion and diversity are a broader societal challenge and central to AI development. It is therefore important that as broad a group of people as possible have a basic knowledge of AI, what can (and can’t) be done with AI, and how AI impacts individual decisions and shapes society. A well-known initiative in this area is Elements of AI (Footnote 6), initiated in Finland with the objective of training one per cent of EU citizens in the basics of artificial intelligence, thereby strengthening digital leadership within the EU.

In parallel, research and development of AI systems must be informed by diversity, in all its meanings, obviously including gender, cultural background and ethnicity. Moreover, AI is no longer solely an engineering discipline, and there is growing evidence that cognitive diversity contributes to better decision-making. Therefore, development teams should include social scientists, philosophers and others, as well as reflect differences in gender, ethnicity and culture. It is equally important to diversify the disciplinary backgrounds and expertise of those working on AI to include AI professionals with knowledge of, amongst others, philosophy, social science, law and economics. Regulation and codes of conduct can specify targets and goals, along with incentives, as a way to foster diversity in AI teams (Dignum 2020).

From an Individualistic to a Social Conception of AI

The dominant approach to AI has so far been an individualistic, rational one. Russell and Norvig’s classic AI textbook defines AI along two dimensions (Russell and Norvig 2010): how it reasons (human-like or rationally; see Footnote 7) and what it ‘does’ (think or act). Human-like approaches aim to understand and model how the human mind works, while rational approaches aim at developing systems that achieve the optimal level of benefit or utility for an individual. Both approaches are well aligned with Descartes’s Western philosophical statement “I think therefore I am”, fundamentally conceptualising an AI system as an individual entity.

Intelligent agents are typically characterised as boundedly rational, acting towards their own perceived interests. For instance, by identifying and applying patterns in (human-generated) data, machine learning systems mimic and extend the human reasoning and actions embedded in that data, whereas symbolic logic approaches (so-called ‘good old-fashioned AI’, or GOFAI) aim to capture the laws of rational thought and action, resulting in an idealised model of human reasoning.

Human-Like or Rational?

Rationality is often a central assumption for agent deliberation (Dignum 2017). Moreover, intelligent systems are expected to hold consistent world views (beliefs) and to optimise actions and decisions based on a set of given preferences (often with accuracy as the highest priority). This view of rationality entails that agents are expected, and designed, to act rationally in the sense that they choose the best means available to achieve a given end, and maintain consistency between what is wanted and what is chosen (Lindenberg 2001).
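As an illustration of the textbook notion of rationality described above, the sketch below assumes an agent with explicit beliefs (a probability for each outcome of each action) and a complete utility function, and selects the action with the highest expected utility. The route-choice scenario, the function names, the probabilities and the utility values are all hypothetical.

```python
def expected_utility(action, beliefs, utility):
    """Weight each outcome's utility by the agent's believed probability of it."""
    return sum(p * utility[outcome] for outcome, p in beliefs[action].items())


def rational_choice(actions, beliefs, utility):
    """Pick the action with the highest expected utility."""
    return max(actions, key=lambda action: expected_utility(action, beliefs, utility))


# Hypothetical scenario: choosing a route to arrive on time
actions = ["highway", "back_roads"]
beliefs = {
    "highway": {"on_time": 0.7, "late": 0.3},
    "back_roads": {"on_time": 0.9, "late": 0.1},
}
utility = {"on_time": 10, "late": -5}

for a in actions:
    print(a, expected_utility(a, beliefs, utility))
print(rational_choice(actions, beliefs, utility))  # back_roads
```

The sketch only works because every alternative, outcome probability and utility is assumed to be known in advance.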

The main advantages of a rationality assumption are its parsimony and applicability to a very broad range of situations and environments, and its ability to generate falsifiable, and sometimes empirically confirmed, hypotheses about actions in these environments. This gives conventional rational choice approaches a combination of generality and predictive power not found in other approaches.

Unfortunately, this type of rational behaviour fits mostly with strategic choices, where all information is available or can be gathered at will. It does not suit most human behaviour, which is based on split-second decisions, habits, social conventions and power structures. When the aim of AI systems is to develop models of societal behaviour, or to develop systems that are able to interact with people in social settings, rationality is not enough to model human behaviour. This is evident in many application areas, where rational behaviour needs to be combined with other types of behaviour in order to be effective. In reality, human behaviour is neither simple nor rational, but derives from a complex mix of mental, physical, emotional and social aspects. Realistic applications must moreover consider situations in which not all alternatives, consequences and event probabilities can be foreseen. It is then impossible to ‘rationally’ optimise utility, as neither the utility function nor the optimisation criteria are completely known. This renders rational choice approaches unable to accurately model and predict a wide range of human behaviours. Already in 2010, Dignum and Dignum (2010) showed how different types of variations and models cater for different applications, while no generic model exists that serves as a foundation for all models.

Both the human-like and the rational perspectives on AI suit a task-oriented view of the purpose of AI systems. That is, the system is expected to optimise the result of its actions for a specific purpose. Even though it is able to perceive its environment and adapt accordingly, it is mostly unaware of its own role in that environment, and of the fact that its actions contribute to change. Given the large impact of AI on society, a new modelling paradigm is needed that is able to account for this feedback loop of decision, action and context. That is, AI modelling needs to follow a social paradigm.
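The feedback loop between decision, action and context can be illustrated with a deliberately naive, hypothetical recommender. At each step it recommends the item that currently has the most clicks, optimising for its task without modelling its own influence; because each recommendation itself generates a click, its actions reshape the very data it will decide on next. The function name, item names, initial counts and the assumption that every recommendation yields exactly one click are invented for illustration.

```python
def simulate_recommender(clicks, rounds):
    """Naive recommender that always promotes the currently most-clicked item.

    Assumes (hypothetically) that every recommendation yields exactly one click,
    so each decision feeds back into the data used for the next decision.
    """
    counts = dict(clicks)  # copy so the caller's data is not mutated
    history = []
    for _ in range(rounds):
        pick = max(counts, key=counts.get)  # decide based on the current context
        counts[pick] += 1                   # acting changes that context
        history.append(pick)
    return counts, history


final_counts, picks = simulate_recommender({"a": 3, "b": 2, "c": 2}, rounds=10)
print(final_counts)  # {'a': 13, 'b': 2, 'c': 2}
print(set(picks))    # {'a'}
```

Starting from a small initial lead, item `a` ends up dominating: the system’s context is increasingly an artefact of its own past decisions.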

Social AI

Non-Western philosophies, and in particular Ubuntu, take a societal rather than an individual stance, which raises the question of how AI would be defined from the perspective of Ubuntu thought. Without attempting to describe or fully understand Ubuntu philosophy, I will in the following apply some of its main tenets to AI concepts and development approaches.

Ubuntu expresses the deeply held African ideals of one’s personhood being rooted in one’s interconnectedness with others, and emphasises norms for inter-personal relationships that contribute to social justice, such as reciprocity, selflessness and symbiosis. Community is at the core of Ubuntu, focusing on interconnectedness and caring for communal living, underpinned by values of cooperation and collaboration (Mugumbate and Nyanguru 2013). Solidarity, which requires people to be aware of and attentive to the needs of those around them rather than focusing only on their own needs, is therefore central in Ubuntu, with an emphasis on caring, caretaking and context (van Breda 2019).

As such, Ubuntu philosophy is essentially relational and defines morally right actions as those that connect rather than separate (i.e. that honour communal relationships, reduce discord or promote friendly relationships). The concept of community can best be understood as an (objective) standard that should guide what the majority wants, or what moral norms become central (Ewuoso and Hall 2019). This does not imply that individual rights are subordinated, but that individuals pursue their own good through pursuing the common good (Lutz 2009).

Human rights frameworks define the foundational value of human dignity in terms of autonomy. This view, largely originating in Kantian philosophy, regards human rights as the ultimate ways of respecting our intrinsically valuable capacity for self-governance. It has therefore been argued that the collectivist grounds of Ubuntu thought are at odds with this individual autonomy view. According to Metz, “While the Kantian theory is the view that persons have a superlative worth because they have the capacity for autonomy, the present, Ubuntu-inspired account is that they do because they have the capacity to relate to others in a communal way” (Metz 2011, p. 544). As Metz also puts it, “Human rights violations are ways of gravely disrespecting people’s capacity for communal relationship, conceived as identity and solidarity [...]” (ibid., p. 545). In Ubuntu, human nature is special and inviolable because of its capacity for harmonious relationships. At the same time, no individual’s rights are greater than another’s; thus every individual in a community, children and adults alike, is important and should be heard and respected (Osei-Hwedie 2007).

With respect to the ethics of AI development and use, this formulation of human dignity as the human capability to relate to others in a communal way can justify the resolution of moral dilemmas in which autonomy conflicts with beneficence or any of the other principles, as has also been proposed for bioethics and medicine, and for grounding the UN’s Sustainable Development Goals (Ewuoso and Hall 2019).

Specifically, the formulation of Ubuntu described above may be used to justify decisions in the face of ethical dilemmas, for example where such a decision favours the action that enhances communal relationships, or the capacity for them (ibid.). As such, this framework could usefully supplement the utilitarian, individualistic and deontological approaches that are often embedded in AI ethics decision-making. As has been proposed for clinical contexts, ethical decision-making in the context of AI systems can also be extended with rules stating that “A breach of an ethical principle is justifiable if, on the balance of probabilities, such a breach is more likely to enhance communal relationships (…)” (Ewuoso and Hall 2019).

Towards a Social Paradigm for AI

As discussed in section “What Is AI and Why Should We Care?”, AI is neither ‘artificial’ nor ‘intelligent’ but the product of choices involving theory and values. Current AI paradigms, as seen in section “Human-Like or Rational?”, rely on, and are bound by, individualistic theories of intelligence, thinking, rationality and human nature. As such, these paradigms support the implementation of different reasoning and action approaches, corresponding to individual understandings of contexts and to the different reactions of agents to contexts. However, AI, like all technology, affects and changes our world, which in turn changes us. New paradigms are needed that address collective understanding, the effect of change in context, and the feedback loop from that change back to individual and collective reasoning and behaviour. Modelling this feedback loop recognises that what matters is not just which action is performed, but also what kind of reasoning leads to that action, and which values and perceptions guide the observation of the context.

Given the transnational character of AI, it is also imperative to address the ways in which AI may impact or be accepted by society in various regions around the world. In particular, it is necessary to position the African continent in global debates and policymaking on Responsible AI. For instance, initiatives such as the Responsible AI Network – Africa (Footnote 8) and the African Observatory on Responsible AI (Footnote 9) aim to deepen the understanding of AI and its effects in (Sub-Saharan) Africa, and to promote the development and implementation of locally appropriate, evidence-led AI policies and enabling legislation.

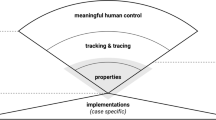

However, it is equally important to extend current conceptualisations of AI with the relational worldview that characterises African thought, as embodied in Ubuntu philosophy. Current AI paradigms strengthen existing power structures and prevent a truly societal understanding of the impact and challenges of AI for humanity and society. Given the impact AI systems can have on people, inter-personal interactions and society as a whole, it is relevant to adopt a relational stance when approaching the specification, development and analysis of AI systems. The deeply-held African vision of one’s personhood being rooted in one’s interconnectedness, as expressed in Ubuntu philosophy, can support integrating such a social perspective into AI, both in terms of how it reasons (human-like or rationally) and what it ‘does’ (think or act). This would result in a new paradigm that considers social/collective reasoning and includes change, or reaction, as a third possible result of AI, next to thinking and acting. Figure 1 depicts this perspective on AI, extending the well-known dimensions defined by Russell and Norvig (2010), shown here in grey shading.

Collective or social reasoning is about modelling societal values and norms, and how these ground and influence human and rational thinking. In social action, outcomes are relative to the actions of others, where others are not just seen as opponents or obstacles in decision-making, but as a positive force for achieving a joint endeavour.

Addressing change from the human-like perspective leads to approaches to AI that aim to enhance, rather than replace, human performance; from a rational perspective, it concerns the development and optimisation of institutional infrastructures that maximise the effects of rational behaviour.

All these perspectives need to be brought together to address the impact of AI from a socially grounded and engaged perspective. This is no easy feat, and there is no single model or simple approach for it. It will require multidisciplinary and multi-stakeholder participation, and the acceptance that any solution is always contingent and contextual.

Conclusions

Increasingly, AI systems will be taking decisions that affect our lives in smaller or larger ways. In all areas of application, AI must be able to take into account societal values and moral and ethical considerations, weigh the respective priorities of values held by different stakeholders and across multicultural contexts, explain its reasoning and guarantee transparency. As the capabilities for autonomous decision-making grow, perhaps the most important issue to consider is the need to rethink responsibility. Being fundamentally tools, AI systems are fully under the control and responsibility of their owners or users. However, their potential autonomy and capability to learn require that design considers accountability, responsibility and transparency principles in an explicit and systematic manner. The development of AI algorithms has so far been led by the goal of improving performance, leading to opaque black boxes. Putting human values at the core of AI systems calls for a mind-shift among researchers and developers towards the goal of improving transparency rather than performance, which will lead to novel and exciting techniques and applications. In particular, this requires complementing the currently predominant individualistic view of AI systems with one that acknowledges and incorporates collective, societal and ethical values at the core of the design, development and use of AI systems.

The view of biological evolution has long since been revised away from a ‘ladder’ model: a unilinear progression from ‘primitive’ to ‘advanced’. The same revision has taken place in anthropology: the idea that cultural evolution follows a ladder model, with small-scale decentralised societies at the bottom and hierarchical state societies at the top, where the top would be technologically more advanced, has been shown to be not only demeaning but also inaccurate (Eglash 1999). These fields have long since moved to a more dynamic, branching model (Footnote 10). It is high time that AI models embrace such a branching view. Only then can AI align with the diversity that truly reflects worldwide differences in cultural and philosophical thought. Like biology and culture, intelligence is not linear; it branches.

Notes

1. See OECD’s AI Observatory https://oecd.ai/.
2.
3.
4. A comprehensive list of existing frameworks is available at https://www.aiethicist.org/frameworks-guidelines-toolkits.
5.
6.
7. Note that this terminology does not imply that human-like behaviour is not rational, but uses ‘rational’ to refer to utility-optimising behaviour.
8.
9.
10.

References

Cihon, P., 2019. Standards for AI governance: International standards to enable global coordination in AI research & development. Future Humanity Inst. Univ. Oxf.

Colman, A.M., 2015. A dictionary of psychology. Oxford quick reference.

Crawford, K., 2021. The Atlas of AI. Yale University Press.

Dignum, V., 2017. Social agents: Bridging simulation and engineering. Commun. ACM 60, 32–34.

Dignum, V., 2020. Responsibility and artificial intelligence. Oxf. Handb. Ethics AI, 215.

Dignum, V., 2021. AI-the people and places that make, use and manage it. Nature 593, 499–500.

Dignum, V., Dignum, F., 2010. Designing agent systems: State of the practice. Int. J. Agent-Oriented Softw. Eng. 4, 224–243.

Durham, W.H., 1982. Interactions of genetic and cultural evolution: Models and examples. Hum. Ecol. 10 (2), 289–323.

Eglash, R., 1999. African fractals: Modern computing and indigenous design.

Ewuoso, C., Hall, S., 2019. Core aspects of Ubuntu: A systematic review. South Afr. J. Bioeth. Law 12, 93–103.

Gardner, H.E., 2011. Frames of mind: The theory of multiple intelligences. Hachette UK.

Gould, S.J., 1997. Redrafting the tree of life. Proc. Am. Philos. Soc. 141 (1), 30–54.

IEEE-EA, 2016. A vision for prioritizing human well-being with artificial intelligence and autonomous systems. IEEE Glob Initiat Ethical Consid. Artif Intell Auton Syst 13.

Jobin, A., Ienca, M., Vayena, E., 2019. The global landscape of AI ethics guidelines. Nat. Mach. Intell. 1, 389–399. https://doi.org/10.1038/s42256-019-0088-2

Lindenberg, S., 2001. Social rationality versus rational egoism, in: Handbook of Sociological Theory. Springer, pp. 635–668.

Lutz, D.W., 2009. African Ubuntu philosophy and global management. J. Bus. Ethics 84, 313–328.

Metz, T., 2011. Ubuntu as a moral theory and human rights in South Africa. Afr. Hum. Rights Law J. 11, 532–559.

Mugumbate, J., Nyanguru, A., 2013. Exploring African philosophy: The value of Ubuntu in social work. Afr. J. Soc. Work 3, 82–100.

Osei-Hwedie, K., 2007. Afro-centrism: The challenge of social development. Soc. Work. Werk 43.

Piaget, J., 1964. Part I: Cognitive development in children: Piaget development and learning. J. Res. Sci. Teach. 2, 176–186.

Russell, S., Norvig, P., 2010. Artificial intelligence: A modern approach, 3rd ed. Prentice Hall.

Sternberg, R.J., 1984. Toward a triarchic theory of human intelligence. Behav. Brain Sci. 7, 269–287.

Taddeo, M., Floridi, L., 2018. How AI can be a force for good. Science 361, 751–752.

Theodorou, A., Dignum, V., 2020. Towards ethical and socio-legal governance in AI. Nat. Mach. Intell. 2, 10–12.

van Breda, A.D., 2019. Developing the notion of Ubuntu as African theory for social work practice. Soc. Work 55, 439–450.

Vinuesa, R., Azizpour, H., Leite, I., Balaam, M., Dignum, V., Domisch, S., Felländer, A., Langhans, S.D., Tegmark, M., Fuso Nerini, F., 2020. The role of artificial intelligence in achieving the Sustainable Development Goals. Nat. Commun. 11, 233. https://doi.org/10.1038/s41467-019-14108-y

Acknowledgements

This work was partially supported by the Wallenberg AI, Autonomous Systems and Software Program (WASP), funded by the Knut and Alice Wallenberg Foundation and by the European Commission’s Horizon 2020 Research and Innovation Programme project HumaneAI-Net (grant 825619).


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Dignum, V. (2023). Responsible Artificial Intelligence: Recommendations and Lessons Learned. In: Eke, D.O., Wakunuma, K., Akintoye, S. (eds) Responsible AI in Africa. Social and Cultural Studies of Robots and AI. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-031-08215-3_9

DOI: https://doi.org/10.1007/978-3-031-08215-3_9

Publisher Name: Palgrave Macmillan, Cham

Print ISBN: 978-3-031-08214-6

Online ISBN: 978-3-031-08215-3

eBook Packages: Social Sciences, Social Sciences (R0)