Abstract

The organisation of a test performance study (TPS) involves different steps that are mostly sequential, although some may be conducted simultaneously. This chapter details the following: the steps regarding the selection of the tests to be validated; the selection of the laboratories to participate in the TPS; the preparation of the materials and the dispatch of the samples; and the completion of the TPS (including the collection and analysis of the TPS results). The reader will find detailed information on how to define and plan the timeline of the TPS, on the appropriate number of samples (including replicates) and of laboratories that should be included in the TPS to ensure an appropriate statistical analysis, and on how to perform basic analyses of the data obtained. In addition, this chapter covers the most important critical points that can endanger successful TPS organisation, so that future TPS organisers in the field of plant health (and in other similar fields) can identify them in advance and carry out a successful TPS.

3.1 The TPS Organisation Process

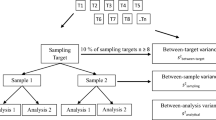

Organising a TPS involves different steps that are inter-connected. The steps are mostly sequential, but some may be conducted simultaneously, such as Selection of the tests for TPS (parts dedicated to preliminary studies) and Selection of the TPS participants (see Fig. 3.1). This chapter details the following: the steps regarding the selection of the tests to be validated (Sect. 3.2); the selection of the laboratories to participate in the TPS (Sect. 3.3); the preparation of the materials and the dispatch of the samples (Sect. 3.5); and the completion of the TPS (including the collection and analysis of the TPS results) (Sect. 3.6). There is the need to plan the appropriate number of samples (including replicates) and of laboratories that should be included in the TPS to ensure an appropriate statistical analysis. Based on the experience from the two rounds of TPS in the VALITEST Project, the expected time for the completion of a TPS is approximately 1 year. An example of a Gantt chart (designed for a TPS organised in the framework of the VALITEST Project) is given in Fig. 3.1. It is worth noting that the TPS presented in the Gantt chart was organised within strict time frames, and certain steps could not be prolonged due to time limitations connected to the duration of the Project. When organising a TPS outside of a specific project, it is worth considering a longer time frame for the organisation. Steps that could require more time than in the Gantt chart example are: selection of test for preliminary studies (in particular when many tests are available for the pest of interest, as was the case for TSWV), and preliminary studies and result analysis.

3.2 Selection of the Tests

The first step of the TPS consists of the selection of the tests to be evaluated in the TPS. The importance of this step depends on the available pest tests and the amount of available validation data for each test, plus expert knowledge on the particular pest. It is often not possible to validate all tests for a selected pest due to limited resources; therefore, criteria need to be defined (depending on the needs at the time), and the tests need to be selected according to the most important of these criteria. Unbiased analysis of the available data on the performance characteristics of the tests will enable informed decisions to be made on the selection of the most relevant tests for the TPS. During the process, the scope of the TPS needs to be kept in mind, while also considering the resources available, including the budget, staff, equipment and materials.

The first step of the process is the definition of the purpose and scope of the TPS. The second step is the definition of the weighted criteria and associated target values, to facilitate the selection of the tests to be included in the TPS. The use of weighted criteria allows an impartial evaluation of the validation data available. Once the criteria have been defined, it is possible to proceed with a comprehensive analysis of the validation data available. When validation data are lacking, tests may be selected for preliminary validation studies, which are conducted in the laboratory of the TPS organiser by authorised personnel supervised by the person responsible for the TPS organisation. After completion of the preliminary studies, the organiser analyses the results and selects the tests to be included in the TPS based on the previously defined criteria.

The result of the test selection process is a list of tests selected to be included in the TPS. These tests have extensive intralaboratory validation data, although an evaluation involving several laboratories has never been performed. Throughout the TPS, all of the procedures are documented, and records are maintained in accordance with the quality assurance system of the laboratory organising the TPS.

3.2.1 Definition of the TPS Scope

To be able to set the strategy and to define the priorities for the test selection, it is necessary to precisely define the scope of the TPS based on the aim of the study. The definition of the scope includes the selection of the methods that will be used, and for each method, the identification of: sample type (e.g., DNA, sample spiked with pest), matrix (e.g., seeds, leaves), purpose (e.g., detection, identification), controls, number of samples and maximum number of participants. The selection of the methods depends on the diagnostic needs for the pest(s). In some cases, tests are needed for fast on-site detection, while in other cases, detection of the pest at low levels is more important. Methods differ in terms of their reliable detection of pests in symptomatic or asymptomatic materials. In the framework of the definition of the TPS scope, the selection of the methods might also depend on the plant material available and the expertise of the TPS organiser.

Defining the scope of the TPS is an important step in TPS organisation, as it affects all the other steps. The scope of the TPS should be clearly defined regarding the aim of the test(s), and its (their) feasibility, as the majority of TPS have limited resources and tight time schedules. Table 3.1 provides an example from VALITEST to illustrate how the scope of the TPS was defined for the detection and identification of tomato spotted wilt orthotospovirus (TSWV) in symptomatic leaves of tomato (Solanum lycopersicum L.), using both serological (ELISA) and molecular (RT-PCR, real-time RT-PCR) methods. In addition, some tests were evaluated for their applicability for on-site use. The other scopes of the TPS organised in the framework of VALITEST were detailed in the deliverable reports of the Project (for details see Alič et al. 2020; Anthoine et al. 2020).

Due to time constraints, it was not possible to use infected plant material from tomato in the VALITEST TSWV TPS. As a result, the starting material included extracts of healthy tomato leaves spiked with different virus isolates at various concentrations. TSWV isolates are very diverse in terms of the host plant in which they are detected, geographic origin, and molecular and serological properties. Consequently, determination of the analytical specificity of the tests was considered essential. Different TSWV isolates (i.e., geographic, biological) were used to ensure that the tests selected covered the majority of the known TSWV isolates (to evaluate the analytical specificity [inclusivity]). The analytical specificity (exclusivity) of the selected tests was determined in the preliminary studies by including different orthotospovirus species similar to TSWV based on their serological and molecular properties. Usually, the main constraints in the organisation of the TPS are the availability of biological material, the availability of personnel and funding resources, and the limited time frame to conduct the TPS. Taking into account those constraints, the TPS organiser considered it feasible to include 22 samples and the relevant controls, following the guidelines described in Massart et al. (2022), to be analysed by approximately 20 participating laboratories. It is of utmost importance that all methods or tests that are planned to be included in a TPS are well established in the laboratory of the TPS organiser, and that appropriately trained or experienced personnel are available to perform and supervise the process.

3.2.2 Definition of Weighted Criteria and Targeted Values for the Selection of Tests

Usually, the number of tests available to detect a specific pest is significantly higher than the number of tests that can be included in a TPS, except for emerging pests, for which the number of tests can be low. Not all available tests for a given pest are suitable for a TPS, and selection of the suitable tests should be impartial and transparent, and organised to achieve the best possible results with the resources available. Therefore, it is best to first define the criteria that have to be used for the selection of the tests. The list of criteria that can be used for the selection of tests may include the performance characteristics of the tests that are important for a particular intended use, the experience of the organiser, and the applicability of the test (see applicability, chemistry, instrument …). In addition, there is the need to define the type of value a criterion can take (e.g., quantitative, qualitative), called the criteria descriptors below, the targets to be reached by the test, and the relative weight of each criterion. The weights applied can be different for different uses of a test (e.g., laboratory, on-site). The weighted criteria need to be set to objectively select tests from a list of tests for a specific pest, each of which will have advantages and disadvantages depending on the scope of the TPS.

Criteria descriptors can be quantitative; e.g., the concentration of a pest that needs to be detected (not all pests can be counted easily; e.g., viruses). Descriptors can also be simple Yes/No answers, or relative levels. As already emphasised above, the criteria can be weighted differently to allow the selection of the appropriate tests for the defined scope of the TPS. The most important criteria are considered first (i.e., those with high weight); if some of the tests show similar values and performances, then less important criteria can be used as well (i.e., those with medium or low weight). The important performance criteria for tests for diagnostic purposes are: analytical sensitivity, analytical specificity (i.e., exclusivity, inclusivity), selectivity, repeatability and reproducibility. Other criteria can help to evaluate other properties of specific tests, such as applicability, reagents and equipment. Sometimes, when selecting between tests with similar characteristics, a test with a higher sample throughput and easier test procedures can have an advantage. However, it is important to also evaluate how accessible or stable the required reagents are, and the equipment that is needed to perform a specific test for a specific scope. If a criterion is not relevant for a specific method or pest combination, it can be ignored (i.e., not given any weighting).

The values of the weights that are assigned to the criteria can differ depending on the scope of the TPS. For example, for emerging pests, the aim of the TPS might be to select tests to detect the pest at low concentrations in asymptomatic plant material (e.g., in seeds). Conversely, for testing of symptomatic material, detection at low concentrations is not critical.
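The weighting logic described above can be sketched in a few lines of Python. This is a minimal illustration only: the criteria names, the numeric weights (3 = high, 2 = medium, 1 = low, 0 = not relevant), the scores between 0 and 1, and the two tests are all hypothetical and are not taken from Table 3.2.

```python
# Hypothetical weights: 3 = high, 2 = medium, 1 = low, 0 = criterion not relevant.
WEIGHTS = {
    "analytical_specificity": 3,
    "analytical_sensitivity": 2,
    "validation_data_available": 2,
    "standard_equipment_only": 1,
    "sample_throughput": 0,  # ignored for this (hypothetical) scope
}

# Hypothetical scores in [0, 1]: how closely each test meets the target value.
TESTS = {
    "test_A": {
        "analytical_specificity": 1.0,
        "analytical_sensitivity": 0.5,
        "validation_data_available": 1.0,
        "standard_equipment_only": 1.0,
        "sample_throughput": 0.2,
    },
    "test_B": {
        "analytical_specificity": 0.5,
        "analytical_sensitivity": 1.0,
        "validation_data_available": 0.5,
        "standard_equipment_only": 1.0,
        "sample_throughput": 1.0,
    },
}


def weighted_score(scores, weights):
    """Weighted mean of the criterion scores; missing criteria count as 0."""
    total_weight = sum(weights.values())
    if total_weight == 0:
        raise ValueError("at least one criterion needs a non-zero weight")
    return sum(w * scores.get(name, 0.0) for name, w in weights.items()) / total_weight


def rank_tests(tests, weights):
    """Test names ordered from highest to lowest weighted score."""
    return sorted(tests, key=lambda t: weighted_score(tests[t], weights), reverse=True)


if __name__ == "__main__":
    for name in rank_tests(TESTS, WEIGHTS):
        print(name, round(weighted_score(TESTS[name], WEIGHTS), 3))
```

Changing the weights for a different scope (for example, raising the weight of analytical sensitivity for asymptomatic material) can change the ranking without touching the underlying data, which is the point of defining the weights before looking at the tests.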

The list of criteria defined here is not specific to TPS nor to plant health, and can be used for intralaboratory studies as well as for TPS in other fields. These criteria can thus be considered as the common rules for the selection of tests included in such studies, whereby they can be adapted to specific purposes when needed. Table 3.2 lists these common rules for the selection of tests for validation, which need to be defined and described to ensure the transparency of the selection process (for details see Alič et al. 2020; Anthoine et al. 2020). The TPS organiser should also define targeted values to be reached by a test for each criterion.

Table 3.2 is an example of how the targeted values and weights were defined for each criterion for a TPS organised for the detection and identification of TSWV. Here, it was very important that the test chosen detects all of the correct targets (i.e., here, part of the TSWV genome or an appropriate protein); thus, the choice of a test with the ‘wrong’ target would have significant impact on the performance. The availability of validation data was considered as moderately important, as the goal was to provide validation data. Analytical and diagnostic sensitivity were not the most important criteria in this case, as the goal was detection and identification in symptomatic plants; i.e., high concentrations of the pest were expected. However, analytical specificity was very important, as it is common that tests for detection and identification of TSWV cross-react with similar orthotospovirus species, and some of these can infect the same plant species (i.e., tomato in this case). On the other hand, as TSWV has a worldwide geographical distribution, it was important that the tests detected all isolates of the virus. As the plan was also to include a considerable number of laboratories for the evaluation of the tests (to subsequently provide enough data for reliable statistical analysis), the requirement for any specific equipment had to be avoided, as this would have led to the elimination of a considerable number of potential participating laboratories. For on-site detection tests, it was important that the reagents needed would be stable at room temperature for a reasonable period of time, and that the test did not require expensive equipment.

As mentioned before, these criteria can also be used in other studies. To make them easily available to colleagues who might be interested, an empty form of Table 3.2 can be downloaded with this book (Table 3.1).

3.2.3 Collection of Available Validation Data

After these ‘rules’ for the selection of the tests have been decided upon, the validation data should be collected to support the selection of the tests. Available validation data and other data about tests (e.g., matrices tested, processing of samples) can be collected through literature searches, internet searches, database searches, experience of the TPS organiser, EUPHRESCO final reports (https://www.euphresco.net/projects/portfolio), dedicated questionnaires or surveys on diagnostic tests used in different laboratories, and validation data from discussions with commercial kit providers. The authors of tests from scientific publications can also be contacted to obtain additional validation data on published tests. Sometimes, validation data for a test will exist, but might not be publicly available, or only be partially available. This communication is time consuming, but it enables a TPS organiser to make the best decisions in the test selection process.

In many cases the validation data for tests are not comparable (e.g., different sample types, units, volumes), and sometimes crucial information is missing or is not available (e.g., sample preparation and concentration). It is important to also evaluate the reported information about the number of tested targets and non-targets and the controls performed, and to compare these between the different tests available. In all of these cases, the expertise of the TPS organiser is invaluable to be able to judge the results reported and the other relevant information.

The biggest designated database of validation data for plant pests is the EPPO database on diagnostic expertise (available at: https://dc.eppo.int/), which contains a significant number of validation datasheets for pests that are considered important in the EPPO region. Laboratories are invited to submit their validation data to this database to help other laboratories when selecting tests, and to contribute to the better diagnostics of certain pests. In addition, the availability of the data can prevent duplication of work across different laboratories, so other laboratories can focus on the pests or tests for which there are no validation data available. Other more specialised databases where validation data can be found are the database of the ISHI-VEG validation reports of the International Seed Federation (available at: https://www.worldseed.org/our-work/phytosanitary-matters/seed-health/ishi-veg-validation-reports/) and the database of the International Seed Testing Association (ISTA) (available at: https://www.seedtest.org/en/method-validation-reports-_content%2D%2D-1%2D%2D3459%2D%2D467.html).

In 2021, new sources of validation data for diagnostic kits manufactured by small and medium-sized enterprises (SMEs) became available through the European Plant Diagnostic Industry Association (EPDIA) website. The role of the EPDIA is to ensure the marketability of SMEs by facilitating dialogue with stakeholders and decision makers. In parallel with the establishment of the EPDIA, an EU Plant Health Diagnostics Charter was developed to describe the quality procedures for the production and validation of commercial tests produced by EU manufacturers. This EU Charter will help to guarantee the quality and reliability of products for end users worldwide. The accession of manufacturers to the EPDIA and the EU Charter will allow SMEs to increase their competitiveness (Trontin et al. 2021; EPDIA Quality Charter available at https://www.epdia.eu). The EPDIA database can be searched according to different parameters, such as pests, methods and tests. The EPDIA database contains basic information on the kits (tests), and also validation data sheets provided by the manufacturers and validation data sheets about the availability of their kits in the EPPO database for diagnostic expertise. The database of the EPDIA is available at: https://pestdiagnosticdatabase.eu/.

As mentioned above, for some pests there are a huge number of tests available, including those from commercial providers and from scientific publications. Therefore, the collection of validation data is a time-consuming process that should be planned in advance. For example, for the TPS that was organised for the detection and identification of TSWV, collection of the available validation data took 3 months. TSWV ranks second on the list of the top 10 economically most important plant viruses (Scholthof et al. 2011; Rybicki 2015), and has a wide host range of >1000 plant species (which include some important vegetables, such as tomato and pepper, and a variety of ornamental plants). This virus has been in the spotlight of diagnosticians and researchers worldwide. Therefore, it was expected that there would be numerous tests available for its detection and identification. After a thorough search through all of the available resources, a total of 76 tests were found, and all available validation data for these were collected. This process included searching the websites of commercial providers, communicating with them to clarify missing data, analysing the available validation data from databases, and collecting the available research articles in which detection of TSWV was included. On the other hand, for some pests that are only important in certain areas, or that are emerging, the relative lack of available tests means that the same step will take considerably less time. As an example, for a TPS on Cryphonectria parasitica, the number of tests available, including commercial ones and tests from scientific publications, was low (only three tests were available at the time).

3.3 Preliminary Studies

Preliminary studies (sometimes called pilot studies) allow TPS organisers to foresee unexpected events and to determine whether the TPS itself is feasible. Preliminary studies are usually carried out initially on a small number of samples. If the results of the small-scale preliminary studies do not meet expectations, or if they show that the study itself is not feasible, the TPS organiser should make appropriate adjustments to prevent further waste of time and resources. For the TPS on detection and identification of TSWV, the study included an evaluation of the possibility of using a single extraction buffer for all ELISA tests, a buffer that in all cases differed from the extraction buffers recommended by the manufacturers (see Sect. 3.3.3). However, for some kits the results of the preliminary studies showed that this change can affect the performance of the tests. Therefore, this change was not introduced in the TPS. Due to limited resources, the limited number of samples that can be included in a TPS and the tight time schedule, some performance criteria can only be determined in preliminary studies (e.g., analytical specificity). The first step of a preliminary study is usually the definition of the list of tests to be evaluated, with collection of the material to be used for the evaluation of the selected tests (e.g., isolates, strains, populations, plant materials). Then, the results of these preliminary studies are assessed against the previously adopted criteria. The results from preliminary studies can help the TPS organiser to select the best tests to be included in the TPS based on its scope.

3.3.1 Definition of a List of Tests Subjected to Preliminary Studies

There are numerous sources available that can be used to collect tests for any particular pest (see Sect. 3.2.3). Data from all of the available tests should be collected systematically according to the criteria presented in Table 3.2, and their performance should be judged based on those predefined weighted criteria. For this step, the experience and critical judgement of the TPS organiser is crucial to define the tests for preliminary studies. Where there are not enough data collected to make an informed decision on test selection, the TPS organiser can use additional available resources to better define the performance criteria of some tests; e.g., for PCR-based tests, in-silico analysis can be carried out to check the specificity of the primers and probes, to make an informed decision on test selection. It is important that all of these selection steps are documented, and the TPS organiser should keep records that explain the selection of the tests.
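As a rough illustration of what an in-silico check of primer specificity involves, the sketch below counts the mismatches between a primer and every same-length window of a sequence and reports the best alignment. It is a toy example under stated assumptions: the primer, the three sequences and the threshold of two mismatches are invented for illustration, and a real analysis would normally rely on dedicated tools and curated sequence databases, and would also consider the reverse complement, degenerate bases and the position of mismatches relative to the 3' end.

```python
def min_mismatches(primer, target):
    """Smallest number of mismatches between the primer and any
    same-length window of the target sequence (forward strand only)."""
    primer, target = primer.upper(), target.upper()
    if len(primer) > len(target):
        raise ValueError("primer is longer than the target sequence")
    best = len(primer)
    for start in range(len(target) - len(primer) + 1):
        window = target[start:start + len(primer)]
        mismatches = sum(1 for a, b in zip(primer, window) if a != b)
        best = min(best, mismatches)
    return best


def predicted_to_bind(primer, target, max_mismatches=2):
    """Crude prediction; the threshold is an assumption, not a published rule."""
    return min_mismatches(primer, target) <= max_mismatches


# Hypothetical primer and sequences, for illustration only.
PRIMER = "ATGGCTAAGC"
SEQUENCES = {
    "target_isolate_1": "CCATGGCTAAGCTT",  # contains a perfect match
    "target_isolate_2": "CCATGGCAAAGCTT",  # contains a one-mismatch site
    "non_target_1": "CCTTGACTTAGGTT",      # no close match
}

if __name__ == "__main__":
    for name, seq in SEQUENCES.items():
        print(name, min_mismatches(PRIMER, seq), predicted_to_bind(PRIMER, seq))
```

Run over a panel of target and non-target sequences, this kind of check gives a first indication of inclusivity and exclusivity before any laboratory work, but it does not replace the experimental evaluation.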

The selection of the tests for the TPS on the detection and identification of TSWV was a difficult process, considering the number of tests available. Taking into account the scope and predefined criteria, the analytical specificity (i.e., exclusivity, inclusivity) was defined as the most important criterion. The reason for this was that it was known that tomato can be affected by a number of other orthotospoviruses besides TSWV, including alstroemeria necrotic streak virus (ANSV), groundnut bud necrosis virus (GBNV), groundnut ringspot tospovirus (GRSV), tomato chlorotic spot tospovirus (TCSV), tomato yellow (fruit) ring virus (TYRV), tomato zonate spot virus (TZSV), tomato necrotic ringspot virus (TNRV), watermelon silver mottle tospovirus (WSMoV) and capsicum chlorosis orthotospovirus (CaCV) (EFSA 2012). The symptoms caused by those orthotospoviruses, and also by infection with some other viruses, can be similar, and therefore laboratory testing is needed to identify the causal virus species. In addition, even though TSWV is no longer on the list of quarantine pests in the EU (Regulation (EU) 2019/2072 2019), it is still a very important pathogen and has the status of a regulated non-quarantine pest. The importance of TSWV for the production of agricultural plants is highlighted by the significant losses that can occur as a consequence of TSWV infection, combined with its extensive host range. An increasing problem is the emergence of TSWV resistance-breaking isolates (Turina et al. 2012). The resistance-breaking isolates can overcome the Sw-5 resistance gene in tomato and cause significant losses, and as this gene provides the only commercially available resistance to TSWV in tomato, it is very important to limit or prevent the further spread of these isolates (Aramburu and Martí 2003; Ciuffo et al. 2005; Turina et al. 2012). Therefore, accurate detection and identification of TSWV is an important step to establish effective control strategies. Based on these criteria, the tests suitable for the detection and identification of TSWV in symptomatic leaves of tomato were defined.

As indicated, after an extensive search for TSWV detection by commercial providers of tests and in scientific papers (with information collected from websites and through direct contact), a total of 76 different tests were evaluated for inclusion in the preliminary studies:

- 13 ELISA tests (DAS-, TAS-, B-fast, ELISA with specific single chain antibodies);
- 2 Luminex tests;
- 2 tissue-blot immunoassays (TBIAs);
- 2 dot-blot immunoassays (DBIAs);
- 4 on-site detection tests (lateral flow devices [LFDs], rapid immune gold);
- 2 dot-blot hybridisation tests;
- 36 reverse transcription (RT)-PCR or immunocapture (IC) RT-PCR tests;
- 8 real-time RT-PCR tests (SYBR green, TaqMan);
- 4 RT loop-mediated isothermal amplification (LAMP) or IC-RT-LAMP tests;
- 1 RT thermostable helicase-dependent DNA amplification (RT-HAD) test;
- 1 hyperspectral imaging and outlier removal auxiliary classifier generative adversarial nets (OR-AC-GAN) test;
- 1 microarray test.

All 76 of these tests for detection and/or identification of TSWV were based on the biological, serological and molecular properties of the pathogen. Biological tests such as mechanical inoculation of test plants do not allow pathogen identification, and although widely used, serological detection is often hampered by cross-reactions with other similar orthotospovirus species (Hassani-Mehraban et al. 2016). The molecular tests such as RT-PCR and real-time RT-PCR were developed based on amplification of different genomic parts. Molecular tests are usually more sensitive compared to biological and serological tests; however, they can also cross-react with other orthotospovirus species or they do not detect all TSWV isolates. Consequently, the main challenge for the detection and identification of TSWV was the selection of the appropriate method and test. The process and reasoning for the test selection for the TPS on TSWV was the following.

The ELISA method was taken into consideration because it is widely used and 13 tests were evaluated. Some of these tests were excluded because the commercial provider had stopped production of the test or was in the process of changing the antisera, or because the TPS organiser could not obtain the required information on the validation data despite direct communication with the company. ELISA with specific single-chain antibodies was described in one scientific publication. However, this was excluded because the antibodies are not commercially available. In total, five ELISA tests were selected for the preliminary studies (Table 3.3).

Tests based on Luminex technology were not selected for the preliminary studies because this requires specific equipment that is not available for many diagnostic laboratories, and the TPS organiser did not have experience with this method. TBIA and DBIA tests were not selected for the preliminary studies due to the lack of validation data, and because the interpretation of the results in some cases is difficult, as the results can depend on the experience of the person reading them. In addition, the TPS organiser did not have experience with these methods.

For the on-site detection methods, four tests were taken into consideration, and two were selected for the preliminary studies (Table 3.3). These two tests were selected because of their practicality for on-site use. The other two tests were excluded because there was no commercial kit available.

Altogether, 52 molecular tests were considered. The LAMP method was not selected because the protocols were in Chinese and Japanese only. IC-RT-LAMP tests were not selected because these tests required several steps, and in addition, IC-RT-LAMP is not widely used in diagnostic laboratories in the EU (i.e., there were no EU research publications, and this was not included in the EPPO and IPPC diagnostic protocols for TSWV detection). SYBR green real-time RT-PCR, dot blot hybridisation, RT-HAD, OR-AC-GAN and microarrays were not selected because they were not frequently used in diagnostic laboratories, with the consequent lack of validation data. In addition, OR-AC-GAN and microarrays require specific equipment that was not considered as standard laboratory equipment. Among the 34 conventional RT-PCRs considered, eight were selected for the preliminary studies based on the availability of validation data and on the results of in-silico analysis (Table 3.3). Among these eight selected tests, seven were considered to be TSWV specific, and one was a generic test for orthotospoviruses. The generic test (Hassani-Mehraban et al. 2016) allowed the detection of American clade 1 orthotospoviruses, which includes TSWV. This generic test was selected because it allowed identification of orthotospovirus species by Sanger sequencing of the RT-PCR product. IC-RT-PCR tests were not selected because the performance of these tests requires additional steps compared to conventional RT-PCR. In addition, IC-RT-PCR is not widely used in diagnostic laboratories in the EU (i.e., there were no EU research publications, and this was not included in the EPPO and IPPC diagnostic protocols for TSWV detection). Of the five available TaqMan real-time RT-PCRs, four were selected for the preliminary studies based on the availability of validation data and on the results of in-silico analysis (Table 3.3). One commercial TaqMan real-time RT-PCR test was not selected for the preliminary studies because the protocol was available in Russian only.
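The selection described above can be summarised as a simple tally, which is also a convenient way to keep the selection record traceable. The counts below are those reported in the text; the grouping into categories follows the list of 76 tests, and the total number of selected tests is derived here by summation rather than quoted from Table 3.3.

```python
# (tests considered, tests selected for the preliminary studies),
# using the counts reported in the text for the TSWV example.
SELECTION = {
    "ELISA": (13, 5),
    "Luminex": (2, 0),
    "TBIA": (2, 0),
    "DBIA": (2, 0),
    "on-site detection": (4, 2),
    "dot-blot hybridisation": (2, 0),
    "RT-PCR or IC-RT-PCR": (36, 8),
    "real-time RT-PCR": (8, 4),
    "RT-LAMP or IC-RT-LAMP": (4, 0),
    "RT-HAD": (1, 0),
    "OR-AC-GAN": (1, 0),
    "microarray": (1, 0),
}

considered = sum(n for n, _ in SELECTION.values())
selected = sum(k for _, k in SELECTION.values())

if __name__ == "__main__":
    for method, (n, k) in SELECTION.items():
        print(f"{method:<24} considered {n:>2}  selected {k:>2}")
    print(f"{'total':<24} considered {considered:>2}  selected {selected:>2}")
```

A tally of this kind makes it easy to confirm that every test found in the search is accounted for, either as selected or as excluded with a documented reason.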

In the TPS on TSWV, the validation data collected for the tests varied across companies and publications. For some tests, extensive validation data were available; for others, there were few or none. Therefore, comparison of the different tests based on the available validation data was very difficult.

3.3.2 Collecting Isolates/Strains/Populations and Plant Material

Depending on the scope of a particular TPS and the availability of different materials and quarantine requirements, different types of samples can be used for evaluation of the tests in a TPS. The material can be obtained from various sources, which include international and national collections, collections of the TPS organiser, and through interlaboratory exchanges. It is very important that the material selected covers the variability in the target. It is highly recommended that reference materials are used whenever possible (see Sect. 3.6.2). If no reference material is available, well characterised material prepared and tested in the laboratory of the TPS organiser should be used (i.e., internal reference material). Considering the seasonal nature of plant production and the possible lack of naturally infected plants or material in the country of the TPS organiser, or if fresh material is not available, or if the material is not available in sufficient quantities for all of the participants, it is possible to use spiked material, where the tested analyte is spiked into the healthy matrix defined in the scope of the TPS. Pure cultures and DNA/RNA extracts can also be used. For more details, see Sects. 3.2.1 and 3.6.2.

The TPS organiser should plan in advance the isolates that will be required for the preliminary studies, and later for the TPS, and how they will be provided. It is important to consider whether a Letter of Authorisation or other import permits might be needed, and to consider the relevant quarantine regulations. If the isolates are obtained through collaboration with a colleague, the TPS organiser should consider signing a Material Transfer Agreement, which is a written contract that governs the transfer of tangible research materials between two organisations when the recipient intends to use them for their own research purposes. All isolates collected for inclusion in the TPS should first be evaluated in preliminary studies.

For the study on TSWV, it was important to include different isolates of TSWV, to cover the different populations of the virus, as well as isolates of other orthotospoviruses. These isolates were obtained from the collection of the TPS organiser and from the commercial collection of the Leibniz Institute DSMZ - German Collection of Microorganisms and Cell Cultures (DSMZ). In addition, some isolates were provided by fellow researchers. In total, 11 species of orthotospoviruses were included in the preliminary studies. TSWV was represented with 15 isolates, impatiens necrotic spot virus (INSV) with five isolates, chrysanthemum stem necrosis virus (CSNV), TCSV and TYRV with two isolates each, and ANSV, CaCV, GRSV, iris yellow spot virus (IYSV), melon severe mosaic virus (MSMV) and WSMoV with one isolate each (Table 3.4).

3.3.3 Evaluation of the Tests Selected for the Preliminary Studies

The tests selected for the preliminary studies are evaluated internally by the TPS organiser, to provide missing validation data for the selection of the final tests to include in the TPS, and to identify any difficulties for the TPS organisation. The number of samples included in the preliminary studies can differ from that used in the TPS, based on resources, availability of isolates/strains/populations, and the diagnostic parameters for which data are required. Therefore, performance characteristics such as inclusivity, exclusivity and selectivity, which usually require many samples to be prepared, can be determined in preliminary studies based on a panel of different isolates/strains/populations of the target organism, with non-target pests that might cross-react with the target organism, and if appropriate with healthy plant samples (i.e., matrix controls). Throughout the whole process, the EPPO guidelines should be followed (EPPO PM 7/98(5) 2021a). Trained or experienced personnel should perform the analyses. During the process, the personnel should be supervised and all of the steps should be carried out to avoid cross-contamination. All of the documents describing the selection process in the preliminary studies should be adequately filed, as well as all of the protocols, including any changes made to them and the reasons leading to the decisions on how the test selection or any test modifications were implemented. The documentation should be stored in accordance with the quality assurance system of the TPS organiser, and should provide traceability of all of the steps.

Some modifications to the test protocols can be made, but these should first be examined in the laboratory of the TPS organiser. For example, when several ELISA tests are available from commercial providers, each provider recommends different sets of buffers. However, as a TPS organiser is limited in terms of resources and time, some modifications (e.g., the use of the same buffers for all of the ELISA tests) can be introduced to facilitate the preliminary studies. Ultimately, this allows more tests to be included in the TPS, more validation data to be produced, and the testing conditions to be standardised. The producers of commercial tests might benefit from such a study, as getting valuable information on how their tests perform under certain conditions can be of common interest; however, it is recommended that the TPS organiser communicates these adaptations to the kit providers. Communication with kit producers is important because kits are optimised with specific chemicals/buffers, and any changes might affect their performance. Results obtained in that way might not reflect the “true” performance of the kit. The TPS organiser can conduct a small comparison study before any decision is made on the possibility of using different chemicals/buffers.

Regarding the TPS on TSWV, among the 19 tests that were included in the preliminary studies, eight tests were selected for the TPS (Table 3.3, bold text): two DAS-ELISA, two tests for on-site detection, one conventional RT-PCR, and three real-time RT-PCRs (RT-qPCR), each based on predefined criteria. The results of the preliminary studies obtained for the tests selected for the TPS are presented in Table 3.4. Although all available serological tests cross-reacted with other orthotospoviruses, the best in terms of analytical sensitivity were selected because of their robustness and because they were widely used in many diagnostic laboratories (e.g., ELISA tests) or their practicality for on-site detection. For the molecular methods, only tests that did not show cross-reactions with other orthotospoviruses were selected for the TPS.

When the final selection of the tests to be included in the TPS has been achieved, and when these include tests from commercial providers, the decision (with justification) of the TPS organiser should be communicated to the company, respecting confidentiality. In addition, if tests from companies are selected, the TPS organiser should inform the company about the extent of the TPS as soon as the information is available (i.e., number of samples, number of participants); the availability of reagents at the time of the TPS might be a limiting factor, and this should be identified and anticipated by the TPS organiser. This will enable the company to produce enough reagents in time for all of the participants. In addition, this can lead to the establishment of good practice in communication with the companies.

3.4 Selection of the TPS Participants

The selection of competent laboratories is critical for a TPS. This section describes the steps required to select the TPS participants, which includes identification of potential participants, establishment of criteria for selecting participants, invitation for participation of potential participants, and establishing a contract with the participants (Fig. 2.2).

3.4.1 Identification of Potential Participants for a TPS

Potential participants in a TPS can be identified in different ways, such as through surveys, the EPPO database on diagnostic expertise, professional networks, previous participation in proficiency tests or TPS, and social media. Ideally, all laboratories, including diagnostic laboratories, private laboratories at commercial companies, and laboratories at public institutions, should have the opportunity to express their interest in taking part in a TPS.

3.4.2 Definition of Weighted Criteria for the Selection of the TPS Participants

The criteria for the selection of the TPS participants must be determined in advance, on the basis of the requirements of the TPS (e.g., see Table 3.5). A target value and the relative weighting need to be assigned to each criterion, to give greater importance to the most critical ones. Weighted criteria need to be set to objectively select the participants for a TPS, with an emphasis on availability of the applicants to perform the tests within the required timeframe, their technical expertise in the use of the methods, authorisation to work with the specific pest, the possibility to obtain import documentation in time (e.g., a Letter of Agreement, if needed), and the quality assurance system in place in the participating laboratory. Other criteria that might be considered in the selection are, for example, technical expertise on the pest group, previous participation in proficiency tests or TPS, or known ability to perform all of the methods selected for the TPS. All criteria considered of high importance must be met by the participants, to be sure that they are proficient and can correctly perform the selected tests, to enable the correct analysis and evaluation of the TPS results. Further criteria (weighted as less important) can be used to allow the objective selection of qualified participants in case there are too many laboratories applying to take part in the TPS.
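
The weighting approach described above can be illustrated with a minimal sketch. The criterion names, the weights and the split between mandatory and optional criteria are hypothetical values chosen for illustration only; they are not taken from Table 3.5, and each TPS organiser would define their own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    weight: int       # relative importance (hypothetical value)
    mandatory: bool   # True for criteria of high importance that must be met


# Hypothetical criteria and weights, for illustration only.
CRITERIA = [
    Criterion("authorisation_for_pest", 5, True),
    Criterion("available_in_timeframe", 5, True),
    Criterion("method_expertise", 4, True),
    Criterion("import_documents_in_time", 4, True),
    Criterion("quality_assurance_system", 3, True),
    Criterion("previous_pt_or_tps", 1, False),
    Criterion("pest_group_expertise", 1, False),
]


def evaluate_laboratory(answers, criteria=CRITERIA):
    """Return (eligible, score) for one laboratory's information form."""
    met = {c.name for c in criteria if answers.get(c.name, False)}
    # A laboratory is eligible only if every mandatory criterion is met.
    eligible = all(c.name in met for c in criteria if c.mandatory)
    score = sum(c.weight for c in criteria if c.name in met)
    return eligible, score


def rank_laboratories(forms, criteria=CRITERIA):
    """Rank eligible laboratories by descending weighted score."""
    scored = [(lab, *evaluate_laboratory(a, criteria)) for lab, a in forms.items()]
    eligible = [(lab, score) for lab, ok, score in scored if ok]
    return sorted(eligible, key=lambda item: item[1], reverse=True)


mandatory_ok = {c.name: True for c in CRITERIA if c.mandatory}
forms = {
    "lab_A": {**mandatory_ok, "previous_pt_or_tps": True},
    "lab_B": {**mandatory_ok, "previous_pt_or_tps": True, "pest_group_expertise": True},
    "lab_C": {**mandatory_ok, "authorisation_for_pest": False},
}
ranking = rank_laboratories(forms)
```

In this sketch the less important criteria only influence the ranking, which is how they would be used when more laboratories apply than can be accommodated.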

Depending on the scope of the TPS, the expected number of participants, and the target pest of the TPS, different criteria can be used as the most important for selection of the potential participants. VALITEST TPS organisers considered the following criteria as the most important for selection of the participants: appropriate authorisation to work with a particular pest; appropriate equipment and facilities; commitment to perform analyses on time; willingness to implement all of the tests within the method; technical expertise; and existing quality assurance and traceability. However, exceptions can be made if required; e.g., if the TPS is organised for a ‘new’ pest, as was the case in the TPS for tomato brown rugose fruit virus, laboratories are expected to lack technical expertise with the pest, and therefore this criterion was not decisive in the selection of potential participants. If a TPS for a pest that has a limited area of distribution is being organised, it can be expected that the number of potential participants will be limited, and therefore, the decisions should be made with more relaxed criteria. Future TPS organisers should have all of these aspects in mind during the planning stage of a TPS.

Additionally, information about the participants' equipment is required, to help the TPS organiser to plan the TPS and to interpret the results obtained.

3.4.3 Invitations and Analysis of the Eligibility of Potential TPS Participants

After the organiser of a TPS has identified the potential participants for the TPS and defined the criteria that should be met by the participants of the TPS, invitation letters for participation in the TPS should be sent out. An invitation letter must contain the name of the pest that will be the target of the TPS, with a description of the scope of the TPS, and the details of which methods will be evaluated in the TPS (Appendix 1). It should also inform the potential participants about the timeline and the deadlines. To assess the eligibility of an interested laboratory for participation in the TPS, the invitation letters should be sent along with a TPS participant information form that asks the potential participants about their experience with the diagnostic methods, pest groups and quality assurance, and about their available equipment (Table 3.5). Potential participants should respect the deadlines to provide the requested information. If a potential participant does not return the completed form by the deadline, it can be considered that they are not interested in taking part in the TPS.

Responses from interested laboratories are then evaluated by the TPS organiser using the weighted criteria described above to select the best TPS participants, and their participation is confirmed by sending them an acceptance email. When organising a TPS, it is difficult to estimate how many potential participants will be interested in taking part. For the TPS organiser this information is important, because it significantly affects the TPS process, especially in terms of the preparation of the materials and of ensuring sufficient participation to allow proper statistical analysis of the data. Therefore, we present here the experience gained in the VALITEST Project based on the 12 TPS organised, which shows that a response rate of 20% to 70% can be expected (Table 3.6). It should be taken into consideration that in some cases, not all of the registered laboratories will be able to provide their results (Table 3.6) for different reasons, such as the inability to obtain import permits or the chemicals needed to perform the analyses on time, or a global crisis (e.g., the COVID-19 outbreak).

Participant selection can significantly affect the final results. It is generally acknowledged that laboratories participating in a TPS should be proficient so that the results obtained reflect the performance of the tests and not the proficiency of laboratories. Thus, the criteria and weighted values used for the selection of participants should be well established to select proficient laboratories. If a participant provides results that are far from the expected results, that particular dataset may also be excluded from the analysis. However, the selected criteria and weighted values should not lead to the exclusion of too many laboratories. Indeed, at least 10 datasets per test are needed to perform the statistical analysis and to provide results with high confidence (EPPO PM 7/122(1)). In addition, if the criteria used are too strict, only highly proficient laboratories will be selected and a high proportion of concordant datasets can be expected. As a consequence, the performance characteristics of the test(s) might be overestimated and might not reflect the global diagnostic community.
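
The planning arithmetic implied by these figures can be sketched as follows. The function name and the `completion_pct` parameter (the share of registered laboratories that eventually return usable results) are assumptions introduced here for illustration; the 10 datasets per test and the 20% to 70% response rate come from the text above. Integer percentages are used so that the rounding up is exact.

```python
def invitations_needed(min_datasets, response_pct, completion_pct=100):
    """Smallest number of invitations expected to yield `min_datasets` datasets.

    response_pct:   expected percentage of invited laboratories that register.
    completion_pct: hypothetical percentage of registered laboratories that
                    return usable results (100 means no drop-out).
    """
    if not (0 < response_pct <= 100 and 0 < completion_pct <= 100):
        raise ValueError("percentages must be in the range 1 to 100")
    numerator = min_datasets * 100 * 100
    denominator = response_pct * completion_pct
    return -(-numerator // denominator)  # ceiling division on integers


# Pessimistic and optimistic ends of the response range reported above.
worst_case = invitations_needed(10, response_pct=20)
best_case = invitations_needed(10, response_pct=70)
# Same response rates with an assumed 80% of registered laboratories reporting.
worst_case_with_dropout = invitations_needed(10, 20, completion_pct=80)
```

Planning for the pessimistic end of the range gives a margin against drop-outs and against datasets that later have to be excluded from the analysis.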

Usually in a TPS, the decision on whether a laboratory is proficient is based on the self-assessment of competence by the potential participants, which can be biased and subjective, but this is a risk that is accepted by TPS organisers. It is worth mentioning that during the selection process, it is important to have open communication with the participants: many misunderstandings can be resolved in this way, so that competent laboratories are not excluded and unqualified laboratories do not participate in the TPS. The whole process therefore requires dedicated time that should be appropriately considered during the planning of the TPS.

3.5 Contracts and Technical Information for TPS Participants

A TPS organiser should provide all of the relevant information regarding the TPS to the participants, which includes the definition of the scope of the TPS, the contract and the technical sheets, including information regarding specific requirements, such as a Letter of Authorisation. The TPS organiser is required to treat the information on the participants as confidential, if this status is not defined differently.

A TPS organiser should foresee potential difficulties in the course of the TPS, and therefore should provide the information regarding these to the TPS participants in advance. Among the difficulties that can affect the course of a TPS, the most important and frequent include delays in obtaining the authorisation document (e.g., a Letter of Authorisation) required for quarantine pests and in obtaining the necessary chemicals and reagents. However, if the TPS organiser informs the participants of those potential difficulties sufficiently in advance, their impact can be avoided, or at least minimised.

The contract serves to define the rights and obligations of the parties involved in a TPS (i.e., the organiser and the participating laboratory). In addition, the contract contains a detailed description of the timelines and the detailed conditions of participation for the interested laboratories. The TPS contract can also serve as a registration form. An example template of a contract is given in Appendix 2. Appendix 3 includes a template that can be used to collect the contact information of participating laboratories, which might be used in future steps of a TPS (such as sending of the samples and submission of the reports). There are different ways to collect such data, and in the framework of VALITEST, they were collected using an MS Excel template.

Contracts need to be accompanied by the TPS technical sheet that contains a general overview of the TPS, with the required information about the tests, sample panels and important dates (planning of the TPS), and the detailed experimental protocols for performing each test. The technical sheet should also include a list of all of the necessary consumables and the quantities that will need to be ordered by the participants of the TPS. An example template of a TPS technical sheet is given in Appendix 4.

The TPS organiser should establish and maintain open and transparent communications with the TPS participating laboratories. Although this can be time consuming for both sides, it can be crucial to avoid misunderstandings.

3.6 Preparation and Dispatch of Samples and Reagents

For TPS (as for any other interlaboratory comparisons), it is important that the samples match as closely as possible the materials encountered in routine testing, if it is not defined differently in the aim and the scope of the TPS. This includes the matrix (e.g., host plant), the target (e.g., pest) and the concentrations (e.g., infection levels) (EPPO PM 7/122(1)).

3.6.1 Definition of the Panel of Samples

Depending on the scope of a particular TPS, different types of samples can be prepared, such as fresh naturally infected plant material, DNA/RNA, spiked matrix, artificially inoculated matrix, freeze dried infested plant material, samples that mimic infested material, pure cultures, traps and pest specimens. It is very important that the panel of samples is appropriate for each diagnostic method included in a TPS, depending on the pest/matrix combination, covering:

- the range of concentrations and genetic diversity of the target pest;
- the diversity of the uninfested material (when relevant) for the selectivity assessment;
- the diversity of the non-target organisms that occur in the same ecological area.

In addition, the quantities of samples prepared for the TPS need to be sufficient to enable evaluation of the selected performance characteristics, both in-house and between laboratories, as well as the homogeneity and stability testing (see Sect. 3.6.3). When possible, TPS organisers should prepare additional samples to cover unexpected events that can occur during a TPS, such as damage during transport and prolonged stability testing requirements. For example, additional stability testing was required during the VALITEST Project. Due to the COVID-19 outbreak, some laboratories were not able to perform the analyses in a timely manner. By performing additional stability testing, TPS organisers confirmed that the samples were still fit for purpose at the time when the participating laboratories submitted their results.

The numbers of samples sent to the participating laboratories should be sufficient to allow statistical evaluation of the performance characteristics of interest. However, the participating laboratories should not become overloaded, so the TPS organiser should take into account the time and resources participants need to invest (e.g., having a maximum of 24 samples including the controls, which corresponds to the number of tubes that can be used in a standard laboratory centrifuge).

The samples should be accompanied by detailed instructions on how the participating laboratories should handle them upon receipt. The TPS organiser is responsible for providing appropriate coding of the samples. If possible, sample codes should be different for each of the participating laboratories to avoid disclosure of the identities of the participants.
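
One possible way to generate laboratory-specific sample codes is sketched below. The code format (`TPS-` followed by four digits), the function name and the laboratory and sample identifiers are hypothetical; the only point taken from the text is that each laboratory receives different codes and that the organiser keeps the key.

```python
import random


def assign_sample_codes(lab_ids, sample_ids, seed=None):
    """Return the organiser's key: {(lab_id, sample_id): blinded_code}.

    Every code is unique across the whole TPS, so the same sample carries a
    different code in each laboratory. A fixed `seed` makes the key
    reproducible for documentation purposes.
    """
    rng = random.Random(seed)
    n_codes = len(lab_ids) * len(sample_ids)
    if n_codes > 9000:
        raise ValueError("not enough four-digit codes for this TPS")
    # Draw unique four-digit numbers without replacement.
    numbers = rng.sample(range(1000, 10000), n_codes)
    key = {}
    for lab in lab_ids:
        for sample in sample_ids:
            key[(lab, sample)] = f"TPS-{numbers.pop()}"
    return key


labs = ["lab_01", "lab_02", "lab_03"]
samples = [f"S-{i}" for i in range(1, 23)]  # e.g., a panel of 22 samples
key = assign_sample_codes(labs, samples, seed=2024)
```

Labelling the tubes in the sorted order of the blinded codes also randomises the position of each sample within a laboratory's panel.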

Ideally, a standardised sample panel should be used when possible. This contributes to a more robust comparison of the data obtained in different validation studies, and to the application of standardised statistical analysis of the data. However, each TPS organiser can create their sample panel based on the performance criteria that should be evaluated during the TPS. Recently, significant effort has been invested in the standardisation of sample panels to ensure that they are suitable for statistical analysis of the data, in addition to calculation of the standard diagnostic parameters, as explained in EPPO PM 7/122(1).

In the framework of VALITEST, a sample panel was proposed (Massart et al. 2022), with the following recommendations:

- a minimum of five dilution points from a sample that contains the target pest and three replicates of the serial dilutions for the determination of the analytical sensitivity using the probability of detection model. The range of serial dilutions must cover the limit of detection, as determined during the preliminary studies by the validation organiser.
- a minimum of three samples free from the target pest (i.e., negative samples) and two samples infected with the target pest (i.e., positive samples), which should be independent of each other, and which are used for determination of the diagnostic sensitivity and diagnostic specificity. At least one positive sample should have a low concentration of the target pest; e.g., close to the limit of detection estimated during the preliminary studies by the validation organiser.
- a minimum of two replicates for each of three negative samples and two positive samples, with the samples independent of each other, for determination of the repeatability and reproducibility using the accordance and concordance of Langton et al. (2002). By using accordance and concordance, it is possible to determine if a particular laboratory performs poorly, or if a particular sample or test is performed poorly (see more details in Massart et al. 2022).
- to determine the analytical specificity of a test, it is very important to have closely related species included in the sample panel (at the expense of the non-target samples).
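
As an illustration of the accordance and concordance mentioned in the list above, the following minimal sketch computes both values for one sample by counting pairs of qualitative results directly: accordance as the mean proportion of agreeing pairs of replicates within a laboratory, and concordance as the proportion of agreeing pairs of results taken from different laboratories. The function names and the example data are hypothetical, and the sketch assumes at least two replicates per laboratory and at least two laboratories.

```python
from itertools import combinations


def accordance(results):
    """Mean within-laboratory agreement.

    results: {lab_id: [bool, ...]} with the replicate results (True means
    positive) obtained by each laboratory for the same sample.
    """
    per_lab = []
    for replicates in results.values():
        pairs = list(combinations(replicates, 2))
        if not pairs:
            raise ValueError("each laboratory needs at least two replicates")
        per_lab.append(sum(a == b for a, b in pairs) / len(pairs))
    return sum(per_lab) / len(per_lab)


def concordance(results):
    """Between-laboratory agreement: share of identical pairs of results
    where the two results come from different laboratories."""
    same = total = 0
    for lab_1, lab_2 in combinations(results, 2):
        for a in results[lab_1]:
            for b in results[lab_2]:
                total += 1
                same += a == b
    if total == 0:
        raise ValueError("at least two laboratories are needed")
    return same / total


# Hypothetical results for one positive sample tested in duplicate.
example = {
    "lab_01": [True, True],
    "lab_02": [True, True],
    "lab_03": [True, False],
}
acc = accordance(example)
con = concordance(example)
```

In this example two of the three laboratories agree with themselves, so the accordance is 2/3; of the 12 between-laboratory pairs, 8 agree, so the concordance is also 2/3.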

The composition of a sample panel is heavily dependent on the performance criteria that need to be evaluated within the TPS. For example, if a sample panel only contains samples with high concentrations of the target and/or samples with concentrations close to the limit of detection, the test results of the TPS will only provide the reproducibility at the extremes of the target concentrations. Thus, this will not provide an answer to the question of whether the test is appropriate to monitor the infection status in particular host species. If the aim is to provide data on the analytical specificity of the test and cross-reactions with closely related organisms are expected, these need to be included in the sample panel. In addition, the sample panel needs to contain appropriate controls to monitor the processes (in the laboratories of the TPS organiser and the TPS participants).

Prior to sending the samples to the participants, the TPS organiser needs to define or establish the assigned values for the samples, i.e., the value attributed to a particular property of an interlaboratory test sample (EPPO PM 7/122(1)). The TPS organiser is also responsible for documenting the procedure in which the assigned values were determined.

Assigned values can be given to the test items in various ways, with the two most commonly used being to assign the reference values on the true health status of the test items, or to assign values based on the results of the tests in preliminary studies which are also expected to be used by the participants later in the TPS. In some cases, when samples are between the positive and negative thresholds of a test or the specimens show overlapping morphological characters, the assigned value can be declared as ‘inconclusive’ (EPPO PM 7/122(1)).
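
Once the assigned values are fixed, the results reported by a laboratory can be compared with them. The sketch below uses the usual definitions of diagnostic sensitivity (true positives divided by all samples assigned as positive) and diagnostic specificity (true negatives divided by all samples assigned as negative). The function name and the string labels are assumptions, and excluding samples with an 'inconclusive' assigned value from both calculations is a simplification made here, not a rule taken from the cited standard.

```python
def diagnostic_performance(assigned, observed):
    """Compare observed results with assigned values for one dataset.

    assigned: list of "positive", "negative" or "inconclusive"
    observed: list of "positive" or "negative", in the same sample order
    Samples whose assigned value is "inconclusive" are skipped (assumption).
    """
    if len(assigned) != len(observed):
        raise ValueError("assigned and observed must have the same length")
    tp = fn = tn = fp = 0
    for a, o in zip(assigned, observed):
        if o not in ("positive", "negative"):
            raise ValueError(f"unexpected observed result: {o!r}")
        if a == "inconclusive":
            continue
        if a == "positive":
            if o == "positive":
                tp += 1
            else:
                fn += 1
        elif a == "negative":
            if o == "negative":
                tn += 1
            else:
                fp += 1
        else:
            raise ValueError(f"unexpected assigned value: {a!r}")
    return {
        "TP": tp, "FN": fn, "TN": tn, "FP": fp,
        "sensitivity": tp / (tp + fn) if tp + fn else None,
        "specificity": tn / (tn + fp) if tn + fp else None,
    }


# Hypothetical dataset of six samples.
assigned = ["positive", "positive", "positive", "negative", "negative", "inconclusive"]
observed = ["positive", "positive", "negative", "negative", "positive", "positive"]
performance = diagnostic_performance(assigned, observed)
```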

For the TPS for the detection and identification of TSWV, different strategies were used for the different methods. For the molecular tests, the samples were defined as positive when they contained TSWV, and negative if they contained other orthotospoviruses or if they were without any virus. However, for ELISA and the LFD tests, high dilutions of samples containing TSWV were considered as negative if the best performing test gave negative results under extensive testing during the preliminary studies.

In the TPS for TSWV, the full panel included 22 samples (S-1 to S-22), one or two positive controls (i.e., positive isolation/amplification controls), and one negative control (i.e., negative isolation control) (Table 3.7). The sample panel was assembled with some slight modifications from the proposed sample panel described above to obtain data on analytical specificity (i.e., five isolates of similar orthotospoviruses were included at the expense of the negative samples and the heavily contaminated positive samples). The expected results were determined based on the results of the preliminary studies.

3.6.2 Reference Material

To prepare the samples of a TPS, the use of reference material is recommended. In the field of plant health, the commercial availability of reference material or certified reference material is limited, and consequently reference material might need to be produced by individual diagnostic laboratories, or by companies that offer positive or negative controls as part of their kits (EPPO PM 7/147 2021b). A reference material in the sense of the ISO is any material that is sufficiently homogeneous and stable with respect to one or more specified properties, and where the suitability for its intended use has been established in a measurement procedure (ISO Guide 30:2015). The term “reference material” is a generic term, where the properties might be quantitative or qualitative. The uses here can include calibration of a measurement system, assessment of a measurement procedure, assignment of values to other materials, and quality control. Accordingly, a certified reference material is defined as a material characterised by a metrologically valid procedure for one or more specified properties and accompanied by a certificate that includes the value of the specified property, its associated uncertainty, and a statement of metrological traceability (ISO Guide 17034). The International Vocabulary of Metrology gives the following definition of a reference material: “a material, sufficiently homogeneous and stable with reference to specified properties, which has been established to be fit for its intended use in measurement or in examination of nominal properties” (Anonymous, 2008). Reference materials provide essential traceability in testing, and they are used, for example: (i) for detection and identification; (ii) to demonstrate the accuracy of the results; (iii) to calibrate or verify equipment; (iv) to monitor laboratory performance; (v) to validate or verify tests; and (vi) to enable comparisons of tests (EPPO PM 7/84(2) 2018b).

In the VALITEST Project, a list was drafted of the criteria to be considered for the production of reference materials to be used in TPS (Chappé et al. 2020; Trontin et al. 2021). If needed, TPS organisers can include additional criteria in the list based on their own experience. The identified criteria are the intended use of the material, and its identity, commutability level (i.e., level of agreement between test results obtained with a biological reference material and with an authentic sample), traceability (i.e., information on the source of the material), homogeneity (e.g., non-homogeneous material might introduce measurement uncertainties), stability (which should be determined with the ‘worst-case scenario’ approach; e.g., using the PCR test that targets the longest DNA fragments), assigned value (i.e., expected result of the test) and purity (which describes the presence or absence in the biological reference material of components that might interfere with the results of a test, including non-target organisms, and when relevant, components of the matrix; e.g., false positive results) (Chappé et al. 2020; EPPO PM 7/147 2021b). Depending on the intended use, the reference material might need to fulfil all of the criteria, or some criteria might not be relevant (Chappé et al. 2020; EPPO PM 7/147 2021b).

To describe the reference material used in the TPS for detection and identification of TSWV, a set of descriptors was adapted from Chappé et al. (2020), as shown in Table 3.8.

3.6.3 Stability and Homogeneity Studies

To ensure the stability and homogeneity of the samples included in a TPS, the TPS organiser should test the complete batch of samples. If this is not feasible, representative samples should be selected, and the selection should be justified and documented. If the TPS organiser is providing the chemicals (e.g., primers/primers-probe separately or in mixes), their homogeneity and stability should also be tested. Sample homogeneity should be tested after the samples are fully prepared and ready for distribution to the participants, but before they are shipped (EPPO PM 7/122(1)). If it is not feasible to include a complete batch of the samples in homogeneity testing, current recommendations are to test at least 10 randomly selected samples in duplicate (for each pest/matrix/infestation level, including negative samples) (see also ISO 13528).

According to EPPO PM 7/122(1), the TPS organiser should also demonstrate that the samples are sufficiently stable to ensure that they do not undergo significant changes throughout the TPS, including during their storage and transport. If necessary, and especially if the transport requires special conditions (e.g., dry ice), stability tests should be performed under conditions that mimic the transport and storage conditions. Alternatively, the samples can be sent to the participant with the adverse environmental or transport conditions, and then returned unopened for testing to the TPS organiser laboratory. Stability should be tested before samples are dispatched (to ensure that the samples are stable enough to be included in the TPS). Generally, stability testing before sample dispatch is coupled with the homogeneity study, to spare resources and avoid doubling the work in the laboratory. In some cases (e.g., when the samples are prepared as an extract of the pest), based on the previous experience with the pest and taking into account the timeframe of the TPS, when the TPS organiser considers that the stability of the samples can be affected, stability testing can be performed also during the various stages of the TPS. This practice retains as many as possible of the datasets for the final evaluation. During the TPS on detection and identification of TSWV (where the samples were prepared as extracts), stability tests were performed each week starting 7 days after the samples were dispatched to the participants. Stability testing should also be conducted after the deadline for participants to perform the analysis, to confirm that the stability of the samples has been maintained throughout the TPS. 
In some cases, the TPS organiser has to be prepared to extend the stability studies, because some TPS participants might not manage to perform the analysis before the specified deadline (e.g., in the case of VALITEST, a global pandemic affected the normal workflow in the majority of the laboratories involved). For materials that have been shown to be stable over time (e.g., Globodera spp. cysts, some fungal spores), stability testing is not required. If it is not possible to test whole batches of samples, currently available guidelines recommend, when possible, testing a minimum of three randomly chosen samples in duplicate (for each pest/matrix/infestation level, including the negative controls) (see also ISO 13528).

The number of samples tested for the stability and homogeneity might deviate from the recommendations, and this should be documented. If samples are not stable and homogeneous, they can affect the assigned values, and their impact on the evaluation of the results should be estimated. Such samples might be rejected for use in the TPS, or they can be used under specific conditions that should be clearly stated. If the participating laboratories evaluate measurements or tests on test items (e.g., the samples) that are not considered stable any more, these datasets should be excluded from further evaluation (e.g., if the laboratory returns the results too late).
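
The random selection of samples for homogeneity and stability testing can be documented with a short script such as the sketch below. The function name, the aliquot identifiers and the group labels are hypothetical; the numbers of samples per group (at least 10 for homogeneity, at least three for stability) follow the recommendations cited above, and each selected sample would then be tested in duplicate.

```python
import random
from collections import defaultdict


def select_for_testing(aliquots, n_per_group, seed=None):
    """Randomly select aliquots within each pest/matrix/infestation-level group.

    aliquots: list of (aliquot_id, group_label) tuples
    Returns {group_label: sorted list of selected aliquot_ids}. If a group
    holds fewer than `n_per_group` aliquots, the whole group is selected.
    Recording the seed makes the selection traceable.
    """
    rng = random.Random(seed)
    groups = defaultdict(list)
    for aliquot_id, group in aliquots:
        groups[group].append(aliquot_id)
    selection = {}
    for group, ids in groups.items():
        k = min(n_per_group, len(ids))
        selection[group] = sorted(rng.sample(ids, k))
    return selection


# Hypothetical batch: 30 aliquots for each of two groups.
batch = [(f"POS-LOW-{i:02d}", "target/low level") for i in range(1, 31)]
batch += [(f"NEG-{i:02d}", "healthy matrix") for i in range(1, 31)]

homogeneity_set = select_for_testing(batch, n_per_group=10, seed=1)
stability_set = select_for_testing(batch, n_per_group=3, seed=2)
```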

For the TPS for TSWV, stability was carefully assessed because the samples were prepared as plant extracts, in which orthotospoviruses are known to be unstable. During the preliminary studies, aliquots of the last three positive dilutions of one TSWV sample were stored at −20 °C and analysed with the eight tests after 2, 5 and 8 weeks of storage. The results were similar to those obtained with freshly prepared extracts. Stability was also evaluated under conditions that mimicked the transport and storage conditions. The samples were stored at different temperatures and for different times before being tested: for up to 18 weeks at less than −15 °C; and for 2 weeks at less than −15 °C, 3 days on dry ice, and then 5 weeks at less than −15 °C. For the stability and homogeneity testing prior to shipment of the samples and throughout the period of the TPS, six randomly selected aliquots of all of the samples and primer/primer-probe mixtures prepared for the TPS were analysed using the tests included in the TPS. The last stability testing was conducted on the deadline to perform the analysis, which was prolonged due to the COVID-19 situation. All of the results were documented and stored.

3.6.4 Dispatch of Samples and Reagents

The TPS samples need to be dispatched together with the instruction sheet, and along with an acknowledgement of receipt of the panel of samples (for immediate return), and a results form for the participants to fill in after performing the tests. The TPS instruction sheet is intended to help the TPS participants upon their receipt of the parcel, for the identification of the samples, for their storage and analysis, for any special precautions needed, and for the submission of the results.

The following information should be included in the instruction sheet:

- Requirements on how to handle the test items, controls, and/or reagents in the participant laboratory (storage upon receipt);
- Details about the number of sample panels and test items received by the participating laboratory;
- Information about the test items and samples, and for which test they should be used;
- Panel code (participant ID);
- Explanation of how the samples were coded for the different tests or methods;
- The time schedule of the testing;
- The detailed instructions to prepare and condition the test items;
- Other instructions if needed, such as safety requirements, special precautions for handling and destroying of material;
- Specific and detailed instructions for entering, recording and submitting the results and the associated deviations and difficulties;
- The latest date when the results should be submitted;
- The TPS organiser contact information;
- The time frame for the release of the TPS report to the participants.

An example template of an instruction sheet is given in Appendix 5.

Another document that should be given to the participants along with the dispatch of the samples is the acknowledgement of receipt of the panel of samples. With this document, the TPS organiser wants to determine whether the transport conditions might have affected the test items. An example of an acknowledgement form developed by the partners of the VALITEST Project and used in the TPS for the detection and identification of TSWV is given in Appendix 6.

To minimise the possibility of human error in processing the TPS results, the TPS organiser needs to prepare a results form that can be easily manipulated and used to extract the data provided by participants (some examples of results forms are given in Appendix 7). An appropriate format for this document is MS Excel, using drop-down menus to enter the data where possible, and formulas to calculate the means where appropriate. Online recording of the results on different platforms (e.g., Google Sheets or similar) can also be used, especially when a high number of tests and/or participants are involved, to enable easier collection, processing and interpretation of the results. In addition to the results of the sample analysis, the participants should be asked to provide other data that are needed to contextualise the results provided, and to interpret the results appropriately.

The TPS organiser should take into account the different policies and laws in the countries of the TPS participants regarding the shipment and receipt of plant materials, especially packages that need to be provided with import permits (e.g., Letters of Authority) due to the presence of quarantine pests in the samples. Where the TPS test items need to be sent on dry ice, it is very important for the TPS organiser to use the services of a reliable courier, to ensure that the samples are delivered within an appropriate time frame and without damage. Some countries have limitations on the acceptance of packages on dry ice, and some have long customs procedures that can affect the stability of the samples. Therefore, the TPS organiser should anticipate such problems, and appropriate measures should be taken to minimise any impact or damage that would require exclusion of some datasets from the TPS.

3.7 Collecting the TPS Results and Analysing the Data

The TPS participants should send back the completed results forms to the organiser or complete an online results form. The TPS organiser analyses the TPS results and summarises the conclusions on the performances of the tests. During the analysis of the results, the TPS organiser might have to exclude all or some results from some TPS participants; e.g., if there is suspicion of contamination during the processing of the samples, or if the analysis was not performed according to the protocols provided. If there were any deviations from the recommended protocols by any of the participants, this should be recorded, and their potential impact on the results should be assessed.

The performance of the tests is evaluated through determination of their performance characteristics, and through comparisons of the performance characteristics of the different tests (or methods, if the same panel of samples was used). To perform the correct statistical analysis, a TPS requires a minimum number of participating laboratories; i.e., at least 10 valid laboratory datasets per test (EPPO PM 7/122(1); Chabirand et al. 2017). However, it is recognised that this might be a constraint in plant-pest diagnostics. If the results of fewer than 10 laboratories were used to evaluate the performance of the tests, the report should contain a disclaimer that the results might not be reliable, and that any deviation might be the result of the processing of an insufficient number of datasets.

The main performance characteristics for validation of tests include the following (EPPO PM 7/98(5) and PM 7/122(1)):

- Analytical sensitivity
- Analytical specificity, inclusivity
- Analytical specificity, exclusivity
- Diagnostic specificity
- Diagnostic sensitivity
- Selectivity
- Repeatability
- Reproducibility
- Robustness
- Accuracy

After obtaining the data from the TPS participants, the TPS organiser needs to carry out the full evaluation. The first step is the definition of the outlier results. The TPS organiser can decide how to define the outliers on a case-by-case basis. Frequently, controls provided to participants with the test panel are used as a quality check of the datasets, and only the datasets with concordant results for all the controls are considered as valid. Additionally, the TPS organiser can use other methods to identify outliers; e.g., for the TPS for TSWV, the results of the healthy tomato samples were assessed, and datasets of laboratories where there were two or more (out of three) false positive results for the healthy tomato samples were also excluded. Another option to identify outliers can be to use different graphical tools, such as heat maps, which allow rapid visualisation of outlier results that are too far away from the results of the other laboratories (Fig. 3.2). If needed, in particular cases, additional outliers can be defined, such as incomplete datasets. Incomplete datasets and datasets with incorrect results of controls can be considered as the first level of outliers to be excluded. Outliers identified only through graphical analysis should be checked carefully in terms of whether they should indeed be excluded or whether they are a part of the method robustness.
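The first-level exclusion rules described above (incomplete datasets, non-concordant controls and, as in the TPS for TSWV, two or more false positives out of three healthy samples) can be expressed as a simple filter. This is a minimal sketch under stated assumptions: the function `is_valid_dataset`, the dictionary keys and the result labels `"positive"`/`"negative"` are hypothetical, and each TPS organiser would define the rules case by case.

```python
def is_valid_dataset(dataset, max_healthy_false_positives=1):
    """Return (valid, reason) for one laboratory dataset of one test.

    dataset is a dict with the (hypothetical) keys:
      'expected_n': number of results the laboratory should have reported
      'reported_n': number of results actually reported
      'controls':   list of (expected, observed) pairs for the supplied controls
      'healthy':    list of observed results for the healthy samples
    """
    # Incomplete datasets are treated as first-level outliers.
    if dataset["reported_n"] < dataset["expected_n"]:
        return False, "incomplete dataset"
    # Every control must give the expected result.
    if any(expected != observed for expected, observed in dataset["controls"]):
        return False, "non-concordant control"
    # TSWV example: two or more false positives on healthy samples -> exclude.
    false_positives = sum(1 for r in dataset["healthy"] if r == "positive")
    if false_positives > max_healthy_false_positives:
        return False, "false positives on healthy samples"
    return True, "valid"


# Hypothetical datasets from two laboratories.
lab_a = {
    "expected_n": 12, "reported_n": 12,
    "controls": [("positive", "positive"), ("negative", "negative")],
    "healthy": ["negative", "negative", "positive"],
}
lab_b = dict(lab_a, healthy=["positive", "negative", "positive"])

print(is_valid_dataset(lab_a))
print(is_valid_dataset(lab_b))
```

Returning the reason alongside the decision keeps a record of why each dataset was excluded. Outliers suggested only by graphical tools are deliberately not automated here, since the text recommends checking them individually.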

The next step in the results analysis is to evaluate the performance of the individual tests. First, the performance can be described in terms of the number and proportion (%) of the results that were inconclusive, true negatives (negative agreements), false negatives (negative deviations), true positives (positive agreements) and false positives (positive deviations). The number and proportion (%) of concordant and non-concordant results can also be calculated. Inconclusive results can be treated as non-concordant. When it is not possible to reasonably interpret inconclusive results, these can be excluded from the calculation of the performance characteristics.
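The tallying described above can be sketched as follows. The function names `classify` and `summarise`, and the assumption that every reported result is one of `"positive"`, `"negative"` or `"inconclusive"`, are illustrative choices rather than part of any published protocol. Inconclusive results are counted as non-concordant here, which is one of the two options mentioned in the text.

```python
from collections import Counter

CATEGORIES = ("true positive", "false negative", "true negative",
              "false positive", "inconclusive")


def classify(assigned, reported):
    """Classify one result against the assigned value of the sample."""
    if reported == "inconclusive":
        return "inconclusive"
    if assigned == "positive":
        return "true positive" if reported == "positive" else "false negative"
    return "true negative" if reported == "negative" else "false positive"


def summarise(pairs):
    """Return {category: (count, percentage)} for (assigned, reported) pairs."""
    counts = Counter(classify(a, r) for a, r in pairs)
    total = len(pairs)
    summary = {c: (counts[c], 100.0 * counts[c] / total) for c in CATEGORIES}
    concordant = counts["true positive"] + counts["true negative"]
    summary["concordant"] = (concordant, 100.0 * concordant / total)
    # Inconclusive results are treated as non-concordant in this sketch.
    summary["non-concordant"] = (total - concordant,
                                 100.0 * (total - concordant) / total)
    return summary


# Hypothetical results for six samples: (assigned value, reported result).
pairs = [
    ("positive", "positive"), ("positive", "negative"),
    ("negative", "negative"), ("negative", "positive"),
    ("positive", "inconclusive"), ("negative", "negative"),
]
for category, (n, pct) in summarise(pairs).items():
    print(f"{category}: {n} ({pct:.1f}%)")
```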

The calculations can be carried out according to the layout of Table 3.9.

The following parameters can be calculated:

- Diagnostic sensitivity = true positives / (false negatives + true positives);
- Diagnostic specificity = true negatives / (false positives + true negatives);
- False positive rate = false positives / (false positives + true negatives) = 1 - diagnostic specificity;
- False negative rate = false negatives / (false negatives + true positives) = 1 - diagnostic sensitivity;
- Relative accuracy = (true positives + true negatives) / total number of samples;
- Power = true positives / assigned positives;
- Positive predictive value = probability that subjects with a positive screening test actually have the disease = true positives / (true positives + false positives);
- Negative predictive value = probability that subjects with a negative screening test actually do not have the disease = true negatives / (false negatives + true negatives);
- Diagnostic odds ratio, as a measure of the effectiveness of a diagnostic test. This is defined as the ratio of the odds of the test being positive if the subject has a disease, relative to the odds of the test being positive if the subject does not have the disease. It is thus calculated as: Diagnostic odds ratio = (true positives / false negatives) / (false positives / true negatives).
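As a worked illustration, the parameters listed above can be computed from the four counts of a two-by-two table. This is a minimal sketch: the function name `performance` and the example counts are hypothetical, inconclusive results are assumed to have been excluded beforehand (so that the total number of samples equals the sum of the four counts and the number of assigned positives equals true positives plus false negatives), and the diagnostic odds ratio is computed in the algebraically equivalent form (true positives × true negatives) / (false positives × false negatives).

```python
def performance(tp, fp, fn, tn):
    """Compute performance parameters from the counts of a 2x2 table."""

    def ratio(numerator, denominator):
        # Return None instead of raising when a denominator is zero
        # (e.g., no false results at all for the odds ratio).
        return numerator / denominator if denominator else None

    return {
        "diagnostic_sensitivity": ratio(tp, tp + fn),
        "diagnostic_specificity": ratio(tn, tn + fp),
        "false_positive_rate": ratio(fp, fp + tn),
        "false_negative_rate": ratio(fn, fn + tp),
        "relative_accuracy": ratio(tp + tn, tp + fp + fn + tn),
        # Equal to diagnostic sensitivity under the assumption stated above.
        "power": ratio(tp, tp + fn),
        "positive_predictive_value": ratio(tp, tp + fp),
        "negative_predictive_value": ratio(tn, tn + fn),
        "diagnostic_odds_ratio": ratio(tp * tn, fp * fn),
    }


# Hypothetical counts pooled over all valid datasets for one test.
results = performance(tp=90, fp=5, fn=10, tn=95)
for name, value in results.items():
    print(name, value)
```

With these example counts, the diagnostic sensitivity is 90/100 = 0.90, the diagnostic specificity is 95/100 = 0.95, and the diagnostic odds ratio is (90 × 95) / (5 × 10) = 171.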

Harmonising the analysis and presentation of the results of a TPS can make it easier to compare different TPS reports. In the framework of VALITEST, significant efforts were made to find the appropriate way to harmonise the presentation of the results from different TPS. This will enable future TPS organisers to present the main results of their TPS in the same (or similar) way(s).

As each TPS has its own specificities, problems and unexpected obstacles, the TPS organiser should apply appropriate measures to limit or prevent these from having any impact on the final result of the TPS. For example, in the TPS for TSWV, there were problems during the analysis of the raw data obtained from the TPS participants for the real-time RT-PCR tests. It was apparent from the “raw” results received from the TPS participants that some results were not interpreted correctly (taking into account all provided Cq values), and some laboratories had clear problems with contamination. Therefore, some of the results submitted by TPS participants were corrected to retain as many datasets as possible in the analysis. This was necessary because the TPS participants used different approaches to set up Cq cut-off values: some set the Cq cut-off value too low, while some did not use any Cq cut-off value even though they had some late signals in the negative controls. Cq cut-off values are equipment, material and chemistry dependent, and need to be verified in each laboratory before the tests are implemented. However, it was not possible to check this for all of the TPS participants separately. Therefore, a fixed Cq cut-off value of 35 was introduced for all of the RT-qPCR datasets except when the Cq cut-off value applied by a TPS participant appeared to be reasonable. This and similar modifications can be carried out by the TPS organiser to respect the ‘trueness’ of the results, and to keep as much data as possible in the calculations of the statistical parameters, which will provide more reliable measurements of the performances of the tests.
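The re-interpretation of raw Cq values with a harmonised cut-off can be sketched as below. Only the fixed cut-off of 35 comes from the TSWV example; everything else is an assumption made for illustration: the function names `choose_cutoff` and `interpret_cq`, the use of `None` for a reaction without amplification, the convention that a Cq equal to the cut-off is read as negative, and the modelling of the organiser's judgement on a participant's own cut-off as a simple flag.

```python
def choose_cutoff(participant_cutoff=None, judged_reasonable=False,
                  fixed_cutoff=35.0):
    """Select the Cq cut-off to apply to one participant's dataset.

    The participant's own cut-off is kept only when the TPS organiser has
    judged it reasonable; otherwise the fixed cut-off is applied.
    """
    if participant_cutoff is not None and judged_reasonable:
        return participant_cutoff
    return fixed_cutoff


def interpret_cq(cq, cutoff):
    """Interpret a single Cq value (None = no amplification signal).

    Assumption: a Cq strictly below the cut-off is read as positive.
    """
    if cq is None:
        return "negative"
    return "positive" if cq < cutoff else "negative"


# Hypothetical raw Cq values from a participant that applied no cut-off.
raw_cq = [24.1, 33.8, 36.9, None]
cutoff = choose_cutoff()
print(cutoff, [interpret_cq(cq, cutoff) for cq in raw_cq])
```

How replicate reactions are combined into a single sample result is not covered by this sketch and would need to follow the protocol of the test concerned.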

Below, an example from the data analyses of the TPS for TSWV is presented. First, each test was analysed separately (Table 3.10), and then the tests within each method were compared (Table 3.11). The colour coding is consistent across Tables 3.10 and 3.11, with concordant results in green, inconclusive results in yellow, and non-concordant results in red. In this way it is possible to judge which test is better for which purpose. Tables 3.10 and 3.11 can serve as templates for the analysis of the results of other TPS.

To facilitate their comparison, the results summarising the performances of the different tests can also be presented in graphical forms, using histograms or scatter plots (e.g., see Figs. 3.3, 3.4, and 3.5). Whatever representation is used, it is of utmost importance to be consistent with the data presentation. If the results of tests were obtained using different sample panels, they must not be presented and compared on the same plots, as such a representation can be misinterpreted and lead to the disqualification of perfectly good tests. An incorrect presentation of the results can lead to an incorrect interpretation of the TPS results.

From the data on the diagnostic sensitivity (DSE) and diagnostic specificity (DSP), it is possible to calculate the likelihood ratios (LR). The positive (+) and negative (−) likelihood ratios can be calculated as:

- LR+ = DSE / (1 − DSP)
- LR− = DSP / (1 − DSE)

The greater the LR+ (or LR−) for any particular test, the more likely that a positive (or negative) test result is a true positive (or true negative). Likelihood ratios of >10 are considered to be indicative of highly informative (and potentially conclusive) tests.
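The two likelihood ratios can be computed directly from DSE and DSP, as in the minimal sketch below. The function name `likelihood_ratios` and the example values are hypothetical. Note that LR− as defined in this chapter is the reciprocal of the form often used in clinical literature, (1 − DSE) / DSP, which is why larger values indicate a more informative test for both ratios here.

```python
import math


def likelihood_ratios(dse, dsp):
    """Return (LR+, LR-) as defined in this chapter.

    dse and dsp are proportions between 0 and 1. A perfect specificity or
    sensitivity gives a zero denominator, reported here as infinity.
    """
    lr_pos = dse / (1 - dsp) if dsp < 1 else math.inf
    lr_neg = dsp / (1 - dse) if dse < 1 else math.inf
    return lr_pos, lr_neg


# Hypothetical test with DSE = 0.90 and DSP = 0.95.
lr_pos, lr_neg = likelihood_ratios(0.90, 0.95)
print(f"LR+ = {lr_pos:.1f}, LR- = {lr_neg:.1f}")
print("LR+ above 10:", lr_pos > 10, "| LR- above 10:", lr_neg > 10)
```

For these example values, LR+ = 0.90 / 0.05 = 18 and LR− = 0.95 / 0.10 = 9.5, so only the positive result would exceed the threshold of 10 mentioned above.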