Abstract

Quality of life (QoL) is a subjective term often determined by various aspects of living, such as personal well-being, health, family, and safety. QoL is challenging to capture objectively but can be anticipated through a person’s emotional state; positive emotions in particular indicate an increased QoL and may be a potential indicator for other QoL aspects (such as health and safety). Affective computing is the study of technologies that can quantitatively assess human emotions from external cues. It can leverage different modalities, including facial expression, physiological responses, or smartphone usage patterns, and correlate them with the person’s life quality assessments. Smartphones are emerging as a main modality, mostly because of their ubiquitous availability and use throughout daily life activities. They include a plethora of onboard sensors (e.g., accelerometer, gyroscope, GPS) and can sense different user activities passively (e.g., mobility, app usage history). This chapter presents a research study (here referred to as the TapSense study) that focuses on assessing the individual’s emotional state from smartphone usage patterns. In this TapSense study, the keyboard interaction of n = 22 participants was unobtrusively monitored for 3 weeks to determine the users’ emotional state (i.e., happy, sad, stressed, relaxed) using a personalized machine learning model. TapSense can assess emotions with an average AUCROC of 78% (±7% std). We summarize the findings and reflect upon them in the context of potential developments within affective computing at large, which may, in the long term, indicate a person’s quality of life.




Introduction

Emotions have an enormous impact on our momentary performance, health, and way of relating to others, and hence on the quality of a person’s life. In particular, the experience of unpleasant (or pleasant) emotions is directly related to an individual’s well-being. Emotions are influenced by subjective experiences and memories and the context the individual is in, and it seems almost impossible to measure this phenomenon objectively, reliably, and validly. Indeed, capturing human emotional states has been a challenging task for researchers for decades, leading to numerous theories about emotions, moods, and feelings. Specifically, psychometrics focuses on the theory and techniques of psychological measurements, including the QoL measurements. The emerging field of affective computing promises to overcome some methodological difficulties that lead to limitations in traditional methods of psychometrics. Affective computing is the study of technologies that can quantitatively measure human emotion from different cues. It is based on the hypothesis that an individual’s digital footprint is highly correlated with their perceptions, feelings, and resulting behaviors and that extracting and analyzing this data collected over time can prove that “your smartphone knows you better than you may think.” This statement becomes even more valid given that people use their digital devices extensively, with these devices being the first and last thing used during a normal day [1].

In this chapter, we discuss the current development within the affective computing area while focusing on assessing emotions via personal technologies. Firstly, we define emotions as a complex interplay of different components (sensory, cognitive, physiological, expressive, motivational) over time. Several emotion theories have been developed in the past years, along with emotions being classified into three dimensions: valence, arousal, and dominance [2]. Among these emotion theories, the Component Process Model [3] stands out, revealing the emotional process that leads to an individual’s perception and processing of negative and positive life experiences. As an extension of this model, the Emotional Competence Model [4] hypothesizes that mental well-being and adverse psychopathology (e.g., anxiety, depression) greatly depends on a well-functioning emotional process. This depends on the individual’s experienced emotional response, perception of the situation, adequate appraisal, and emotional regulation. Hence, emotional competence plays a key role in maintaining a person’s quality of life. Furthermore, the knowledge and perception of emotions are basic abilities that may elicit more adaptive emotion regulation strategies (like an acceptance of an uncontrollable stressor).

To capture individuals’ emotions (e.g., for clinical or research reasons), self-reports of negative and positive emotions are mostly collected using psychometrically validated questionnaires on concepts such as stress, depression, and well-being, with a recall period of days to months. These assessment instruments face two problems: (i) self-reports are rather subjective and often biased by, e.g., the time and motivation needed to fill out what are often many questions, and (ii) they are influenced to a great extent by the current psychological state, which interferes with the recall of someone’s mood days to weeks ago. To overcome these issues, new assessment methods using personal digital technologies have arisen in recent years. For example, the Experience Sampling Method (ESM), also known as Ecological Momentary Assessment (EMA) [5,6,7], is increasingly used in psychology to trigger self-reports for emotions and behaviors momentarily, i.e., as closely as possible to the subject’s daily life experiences, periodically (randomly or at fixed intervals) or in an event-driven fashion [6, 8].

Traditionally, different modalities such as facial expression [9,10,11,12,13], speech prosody [14,15,16,17,18,19,20], and physiological signals such as ECG, EEG, HR, and GSR, as well as blood, brain, and posture measurements [21,22,23,24,25,26,27], have been explored for emotion assessment. Additionally, emotions can be extracted from other sources, namely smartphone and internet usage, which are discussed in this chapter. To determine the emotion states, these affect-aware systems often deploy a machine learning model. Conventional machine learning models such as Support Vector Machine (SVM), Random Forest, and Bayesian approaches have been used in affective computing [28, 29]. In these approaches, a set of features (which can distinguish one emotion from another) is first extracted manually from, e.g., the physiological signals. These features are then correlated with the emotion ground truth labels (self-reports) to construct the emotion inference model. With the latest advances in Deep Neural Network models, the conventional approaches have been replaced by state-of-the-art AI models such as Convolutional Neural Network (CNN), Long Short-Term Memory (LSTM), and Recurrent Neural Network (RNN) [28, 29]. These advances have helped to overcome the manual feature engineering effort and to obtain very high performance in affect classification. The advances in affective computing have led to many affect-aware applications, such as emotion-aware music players, affective tutors, and mood monitors, which influence the quality of life [30,31,32,33,34]. The key working principle of such emotion-aware applications is to collect physiological and behavioral data from different modalities and to train a machine learning model for emotion inference.

Given the current accelerating scale of developments in personal technologies, new assessment techniques for emotions have emerged. We present the design and development of a smartphone keyboard interaction-based emotion assessment application. Specifically, among the different types of interactions performed on smartphones, keyboard interactions are highly interesting. They represent the input/output interaction between the user and the phone for, e.g., information, communication, or entertainment [35]. In addition to the interaction itself, the interactive content may be of high interest. Research shows not only that individuals often express momentary emotions on social network platforms [36, 37], but also that a person’s language reveals their momentary psychological state [38].

In our research, we focus on the smartphone interaction itself. Hence, we have designed and implemented an Android application, TapSense, which can unobtrusively log users’ typing patterns (without actual content) and trigger self-reports for four types of emotion (happy, sad, stressed, relaxed) leveraging the ESM method. Different typing features such as typing speed and error rate are extracted from the typing data and correlated with the emotion self-reports to develop a personalized emotion assessment model. However, as the conventional ESM-driven self-report collection for model construction is labor-intensive and fatigue-inducing, we also investigate how the self-report collection approach can be further optimized for suitable probing moments and a reduced probing rate. To this end, we have also developed an adaptive 2-phase ESM schedule (integrated into TapSense), which balances the probing rate and self-report collection time and probes the individual at opportune moments. The first phase balances probing rate against self-report collection time and trains an ‘inopportune moment assessment’ model. The second phase operationalizes this model so that no probe is triggered at an inopportune moment. We investigate the implications of this ESM design on the emotion classification performance.
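To make the feature extraction step more concrete, the following minimal sketch derives a speed-related and an error-related feature from a content-free keystroke log. The `KeyEvent` record, its fields, and the exact feature definitions are assumptions made for illustration; the actual TapSense log format and feature set are not specified in this section.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class KeyEvent:
    """One logged tap: timing and key category only, never the typed character."""
    timestamp: float    # seconds since session start (assumed unit)
    is_backspace: bool  # True when the key deletes text


def typing_features(events: list[KeyEvent]) -> dict[str, float]:
    """Summarize one typing session into speed- and error-related features."""
    if len(events) < 2:
        raise ValueError("need at least two key events")
    # Inter-tap intervals: elapsed time between consecutive key presses.
    gaps = [b.timestamp - a.timestamp for a, b in zip(events, events[1:])]
    backspaces = sum(e.is_backspace for e in events)
    return {
        "mean_inter_tap_s": mean(gaps),
        "error_rate": backspaces / len(events),  # share of taps that are deletions
        "session_length_s": events[-1].timestamp - events[0].timestamp,
    }


# Hypothetical four-tap session with one correction.
session = [KeyEvent(0.0, False), KeyEvent(0.2, False),
           KeyEvent(0.5, True), KeyEvent(0.6, False)]
features = typing_features(session)
```

Each session's feature vector would then be paired with the self-reported emotion closest in time to form one training example for the personalized model.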

We evaluate the proposed approach in a 3-week ‘in-the-wild’ study involving 22 participants. Our first important result demonstrates that, using smartphone keyboard interaction, we can determine the emotion states (happy, sad, stressed, relaxed) with an average AUCROC of 78%. The next major result concerns the performance of the proposed ESM approach. It demonstrates that the proposed 2-phase ESM schedule (a) assesses inopportune moments with an average accuracy (AUCROC) of 89%, (b) reduces the probing frequency by 64%, and (c) enables the collection of self-reports in a more timely manner, with a reduction of 9% on average in the elapsed time between self-report sampling and event occurrence. The proposed design also helps to improve self-report response quality by (a) improving the valid response rate to 96% and (b) yielding a maximum improvement of 24% in emotion classification accuracy (AUCROC) over the traditional ESM schedules.
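For readers less familiar with the metric, the sketch below shows one way an AUCROC value can be computed for a single emotion (treated as one class against the rest) and then averaged over users. The pairwise formulation, the function name, and all numbers are illustrative assumptions and are not taken from the study.

```python
from statistics import mean, stdev


def auc_roc(labels: list[int], scores: list[float]) -> float:
    """AUC as the probability that a random positive outranks a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    # Count correctly ordered positive/negative pairs; ties count as half.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up example: 1 = "happy" self-report, 0 = any other emotion.
labels = [1, 0, 1, 0]
scores = [0.9, 0.4, 0.35, 0.2]  # hypothetical model confidence for "happy"
user_auc = auc_roc(labels, scores)

# Made-up per-user values, summarized as mean and standard deviation.
per_user_auc = [0.70, 0.80, 0.90]
summary = (mean(per_user_auc), stdev(per_user_auc))
```

A value of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which is why AUCROC is less sensitive to class imbalance in the self-reports than plain accuracy.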

The chapter is organized as follows. Firstly, in Sect. 2, we discuss the definitions and the nature of emotions and their importance for the daily life experience, which influences an individual’s quality of life. In Sect. 3, we present traditional psychometric assessment instruments for emotions and different assessment methods leveraging affective computing developments and beyond. The background, study design, and empirical evaluation of TapSense—a smartphone-based approach for assessing emotions are presented in Sect. 4. We discuss and conclude the chapter findings in Sect. 5.

Background and Related Work

This section presents the definition and models covering the concept of emotions (2.1) and their importance for the quality of life (2.2), followed by attempts to capture emotions via traditional (2.3) and more novel, data-driven approaches (2.3).

Definition of Relevant Domain Concepts

Emotions, moods, and psychological states play a key role in our personal, professional, and social life. Also, interpersonal relationships, professional success, and mental well-being depend greatly on how we cope with stressful events and navigate adverse emotional experiences. The terms emotions, affects, feelings, and moods are often confused in language usage. Feelings most commonly involve a direct response of the autonomic nervous system (ANS) involving organ functions (e.g., change in respiration pattern, adrenaline rush). Affect, in turn, is an umbrella term that refers to all basic senses of feelings, ranging from unpleasant to pleasant (valence) and from excited to calm (arousal). Moods differ from feelings, emotions, and affects in that they are experienced as extended in time (cf. mood stability by Peters, 1984) but are also subject to certain situational fluctuations [39]. Very similar is the psychological concept of state, referring to a person’s mental state at a certain point in time, introduced by Cattell and Scheier [40] as a counterpart to the concept of temporally persisting (personality, motivational, cognitive) traits. In contrast, emotions are a much more complex mental construct consisting of several components, such as the physiological response, and lasting from minutes to hours.

Research on emotions is usually based on the central evolutionary importance of emotions for human survival. It defines emotions as “a genetic and acquired motivational predisposition to respond experientially, physically and behaviorally to certain internal and external variables” [41]. In the context of survival, emotions imply complex communication patterns and information [42,43,44], as the feedback of an individual’s inner state on different levels enables a biological adaptation to the physical and psychosocial environment. Therefore, emotions are further viewed as complex, genetically anchored behavioral chains that contribute to an individual’s homeostasis through various feedback loops [45]. Since the research of Ekman [46], it has become known that elementary emotions such as fear, joy, or sadness show themselves independently of the respective culture. These basic emotions are closely coupled to simultaneously occurring neuronal processes. However, how people communicate and express visible parameters, such as facial expression, is influenced by the values, roles, and socialization practices that vary across cultures [47, 48], age, and gender [49].

Emotions can be divided categorically into primary, secondary, and combined forms: primary emotions are fundamental, while secondary emotions are emotions about emotions (such as guilt over gloating). Ekman distinguishes six basic emotions: happiness, sadness, anger, fear, surprise, and disgust [46]. In contrast, Izard [50] speaks of ten fundamental emotions: (1) interest/excitement, (2) pleasure/joy, (3) surprise/fright, (4) sorrow/pain, (5) anger/rage, (6) disgust/repugnance, (7) disdain/contempt, (8) fear/terror, (9) shame/shyness/humiliation, and (10) guilt/repentance. Another way to categorize emotions relates to their highly variable multidimensional nature: emotions can be categorized along dimensions as positive or negative (polarity/valence), strong or weak (intensity/arousal), easy or hard to arouse (reactivity), and based on the situation in which they occur (idiosyncratic vs. universal situation) [51]. Partly following those dimensions, Russell’s Circumplex model is the most commonly used emotion model to capture emotion on a continuous scale [52]. It represents every emotion as a tuple of valence and arousal. There also exists a valence, arousal, and dominance model (more commonly known as the VAD model), which captures every emotion as a triplet (valence, arousal, dominance) on a continuous scale [2] (see Fig. 10.1).

Fig. 10.1 The VAD (Valence-Arousal-Dominance) model spanned across the six basic emotions [53]
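As an illustration of the dimensional view, the sketch below represents an emotion as a (valence, arousal) tuple and maps each circumplex quadrant to one of the four labels used later in the TapSense study. The [-1, 1] scale, the treatment of zero as positive, and the quadrant-to-label assignment are assumptions that follow a common reading of the circumplex model rather than a definition given in this chapter.

```python
from typing import NamedTuple


class Affect(NamedTuple):
    valence: float  # -1 (unpleasant) to +1 (pleasant), assumed scale
    arousal: float  # -1 (calm) to +1 (excited), assumed scale


def quadrant_label(affect: Affect) -> str:
    """Map a point in the valence-arousal plane to a coarse emotion label."""
    if affect.valence >= 0:
        return "happy" if affect.arousal >= 0 else "relaxed"
    return "stressed" if affect.arousal >= 0 else "sad"


examples = {
    "pleasant, excited": quadrant_label(Affect(0.7, 0.6)),
    "pleasant, calm": quadrant_label(Affect(0.5, -0.4)),
    "unpleasant, excited": quadrant_label(Affect(-0.6, 0.8)),
    "unpleasant, calm": quadrant_label(Affect(-0.3, -0.7)),
}
```

A VAD representation would add a third field for dominance to the same tuple.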

As mentioned earlier, there exists an empirically validated theoretical process model of emotion, the Component Process Model (CPM) [54], and the Emotional Competence Process Model (ECP), which further addresses adaptive and maladaptive emotional functioning [4]. In the CPM, emotions are identified within the overall process in which low-level cognitive appraisals, particularly relevance processing, trigger physical reactions, behaviors, and subjective feelings (Fig. 10.2). As a foundation, CPM provides a differentiated theory of the various dimensions of emotions. Emotions are interpreted here as the synchronization of several components that interact over time during a defined process regarding the emergence, appraisal, awareness, regulation, and knowledge of emotions. For example, an emotional situation emerges due to a specific trigger (e.g., a job interview), evoking an appraisal (“I hope I perform well; the interviewer does not look friendly at all”), which results in a certain emotional reaction (e.g., adrenaline release, sweating, nervousness, tension). In that moment, the person is aware of this condition and of the fact that they can regulate it (e.g., by taking a deep breath). They can also categorize their emotional reaction (and those of others in return).

The emotional response comprises sensory, cognitive, physiological, motivational, and expressive components [55]. Initially, the sensory component enables a subject to recognize an emotional event through the senses (e.g., see, feel). Through the cognitive component, the individual can identify possible relationships between itself and the event based on its subjective experiences. The individual then makes a subjective evaluation of the perception of the event (appraisal). This is in line with the two-factor theory of emotions: as early as the 1960s, Schachter and Singer pointed to the cognitive evaluation of a physical response as key to the subsequent emotional sensation [56]. A subject can react to the same event with a different evaluation, depending on their personal world view, value system, and current physiological state, which results in different physical responses and feelings. Depending on the outcome of the subjective evaluation, the individual reacts by releasing certain neurotransmitters and hormones, thus changing its physiological state (physical component). This altered state corresponds to the experience of an emotion. According to Lazarus’ appraisal theory [57], the emotional experience first arises through cognitive evaluation and the interpretation of an emotional stimulus as manageable or not. The motivational component follows the event’s evaluation and is modulated by the current physiological (or emotional) state. A person’s motivation for a certain action is oriented toward an actual-target comparison and the prediction of the effect of conceivable actions. For example, the emotion of anger can result in both the motivation for an attack action (e.g., in the case of a supposedly inferior opponent) and the motivation for a flight action (e.g., in the case of a supposedly superior opponent). The expressive component refers to the way emotion is expressed. This primarily concerns nonverbal behavior, such as facial expressions, speech, and gestures.

Fig. 10.2 Comprehensive illustration of the Component Process Model of Emotion (adapted from Scherer 2001) [58]

Importance of Feelings, Moods, and Psychological States

From an evolutionary perspective, emotions play a very important role in motivation, behavior, and attention: they make us act, direct our attention to certain stimuli that might have pleasant or unpleasant consequences for us, and give us signals so that we adjust our behavior to obtain or avoid those consequences. In a modern context, it is not only about our survival and related incentives but also about secondary incentives, such as money, status, entertainment, or others. Moreover, emotions regulate the intensity and duration of different behaviors and cause the learning of those behaviors that were successful under certain conditions (e.g., joy has a pleasant effect on us and motivates us to repeat the behavior) and mark in memory (e.g., via disgust, anger) those that led to failure. In the same way, emotions function in regulating our social interactions, being reinforced by a pleasant (or non-pleasant) effect. This leads to the formation of bonds or rivalries, which provide us with orientation in the social structure. Some distinct emotions may have even a more specific function, as shown in Table 10.1 [59, 60]. It is part of our daily lives to be confronted with the experience of such emotions and to respond to them. How people deal with different emotional events varies widely. The distinction between “positive” and “negative” emotions is questionable due to emotions’ functionality.

As depicted in the ECP, there is a great body of literature showing that both positive and negative emotions greatly impact our well-being and mental health (see meta-analysis [61]). It has been demonstrated, for example, that people suffering from depression have difficulties in identifying (Rude & McCarthy, 2003), bearing, and accepting their emotions [62, 63]. In numerous disorders, the presence of undesirable affective states (such as anxiety or depressed mood) at an inappropriate intensity or duration is among the diagnostic criteria of the disorder (e.g., in anxiety disorders or depression). Also, a whole range of cognitive and behavioral symptoms of mental disorders can be understood as dysfunctional attempts to avoid or terminate such undesirable states. Examples include alcohol or substance abuse, self-injurious behavior, or binge eating.

Given the nature of variety in the emotional response and experience regarding intensity, duration, personality, and situational aspects, it is poorly defined when a certain emotional phenomenon can be considered inappropriate, abnormal, or even psychopathological [64]. This poses a challenge to psychometricians, researchers, and clinical diagnosticians to make the most valid (clinical) judgment or classification and eventually initiate appropriate and effective treatment.

Assessment of Emotions

Traditional assessment of emotions is based on self-reports via questionnaire and interview (3.1). For many years now, other approaches have been developed that aim to capture a person’s emotions more objectively and independently of subjective self-reports. In the following, we discuss physiology-based emotion assessment methods, such as those based on blood and brain (3.2), and expression-based emotion assessment methods, such as those based on facial expression, speech, and posture (3.3). Moreover, digital footprints of emotions can be approached via social network platforms and smartphone data collection (3.4), including ecological momentary assessment. In the final part of this section, we compare the advantages and disadvantages of the presented assessment methods (3.5).

Self-Report-Based Methods for Emotion Assessment

In the traditional psychometric approaches, emotions are usually measured with self-report questionnaires, which are time- and cost-efficient instruments that give access to the cognitive component of someone’s emotions. It is noteworthy that those instruments aim to assess single emotions and approach “concepts” such as stress, depression, or well-being, indicating well-functioning (or dysfunctional) emotional processes over time. Questionnaires differ in how they have been evaluated regarding standardized psychometric properties such as test objectivity, reliability, and validity [65]. Usually, factor analyses are conducted to evaluate the factor structure of the underlying constructs in the designed questionnaire. Standardization is usually carried out using large and representative samples, which allow the classification of individual test results compared to the norm sample. Ideally, t-values or percentiles are defined for this purpose based on extensive psychometric studies. For the distinction between pathological and healthy reactions and for clinical diagnosis, clinical samples are also collected.
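The norm-referenced classification mentioned above can be sketched as follows. The example assumes the common psychometric convention of T-scores with mean 50 and standard deviation 10, and a percentile rank that counts ties as half; the tiny norm sample is made up and far smaller than any real standardization sample.

```python
from statistics import mean, stdev


def t_score(raw: float, norm_sample: list[float]) -> float:
    """Convert a raw score to a T-score (mean 50, SD 10) relative to a norm sample."""
    z = (raw - mean(norm_sample)) / stdev(norm_sample)
    return 50 + 10 * z


def percentile_rank(raw: float, norm_sample: list[float]) -> float:
    """Percentage of the norm sample scoring below `raw`, counting ties as half."""
    below = sum(s < raw for s in norm_sample)
    equal = sum(s == raw for s in norm_sample)
    return 100 * (below + 0.5 * equal) / len(norm_sample)


norm = [10, 12, 14, 16, 18]  # hypothetical questionnaire sum scores
t_mid = t_score(14, norm)    # a score at the norm mean
p_mid = percentile_rank(14, norm)
t_high = t_score(18, norm)   # a score above the norm mean
```

Under this convention, a T-score of 60 lies one standard deviation above the norm mean.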

To capture emotion self-reports in the general population, different scales are often used, guided by the above-mentioned emotion models. Table 10.2 gives an overview of some well-established questionnaires, including the item number and recall period. For example, the Self-Assessment Manikin (SAM) is a non-verbal pictorial assessment technique that directly measures the pleasure, arousal, and dominance associated with a person’s affective reaction [77]. Similarly, the Affect Balance Scale (ABS) is based on a model that posits the existence of two independent conceptual dimensions, positive affect (PAS) and negative affect (NAS), each related to overall psychological well-being by an independent set of variables [68]. The more widely accepted Positive and Negative Affect Schedule (PANAS) comprises two 10-item mood scales [67]. Additionally, the Flourishing Scale determines psychological flourishing and feelings [92] in relevant areas such as purpose in life, relationships, self-esteem, feelings of competence, and optimism.

To make a clinical diagnosis of an affective disorder, clinical questionnaires build on the clinical diagnostic criteria (symptom description, duration, intensity, distress, and psychosocial consequences) following the classification criteria of DSM-V [93] and ICD-10 [94]. However, for a valid diagnosis, a structured clinical interview is the gold standard (e.g., SKID [95]). Nevertheless, clinical questionnaires are frequently used to validate the diagnosis and to evaluate the treatment process and follow-up. For example, Beck’s Depression Inventory (BDI-II [80]) is commonly used in research to diagnose depression. The BDI-II contains 21 items referring to depressive symptoms someone may have experienced with different intensity over the past two weeks. Assessing depressive symptoms in nine items, the PHQ-9 corresponds to the depression module of the Patient Health Questionnaire (PHQ [78]). Unlike many other depression questionnaires, the PHQ-9 captures one of the nine DSM-IV criteria for diagnosing “major depression” with each question. Again, due to the depression classification criteria, the recall period is two weeks. The Depression Anxiety Stress Scale (DASS [72]) covers 42 items on those three related emotional states over the last week, without focusing on one specific affective disorder. In contrast to assessing mental illness, there are fewer instruments for assessing mental well-being. For example, the Warwick-Edinburgh Mental Well-Being Scale (WEMWBS) aims to assess positive feelings an individual may have experienced to a certain extent in the past two weeks.
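To show how such a questionnaire is turned into a score, the sketch below sums the nine PHQ-9 items and assigns a severity band. The 0 to 3 item coding and the cut-offs (5, 10, 15, 20) are the commonly published PHQ-9 scoring conventions and are not stated in this chapter; the function name is hypothetical, and a sum score is a screening aid, not a diagnosis.

```python
# Lower bound of each severity band, checked from the highest band downwards.
PHQ9_BANDS = [
    (20, "severe"),
    (15, "moderately severe"),
    (10, "moderate"),
    (5, "mild"),
    (0, "minimal"),
]


def phq9_score(answers: list[int]) -> tuple[int, str]:
    """Return the PHQ-9 sum score (0-27) and its severity band."""
    if len(answers) != 9 or any(a not in (0, 1, 2, 3) for a in answers):
        raise ValueError("expected nine answers, each coded 0, 1, 2 or 3")
    total = sum(answers)
    for lower_bound, label in PHQ9_BANDS:
        if total >= lower_bound:
            return total, label
    raise AssertionError("unreachable: the lowest band starts at 0")


mild_case = phq9_score([1] * 9)
severe_case = phq9_score([3] * 9)
```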

Overall, questionnaires to assess emotions (for clinical use) have the advantage that they do not require experienced experts, lead to scalable, comparable results, and are more time- and cost-efficient than clinical interviews. Besides, the object of assessment is an individual’s emotional experience, and therefore the subjectivity of self-reports makes sense for capturing someone’s psychological strain. However, self-report questionnaires face big shortcomings in the assessment of emotions over time. Most instruments (covering the classification criteria of DSM-V and ICD-10) refer to a recall period of up to two weeks (see Table 10.2 for examples). Therefore, they are greatly biased by memory effects [96] and the motivation of an individual [97]. Studies show that questionnaire results are more negative when filled in on Mondays, while Saturday mornings are “the happiest moment during the week” and consequently provoke more positive test results [98, 99]. Other bias factors are fatigue and other non-assessed personal conditions (e.g., bad news occurring on that specific assessment day), as well as misunderstanding of the items and social desirability (aiming “to look good,” even in anonymous surveys) [100, 101]. This specific subjectivity is desired to some extent since it also informs the examiner about a person’s perception and interpretation. Nevertheless, the objectivity of assessment is always greatly limited by these interpretation biases.

Physiology-Based Emotion Assessment

As mentioned above, emotions are represented not only by the cognitive but also physical and expressive components. While the cognitive aspect of emotion cannot be observed directly, the physical and expressive aspects are often manifested in different bodily signals. An emotional state influences underlying human biology and psychophysiology, as well as the resulting behaviors. For example, stress can be manifested in hormonal changes; anxiety can be manifested in terms of a high pulse rate, while happiness can be expressed via laughter. As we point out in the following subsections, some of these manifestations are captured and modeled to determine the emotion in affective computing.

Emotional Assessment from Blood

The emotional state of a person may be assessed via blood-based analytics, as the emotional state influences the individual’s hormonal status. Especially in the field of biological psychiatry research, plasmatic biomarkers have been a leading endeavor. The five most frequently named plasmatic biomarkers (BDNF, TNF-alpha, IL-6, C-reactive protein, and cortisol) are classically used to predict psychiatric disorders such as schizophrenia, major depressive disorder, or bipolar disorder [21]. In a meta-analysis, patterns of variation of those biomarkers were identified across these psychiatric disorders. The results indicated robust variations across studies but also showed similarities among disorders. The authors conclude that the examined biomarkers may be interpreted as transdiagnostic systemic consequences of psychiatric illness rather than diagnostic markers. This is in line with another review by Funalla et al., which found evidence for biomarkers associated with obsessive-compulsive disorder (OCD) but no diagnostic specificity [102]. A commonly used indicator for stress is the cortisol concentration found in human blood or saliva [103]. Overall, we do not focus on plasmatic biomarkers in this chapter; we mention them for completeness.

Emotional Assessment from Brain

Other biological features for determining emotions with rather high accuracy can be extracted from brain electroencephalography (EEG) [22]. EEG assesses the brain’s summed electrical activity by recording the voltage fluctuations on the surface of the head. Emotion extraction is consequently based on arousal and categorized into valence and excitation. EEG evaluation is traditionally performed through pattern recognition by a trained evaluator or by automatic evaluation. For emotions, the patterns of alpha and beta waves are key features. The alpha wave (frequency range between 8 and 13 Hz) is associated with mild relaxation or relaxed alertness with eyes closed. A beta wave (frequency range between 13 and 30 Hz) has different causes and meanings and may occur during constant tensing of a muscle or during active concentration. According to Choppin (2000), high valence is associated with high beta power in the right parietal lobe and high alpha power in the brain’s frontal lobe [104]. High beta power in the parietal lobes is associated with higher arousal in emotions, while the alpha activity is lower but also located in the parietal lobes. More specifically, negative emotions are represented by activity in the right frontal lobe, whereas positive emotions result in high power in the brain’s left frontal part. EEG was found to achieve 88.86% accuracy for four emotions: sad, scared, happy, and calm [105]. After assessing the EEG waves and extracting the particular emotional features, classifiers are trained for emotion identification. Popular choices are Canonical Correlation Analysis (CCA) [106], Artificial Neural Network (ANN) [107], Fisher linear discriminant projection [24], and Adaptive Neuro-Fuzzy Inference System (ANFIS) [108]. Using K-Nearest Neighbor (KNN) [109] and Support Vector Machine (SVM) [105, 110], Mehmood and Lee (2016) used five frequency bands (delta, theta, and gamma waves besides alpha and beta) and identified the four emotions sad, scared, happy, and calm with an accuracy rate of 55% (KNN) and 58% (SVM).
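The alpha and beta band features described above can be illustrated with a small band-power computation. This is a minimal sketch on a synthetic signal, using a plain discrete Fourier transform from the standard library; the sampling rate, the signal, and the function name are assumptions, and real EEG pipelines would add artifact removal, windowing, and an FFT- or Welch-based estimator.

```python
import cmath
import math


def band_power(signal: list[float], fs: float, low_hz: float, high_hz: float) -> float:
    """Sum the periodogram power of `signal` over the band [low_hz, high_hz)."""
    n = len(signal)
    power = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n  # centre frequency of DFT bin k
        if low_hz <= freq < high_hz:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(signal))
            power += abs(coeff) ** 2 / n
    return power


fs = 128  # assumed sampling rate in Hz
# Synthetic 2-second trace: a strong 10 Hz (alpha) and a weak 20 Hz (beta) rhythm.
samples = [math.sin(2 * math.pi * 10 * t / fs) + 0.3 * math.sin(2 * math.pi * 20 * t / fs)
           for t in range(2 * fs)]

alpha_power = band_power(samples, fs, 8, 13)
beta_power = band_power(samples, fs, 13, 30)
```

Per-channel band powers of this kind, computed for example over frontal and parietal electrodes, would form the feature vector passed to classifiers such as KNN or SVM.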

In a more clinical setting, Khodayari-Rostamabd [111] used EEG data to predict the pharmaceutical treatment response of schizophrenic patients. A set of features was classified using the kernel partial least squares regression method to predict response on the positive and negative syndrome scale (PANSS) with 85% accuracy. In another sample, aiming to predict the response to psychopharmacological treatment (SSRI) in patients suffering from depression, the same research group extracted candidate features from the subject’s pre-treatment EEG using a mixture of factor analysis (MFA) model [112]. The proposed method’s specificity is 80.9%, while its sensitivity is 94.9%, for an overall prediction accuracy of 87.9%. Besides EEG, functional Magnetic Resonance Imaging (fMRI) is also used to assess emotions, especially by exploring amygdala activity [113, 114]. Zhang et al. [114] analyzed connectivity change patterns in an fMRI data-driven approach in 334 healthy participants before and after inducing stress. In addition, the participants’ cortisol level was measured to classify pre- and post-stress states. The machine learning model discriminated the two states with a 75% accuracy rate, relying on activation in the dorsal anterior cingulate cortex, amygdala, posterior cingulate cortex, and precuneus. The advantage of using EEG for assessing emotions is that the data extraction is independent of facial or verbal expression, which could be impaired due to, e.g., paraplegia or facial paralysis. However, the need for a lab and the complex, costly installation and maintenance of equipment are a big disadvantage not only for the practical field but also for research projects [22].
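The relation between the reported sensitivity, specificity, and overall accuracy can be checked with a short calculation: accuracy is the average of the two rates, weighted by the share of each class. The sketch below assumes an equal number of responders and non-responders, which is not stated in the text but reproduces the reported 87.9%.

```python
def overall_accuracy(sensitivity: float, specificity: float, positive_share: float) -> float:
    """Accuracy as the class-share-weighted mean of sensitivity and specificity."""
    return sensitivity * positive_share + specificity * (1 - positive_share)


# Reported rates from the text; the 50/50 class split is an assumption.
accuracy = overall_accuracy(sensitivity=0.949, specificity=0.809, positive_share=0.5)
```

With unequal class sizes the same two rates would yield a different overall accuracy, which is why sensitivity and specificity are usually reported alongside it.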

Emotional Assessment from Physiological Signal Collection

Much has been written about assessing an individual’s emotional state from the physiological state via, e.g., EEG (mentioned above), Electrocardiogram (ECG), Electromyography (EMG), Electrooculography (EOG), Galvanic Skin Response (GSR), Heart Rate (HR), Body Temperature (T), Blood Oxygen Saturation (OXY), Respiration Rate (RR), or Blood Pressure analytics (BP) [115,116,117,118]. From the technical perspective, the existing physiological signal-driven emotion assessment methods can be divided into three categories: (a) traditional machine learning methods, (b) deep neural network-based methods, and (c) sequence-based models.

Conventional Machine Learning Approach

In traditional machine learning-based approaches, a set of features is first extracted from the captured data, and then different algorithms are used for model construction. Apart from time-domain characteristics, spectral-domain characteristics such as power spectral density (PSD) and spectral entropy (SE) are computed using the Fast Fourier Transform (FFT) or the Short-Time Fourier Transform (STFT).
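To make the spectral features above more concrete, the following minimal sketch computes a one-sided periodogram as a simple PSD estimate and derives a normalized spectral entropy from it. The function names and the naive discrete Fourier transform are assumptions chosen to keep the example self-contained. The cited studies would typically use an FFT-based or Welch estimator instead.

```python
import cmath
import math


def power_spectrum(signal):
    """One-sided periodogram from a naive O(n^2) DFT (illustrative only)."""
    n = len(signal)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))
        spectrum.append(abs(coeff) ** 2 / n)
    return spectrum


def spectral_entropy(psd):
    """Shannon entropy of the normalized PSD, scaled to the range [0, 1]."""
    total = sum(psd)
    probs = [p / total for p in psd if p > 0]
    entropy = -sum(p * math.log2(p) for p in probs)
    return entropy / math.log2(len(psd))


# Hypothetical input: a pure tone completing 4 cycles in 64 samples.
tone = [math.sin(2 * math.pi * 4 * t / 64) for t in range(64)]
tone_psd = power_spectrum(tone)
print("dominant bin:", max(range(len(tone_psd)), key=tone_psd.__getitem__))
print("spectral entropy:", spectral_entropy(tone_psd))
```

A signal whose power is concentrated in one frequency bin yields an entropy close to 0, whereas a flat spectrum yields an entropy close to 1, which is why the measure is useful as a compact descriptor of spectral shape.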

Among the various machine learning algorithms, SVM is probably the most widely used in physiological signal-based emotion recognition. Das et al. extracted Welch’s PSD of ECG and Galvanic Skin Response (GSR) signals for emotion recognition [119]. Liu et al. extracted a set of features from EEG and eye signals and used a linear SVM to determine three emotion states [120]. However, as a regular SVM does not perform well on imbalanced datasets, Liu et al. constructed an imbalanced support vector machine, which increased the penalty weight of the minority class and decreased the penalty weight of the majority class [121]. A few authors also used KNN (K = 4) to classify four emotions with four features extracted from ECG, EMG, GSR, and RR [121]. In [115], the authors collected 14 features from 34 participants as they watched three sets of 10-min film clips eliciting fear, sadness, and neutrality, respectively. The analyses used sequential backward selection and sequential forward selection to choose different feature sets for five classifiers (QDA, MLP, RBNF, KNN, and LDA). Wen et al. used a random forest (RF) to classify five emotional states with features extracted from OXY, GSR, and HR [122].

Deep Learning-Based Approach

Among the different deep learning-based approaches, the convolutional neural network (CNN) is one of the most widely used. Martinez et al. trained an efficient deep CNN to classify four cognitive states (relaxation, anxiety, excitement, and fun) using skin conductance and blood volume pulse signals [123]. Giao et al. used a CNN for feature abstraction from EEG signals [124]. In [125], several statistical features were extracted and sent to a CNN and a deep neural network (DNN). Song et al. used dynamical graph convolutional neural networks (DGCNN), which could dynamically learn the intrinsic relationship between different EEG channels, represented by an adjacency matrix, to facilitate feature extraction [126]. The deep belief network (DBN) is also widely used for emotion recognition. It learns deep input features through pre-training. Zheng et al. introduced an advanced DBN with differential entropy features to classify two emotional categories (positive and negative) from EEG data, where a Hidden Markov Model (HMM) was integrated to capture emotional stage switching more reliably [127]. Huang et al. extracted a set of features and applied a DBN to map the extracted features to a higher-level characteristics space [128]. In [129], instead of manual feature extraction, the raw EEG, EMG, EOG, and GSR signals were fed directly into the DBN, which extracted high-level features according to the data distribution.

Sequence-Based Models

To capture the temporal aspects of physiological signals, sequence-based models are often used. For example, Li et al. first applied a CNN to extract features from EEG and then applied a long short-term memory (LSTM) network to train the classifier, where the classifier performance was relevant to the output of the LSTM in each time step [130]. In [131], an end-to-end structure was proposed in which the raw EEG signals, in 5 s-long segments, were sent to LSTM networks that autonomously learned features. Liu et al. proposed a model with two attention mechanisms based on multi-layer LSTM for video and EEG signals, which combined temporal and band attention [132].

Overall, as the captured signals are noisy, several pre-processing techniques are often used to eliminate the noise introduced from different sources such as crosstalk, measurement error, and instrument interference. Commonly used pre-processing techniques include filtering [133], Discrete Wavelet Transform (DWT) [134], Independent Component Analysis (ICA) [135], and Empirical Mode Decomposition (EMD) [136].

Overall, the psychophysiological approach to emotional assessment is cumbersome and not straightforward to deploy for accurate real-time emotion recognition. Besides, the required complex laboratory setup (e.g., EEG) is time- and cost-intensive.

Expression-Based Emotion Assessment

Following the cognitive and physical components of emotions, we focus on expressing emotions in this section. We describe facial and verbal emotion recognition, as well as how posture may reflect human emotional states.

Facial Emotion Recognition (FER)

We broadly divide facial emotion recognition-related works into the following two groups: (a) the conventional FER approach and (b) the deep learning-based FER approach.

Conventional FER Approach

For automatic FER systems, various types of conventional approaches have been studied. All these approaches first assess the face region and then extract a set of geometric features, appearance features, or a hybrid of geometric and appearance features from the target face. For the geometric features, the relationship between facial components is used to construct a feature vector for training [137, 138]. For example, Ghimire and Lee [138] used two types of geometric features based on the position and angle of 52 facial landmark points. First, the angle and Euclidean distance between each pair of landmarks within a frame are calculated. Second, the distances and angles are subtracted from the corresponding distances and angles in the video sequence’s first frame. For the classifier, two approaches are used: multi-class AdaBoost with dynamic time warping, and an SVM on the boosted feature vectors.
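The two-step geometric feature computation described above can be sketched as follows. This is an illustrative reading of the description, not the implementation of [138]: the function names are hypothetical, landmarks are assumed to be (x, y) tuples, and the first frame is used as the reference.

```python
import math
from itertools import combinations


def pairwise_geometry(landmarks):
    """Distance and angle for every pair of (x, y) landmarks in one frame."""
    features = []
    for (x1, y1), (x2, y2) in combinations(landmarks, 2):
        features.append(math.hypot(x2 - x1, y2 - y1))   # Euclidean distance
        features.append(math.atan2(y2 - y1, x2 - x1))   # angle in radians
    return features


def displacement_features(frames):
    """Per-frame features expressed relative to the first frame of the sequence."""
    reference = pairwise_geometry(frames[0])
    return [
        [value - ref for value, ref in zip(pairwise_geometry(frame), reference)]
        for frame in frames
    ]


# Hypothetical three-landmark sequence; a real system would use all 52 points.
sequence = [
    [(0, 0), (3, 4), (0, 1)],
    [(0, 0), (6, 8), (0, 1)],
]
for row in displacement_features(sequence):
    print([round(v, 3) for v in row])
```

With 52 landmarks, each frame yields 1326 landmark pairs and therefore 2652 values in this sketch. Angle differences are not wrapped to a fixed interval here, which a production implementation would likely need to handle.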

The appearance features are usually extracted from the global face region [139] or from different face regions containing different types of information [140,141,142,143]. An example of using global features is the exploration by Happy et al. [139]. The authors utilized a local binary pattern (LBP) histogram of different block sizes from a global face region as the feature vector. They classified various facial expressions using principal component analysis (PCA). This method’s classification performance is poor, as the feature vector cannot reflect local variations of the facial components. A few explorations also used features from different face regions, as these may have different levels of importance, unlike in a global-feature-based approach. For example, the eyes and mouth contain more information than the forehead and cheek. Ghimire et al. [144] extracted region-specific appearance features by dividing the entire face region into domain-specific local regions. An incremental search approach is used to identify important local regions, reducing the feature vector size and improving classification performance.
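As an illustration of the LBP histogram features mentioned above, the sketch below computes the basic 8-neighbor LBP code for each interior pixel and concatenates per-block histograms into one feature vector. The function names, the clockwise bit order, and the use of a nested list as a grayscale image are assumptions made for this example. They are not taken from [139].

```python
# Clockwise 8-neighborhood, starting at the top-left neighbor.
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, r, c):
    """8-bit code: one bit per neighbor that is >= the center pixel."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBOR_OFFSETS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    return hist


def block_lbp_features(img, block):
    """Concatenate LBP histograms of non-overlapping block x block patches."""
    features = []
    for top in range(0, len(img) - block + 1, block):
        for left in range(0, len(img[0]) - block + 1, block):
            patch = [row[left:left + block] for row in img[top:top + block]]
            features.extend(lbp_histogram(patch))
    return features


# Hypothetical 8x8 grayscale image split into four 4x4 blocks.
image = [[(r * c) % 7 for c in range(8)] for r in range(8)]
print(len(block_lbp_features(image, 4)))
```

Varying the `block` argument corresponds to the different block sizes mentioned in the text: smaller blocks preserve more spatial detail at the cost of a longer feature vector.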

For hybrid features, some approaches [144, 145] have combined geometric and appearance features to compensate for the weaknesses of the two approaches and provide even better results in certain cases.

Deep Learning-Based FER Approach

The most widely adopted architecture in deep neural network-based FER is the CNN. Its main advantage is that it completely removes or greatly reduces the dependence on physics-based models and/or other pre-processing techniques by enabling “end-to-end” learning directly from input images [146]. Breuer and Kimmel [147] investigated the suitability of CNNs on different FER datasets and showed the capability of networks trained on emotion assessment and FER-related tasks. Jung et al. [148] used two different types of CNN: the first extracts temporal appearance features from the image sequences, and the second extracts temporal geometry features from temporal facial landmark points. These two models are combined using a new integration method to boost the performance of facial expression recognition.

However, as CNN-based methods are not suitable for capturing temporal sequences, a hybrid approach combining a CNN (for spatial features) and an LSTM (for temporal sequences) was developed. LSTM is a special type of RNN capable of learning long-term dependencies. Kahou et al. [149] proposed a hybrid RNN-CNN framework for propagating information over a sequence using a continuously valued hidden-layer representation. In this work, the authors presented a complete system for the 2015 Emotion Recognition in the Wild (EmotiW) Challenge [150]. They showed that a hybrid CNN-RNN architecture for facial expression analysis could outperform a previously applied CNN approach using temporal averaging for aggregation. Kim et al. [151] utilized representative expression-states (e.g., the onset, apex, and offset of expressions), specified in facial sequences regardless of the expression intensity. Hasani and Mahoor [152] proposed the 3D Inception-ResNet architecture followed by an LSTM unit that together extract the spatial relations within the facial images and the temporal relations between different frames in a video sequence. Graves et al. [153] used a recurrent network to consider the temporal dependencies present in the image sequences during classification. This study compared the performance of two types of LSTM (bidirectional and unidirectional) and showed that a bidirectional network provides significantly better performance than a unidirectional LSTM.

In summary, hybrid CNN-LSTM (RNN) based FER approaches combine an LSTM with a deep hierarchical visual feature extractor such as a CNN model. Therefore, such a hybrid model can learn to recognize and synthesize temporal dynamics for tasks involving sequential images. Each visual feature determined through a CNN is passed to the corresponding LSTM, and it produces a fixed or variable-length vector representation. The outputs are then passed into a recurrent sequence-learning module. Finally, the predicted distribution is computed by applying softmax [154, 155]. A limitation of this approach is the challenge of capturing the facial expression in real-time in daily life as a natural reaction to an emotional experience. Additionally, privacy issues can be a problem if a person does not want to be visually recorded during such intimate moments.

Speech Based Emotion Recognition (SER)

The existing literature on speech emotion recognition (SER) is also broadly divided into the following two categories: (a) the conventional SER approach and (b) the deep learning-based SER approach.

Conventional SER Approach

In traditional SER systems, there are mainly three steps: (a) signal pre-processing, (b) feature extraction, and (c) classification. At first, acoustic pre-processing such as denoising and segmentation is carried out to determine relevant units of the speech signal [156,157,158]. Once the pre-processing is done, several short-term characteristics of the signal, such as energy, formants, and pitch, are extracted, and short-term classification of the speech segment is done [159]. In contrast, for long-term classification, statistics such as the mean and standard deviation are used [160]. Among prosodic features, the intensity, pitch, rate of spoken words, and variance play an important role in identifying various types of emotions from the input speech signal [161]. The relationship between different vocal parameters and emotion is often explored in SER. Parameters such as intensity, pitch, rate of spoken words, and quality of voice are frequently considered [162]. The intensity and pitch are often correlated to activation so that the value of intensity increases along with high pitch and vice versa [163, 164]. Factors that affect the mapping from acoustic variables to emotion include whether the speaker is acting, high variation between speakers, and the individual’s mood or personality [165, 166].
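The distinction between short-term and long-term features can be illustrated with a small sketch. Here, the short-term feature is the mean energy per frame, and the long-term features are the mean and standard deviation of those frame energies over the whole utterance. The frame length, the hop size, and all function names are hypothetical choices for illustration and do not come from the cited works.

```python
import statistics


def frame_signal(samples, frame_len, hop):
    """Split a sample sequence into (possibly overlapping) fixed-length frames."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


def short_term_energy(frame):
    """Mean squared amplitude of one frame."""
    return sum(x * x for x in frame) / len(frame)


def long_term_stats(values):
    """Utterance-level mean and (population) standard deviation."""
    return statistics.mean(values), statistics.pstdev(values)


# Hypothetical toy signal: a quiet segment followed by a louder one.
samples = [1, 1, 1, 1, 2, 2, 2, 2]
energies = [short_term_energy(f) for f in frame_signal(samples, 4, 4)]
print(energies, long_term_stats(energies))
```

The same pattern applies to other short-term characteristics such as pitch: a per-frame value is computed first and then summarized over the utterance.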

In the existing SER literature, there are two types of classifiers: linear and non-linear. Linear classifiers usually perform classification based on a linear combination of object features [166]. In contrast, non-linear classifiers characterize objects through a non-linear weighted combination of such features [167,168,169,170]. Gaussian mixture models (GMMs) are utilized to represent the acoustic features of sound units. Hidden Markov models (HMMs), on the other hand, are utilized to deal with temporal variations in speech signals [171].

Deep Learning-Based SER Approach

In SER approaches, different types of deep neural networks are used. Senigallia et al. used a 2D CNN with phoneme data as input to determine seven emotion states [172]. Zhao et al. combined deep 1D and 2D CNNs to learn high-level features from input audio and log-mel spectrograms for emotion classification [173]. A CNN, which uses a layer-wise structure, can also categorize the seven universal emotions from the defined speech spectrograms [174]. In [175], an SER technique based on spectrograms and a deep CNN is presented. The model consists of three fully connected convolutional layers for extracting emotional features from the speech signal’s spectrogram images. Pablo et al. obtained emotional expressions that are spontaneous and can easily be classified into positive or negative [176]. Mao et al. trained a CNN to learn affect-salient features and achieved robust emotion recognition under variations in speaker, language, and environment [177].

Hybrid networks consisting of a CNN and an RNN are also used in SER [178,179,180]. This enables the model to capture both frequency and temporal dependencies in a given speech signal. Sometimes, a reconstruction-error-based RNN for continuous speech emotion recognition is also used [181]. SER algorithms based on CNNs and RNNs have been investigated in [180]. The deep hierarchical CNN architecture for feature extraction has also been combined with LSTM network layers. It was found that CNNs have a time-based distributed network that provides results with greater accuracy. Zhao et al. used a hybrid RCNN model to determine basic emotions [182]. Wootaek et al. used a deep hierarchical feature extraction architecture of CNNs combined with LSTM network layers for better emotion recognition [180]. As with FER, capturing speech in real time as a reaction to an emotional experience is challenging. In addition to the privacy concerns, someone may express emotions without speaking because they are alone or do not want to speak, especially in emotional moments. Although individuals tend to adapt quickly to being observed, the awareness of being recorded might interfere with someone’s speech and expression of emotions.

Emotional Assessment from Posture

In contrast to research on automatic emotion recognition focusing on facial expressions or physiological signals, little research has been done on exploiting body postures. However, postures can be useful for emotion recognition and even more accurate than facial features [23]. Bodily postures refer to the physical expression component of emotions and are an important channel of communication. Since it is challenging to assign an expressed posture to discrete emotions due to the variety of validated emotion poses, researchers in this field first focus on defining features, aiming to understand the cohesion and dimensional ratings with high validity [183]. To this end, studies recorded actors displaying concrete, discrete emotions, and study participants then rated the recordings by categorizing these emotions. For example, Lopez et al. asked study participants to categorize emotion postures depicting five emotions (joy, sadness, fear, anger, and disgust) and to rate valence and arousal for each emotion pose. Besides successfully categorizing all emotion categories, participants accurately identified multiple distinct poses within each emotion category. The dimensional ratings of arousal and valence showed interesting overlaps and distinctions, further increasing the granularity of distinct emotions. Similarly, the Geneva Emotion Recognition Test (GERT) [85] was developed to test emotion recognition ability using video clips with sound that simultaneously present facial, vocal, and postural emotional expressions. It was validated with varied data and video material and relatively large samples. Individuals taking the GERT are asked to watch video clips and rate the displayed emotions to assess their emotion recognition ability.

Postures are also captured using movement sensor data from smartwatches or mobile phones [184]. Quiroz et al. observed that movement sensor data logged by the smartwatches of 50 participants differentiated between the emotions happy, sad, and neutral in response to an emotional stimulus in an experimental setting. Furthermore, the response was validated by additional data from the Positive and Negative Affect Schedule (PANAS) questionnaire. Emotional states could be assessed well by self-report, and the data obtained from the smartwatch classified happy versus sad emotional states with high accuracy across all users. Although the categories here depict only two emotions and other categorization difficulties need to be evaluated, the use of movement sensors still appears promising for emotion recognition purposes. Another smartphone-based approach also uses information about postures for recognizing emotions but relies on self-reported body postures [185]. A mobile application was developed that classifies (based on the nearest neighbor algorithm) inserted poses into Ekman’s six basic emotion categories and a neutral state. Emotion recognition accuracy was evaluated using poses reported by a sample of users.

Although digital devices may be included to capture postures in real-time, this data collection is challenging to conduct in a person’s daily life and close to their natural expression of emotions. Due to the necessity of leveraging, e.g., the use of cameras, this method is obtrusive and implies the same privacy issues we discussed in the context of FER and SER.

Internet-Use Based Emotion Assessment

This subsection presents emotion assessments based on an individual’s internet usage. First, the analysis of social networks (3.4.1) is discussed, followed by smartphone-based emotion assessment (3.4.2) and smartphone-based experience sampling methods (3.4.3).

Social Network Analysis

Digital records of an individual’s behavior, including linguistic style, sentiment, online social networks, and other activity traces, can be extracted and used to infer the individual’s psychological state. In particular, social networking platforms are becoming increasingly popular. They have recently been used more extensively to study emotions, as they are easily accessible to users, and researchers can collect the necessary information with the users’ consent. Based on this approach, Chen et al. aimed to identify users with depression or at risk of depression by assessing the individual’s expressed emotions from Twitter posts over time [36]. In another study, voluntarily shared Facebook Likes of N = 58,000 users were used to predict several highly sensitive personal attributes [37]: sexual orientation, ethnicity, religious and political views, personality traits, intelligence, happiness, use of addictive substances, parental separation, age, and gender. All attributes were predicted with high accuracy, especially ethnic origin and gender. Other emerging approaches focus on Spotify music or Instagram picture extraction as features to predict personality or mood outcomes [186].

Furthermore, studies apply Natural Language Processing (NLP) to language content in social media networks or messaging systems to discover depressive symptoms [187]. Depressive language was characterized by more negative and extreme words such as “always, everybody, never” [188]. The challenge with this data is that communication on Twitter or Facebook may be heavily distorted by social desirability and by the specific motivations that drive someone to express themselves on the Internet. Furthermore, data collection from someone’s account may raise privacy issues.

Smartphone-Based Emotion Assessment

Smartphones are personal devices that individuals carry around with them almost all the time [189]. They include a plethora of onboard sensors (e.g., accelerometer, gyroscope, GPS) and can sense different user activities passively (e.g., mobility, app usage history) [190]. In this subsection, we review smartphone-based methods for emotion assessment in users’ natural daily environments. We consider usage-based assessment methods as well as touch-based ones.

Usage-Based Emotion Recognition Methods

The smartphone provides numerous data sources for collecting real-world data about emotions. For example, the FER and SER assessments described above can also be performed using the smartphone’s camera and microphone. Other smartphone-based sensing sources are connectivity (Wi-Fi on/off), smartphone status (screen, battery, power-saving mode), calls (type, duration), text messages (type, length), notifications (apps, category), calendar (initial query, logging of new entries), and technical data (anonymized user ID, IP address, mobile phone type) [191]. Harari et al. [192] sensed conversations, phone calls, text messages, and messaging and social media applications for individual trait assessment. Namely, they collected sensing data in five semantic categories (communication & social behavior, music listening behavior, app usage behavior, mobility, and general day- & night-time activity) and used a machine learning approach (random forest, elastic net) to predict personality traits. MoodScope proposed to infer mood by exploiting multiple information channels, such as SMS, email, phone call patterns, application usage, web browsing, and location [143]. In EmotionSense, Rachuri et al. used multiple features from the Emotional Prosody Speech and Transcripts library to train the emotion classifier [195]. In the same vein, researchers also demonstrated that aggregated features obtained from smartphone usage data could indicate the Big-Five personality traits [196]. There are also multiple works that use different information sources to infer the presence of a particular emotional state. For example, Pielot et al. tried to infer boredom from smartphone usage patterns like call details, sensor details, and others [197]. In their work on assessing stress, Lu et al. built a stress classification model using several acoustic features [198]. Similarly, Bogomolov et al. showed that daily happiness [199] and daily stress [200] could be inferred from mobile phone usage, personality traits, and weather data.

Touch-Based Emotion Recognition Methods

Widespread availability of touch-based devices and a steady increase [35] in the usage of instant messaging apps open a new possibility of inferring emotion from touch interactions. Therefore, research groups started to focus on typing patterns (Shapsough et al., 2016) using a built-in sensor (a smart keyboard) and using machine learning techniques to assess emotions based on different aspects of typing. For example, Lee et al. designed a Twitter client app and collected data from various onboard sensors, including typing (e.g., speed), to predict one user’s emotion in the pilot study [201]. Similarly, Gao et al. used multiple finger-stroke-related features to identify different emotional states during touch-based gameplay [202]. Ciman et al. assessed stress conditions by analyzing multiple features of smartphone interaction, including swipe, scroll, and text input interactions [203]. Kim et al. [204] proposed an emotion recognition framework analyzing touch behavior during app usage, using 12 attributes from 3 onboard smartphone sensors. Although focused on narrow application scenarios, all of these works point to the value of touch patterns in emotion assessment.

Smartphone-Based Experience Sampling Method Design

One of the key requirements to develop a smartphone-based emotion assessment system is to collect emotion ground truth labels, which are typically collected as emotion self-reports by deploying an Experience Sampling Method (ESM), also known as an Ecological Momentary Assessment (EMA). The Experience Sampling Method (ESM) is a widely used tool in psychology and behavioral research for in-situ sampling of human behavior, thoughts, and feelings [5, 205]. The ubiquitous use of smartphones and wearable devices helps in the more flexible design of ESM, aptly termed as mobile ESM (mESM) [206,207,208]. It allows the collection of rich contextual information (e.g., sensor information, application usage data) along with behavioral data at an unprecedented scale and granularity. Frameworks like Device Analyzer, UbiqLog, AWARE, ACE, MobileMiner, or MQoL-Lab [190] have been designed to infer user’s context based on sensor data, application usage details of the smartphones [142, 209,210,211,212,213]. While these frameworks help in the automatic logging of sensor data, self-reports related to various aspects of human life (like emotion) still require direct input from the user.

Balancing Probing Rate and Self-Report Timeliness

In ESM studies, the participant burden mainly arises from repeatedly answering the same survey questions. Time-based and event-based schedules are the most commonly used ESM schedules [214]. Time-based approaches aim to reduce the probing rate (at the cost of fine granularity), while event-based ones try to collect self-reports in a timely manner (at the cost of a high probing rate). Recently, hybrid ESM schedules have been designed that combine time-based and event-based ones to trade off probing rate against self-report timeliness [215]. With the proliferation of smartphones and other wearable devices, more intelligent and less intrusive survey schedules have been designed, including those that limit the maximum number of triggers or increase the gap between two consecutive probes. Several open-source software platforms, like ESP [216], MyExperience [217], PsychLog [218], and Personal Analytics Companion [219], are available on different mobile computing platforms to cater to ESM experiments.
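One possible reading of such a throttled schedule is sketched below: probes are triggered by events (for instance, the end of a typing session), but a probe is only issued if a minimum gap has elapsed since the previous probe and a cap on the total number of probes has not been reached. The function name, parameters, and time units are hypothetical. Actual hybrid schedules such as the one in [215] may use different policies.

```python
def hybrid_schedule(event_times, min_gap, max_probes):
    """Select probe times from event times under a gap and a budget constraint.

    event_times: times (e.g., minutes since start of day) at which an event
                 that could trigger a probe occurred.
    min_gap:     minimum time between two consecutive probes.
    max_probes:  upper bound on the number of probes issued.
    """
    probes = []
    for t in sorted(event_times):
        if len(probes) >= max_probes:
            break  # probe budget exhausted
        if not probes or t - probes[-1] >= min_gap:
            probes.append(t)  # event is far enough from the last probe
    return probes


# Hypothetical day with six candidate events, at most three probes,
# and at least 30 minutes between probes.
print(hybrid_schedule([0, 5, 30, 40, 70, 100], min_gap=30, max_probes=3))
```

Raising `min_gap` or lowering `max_probes` reduces participant burden but also increases the chance that a self-report is collected long after the triggering event, which is the trade-off described in the text.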

Maintaining Response Quality Via Interruptibility-Aware Designs

Recent advancements in interruptibility-aware notification management recommend several strategies to probe at opportune moments by leveraging contextual information (e.g., probing between two activities such as sitting and walking, or after completing a task such as messaging or reading a text on mobile) [220,221,222]. In [223], the authors showed that features like the last survey response, the phone’s ringer mode, and the user’s proximity to the screen could predict whether the recipient will see a notification within a few minutes. Leveraging these findings, intelligent notification strategies were developed, which resulted in a higher compliance rate and improved response quality [224, 225]. However, major challenges of using such details in mobile-based ESM design are resource overhead and privacy. Designing ESM schedules based on the underlying study can overcome such limitations [226]. Ideally, an ESM schedule should optimize the probing rate (like time-based schedules), reduce latency (like event-based schedules), and select opportune probing moments (like interruptibility-aware schedules) (Table 10.3).

Pros and Cons in Different Emotion Assessment Approaches

In the previous sections, we have presented different approaches to assess emotions. Table 10.4 summarizes the pros and cons of the different self-report and sensing methods of data collection. We pointed out that self-report questionnaires have the advantage of being rather time- and cost-efficient (for assessors) and can reveal cognitions that are otherwise hard to capture. Furthermore, the subjectivity of an individual’s view on their emotions expressed via self-reporting might be wanted in some contexts (e.g., for clinical diagnostics). However, self-reports are challenged by numerous confounding variables such as fatigue, interpretation and memory biases, non-assessed personal conditions, misunderstanding of the items, and social desirability [100, 101]. Some physiological assessment methods might be more objective (like EEG or blood) but require a laboratory, a complex setup, and a controlled environment. Due to this limitation, real-time assessment of emotions close to an individual’s everyday life experience is not possible.

Additionally, some research is based on induced emotional states. Emotional reactions can be induced in experimental settings. However, the transferability and generalizability of such results to an individual’s real life is doubtful. Besides, the period of data collection is often limited, and collecting a high volume of data from a large number of participants is difficult. Finally, the participants need to participate actively and contribute to the data collection effort (via self-reports).

In most cases, the data collection cannot be done passively and, consequently, lacks unobtrusiveness. Moreover, most of the discussed methods focus only on one data collection source (e.g., speech, EEG alpha waves, or social network analysis). They are, therefore, very limited regarding the complex emotional process described in the CPM [4].

In the context of emotional assessment, novel personal sensing methods embedded in daily life via wearables and smartphones promise to overcome some of those issues by providing real-time observations [143, 197, 207, 209,210,211]. These devices can capture data passively from different modalities without user intervention, log app usage behavior, and leverage different computational models on the device for emotion inference. As a result, these devices are very promising for determining emotion-based behavior from different usage patterns. However, the approach is still novel, and some participants are concerned about their privacy [227]. These concerns must be taken seriously, but they contrast with the fact that personal information is shared openly on the Internet nowadays. This ambiguity is labeled the Privacy Paradox and has been known since 2006 [228].

TapSense: Smartphone Typing-Based Emotion Assessment

This chapter specifically focuses on assessing the individual’s emotional state from smartphone usage patterns via the authors’ TapSense study. Therefore, in this section, we first describe the overall research approach of the keyboard interaction study. We focus on a typing-based emotion assessment scenario, which helps to identify the key requirements to design the emotion assessment model and the self-report collection approach using an Experience Sampling Method (ESM) (Sect. 5.1). In Sect. 5.2, the TapSense field study and data analysis are presented, and in Sect. 5.3 they are evaluated. In Sect. 5.4, the study is discussed.

Background

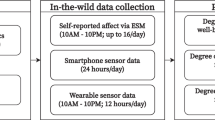

The overall approach for the TapSense study is shown in Fig. 10.3. First, we gathered a set of requirements to design the keyboard interaction-based emotion assessment tool, followed by the actual design and implementation of the TapSense application. We discuss the study in detail and analyze the collected data. Finally, we evaluate the performance of the TapSense application and discuss the lessons learned from this study.

Requirements

The TapSense study relies on the users’ smartphone usage patterns. We explain the scenario of typing-based emotion assessment in Fig. 10.4. As the user types, we extract his/her typing sessions, i.e., the amount of time he/she stays in a single mobile application without switching to another. For example, when a user uses WhatsApp without switching to other applications from t1 till t2, we define the elapsed time between t1 and t2 as a Typing Session. Once the user completes the session, he/she is probed via an ESM, i.e., an emotion self-report, which is considered the emotion ground truth. Later, several features are extracted from the typing sessions and correlated with the emotion self-reports to develop an emotion assessment model. This scenario suggests the following requirements:

- Trace keyboard interaction for emotion assessment: The key requirements for determining emotions from the typing sessions are to ensure that (a) the typing details are captured correctly so that the relevant features can be extracted, (b) the emotion ground truths are collected (ESM), and (c) an accurate emotion assessment model is constructed. We discuss these aspects further in this section.

- ESM design for self-report collection: Probing a user after every session may induce fatigue due to the large number of probes. The probing moments should therefore be chosen so that the user’s response is captured accurately (i.e., before it fades from the user’s memory) while the probing rate is kept low. We discuss the ESM design in detail further in this section.
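The Typing Session definition used in these requirements can be illustrated with a minimal sketch. It assumes that each tap is available as a pair of a timestamp in seconds and the name of the foreground application; the `TypingSession` class and the `split_into_sessions` function are hypothetical names introduced here for illustration and are not part of the TapSense implementation.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TypingSession:
    app: str        # application in which the typing took place
    start: float    # timestamp of the first tap (t1), in seconds
    end: float      # timestamp of the last tap (t2), in seconds
    n_taps: int     # number of tap events in the session


def split_into_sessions(taps: List[Tuple[float, str]]) -> List[TypingSession]:
    """Group time-ordered (timestamp, app) taps into typing sessions.

    A session lasts as long as consecutive taps stay in the same
    application; a tap in a different application starts a new session.
    """
    sessions: List[TypingSession] = []
    for ts, app in taps:
        if sessions and sessions[-1].app == app:
            # Same application as the previous tap: extend the session.
            sessions[-1].end = ts
            sessions[-1].n_taps += 1
        else:
            # Application switch (or first tap): open a new session.
            sessions.append(TypingSession(app=app, start=ts, end=ts, n_taps=1))
    return sessions


taps = [(0.0, "whatsapp"), (1.5, "whatsapp"), (4.0, "whatsapp"),
        (10.0, "gmail"), (11.0, "gmail"), (20.0, "whatsapp")]
sessions = split_into_sessions(taps)
```

In this example the user types in one application, switches to a second one, and then returns, so three sessions result, and the first one spans the time between its first and last tap.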

Design and Implementation of TapSense

TapSense consists of the following key components, as shown in Fig. 10.5. TapLogger records the user’s typing activity. It implements a virtual keyboard for tracing keyboard interactions. ProbeEngine runs on the phone to generate the user’s ESM notifications and collects the ESM responses. The typing details and the associated emotion self-reports are made available at the server via the Uploader module, which synchronizes with the server occasionally or, if the user is offline, once the user connects to the Internet. The emotion assessment model is constructed on the server side to determine the different emotional states from the typing details and the emotion self-reports. In parallel, a set of typing features is also extracted to construct the inopportune moment assessment model, which feeds back into the ProbeEngine to optimize the probe generation. Next, we discuss the two key components of TapSense: (a) emotion assessment from keyboard interaction and (b) ESM design for the emotion self-report collection.

TapLogger: Keyboard Interaction Collection

The TapLogger module of TapSense implements an Input Method Editor (IME) [229] provided by Android OS, and we refer to it as the TapSense keyboard (Fig. 10.6). It is a standard QWERTY keyboard that provides functionalities similar to those of the Google keyboard. We selected a standard keyboard so that the user’s keyboard interaction experience does not deviate much from what he/she is used to. It differs from other keyboards in its additional capability of logging the user’s typing interactions, which, for security reasons, is not available in the Google keyboard. To ensure user privacy, we do not store or record the characters typed. The logged information is the timestamp of each tap event, i.e., when a character is entered, and the categorical type of the key input, such as an alphanumeric key or delete key.

ProbeEngine: Emotion Self-Report Collection (ESM)

The ProbeEngine module of TapSense issues the ESM self-report probes by delivering a self-report questionnaire (Fig. 10.7). This survey questionnaire provides four options (happy, sad, stressed, relaxed) to record the ground truth about the user’s emotion while typing. These options capture four widely represented emotions from four different quadrants of the Circumplex model [52], as shown in Fig. 10.8. We select these discrete emotions as their valence-arousal representation is unambiguous on the Circumplex plane. Any discrete emotion and its unambiguous representation on the valence-arousal plane are equivalent [230]. We also include a “No Response” option, which the user can select to indicate that the current probing moment is inopportune.
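The correspondence between the four self-report options and the quadrants of the Circumplex model can be written down as a small lookup table. This is a sketch only: the names `Quadrant`, `CIRCUMPLEX`, `NO_RESPONSE`, and `is_valid_emotion` are assumptions made for illustration, and the quadrant assignment follows the usual reading of the valence-arousal plane.

```python
from typing import NamedTuple


class Quadrant(NamedTuple):
    valence: str  # "positive" or "negative"
    arousal: str  # "high" or "low"


# One discrete emotion per quadrant of the valence-arousal plane.
CIRCUMPLEX = {
    "happy": Quadrant(valence="positive", arousal="high"),
    "stressed": Quadrant(valence="negative", arousal="high"),
    "sad": Quadrant(valence="negative", arousal="low"),
    "relaxed": Quadrant(valence="positive", arousal="low"),
}

# Hypothetical marker for a probe answered with "No Response".
NO_RESPONSE = "no_response"


def is_valid_emotion(label: str) -> bool:
    """Return True for one of the four emotion labels, False otherwise."""
    return label in CIRCUMPLEX
```

Keeping “No Response” outside the emotion table mirrors its separate role: it marks an inopportune probing moment and is not an emotion label.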

Emotion Assessment Model Construction

The emotion assessment model in TapSense is responsible for determining the four emotion states based on the keyboard interaction pattern. This is implemented on the server-side once the typing interaction details and the emotion self-reports details are available.

Emotion Assessment Features

From the raw data collected within every Typing Session, we extract a set of typing features as defined in Table 10.5. For every session, we compute the inter-tap durations (ITDs), i.e., the elapsed times between consecutive keypress events. We take the mean of all ITDs in the session as a measure of typing speed and define it as the Mean Session ITD (MSI). We also compute the backspace and delete keys pressed in a session and use this as a feature that represents the typing mistakes made in the session.

Similarly, we use the fraction of special characters in a session, the session duration, and the typed text length in a session as features. Any non-alphanumeric character is considered a special character. We use the label of the last emotion self-report as a feature for the model [215, 231]. However, at a later stage, when the TapSense model is operational, we use the predicted emotion for the last session as the feature value for the current session.
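The session-level features described above can be sketched as follows. The sketch assumes that each logged tap is a pair of a timestamp in seconds and a key category; the category names (`"alnum"`, `"special"`, `"backspace"`, `"delete"`), the function name `session_features`, and the choices to count erase keys as an absolute number and to measure text length as the number of character-producing taps are illustrative assumptions, not the definitions of Table 10.5.

```python
from statistics import mean
from typing import Dict, List, Tuple, Union

ERASE_KEYS = {"backspace", "delete"}      # assumed category names
CHARACTER_KEYS = {"alnum", "special"}     # taps that produce a character


def session_features(
    taps: List[Tuple[float, str]], last_emotion: str
) -> Dict[str, Union[float, int, str]]:
    """Compute typing features for one session of time-ordered taps."""
    if len(taps) < 2:
        raise ValueError("at least two taps are needed to compute an ITD")

    times = [ts for ts, _ in taps]
    kinds = [kind for _, kind in taps]

    # Inter-tap durations: elapsed time between consecutive key presses.
    itds = [later - earlier for earlier, later in zip(times, times[1:])]

    return {
        "msi": mean(itds),                                   # Mean Session ITD
        "erase_count": sum(k in ERASE_KEYS for k in kinds),  # typing mistakes
        "special_fraction": kinds.count("special") / len(kinds),
        "duration": times[-1] - times[0],
        "text_length": sum(k in CHARACTER_KEYS for k in kinds),
        "last_emotion": last_emotion,                        # previous label
    }


example = session_features(
    [(0.0, "alnum"), (0.5, "alnum"), (1.5, "special"), (2.0, "backspace")],
    last_emotion="relaxed",
)
```

Note that only timestamps and key categories enter the computation, which is consistent with the privacy constraint that typed characters are never stored.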

Emotion Assessment Model

Tree-based machine learning approaches have been accurate in the context of emotion assessment in the past [201, 232]. We design a Random Forest (RF) based personalized multi-state emotion assessment model using the features described in Table 10.5. As typing patterns vary across individuals, the derived features also vary; hence, we construct a personalized model. We implement these models in Weka [233], building 100 Random Forest decision trees with the maximum tree depth set to ‘unlimited’ (i.e., the trees are constructed without pruning). We then derive the performance of the RF models by computing the mean and variability of the accuracy for the 100 RF-based models.

Experience Sampling Method Design

The ESM used in TapSense is a two-phase ESM [234], summarized in Fig. 10.9. Phase 1 balances the probing rate and the timeliness of self-report collection, and Phase 2 tries to probe at opportune moments, when the user’s attention is available. In Phase 1, we combine policy-based schedules to balance probing rate and timeliness, and we learn the inopportune moment assessment model. In Phase 2, we make the inopportune moment assessment model operational. We discuss both phases in detail now.

Phase 1: Balancing ESM Probing Frequency and Timeliness

Collecting ESM emotion self-reports at the end of every typing session would help collect the labels close to the event, but it would generate too many probes and burden the user. To trade off these two conflicting requirements, we first assess the quality of the session itself, i.e., we make sure that a sufficient amount of typing is done in a typing session for it to be considered. We issue the ESM probe only if (a) the user has performed a sufficient amount of typing, i.e., a minimum of L = 80 characters in a typing session, and (b) a minimum time interval, i.e., W = 30 minutes, has elapsed since the last ESM probe. To ensure the labels are collected close to the typing session, we use the polling interval parameter (T = 15 seconds) to check if the user has performed a sufficient amount of typing within a session. We describe the selection of threshold values based on initial field trials in Appendix 1. We name this ESM schedule the Low Interference High Fidelity (LIHF) ESM schedule (Fig. 10.9 (Phase 1)).
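The two LIHF conditions can be expressed as a small decision rule. The following is a minimal sketch under stated assumptions: the class name `LihfScheduler` and the method `poll` are hypothetical, timestamps are in seconds, and `poll` is assumed to be called every T = 15 seconds by a polling loop that is not shown. Only the thresholds L, W, and T come from the text.

```python
from dataclasses import dataclass
from typing import Optional

MIN_CHARS = 80           # L: minimum characters typed in a session
MIN_GAP_S = 30 * 60      # W: minimum time since the last probe, in seconds
POLL_INTERVAL_S = 15     # T: how often the (assumed) polling loop runs


@dataclass
class LihfScheduler:
    last_probe_ts: Optional[float] = None  # time of the last issued probe

    def poll(self, now: float, chars_in_session: int) -> bool:
        """Return True if an ESM probe should be issued at time `now`."""
        # Condition (a): enough typing in the session.
        if chars_in_session < MIN_CHARS:
            return False
        # Condition (b): enough time since the previous probe.
        if (self.last_probe_ts is not None
                and now - self.last_probe_ts < MIN_GAP_S):
            return False
        self.last_probe_ts = now
        return True


scheduler = LihfScheduler()
decisions = [
    scheduler.poll(now=0, chars_in_session=79),       # too little typing
    scheduler.poll(now=15, chars_in_session=80),      # first probe issued
    scheduler.poll(now=30, chars_in_session=200),     # within W of last probe
    scheduler.poll(now=1814, chars_in_session=100),   # still within W
    scheduler.poll(now=1815, chars_in_session=100),   # W has elapsed
]
```

Because a probe is suppressed when the previous one is less than W old, two sessions that end close together yield only one probe.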

Phase 2: Inopportune Moment Assessment Model

As we collect self-reports, we obtain both “No Responses” and valid emotion responses. We leverage these labels to build the inopportune moment assessment model (Table 10.6).

We use the typing session duration and the typing length in a session as features, since long typing sessions may indicate high user engagement and therefore may not be ideal moments for triggering a probe. In addition, during the use of some types of applications, such as media or games, users should not be interrupted for probing. We therefore also include the application type as a feature. We categorize the applications into one of 7 categories: Browsing, Email, Media, Instant Messaging (IM), Online Social Network (OSN), SMS, and ‘Misc,’ following the application’s description in the Google Play Store. Moreover, we use the label of the last ESM probe response as a feature. It helps determine whether the user remains occupied in the current session if he/she marked the previous session with “No Response.” However, once the model is operational and deployed, we use the predicted value of the inopportune moment for the last session as the current session’s feature value. Table 10.6 summarizes the features used to implement the model. We construct a Random Forest-based prediction model to assess the inopportune moments for all the users. The model is augmented with the LIHF schedule to assess and eliminate inopportune probes (Fig. 10.9 [Phase 2]).
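The feature vector for the inopportune moment assessment model can be sketched as below. The seven categories are those named in the text; the package-to-category table, the fallback to ‘Misc’ for unknown packages, and the names `AppCategory`, `PACKAGE_CATEGORY`, and `inopportune_features` are assumptions for illustration. In the study, categories were assigned from the Google Play Store descriptions.

```python
from enum import Enum
from typing import Dict, Union


class AppCategory(Enum):
    BROWSING = "Browsing"
    EMAIL = "Email"
    MEDIA = "Media"
    IM = "Instant Messaging"
    OSN = "Online Social Network"
    SMS = "SMS"
    MISC = "Misc"


# Hypothetical lookup table; real assignments would be curated by hand.
PACKAGE_CATEGORY: Dict[str, AppCategory] = {
    "com.whatsapp": AppCategory.IM,
    "example.browser": AppCategory.BROWSING,   # placeholder package name
    "example.mail": AppCategory.EMAIL,         # placeholder package name
}


def inopportune_features(
    duration_s: float,
    text_length: int,
    package: str,
    last_was_no_response: bool,
) -> Dict[str, Union[float, int, str, bool]]:
    """Assemble the features for one typing session."""
    # Assumption: applications missing from the table fall back to 'Misc'.
    category = PACKAGE_CATEGORY.get(package, AppCategory.MISC)
    return {
        "session_duration": duration_s,
        "typing_length": text_length,
        "app_category": category.value,
        "last_probe_no_response": last_was_no_response,
    }


features = inopportune_features(120.0, 300, "com.whatsapp", True)
```

Once the model is deployed, the last argument would carry the model’s own prediction for the previous session instead of the recorded response, as described above.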

TapSense: Field Study and Data Analysis

In this section, we discuss the TapSense field study and the dataset collected from the study.

Study Participants

We recruited 28 university students (22 males, 6 females, aged 24–35 years) to evaluate TapSense. We installed the application on their smartphones and instructed them to use it for 3 weeks. Three participants left the study midway, and three others recorded fewer than 40 labels. We discarded these 6 users and retained the data from the remaining 22 participants (18 males, 4 females). The ethics committee approved the study under the approval order IIT/SRIC/SAO/2017.

Instruction and Study Procedure

During the field study, we executed only Phase 1, in which we implemented the LIHF schedule for self-report collection. We instructed participants to select the TapSense keyboard as the default keyboard. We informed the participants that when they switch from an application after completing a typing activity, they may receive a survey questionnaire as a pop-up to record their emotions. We also advised the participants not to dismiss the pop-up if they were occupied; instead, they were asked to record “No Response” if they did not want to record an emotion at that moment.

Collected Dataset

We collected 4609 typing sessions during the study period, which constitute close to 200 hours of typing labeled with an emotional state across all participants (N = 22). Of these sessions, 642 are “No Response” sessions, which is nearly 14% of all recorded sessions. Notably, the actual number of ESM triggers is lower than the number of typing sessions because, as per the LIHF policy, if two sessions are close together (as defined by W in Fig. 10.9), only one ESM is triggered to cover both sessions. We summarize the final dataset in Table 10.7.

EMA Self-Report Analysis

The users reported two types of responses: (a) one of the four valid emotions or (b) “No Response.” While the valid emotion labels are used to construct the emotion assessment model, the “No Response” labels are important for designing the inopportune moment assessment model for the ESM.

Emotion Labels Analysis

We show the distribution of the different emotion states for every user in Fig. 10.10. We observed that ‘relaxed’ is the dominant emotional state for most of the users. Overall, 14%, 9%, 30%, and 47% of the sessions are tagged with the happy, sad, stressed, and relaxed emotion states, respectively.

No Response Analysis

We show the user-wise distribution of “No Response” sessions in Fig. 10.11a. Although the fraction of “No Response” labels is relatively low for most users, it is more than 40% for a few users. We show the application-wise distribution of “No Response” sessions in Fig. 10.11b; the majority of the “No Response” labels are associated with Instant Messaging (IM) applications like WhatsApp. We also compare the distribution of total “No Response” and total valid emotion labels across weekdays, weekends, working hours (9 am-9 pm), and non-working hours in Fig. 10.11c. We infer the working hour based on the timestamp of the ESM response. For each of these periods, we compute the percentage of all “No Response” sessions and the percentage of all other sessions recorded in it. However, in our dataset, we do not observe any major differences among these distributions. We also explore the time-wise distribution of “No Response” sessions in Fig. 10.11d, which indicates that only a small number of “No Response” sessions were recorded late at night, from 3 am onwards. This can be attributed to the overall lower engagement late at night.

TapSense Evaluation

In this section, first, we discuss the experiment setup. Then we evaluate the emotion classification performance and the ESM performance. Finally, we discuss the limitations of the study.

Experimental Setup