Abstract

If the microphysical domain is deterministic, this would seem to leave God with only two ways of influencing events: setting initial conditions or law-breaking intervention. Arthur Peacocke and Philip Clayton argue there is a third possibility, if there is strong emergence. We will examine four candidates for emergence: of intentionality from computational animal behavior, of sentience from biology, of biology from chemistry, and of chemistry from finite quantum mechanics. In all four cases, a kind of finite-to-infinite transition in modeling is required, and in each case a kind of randomness is involved, potentially opening up a third avenue for divine action.

1 Modes of Divine Action

We suppose that God intends particular events and outcomes in the history of the world: God’s interests are not limited to general facts or patterns. Nonetheless, it seems clear that God does value the preservation of regular patterns—if He had no such interest, science would be impossible. As many philosophers and theologians (e.g., Thomas Aquinas, C. S. Lewis) have pointed out, the valuing of regular patterns does not preclude the possibility of miracles, in the sense of pattern-breaking interventions. Lewis argued in Miracles: A Preliminary Study (Lewis 1947) that in some cases it is the breaking of the pattern that is the central point of divine action.

If miracles involved the “violation of natural law,” as David Hume argued, that might count as a powerful objection to them. However, it is easy to agree with Thomas Aquinas that no such violation of the laws of nature is required, since it is built into the very nature of every creature to respond concordantly with every divine intention, whether general or particular (Summa Contra Gentiles 3.100).

Nonetheless, even if miracles are a real option, it makes sense to explore non-miraculous possibilities for particular divine interventions. Since God obviously values the uniformity of microphysical patterns, we can expect that He would act wherever possible in ways that preserve that uniformity. One alternative is the front-loading of His specific intentions into the universe’s initial conditions. This is a real possibility also, but it does face certain potential difficulties. First, a thoroughly deterministic world would rule out creaturely free will or autonomy (unless we assume compatibilism). If we preserve free will and incompatibilism by allowing rational creatures to interfere with the deterministic pattern of the physical world, then we again face a world in which the beautiful microphysical patterns are often spoiled. Second, any dramatic event produced by such fine-tuning of initial conditions (like the simulation of an audible voice from thin air) would involve such dramatic departure from average, statistically expected processes as to constitute a disruption of thermodynamic and other macroscopic regularities.

Consequently, there is good reason to explore the possibility of a third option. Philip Clayton (2006) and Arthur Peacocke (2006) have argued that the phenomenon of emergence provides such an additional option for divine action. What is emergence, and how might it be relevant to the possibilities of divine action?

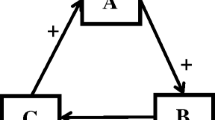

Our three-way distinction divides divine actions into those (i) that break the laws of physics, (ii) that use the laws of physics (by setting initial conditions), and (iii) that transcend the laws of physics (through emergence). This distinction should not be confused with a more traditional distinction between different definitions of “miracle.” Thomas Aquinas defined a miracle as a direct divine action that exceeds the causal power of every created agent (Summa Theologiae I, Q100, a4). Peter van Inwagen (1988) has defined a miracle as God’s acting indirectly by altering in an ad hoc, lawless way the fundamental causal powers of some created thing. A third possibility would be to define a miracle as God’s building (in an ad hoc, lawless way) a special or extraordinary event into the causal powers of particular things from the very beginning (e.g., giving certain fundamental particles at the Big Bang the power to sustain the weight of Jesus when they form some water in the Sea of Galilee). Our distinction is largely independent of these categories. Miracles in any of these three senses could be cases either of breaking or of transcending the laws of microphysics. God’s using the laws of physics (our second category) would be non-miraculous (by all three definitions).

2 The Metaphysics of Emergence

“Emergence” is a term that dates back to Samuel Alexander (1920) and that was used to label the group of thinkers called the British Emergentists (McLaughlin 1992), which included, especially, C. D. Broad (1925). [Footnote 1] The germ of the idea can be found in J. S. Mill (1872 [1843], Book III, Chapter 6, section 1). The term is currently used by both philosophers and scientists in a variety of meanings, many of them mutually exclusive. The notion of emergence is supposed to indicate both a measure of dependency on the microphysical (the higher level emerges “from” the microphysical) and a measure of independence (the higher “emerges” from the microphysical). The confusion enters in trying to make sense of how to combine these two elements without contradiction.

Some philosophers and scientists speak of a merely epistemological, computational, or conceptual emergence of higher domains (like chemistry, biology, and psychology) from microphysics, where this means simply that we are incapable of reconstructing or predicting the higher from the lower, due to limitations in our abilities to observe, measure, and (especially) compute higher-level facts from lower-level ones. Such epistemic or anthropocentric emergence is real but irrelevant to our concerns in this paper. What we need is ontological emergence, implying a measure of real independence and autonomy of the “higher” levels from microphysical facts.

The most common approach to making sense of ontological emergence is to suppose that higher-level facts supervene with nomological but not with metaphysical necessity upon the lower-level facts. The modern notion of supervenience was introduced by G. E. Moore (1922) and R. M. Hare (1952) to describe the relationship between evaluative and descriptive or “natural” facts: the evaluative facts supervene on the natural facts, in the sense that, once all of the natural facts are given, the evaluative facts follow with metaphysical necessity. In this version of supervenience, there are no two metaphysically possible worlds with the same natural facts but different evaluative facts.

It is possible, however, to have a weaker notion of supervenience: one in which there may be metaphysically possible worlds that agree in the base facts but disagree in the supervening facts, but there can be no pairs of “nomologically” possible worlds that do so. In other words, we have to suppose that there exist “laws of emergence” of some sort, which are metaphysically contingent but which nonetheless impose some kind of regular dependency of the higher levels on the lower.

However, this form of ontological emergence is still of no help to us in the present context, since higher-level facts are still tied rigorously and inflexibly to the lower-level laws or patterns, the breaking of which would constitute miraculous intervention. Consequently, ontological emergence of this kind introduces no third option for divine action.

In the last thirty years, a new form of ontological emergence has appeared: a causal notion that dispenses entirely with the constraint of supervenience. Timothy O’Connor (O’Connor 1994; O’Connor and Wong 2005) and Paul Humphreys (1997) are the leading figures in this movement. In this model, the higher-level facts are causally dependent on the lower-level facts for their initial appearance in nature, but they can subsequently evolve with independent causal power. If the higher-level causal powers are indeterministic in character, then the domain of higher-level facts can evolve into states that violate supervenience (in both senses).

This sort of causal emergence might seem to provide a third option for divine causation, if we can assume that God can directly influence the exercise of the causal powers at the higher level or directly add or subtract causal powers at the higher level. However, on reflection, this mode of divine causation is once again easily assimilated to the category of the miraculous. Nothing in the O’Connor-Humphreys model rules out the possibility that causation at the higher levels is deterministic, in which case God would once again have to disrupt regular patterns in order to intervene. It is the indeterminism, if there is any, and not the emergence, that is doing the real work in making space for divine action.

We can, however, modify the causal model of emergence slightly in order to secure a genuinely new route for divine action. We have to focus on the causal laws by which the lower-level facts determine the higher-level facts. We will argue in Sects. 14.3, 14.4, 14.5, and 14.6 that there is good reason to suppose that the causal joints between the lower and higher levels are genuinely random and un-patterned. If so, God would be free to fine-tune this nexus in such a way as to produce particular events at will without disrupting any regular or general patterns.

What do we mean by random in this context? We propose using recent mathematical definitions of product or algorithmic randomness, and then we apply these definitions to the causal “laws” or constraints by which the lower-level facts cause and sustain higher-level facts. That is, we will seek to define what it is for a causal law to be random. A random causal law is one that does not impose any general or regular pattern on the causal nexuses that it underwrites. Consequently, God is free to jury-rig a random causal law in such a way as to produce specific and particular events in history, without sacrificing any regularity of pattern.

Random sequences of events are much more common than non-random ones. The set of random sequences has measure one in the space of possible sequences. A measure one randomness property corresponds to the absence of a measure zero property (a property had by only the members of a special or unusual subclass of sequences). Martin-Löf (1966) proposed that we could define a random sequence as an infinite sequence that cannot be effectively determined to violate any measure one randomness property. By effectively determined, Martin-Löf meant determined by a computationally effective (recursive) procedure. So, a random sequence is one that cannot be effectively proved to belong to any such special subclass (Dasgupta 2011).

Random sequences are highly incompressible. The only effective description we can give of such a sequence is simply to list the members of the sequence one by one.
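The contrast between compressible and incompressible sequences can be illustrated with a rough, finite sketch. It compares how far a general-purpose compressor shrinks a patterned byte sequence and a patternless-looking one. Several assumptions should be kept in view: Martin-Löf randomness is defined for infinite sequences and cannot be decided by any program; zlib gives only a crude upper bound on description length; and the seeded generator used below is itself an algorithm, so its output is not random in Martin-Löf's sense. The function name and sequence length are illustrative choices.

```python
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Return compressed size divided by original size (lower means more compressible)."""
    return len(zlib.compress(data, 9)) / len(data)


N = 100_000

# A patterned sequence: a short block repeated, so a brief rule describes all of it.
patterned = bytes(i % 7 for i in range(N))

# A stand-in for a patternless sequence. ASSUMPTION: a seeded pseudo-random
# generator is used only to make the sketch reproducible; its output merely
# looks patternless to zlib and is not Martin-Löf random.
rng = random.Random(0)
pseudo_random = bytes(rng.getrandbits(8) for _ in range(N))

patterned_ratio = compression_ratio(patterned)
random_ratio = compression_ratio(pseudo_random)

print(f"patterned:     {patterned_ratio:.4f}")
print(f"pseudo-random: {random_ratio:.4f}")
```

The patterned sequence shrinks to a small fraction of its length, while the second sequence does not shrink at all in practice: the compressor finds no shorter description than the listing itself.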

We can now state that a causal law (such as the law by which lower-level states cause and sustain higher-level ones) is random if and only if there is an infinite sequence of ordered pairs of states (the first belonging to the lower level and the second to the higher level) such that each pair instantiates the law and such that the sequence as a whole is Martin-Löf random. A pair of states instantiates a causal law just in case it represents a causal transition that conforms to the law, and neither state includes any features that are causally irrelevant to the transition. For example, suppose that we assign numbers to possible states of a system by means of an effective code—something like Gödel numbers for the description of the state in an appropriate scientific language. A causal law is random if there is an infinite random sequence of pairs of numbers (one for the lower-level states and one for the higher-level states) that instantiate the law.
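The coding step can be made concrete. One standard effective code for ordered pairs is the Cantor pairing function, which maps each pair of natural numbers to a single natural number and back again. The sketch below assumes, purely for illustration, that lower-level and higher-level states have already been assigned natural-number codes; the names `pair` and `unpair` and the sample codes are hypothetical.

```python
import math


def pair(lower: int, higher: int) -> int:
    """Cantor pairing: encode an ordered pair of naturals as one natural number."""
    s = lower + higher
    return s * (s + 1) // 2 + higher


def unpair(code: int) -> tuple[int, int]:
    """Invert the Cantor pairing using exact integer arithmetic."""
    w = (math.isqrt(8 * code + 1) - 1) // 2  # index of the diagonal containing code
    higher = code - w * (w + 1) // 2
    return w - higher, higher


# Hypothetical codes for three (lower-level, higher-level) transitions.
transitions = [(3, 5), (0, 0), (12, 7)]
codes = [pair(lo, hi) for lo, hi in transitions]
decoded = [unpair(c) for c in codes]

print(codes)
print(decoded)
```

With such a code in hand, a sequence of lower-to-higher transitions becomes a single sequence of natural numbers, and the question whether the law is random becomes a question about that one sequence.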

Non-random causal laws reflect real regularities or patterns in nature. Newton’s laws, Einstein’s laws of general relativity, and the dynamics of quantum mechanics are all highly non-random. Sequences of the kind mentioned in the last paragraph that conform to these laws would be highly compressible, if we let the ordered pairs represent prior and posterior conditions of an isolated system. Given the first number in any ordered pair, we could use the general equations of force and motion to deduce the second member. In the case of random laws, this would be impossible. The correct result in each case would be entirely ad hoc, not computable from any more general law.

If the causal laws by which lower-level facts cause higher-level ones are random, then God would have been free to jury-rig these laws in order to produce very specific outcomes in the history of the world without disrupting any regularity or pattern, since the laws of emergent causation are in any case pattern-free, whether jury-rigged in this way or not. We can suppose that the random laws of emergent causation are also highly non-local, that is, the emergent higher-level state depends on the totality of lower-level states at the time in question throughout the universe (or throughout the backward time-cone of the higher-level state, if we take relativity into account). This would give God maximum flexibility in adjusting the laws of emergent causation to produce in particular cases the precise higher-level fact that He intends—so long as exactly the same lower-level condition (at the cosmic scale) never recurs, the adjustment of the random law in each particular case would have no implications for any past or future case of emergent causation. God could have arranged “in advance” (i.e., from eternity) for a vast menu of possible interventions, in anticipation of the variety of future contingent events. Alternatively, God could intervene directly or indirectly at the emergent level without violating any non-random pattern, since the laws of emergence are in any case random.

In order to give God a truly free hand, one more condition needs to be added. If, as on the O’Connor model of causal emergence, we allow for horizontal causation at the higher level (i.e., the direct causation of some later higher-order facts by other, earlier ones), then we will have to stipulate that at least some of the horizontal higher-order causal laws at each level must be themselves random (and perhaps also indeterministic). Otherwise, there would be exhaustive and deterministic patterns at the higher level that would exclude non-miraculous divine action.

There is one more difficulty to consider: the threat of epiphenomenalism. A domain of emergent facts is epiphenomenal if its members lack the capacity to influence lower-level facts. Where the emergent facts are epiphenomenal, all causation goes in one direction: from the lower level to the higher, and not vice versa. If the emergent levels are epiphenomenal, then jury-rigging the random laws of causal emergence gives God some real control over the higher levels of facts, but the influence would remain very subtle, non-public, and probably ephemeral and modest, since the influence could never precipitate down to influence the flow of physical events without disrupting the regular and deterministic patterns at that lowest level. We will suggest a way around this problem in Sect. 14.7, exploiting certain facts about emergence in quantum mechanics.

To summarize, we can define a precise model of emergence, causally random emergence, that would provide God with a third option for action in particular cases. The world exhibits CR (causally random) emergence if and only if there are two disjoint classes of possible facts, the “lower-level facts” L and the “higher-level facts” H, such that:

1) Both sets of facts are fully real—they are not merely useful fictions.

2) Neither set supervenes on the other with metaphysical necessity.

3) Whenever a fact f from H is realized at time t, it is either caused by some plurality of facts from L (if there were no other H-facts in f’s immediate backward time-cone) or caused by some other fact from H and sustained at time t by a plurality of facts from L.

4) The lower-to-higher (bottom-up) emergent causal laws (the vertical laws of emergence) that underwrite condition (3) are Martin-Löf random (in the sense described earlier).

5) If there are any horizontal higher-level causal laws (laws connecting higher-level facts at one time to later higher-level facts), these are also random.

Is there any reason to think that causally random emergence actually occurs? In the following four sections, we will provide four cases that suggest that it does.

3 The Emergence of Meaning, Intentionality, and Mathematical Knowledge

There is good reason to think that the behavior of human organisms (considered biologically) is finite in complexity, whether considered individually or collectively. That is, the total set of our behavioral dispositions (including our linguistic dispositions) is finite. There are only finitely many internal states that our brains can be in, only finitely many perceptually distinguishable situations a human community could find itself in, and only a finite number of muscular contractions that could result from internal decisions. Thus, human society at any point in time could be modeled as a finite automaton.

It is well known that no finite set of linguistic dispositions can fix the semantic meaning of our languages, so long as our language includes the basic notions needed for arithmetic: natural number, 0, successor, +, ×. Thanks to Gödel’s incompleteness theorems, we know that no effectively computable axiom system can capture all of the truths of arithmetic. And, thanks to Gödel’s completeness theorem, we know that any consistent system that is incomplete can be given non-standard models, models in which “number,” “successor,” or other terms are given disparate mathematical interpretations. Thus, our behavioral dispositions are not sufficient to provide unique interpretations to our arithmetical notions, as was observed by Ludwig Wittgenstein (1953) and developed by Saul Kripke (1982).

Since the sub-intentional realm, if we include within it all biological, chemical, and physical facts, is not causally connected with the Platonic realm of natural numbers, there is nothing in that realm that is sufficient to ground determinate mathematical meaning. Nonetheless, it seems obvious that we know what we mean by terms like “number” and “successor.” All of mathematics, including mathematical logic and formal semantics, presupposes that we are able to think determinate mathematical thoughts. Consequently, there must exist a higher-order domain of meaning and intentionality, a domain that cannot supervene with metaphysical necessity upon the sub-intentional domain. Moreover, the causal laws by which the sub-intentional domain causes and sustains this domain of mathematical intentionality must be Martin-Löf random, since if those laws were computable, the sub-intentional domain would be, contrary to our demonstration, sufficient to fix determinate meanings. Thus, the realm of mathematical intentionality must be CR-emergent, relative to the realm of sub-intentional facts. [Footnote 2]

There is, in addition, another argument based on Gödel’s theorems, an argument introduced by J. R. Lucas (1961) and defended by Roger Penrose (1994). Gödel’s incompleteness results show that any consistent, computable axiomatization of number theory is incomplete. In particular, no such consistent, computable axiomatization can be capable of proving its own consistency or soundness. Suppose, for reductio ad absurdum, that human mathematical cognition is computational, that is, that it can be accurately modeled by a Turing machine or, equivalently, by a system of recursive functions. If so, human mathematical insight would be recursively (effectively) axiomatizable by some formal system S. Now, suppose further that if such a system were to exist, we could in principle recognize that S axiomatizes at least some of our mathematical insight. Suppose, finally, that our mathematical insight represents real knowledge and that we can know that it does so. From these assumptions and Gödel’s theorem, we can prove a contradiction. If we can recognize that S axiomatizes (some of) our mathematical insight and we know that that insight constitutes knowledge, then we can also recognize that S is sound (i.e., that all of the theorems of S are true). This implies that S is consistent (since any set of truths is consistent). Hence, we can prove that S is consistent. But if, as we supposed, S axiomatizes all of our mathematical insight, then S itself must prove that S is consistent. By Gödel’s second incompleteness theorem, this is possible only if S is inconsistent. Contradiction.
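The structure of this reductio can be laid out schematically. The notation is an assumption of this sketch and does not come from Lucas or Penrose: $\mathrm{Thm}(S)$ stands for the set of theorems of $S$, $M$ for the totality of human mathematical insight, and $\mathrm{Con}(S)$ for the arithmetical sentence expressing the consistency of $S$. The sketch also assumes that $S$ is strong enough for Gödel's second incompleteness theorem to apply.

```latex
\begin{align*}
&\text{(A1)}\quad M = \mathrm{Thm}(S) \text{ for some recursively axiomatized } S
  && \text{computability assumption}\\
&\text{(A2)}\quad \text{we can recognize that } \mathrm{Thm}(S) \subseteq M
  && \text{recognizability assumption}\\
&\text{(A3)}\quad \text{we can know that every member of } M \text{ is true}
  && \text{knowledge assumption}\\
&\text{(1)}\quad \text{we can know that } S \text{ is sound}
  && \text{from (A2), (A3)}\\
&\text{(2)}\quad \text{we can know } \mathrm{Con}(S), \text{ so } \mathrm{Con}(S) \in M
  && \text{from (1)}\\
&\text{(3)}\quad S \vdash \mathrm{Con}(S)
  && \text{from (2), (A1)}\\
&\text{(4)}\quad S \vdash \mathrm{Con}(S) \;\Rightarrow\; S \text{ is inconsistent}
  && \text{G\"odel's second incompleteness theorem}\\
&\text{(5)}\quad S \text{ is inconsistent, contradicting (1)}
  && \text{from (3), (4)}
\end{align*}
```

Since (A1), (A2), and (A3) jointly yield a contradiction, at least one of them must be given up.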

There are only three possible ways to avoid this contradiction. We have to suppose that at least one of the following theses is true:

1. Human mathematical intuition is not in fact computable (and so cannot be modeled by a recursively axiomatizable system).

2. We cannot know that we have any mathematical knowledge.

3. We cannot know that the system that actually and exhaustively axiomatizes our mathematical intuition is a representation of any of our mathematical knowledge.

Thesis 1 supports the CR emergence of human cognition from the computational functioning of the nervous system. Thesis 2 seems utterly implausible. So, the only real alternative is thesis 3. However, it is hard to believe that if our mathematical insight is generated by an algorithm, it is generated by one that is utterly alien and unrecognizable—one that does not correspond in any intelligible way to mathematical truths that we can recognize.

Mathematical intentionality is plausibly connected with the human capacity for free will. There is a long Aristotelian tradition, which includes ibn Sīna and Thomas Aquinas, of associating free will with the rational soul, and the rational soul with our capacity to grasp universals, including the universals of mathematics. From a reductive point of view, human behavior can be described as random, which on the level of intentionality can be redescribed as “free.” [Footnote 3]

4 The Emergence of Phenomenal Qualia

In an important but often overlooked essay, Robert M. Adams (1987) has argued that the phenomenal qualities (called “qualia” in recent philosophy of mind literature) of sentient experience are emergent relative to the non-phenomenological facts of physics and chemistry (and biology, for that matter). As it turns out, the sort of emergence that Adams adumbrates fits our definition of CR emergence precisely.

Adams points out that we believe both that there are certain ways that things appear to us in vision, smell, taste, hearing, and touch, ways that correspond to our perception of colors, flavors, sounds, and so on, and that these ways of appearing are correlated with and caused by certain biophysical facts, such as brain states. Red things appear in vision in a certain way to us, a way that differs from the way yellow things appear in vision, and from the way roses appear in smell. Moreover, red things tend to look the same way over time, and they do so because our experiences of red are somehow caused by the same sorts of physical and biophysical conditions.

However, Adams points out, when we try to explain these facts, we find that any causal laws that we can imagine will turn out to be random laws, in the sense defined in Sect. 14.2 (i.e., algorithmically random). According to Adams, a more general, non-random causal law would have to take something like the following mathematical form L (Adams 1987, 255):

$$(L)\qquad \forall p\,\forall q\,\bigl(F(p) = S(q) \;\rightarrow\; p \text{ causes } q\bigr)$$

Here “p” would be a variable ranging over a class of physical (non-phenomenological) states, and “q” a variable ranging over the entire class of phenomenological facts. “F” would have to be a function that, when applied to an arbitrary physical fact, yields in an effectively computable way some number or other mathematical value (vector, matrix, or whatever). Similarly, “S” would have to be a computable function that, when applied to an arbitrary phenomenological fact, yields a mathematical value of the same kind. When the two values match, for some p and q, the general law would enable us to deduce the particular causal law that p-situations cause q-situations.

However, as Adams convincingly argues, it is simply impossible to believe that there is any function like S.

There is no plausible, non-ad hoc way of associating phenomenal qualia in general with a range of mathematical values, independently of their empirically discovered correlations with physical states. The independence requirement is crucial here. … [In its absence, the “explanation”] would merely restate the correlation of phenomenal and physical states. (Adams 1987, 256–7)

In other words, a function like S would be possible only if we used the specific correlation facts to associate the phenomenal facts with such a mathematical value, but in that case, S itself would be algorithmically random, not effectively computable.

So, we have reason to suppose that phenomenal qualia are CR-emergent, relative to the class of biophysical, non-phenomenological facts. If this is right, we face an interesting question: what is the relationship between the domain of meaning and cognition, on the one hand, and sensory phenomenology, on the other? It seems pretty clear that sensory phenomenology could not help in fixing the meanings of our mathematical sentences or in guiding our mathematical intuitions. It’s also hard to see how facts about meaning or cognition could determine the phenomenal qualia associated with biophysical conditions. It seems that we have here two distinct domains of CR-emergent fact: the domain of thought and intentionality (especially mathematical thought) and the domain of phenomenology and sensation.

We could think of the sentient soul as the causally emergent product of the interactions of millions of neurons in the brain of an organism. As species evolved to produce ever more complex organisms, with higher numbers of neurons and higher connectivity between them, the sentient soul appeared as an entity. This entity, the soul, does not exist in a single cell. Single cells or small groups of cells are “alive” but do not contain a soul (as the seat of sentience). If the brain is dead through the loss of a sufficient number of cells and their connections, the organism is a vegetable, that is, without such a soul: in this case the organism is merely alive. Animals have souls, but plants do not, because they lack the connectivity between cells needed to reach the threshold for the creation of the sentient soul.

It can be observed that as the number of cells in an organism increases, its complexity increases along with the cellular division of labor (Herculano-Houzel 2009). Most notably, in higher forms the brain is the one organ whose number of cells increases logarithmically compared to other organs relative to body size. This increase in the number of brain cells can be seen in mammals and in primates. The increase is not only in the number of brain cells but, more importantly, in the connections between these neurons and in how they are organized, that is, in the architecture or neuronal wiring (Hoffman 2014). Our hypothesis is that the entity that we call a soul emerges as a result of the complex numbers, interactions, and architecture of the brain cells. When an organism dies, the cells are still alive by definition, since they are still metabolizing, dividing, and interacting with the environment, but no one would claim that the cell has a soul. The difference between multicellular life and the life of the individual cells is a case of random emergence. Similarly, as the number of brain cells and connections increases within mammals, rational consciousness emerges in humans and their species, as compared to other forms. This too can be lost when a person’s higher brain functions are destroyed, even when the sentient soul persists.

There is a further emergence of certain rational or superrational feelings or attitudes and their manifestation, such as love and altruism. These emerge again as a result of increased connections between cells. All of these emergent features appear gradually in evolutionary history. It is not a matter of all or none, although there may be a go/no-go threshold within the evolutionary tree of species that are now extinct. We hypothesize that there are random mathematical functions that describe this emergence, similar to the use of fractal scaling to describe the brain’s organized variability. An important feature of fractal objects is that they are invariant, in a statistical sense, over a wide range of scales (Hoffman, Evolution of the Human Brain). Such invariance or regularity at one level of description is consistent with the randomness of the complete functional relationship.

5 The Emergence of Life

Teleological language and concepts are ubiquitous and ineliminable in biology. Enzymes are proteins with the natural function of catalyzing certain chemical reactions. Genes are chains of nucleic acid with the function of coding for the production of certain enzymes. A nucleus is a molecular structure with the function of housing and facilitating the function of genes, and so forth. If we suppose that these teleological functions are merely “heuristic,” we have to ask, heuristic for what? To what further discoveries do teleological models lead? Only to still more biological knowledge, that is, to more teleological knowledge. It would be crazy to suppose that all of biology is merely a fiction, useful only as a tool for additional chemical and physical discoveries. In fact, physics and chemistry can do quite well on their own: they stand in no need of biology. Biology exists for its own sake, and biological inquiry never escapes from the teleological domain.

As Georg Toepfer has put it:

teleology is closely connected to the concept of the organism and therefore has its most fundamental role in the very definition of biology as a particular science of natural objects. … The identity conditions of biological systems are given by [teleological] functional analysis, not by chemical or physical descriptions. … This means that, beyond the [teleological] perspective, which consists in specifying the system by fixing the roles of its parts, the organism does not even exist as a definite entity. (Toepfer 2012, 113, 115, 118)

This was recognized by the Neo-Kantians of the early twentieth century:

We even have to define this science [biology] as the science of bodies whose parts combine to a teleological ‘unity.’ This concept of unity is inseparable from the concept of the organism, such that only because of the teleological coherence we call living things ‘organisms.’ Biology would therefore, if it avoided all teleology, cease to be the science of organisms as organisms. (H. Rickert 1929 [1902], 412, cited and translated by Toepfer 2012, 113)

The chemist and philosopher Michael Polanyi (1967, 1968) also recognized the emergence of life from physics and chemistry.

Evolution itself presupposes a strong form of teleology in the very idea of reproduction. No organism ever produces an exact physical duplicate of itself. In the case of sexual reproduction, the children are often not even close physical approximations to either parent at any stage in their development. An organism successfully reproduces itself when it successfully produces another instance of its biological kind. This presupposes a form of teleological realism (Deacon 2003).

The most plausible attempt to remove teleology from biological science is that of functionalism, as developed by F. P. Ramsey (1929), David K. Lewis (1966), and Robert Cummins (1975). In this tradition, biological functions are identified with complex, recursively specified behavioral dispositions. In a recent paper, Alexander Pruss and one of us argued (Koons and Pruss 2017) that such an identification cannot succeed. We made use of a thought experiment that was created by Harry Frankfurt (1969) to refute the idea that freedom of choice can be analyzed in terms of the availability of alternative actions: namely, the thought experiment of the potential manipulator. We are to suppose that we have an organism with certain biological teleo-functions. We introduce into the thought experiment a potential manipulator who (for some reason) wants the organism to follow a certain fixed behavioral script. If the organism were to show signs of being about to deviate from the script, then the manipulator would intervene, altering the organism’s internal constitution and causing it to continue to follow the script. We are to imagine that in fact the organism spontaneously and fortuitously follows the script exactly, and, as a consequence, the manipulator never intervenes.

Frankfurt introduced such a thought experiment to challenge the idea that freedom of the will requires alternative possibilities. Koons and Pruss used it to show that the existence of biological functions is independent of the organism’s functional organization—its system of behavioral dispositions, which links the dispositions to inputs, outputs, and each other. It is obvious that the presence of an inactive, external manipulator cannot deprive the organism of its biological functions. However, the manipulator’s presence is sufficient to deprive the organism of all of its normal behavioral dispositions: under the circumstances, it is impossible for the organism to deviate from the manipulator’s script. If the manipulator’s script says that at time t + 1 the organism is to be in state S, then that is what would happen, no matter what state the organism were in at time t.
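The structure of this thought experiment can be sketched as a toy state machine. What follows is only an illustration under stated assumptions: the three states, the transition table `NATURAL`, and the five-step `SCRIPT` are hypothetical and are not drawn from Koons and Pruss (2017). The sketch shows that the actual history is identical with or without the potential manipulator, while the counterfactual transitions (the behavioral dispositions) differ.

```python
from typing import Dict, List, Tuple

# Hypothetical organism: its spontaneous transitions between three states.
NATURAL: Dict[str, str] = {"rest": "forage", "forage": "eat", "eat": "rest"}

# Hypothetical script the potential manipulator wants followed, step by step.
SCRIPT: List[str] = ["rest", "forage", "eat", "rest", "forage"]


def step_free(state: str) -> str:
    """Next state of the organism when no manipulator is present."""
    return NATURAL[state]


def step_monitored(state: str, t: int) -> Tuple[str, bool]:
    """Next state when a potential manipulator enforces SCRIPT.

    Returns (next_state, intervened). The manipulator acts only if the
    spontaneous transition would deviate from the script.
    """
    spontaneous = NATURAL[state]
    scripted = SCRIPT[t + 1]
    return scripted, spontaneous != scripted


def run_free(start: str) -> List[str]:
    """Actual history of the unmonitored organism."""
    states = [start]
    for _ in range(len(SCRIPT) - 1):
        states.append(step_free(states[-1]))
    return states


def run_monitored(start: str) -> Tuple[List[str], int]:
    """Actual history of the monitored organism and the intervention count."""
    states, interventions = [start], 0
    for t in range(len(SCRIPT) - 1):
        nxt, intervened = step_monitored(states[-1], t)
        states.append(nxt)
        interventions += intervened
    return states, interventions


free_history = run_free("rest")
monitored_history, interventions = run_monitored("rest")

# Counterfactual test: what would follow at t + 1 from each possible state at t = 0?
counterfactual_free = {s: step_free(s) for s in NATURAL}
counterfactual_monitored = {s: step_monitored(s, 0)[0] for s in NATURAL}
```

In this toy case the two histories coincide and the manipulator never intervenes, yet `counterfactual_monitored` maps every state to the same scripted successor while `counterfactual_free` does not. On the argument above, the organism's functions are the same in both runs even though its dispositional profile is not.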

Moreover, biological malfunctioning is surely possible as a result of injury or illness. A functionalist reduction of biological teleology cannot incorporate the effects of every possible injury or illness, since there are no limits to the complexity of the sort of phenomenon that might constitute an injury or illness. Injury can prevent nearly all behavior, so much so as to make the remaining behavioral dispositions (both internal and external) too non-specific to distinguish one teleological function from another. Consider, for example, locked-in syndrome, as depicted in the movie The Diving Bell and the Butterfly. Therefore, the true theory linking teleology with behavioral dispositions must contain postulates that specify the normal connections among states.

Without resorting to realism about teleology, our only account of normalcy would be probabilistic. Thus, a system normally enters state Sm from state Sn as a result of input Im provided it is likely to do this. However, serious injury or illness can make a malfunctioning subsystem rarely or never do what it should, yet without challenging the status of the subsystem as, say, a subsystem for visual processing of shapes. And, again, an inactive but potential Frankfurtian manipulator, whether external or internal, can change what the system is likely to do without actually manipulating the system in any way.

So, we have good reason to think of biological teleology as something both real and non-supervenient on the underlying physics and chemistry. We can, therefore, reasonably adopt the thesis of the causal emergence of biology. Moreover, the possible existence of a wide variety of environments and evolutionary histories for any given biochemical structure, as well as the potentially infinite number and varieties of illness, defect, and injury that prevent any simple deduction of biological purpose from actual functioning, together make it very likely that the laws of causal emergence in this case are algorithmically random.

What is the relationship between the emergence of thought and sensation, on the one hand, and biological teleology, on the other? In this case, we have good grounds for seeing some kind of downward causation at work: causation from mind to biology (see note 4). The content of our mental states, the operations of mathematical cognition, and the phenomenal states associated with neural functioning are all highly relevant to determining the true biological function of the relevant neural processes.

6 The Emergence of Thermodynamics and Chemistry

Finally, we turn to the case of thermodynamics and chemistry, in light of the quantum revolution of the early twentieth century. One of us has recently argued (Koons 2018b, 2019, 2021) that quantum thermodynamics provides some good reason for suspecting that chemistry and thermodynamics are causally emergent from the underlying quantum mechanical physics (whether traditional particle physics or quantum field theory).

We can plausibly derive the dynamical laws of quantum statistical mechanics from the dynamical laws of ordinary QM, but the space of possibilities defined by QSM is not reducible to the space defined by ordinary QM (Ruetsche 2011, 290). Hence, quantum statistical mechanics, and related quantum theories of thermodynamics, solid-state physics, and chemistry, are real and do not supervene (with either metaphysical or nomological necessity) on the quantum-mechanical facts of the constituent particles.

In classical mechanics, in contrast, the space of possible boundary conditions consists in a space each of whose “points” consists in the assignment (with respect to some instant of time) of a specific location, orientation, and velocity to each of a class of micro-particles. The totality of microphysical assignments in classical physics is both complete and universal with respect to the natural world. As long as we could take this for granted, the reduction of macroscopic laws to microscopic laws seemed sufficient to ensure the nomological supervenience of the macroscopic world on the microscopic. However, the quantum revolution has called into question the completeness of the microphysical descriptions, opening up the possibility of causally emergent phenomena at other levels of scale.

In the case of quantum thermodynamic systems, the whole is greater than the sum of its parts, in a very literal sense. Any mere collection of fundamental particles has, in itself, only finitely many degrees of freedom (as measured by the position and momentum of each particle), while thermal systems (as modeled in quantum statistical mechanics) have infinitely many degrees of freedom (Primas 1980, 1983; Sewell 2002). In fact, the models of quantum statistical mechanics are infinite in an even stronger sense: they consist of infinitely many subsystems, represented by a non-separable Hilbert space. This inflation of degrees of freedom would have been extremely implausible in classical statistical mechanics, where we know that there can be, in any actual system, only finitely many degrees of freedom, since the particles (atoms, molecules) survive as discrete, individual entities. In quantum mechanics, individual particles (and finite ensembles of particles, like atoms and molecules) seem to lose their individual identity, merging into a kind of quantum goo or gunk. Hence, there is no absurdity in supposing that the whole has more degrees of freedom (even infinitely more) than are possessed by the individual molecules, treated as an ordinary multitude or heap.

In algebraic quantum thermodynamics, physicists add new operators that commute with each other (forming a non-trivial “center”). These new “observables” are represented by distinct representation spaces, not by vectors in a single Hilbert space, and are thereby exempted from such typical quantal phenomena as superposition and complementarity. The von Neumann-Stone theorem entails that only algebras with infinitely many degrees of freedom (and non-separable spaces) can contain such non-quantal observables (in a non-trivial center). These new observables can then be used to define key thermodynamic properties like temperature, phase of matter (solid, liquid, etc.), and chemical potential. The thermodynamic properties do not supervene with metaphysical necessity on the quantum wavefunction for the world’s fundamental particles and waves, since any model of the latter is separable and finite, lacking the non-quantal observables needed for thermodynamics and chemistry.
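For readers unfamiliar with the terminology, the notion of a "center" used here can be stated compactly. The notation below ($\mathfrak{A}$ for the algebra of observables, $\mathcal{Z}$ for its center) is an assumption of this sketch rather than the authors' own, and the display gives only the standard textbook definition, not a derivation of the emergence claim.

```latex
% Center of an algebra: the elements that commute with every element.
\mathcal{Z}(\mathfrak{A}) \;=\; \{\, Z \in \mathfrak{A} \;:\; ZA = AZ \ \text{for all } A \in \mathfrak{A} \,\}

% The center is trivial when it contains only multiples of the identity:
\mathcal{Z}(\mathfrak{A}) \;=\; \{\, \lambda \mathbf{1} \;:\; \lambda \in \mathbb{C} \,\}

% It is non-trivial when some Z in the center is not a multiple of the identity.
% Such a Z commutes with every observable, so it is compatible with all of them
% and can play the role of a classical ("non-quantal") quantity.
```

On this reading, a quantity such as temperature would be represented by an element of a non-trivial center, which is why it is not subject to complementarity with the other observables.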

Are the causal laws by which thermodynamic states (modeled by infinite algebraic models) emerge from pure quantum states random? Quantum statistical models depend on selecting an appropriate GNS (Gelfand-Naimark-Segal) representation, one based on a particular vector in the Hilbert space (Sewell 2002, 19–27). The discovery of an appropriate GNS representation in each application involves an element of creativity and judgment on the part of the physicist: there is no simple and general recipe or algorithm. Hence, it is at least possible that the emergent causal law is random.

In the case of horizontal causation at the level of thermodynamics, Primas (1990) has shown that, in the most important cases, the dynamics is nonlinear and stochastic. The horizontal causal laws are, therefore, random in the algorithmic sense, as required.

Is there downward causation from biology to thermodynamics and chemistry? Without a doubt, the general direction of biological thinking, from the time of the synthesis of urea by Friedrich Wöhler in 1828, has been to emphasize “upward” causation, explaining biological function in chemical terms. However, the holism of quantum mechanics provides a real avenue for the determination of chemical form by the wider “classical” environment of each molecule, including the biological environment. Molecules can “inherit” or “acquire” classical properties (including stable molecular structure) from their environments, despite the fact that they can be observed in superposed quantal states when isolated. It is only the molecule as “dressed” by interaction with its environment that can spontaneously break the strict symmetry of the Schrödinger equations, and it is only a partially classical environment that can induce the quasi-classical properties of the dressed molecule. In order to produce the superselection rules needed to distinguish stable molecular structures, the environment must have infinitely many degrees of freedom, due to its own thermodynamic emergence (Primas 1980, 102–5; 1983, 157–9). It seems possible that the shape of such thermodynamic emergence could be molded in a top-down fashion by persistent biological structures and processes.

R. F. Hendry, a leading philosopher of chemistry, agrees that a molecule’s acquisition of classical properties from its classical environment, thereby breaking its microscopic symmetry, should count as a form of “downward causation”:

This super-system (molecule plus environment) has the power to break the symmetry of the states of its subsystems without acquiring that power from its subsystems in any obvious way. That looks like downward causation. (Hendry 2010, 215–6)

7 Downward Causation in Modern Quantum Theory

How far down does downward causation go? How far down does it have to go, for the RC-emergence model to provide a viable option for divine action? In order to answer these questions, we must first ask the following: What domain constitutes the lowest level of nature? One plausible answer would be that the lowest domain consists of the interaction of fundamental particles (electrons, quarks, photons, and so on) or of quantum fields. In order to distinguish this lower level from that of thermodynamics and chemistry, we would have to suppose that the correct models for the fundamental interactions would involve only finitely many degrees of freedom, as in standard, finitary models whose dynamics are defined by the Schrödinger equation. Quantum cosmologists contend that we should model the evolution of the entire cosmos by means of a single quantum “wavefunction.”

Such models are strictly deterministic (in fact, the Schrödinger evolution of the quantum wave is much more strictly deterministic than was classical, Newton-Maxwell dynamics). However, they face a serious problem: they define (via Born’s rule) the probability of detecting any particular result of any measurement, but such measurements seem to involve a kind of interruption (a “wave collapse”) in the seamless, deterministic evolution of the wavefunction. The “measurement problem” concerns how to reconcile such apparent collapses with the underlying dynamics, and how to define when and how such collapses occur (if at all).

The Everettian or many-worlds interpretation attempts to do away with the measurement problem by denying that any such collapse ever occurs. Instead, the seamless evolution of the wavefunction according to Schrödinger’s law represents a constantly branching world, one in which all possible results of each measurement are observed on different macroscopic “branches.” Everettians have difficulty explaining the meaning of the probabilities generated by Born’s rule: it seems that every result occurs with probability one, not with a probability corresponding to the square of the amplitude of the wavefunction at a corresponding vector.
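The contrast at issue can be made concrete with a small numerical sketch. The two-outcome measurement and its amplitudes below are hypothetical, chosen only for illustration, and the function name `born_probabilities` is our own. The sketch computes Born-rule probabilities as squared moduli of amplitudes and sets beside them the bare Everettian fact that every outcome with nonzero amplitude occurs on some branch.

```python
from typing import Dict


def born_probabilities(amplitudes: Dict[str, complex]) -> Dict[str, float]:
    """Born's rule: probability of an outcome = |amplitude|^2, normalized."""
    norm_sq = sum(abs(a) ** 2 for a in amplitudes.values())
    if norm_sq == 0:
        raise ValueError("state vector must be nonzero")
    return {outcome: abs(a) ** 2 / norm_sq for outcome, a in amplitudes.items()}


# Hypothetical superposition over two measurement outcomes (assumed values).
amplitudes: Dict[str, complex] = {"up": 0.6, "down": 0.8j}

# Born-rule weights: unequal, fixed by the squared moduli of the amplitudes.
probabilities = born_probabilities(amplitudes)

# Everettian branching: each outcome with nonzero amplitude is realized on
# some branch, so "does it occur?" is answered yes for every such outcome.
occurs_on_some_branch = {outcome: a != 0 for outcome, a in amplitudes.items()}
```

Here `probabilities` assigns roughly 0.36 to one outcome and 0.64 to the other, while `occurs_on_some_branch` is true for both. The interpretive difficulty described above is that of saying what the unequal weights mean if both outcomes are equally realized.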

Alexander Pruss (2018) and one of us (Koons 2018a) have argued that the best way to fix this problem is to take all but one of the Everettian branches to represent mere potentialities (as Heisenberg 1958 had proposed). The one actual branch is actualized by the exercise of causal powers by “substantial forms” at the chemical, biological, and personal levels. The Pruss-Koons model can be called the “traveling forms” interpretation (the world’s forms travel together along the branches of the macroscopic tree structure of the Everettian model). The addition of the parameter of actuality renders the Everettian model consistent with causal emergence: although the whole system of branches supervenes on the microphysical quantum wavefunction, the fact of which branch is uniquely actual does not.

On the traveling forms interpretation, downward causation never reaches the level of the evolving quantum wavefunction, but this is relatively innocuous, since that wavefunction represents only the physical potentialities of the world’s matter: it does not exhaust what is true of the actual state of the world. So long as God can influence the emergent levels, He is free to determine which of the Everettian branches is actualized at each point in time. Hence, the influence of God’s action through causal emergence can be public, significant, and long-lasting.

8 Some Theological Reflections

Many miracles in the Abrahamic tradition might be best thought of as cases of emergent intervention. It is striking that many divine actions can best be thought of as altering only human intentionality or experience. For all three traditions, one of the most important divine actions is that of inspiring prophetic knowledge and proclamation. This can be realized at the purely intentional level, or, in the case of visions and audible voices, at the level of phenomenal qualia. Similar accounts could be given of such miracles as the prolongation of daylight at Jericho (Joshua 10) and for King Hezekiah (2 Kings 20), Moses’ burning bush (Exodus 3), Elisha’s floating ax-head (2 Kings 6), Balaam’s speaking donkey (Numbers 22), and the star of Bethlehem (Matthew 2).

In the Islamic tradition, prophetic inspiration is the most important and central form of miracle. Ibn Sīna (980–1037 C.E.) placed the emphasis on the purely intentional level: God provided Mohammed with knowledge and discernment, and Mohammed’s own mind was responsible for transposing this information into the linguistic or symbolic level (Renard 1994, 6). Other thinkers, such as Mulla Sadra (1572–1641 C.E.), insisted that divine action encompasses the symbolic or imaginative level as well, which would correspond to the emergence of sentience (Rahman 1973, 242). This combination of intellectual and imaginative action was probably also involved in Mohammed’s Night of Power, the Mi’raj, in which he had a vision of the fourth heaven.

Miraculous healings often occur at a bio-functional and teleological level: curing of paralysis, epilepsy, mental illness, blindness, and deafness. Many other healings, such as the elimination of leprosy, might also be purely biological in nature (via, perhaps, the re-tooling of the immune system). Alterations in animal behavior would be placed in this category, as in six of the ten plagues of Egypt in Exodus, and Daniel in the lions’ den (Daniel 6).

At the level of chemistry and thermodynamics, we could place Jesus’ turning water into wine, the manna in the desert of Sinai (assuming that this did not have a natural explanation), the rendering harmless of poison and snakebite (2 Kings 4, Acts 28), and the unburnable napkin associated with the life of Mohammed (Renard 1994, 143). Miraculous rain and its absence might be explained in thermodynamic terms, as might Mohammed’s transformation of Muqawais’ stony ground into fertile soil (Renard 1994, 143).

The miracles that violate physical patterns are the exception rather than the rule: the three men in the fiery furnace (Daniel 3), Jesus’ walking on water, the feeding of the five thousand, the multiplication of the widows’ oil (1 Kings 17, 2 Kings 4), and the bottomless water skin of Mohammed (Renard 1994, 143). We would probably have to include all of the various resurrections: for example, the Shunammite’s son (2 Kings 4), the widow of Zarephath’s son (1 Kings 17), Lazarus (John 11), the widow’s son at Nain (Luke 7), the daughter of Jairus, and Tabitha (Acts 9), since these would have involved more than merely chemical alterations (especially in the case of Lazarus, who had been dead for four days).

Clayton (2006) and Peacocke (2006) argue that emergent intervention supports panentheism, a more naturalistic and immanent conception of God than is compatible with classical theism. This conclusion is based on the premise that God can alter emergent phenomena only by changing the ultimate, cosmic context of local events. However, we have argued that God can obtain these results by jury-rigging random laws of causal emergence. This model is fully compatible with the classical theism of ibn Sīna or Thomas Aquinas, with their timeless and utterly transcendent God.

Notes

1. Although the concept of emergence is a relatively late arrival, philosophers in the Abrahamic tradition have long been influenced by and contributors to an Aristotelian tradition that attributes real natures and causal powers to organisms and other relatively large-scale entities. Philosophers like Avicenna, Maimonides, and Thomas Aquinas exemplify this tradition.

2. Our argument would suggest that machine learning and artificial intelligence are intrinsically limited, because they lack the sort of ontological emergence required for true insight. Artifacts like computers can only emulate or mimic the knowledge of truly rational creatures with emergent natures.

3. Such freedom need not contradict God’s perfect knowledge, because He is outside the dimensions that restrict human life, including the dimension of time. We are constrained by the dimension of time, but He is not, so He can know the future without interference.

4. Such downward causation is consistent with the randomness of the biological domain, so long as it is also governed by random causal laws. What we’re calling downward causation here is a form of what we defined as horizontal causation above: causation of some emergent facts by other emergent facts. In Sect. 14.7, we’ll address the problem of how far “down” such downward causation can go, consistent with our model.

Bibliography

Adams, Robert M. 1987. Flavors, Colors, and God. In The Virtue of Faith and Other Essays in Philosophical Theology, 243–262. New York: Oxford University Press.

Alexander, Samuel. 1920. Space, Time, and Deity. London: Macmillan.

Broad, C.D. 1925. The Mind and Its Place in Nature. 1st ed. London: Routledge & Kegan Paul.

Clayton, Philip. 2006. Emergence from Quantum Physics to Religion: A Critical Appraisal. In The Re-Emergence of Emergence: The Emergentist Hypothesis from Science to Religion, ed. Philip Clayton and Paul Davies, 303–322. Oxford: Oxford University Press.

Cummins, Robert. 1975. Functional Analysis. The Journal of Philosophy 72 (20): 741–765. https://doi.org/10.2307/2024640.

Dasgupta, Abhijit. 2011. Mathematical Foundations of Randomness. In Philosophy of Statistics, Handbook of the Philosophy of Science: Volume 7, ed. Prasanta Bandyopadhyay and Malcolm Forster, 641–670. Amsterdam: Elsevier.

Deacon, Terence W. 2003. The Hierarchic Logic of Emergence: Untangling the Interdependence of Evolution and Self-Organization. In Evolution and Learning: The Baldwin Effect Reconsidered, ed. B.H. Weber and D.J. Depew, 273–308. Cambridge, MA: MIT Press.

Frankfurt, Harry. 1969. Alternate Possibilities and Moral Responsibility. Journal of Philosophy 66 (23): 829–839. https://doi.org/10.2307/202383.

Hare, R.M. 1952. The Language of Morals. Oxford: Oxford University Press.

Heisenberg, Werner. 1958. Physics and Philosophy: The Revolution in Modern Science. London: George Allen and Unwin.

Hendry, Robin Findlay. 2010. Emergence vs. Reduction in Chemistry. In Emergence in Mind, ed. Cynthia MacDonald and Graham MacDonald, 205–221. Oxford: Oxford University Press.

Herculano-Houzel, Suzana. 2009. The Human Brain in Numbers: A Linearly Scaled-Up Primate Brain. Frontiers in Human Neuroscience 3. https://doi.org/10.3389/neuro.09.031.2009.

Hoffman, Michel A. 2014. Evolution of the Human Brain: When Bigger Is Better. Frontiers in Neuroanatomy 8. https://doi.org/10.3389/fnana.2014.00015.

Humphreys, Paul. 1997. How Properties Emerge. Philosophy of Science 64 (1): 1–17. https://www.jstor.org/stable/188367.

Koons, Robert C. 2018a. The Many-Worlds Interpretation of Quantum Mechanics: A Hylomorphic Critique and Alternative. In Neo-Aristotelian Perspectives on Contemporary Science, ed. William M.R. Simpson, Robert C. Koons, and Nicholas J. Teh, 61–104. London: Routledge.

———. 2018b. Hylomorphic Escalation: A Hylomorphic Interpretation of Quantum Thermodynamics and Chemistry. American Catholic Philosophical Quarterly 92 (1): 159–178. https://doi.org/10.5840/acpq2017124139.

———. 2019. Thermal Substances: A Neo-Aristotelian Ontology for the Quantum World. Synthese. https://doi.org/10.1007/s11229-019-02318-2.

———. 2021. Powers Ontology and the Quantum Revolution. European Journal for Philosophy of Science 11 (1): 1–28.

Koons, Robert C., and Alexander R. Pruss. 2017. Must a Functionalist Be an Aristotelian? In Causal Powers, ed. Jonathan Jacobs, 194–204. Oxford: Oxford University Press.

Kripke, Saul. 1982. Wittgenstein on Rules and Private Language. Cambridge, MA: Harvard University Press.

Lewis, C.S. 1947. Miracles: A Preliminary Study. New York: Macmillan.

Lewis, David K. 1966. An Argument for the Identity Theory. Journal of Philosophy 63 (1): 17–25. https://doi.org/10.2307/2024524.

Lucas, J.R. 1961. Minds, Machines, and Gödel. Philosophy 36 (137): 120–124. https://www.jstor.org/stable/3749270.

Martin-Löf, Per. 1966. The Definition of a Random Sequence. Information and Control 9: 602–619.

McLaughlin, Brian. 1992. The Rise and Fall of British Emergentism. In Emergence or Reduction? Essays on the Prospects of Nonreductive Physicalism, ed. A. Beckermann, H. Flohr, and J. Kim, 49–93. Berlin: Walter de Gruyter.

Mill, J.S. 1872 [1843]. System of Logic. 8th ed. London: Longmans, Green, Reader, and Dyer.

Moore, G.E. 1922. Philosophical Studies. London: Routledge.

O’Connor, Timothy. 1994. Emergent Properties. American Philosophical Quarterly 31 (2): 91–104. https://www.jstor.org/stable/20014490.

O’Connor, Timothy, and Hong Yu Wong. 2005. The Metaphysics of Emergence. Noûs 39 (4): 658–678.

Peacocke, Arthur. 2006. Emergence, Mind, and Divine Action: The Hierarchy of the Sciences in Relation to the Human Mind-Brain-Body. In The Re-Emergence of Emergence: The Emergentist Hypothesis from Science to Religion, ed. Philip Clayton and Paul Davies, 257–278. Oxford: Oxford University Press.

Penrose, Roger. 1994. Shadows of the Mind: A Search for the Missing Science of Consciousness. Oxford: Oxford University Press.

Polanyi, Michael. 1967. Life Transcending Physics and Chemistry. Chemical Engineering News 45: 54–66.

———. 1968. Life’s Irreducible Structure. Science 160: 1308–1312.

Primas, Hans. 1980. Foundations of Theoretical Chemistry. In Quantum Dynamics for Molecules: The New Experimental Challenge to Theorists, ed. R.G. Woolley, 39–114. New York: Plenum Press.

———. 1983. Chemistry, Quantum Mechanics, and Reductionism: Perspectives in Theoretical Chemistry. Berlin: Springer-Verlag.

———. 1990. Induced Nonlinear Time Evolution of Open Quantum Objects. In Sixty-Two Years of Uncertainty: Historical, Philosophical, and Physical Inquiries into the Foundations of Quantum Mechanics, ed. Arthur I. Miller, 259–280. New York: Plenum Press.

Pruss, Alexander R. 2018. A Traveling Forms Interpretation of Quantum Mechanics. In Neo-Aristotelian Perspectives on Contemporary Science, ed. William M.R. Simpson, Robert C. Koons, and Nicholas J. Teh, 105–122. London: Routledge.

Rahman, Fazlur. 1973. The Philosophy of Mulla Sadra. Albany: SUNY Press.

Ramsey, F.P. 1929. Theories. In The Foundations of Mathematics and Other Logical Essays, ed. R.B. Braithwaite, 212–236. Paterson: Littlefield and Adams.

Renard, John. 1994. All the King’s Falcons: Rumi on Prophets and Revelation. Albany: SUNY Press.

Rickert, Heinrich. 1929. Die Grenzen der naturwissenschaftlichen Begriffsbildung: Eine logische Einleitung in die historischen Wissenschaften. 1st ed., 1902. Tübingen: Mohr (Siebeck). Cited and translated by Toepfer 2012, 113.

Ruetsche, Laura. 2011. Interpreting Quantum Theories: The Art of the Possible. Oxford: Oxford University Press.

Sewell, G.L. 2002. Quantum Mechanics and Its Emergent Macrophysics. Princeton: Princeton University Press.

Toepfer, Georg. 2012. Teleology and Its Constitutive Role for Biology as the Science of Organized Systems in Nature. Studies in History and Philosophy of Science Part C: Studies in History and Philosophy of Biological and Biomedical Sciences 43 (1): 113–119. https://doi.org/10.1016/j.shpsc.2011.05.010.

Van Inwagen, Peter. 1988. The Place of Chance in a World Sustained by God. In Human and Divine Action: Essays in the Metaphysics of Theism, ed. Thomas V. Morris, 211–235. Ithaca: Cornell University Press.

Wittgenstein, Ludwig. 1953. Philosophical Investigations. London: Blackwell.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Koons, R.C., Dajani, R. (2022). Divine Action and the Emergence of Four Kinds of Randomness. In: Clark, K.J., Koperski, J. (eds) Abrahamic Reflections on Randomness and Providence. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-030-75797-7_14

DOI: https://doi.org/10.1007/978-3-030-75797-7_14

Publisher Name: Palgrave Macmillan, Cham

Print ISBN: 978-3-030-75796-0

Online ISBN: 978-3-030-75797-7

eBook Packages: Religion and Philosophy, Philosophy and Religion (R0)