Abstract

This contribution defines a methodology for the direct offline programming of robotic high-precision assembly tasks without the need for real-world teach-in, even for less accurate lightweight robots. Using 3D scanning technologies, the relevant geometric relations of the offline programming environment are adjusted to the real application. To bridge the remaining accuracy gaps, tactile insertion algorithms are provided. As repeatedly compensating inaccuracies through tactile search is considered wasteful, a method is derived that automatically adapts the robot program, taking multiple sets of influencing parameters into account, so that precision increases continuously over time. The presented methodology is validated on a real-world use case from electronics production.

1 Motivation

Many manual assembly tasks rely on the sensory abilities of the human worker to achieve high precision. Automating such tasks usually involves special high-accuracy manipulators and tedious calibration of the application. Such systems are usually expensive and cannot be integrated into the material flow of existing manual production lines in the sense of a hybrid system. To allow safe robot operation in a manual system without completely encapsulating the automated process, lightweight robots (LWR) are often chosen. The lightweight construction of these manipulators, however, further decreases their inherent accuracy [19]. Manually teaching such tasks is also time-consuming, which is why most programs are prepared in simulation tools by offline programming (OLP) and then adapted to the actual application by re-teaching key interaction points. This approach, however, greatly decreases system flexibility, as every new product or variant introduction requires a manual touch-up on the real system, resulting in production downtime. Furthermore, the teach-in of such processes is often not accurate enough by itself, resulting in iterative teach-point optimization.

To address this, we introduce a methodology for the direct OLP of high-precision assembly tasks that still allows for the use of low-accuracy LWRs. The system is also capable of automatic program adaption for increasing process accuracy over time.

First, we introduce the relevant state of the art concerning high-precision robotic assembly, often referred to as peg-in-hole, as well as 3D scanning for production system planning. Based on this, we derive the need for action and the requirements for such a method. In Sect. 3, the new approach is described, detailing its main features. We then validate the approach on a real scenario from power electronics production. Finally, the results are discussed and the contribution concludes with an outlook on further planned research activities.

2 State of the Art

As our approach combines strategies from robotic high-precision assembly and 3D scanning, relevant research approaches in both fields are presented. From this, we derive a research gap regarding the direct OLP of high-precision assembly tasks and detail our contribution.

2.1 Robotic Peg-In-Hole Assembly

Li and Qiao categorize robotic peg-in-hole assembly into five strategies: sensing-information-based, compliant-mechanism-based, environment-constraint-based, sensing-constraint-based and human-inspired [16]. Xu et al. differentiate between contact-model-based and contact-model-free strategies [25]. The latter involve learning-by-demonstration and reinforcement-learning approaches, which are tailored to each application and thus hardly reusable. Contact-model-based approaches involve environment-constraint-based strategies and sensing-based compliant control. As shown in Metzner et al. [19], sensing-information-based methods offer great potential for the economic automation of high-precision assembly even without highly accurate manipulators. Another advantage of such systems is that compensation routines can be reused, which increases engineering efficiency. In this contribution, we focus on force/torque-sensing (FTS)-based systems because, in contrast to vision-based systems, they achieve high precision without the need for online setup of a vision system and without markers.

Jasim et al. demonstrate FTS-based precision assembly using a spiral-shaped search pattern with continuous surface contact [11]. This concept has been extended in multiple contributions, e.g. to ambidextrous robots [26]. More general strategies have been implemented in the LIAA project, leading to the PiTaSC library [20]. Recently, commercial software systems such as Drag&Bot or ArtiMinds RPS have been introduced that also offer FTS- and vision-based compensation modules [6]. However, all of these approaches rely on developing, combining and parametrizing the program on the real application. This leads to longer system setup times and an inherent inflexibility toward variant changes, new product introductions or process changes, as a touch-up on the real system is required, causing lengthy downtimes. Metzner et al. present an approach for offline program synthesis that allows the program to be prepared and parametrized offline [19]. However, the application still needs to be calibrated with the real robot, as the simulation scene used for OLP frequently deviates from the real application by more than the search strategies can compensate. Furthermore, all of the above approaches are static: no data-driven internal optimization of the robot frames is performed, which leads to wastefully repeated search times when points are poorly taught or the system changes.
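A spiral search of the kind described for [11] can be sketched as a list of lateral waypoints on an Archimedean spiral around the nominal hole position. This is a minimal illustration under our own assumptions: the function name and the pitch, step and radius values are hypothetical, not parameters from [11] or from our setup, and the force-controlled surface contact is not modeled.

```python
import math
from typing import List, Tuple

def spiral_waypoints(center: Tuple[float, float], pitch: float,
                     step: float, max_radius: float) -> List[Tuple[float, float]]:
    """Waypoints on an Archimedean spiral r = b * theta around `center`.

    center     -- nominal (x, y) goal position from OLP, in mm
    pitch      -- distance between neighbouring turns, in mm (= 2*pi*b)
    step       -- approximate path length between consecutive waypoints, in mm
    max_radius -- radius of the search area, in mm
    """
    if pitch <= 0 or step <= 0:
        raise ValueError("pitch and step must be positive")
    cx, cy = center
    b = pitch / (2.0 * math.pi)
    theta = 0.0
    points = [(cx, cy)]                      # start at the nominal position
    while True:
        r = b * theta
        # arc length ds ~ sqrt(r^2 + b^2) * dtheta, so choose dtheta for ds ~ step
        theta += step / math.hypot(r, b)
        r = b * theta
        if r > max_radius:
            return points
        points.append((cx + r * math.cos(theta), cy + r * math.sin(theta)))

# Example: 0.2 mm between turns, ~0.1 mm between waypoints, 1 mm search radius
waypoints = spiral_waypoints((0.0, 0.0), pitch=0.2, step=0.1, max_radius=1.0)
```

On a real robot, each waypoint would be approached while pressing the part onto the surface with a constant force, and the search would stop once the force/torque signal indicates that the peg has dropped into the hole; that control loop is not shown. The pitch should stay below the capture range given by chamfers and clearance, otherwise the hole can be skipped between two turns.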

2.2 3D-Scanning of Industrial Settings for Production Planning

3D scanning is mostly used for planning new production systems in brownfield scenarios [18]. Scans are often taken with LiDAR scanners, which are also used in surveying, archaeology and the documentation of building construction. This procedure allows very large areas to be captured on a factory scale but, due to resolution restrictions, is only partially suited for the fine adjustment of small individual components. Such scans are best suited for global layout planning, media inlets and outlets, and clearances.

Precise local scanning is done using either simultaneous localization and mapping (SLAM) approaches or structure-from-motion (SfM) routines. In SLAM approaches, an initial frame from an RGB-D sensor is iteratively complemented with succeeding frames that are fitted into the generated map [3]. These maps are mostly created with handheld RGB-D sensors and offer a time-efficient way to capture 3D scenes. However, such sensors suffer from significant noise, which, combined with the propagation of fitting errors in the SLAM routine, leads to subpar scanning accuracy.

SfM routines are based on feature matching across numerous RGB images taken with identical optical parameters. The matched features are used to calculate the relative capturing pose of each frame from a sparse, feature-level reconstruction. In a next step, this information is used to perform a dense reconstruction of the scene with multi-view stereo approaches [22, 23]. Such approaches have been shown to offer high accuracy, especially in texture-rich settings, while requiring only a simple RGB camera for capturing. However, their industrial application and validation for the fine adjustment of virtual planning models has not yet been shown.
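The geometric core of such a reconstruction, recovering a 3D point from its projections in two images with known camera matrices, can be illustrated with linear (DLT) triangulation. This is a textbook sketch with synthetic camera matrices of our own choosing; full SfM pipelines [22, 23] additionally estimate the camera poses from the feature matches, refine all parameters jointly and then densify the result with multi-view stereo.

```python
import numpy as np

def triangulate_dlt(P1: np.ndarray, P2: np.ndarray,
                    x1: np.ndarray, x2: np.ndarray) -> np.ndarray:
    """Linear triangulation of one point from two views.

    P1, P2 -- 3x4 camera projection matrices
    x1, x2 -- corresponding pixel coordinates (u, v) in each image
    Returns the 3D point in world coordinates.
    """
    # Each view contributes two rows of the homogeneous system A @ X = 0,
    # derived from u = (P[0] @ X) / (P[2] @ X) and v = (P[1] @ X) / (P[2] @ X).
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Project a 3D point with a 3x4 camera matrix to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Synthetic example: identical intrinsics, second camera shifted 0.1 m along x.
K = np.array([[1000.0, 0.0, 320.0], [0.0, 1000.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])
X_true = np.array([0.05, -0.02, 1.5])
X_est = triangulate_dlt(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noise-free synthetic projections, `X_est` reproduces `X_true`; with real feature matches, the result is only a starting point for nonlinear refinement.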

2.3 Research Gap and Need for Action

This contribution aims to enable the direct offline programming of high-precision assembly by providing a methodological model of the deviations that influence accuracy in assembly. The different deviations are compensated using CAD calibration from 3D scans and FTS-based tactile insertion strategies. Furthermore, an approach for the data-driven optimization of the robot interaction frames is presented. We extend the state of the art by providing a systematic classification of accuracy influences in high-precision assembly tasks and corresponding strategies for their compensation. We furthermore quantitatively evaluate the use of SfM approaches for the detailed 3D capturing of production systems for precise OLP. We extend existing strategies for FTS-based deviation compensation with data-driven optimization and validate their functionality in a realistic test scenario. Finally, we demonstrate the integrated applicability of the methodology by implementing a robust sub-millimeter assembly process for an electronic device entirely offline, without any manual touch-ups.

3 Methodology

The methodology focuses on compensating three general types of accuracy influences: global independent deviations, systemic dependent deviations and random deviations. Global independent deviations are static biases of the entire system that are independent of other operational parameters and of time. They can be divided into macro-deviations and micro-deviations. Macro-deviations stem mostly from differences in the geometric relations between the ideal virtual planning scene and the real system. Global micro-deviations are caused by the absolute accuracy errors of the manipulator as well as by manufacturing and assembly tolerances of system components.

Dependent deviations are caused by manufacturing and assembly tolerances of the parts (e.g. varying by supplier), of the workpiece carriers or of the material supply channels. They therefore depend on at least one other parameter. Random deviations include the repeatability of the manipulator and other random external influences.

To compensate for global independent deviations, a one-time adaption of the robot interaction points is sufficient. For macro-deviations, this is achieved by integrating the real system geometry into the virtual planning scene through 3D scanning, as detailed in Sect. 3.1. Independent micro-deviations, dependent deviations and random influences are compensated using tactile assembly strategies, see Sect. 3.2. As tactile compensation is an inherently wasteful, time-consuming process, a data-driven approach is used to further distinguish independent micro-deviations from dependent deviations and to identify the parameters the latter depend on (Sect. 3.2, validated in Sect. 4.2). The goal is to eliminate all systemic deviations, leaving only the random deviations, which occur on a much smaller scale than the other influences.
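Read as a simple additive model (our own shorthand for this classification, not a formula taken from the cited works), the offset found by the tactile search at interaction point $i$ for a parameter set $\mathbf{v} = (v_1, \dots, v_K)$, e.g. manufacturer, carrier and material feed, decomposes as

```latex
\Delta\mathbf{p}_i(\mathbf{v})
  = \underbrace{\Delta\mathbf{p}_i^{\mathrm{macro}} + \Delta\mathbf{p}_i^{\mathrm{micro}}}_{\text{global, independent}}
  + \underbrace{\sum_{k=1}^{K} \Delta\mathbf{p}_{i,k}(v_k)}_{\text{systemic, dependent}}
  + \underbrace{\boldsymbol{\varepsilon}}_{\text{random}},
  \qquad \mathbb{E}[\boldsymbol{\varepsilon}] = \mathbf{0}.
```

Under this reading, 3D scanning removes most of the macro term, the mean of the search results estimates the remaining global term, the parameter-linked compensation factors estimate the sum over $k$, and only the zero-mean random term $\boldsymbol{\varepsilon}$ is left.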

3.1 Precision 3D-Scanning and Surface Reconstruction

To allow low-cost 3D scanning of the real system, we use a structure-from-motion (SfM)-based approach for photogrammetric reconstruction from high-resolution RGB images. The real system is photographed with static camera parameters from different viewpoints, ensuring a high overlap between pictures. Through inter-picture feature matching, the geometric relation between the picture viewpoints, and thus the 3D structure of the system, is derived. The resulting point cloud is then reconstructed into a surface model to allow fine adjustment of the virtual system model in the simulation/OLP tool, in this case Tecnomatix Process Simulate. Most relevant for robot OLP are the 6D poses of the robot base, the workpiece and the material supply, as these are used to define the interaction points in the robot world coordinate system. Fine-tuning can be done manually or aided by, e.g., ICP routines [2]. To apply ICP-based approaches to known parts, the CAD model is subsampled into a point cloud. Unknown parts, for which no CAD model is available, are categorized based on their geometry. Elements composed of a few primitive geometries, such as the floor, tables and pillars, are estimated using a RANSAC approach, in which the individual elements are fitted with the most likely primitive model by minimizing the fitting error [7, 21]. More complex elements of the 3D scan, such as freeform surfaces and cables, are meshed using implicit-function approaches, which allow large point sets to be processed efficiently [1, 4, 5, 12–15]. All other reconstructed geometry from the scan that is not used to determine interaction points is used for collision-free path planning.
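A minimal point-to-point ICP, as it could be used to fine-adjust a subsampled CAD point cloud against the scan, alternates nearest-neighbour matching with the closed-form SVD alignment of Arun et al. [2]. This brute-force NumPy sketch is our own illustration, not the tool chain used here; it has no k-d tree, outlier rejection or convergence check, which a practical implementation would add.

```python
import numpy as np

def best_rigid_transform(A: np.ndarray, B: np.ndarray):
    """Closed-form R, t minimizing sum ||R a_i + t - b_i||^2 over paired rows (Arun et al.)."""
    ca, cb = A.mean(axis=0), B.mean(axis=0)
    H = (A - ca).T @ (B - cb)                  # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:                   # avoid returning a reflection
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    return R, cb - R @ ca

def icp(source: np.ndarray, target: np.ndarray, iterations: int = 30):
    """Point-to-point ICP aligning `source` (N x 3, e.g. subsampled CAD) to `target`
    (M x 3, scan). Returns R, t such that source @ R.T + t approximates target."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = source.astype(float).copy()
    for _ in range(iterations):
        # brute-force nearest neighbours (O(N*M) memory; use a k-d tree for real scans)
        d2 = ((current[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        matched = target[d2.argmin(axis=1)]
        R, t = best_rigid_transform(current, matched)
        current = current @ R.T + t
        # accumulate: x -> R (R_total x + t_total) + t
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total
```

ICP only converges to the correct pose if the initial misalignment is small compared with the point spacing and the geometric features, which is why a coarse manual placement of the CAD model on the scan would typically precede it.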

3.2 Tactile Assembly Strategies and Data-Driven Program Adaption

The general approach for tactile assembly used in this contribution is detailed in Metzner et al. [19]. The assembly process is divided into three phases: approach (A), search (S) and insertion (I). Depending on part- and assembly-specific parameters, a variant is selected and parameterized for each phase. For the compensation of micro-deviations, the search phase is the most important, as the total deviation is determined there.

The result of each individual search phase is recorded in every dimension together with relevant process parameters, such as the carrier ID, the base part manufacturer ID or the material supply ID. After a small sample of test runs, the global deviation of each point is determined as the mean of the found positions in each dimension.
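The logging and the global offset described above can be sketched as follows. The record layout, field names and point IDs are hypothetical illustrations, not the data model of the robot controller implementation.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List

@dataclass
class SearchRecord:
    """One logged search phase (hypothetical record layout)."""
    point_id: str                  # robot interaction point, e.g. "THD_hole_1"
    offset_mm: Dict[str, float]    # found offset per dimension, e.g. {"x": 0.12, "y": -0.05}
    params: Dict[str, str] = field(default_factory=dict)  # e.g. {"carrier": "2"}

def global_offsets(records: List[SearchRecord], point_id: str) -> Dict[str, float]:
    """Global (independent) deviation of one point: per-dimension mean of found offsets."""
    rows = [r.offset_mm for r in records if r.point_id == point_id]
    if not rows:
        raise ValueError(f"no search results logged for {point_id!r}")
    return {axis: mean(row[axis] for row in rows) for axis in rows[0]}
```

Keeping the process parameters in every record is what later allows the same log to be split by parameter value for the dependent-deviation analysis.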

Compensating dependent variables requires more data, depending on the magnitude of each variable's impact. A statistical hypothesis test identifies significant deviations between the current goal value and the distribution of recent points of one class (value) of one parameter. As variance homogeneity cannot be assumed, since the dependent variable may also influence the spread of the distribution, and as sample sizes differ, Welch's two-sided test for independent samples is used [24]. If a significant deviation is found, the difference between the old and the new arithmetic mean is saved as an additional compensation factor linked to the investigated parameter value. The new target position for each parameter set is calculated on the robot controller by adding up all such parameter-linked deviations.

4 Validation

First, each functional part of the methodology is validated individually on a common setup; a fully integrated execution of the entire methodology is also demonstrated. We validate our system on a scenario from electronics production: the assembly of through-hole devices (THD) into printed circuit boards (PCB). This process is very challenging to automate, as assembly tolerances are usually only a few tenths of a millimeter to allow robust soldering, and the parts are inherently fragile. Our setup uses a UR10 LWR equipped with an ATI FT AXIA 80 force/torque sensor (FTS) and a Weiss CRG 200–085 gripper. The system also comprises a workpiece carrier system for the PCBs and a material supply in the form of a tray.

4.1 Evaluation of Scanning Accuracy

The photogrammetric 3D scanning is validated by directly comparing the point cloud and surface model generated by our SfM pipeline from images taken with a Sony Alpha 6000 camera (6000 × 4000 pixels), see Fig. 1, with those of a FARO Quantum V2/Blue LLP scanner. The FARO scanner is based on a calibrated coordinate measuring arm with a laser line sensor and has a nominal measuring accuracy better than 25 μm. It is thus chosen as the ground-truth baseline for comparison with our scan. Using the FARO scanner directly is not feasible in most applications due to long setup and calibration times, reachability issues and very high hardware cost compared with the simple handheld camera used in our approach.

The distance measurements, trimmed at a maximum distance of 3 mm and limited to the robot base and the workpiece carrier with PCB to account for differences in the scanned portions of the system, are shown in Fig. 2. The distances are computed in CloudCompare [9] using a quadratic (quadric) local surface model fitted to k = 6 neighbors to account for differences in cloud resolution, see also Girardeau-Montaut [8]. Most points lie below an absolute distance of 1 mm (green), with a median distance of 0.63 mm. Feature-rich areas in particular, such as the edges of the PCB, the carrier and the robot base, are captured very accurately. These distinct features are also the most suitable for the fine adjustment of CAD geometries. The same observation is made when comparing the surface models.
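A simplified version of this comparison, nearest-neighbour cloud-to-cloud distances trimmed at a maximum distance and summarized by their median, can be sketched in NumPy. Unlike the CloudCompare computation described above, this sketch fits no local quadric model to the reference cloud, so it tends to overestimate distances where the reference cloud is sparse; the brute-force search is only suitable for small clouds, and the function names are our own.

```python
import numpy as np

def c2c_distances(compared: np.ndarray, reference: np.ndarray,
                  max_dist: float = 3.0) -> np.ndarray:
    """Nearest-neighbour distance from each compared point to the reference cloud.

    Both clouds are (N x 3) arrays in the same unit (here mm); distances above
    max_dist are dropped, mirroring the 3 mm trimming used in the evaluation.
    """
    d2 = ((compared[:, None, :] - reference[None, :, :]) ** 2).sum(axis=2)
    d = np.sqrt(d2.min(axis=1))
    return d[d <= max_dist]

def summarize(d: np.ndarray, threshold: float = 1.0) -> dict:
    """Median distance and share of points below a threshold (e.g. 1 mm)."""
    return {"median": float(np.median(d)),
            "share_below": float(np.mean(d < threshold))}
```

Here the SfM cloud would be passed as `compared` and the FARO cloud as `reference`, as the latter serves as ground truth.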

4.2 Performance of Compensation Strategies

As the functionality of our search and insertion strategies has already been shown in Metzner et al. [19], we focus on validating the scan-to-execution approach and our data-driven compensation strategies. The scan-based OLP methodology is validated by programming the application offline on the 3D scan using our methods. Both the material supply and the assembly position are derived from the scan and optimized via tactile strategies. The program is transferred to the real setup and executed without further adaption or calibration. As shown in Fig. 3b, the first execution run results in a visible offset (1), which is compensated by the tactile search (2). In the second run (3), this independent deviation is compensated to such a degree that insertion is possible without a search.

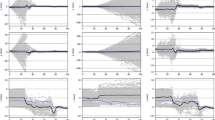

To validate our data-driven approach, we first conduct a baseline analysis: the same assembly position of a mechanically fixed PCB is measured repeatedly by four-point tactile probing in the positive and negative x and y directions to quantify the robot's inherent measurement uncertainty. We then shift the goal position of a robot program for inserting a THD into a circular hole by specified offsets in the plane perpendicular to the insertion vector. The reference goal location is determined as precisely as possible as the average hole center from the baseline test. The compensation values reached for each input parameter set, determined with a spiral search, are saved. We then compare the offsets calculated by our data-driven approach with the specified ones to evaluate the functionality of our compensation approach.
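Assuming the four tactile measurements are contact positions on the hole wall, reached by moving in +x and −x along one line and in +y and −y along another from a start point inside the hole, the hole center follows from the midpoints of the two opposing contact pairs; averaging repeated runs gives the reference goal location and the spread of the runs quantifies the measurement uncertainty. This probing geometry and the function names are our assumptions for illustration.

```python
from statistics import mean, stdev
from typing import List, Tuple

def center_from_four_contacts(x_pos: float, x_neg: float,
                              y_pos: float, y_neg: float) -> Tuple[float, float]:
    """Hole center from contacts found moving +x/-x (along a line y = const) and
    +y/-y (along a line x = const). For a circular hole, each opposing pair is
    symmetric about the center, so its midpoint is the center coordinate,
    independent of the start offset and of a (symmetric) peg radius."""
    return (x_pos + x_neg) / 2.0, (y_pos + y_neg) / 2.0

def baseline(runs: List[Tuple[float, float, float, float]]):
    """Mean center (reference goal) and per-axis standard deviation over repeated runs."""
    centers = [center_from_four_contacts(*run) for run in runs]
    xs, ys = [c[0] for c in centers], [c[1] for c in centers]
    return (mean(xs), mean(ys)), (stdev(xs), stdev(ys))
```

For a hole of radius 1.3 mm centered at (2.0, −1.0) and a start point at (2.5, −0.5), the contacts lie at x = 3.2 and 0.8 and at y = 0.2 and −2.2, and the midpoints return the center exactly.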

We recreate both independent and dependent deviations. For the dependent deviations, we also log the dependent parameter values for our analysis. Asymmetries are deliberately added to the induced dependent deviations to simulate a biased parameter population in the initial compensation phase, which is likely in reality.

We implement three parameters, labeled manufacturer (M), carrier ID (C) and material feed (F). M and F each have two values (A/B, 1/2), C has three (1/2/3). The magnitude of influence of each parameter, see Table 1, is derived from actual tolerance values of a similar application in electronics production. For each parameter in each test run, a random offset is drawn from a normal distribution with the specified absolute deviation as mean and standard deviation s, using the polar method [17]. For each value of M and F we conduct 450 test runs, and for each value of C 300, resulting in 900 test cycles plus 75 baseline tests, each performed for both standard and optimized insertion.
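Marsaglia's polar method [17] turns pairs of uniform random numbers into pairs of independent standard normal variates; scaling by s and shifting by the parameter's deviation yields the induced offset. The sketch below uses hypothetical helper names and placeholder values rather than the deviation values of Table 1.

```python
import math
import random
from typing import Tuple

def polar_normal_pair(rng: random.Random) -> Tuple[float, float]:
    """Two independent N(0, 1) samples via Marsaglia's polar method."""
    while True:
        u = 2.0 * rng.random() - 1.0
        v = 2.0 * rng.random() - 1.0
        s = u * u + v * v
        if 0.0 < s < 1.0:                     # reject points outside the unit disc
            f = math.sqrt(-2.0 * math.log(s) / s)
            return u * f, v * f

def induced_offset(rng: random.Random, deviation_mm: float, sigma_mm: float) -> float:
    """Offset drawn from N(deviation_mm, sigma_mm^2) for one parameter value."""
    z, _ = polar_normal_pair(rng)             # second variate discarded for simplicity
    return deviation_mm + sigma_mm * z

# Placeholder example: parameter value with 0.2 mm deviation and s = 0.05 mm
rng = random.Random(42)
offsets = [induced_offset(rng, 0.2, 0.05) for _ in range(20000)]
```

Seeding the generator makes a simulated test campaign reproducible, so that standard and optimized insertion can be run on exactly the same sequence of induced offsets.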

No failure is recorded in the 1950 insertion tests ((900 + 75) × 2). The distributions of specified and found deviations are shown in Fig. 4. We optimize the search points using the mean deviation calculated for each M, C and F parameter value as well as the asymmetry of the global distribution (Δxmean = 0.14 mm; Δymean = 0.49 mm). Without optimization, the mean search time is 1.23 s, with 71% of insertions requiring a search. With our approach, on the same data points, no search is required at all.

5 Discussion

The findings on point cloud and surface model accuracy show that our 3D scanning method is capable of reducing the deviations between the virtual OLP scene and the real application to the submillimeter range. Even though further sources of deviation, such as the robot's absolute accuracy, remain, a successful execution of a high-precision THD assembly process without touch-ups is demonstrated. We furthermore validate our data-driven approach for compensating dependent deviations and find that search times are eliminated entirely. We thus conclude that even deviation influences as small as 0.1 mm can be determined and permanently compensated after a reasonable data-gathering period, even when an LWR is used.

6 Conclusion and Outlook

In this contribution, we propose a methodology for the direct OLP of high-precision assembly tasks through a combination of 3D scanning and tactile assembly routines, including data-driven program adaption based on defined deviation influences for continuous, automatic process improvement. We quantitatively evaluate the individual methods as well as the overall methodology and find that our approach is capable of robust OLP of processes with high accuracy requirements. Further research topics include the applicability to further use cases and validation on real-life industrial production data.

References

[1] Agarwala, A.: Efficient gradient-domain compositing using quadtrees. ACM Trans. Graph. 26, 94 (2007)

[2] Arun, K.S., Huang, T.S., Blostein, S.D.: Least-squares fitting of two 3-D point sets. IEEE Trans. Pattern Anal. Mach. Intell. 9, 698–700 (1987)

[3] Bailey, T., Durrant-Whyte, H.: Simultaneous localization and mapping (SLAM): part II. IEEE Robot. Autom. Mag. 13, 108–117 (2006)

[4] Calakli, F., Taubin, G.: SSD: smooth signed distance surface reconstruction. Comput. Graph. Forum 30, 1993–2002 (2011)

[5] Crane, K., Weischedel, C., Wardetzky, M.: The heat method for distance computation. Commun. ACM 60, 90–99 (2017)

[6] drag and bot GmbH: Drag & Bot: Industrieroboter wie ein Smartphone bedienen [Operating industrial robots like a smartphone] (2020). https://www.dragandbot.com/de/. Accessed 24 Jul 2020

[7] Fischler, M.A., Bolles, R.C.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 24, 381–395 (1981)

[8] Girardeau-Montaut, D.: Distance Computation: Cloud-Cloud Distances (2015). https://www.cloudcompare.org/doc/wiki/index.php?title=Distances_Computation

[9] Girardeau-Montaut, D.: CloudCompare (2019). https://www.cloudcompare.org/

[10] ArtiMinds Robotics GmbH: ArtiMinds Robot Programming Suite (2020). https://www.artiminds.com/de/. Accessed 24 Jul 2020

[11] Jasim, I.F., Plapper, P.W., Voos, H.: Position identification in force-guided robotic peg-in-hole assembly tasks. Proc. CIRP 23, 217–222 (2014)

[12] Kazhdan, M., Hoppe, H.: Screened Poisson surface reconstruction. ACM Trans. Graph. 32, 1–13 (2013)

[13] Kazhdan, M., Hoppe, H.: An adaptive multi-grid solver for applications in computer graphics. Comput. Graph. Forum 38, 138–150 (2019)

[14] Kazhdan, M., Bolitho, M., Hoppe, H.: Poisson surface reconstruction. In: Proceedings of the Fourth Eurographics Symposium on Geometry Processing, vol. 7 (2006)

[15] Kazhdan, M., Chuang, M., Rusinkiewicz, S., et al.: Poisson surface reconstruction with envelope constraints. Comput. Graph. Forum 39, 173–182 (2020)

[16] Li, R., Qiao, H.: A survey of methods and strategies for high-precision robotic grasping and assembly tasks—some new trends. IEEE/ASME Trans. Mechatron. 24, 2718–2732 (2019)

[17] Marsaglia, G., Bray, T.A.: A convenient method for generating normal variables. SIAM Rev. 6(3), 260–264 (1964)

[18] Melcher, D., Küster, B., Stonis, M., et al.: Optimierung von Fabrikplanungsprozessen durch Drohneneinsatz und automatisierte Layoutdigitalisierung [Optimization of factory planning processes through drone deployment and automated layout digitization]. Wissenschaftliche Gesellschaft für Technische Logistik (2018)

[19] Metzner, M., Leurer, S., Handwerker, A., et al.: High-precision assembly of electronic devices with lightweight robots through sensor-guided insertion. Proc. CIRP (2020)

[20] Nägele, F., Halt, L., Tenbrock, P., et al.: Composition and incremental refinement of skill models for robotic assembly tasks. In: The Third IEEE International Conference on Robotic Computing (IRC 2019), Naples, Italy, 25–27 February 2019, pp. 177–182. IEEE, Piscataway, NJ (2019)

[21] Schnabel, R., Wahl, R., Klein, R.: Efficient RANSAC for point-cloud shape detection. Comput. Graph. Forum 26, 214–226 (2007)

[22] Schönberger, J.L., Frahm, J.-M.: Structure-from-motion revisited. In: Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

[23] Schönberger, J.L., Zheng, E., Pollefeys, M., et al.: Pixelwise view selection for unstructured multi-view stereo. In: European Conference on Computer Vision (ECCV) (2016)

[24] Welch, B.L.: The generalization of "Student's" problem when several different population variances are involved. Biometrika 34, 28–35 (1947)

[25] Xu, J., Hou, Z., Liu, Z., et al.: Compare contact model-based control and contact model-free learning: a survey of robotic peg-in-hole assembly strategies (2019)

[26] Zhang, K., Shi, M., Xu, J., et al.: Force control for a rigid dual peg-in-hole assembly. Assem. Autom. 37, 200–207 (2017)

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this paper

Cite this paper

Metzner, M. et al. (2022). An Approach for Direct Offline Programming of High Precision Assembly Tasks on 3D Scans Using Tactile Control and Automatic Program Adaption. In: Schüppstuhl, T., Tracht, K., Raatz, A. (eds) Annals of Scientific Society for Assembly, Handling and Industrial Robotics 2021. Springer, Cham. https://doi.org/10.1007/978-3-030-74032-0_18

DOI: https://doi.org/10.1007/978-3-030-74032-0_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-74031-3

Online ISBN: 978-3-030-74032-0

eBook Packages: Intelligent Technologies and Robotics (R0)