Abstract

This chapter covers the interdependencies of temperature and the reliable operation of multiprocessor systems on chip (MPSoC). Starting with the assessment of temperature values for the different cores either through measurement or estimation, it is shown which methods on system level can be applied to balance the thermal stress in the system and thus to come to an evenly distributed probability of errors. Special focus is given to task migration as a system-level means, which is supported by an on-chip interconnect virtualization technique that eases fast and transparent switch-over of communication channels. Overall, it is shown how mechanisms on different levels of the system stack can be combined to cooperate across layers for improving system reliability.


1 Overview

The VirTherm3D project is part of SPP1500, which has its origins in [10] and [9]. The main cross-layer contributions of VirTherm3D are outlined in Fig. 1. The green circles are our major contributions spanning from the physics to circuit layer and from the architecture to application layer. These contributions include physical modeling of thermal and aging effects considered at the circuit layer as well as communication virtualization at architecture level to support task relocation as part of thermal management at architecture level. Our minor contributions span from the circuit to architecture layer and include reliability-aware logic synthesis as well as studying the impact of reliability with figures of merit such as probability of failure.

2 Impact of Temperature on Reliability

Temperature is at the core of reliability. It has a direct short-term impact on reliability, as the electrical properties of circuits (e.g., delay) are affected by temperature. A higher temperature leads to circuits with higher delays and lower noise margins. Additionally, temperature impacts circuits indirectly as it stimulates or accelerates aging phenomena, which in turn, manifest themselves as degradations in the electrical properties of circuits.

The direct impact of temperature in an SRAM memory cell can be seen in Fig. 2. Increasing the temperature increases the read delay of the memory cell. This is because increased temperature degrades performance of transistors (e.g., a reduction in carrier mobility μ), which affects the performance of the memory cell. Therefore, increasing temperature directly worsens circuit performance and thus negatively impacts the reliability of a circuit. If the circuit has a prolonged delay due to the increased temperature, then timing violations might occur. If the circuit has a degraded noise margin, then noise (e.g., voltage drops or radiation-induced current spikes) might corrupt data.

Shift in SRAM memory cell read delay as a direct impact of temperature (from [3])

Next to directly altering the circuit properties, temperature also has an indirect impact, which is shown in Fig. 3. Temperature stimulates aging phenomena (e.g., Bias Temperature Instability (BTI)) degrading the performance of transistors (e.g., increasing the threshold voltage V th) over time. Increasing the temperature accelerates the underlying physical processes of aging and thus increases aging-induced degradations.

Indirect impact of temperature stimulating aging (from [4])

Because of the two-fold impact of temperature, i.e., reducing circuit performance directly and indirectly via aging, temperature must be considered when estimating the reliability of a circuit. The temperature at an instant of time (estimated either via measurement or simulation) governs the direct degradation of the circuit, i.e., the short-term direct impact of temperature. Temperature over time governs the long-term indirect impact, as aging depends on the thermal profile (i.e., the thermal fluctuations over a long period). How to correctly estimate both the temperature at an instant and the thermal profile is discussed in Sect. 3.

After the temperature is determined via temperature estimation, the impact of temperature on reliability must be evaluated. This is challenging, as the impact of temperature occurs on physical level (e.g., movement of electrical carriers in a semiconductor as well as defects in transistors for aging), while the figures of merit are for entire computing systems (e.g., probability of failure, quality of service). To overcome this challenge, Sect. 4 discusses how to connect the physical to the system level with respect to thermal modeling. To obtain the ultimate impact of the temperature, the figures of merit of a computing system are obtained with our cross-layer (from physical to system level) temperature modeling (see Fig. 4).

Temperature can be controlled. Thermal management techniques reduce temperature by limiting the amount of generated heat or making better use of existing cooling (e.g., distribution of generated heat for easier cooling). Thus, to reduce the deleterious impact of temperature on the figures of merit of systems, temperature must be controlled at system level. For this purpose, Sect. 5 discusses system-level thermal management techniques. These techniques limit temperature below a specified critical temperature to ensure that employed safety margins (e.g., time slack to tolerate thermally induced delay increases) are not violated, thus ensuring the reliability of a computing system.

To support the system management in migrating tasks away from thermal hotspots and thus reducing thermal stress, special virtualization features are proposed to be implemented in the interconnect infrastructure. They allow for a fast transfer of communication relations of tasks to be migrated and thus help to limit downtimes. These mechanisms can then also be applied for generating task replica to dynamically introduce redundancy during system runtime as a response to imminent reliability concerns in parts of the SoC or if reliability requirements of an application change.

As mentioned before, temperature estimation and modeling cross many abstraction layers. The effects of temperature originate from the physical level, where the physical processes related to carriers and defects are altered by temperature. Yet the final impact of temperature has to pass through the transistor level, gate level, circuit level, and architecture level all the way to the system level, where the figures of merit of the system can be evaluated. The system designer has to maintain the figures of merit for the end-user, and therefore has to limit temperature with thermal management techniques and evaluate the impact of temperature on the various abstraction layers. Therefore, Sect. 7 discusses thermal estimation, modeling, and management techniques with a focus on how to cross these abstraction layers and how to connect the physical to the system level. In practice, interdependencies between the low abstraction layers and the management layer do exist. The running workload at the system level increases the temperature of the cores. Hence, the probability of error starts to gradually increase. In such a case the management layer estimates the probability of error based on the information received from the lower layers and then attempts to make the best decision. For instance, it might tolerate the increase in the probability of error at the cost of enabling adaptive modular redundancy (AMR) (details in Sect. 6.3), or it might migrate the tasks to other cores that are healthier (i.e., exhibit a lower probability of error).

3 Temperature Estimation via Simulation or Measurement

Accurately estimating the temperature of a computing system is necessary to later evaluate the impact of temperature. Two options exist: (1) Thermal simulation and (2) Thermal measurement. Both options must estimate temperature with respect to time and space. Figure 5 shows a simulated temporal thermal profile of a microprocessor. Temperature fluctuates visibly over time and depends on the applications which are run on the microprocessor.

Figure 9 shows a measured spatial thermal map of a microprocessor. Temperature is spatially unequally distributed across the processor, i.e., certain components of the microprocessor have to tolerate higher temperatures. However, the difference in temperature is limited. This limit stems from thermal conductance across the chip counteracting temperature differences. Heat is conducted mainly via the chip itself (e.g., wires in metal layers), its packaging (e.g., heat spreaders), and cooling (e.g., heat sink).

3.1 Thermal Simulation

Thermal simulations are a software-based approach to estimate the temperature of a computing system. Thermal simulations consist of three steps: (1) Activity extraction, (2) Power estimation, (3) Temperature estimation. The first step extracts the activity (e.g., transistor switching frequency, cache accesses) of the applications running on the computing system. Different applications result in different temperatures (see Fig. 5). The underlying cause is a unique power profile for each application (and its input data), originating from unique activities per application.

Once activities are extracted, the power profiles based on these activities are estimated. Both steps can be performed on different abstraction layers. On the transistor level, transistor switching consumes power, while on the architecture level each cache access consumes a certain amount of power (depending on cache hit or cache miss). Thus, the activity is transistor switching on the transistor level and cache accesses on the architecture level. The transistor level yields a very fine-grained power profile (temporally as well as spatially). At the architecture level, a coarse-grained power profile is obtained, with a time granularity of one access (potentially hundreds of cycles long) and a space granularity of an entire cache block.

With the power profiles known, the amount of generated heat (again spatially and temporally) is known. A thermal simulator then uses a representation of thermal conductances and capacitances with generated heat as an input heat flux and dissipated heat (via cooling) as an output heat flux to determine the temperature over time and across the circuit.
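The last step can be illustrated with a minimal sketch that collapses the chip into a single thermal node with one thermal resistance and one thermal capacitance. This is a strong simplification of what a real thermal simulator does (which resolves many nodes across the floorplan), and the function name and all parameter values are hypothetical, chosen only for illustration.

```python
def simulate_temperature(power_w, r_th=0.5, c_th=2.0, t_ambient=45.0, dt=0.01):
    """Forward-Euler integration of C * dT/dt = P(t) - (T - T_ambient) / R.

    power_w   : generated heat per time step in W (input heat flux)
    r_th      : thermal resistance to ambient in K/W (hypothetical value)
    c_th      : thermal capacitance in J/K (hypothetical value)
    t_ambient : ambient temperature in deg C (hypothetical value)
    dt        : time step in s
    """
    temperature = t_ambient
    trace = []
    for p in power_w:
        heat_out = (temperature - t_ambient) / r_th  # heat dissipated via cooling
        temperature += dt * (p - heat_out) / c_th    # net heat charges the capacitance
        trace.append(temperature)
    return trace


# Constant 20 W for 10 s (1000 steps of 10 ms)
trace = simulate_temperature([20.0] * 1000)
steady_state = 45.0 + 20.0 * 0.5  # T_ambient + P * R
```

With these values the thermal time constant is R * C = 1 s, so after 10 s the simulated temperature has essentially settled at the steady-state value. The thermal capacitance is also what prevents abrupt temperature changes in response to abrupt power changes.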

Our work in [4] exemplifies a thermal simulation flow in Fig. 6. In this example, SRAM memory cell accesses are used to estimate transistor switching and thus power profiles for the entire SRAM array. These power profiles are then used with the microprocessor layout (called floorplan) and typical cooling settings in a thermal simulator to get thermal maps in Fig. 7.

Flow of a thermal simulation (updated figure from [4]).

The work in [13] models temperature on the system level. Individual processor cores of a many-core computing system are the spatial granularity with seconds as the temporal granularity. Abstracted (faster, simpler) models are used to estimate the temperature per processor core, as a transistor level granularity would be unfeasible with respect to computational effort (i.e., simulation time).

While thermal simulations have the advantage of being able to perform thermal estimations without physical access to the system (e.g., during early design phases), they are very slow (hours of simulation per second of operation) and not accurate. Estimating activities and power on fine-grained granularities is an almost impossible task (layout-dependent parasitic resistances and capacitances, billions of transistors, billions of operations per second), while coarse-grained granularities provide just rough estimates of temperature due to the disregard of non-negligible details (e.g., parasitics) at these high abstraction levels (Fig. 8).

Thermal estimation at the system level with cores as the spatial granularity (from [13])

3.2 Thermal Measurement

If physical access to actual chips is an option, then thermal measurement is preferable. Observing the actual thermal profiles (temporally) and thermal maps (spatially) intrinsically includes all details (e.g., parasitics, billions of transistors, layout). Thus, a measurement can be more accurate than a simulation. Equally important, measurements operate in real time (i.e., a second measured is also a second operated), which makes them far faster than simulations.

The challenge of thermal measurements is the resolution. The sample frequency of the measurement setup determines the temporal resolution and this is typically in the order of milliseconds, while simulations can provide nanosecond granularity (e.g., individual transistor switching). However, since thermal capacitances prevent abrupt changes of temperature as a reaction to abrupt changes in generated heat, sample rates in milliseconds are sufficient. The spatial resolution is equally limited by thermal conductance, which limits the thermal gradient (i.e., difference in temperature between two neighboring components; see Figs. 7 and 9).

Measured spatial thermal map of a microprocessor (from [2])

The actual obstacle for thermal measurements is accessibility. A chip sits below a heat spreader and cooling, i.e., it is not directly observable. The manufacturers include thermal diodes at a handful of locations (e.g., 1 per core), which measure temperature in-situ, but these diodes are both inaccurate (due to their spatial separation from the actual logic) and spatially very coarse due to their limited number.

Our approach (Fig. 10) [2, 14] is to cool the chip through the PCB from the bottom side and measure the infrared radiation emitted from the chip directly. Other approaches cool the chip from the top with infrared-transparent oils, but this results in heat conductance limiting image fidelity and turbulence in the oil limiting image resolution (see Fig. 11). Our approach does not suffer from these issues and delivers crisp high-resolution infrared images from a camera capable of sampling an image every 20 ms with a spatial resolution of 50 μm. Thus a lucid thermal profile and thermal map are achieved including all implementation details of the chip, as actual hardware is measured.

High fidelity infrared thermal measurement setup (from [2])

Limited infrared image lucidity and fidelity due to oil cooling on top of the chip (from [2])

4 Modeling Impact of Temperature at System Level

Modeling the impact of temperature on a computing system is a challenging task. Estimation of the figures of merit of a computing system can only be performed on the system level, while the effects of temperature are on the physical level. Thus, many abstraction layers have to be crossed while maintaining accuracy and computational feasibility (i.e., keep simulation times at bay). In this section we discuss how we tackle this challenge, starting with the selection of figures of merit, followed by the modeling of the direct impact of temperature and finally aging as the indirect impact of temperature.

4.1 Figures of Merit

The main figures of merit at the system level with respect to reliability are probability of failure P fail and quality of service (e.g., PSNR in image processing). Probability of failure encompasses many failure types like timing violations, data corruption and catastrophic failure of a component (e.g., short-circuit). A full overview of abstraction of failures towards probability of failure is given in the RAP (Resilience Articulation Point) chapter of this book. Typically, vendors or end-users require the system designer to meet specific P fail criteria (e.g., P fail < 0.01). Quality of service describes how well a system provides its functionality if a specific amount of errors can be tolerated (e.g., if human perception is involved or for classification problems).

For probability of failure, the individual failure types have to be estimated and quantified without over-estimation due to common failures (as in our work [3], where a circuit with timing violations might also corrupt data). In that work the failure types such as timing violations, data corruption due to voltage noise, and data corruption due to strikes of high-energy particles are covered. These are the main causes of failure in digital logic circuits as a result of temperature changes (e.g., excluding mechanical stress from drops). The probability of failure is spatially and temporally distributed (see Figs. 12 and 13) and therefore has to be estimated for a given system lifetime (temporally) and for total system failure, i.e., the combined impact of the spatially distributed P fail (e.g., the sum of failures, or the probability that only 1 component out of 3 fails (modular redundancy)).

Spatial distribution of P fail across an SRAM array under two different applications (from [4])

Temporal distribution of P fail, which increases over time due to aging (from [3])
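How spatially distributed failure probabilities combine into a system-level P fail can be sketched as follows. The sketch assumes statistically independent component failures and a perfect voter, neither of which is stated in the text, and the function names are hypothetical.

```python
from math import prod


def p_fail_series(p_components):
    """System fails as soon as any single component fails.

    Assumes independent failures (an assumption of this sketch).
    For small probabilities this is close to the plain sum of failures.
    """
    return 1.0 - prod(1.0 - p for p in p_components)


def p_fail_tmr(p):
    """Triple modular redundancy with a perfect voter (assumed).

    The system tolerates one failing replica out of three and
    fails only if two or all three replicas fail.
    """
    return 3 * p**2 * (1 - p) + p**3


series = p_fail_series([0.01, 0.01])  # two components, each with P fail = 0.01
tmr = p_fail_tmr(0.01)                # three replicas, each with P fail = 0.01
```

For a per-component P fail of 0.01, the two-component series system fails with a probability of about 0.0199, whereas the triple-redundant arrangement fails with a probability of about 0.0003.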

Quality of service means observing the final output of the computing system and analyzing it. In our work [5, 7] we use the peak signal-to-noise ratio (PSNR) of an output image from an image processing circuit (discrete cosine transformation (DCT) in a JPEG encoder).
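As a reminder of how this figure of merit is computed, the following sketch evaluates the PSNR between an error-free reference image and the image produced by a (possibly faulty) circuit. It uses the standard PSNR definition for 8-bit pixel values; the function name and the flat pixel lists are illustrative choices and not taken from [5, 7].

```python
import math


def psnr(reference, test, max_value=255):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    if len(reference) != len(test) or not reference:
        raise ValueError("images must be non-empty and of equal size")
    # Mean squared error between reference and test pixels
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value**2 / mse)


# One of four pixels deviates by 10 grey levels
quality_db = psnr([100, 100, 100, 100], [100, 100, 100, 110])
```

A lower PSNR indicates that more, or more severe, errors propagated to the output image.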

4.2 Direct Impact of Temperature

To model the direct impact of temperature, we start at the lowest abstraction layers. Compact transistor models (e.g., BSIM) describe the current flow through the channel of a transistor and the impact of temperature on that current flow. These models are then used in circuit simulators to characterize standard cells (built from transistors) in terms of power consumption and propagation delay [5, 21]. Characterizing the standard cells (see Fig. 14) under different temperatures (e.g., from 25 to 125 °C) captures the impact of temperature on the delay and power consumption of these cells. This information is then gathered in a cell library (a single file containing all delay and power information for these cells) and then circuit and architecture level tools (e.g., static timing analysis tools, gate level simulators) can be used to check individual failure types (e.g., timing violations in static timing analysis) for computing systems (e.g., microprocessors) under various temperatures.

Flow of the characterization of standard cells and the subsequent use of degradation-aware cell libraries to obtain timing violations (from [12])
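The use of a temperature-characterized cell library for a timing check can be sketched in a heavily simplified form. The cell names, delay values, and the linear interpolation between characterized temperatures are all assumptions of this sketch. Real cell libraries also depend on load capacitance and signal slew, and real static timing analysis tools work on full netlists.

```python
def interpolate_delay(delay_by_temp, temperature):
    """Linearly interpolate a cell delay (ps) between characterized temperatures."""
    temps = sorted(delay_by_temp)
    # Clamp to the characterized range (a simplification of this sketch)
    if temperature <= temps[0]:
        return delay_by_temp[temps[0]]
    if temperature >= temps[-1]:
        return delay_by_temp[temps[-1]]
    for lo, hi in zip(temps, temps[1:]):
        if lo <= temperature <= hi:
            frac = (temperature - lo) / (hi - lo)
            return delay_by_temp[lo] + frac * (delay_by_temp[hi] - delay_by_temp[lo])


def violates_timing(path, library, temperature, clock_period_ps):
    """True if the summed cell delays along the path exceed the clock period."""
    path_delay = sum(interpolate_delay(library[cell], temperature) for cell in path)
    return path_delay > clock_period_ps


# Hypothetical library: cell -> {temperature in deg C: delay in ps}
LIBRARY = {
    "INV": {25: 10.0, 125: 13.0},
    "NAND2": {25: 20.0, 125: 26.0},
}
PATH = ["INV", "NAND2", "NAND2", "INV"]  # hypothetical critical path

ok_cold = violates_timing(PATH, LIBRARY, 25, clock_period_ps=70.0)
ok_warm = violates_timing(PATH, LIBRARY, 75, clock_period_ps=70.0)
fail_hot = violates_timing(PATH, LIBRARY, 125, clock_period_ps=70.0)
```

In this example the path takes 60 ps at 25 °C and 69 ps at 75 °C, both within the 70 ps clock period, but 78 ps at 125 °C, which is a timing violation.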

4.3 Aging as Indirect Impact of Temperature

Aging is stimulated by temperature (see Fig. 3) and therefore temperature has an indirect impact on reliability via aging-induced degradations. Aging lowers the resiliency of circuits and systems, thus decreasing reliability (an increase in P fail) as shown in Fig. 15.

Link between aging (increasing susceptibility) and P fail (from [6])

For this purpose our work [6, 18, 20] models aging, i.e., Bias Temperature Instability (BTI), Hot-Carrier Injection (HCI), Time-Dependent Dielectric Breakdown (TDDB) and the effects directly linked to aging like Random Telegraph Noise (RTN). All these phenomena are modeled with physics-based models [18, 20], which can accurately describe their temperature dependencies in the actual physical processes (typically capture and emission of carriers in the defects in the gate dielectric of transistors [4, 19]) of these phenomena.

Our work [6, 18] considers the interdependencies between these phenomena (see Fig. 16) and then estimates the degradation of the transistors. Then the transistor modelcards (transistor parameter lists) are adapted to incorporate the estimated degradations and use these degraded transistor parameters in standard cell characterization.

Interdependencies between the aging phenomena (from [6])

During cell characterization it is important to not abstract, as ignoring the interactions between transistors (counteracting each other when switching) results in underestimations of propagation delay [21] and ignoring the operating conditions (load capacitance, signal slew) of the cells [5, 20] misrepresents actual cell delay and power consumption.

After all necessary information is gathered, cells are characterized under different temperatures (like in the previous subsection) but not only with altered transistor currents (modeling the direct impact of temperature) but with additionally degraded transistor parameters (modeling the indirect impact of temperature via aging). Thus we combine both the direct and indirect impact into a single standard cell characterization to obtain delay and power information of standard cells under the joint impact of temperature and temperature-stimulated aging.

5 System-Level Management

To limit the peak temperature of a computing system and distribute the temperature evenly, we can employ system-level thermal management techniques. These techniques limit or distribute the amount of generated heat and thus ensure that the temperature stays below a given critical temperature. The two techniques presented in this section are task migration [13] and voltage scaling [17].

5.1 Voltage Scaling

Voltage scaling reduces the supply voltage of a chip or component (e.g., a processor core) to lower the power consumption and thus lower the generated heat. As a first-order approximation, lowering the voltage results in a quadratic reduction of the consumed (dynamic) power. Therefore lowering the voltage even slightly has a considerable impact on the generated heat and thus exhibited temperature.
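The quadratic dependence can be made concrete with the common first-order model of dynamic power, P = α · C · V² · f. The sketch below uses this textbook model. The function names and example values are illustrative, and leakage power is deliberately ignored.

```python
def dynamic_power(voltage, frequency_hz, switched_capacitance_f, activity=1.0):
    """First-order dynamic power in W: activity * C * V^2 * f."""
    return activity * switched_capacitance_f * voltage**2 * frequency_hz


def relative_power_saving(v_old, v_new):
    """Fraction of dynamic power saved at unchanged frequency, capacitance and activity."""
    return 1.0 - (v_new / v_old) ** 2


power_nominal = dynamic_power(1.0, 1e9, 1e-9)  # 1 V, 1 GHz, 1 nF switched capacitance
saving = relative_power_saving(1.0, 0.9)       # supply lowered by 10 %
```

Lowering the supply voltage by 10 % already saves about 19 % of the dynamic power in this model, even before any accompanying frequency reduction is taken into account.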

Voltage scaling has various side-effects. As the driving strength of transistors is also reduced, when the supply voltage is reduced, voltage scaling always prolongs circuit delays. Hence, voltage scaling has a performance overhead, which has to be minimized, while at the same time the critical temperature should not be exceeded.

Another side-effect is that voltage governs the electric field, which also stimulates aging [17]. When voltage increases, aging-induced degradation increases, and when voltage is reduced, aging recovers (decreasing degradation). In our work in [17] we showed that voltage changes within a microsecond might induce transient timing violations. During such ultra-fast voltage changes, the low resiliency of the circuit (at the lower supply voltage) meets the high degradation of aging (resulting from operation at the high voltage). This combination of high degradation with low resiliency leads to timing violations if not accounted for. Continuing operation at the lower voltage recovers aging, thus resolving the issue. However, during the brief moment of high degradation, violations can occur.

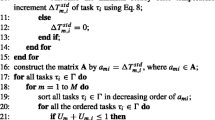

5.2 Task Migration

Task migration is the process of moving applications from one processor core to another. This allows a hot processing core to cool down, while a colder processor core takes over the computation of the task. Therefore, temperature is more equally distributed across a multi- or many-core computing system.

A flow of our task migration approach is shown in Fig. 17. Sensors in each core measure the current temperature (typically thermal diodes). As soon as the temperature approaches the critical value, a task is migrated to a different core. The central challenge lies in the question “To which core is the task migrated?” If the core to which the task is migrated is only barely below the critical temperature, then the task soon has to be migrated again, which is costly since each migration stalls the processor core for many cycles (caches have to be refilled, data has to be fetched, etc.).

Flow of our task migration approach to bound temperature in a many-core computing system (from [13])

Therefore, our work in [13] predicts the thermal profile and makes decisions based on these predictions to optimize the task migration with as few migrations as possible while still ensuring that the critical temperature is not exceeded.

Another objective which has to be managed by our thermal management technique is to minimize thermal cycling. Each time a processor core cools down and heats up again it experiences a thermal cycle. Materials shrink and expand under temperature and thus thermal cycles put stress on bonding wires as well as soldering joints between the chip and the PCB or even interconnects within the chip (when it is partially cooled/heated).

Therefore, our approach is a multi-objective optimization strategy, which minimizes thermal cycles per core, limits temperature below the critical temperature and minimizes the number of task migrations (reducing performance overheads).
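The flavor of such a decision can be conveyed with a small sketch that picks a migration target from predicted temperatures. This is not the algorithm of [13]. The cost function, the safety margin, the weight, and all names are assumptions made for illustration only.

```python
def choose_target(cores, source, t_critical, margin=5.0, cycle_weight=0.5):
    """Pick a migration target core, or None if no core is suitable.

    cores maps core id -> (current temperature, predicted peak temperature
    if the task runs there). Cores whose predicted peak comes within
    `margin` of the critical temperature are rejected, since they would
    soon trigger another costly migration. Among the remaining cores, a
    weighted cost of predicted peak and heating swing (a crude proxy for
    the induced thermal cycle) is minimized.
    """
    best, best_cost = None, None
    for core, (t_now, t_peak) in cores.items():
        if core == source or t_peak > t_critical - margin:
            continue
        cost = t_peak + cycle_weight * (t_peak - t_now)
        if best_cost is None or cost < best_cost:
            best, best_cost = core, cost
    return best


# Hypothetical temperatures in deg C: core -> (current, predicted peak with task)
cores = {0: (91.0, 95.0), 1: (60.0, 84.0), 2: (70.0, 76.0), 3: (50.0, 80.0)}
target = choose_target(cores, source=0, t_critical=90.0)
```

In this example core 2 is chosen. Core 3 is currently the coolest, but it would heat up by 30 degrees, while core 2 reaches a lower peak with a much smaller swing.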

6 Architecture Support

To support the system-level thermal management in the migration of tasks that communicate with each other, the underlying hardware architecture provides specific assistance functions in the communication infrastructure. This encompasses a virtualization layer in the network on chip (NoC) and the application of protection switching mechanisms for a fast switch-over of communication channels. Based on these features, an additional redundancy mechanism called adaptive modular redundancy (AMR) is introduced, which allows tasks to run temporarily with a second or third replica to either detect or correct errors.

6.1 NoC Virtualization

To support the system management layer in the transparent migration of tasks between processor cores within the MPSoC an interconnect virtualization overlay is introduced, which decouples physical and logical endpoints of communication channels. Any message passing communication among sub-tasks of an application or with the I/O tile is then done via logical communication endpoints. That is, a sending task transmits its data from the logical endpoint on the source side of the channel via the NoC to the logical endpoint at the destination side where the receiving task is executed. Therefore, the application only communicates on the logical layer and does not have to care about the actual physical location of sender and receiver tasks within the MPSoC. This property allows dynamic remapping of a logical to a different physical endpoint within the NoC and thus eases the transparent migration of tasks by the system management. This is shown in Fig. 18, where a communication channel from the MAC interface to task T1 can be transparently switched over to a different receiving compute tile/processor core. Depending on the migration target of T1 the incoming data will be sent to the tile executing the new T1’ [8]. In Sect. 6.2 specific protocols are described to reduce downtime of tasks during migration.

Communication virtualization layer (from [8])

To implement this helper function, both a virtualized NoC adapter (VNA) and a virtualized network interface controller (VNIC) are introduced that can be reconfigured in terms of logical communication endpoints when a task migration has to be performed [15].

VNA and VNIC target a compromise between high throughput and support for mixed-criticality application scenarios with high priority and best effort communication channels [11]. Both are based on a set of communicating finite state machines (FSMs) dedicated to specific sub-functions to cope with these requirements, as can be seen in Fig. 19. The partitioning into different FSMs enables the parallel processing of concurrent transactions in a pipelined manner.
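The decoupling of logical and physical endpoints described in this subsection can be sketched in software as a remappable lookup table. The class and method names are hypothetical. The actual VNA and VNIC implement this functionality in hardware with communicating FSMs, which the sketch does not attempt to model.

```python
class VirtualEndpointTable:
    """Maps logical communication endpoints to physical tiles (illustrative only)."""

    def __init__(self):
        self._physical = {}

    def bind(self, logical_id, tile):
        """Create the initial logical-to-physical mapping."""
        self._physical[logical_id] = tile

    def remap(self, logical_id, new_tile):
        """Reconfigure an existing mapping, e.g., when a task is migrated."""
        if logical_id not in self._physical:
            raise KeyError(logical_id)
        self._physical[logical_id] = new_tile

    def route(self, logical_id, payload):
        """Resolve the logical endpoint; the sender never sees the physical tile."""
        return (self._physical[logical_id], payload)


table = VirtualEndpointTable()
table.bind("T1", tile=3)
before = table.route("T1", b"frame")  # delivered to tile 3
table.remap("T1", new_tile=5)         # T1 has been migrated to tile 5
after = table.route("T1", b"frame")   # same logical send, now delivered to tile 5
```

The sending side issues the same logical send before and after the migration. Only the table entry changes, which is what makes the migration transparent to the application.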

6.2 Advanced Communication Reconfiguration Using Protection Switching

The common, straightforward method for task relocation is Stop and Resume: first, the incoming channels of the task to be migrated are suspended, then the channel state together with the task state is transferred, before the channels and the task are resumed at the destination. The key disadvantage is a long downtime. Therefore, an advanced communication reconfiguration using protection switching in NoCs to support reliable task migration is proposed [16, 22, 23], which is inspired by protection switching mechanisms from wide area transport networks. Two alternatives to migrate communication relations of a relocated task are dualcast and forwarding as shown in Fig. 20 for a migration of task B to a different location executing B’. The procedure is to first establish an additional channel to the compute tile where the task is migrated to (location of B’). This can be done either from A, being the source of the channel (dualcast, Fig. 20a), or from B, the original location of the migrated task (forwarding, Fig. 20b). Then it has to be ensured that the buffers at the source and destination tiles of the migration are consistent. Finally, a seamless switch-over (task and channels) takes place from the original source to the destination. This is intended to avoid time-costly buffer copy and channel suspend/resume operations, with a focus on low-latency and reliable adaptations in the communication layer.

The different variants have been evaluated for an example migration scenario as depicted in Fig. 20c: In a processing chain consisting of 7 tasks in total, the FORK task, which receives data from a generator task and sends data to three parallel processing tasks, is migrated to tile number 0. Figure 21 shows the latencies of the depicted execution chain during the migration, which starts at 2.5 ⋅ 10^6 cycles assuming FORK is stateless. The results have been measured using an RTL implementation of the MPSoC [16]. In Fig. 21a the situation is captured for a pure software-based implementation of the migration, whereas Fig. 21b shows the situation when all functions related to handling the migration are offloaded from the processor core. In this case task execution is not inhibited by any migration overhead, which corresponds to the situation when the VNA performs the associated functionality in hardware.

Results for task migration scenarios (from [16]). (a) Relocation without offload. (b) Relocation with offload

As can be seen from Fig. 21b, offloading migration protocols helps to reduce application processing latency significantly for all three variants. The dualcast and forwarding variants enable a nearly unnoticeable migration of the tasks. However, the investigations in [16] show that when migrating tasks with state, the handling of the task migration itself becomes the dominant factor in the migration delays and outweighs the benefits of the advanced switching techniques.
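The idea behind the dualcast variant for a stateless task can be illustrated with a toy model. The use of sequence numbers to keep the buffers at B and B’ consistent is an assumption of this sketch and is not taken from [16, 22, 23], and the function name is hypothetical.

```python
def dualcast_migrate(messages, dualcast_start, handover):
    """Toy model of a dualcast switch-over from task B to its new instance B'.

    messages       : messages sent by source A, in order
    dualcast_start : sequence number from which A also sends to B'
    handover       : first sequence number processed by B' instead of B
    Returns (messages processed by B, messages processed by B').
    """
    if not 0 <= dualcast_start <= handover <= len(messages):
        raise ValueError("need 0 <= dualcast_start <= handover <= len(messages)")
    processed_by_b, buffer_b_prime = [], []
    for seq, msg in enumerate(messages):
        if seq >= dualcast_start:
            buffer_b_prime.append((seq, msg))  # additional channel A -> B'
        if seq < handover:
            processed_by_b.append(msg)         # B is still the active instance
    # At switch-over B' drops everything B has already handled, so no
    # message is lost or processed twice and no buffer has to be copied.
    processed_by_b_prime = [msg for seq, msg in buffer_b_prime if seq >= handover]
    return processed_by_b, processed_by_b_prime


by_b, by_b_prime = dualcast_migrate(list("abcdef"), dualcast_start=2, handover=4)
```

In this example B handles messages a to d and B’ handles e and f. The channel is never suspended, in contrast to Stop and Resume.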

6.3 Adaptive Modular Redundancy (AMR)

Adaptive modular redundancy (AMR) enables the dynamic establishment of a redundancy mechanism (dual or triple modular redundancy, DMR/TMR) at run-time for tasks whose degree of criticality may vary over time, or when the operating conditions of the platform have deteriorated so that the probability of errors is too high. DMR is used if re-execution is affordable; otherwise TMR can be applied, e.g., in case real-time requirements could not be met. AMR functionality builds upon the aforementioned services of the NoC. To establish DMR, the dualcast mechanism is used and the newly established task acts as a replica instead of taking over the processing as in the case of migration. (For TMR, two replicas are established and triple-cast is applied.) Based on the running task replicas, the standard mechanisms for error checking/correction and, if required, task re-execution are applied.
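The difference between the two redundancy levels can be sketched as follows: DMR can only detect a mismatch and then requires re-execution, whereas TMR can mask a single faulty replica by majority voting. The function names are hypothetical, and the sketch assumes that replica results can be compared for equality.

```python
from collections import Counter


def dmr_check(result_a, result_b):
    """Dual modular redundancy: detect an error, but do not correct it.

    Returns (result, needs_reexecution).
    """
    if result_a == result_b:
        return result_a, False
    return None, True  # mismatch detected, the task has to be re-executed


def tmr_vote(results):
    """Triple modular redundancy: majority vote over three replica results.

    Returns the majority value, or None if all three results differ.
    Results must be hashable.
    """
    value, count = Counter(results).most_common(1)[0]
    return value if count >= 2 else None


dmr_ok = dmr_check(7, 7)          # both replicas agree
dmr_error = dmr_check(7, 8)       # error detected, re-execution required
tmr_masked = tmr_vote([7, 8, 7])  # single faulty replica is outvoted
```

The extra replica in TMR avoids the re-execution that DMR needs after a mismatch, which matters when the re-execution time is not available.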

The decision to execute one or two additional replicas of tasks is possibly taken as a consequence of an already impaired system reliability. On the one hand this helps to make these tasks safer. On the other hand it increases system workload and the associated thermal load, which in turn may further aggravate the dependability issues. Therefore, this measure should be accompanied by an appropriate reliability-aware task mapping including a graceful degradation for low-critical tasks, as investigated in [1]. There, the applied scheme is the following: After one of the cores exceeds a first temperature threshold T1, a graceful degradation phase is entered. This means that tasks of high criticality are preferably assigned to cores in an exclusive manner and low-critical tasks are migrated to a “graceful degradation region” of the system. Thus, potential errors occurring in this region would involve low-critical tasks only. In a next step, if peak temperature is higher than a second threshold T2, low-critical tasks are also removed from the graceful degradation region (NCT ejection) and are only resumed if the thermal profile allows for it.

In [1], this approach has been investigated by simulation using the Sniper simulator, McPAT, and Hotspot for a 16-core Intel Xeon X5550 running SPLASH-2 and PARSEC benchmarks. Tasks have been classified either as uncritical (NCT) or as high-critical (HCT) with permanently redundant execution. As a third class, potentially critical tasks (PCT) are considered. Such tasks are dynamically replicated if the temperature of the cores they run on exceeds T1. In the experiment, financial analysis and computer vision applications from the benchmark sets are treated as high-critical tasks (HCT). The FFT kernel, which is used in a wide range of applications with different criticality levels, is assumed to be PCT.
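The three task classes differ only in when redundant execution is active. A minimal sketch of that rule follows; the identifiers `TaskClass`, `EXPERIMENT_CLASSES`, and `is_replicated` are hypothetical, and only the class assignments named in the text are listed.

```python
from enum import Enum


class TaskClass(Enum):
    NCT = "uncritical"
    PCT = "potentially critical"
    HCT = "high-critical"


# Assignments stated in the text; other applications are not listed here.
EXPERIMENT_CLASSES = {
    "financial analysis": TaskClass.HCT,
    "computer vision": TaskClass.HCT,
    "FFT": TaskClass.PCT,
}


def is_replicated(task_class, core_temp_k, t1_k):
    """True if the task currently runs with redundant replicas."""
    if task_class is TaskClass.HCT:
        return True                 # permanently redundant execution
    if task_class is TaskClass.PCT:
        return core_temp_k > t1_k   # replicated only above T1
    return False                    # NCT is never replicated
```

This rule also explains why a PCT can still fail: an error that hits it while its core is below T1 meets an unprotected task.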

In a first experiment the thermal profile has been evaluated for normal operation and the two escalating phases. As can be seen from Fig. 22 the initial thermal hotspots are relaxed at the expense of new ones in the graceful degradation region. In turn, when moving to the NCT ejection phase the chips significantly cool down.

Fig. 22 Thermal profile during different phases (from [1]). The maximum system temperatures are 363 K, 378 K, and 349 K, respectively. (a) Initial scheduling. (b) Graceful degradation. (c) NCT ejection

In a further investigation, 10,000 bit-flips have been injected randomly into cache memories independent of the criticality of the tasks running on the cores. This has been done both for a system using the mechanisms described above and as a reference for a fully unprotected system.

Figure 23 shows the resulting number of propagated errors for the different task categories. In the protected system, all errors injected into cores running HCTs are corrected, as expected. For PCTs, only those errors manifest as failures that were injected while the task was unprotected because the temperature of its processor core was still too low to trigger replication. In general, not all injected errors actually lead to a failure, due to masking effects in the architecture or in the memory access pattern of the application. This can be seen for the unprotected system, where the overall sum of manifested failures is less than the number of injected errors.

Fig. 23 Number of propagated errors per task criticality (from [1])

7 Cross-Layer

From Physics to System Level

(Fig. 24) In our work, we start from the physics, where degradation effects like aging and temperature occur. Then we analyze and investigate how these degradations alter the key transistor parameters such as threshold voltage (V_th), carrier mobility (μ), sub-threshold slope (SS), and drain current (I_D). Next, we study how such a drift in the electrical characteristics of the transistor impacts the resilience of circuits to errors. In practice, the susceptibility to noise effects as well as to timing violations increases. Finally, we develop models for error probability that describe the ultimate impact of these degradations at the system level.

Interaction between the System Level and the Lower Abstraction Levels

(Fig. 24) Running workloads at the system level induce different stress patterns for transistors and, more importantly, generate different amounts of heat over time. Temperature is one of the key stimuli when it comes to reliability degradations. An increase in temperature accelerates the underlying aging mechanisms in transistors and increases the susceptibility of circuits to noise and timing violations. Such an increase in susceptibility manifests itself as failures at the system level due to timing violations and data corruption. Therefore, different running workloads result in different probabilities of error that can later be observed at the system level.

Key Role of Management Layer

The developed probability-of-error models help the management layer to make proper decisions. The management layer migrates running tasks/workloads from a core that starts to show a relatively higher probability of error to another “less-aged” core. In addition, when it observes that the probability of error of a core has risen above an acceptable level, the management layer switches this core from a high-performance mode (high voltage and high frequency, leading to higher core temperatures) to a low-power mode (low voltage and low frequency, leading to lower core temperatures).

Scenarios of Cross-Layer Management and existing Interdependencies

In the following we demonstrate some examples of existing interdependencies between the management layer and the lower abstraction layers.

Scenario-1: Physical and System Layer

The temperature of a core increases and therefore the error probability starts to gradually rise. If a given threshold is exceeded and the core has performance margins, the first management decision would be to decrease voltage and frequency and thus limit power dissipation and in consequence counteract the temperature increase of the core.

Scenario-2: Physical, Architecture, and System Layer

If there is no headroom on the core, the system management layer can decide to migrate tasks away from that core, especially if they have high reliability requirements. Preferred targets for migration are colder, less-aged cores with a low probability of errors. Such task migrations balance the temperature within the system, i.e., relieved cores can cool down, while target cores get warmer. Further, on cores that can cool down again, some of the deleterious effects start to heal, leading to a reduction in the probability of errors. In general, by continuously balancing load, and as a result also temperature, among cores, the management layer ensures that the error probabilities of the cores become similar, thus avoiding the situation that one core fails earlier than the others. During task migrations, the described support functions in the communication infrastructure (circuit layer) can be applied.
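Selecting a migration target along these lines can be sketched as a simple filter and ranking. This is an illustrative assumption, not the selection procedure of the chapter: the `Core` record, its fields, and the tie-breaking order (error probability first, temperature second) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Core:
    core_id: int
    temp_k: float        # measured or estimated core temperature
    error_prob: float    # estimate from the error probability model
    has_capacity: bool   # whether the core can accept another task


def pick_migration_target(source: Core, cores: Sequence[Core]) -> Optional[Core]:
    """Prefer a colder core with lower error probability than the source."""
    candidates = [
        c for c in cores
        if c.core_id != source.core_id
        and c.has_capacity
        and c.error_prob < source.error_prob
        and c.temp_k < source.temp_k
    ]
    if not candidates:
        return None  # no suitable target: another countermeasure is needed
    return min(candidates, key=lambda c: (c.error_prob, c.temp_k))
```

Returning `None` when no colder, more reliable core with spare capacity exists makes the hand-over to a different countermeasure explicit.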

Scenario-3: Physical, Architecture, and System Layer

If there is no possibility to move critical tasks to a cold core with low error probability, the management layer can employ adaptive modular redundancy (AMR) and replicate such tasks. This makes it possible to counter the more critical operating conditions and to increase reliability, either by error detection and task re-execution or by directly correcting errors when real-time requirements would otherwise not be met. However, in these cases the replica tasks increase the overall workload of the system and thus also contribute to thermal stress. Dropping tasks of low criticality is then a system-level measure to counter this effect.
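Taken together, the three scenarios form an escalation ladder. The sketch below is a simplified reading under stated assumptions: the function `choose_action`, its boolean inputs, and the single error probability threshold are hypothetical and stand in for whatever criteria an actual management layer would use.

```python
from enum import Enum


class Action(Enum):
    NONE = "keep current operating point"
    LOWER_VF = "decrease voltage and frequency"
    MIGRATE = "migrate tasks to a colder, less-aged core"
    REPLICATE = "apply AMR and drop low-critical tasks if needed"


def choose_action(error_prob, threshold, has_performance_margin, target_available):
    """Pick the first applicable countermeasure for one core."""
    if error_prob <= threshold:
        return Action.NONE
    if has_performance_margin:
        return Action.LOWER_VF   # Scenario 1: counteract the temperature rise
    if target_available:
        return Action.MIGRATE    # Scenario 2: balance load and temperature
    return Action.REPLICATE      # Scenario 3: protect critical tasks in place
```

The ordering reflects increasing cost: lowering voltage and frequency affects only one core, migration shifts load to other cores, and replication adds workload to the whole system.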

In general, the described scenarios always form control loops starting on physical level covering temperature sensors and estimates of error probabilities and aging. They go either up to the circuit level or to the architecture/system level, where countermeasures have to be taken to prevent the system from operating under unreliable working conditions. Therefore, the mechanisms on the different abstraction levels as shown in the previous sections interact with each other and can be composed to enhance reliability in a cross-layer manner.

Further use cases tackling probabilistic fault and error modeling as well as space- and time-dependent error abstraction across different levels of the hardware/software stack of embedded systems IC components are also the subject of the chapter “RAP (Resilience Articulation Point) Model.”

8 Conclusion

Reliability modeling and optimization is one of the key challenges in advanced technology. With technology scaling, the susceptibility of transistors to various kinds of degradation effects induced by aging increases. Temperature is the main stimulus behind aging, and therefore aging can be controlled and mitigated through proper thermal management. Additionally, temperature itself also has a direct impact on the reliability of any circuit, manifesting itself as an increase in the probability of error. In order to sustain reliability, the system level must become aware of the degradation effects occurring at the physical level and of how they propagate through the higher abstraction levels all the way up to the system level. Our cross-layer approach provides the system level with accurate estimations of the probability of errors, which allows the management layer to make proper decisions to optimize reliability. We demonstrated the existing interdependencies between the system level and lower abstraction levels and the necessity of taking them into account via cross-layer thermal management techniques.

References

Alouani, I., Wild, T., Herkersdorf, A., Niar, S.: Adaptive reliability for fault tolerant multicore systems. In: 2017 Euromicro Conference on Digital System Design (DSD), pp. 538–542 (2017). https://doi.org/10.1109/DSD.2017.78

Amrouch, H., Henkel, J.: Lucid infrared thermography of thermally-constrained processors. In: 2015 IEEE/ACM International Symposium on Low Power Electronics and Design (ISLPED), pp. 347–352. IEEE, Piscataway (2015)

Amrouch, H., van Santen, V.M., Ebi, T., Wenzel, V., Henkel, J.: Towards interdependencies of aging mechanisms. In: Proceedings of the 2014 IEEE/ACM International Conference on Computer-Aided Design, pp. 478–485. IEEE, Piscataway (2014)

Amrouch, H., Martin-Martinez, J., van Santen, V.M., Moras, M., Rodriguez, R., Nafria, M., Henkel, J.: Connecting the physical and application level towards grasping aging effects. In: 2015 IEEE International Reliability Physics Symposium, p. 3D-1. IEEE, Piscataway (2015)

Amrouch, H., Khaleghi, B., Gerstlauer, A., Henkel, J.: Towards aging-induced approximations. In: 2017 54th ACM/EDAC/IEEE Design Automation Conference (DAC), pp. 1–6. IEEE, Piscataway (2017)

Amrouch, H., van Santen, V.M., Henkel, J.: Interdependencies of degradation effects and their impact on computing. IEEE Des. Test 34(3), 59–67 (2017)

Boroujerdian, B., Amrouch, H., Henkel, J., Gerstlauer, A.: Trading off temperature guardbands via adaptive approximations. In: 2018 IEEE 36th International Conference on Computer Design (ICCD), pp. 202–209. IEEE, Piscataway (2018)

Ebi, T., Rauchfuss, H., Herkersdorf, A., Henkel, J.: Agent-based thermal management using real-time i/o communication relocation for 3d many-cores. In: Ayala, J.L., García-Cámara, B., Prieto, M., Ruggiero, M., Sicard, G. (eds.) Integrated Circuit and System Design. Power and Timing Modeling, Optimization, and Simulation, pp. 112–121. Springer, Berlin (2011)

Gupta, P., Agarwal, Y., Dolecek, L., Dutt, N., Gupta, R.K., Kumar, R., Mitra, S., Nicolau, A., Rosing, T.S., Srivastava, M.B., et al.: Underdesigned and opportunistic computing in presence of hardware variability. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 32(1), 8–23 (2012)

Henkel, J., Bauer, L., Becker, J., Bringmann, O., Brinkschulte, U., Chakraborty, S., Engel, M., Ernst, R., Härtig, H., Hedrich, L., et al.: Design and architectures for dependable embedded systems. In: Proceedings of the Seventh IEEE/ACM/IFIP International Conference on Hardware/Software Codesign and System Synthesis, pp. 69–78. ACM, New York (2011)

Herkersdorf, A., Michel, H.U., Rauchfuss, H., Wild, T.: Multicore enablement for automotive cyber physical systems. Inf. Technol. 54, 280–287 (2012). https://doi.org/10.1524/itit.2012.0690

Amrouch, H., Henkel, J.: Evaluating and mitigating degradation effects in multimedia circuits. In: Proceedings of the 15th IEEE/ACM Symposium on Embedded Systems for Real-Time Multimedia (ESTIMedia ’17), pp. 61–67. Association for Computing Machinery, New York (2017). https://doi.org/10.1145/3139315.3143527

Khdr, H., Ebi, T., Shafique, M., Amrouch, H.: mDTM: multi-objective dynamic thermal management for on-chip systems. In: 2014 Design, Automation & Test in Europe Conference & Exhibition (DATE), pp. 1–6. IEEE, Piscataway (2014)

Prakash, A., Amrouch, H., Shafique, M., Mitra, T., Henkel, J.: Improving mobile gaming performance through cooperative CPU-GPU thermal management. In: Proceedings of the 53rd Annual Design Automation Conference, p. 47. ACM, New York (2016)

Rauchfuss, H., Wild, T., Herkersdorf, A.: Enhanced reliability in tiled manycore architectures through transparent task relocation. In: ARCS 2012, pp. 1–6 (2012)

Rösch, S., Rauchfuss, H., Wallentowitz, S., Wild, T., Herkersdorf, A.: MPSoC application resilience by hardware-assisted communication virtualization. Microelectron. Reliab. 61, 11–16 (2016). https://doi.org/10.1016/j.microrel.2016.02.009. http://www.sciencedirect.com/science/article/pii/S0026271416300282. SI: ICMAT 2015

van Santen, V.M., Amrouch, H., Parihar, N., Mahapatra, S., Henkel, J.: Aging-aware voltage scaling. In: 2016 Design, Automation & Test in Europe Conference & Exhibition (DATE), pp. 576–581. IEEE, Piscataway (2016)

van Santen, V.M., Martin-Martinez, J., Amrouch, H., Nafria, M.M., Henkel, J.: Reliability in super-and near-threshold computing: a unified model of RTN, BTI, and PV. IEEE Trans. Circuits Syst. I Regul. Pap. 65(1), 293–306 (2018)

van Santen, V.M., Diaz-Fortuny, J., Amrouch, H., Martin-Martinez, J., Rodriguez, R., Castro-Lopez, R., Roca, E., Fernandez, F.V., Henkel, J., Nafria, M.: Weighted time lag plot defect parameter extraction and GPU-based BTI modeling for BTI variability. In: 2018 IEEE International Reliability Physics Symposium (IRPS), pp. P-CR.6-1–P-CR.6-6 (2018). https://doi.org/10.1109/IRPS.2018.8353659

van Santen, V.M., Amrouch, H., Henkel, J.: Modeling and mitigating time-dependent variability from the physical level to the circuit level. IEEE Trans. Circuits Syst. I Regul. Pap. 1–14 (2019). https://doi.org/10.1109/TCSI.2019.2898006

van Santen, V.M., Amrouch, H., Henkel, J.: New worst-case timing for standard cells under aging effects. IEEE Trans. Device Mater. Reliab. 19(1), 149–158 (2019). https://doi.org/10.1109/TDMR.2019.2893017

Wallentowitz, S., Rösch, S., Wild, T., Herkersdorf, A., Wenzel, V., Henkel, J.: Dependable task and communication migration in tiled manycore system-on-chip. In: Proceedings of the 2014 Forum on Specification and Design Languages (FDL), vol. 978-2-9530504-9-3, pp. 1–8 (2014). https://doi.org/10.1109/FDL.2014.7119361

Wallentowitz, S., Tempelmeier, M., Wild, T., Herkersdorf, A.: Network-on-chip protection switching techniques for dependable task migration on an open source MPSoC platform.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2021 The Author(s)

About this chapter

Cite this chapter

van Santen, V.M., Amrouch, H., Wild, T., Henkel, J., Herkersdorf, A. (2021). Thermal Management and Communication Virtualization for Reliability Optimization in MPSoCs. In: Henkel, J., Dutt, N. (eds) Dependable Embedded Systems . Embedded Systems. Springer, Cham. https://doi.org/10.1007/978-3-030-52017-5_8

DOI: https://doi.org/10.1007/978-3-030-52017-5_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-52016-8

Online ISBN: 978-3-030-52017-5

eBook Packages: Engineering, Engineering (R0)