Abstract

To systematically plan, specify, and execute system-level tests for smart grids in a cyber-physical and multi-domain manner, the ERIGrid holistic testing approach is introduced. The corresponding test description and templates are also discussed.

1 Introduction

System-level validation of smart grid solutions can be a complex effort. A typical smart grid solution, such as a centralized demand response control system for a distribution grid, encompasses multiple disciplines (market, ICT, automation, infrastructure) and physical infrastructures (e.g. electricity, communication networks). Interactions among automation systems, enabling ICT, and electricity infrastructure are inherent to such solutions and make testing the integrated system a necessity.

As motivated in Sect. 1.2.2, appropriate testing for such Cyber-physical Energy Systems (CPES) is challenging as it requires availability of multi-disciplinary engineering expertise, as well as suitable tool integration regarding the testing platforms [17]. A re-organization of testing practices in research and industry is ongoing to harvest the benefits of the advanced integration of system components using suitable testing tool chains and workflows.

In this chapter, we aim to support this re-organisation of testing practice, by offering answers to the following questions:

- i. How can system validation efforts be framed as experiments in order to account for complex system requirements and functions, the multi-disciplinary experts, and the wide variety of employed experimental platforms?
- ii. What information must be recorded in an experiment description to fully document the purpose, structure and execution of experiments, for both coordinated planning and reporting purposes?

This chapter offers a viewpoint for the harmonization of system validation efforts by focusing on the problem of test formulation. To address question i., the problem of system testing is first formulated, which leads to a generalized procedural pattern, called the ‘holistic testing procedure’, introduced in Sect. 2. Here ‘holistic’ refers to the procedure’s generality, as it should, in principle, be applicable to simple as well as very complex testing problems. To address question ii., a test description method based on this procedure is introduced in Sect. 3.

1.1 Testing Procedure and Test Description

In the smart energy domain, significant attention has been given to the abstract and structured description of system solution requirements, e.g. with use cases and SGAM [1]. However, abstract requirements specification is insufficient to derive test descriptions directly. A “test specification gap” can be identified between those requirements and the structured preparation of validation efforts. This gap widens with the increasing complexity of the cyber-physical structure of solutions, as well as with advancements in test platform technology.

1.2 Holistic Testing for System Validation

A clear and formalized test description can improve the reusability and reproducibility of tests. It can facilitate both the preparation and execution of tests in spite of increasing complexity due to multi-domain systems and advanced experimental platforms. A structured approach also helps the identification of relevant test parameters and targets involving multiple domains. A speedup is also needed in R&D activities that require component characterization and system validation experiments.

To frame the problem of dealing with workflows and tool chain integration for testing, we define:

Holistic testing is the process and methodology for the evaluation of a concrete function, system or component (object under investigation) within its relevant operational context (system under test), as required by the test objectives.

Here, Object under Investigation (OuI) is the component (hardware or software) that is subject to the test objective(s). Note that in system validation, there can be a number \(n\ge 2\) of OuIs. The concept of OuI replaces related concepts used in practice, such as “device under test” (commonly abbreviated DUT), or “equipment under test”.

The System under Test (SuT) refers to the system configuration that includes all relevant behaviors and interactions that are required to examine the test objectives. The OuI is thus a subset of the SuT, and the remaining aspects of the SuT are simulated, emulated, or realised by the testing platform.

The holistic testing concept thus provides a scaffold for the formulation of procedures, description methods and tool chains for testing:

- procedures take a user in steps through a testing campaign, sequencing tasks and outcomes appropriately;
- description methods ask the right questions and structure the outcomes in a harmonized way and with a common interpretation;
- tool chains support and integrate the workflows and descriptions with suitable test platforms.

The approach to test description presented here is based on three basic aspects of testing: (i) the object and purpose of the test (i.e. what is tested and why), (ii) the test elements and test protocol, and (iii) the physical or virtual facility (i.e. test platform) employed to realize the experiment.

In this vision, the scoping and design of validation tests and experiments is facilitated by offering a better formal framing and a procedural guideline.

2 Toward Procedures for System Validation

The need for system validation has been previously expressed, and holistic testing has been formulated as a concept to organise procedures, tools and descriptions. In this section the procedural view on the system validation problem is introduced by first discussing the role of testing in the development context, introducing a ‘holistic’ procedural view on testing, and finally presenting a specific procedure for integrating development and testing with different test platforms.

2.1 Purpose of Testing in the Development Process

Experiments play a role in the early stages of a technical design as well as in the final stages, where technical solutions are evaluated against technical specifications and system-level requirements. Systems design processes in industry follow the general scheme of the V-model [9], as mentioned in Sect. 2.1. This V-model can be interpreted classically as a sequential waterfall process, but it can also be used for modern concurrent engineering as a conceptual hierarchy. In both readings, the V-model establishes a strong coupling of requirements specification and testing: at every stage of development, experiments are based on (a) requirements identified earlier in the design process, (b) an assembly of components validated in a previous stage of testing, and (c) the appropriate type of test platform.

The conceptual difference between design and testing is easily obscured at early development stages. In (simulation-based) design, the focus is on structural and parametric changes to a (simulation) model, which lead to an incremental adaptation of a system design. In contrast, for testing, the system is fixed, and an experiment is set up to quantify a property or to validate a hypothesis (e.g., function, performance) about the present system design. As the system grows in scale and complexity, the formulation of a test hypothesis also becomes non-trivial: on the one hand, it is driven by more complex system requirements; on the other hand, larger and more complex experimental setups are required. A holistic test description would support this re-framing from engineering design to test design, helping to narrow down the test purpose and test system requirements.

2.2 The Need for System Testing and Its Support

Section 2 outlined basic needs for system validation, and highlighted some of the existing approaches. The need for testing an integrated solution has been motivated in Sect. 2.2. In spite of different test realisations, there is common agreement that ‘System testing’ refers to testing at higher levels of system integration. With reference to Fig. 1, this notion of system testing thus refers mainly to the testing variants ‘functional validation’ and ‘system validation’.

At the more basic levels, for components and sub-systems, requirements and test specifications are likely made by the developers themselves. For the higher levels, a long time typically passes between the initial formulation of functional and system requirements and the developed solution, increasing the gap between requirements and test execution.

Further, as outlined above, a wide variety of test platforms for multi-domain system testing are becoming available. Today, these test platforms are complex enough in their own right that they can occupy the user's attention more than the object under investigation does.

2.3 A Generic Procedure for System Validation

Procedural support can be useful when adopting a complex test platform to validate a complex integrated control solution.

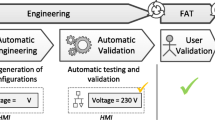

A holistic view on testing procedures is illustrated in Fig. 2. At the outset, this procedure template connects the system definition and use cases with a test objective in a test case. Once this link is fully established, the test specification captures fully the requirements for an experimental setup. The test platform can now be identified and suitably configured, even as a complex one that connects several research infrastructures (here: RI a and RI b). The experiment execution in the infrastructure and subsequent result evaluation may now lead to judging the test as successful, returning information with reference to the specifications and test case; or it may lead to a re-iteration of the specifications.
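The iteration between specification, experiment and evaluation described above can be sketched as a small control loop. This is only an illustration under stated assumptions, not part of the ERIGrid procedure itself: the callables `specify`, `execute` and `evaluate`, the `max_iterations` limit and the dictionary-based data are hypothetical names introduced for this example.

```python
from typing import Callable, Optional


def run_holistic_procedure(
    test_case: dict,
    specify: Callable[[dict, Optional[dict]], dict],
    execute: Callable[[dict], dict],
    evaluate: Callable[[dict, dict], bool],
    max_iterations: int = 3,
) -> dict:
    """Iterate specification -> experiment -> evaluation until the test succeeds.

    All callables are hypothetical placeholders supplied by the user.
    """
    previous_results = None
    for iteration in range(1, max_iterations + 1):
        # Derive (or, after the first pass, refine) the specification.
        specification = specify(test_case, previous_results)
        # Run the experiment on the configured test platform.
        results = execute(specification)
        # Judge the results with reference to the test case.
        if evaluate(test_case, results):
            return {"successful": True, "iterations": iteration, "results": results}
        previous_results = results
    return {"successful": False, "iterations": max_iterations, "results": previous_results}


# Toy usage: the specification doubles the number of runs until the
# (invented) experiment reports a conclusive result.
outcome = run_holistic_procedure(
    test_case={"objective": "obtain a conclusive result"},
    specify=lambda tc, prev: {"runs": 5 if prev is None else prev["runs"] * 2},
    execute=lambda spec: {"runs": spec["runs"], "conclusive": spec["runs"] >= 20},
    evaluate=lambda tc, res: res["conclusive"],
)
```

In this toy run the loop needs three passes (5, 10 and 20 runs) before the evaluation accepts the result; a real campaign would replace the lambdas with the actual specification, execution and evaluation steps.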

Depending on the test purposes, the relevant test platforms, and the devices or systems under test, different procedures and methodologies are applicable. Under the conceptual frame of this holistic test procedure, the ERIGrid project defined specific approaches within co-simulation, multi-RI experiments, and hardware-in-the-loop testing. For instance, a concrete test procedure, the “testing chain”, is described in Sect. 2. To address the work with large-scale systems in a co-simulation context, an approach was formulated in [23], as reported in Sect. 4.

Finally, the holistic test description methodology, outlined in Chap. 3, offers systematic support for the formulation of concrete testing initiatives of any complexity and serves as a semantic framework for further testing harmonization and test automation.

2.4 Testing Chain

The state of the art in testing involves simulation, lab testing and field testing, in that sequence. This approach lacks smooth transitions between stages and lacks coverage of smart grid functionalities. The “testing chain” approach [4, 16], however, covers the whole range of testing possibilities sequentially, including simulation, Software-in-the-Loop (SIL) [3], Controller Hardware-in-the-Loop (CHIL), Power Hardware-in-the-Loop (PHIL) and field testing. Such a method can investigate the whole range of functions and hardware in the test system, resulting in cost-efficient validation. The testing chain is composed of a series of tests with increasing complexity and realism. This is the general approach to follow when developing a new component or algorithm that affects system behaviour. The gradually increasing realism of the testing chain allows a product to be developed in a relevant environment, saving time and money, which could make it suitable for device manufacturers and software developers. The first step of a development phase is a pure simulation experiment; then, if the results are good, the object under test is tested in a more relevant environment (from a CHIL experiment, which includes the real behaviour of the controller, to a PHIL experiment, which takes into account the real behaviour of the whole OuI). All these steps are recommended before testing the OuI in the real environment. Testing products in environments of increasing complexity helps to identify and solve critical aspects that could affect performance.

Figure 3 provides an overview of the proposed testing chain. In Stage 1, investigations are performed in a purely software-simulated environment, usually under steady-state or transient conditions. This enables a functionality test of the control algorithm but does not adequately represent the interface between power and control systems.

Stage 2 of the testing chain proposes the use of two dedicated software tools for executing the power system model and the controller separately. This SIL simulation or co-simulation technique allows the exchange of information in a closed-loop configuration. After the correct behaviour of the control algorithm has been verified in Stages 1 and 2, Stage 3 deals specifically with the performance validation of the actual hardware controller by means of a CHIL setup. CHIL testing provides significant benefits compared to simulation-only and SIL experiments. With a real-time (RT) simulator executing the power system models in real time, the actual hardware controller can be tested, including all kinds of communication interfaces and potential analogue signal measurements, by interfacing it with the RT simulator.

Stage 4, the final stage of the proposed testing chain before actual field testing and implementation, integrates real physical power hardware controlled by the hardware controller. This combination of CHIL and PHIL is called Power System-in-the-Loop (PSIL) and includes the controller as well as power apparatus such as inverters and motors. Of the techniques that can still be implemented in a laboratory, this one is closest to a field test of a component: it integrates real-time interactions between the hardware controller, the physical power component and the simulated power system test case executed on the RT simulator. Despite the high complexity of ensuring stable, safe and accurate experiments, a PSIL setup enables the investigation of the setup not as single, separate entities but as a holistic power system. This technique has been proven to validate the full functionality of a real hardware controller, as well as the interdependencies and interactions between real power components, in an entirely flexible and repeatable laboratory environment.

In terms of the holistic test procedure, the testing chain realises several iterations, utilizing a static frame for the system under test, enabling efficient re-use of test systems and configurations. At the same time, it advances the OuI in each testing step from model concept, to software, to hardware prototype. The test objectives will be adapted at each stage.
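As a rough illustration of how the four stages build on each other, the chain can be written down as an ordered list that is walked until a stage fails. The `Stage` fields paraphrase the descriptions above; the `stage_passes` callable and the pass/fail outcome in the usage line are hypothetical and stand in for the stage-specific test objectives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    number: int
    name: str
    controller: str     # how the controller is represented in this stage
    power_system: str   # how the power system / power hardware is represented


# Stages as summarised in the text; the field wording is a paraphrase.
TESTING_CHAIN = (
    Stage(1, "pure simulation", "model inside the simulation", "simulated"),
    Stage(2, "SIL / co-simulation", "software in a separate tool", "simulated"),
    Stage(3, "CHIL", "hardware controller", "simulated on a real-time simulator"),
    Stage(4, "PSIL (combined CHIL and PHIL)", "hardware controller",
          "real power hardware plus real-time simulated grid"),
)


def run_chain(stage_passes):
    """Run the stages in order and stop at the first stage whose test fails.

    `stage_passes` is a hypothetical callable mapping a Stage to True/False.
    Returns the list of stages that were passed.
    """
    passed = []
    for stage in TESTING_CHAIN:
        if not stage_passes(stage):
            break
        passed.append(stage)
    return passed


# Invented outcome: the OuI passes Stages 1 to 3 and fails in Stage 4.
completed = run_chain(lambda stage: stage.number <= 3)
```

Keeping the chain as plain data makes it easy to attach stage-specific test objectives later, in line with the remark that the objectives are adapted at each stage.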

Application of the Testing Chain concept: The testing chain concept has been adopted for a study case aiming at generating systematic improvements in the performance of a converter control function. Details of the test case descriptions are found in Sect. 4.1, and the full study case with results is reported in Sect. 4.

3 ERIGrid Holistic Test Description Methodology

This section introduces the developed formal concepts of test description, based on related work on testing and on requirements engineering in the energy systems domain. First, relevant background is introduced; then the method is presented and finally exemplified based on application experiences.

3.1 The Requirements and Semantics of Test Description

Testing and experimentation with system solutions occur in the context of a design and engineering process, as outlined in Sect. 2.1. From this point of view, engineering requirements and their formalisation in the form of use cases and system configurations are one input to a holistic test description. The test execution itself requires a device or object(s) to be tested as well as the test platform.

3.1.1 Energy System Semantics and Requirements

The existing energy system semantics (or information models) are presented on the left side of Fig. 4. The Common Information Model (CIM, IEC 61970/61968) [7, 8], the OPC UA data model [21] and the IEC 61850 data model [6] are widely used in the electrical domain. These standards address the functional, semantic, and syntactic configurations of a system, whereas the technical and dynamic configurations are provided by the specific implementation technologies. Because these modelling standards are specific to the electrical domain, describing and modelling other domains such as ICT and thermodynamics in the system specifications requires further support. Nevertheless, the energy system semantics can be used as building blocks for CPES design.

The Smart Grids Architecture Model (SGAM) proposes an interoperability architecture that mainly covers the conceptual and semantic interactions in a multi-domain smart grid. The link between SGAM and validation is presented as a methodology based on use-case reference designation and specifications [1]. The SGAM methodology uses IEC 62559 for energy system design and provides a tailored use case template for this purpose. In this concept, a use case is considered the basis for defining a system, its functionality and the interactions necessary for the experiment design. It also involves the definition of Basic Application Profiles (BAP) and Basic Application Interoperability Profiles (BAIOP) as modular elements for the specification of systems and subsystems. BAP and BAIOP represent the basic building blocks for the CPES and can provide possible skeletons for setting up interoperability validation experiments [19].

It is noteworthy that the use-case specifications provided in BAP and BAIOP involve specifically the system/sub-system architecture, but that they lack guidelines for the test specifications, implementation and technologies.

3.1.2 Testing Semantics

The link from the above information models and requirements to the validation setup is obscured; hence the specification gap introduced in Sect. 2.1.

In the communication domain, ETSI provides a set of testing semantics including the ETSI test description suite, which consists of the Test Description Language (TDL) [14], the Test Purpose Language (TPLan) [13], and the Testing and Test Control Notation Version 3 (TTCN-3). While TPLan addresses the objective and scope of the test regardless of the testing environment, TDL fills the methodology gap between TPLan and the complex executable semantics. TDL and TPLan are then mapped to TTCN-3, which specifies syntax, glossaries and templates to characterize a test configuration and procedure. However, a corresponding test system is still needed for the execution, i.e., the TTCN-3 semantics need to be mapped down to an execution platform, and they can be integrated with the type systems of other languages (ASN.1, XML, C/C++). Besides, as a test specification semantic, TTCN-3 requires domain-specific syntax and vocabularies to enable comprehensive communication among its elements. The concept of an abstract test suite in the TTCN-3 standard [22] represents test descriptions in information technology. By defining formal (standardized) testing semantics and syntax, TTCN-3 has enabled test automation [20] and a software suite for conformance testing [24], and it promotes reusability and the possibility of integrating new elements into the framework [5]. TPLan, TDL and TTCN-3 are thus utilized in the information domain. However, to apply them to CPES assessment and validation, a means of establishing a concrete link to energy system specifications is missing, as the ETSI suite is not meant to interface with physical structures and functions. This gap may be filled by integrating a complementary energy system semantic for testing.

The holistic test description addresses both energy system semantics and testing semantics, offering specification levels that relate to energy system use cases and structural descriptions, while also offering description levels associated with a particular test platform. This multi-level specification is conceptually similar to the levels defined in the ETSI suite of TPLan, TDL, and TTCN-3.

3.2 The ERIGrid Test Description for System Validation

The holistic test description is a set of documents and graphical representations intended to support its users in the definition of complex tests. It follows the system validation procedure outlined in Sect. 2.3. In doing so, it can lead to better planning of experiments and help map those experiments to different laboratories by clarifying the test objectives, setups and parameters of interest. The whole process and some of its key concepts are illustrated in Fig. 5.

The three key levels of test description are:

A Test Case provides a set of conditions under which a test can determine whether or how well a system, component or one of its aspects is working given its expected function.

A Test Specification defines the test system (i.e. how the object under investigation is to be embedded in a specific system under test), which parameters of the system will be varied and observed for the evaluation of the test objective, and in what manner the test is to be carried out (test design).

An Experiment Specification defines by what exact means a given test specification is to be realized in a given laboratory infrastructure.
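The relation between the three levels can be pictured as a simple nested data structure: one test case links to one or more test specifications, each of which links to one or more experiment specifications. The sketch below is an assumption-laden illustration, not the ERIGrid templates; the class names `TC`, `TS`, `ES`, their fields and the placeholder laboratories "Lab A" and "Lab B" are invented for this example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ES:
    """Experiment Specification: realisation of one TS in one laboratory."""
    laboratory: str
    realisation: str = ""


@dataclass
class TS:
    """Test Specification: test system, varied/observed parameters, test design."""
    test_system: str
    varied_parameters: List[str] = field(default_factory=list)
    observed_parameters: List[str] = field(default_factory=list)
    test_design: str = ""
    experiments: List[ES] = field(default_factory=list)


@dataclass
class TC:
    """Test Case: conditions under which the test objective is assessed."""
    name: str
    objective: str
    specifications: List[TS] = field(default_factory=list)

    def laboratories(self) -> List[str]:
        """Return the sorted names of all laboratories involved in this test case."""
        return sorted({es.laboratory for ts in self.specifications for es in ts.experiments})


# Placeholder content, for illustration only.
tc = TC(
    name="example test case",
    objective="characterize controller response",
    specifications=[
        TS(test_system="OuI embedded in a simulated grid",
           varied_parameters=["load step"],
           observed_parameters=["voltage"],
           experiments=[ES("Lab A"), ES("Lab B")]),
        TS(test_system="OuI with hardware controller",
           experiments=[ES("Lab B")]),
    ],
)
```

Here `tc.laboratories()` collects the laboratories across all linked specifications, which mirrors how a single test case can fan out into several specifications and experiments.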

From the practical perspective, the Holistic Test Description (HTD) is a set of templates for each level, and an associated graphical notation for system configurations. It constitutes a flexible framework that can be adapted according to the users’ needs or the test cases to be applied. The steps of the holistic test procedure (Fig. 2) from the abstract conception of the experiment to the physical implementation in a laboratory are:

1. Test Case (TC)
2. Qualification Strategy (QS)
3. Test Specification (TS)
4. Experiment Realisation Plan
5. Experiment Specification (ES)
6. Results Annotation
7. Experiment Evaluation

The TC template collects the motivation for the test. It frames the purpose of the test, the domains and sub-domains with their connectivity, the test setup, the relevant functions and the metrics used to identify whether the test has been successful. The TC is an essential part of the testing effort as it represents the first clarification of the test objectives. For complex experiments, a single TC can have several linked TSs and ESs downstream. To support the early drafting process for test case development, it is suggested to fill in the TC template in a canvas format (see Fig. 6), which represents all components of the test case template in visual relation. The TC formulation typically goes through several refinements between the initial conception and the final documentation of a testing campaign. Especially for complex test cases, it is common to break down a test objective into several Purposes of Investigation (PoIs), with various Test Criteria for each PoI. The TC thus outlines how the OuI is going to be characterized or validated by means of a set of tests.

The next steps, the QS, the TS and the Experiment Realisation Plan (ERP), all support the concretization and breaking down of a test case toward executable experiments. The QS focuses on describing this break-down in a free-form textual description, but it can also be represented as a graph expressing the hierarchical relation between the TC and multiple different TSs and ESs. The TS addresses a specific PoI in detail and defines a concrete test system configuration, the test design, the parameterization, metrics and test sequences. The ERP aims to identify at which particular RIs the respective TSs could be implemented in terms of hardware, software and models. Up to this point, the methodology assumes that the description of the TC is independent of the RI. In practice, this assumption is not always valid, so that information from the laboratory can influence, e.g., the acceptable complexity of a TS. The ES defines the mapping of the TS to the components, structure and procedures of a laboratory. As it requires knowledge of many details about the components, measuring devices, expected uncertainties, etc., it should be prepared in collaboration between a technical manager of the RI and the user.

From experience, the information about laboratories that is available to external users is typically insufficient to plan an experiment without the involvement of local experts. Here the HTD approach can be particularly helpful in facilitating the communication between external users and laboratory staff. As a guideline, the external user should be the ‘owner’ of the steps from TC to TS. The local staff, however, should ‘own’ the ES, to ensure the feasibility and integrity of the experiment design with respect to laboratory capabilities.

The last two steps of the procedure, Results Annotation and Experiment Evaluation, are not subject to the HTD framework. The process of registering the results of the tests depends on the test itself, and the only advice given to the users is to keep them traceable across the different test platforms, time resolutions and data formats. A method for exchangeable file formats and annotation of experiment result data is found in [10]. The results obtained in the testing process provide feedback for the clarification of the TS. The final evaluation of the conducted experiments serves as input for the refinement of the holistic TC.

3.3 Holistic Test Description: Key Concepts

A comprehensive framework for test description requires the introduction of a few concepts and their definition to contrast with the blurry lines of their everyday use.

The test objective is the purpose for carrying out the test. Test objectives can be divided into three categories:

- Characterization test: a measure is given without specific requirements for passing the test. Examples: characterizing the performance of a system; developing a simulation model.
- Validation test: functional requirements and abstract measures are provided but are subject to interpretation; the test criteria are qualitative. Example: is a controller ready for deployment?
- Verification test: requirements are formulated as quantitative measures, and thresholds of acceptable values are quantified. Example: testing whether a component conforms to a standard.
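The distinction between the three categories comes down to two questions: are there requirements at all, and are they expressed as quantified thresholds? A minimal sketch of that decision is given below; the enum `ObjectiveCategory`, the function `categorize` and its two boolean arguments are names assumed for this example and are not part of the HTD templates.

```python
from enum import Enum


class ObjectiveCategory(Enum):
    CHARACTERIZATION = "characterization"
    VALIDATION = "validation"
    VERIFICATION = "verification"


def categorize(has_requirements: bool, has_quantitative_thresholds: bool) -> ObjectiveCategory:
    """Map the form of the test criteria to one of the three categories.

    Assumption: quantified thresholds always imply that requirements exist,
    so the threshold flag is checked first.
    """
    if has_quantitative_thresholds:
        return ObjectiveCategory.VERIFICATION
    if has_requirements:
        return ObjectiveCategory.VALIDATION
    return ObjectiveCategory.CHARACTERIZATION


# Examples taken from the list above.
model_development = categorize(has_requirements=False, has_quantitative_thresholds=False)
deployment_readiness = categorize(has_requirements=True, has_quantitative_thresholds=False)
standard_conformance = categorize(has_requirements=True, has_quantitative_thresholds=True)
```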

3.3.1 System Configurations in Test Descriptions

System configurations, use cases and test cases form a logical chain that can be applied throughout a development project. The main concepts are as follows:

System(s) Configuration is an assembly of (sub-)systems, components, connections, domains, and attributes relevant to a particular test case.

A Component is a constituent part of a system which cannot be divided into smaller parts without losing its particular function for the purpose of investigation. Remark: In a system configuration, components cannot be divided further; connections are established between components.

A System is defined by a system-boundary, and can be composed of sub-systems, or components that cannot be further decomposed in the relevant context. It is described as a set of interrelated elements considered in a defined context as a whole and separated from their environment. Remark: In a system configuration, a system, which may be divided into sub-systems, represents a grouping of components; functionality can be attributed to systems and components and vice versa.

Connections define how and where components are connected; each connection is associated with a domain.

A Domain is an area of knowledge or activity in the context of smart grids, characterized by a set of concepts and terminology understood by practitioners in that area; typical examples are infrastructure-specific operation areas such as electricity, heat, primary energy resources or ICT. Multi-domain components thus act as interfaces (conversions) between domains. Finally, a domain can be divided into sub-domains.

Attributes define the characteristics of components and systems, such as parameters and states.

Constraints describe limitations of component attributes, systems, domains or functionality.
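These concepts map naturally onto a small object model. The sketch below assumes a simplified reading of the definitions: a component lists the domains it belongs to, a connection joins two components within exactly one domain, and a component that belongs to more than one domain is treated as an interface between domains. The consistency rule in `is_consistent`, the class and function names, and the example components are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Component:
    name: str
    domains: FrozenSet[str]   # more than one domain -> interface between domains


@dataclass(frozen=True)
class Connection:
    a: Component
    b: Component
    domain: str               # each connection is associated with one domain

    def is_consistent(self) -> bool:
        """Assumed rule: both endpoints must belong to the connection's domain."""
        return self.domain in self.a.domains and self.domain in self.b.domains


def interface_components(components: List[Component]) -> List[str]:
    """Names of the multi-domain components, i.e. the domain interfaces."""
    return [c.name for c in components if len(c.domains) > 1]


# Hypothetical example configuration.
inverter = Component("PV inverter", frozenset({"electricity", "ICT"}))
controller = Component("voltage controller", frozenset({"ICT"}))
grid = Component("distribution grid", frozenset({"electricity"}))

power_link = Connection(inverter, grid, "electricity")
control_link = Connection(controller, inverter, "ICT")
invalid_link = Connection(controller, grid, "ICT")  # the grid has no ICT domain
```

Attributes and constraints could be added as further fields on `Component`; they are left out here to keep the sketch short.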

For each layer of test description, a different interpretation of the system configuration (SC) is relevant, as illustrated in Table 1 and Fig. 7. Table 1 provides an overview of the differences between the SCs.

As an example of the three levels, Fig. 7 shows system configurations from a test involving coordinated voltage control of remotely controllable Photovoltaic (PV) inverters.

In the GSC, only coupling domains and high-level subsystems are specified, while the number of units involved is not specified. The Test System (SSC) identifies the OuI as a single inverter but requires both the coordinated voltage controller and several other inverters to be connected to a distribution system. Finally, in the experiment setup (ESC), the elements required to emulate signals for the OuI are specified. These, together with a specification sheet (not shown), serve as complete documentation of the experimental setup. Only one PV inverter appears in a PHIL setup; the voltage controller is implemented in software, and the other inverters as well as the distribution grid are simulated on a digital real-time simulator.

3.4 Remarks on Quantitative Assessment

As most testing is quantitative, a framework for the quantitative selection of test parameters and for result evaluation is also needed.

The statistical concept of Design of Experiments (DoE) has been developed to address result significance and reproducibility in experimentation. In essence, DoE provides a statistical framework to explore the influence of different factors on a system’s response. The concepts of DoE have so far mostly been adopted in single research fields and have had a difficult standing in strongly interdisciplinary research fields like cyber-physical energy systems. An exception is given in [19], where DoE has been applied to interoperability testing in CPES in relation to recent standards developments. Further application of DoE in the field is thus promising. The DoE methodology can be seen as an intrinsic part of an HTD, and the HTD is meant to facilitate DoE application in complex and interdisciplinary settings. It provides testing with the statistical groundwork for efficient experimentation, result reproducibility and significance of the outcome against noise in the tested system. The detailed mapping between DoE and holistic testing has been discussed in [18].
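To make the idea of exploring factors concrete, the simplest DoE scheme, a full factorial design, enumerates every combination of factor levels. The sketch below shows only this one design type; the factor names and levels are invented for illustration and do not come from the ERIGrid test cases.

```python
from itertools import product

# Hypothetical factors and levels, for illustration only.
factors = {
    "pv_output_step_kw": [2.0, 5.0],
    "communication_delay_ms": [10, 100, 500],
    "load_level": ["low", "high"],
}


def full_factorial(factors):
    """Return one dict per experiment run, covering all level combinations."""
    names = list(factors)
    return [
        dict(zip(names, levels))
        for levels in product(*(factors[name] for name in names))
    ]


runs = full_factorial(factors)
# 2 * 3 * 2 = 12 runs in total
```

The number of runs grows multiplicatively with the number of factors and levels, which is one reason why reduced designs are often preferred for large multi-domain test systems.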

4 Application Examples

In this section, two test cases are presented with the aim of exemplifying the use of the HTD methodology. The full descriptions of these test cases, with their implementations and results, are presented in Chap. 6.

4.1 Example 1: Testing Chain

The HTD has been applied to a test case aiming at demonstrating the potential of a multi-site testing chain with varied testbeds, as noted above in Sect. 2.4. The test case involves three laboratory infrastructures in three countries with three different test implementations. The three-step process of the test chain implementation is illustrated in Fig. 8.

As the test chain involves the implementation of a similar test case in three laboratories, and because models and results need to be exchanged among the involved laboratories, a harmonized specification of the test case with a unified template was crucial. The utilization of the HTD methodology in this test case involved three stages: the joint specification of the test case and the qualification strategies, the specification of the tests by partners with common purposes of investigation, and finally the specification of the experiments by the individual laboratory infrastructures. A short version of the test case description is presented below:

- Name of the Test Case: Testing of converter controller through multi-site testing chain with varied testbeds

- Narrative: This test case aims at demonstrating the potential of a multi-site testing chain with varied testbeds for generating systematic improvements on the performance of a converter control function.

- Function Under Investigation: Converter RMS controller (receiving P/Q setpoints and setting d/q axis current setpoints)

- Object Under Investigation: Converter RMS controller subsystem

- Domain Under Investigation: Electric power domain, Control domain

- Purpose of Investigation:

  - PoI 1: Characterization of converter controller influence on the system performance.

  - PoI 2: Validation of model exchange among RIs.

  - PoI 3: Validation of improved control system performance.

- System Under Test: illustrated in Fig. 9.

- Function Under Test: Converter Q/V and P/f controller algorithm, inner current controller, a low voltage distribution grid connecting five loads, four PV and a battery.

- Test Criteria: Settling time, overshoot, damping factor and peak time for a step response after step changes of PV output and the load connected with the PV.
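
The test criteria listed above can be extracted from a sampled step response. The sketch below is a hedged illustration, not the evaluation code used in the test chain: it generates the response of an ideal underdamped second-order system as a stand-in for a measured signal, and the 2% settling band, the damping ratio and the natural frequency are assumed values.

```python
import math


def second_order_step(zeta, omega_n, t):
    """Unit step response of an underdamped second-order system (0 < zeta < 1)."""
    omega_d = omega_n * math.sqrt(1.0 - zeta ** 2)
    phi = math.acos(zeta)
    envelope = math.exp(-zeta * omega_n * t) / math.sqrt(1.0 - zeta ** 2)
    return 1.0 - envelope * math.sin(omega_d * t + phi)


def step_metrics(times, values, final_value, band=0.02):
    """Return peak time, relative overshoot, settling time and a damping estimate."""
    peak_index = max(range(len(values)), key=values.__getitem__)
    peak_time = times[peak_index]
    overshoot = (values[peak_index] - final_value) / final_value

    # Settling time is approximated by the last sample outside the tolerance band.
    settling_time = times[0]
    for t, y in zip(times, values):
        if abs(y - final_value) > band * abs(final_value):
            settling_time = t

    # Damping ratio from overshoot; valid only for a second-order approximation.
    damping = None
    if overshoot > 0.0:
        log_os = math.log(overshoot)
        damping = -log_os / math.sqrt(math.pi ** 2 + log_os ** 2)

    return {
        "peak_time": peak_time,
        "overshoot": overshoot,
        "settling_time": settling_time,
        "damping": damping,
    }


# Assumed example system: damping ratio 0.5, natural frequency 10 rad/s.
dt = 0.001
times = [k * dt for k in range(2001)]
values = [second_order_step(0.5, 10.0, t) for t in times]
metrics = step_metrics(times, values, final_value=1.0)
for key, value in metrics.items():
    print(f"{key}: {value:.4f}")
```

With laboratory data, `times` and `values` would be replaced by the recorded signal, and the final value would typically be estimated from the tail of the recording.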

The testing campaign was also carried out and is reported in Chap. 6.

4.2 Example 2: Coordinated Voltage Control

A second example is the Coordinated Voltage Control (CVC) case, which involves flexibility from DER, communication infrastructure and centralized optimized control. The related use case was introduced in Sect. 2.3. To specify this test case, the three-level specification templates of the HTD are applied, detailing the test from generic to specific to laboratory-level plans. The three levels correspond to the test case, the test specification and the experiment specification, respectively. The main questions addressed in the test case template are why the test is needed and what the objectives are. Some of the main specifications of the CVC test are presented below.

- Narrative: For a Distribution Management System (DMS) voltage controller in the development stage (simple implementation), the performance of the DMS algorithm and controller should be evaluated under realistic conditions. This test could be seen as the last step before installing the DMS in the field.

- System under test: DMS, DER, OLTC, transformer, distribution lines and telecom network, as shown in Fig. 1.

- Object under investigation: DMS controller.

- Domain under investigation: Electric power, ICT.

- Function under test: DER P,Q control, measurements, OLTC tap control, communication via ICT.

- Function under investigation: optimization in the controller, state estimation.

- Test objectives/PoI: Characterization and validation of the DMS controller.

  - Convergence of the optimization (validation).

  - Performance of the optimization under realistic conditions (characterization).

  - Accuracy of the state estimation (characterization).

- Target measures:

  - 1. convergence (when/how often?), 2. how fast?, 3. solution quality.

  - Voltage deviation of all the nodes from 1 pu, number of tap changes, network losses.

  - Voltage, P, Q estimation errors.

- Variability attributes: Load patterns (realistic, annual variation); Communication attributes (packet loss, delays).

- Quality attributes (thresholds): 1.2: convergence within 2 s (validation), 3.* estimation quality characterized with 95% confidence.
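
As an illustration of how the quality attributes above could be evaluated after a set of runs, the following sketch checks the 2 s convergence threshold and computes a 95% confidence interval for a mean estimation error. The sample values are invented, and both the reading of "95% confidence" as a confidence interval on the mean and the normal approximation are assumptions of this example. For small samples a Student t interval would be wider.

```python
from statistics import NormalDist, mean, stdev


def convergence_ok(convergence_times_s, limit_s=2.0):
    """Validation check: every run must converge within the time limit."""
    return all(t <= limit_s for t in convergence_times_s)


def confidence_interval(samples, confidence=0.95):
    """Normal-approximation confidence interval for the mean of the samples."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    centre = mean(samples)
    half_width = z * stdev(samples) / len(samples) ** 0.5
    return centre - half_width, centre + half_width


# Invented example values, not measurement results.
convergence_times_s = [0.8, 1.1, 1.6, 0.9, 1.3]
voltage_errors_pu = [0.004, 0.006, 0.005, 0.007, 0.003, 0.005]

low, high = confidence_interval(voltage_errors_pu)
print("convergence within 2 s:", convergence_ok(convergence_times_s))
print(f"mean voltage estimation error, 95% CI: [{low:.4f}, {high:.4f}] pu")
```

Separating the pass/fail check (validation) from the interval estimate (characterization) mirrors the distinction made in the test objectives.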

After the CVC test case has been described with its purposes of investigation, a logical breakdown of the test case into sub-tests follows. In this process, a strategy is developed that identifies testbeds suited to meet the requirements of the test case. Finally, in the experiment specification, details of the components to be used in the test, such as type and ratings of OLTC and DER, are specified. Furthermore, the connectivity of the components and the actual values of the variability attributes, such as load patterns, are specified. The full specification of the CVC test can be found in [15]. A similar test case is described in detail in Chap. 6.
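
The refinement from test case to test specification to experiment specification can be pictured as three linked records. The sketch below is a hypothetical data model, not the official HTD template: the class names, field names, testbed label and attribute values are assumptions chosen for illustration. It shows one consistency check that such a structure enables, namely that an experiment specification fixes a value for every variability attribute declared in the test case.

```python
from dataclasses import dataclass, field


@dataclass
class HtdTestCase:
    """Generic level: why the test is needed and what is varied."""
    name: str
    purposes_of_investigation: list
    variability_attributes: list


@dataclass
class HtdTestSpecification:
    """Specific level: one sub-test addressing a purpose of investigation."""
    test_case: HtdTestCase
    addressed_poi: str


@dataclass
class HtdExperimentSpecification:
    """Laboratory level: concrete testbed, components and attribute values."""
    test_specification: HtdTestSpecification
    testbed: str
    components: dict = field(default_factory=dict)
    attribute_values: dict = field(default_factory=dict)

    def missing_attributes(self):
        """List declared variability attributes that have no value yet."""
        declared = self.test_specification.test_case.variability_attributes
        return [a for a in declared if a not in self.attribute_values]


cvc_case = HtdTestCase(
    name="Coordinated Voltage Control",
    purposes_of_investigation=[
        "Convergence of the optimization (validation)",
        "Performance of the optimization (characterization)",
        "Accuracy of the state estimation (characterization)",
    ],
    variability_attributes=["load_pattern", "packet_loss", "delay"],
)
convergence_test = HtdTestSpecification(
    cvc_case, cvc_case.purposes_of_investigation[0]
)
experiment = HtdExperimentSpecification(
    test_specification=convergence_test,
    testbed="hypothetical co-simulation setup",
    components={"OLTC": "type and rating go here", "DER": "type and rating go here"},
    attribute_values={"load_pattern": "annual profile", "packet_loss": 0.01},
)
print(experiment.missing_attributes())
```

Keeping a reference from each level to the level above preserves the trace from a laboratory experiment back to the purpose of investigation it serves.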

5 Conclusion

As advanced testing platforms are becoming part of a multi-disciplinary development process, testing campaigns also need to integrate information from multiple viewpoints. To support planning and documentation, this chapter presented a model and method for detailed test planning that is suitable even for complex test campaigns. This method, called ‘holistic test description’, relates system requirements to test design and testing platforms; it complements the analytical design of experiments with a test engineering process. For further details and instructions on the described method, please refer to [12]. Templates, guidelines and further examples can also be found on the corresponding GitHub site.

With the testing chain, a prototypical process for integrated multi-stage system development validation was introduced. An abstract testing process was outlined, along with tools for handling the information that links system requirements to test platform configuration. This chapter has illustrated how the management of testing campaigns can be supported by a structured approach to information management and the systematic use of advanced test platforms.

In the following chapters, further advanced testing approaches are introduced. Then, in Chap. 6, two example testing campaigns are reported, and an overall evaluation of the test description method introduced here is summarised in Chap. 7.

References

1. Methodologies to facilitate Smart Grid system interoperability through standardization, system design and testing. Technical report, Smart Grid Mandate CEN-CENELEC-ETSI Smart Grid Coordination Group (2014)

2. Holistic test description templates, ERIGrid (2019). https://github.com/ERIGrid/Holistic-Test-Description

3. Babazadeh, D., Chenine, M., Zhu, K., Nordström, L., Al-Hammouri, A.: A platform for wide area monitoring and control system ICT analysis and development. In: 2013 IEEE Grenoble Conference, pp. 1–7 (2013)

4. Brandl, R., Kotsampopoulos, P., Lauss, G., Maniatopoulos, M., et al.: Advanced testing chain supporting the validation of smart grid systems and technologies. In: 2018 IEEE Workshop on Complexity in Engineering (COMPENG), pp. 1–6. IEEE (2018)

5. Broy, M., Jonsson, B., Katoen, J.P., Leucker, M., Pretschner, A.: Model-Based Testing of Reactive Systems: Advanced Lectures (Lecture Notes in Computer Science). Springer, Berlin (2005)

6. International Electrotechnical Commission: IEC 61850 - Power Utility Automation. Technical report, TC 57 - Power system management and associated information exchange (2003)

7. International Electrotechnical Commission: Application integration at electric utilities - System interfaces for distribution management - Part 11: Common information model (CIM) extensions for distribution. Technical report, TC 57 - Power system management and associated information exchange (2013)

8. International Electrotechnical Commission: Energy management system application program interface (EMS-API) - Part 301: Common information model (CIM) base. Technical report, TC 57 - Power system management and associated information exchange (2014)

9. Forsberg, K., Mooz, H.: System engineering for faster, cheaper, better. In: INCOSE International Symposium, vol. 9, pp. 924–932. Wiley Online Library (1999)

10. Gehrke, O., Jensen, T.: Definition of a common data format. Deliverable, SmILES Consortium (2018)

11. Heussen, K., Morales Bondy, D.E., Nguyen, V.H., Blank, M., et al.: D-NA5.1 Smart Grid configuration validation scenario description method. Deliverable D5.1, ERIGrid Consortium (2017)

12. Heussen, K., Steinbrink, C., Abdulhadi, I.F., Nguyen, V.H., et al.: ERIGrid holistic test description for validating cyber-physical energy systems. Energies 12(14) (2019)

13. European Telecommunications Standards Institute: Methods for testing and specification (MTS); TPLan: A notation for expressing test purposes. Technical report, ETSI ES 202 553 V1.2.1 (2009)

14. European Telecommunications Standards Institute: ETSI test description language. Technical report (2018). https://tdl.etsi.org

15. Kotsampopoulos, P., Maniatopoulos, M., Tekelis, G., Kouveliotis-Lysikatos, I., et al.: D-NA4.2a Training/education material and organization of webinars. Deliverable D4.3, ERIGrid Consortium (2018)

16. Maniatopoulos, M., Lagos, D., Kotsampopoulos, P., Hatziargyriou, N.: Combined control and power hardware in-the-loop simulation for testing smart grid control algorithms. IET Gener. Trans. Distrib. 11(12), 3009–3018 (2017)

17. van der Meer, A.A., Palensky, P., Heussen, K., Bondy, D.E.M., et al.: Cyber-physical energy systems modeling, test specification, and co-simulation based testing. In: 2017 Workshop on Modeling and Simulation of Cyber-Physical Energy Systems (MSCPES), pp. 1–9 (2017)

18. van der Meer, A.A., Steinbrink, C., Heussen, K., Morales Bondy, D.E., et al.: Design of experiments aided holistic testing of cyber-physical energy systems. In: 2018 Workshop on Modeling and Simulation of Cyber-Physical Energy Systems (MSCPES), pp. 1–7. IEEE (2018)

19. Papaioannou, I., Kotsakis, E., Masera, M.: Smart grid interoperability testing methodology: a unified approach towards a European framework for developing interoperability testing specifications. In: EAI International Conference on Smart Cities Interoperability and Standardization (2017)

20. Schieferdecker, I.: Test automation with TTCN-3 - state of the art and a future perspective. In: IFIP International Conference on Testing Software and Systems, pp. 1–14. Springer, Berlin (2010)

21. TC 65/SC, E.: IEC 62541-1: OPC unified architecture - Part 1: Overview and concepts. Technical report, International Electrotechnical Commission (IEC) (2010)

22. ETSI Centre for Testing and Interoperability: TTCN-3 tutorial. Technical report (2013). http://www.ttcn-3.org/files/ETSI_TTCN3_Tutorial.pdf. Accessed 17.04.2020

23. Widl, E., Spiegel, M., Findrik, M., Bajraktari, A., et al.: D-JRA2.2 Smart Grid ICT assessment method. Deliverable D8.2, ERIGrid Consortium (2018)

24. Zeiss, B., Kovacs, A., Pakulin, N., Stanca-Kaposta, B.: A conformance test suite for TTCN-3 tools. Int. J. Softw. Tools Technol. Trans. 16(3), 285–294 (2014)

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2020 The Author(s)

About this chapter

Cite this chapter

Heussen, K. et al. (2020). Test Procedure and Description for System Testing. In: Strasser, T., de Jong, E., Sosnina, M. (eds) European Guide to Power System Testing. Springer, Cham. https://doi.org/10.1007/978-3-030-42274-5_2

DOI: https://doi.org/10.1007/978-3-030-42274-5_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-42273-8

Online ISBN: 978-3-030-42274-5

eBook Packages: Energy, Energy (R0)