Abstract

We study time-stepping methods based on Hermite-Birkhoff interpolation. For linear problems, the methods are designed so that their stability characteristics are identical to those of standard implicit Runge–Kutta methods based on Gauss-Legendre quadrature (Hairer and Wanner, Solving ordinary differential equations II, stiff and differential-algebraic problems. Springer, New York, 1996). However, in contrast, only a single nonlinear system whose dimension matches that of the original problem must be solved independent of the method order; that is, they are singly implicit. Besides outlining the construction of the methods and establishing some of their basic properties, we carry out illustrative computations both on standard test problems and a spectral discretization of an initial-boundary value problem for the Schrödinger equation.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

1 Introduction

Over the past decade a number of works have appeared which exploit the unique properties of Hermite-Birkhoff interpolation in space to construct arbitrary-order discretization methods for hyperbolic [1, 2, 4,5,6,7, 10, 14, 16,17,18,19] as well as Schrödinger [3] equations. The precise form of the interpolant in a single cell, which here we write in one dimension labelled t, is

where Π 2m+1 denotes the polynomials of degree 2m + 1. (In higher dimensions one uses a tensor-product cell interpolant based on vertex data consisting of mixed derivatives of order through m in each Cartesian coordinate.)

In contrast, there has been little work on analogous methods for time discretization. A recent exception is the manuscript by Liu et al. [15]. They develop methods for second-order semilinear hyperbolic equations using interpolants of the form (1)–(2) combined with a reformulation of the evolution problem using exact solutions of the linear part. They demonstrate excellent long-time performance.

The outline of the paper is as follows. In Sect. 2 we list a few properties of piecewise Hermite-Birkhoff interpolation. In Sect. 3 we construct the time-stepping schemes and establish some basic results, with a few numerical experiments described in Sect. 4.

2 Basic Properties of Hermite-Birkhoff Interpolation

Hermite interpolants have a number of interesting properties which make them very attractive for the solution of differential equations; see, e.g., [2]. Here we will mainly use the simplest. Precisely, for t ∈ (t j−1, t j), the Peano representation of the local error can be easily derived by noting that \(e=u- \ mathcal {I} u\) solves the two point boundary value problem

Thus

where the kernel K j is the Green’s function for (3). Simple scaling arguments combined with the transformation t = t j−1 + zh j then show that \(e=O(h_j^{2m+2})\) where h j = t j − t j−1 is the time step. A fundamental feature of piecewise Hermite interpolation is the following orthogonality property. For any functions v(t), w(t)

which in particular implies that interpolation reduces the H m+1 seminorm.

3 Time-Stepping Methods

We begin by considering the initial value problem for a first-order system ordinary differential equations:

Given a discrete time sequence t j > t j−1, j = 1, …, N, with time steps h j = t j − t j−1 we write down the Picard integral formulation of the time evolution over a single step

The construction of our time integration formula proceeds in three steps. We denote by v j the approximation to u(t j).

-

1.

Given v j−1 and assuming for the moment that v j is known, use the differential equation to compute m scaled derivatives of its solution, V ℓ(t), satisfying V ℓ(t ℓ) = v ℓ, ℓ = j, j − 1. Setting

$$\displaystyle \begin{aligned} F_{\ell}(t)=f(V_{\ell}(t),t) , \end{aligned} $$(8)these are recursively defined by the formula

$$\displaystyle \begin{aligned} \frac {d^k V_{\ell}}{dt^k} (t_{\ell}) = \frac {d^{k-1} F_{\ell}}{dt^{k-1}} (t_{\ell}) , \ \ \ \ k=0, \ldots m. {} \end{aligned} $$(9) -

2.

Construct the Hermite-Birkhoff interpolant of this data; that is the polynomial P j−1∕2(t;v j−1, v j) of degree 2m + 1, satisfying

$$\displaystyle \begin{aligned} \frac {d^k P_{j-1/2}}{dt^k} (t_{\ell}; v_{j-1},v_j) = \frac {d^k V_{\ell}}{dt^k} (t_{\ell}); \ \ \ \ell=j-1,j, \ \ \ k=0, \ldots , m. {} . \end{aligned} $$(10) -

3.

Approximate (7) by replacing u(t ℓ) by v ℓ and replacing the integral by a q + 1-point quadrature rule with f evaluated at the Hermite interpolant:

$$\displaystyle \begin{aligned} v_j = v_{j-1} + h_j \sum_{k=0}^q w_k f(P_{j-1/2}(t_{j,k}; v_{j-1}, v_j)) . {} \end{aligned} $$(11) -

4.

Solve (11) for v j. Note that this is a system of d nonlinear equations for any m; that is, unlike standard implicit Runge–Kutta methods, the size of the nonlinear system is independent of the order.

We remark that we have not studied in detail the unique solvability of (11) in the stiff case. In our numerical experiments we used the solution at the current time step as an initial approximation for Newton iterations and simply accepted the solution to which the iterates converged.

To emphasize the ideas we write down some specific examples of methods with m = 1 and m = 2 making the simplifying assumption of autonomy; that is f = f(u). The derivation of methods of arbitrary order is straightforward and the formulas can be trivially obtained using software capable of symbolic computations. To apply them at higher order one must evaluate higher derivatives of f, which is also possible using automatic differentiation tools [11].

Example (m = 1)

Set

Now the interpolant P j−1∕2(t;v j−1, v j) is given by:

where

We next introduce a quadrature rule which is exact for polynomials of degree 3. Possible choices include the 2-point Gauss-Legendre (14) rules, or the 3-point Gauss-Radau (15) or Gauss-Lobatto rules. Note that by using two different rules we obtain a possible error indicator. Here are the two different methods used below. Note that the methods are identical if f is linear.

A time step is executed by solving the nonlinear system, (14) or (15), for v j.

Example (m = 2)

Now we also need the second time derivative of u,

where J(u) is the Jacobian derivative. The Hermite interpolant can now be written:

where

Again we can now use, for example, the 3-point Gauss-Legendre or 4-point Gauss-Radau quadrature rules to produce the equation we must solve for v j.

3.1 Stability and Consistency

The consistency of the method is a straightforward consequence of its construction, and its linear stability properties can also be established.

Theorem 1

Assume that the quadrature rule has positive weights and is exact for polynomials of degree 2m + 1. Then the implicit Hermite method is A-stable and accurate of order 2m + 2.

Proof

Assume that f is smooth and that u(t) ∈ C 2m+2(0, T). Using (4), standard estimates for quadrature errors, and the Picard formula (7) we find for the truncation error

Now consider the Dahlquist test problem, f(u) = λu. In this case all quadrature rules which are exact for the Hermite interpolant produce the same method. As interpolation is linear, we have that the coefficients of the interpolant are linear combinations \(h_j^k \lambda ^k v_{j-1}\) and \(h_j^k \lambda ^k v_j\), k = 0, …m. The Picard integral then increases the powers of h j λ by one so that the implicit system (11) can be rearranged to:

where Q ±(h j λ) are polynomials of degree m + 1. Consistency implies

The only rational function of the given degree with this accuracy is the diagonal Padé approximant. We thus conclude that our methods are A-stable [12].◇

4 Numerical Experiments

Our first experiments treat standard problems from the ode literature and are restricted to the fourth and sixth order methods described above with either Gauss-Legendre or Gauss-Radau quadrature. Our practical implementations employ the classical Aitken algorithm adapted to Hermite interpolation to directly evaluate P j−1∕2(t j,k, v j−1, v j) and solve (11) using Newton’s method with the Jacobian of the implicit system approximated by finite differences. For adaptive computations we

-

1.

Compute v j using the Gauss-Radau-based formulas,

-

2.

Compute a residual, ρ j, by substituting v j into the Gauss-Legendre-based formulas.

We then adjust the time step by the simple rule

while also imposing a minimum time step.

Our final experiment examines the use of the method for evolving spectral discretizations of initial-boundary value problems for the Schrödinger equation.

4.1 Arentsorf Orbit

We first consider the problem of computing a periodic solutions of the restricted three-body problem which we reformulate as a first-order system of four variables:

(For graphs of the solution see [13, Ch. II].)

We note that this problem is not considered to be stiff. The main difficulty is a need for very small time steps when the orbits approach the singularities of f. However, we use it to verify convergence at the design order when (woefully inefficient) uniform time steps are employed and to test the utility of our naive time step adaptivity algorithm.

Results for fixed (small) time steps are displayed in Table 1. We observe that convergence is at design order and that the results for the two quadrature formulas are comparable, though the fourth order Radau method is somewhat more accurate than Gauss-Legendre with roles reversed at sixth order. The sixth order methods are more accurate with larger time steps. The error is simply \(\sqrt {(y_1(T)-y_1(0))^2+(y_2(T)-y_2(0))^2}\).

Results for adaptive computations with m = 2 are shown in Table 2. Obviously, the adaptive methods lead to a very significant reduction in the number of time steps; an accuracy of 10−7 is achieved with 264 steps of the adaptive method while 35,000 uniform steps are required. Due to the sensitivity of the problem, the global error is much larger than the error tolerance, but is reduced in proportion to it.

4.2 Van der Pol Oscillator

Our second example is the van der Pol oscillator problem, which again we rewrite as a first order system:

We solve up to T = 11 using the adaptive method with m = 2. We plot the solution and the time step histories for a tolerance of 10−10 in Fig. 1. Note that very small steps are needed to resolve the fast transitions, while the problem is quite stiff in the regions where y is nearly constant. Plots for the other tolerances tested, 10−6 and 10−10, are similar though the number of time steps required varies.

4.3 Schrödinger Equation

Lastly, we apply the to evolve a Fourier pseudospectral discretization of the nonlinear Schrödinger equation. Precisely we consider the real problem

for x ∈ (−8, 8), t ∈ (0, 3) with periodic boundary conditions \(\frac {\partial v}{\partial x}=\frac {\partial w}{\partial x}=0\) at x = ±1. We approximate the periodization of the exact solitary wave solution

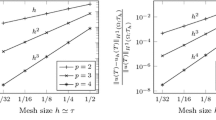

with c = 2π, r = π , s = π 2 − 25. We note that the amplitude of the solitary wave is reduced by about 17 digits at a distance of 8 from its peak so that the interaction with periodic copies is negligible over the simulation time. We use 512 Fourier modes in the computation of the derivatives and experiments show that this is sufficient to represent the solitary wave to machine precision. The implicit system was solved using Newton iterations each time step. In Fig. 2 we present results for m = 3 (8th order) with varying time step and for m varying from 1 to 5 (order 4 through 12) with h = 10−2. In both cases we observe rapid convergence. We also tabulate the errors at the final time and calculate the convergence rates when m = 3 in Table 3. The results are clearly consistent with the design order.

5 Conclusions and Future Work

In conclusion, we have demonstrated that Hermite-Birkhoff interpolation can be used to develop singly-implicit A-stable timestepping methods of arbitrary order. A number of possible generalizations and improvements to the method are possible. These include

-

1.

Stability analysis for variable coefficient or nonlinear problems using the projection properties (5);

-

2.

Improved time step/order adaptivity;

-

3.

Preconditioning of the implicit system for applications to partial differential equations such as spectral/pseudospectral discretizations of equations of Schrödinger type (e.g. integration preconditioners [8, 9]);

-

4.

Development of IMEX schemes combining Hermite and Taylor polynomials.

References

Appelö, D., Hagstrom, T.: On advection by Hermite methods. Pac. J. Appl. Math. 4,125–139 (2012)

Appelö, D., Hagstrom, T.: Solving PDEs with Hermite Interpolation. Lecture Notes in Computational Science, pp. 31–49. Springer, Berlin (2015)

Appelö, D., Kreiss, G., Wang, S.: An explicit Hermite-Taylor method for the Schrödinger equation. Commun. Comput. Phys 21, 1207–1230 (2017)

Appelö, D., Hagstrom, T., Vargas, A.: Hermite methods for the scalar wave equation. SIAM J. Sci. Comput. 40, A3902–A3927 (2018)

Chen, R., Hagstrom, T.: P-adaptive Hermite methods for initial value problems. ESAIM: Math. Model. Numer. Anal. 46, 545–557 (2012)

Chen, X., Appelö, D., Hagstrom, T.: A hybrid Hermite-discontinuous Galerkin method for hyperbolic systems with application to Maxwell’s equations. J. Comput. Phys. 257, 501–520 (2014)

Chidwagyai, P., Nave, J.-C., Rosales, R., Seibold, B.: A comparative study of the efficiency of jet schemes. Int. J. Numer. Anal. Model.-B 3, 297–306 (2012)

Coutsias, E., Hagstrom, T., Hesthaven, J., Torres, D.: Integration preconditioners for differential operators in spectral τ methods. Houst. J. Math. 1995, 21–38 (1996). Special issue: ICOSAHOM

Coutsias, E., Hagstrom, T., Torres, D.: An efficient spectral method for ordinary differential equations with rational function coefficients. Math. Comp. 65, 611–635 (1996)

Goodrich, J., Hagstrom, T., Lorenz, J.: Hermite methods for hyperbolic initial-boundary value problems. Math. Comp. 75, 595–630 (2006)

Griewank, A.: Evaluating Derivatives: Principles and Techniques of Algorithmic Differentiation. SIAM, Philadelphia (2000)

Hairer, E., Wanner, G.: Solving Ordinary Differential Equations II, Stiff and Differential-Algebraic Problems. Springer, New York (1996)

Hairer, E., Norsett, S., Wanner, G.: Solving Ordinary Differential Equations I, Nonstiff Problems. Springer, New York (1992)

Kornelus, A., Appelö, D.: Flux-conservative Hermite methods for simulation of nonlinear conservation laws. J. Sci. Comput. 76, 24–47 (2018)

Liu, C., Iserles, A., Wu, W.: Symmetric and arbitrarily high-order Birkhoff-Hermite time integrators and their long-time behaviour for solving nonlinear Klein-Gordon equations. J. Comput. Phys. 356, 1–30 (2018)

Seibold, B., Rosales, R., Nave, J.-C.: Jet schemes for advection problems. Discrete Contin. Dyn. Syst. Ser. B 17, 1229–1259 (2012)

Vargas, A., Chan, J., Hagstrom, T., Warburton, T.: GPU Acceleration of Hermite Methods for Simulation of Wave Propagation. Lecture Notes in Computational Science, pp. 357–368. Springer, Berlin (2017)

Vargas, A., Chan, J., Hagstrom, T., Warburton, T.: Variations on Hermite methods for wave propagation. Commun. Comput. Phys. 22, 303–337 (2017)

Vargas, A., Hagstrom, T., Chan, J., Warburton, T.: Leapfrog time-stepping for Hermite methods. J. Sci. Comput. 80, 289–314 (2019)

Acknowledgements

This work was supported in part by NSF Grant DMS-1418871. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author and do not necessarily reflect the views of the National Science Foundation.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2020 The Author(s)

About this paper

Cite this paper

Gu, R., Hagstrom, T. (2020). Hermite Methods in Time. In: Sherwin, S.J., Moxey, D., Peiró, J., Vincent, P.E., Schwab, C. (eds) Spectral and High Order Methods for Partial Differential Equations ICOSAHOM 2018. Lecture Notes in Computational Science and Engineering, vol 134. Springer, Cham. https://doi.org/10.1007/978-3-030-39647-3_8

Download citation

DOI: https://doi.org/10.1007/978-3-030-39647-3_8

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-39646-6

Online ISBN: 978-3-030-39647-3

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)