Abstract

By means of the Peano kernel theorem, this paper establishes convergence rates for Hermite-Fejér interpolation based on the function values and first derivatives at Gauss-Jacobi pointsystems. The error bounds show that for a function analytic in the Bernstein ellipse \(\mathcal {E}_{\rho }\) the error decays exponentially, while for a function of finite regularity the error decays at a rate depending on the regularity.

1 Introduction

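For an arbitrarily given system of points

$$\displaystyle \begin{aligned} -1\le x_n^{(n)}<x_{n-1}^{(n)}<\cdots<x_1^{(n)}\le 1,\qquad n=1,2,\ldots, \end{aligned} $$(1)

Faber [3] showed in 1914 that there exists a continuous function f(x) on [−1, 1] for which the sequence of Lagrange interpolation polynomials

$$\displaystyle \begin{aligned} L_n[f](x)=\sum_{k=1}^{n}f(x_k^{(n)})\,\ell_k^{(n)}(x),\qquad \ell_k^{(n)}(x)=\frac{\omega_n(x)}{\omega_n^{\prime}(x_k^{(n)})(x-x_k^{(n)})}, \end{aligned} $$(2)

n = 1, 2, …, does not converge uniformly to f on [−1, 1], where \( \omega _n(x)=(x-x_1^{(n)})(x-x_2^{(n)})\cdots (x-x_n^{(n)})\).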

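By contrast, for the Chebyshev pointsystem

$$\displaystyle \begin{aligned} x_k^{(n)}=\cos\frac{(2k-1)\pi}{2n},\qquad k=1,2,\ldots,n, \end{aligned} $$(3)

Fejér [4] proved in 1916 that if f ∈ C[−1, 1], then the unique polynomial \(H_{2n-1}(f,x)\) of degree at most 2n − 1 determined by

$$\displaystyle \begin{aligned} H_{2n-1}(f,x_k^{(n)})=f(x_k^{(n)}),\qquad H_{2n-1}^{\prime}(f,x_k^{(n)})=0,\qquad k=1,2,\ldots,n, \end{aligned} $$(4)

satisfies \(\lim_{n\rightarrow\infty}\|H_{2n-1}(f)-f\|_{\infty}=0\).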

This polynomial is known as the Hermite-Fejér interpolation polynomial.
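For the Chebyshev pointsystem (3) the fundamental polynomials of this interpolant have a closed form. Chebyshev's differential equation gives \(T_n''(x_k)/T_n'(x_k)=x_k/(1-x_k^2)\) at the nodes, which leads to \(H_{2n-1}(f,x)=\sum_{k=1}^{n}f(x_k)(1-xx_k)\left(\frac{T_n(x)}{n(x-x_k)}\right)^2\). The Python sketch below (NumPy assumed; `chebyshev_nodes` and `fejer_hermite` are hypothetical names, not the authors' code) evaluates this formula. Evaluation points must avoid the nodes themselves, where the expression is 0/0.

```python
import numpy as np

def chebyshev_nodes(n):
    """Chebyshev pointsystem (3): x_k = cos((2k - 1) pi / (2n)), k = 1, ..., n."""
    k = np.arange(1, n + 1)
    return np.cos((2 * k - 1) * np.pi / (2 * n))

def fejer_hermite(f, n, x):
    """Classical Hermite-Fejer interpolant H_{2n-1}(f, x) at the Chebyshev nodes,
    i.e. H(x_k) = f(x_k), H'(x_k) = 0, via the closed form
    sum_k f(x_k) * (1 - x x_k) * (T_n(x) / (n (x - x_k)))**2.
    Points x must lie in [-1, 1] and must not coincide with a node (0/0 there)."""
    xk = chebyshev_nodes(n)
    x = np.asarray(x, dtype=float)[:, None]          # shape (m, 1) for broadcasting
    tn = np.cos(n * np.arccos(x))                     # T_n(x) on [-1, 1]
    h = (1.0 - x * xk) * (tn / (n * (x - xk))) ** 2   # fundamental polynomials h_k(x)
    return h @ f(xk)

xs = np.linspace(-1.0, 1.0, 2001)
for n in (8, 16, 32, 64):
    err = np.max(np.abs(fejer_hermite(np.sin, n, xs) - np.sin(xs)))
    print(n, err)
```

Reproduction of constants (the \(h_k\) sum to 1) is a quick sanity check of the formula; the printed errors for \(\sin x\) can be compared with the much faster Lagrange interpolation mentioned next.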

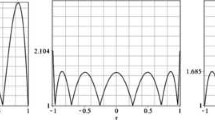

Notably, this Hermite-Fejér interpolation polynomial converges much more slowly than the corresponding Lagrange interpolation polynomial at the Chebyshev pointsystem (3) (see Fig. 1).

Fig. 1 Errors \(\|H_{2n-1}(f,x)-f(x)\|_{\infty}\), \(\|L_n(f,x)-f(x)\|_{\infty}\) and \(\|H^*_{2n-1}(f,x)-f(x)\|_{\infty}\), measured at x = −1:0.001:1, at the Chebyshev pointsystem (3) for \(f(x)=\sin (x)\), \(f(x)= \frac {1}{1+25x^2}\) and \(f(x)=|x|^3\), respectively

To obtain faster convergence, the following Hermite-Fejér interpolation of f(x) at the nodes (1) is considered [6, 7]:

$$\displaystyle \begin{aligned} H^*_{2n-1}(f,x)=\sum_{k=1}^{n}f(x_k^{(n)})\,h_k^{(n)}(x)+\sum_{k=1}^{n}f^{\prime}(x_k^{(n)})\,b_k^{(n)}(x), \end{aligned} $$(5)

where \(h_k^{(n)}(x)=v_k^{(n)}(x)\left (\ell _k^{(n)}(x)\right )^2\), \(b_k^{(n)}(x)=(x-x_k^{(n)})\left (\ell _k^{(n)}(x)\right )^2\) and \( v_k^{(n)}(x)=1-(x-x_k^{(n)})\frac {\omega _n^{\prime \prime }(x_k^{(n)})}{\omega _n^{\prime }(x_k^{(n)})}. \)
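The interpolant (5) can be evaluated directly from these definitions for any set of distinct nodes. The sketch below (NumPy assumed; `hermite_fejer_star` is a hypothetical name) uses the explicit product form of \(\ell_k\) and the identity \(\omega_n''(x_k)/\omega_n'(x_k)=2\sum_{i\ne k}1/(x_k-x_i)\), which holds for any monic \(\omega_n\) with simple zeros. It favors transparency over speed (O(n²) work per point) and is only meant for moderate n.

```python
import numpy as np

def hermite_fejer_star(fvals, dfvals, nodes, x):
    """H*_{2n-1}(f, x) = sum_k [f(x_k) h_k(x) + f'(x_k) b_k(x)] for distinct nodes, where
    l_k(x) = prod_{i != k} (x - x_i) / (x_k - x_i),
    v_k(x) = 1 - (x - x_k) * w''(x_k) / w'(x_k),  with  w''(x_k)/w'(x_k) = 2 sum_{i != k} 1/(x_k - x_i),
    h_k(x) = v_k(x) l_k(x)^2  and  b_k(x) = (x - x_k) l_k(x)^2."""
    nodes = np.asarray(nodes, dtype=float)
    x = np.atleast_1d(np.asarray(x, dtype=float))
    result = np.zeros_like(x)
    for k in range(nodes.size):
        others = np.delete(nodes, k)
        ell = np.prod((x[:, None] - others) / (nodes[k] - others), axis=1)  # l_k(x)
        ratio = 2.0 * np.sum(1.0 / (nodes[k] - others))                    # w''(x_k)/w'(x_k)
        v = 1.0 - (x - nodes[k]) * ratio                                    # v_k(x)
        result += fvals[k] * v * ell**2 + dfvals[k] * (x - nodes[k]) * ell**2
    return result

n = 8
k = np.arange(1, n + 1)
nodes = np.cos((2 * k - 1) * np.pi / (2 * n))     # Chebyshev pointsystem (3)
xs = np.linspace(-1.0, 1.0, 401)
err15 = np.max(np.abs(hermite_fejer_star(nodes**15, 15 * nodes**14, nodes, xs) - xs**15))
err16 = np.max(np.abs(hermite_fejer_star(nodes**16, 16 * nodes**15, nodes, xs) - xs**16))
print(err15, err16, 4.0**-7)
```

Because \(H^*_{2n-1}\) reproduces every polynomial of degree at most 2n − 1, the error for \(x^{2n}\) is exactly \(\omega_n(x)^2\). At the Chebyshev nodes \(\omega_n=T_n/2^{n-1}\), so for n = 8 the maximum error for \(x^{16}\) should be \(4^{-7}\), while \(x^{15}\) is reproduced up to rounding.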

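Fejér [5] and Grünwald [7] also showed that the convergence of the Hermite-Fejér interpolation of f(x) depends on the choice of the nodes. The pointsystem (1) is called normal if for all n

$$\displaystyle \begin{aligned} v_k^{(n)}(x)\ge 0,\qquad x\in[-1,1],\ k=1,2,\ldots,n, \end{aligned} $$(6)

while it is called strongly normal if for all n

$$\displaystyle \begin{aligned} v_k^{(n)}(x)\ge c>0,\qquad x\in[-1,1],\ k=1,2,\ldots,n, \end{aligned} $$(7)

for some positive constant c.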
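Normality can be probed numerically. Recall that the pointsystem is normal if \(v_k(x)\ge 0\) on [−1, 1] for all k, and strongly normal if \(v_k(x)\ge c>0\). For the zeros of \(P_n^{(\alpha,\beta)}\), the Jacobi differential equation gives \(\omega_n''(x_k)/\omega_n'(x_k)=[\alpha-\beta+(\alpha+\beta+2)x_k]/(1-x_k^2)\). Since \(v_k\) is linear in x, its minimum over [−1, 1] is attained at an endpoint. The sketch below (SciPy's `roots_jacobi` assumed; `min_v` is a hypothetical helper) computes \(\min_{k,x}v_k(x)\). For the three parameter pairs tried, it suggests the following:

- the Chebyshev case α = β = −1/2 is strongly normal (the minimum exceeds 1/2);
- the Legendre case α = β = 0 is normal, but its minimum tends to 0;
- α = β = 1/2 is not normal.

```python
import numpy as np
from scipy.special import roots_jacobi

def min_v(n, alpha, beta):
    """Smallest value of v_k(x) over k = 1..n and x in [-1, 1] for the zeros of P_n^{(alpha,beta)}.
    Uses w''(x_k)/w'(x_k) = (alpha - beta + (alpha + beta + 2) x_k) / (1 - x_k^2); v_k is linear in x,
    so its minimum over [-1, 1] is attained at x = -1 or x = 1."""
    xk, _ = roots_jacobi(n, alpha, beta)
    ratio = (alpha - beta + (alpha + beta + 2.0) * xk) / (1.0 - xk**2)
    v_left = 1.0 - (-1.0 - xk) * ratio     # v_k(-1)
    v_right = 1.0 - (1.0 - xk) * ratio     # v_k(+1)
    return min(v_left.min(), v_right.min())

for alpha, beta in [(-0.5, -0.5), (0.0, 0.0), (0.5, 0.5)]:
    print(alpha, beta, [min_v(n, alpha, beta) for n in (10, 50, 250)])
```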

Fejér [5] (see also Szegö [12, p. 339]) showed that for the zeros of the Jacobi polynomial \(P_n^{(\alpha ,\beta )}(x)\) of degree n (α > −1, β > −1)

For (strongly) normal pointsystems, Grünwald [7] showed that for every \(f\in C^1(-1,1)\), \(\lim _{n\rightarrow \infty }\|H^*_{2n-1}(f)-f\|{ }_{\infty }=0\) if \(\{x_k^{(n)}\}\) is strongly normal, satisfying (7), and \(\{f'(x_k^{(n)})\}\) satisfies

while \(\lim _{n\rightarrow \infty }\|H^*_{2n-1}(f)-f\|{ }_{\infty }=0\) on [−1 + 𝜖, 1 − 𝜖] for each fixed 0 < 𝜖 < 1 if \(\{x_k^{(n)}\}\) is normal and \(\{f'(x_k^{(n)})\}\) is uniformly bounded for n = 1, 2, … (see Note 1).

Moreover, Szabados [11] showed that the Hermite-Fejér interpolation (5) at the Chebyshev pointsystem (3) satisfies

where \(p^*\) is the polynomial of best approximation to f of degree at most 2n − 1 and \(\|f-p^*\|{ }_{C^{1}[-1,1]}=\max _{0\le j\le 1}\|f^{(j)}-{p^*}^{(j)}\|{ }_{\infty }\).

Hermite-Fejér interpolation is widely used in computer-aided geometric design, where boundary conditions include derivative information. Its convergence rate in the infinity norm has been studied extensively in [5,6,7, 11, 14]. An efficient algorithm for the fast implementation of Hermite-Fejér interpolation at the zeros of Jacobi polynomials can be found in [17].

In this paper, we establish the following convergence rates of the Hermite-Fejér interpolation \(H^*_{2n-1}(f,x)\) at Gauss-Jacobi pointsystems.

- If f is analytic in \(\mathcal {E}_{\rho }\) with |f(z)| ≤ M, then

  $$\displaystyle \begin{aligned} \|f(x)-H^*_{2n-1}(f,x)\|{}_{\infty}=\left\{\begin{array}{ll} {\displaystyle O\left(\frac{4\tau_nM[2n\rho^2+(1-2n)\rho]}{(\rho-1)^2\rho^{2n}}\right)},& \gamma\le 0,\\ {\displaystyle O\left(\frac{n^{2+2\gamma}[2n\rho^2+(1-2n)\rho]}{(\rho-1)^2\rho^{2n}}\right)},&\gamma> 0,\end{array}\right. \qquad \gamma=\max\{\alpha,\beta\}, \end{aligned} $$(9)

  where

  $$\displaystyle \begin{aligned} \tau_n=\left\{\begin{array}{ll} O(n^{-1.5-\min\{\alpha,\beta\}}\log n),& \mbox{if }{-1<\min\{\alpha,\beta\}\le\gamma\le -\frac{1}{2}},\\ O(n^{2\gamma-\min\{\alpha,\beta\}-\frac{1}{2}}),& \mbox{if }{-1<\min\{\alpha,\beta\}\le -\frac{1}{2}<\gamma\le 0},\\ O(n^{2\gamma}),& \mbox{if }{-\frac{1}{2}<\min\{\alpha,\beta\}\le \gamma}.\end{array}\right. \end{aligned} $$(10)

- If f(x) has an absolutely continuous (r − 1)st derivative \(f^{(r-1)}\) on [−1, 1] for an integer r ≥ 3, and an rth derivative \(f^{(r)}\) of bounded variation \(V_r=\mathrm{Var}(f^{(r)})<\infty\), then

  $$\displaystyle \begin{aligned} \|f(x)-H^*_{2n-1}(f,x)\|{}_{\infty}=\left\{\begin{array}{ll} {\displaystyle O\left(n^{-r}\log n\right)}, &\gamma\leq -\frac{1}{2}, \\ {\displaystyle O\left(n^{2\gamma-r+1}\right)},&\gamma>-\frac{1}{2},\end{array}\right. \end{aligned} $$(11)

  while if f(x) is differentiable and f′(x) is bounded on [−1, 1], then

  $$\displaystyle \begin{aligned} \|f(x)-H^*_{2n-1}(f,x)\|{}_{\infty}=\left\{\begin{array}{ll} {\displaystyle O\left(n^{-1}\log n\right)}, &\gamma\leq -\frac{1}{2}, \\ {\displaystyle O\left(n^{2\gamma}\right)},&\gamma>-\frac{1}{2}.\end{array}\right. \end{aligned} $$

Comparing these results with

which is sharp and attainable (see Fig. 2), we see that \(H^*_{2n-1}(f,x)\) converges much faster than \(H_{2n-1}(f,x)\) for analytic functions and for functions of higher regularity (see Fig. 1). In particular, \(H_{2n-1}(f,x)\) diverges at Gauss-Jacobi pointsystems with γ ≥ 0, whereas \(H^*_{2n-1}(f,x)\) converges for functions analytic in the Bernstein ellipse or of finite regularity.

For simplicity, in what follows we abbreviate \(x_k^{(n)}\) as \(x_k\), \(\ell _k^{(n)}(x)\) as \(\ell_k(x)\), \(h_k^{(n)}(x)\) as \(h_k(x)\), and \(b_k^{(n)}(x)\) as \(b_k(x)\). We write A ∼ B if there exist two positive constants \(c_1\) and \(c_2\) such that \(c_1\le |A|/|B|\le c_2\).

2 Main Results

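Suppose f(x) satisfies a Dini-Lipschitz condition on [−1, 1]. Then it has the following absolutely and uniformly convergent Chebyshev series expansion

$$\displaystyle \begin{aligned} f(x)={\sum_{j=0}^{\infty}}^{\prime}c_jT_j(x),\qquad c_j=\frac{2}{\pi}\int_{-1}^{1}\frac{f(x)T_j(x)}{\sqrt{1-x^2}}\,dx, \end{aligned} $$(12)

where the prime indicates that the first term of the sum is halved, and \(T_j(x)=\cos {}(j\cos ^{-1}x)\) denotes the Chebyshev polynomial of degree j.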

Lemma 1

- (i) (Bernstein [2]) If f is analytic with |f(z)| ≤ M in the region bounded by the ellipse \(\mathcal {E}_{\rho }\) with foci ±1 and major and minor semiaxis lengths summing to ρ > 1, then for each j ≥ 0,

  $$\displaystyle \begin{aligned} |c_j|\le {\displaystyle\frac{2M}{\rho^j}}. \end{aligned} $$(13)

- (ii) (Trefethen [13]) For an integer r ≥ 1, if f(x) has an absolutely continuous (r − 1)st derivative \(f^{(r-1)}\) on [−1, 1] and an rth derivative \(f^{(r)}\) of bounded variation \(V_r=\mathrm{Var}(f^{(r)})<\infty\), then for each j ≥ r + 1,

  $$\displaystyle \begin{aligned} |c_j|\le{\displaystyle\frac{2V_r}{\pi j(j-1)\cdots(j-r)}}. \end{aligned} $$(14)
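Both bounds can be checked on concrete functions, with \(c_j=\frac{2}{\pi}\int_{-1}^1 f(x)T_j(x)(1-x^2)^{-1/2}dx\) under the halved-first-term convention. The sketch below (NumPy assumed; helper names hypothetical; quadrature size and test functions are illustrative choices) approximates the \(c_j\) by Gauss-Chebyshev quadrature. It then checks each bound on one function:

- (13) for the Runge function \(1/(1+25x^2)\), whose poles ±i/5 lie outside \(\mathcal{E}_\rho\) for ρ < (1 + √26)/5, with M sampled on the ellipse boundary (maximum modulus principle);
- (14) for \(|x|^3\), which has r = 3 and \(V_3=\mathrm{Var}(6\,\mathrm{sign}\,x)=12\).

```python
import numpy as np

def cheb_coeffs(f, jmax, N=4000):
    """c_j = (2/pi) int f(x) T_j(x) / sqrt(1 - x^2) dx, j = 0..jmax, via N-point Gauss-Chebyshev
    quadrature: c_j ~ (2/N) sum_k f(cos t_k) cos(j t_k), t_k = (2k - 1) pi / (2N)."""
    t = (2 * np.arange(1, N + 1) - 1) * np.pi / (2 * N)
    j = np.arange(jmax + 1)[:, None]
    return (2.0 / N) * (np.cos(j * t) @ f(np.cos(t)))

def cheb_sum(c, x):
    """Primed sum c_0/2 + sum_{j>=1} c_j T_j(x) for x in [-1, 1]."""
    j = np.arange(len(c))
    return np.cos(np.outer(np.arccos(x), j)) @ c - c[0] / 2

runge = lambda z: 1.0 / (1.0 + 25.0 * z**2)

# Bound (13): choose 1 < rho < (1 + sqrt(26))/5 and sample |f| on the boundary of E_rho.
rho = 1.1
phi = np.linspace(0.0, 2.0 * np.pi, 20001)
M = np.max(np.abs(runge(0.5 * (rho * np.exp(1j * phi) + np.exp(-1j * phi) / rho))))
c = cheb_coeffs(runge, 60)
ok13 = np.all(np.abs(c) <= 2 * M / rho ** np.arange(61))

# Bound (14): f = |x|^3, f'' = 6|x| absolutely continuous, f''' = 6 sign(x), V_3 = 12.
c3 = cheb_coeffs(lambda x: np.abs(x) ** 3, 40)
jj = np.arange(4, 41)
ok14 = np.all(np.abs(c3[4:]) <= 2 * 12 / (np.pi * jj * (jj - 1) * (jj - 2) * (jj - 3)))
print(ok13, ok14)
```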

Suppose \(-1<x_n<x_{n-1}<\cdots <x_1<1\) are the roots of \(P_n^{(\alpha ,\beta )}(x)\) (α, β > −1), arranged in decreasing order, and \(\{w_j\}_{j=1}^n\) are the corresponding Gauss-Jacobi quadrature weights.
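Numerically, the Gauss-Jacobi nodes and weights are available from SciPy's `scipy.special.roots_jacobi`. The sketch below (SciPy assumed; the helper name and the parameter values are illustrative) reorders them into the decreasing convention used here. It also checks two elementary facts:

- the weights sum to \(\int_{-1}^1(1-x)^\alpha(1+x)^\beta dx=2^{\alpha+\beta+1}\Gamma(\alpha+1)\Gamma(\beta+1)/\Gamma(\alpha+\beta+2)\);
- \(P_n^{(\alpha,\beta)}\) vanishes at the nodes.

```python
import numpy as np
from scipy.special import roots_jacobi, eval_jacobi, gamma

def gauss_jacobi_decreasing(n, alpha, beta):
    """Zeros x_1 > x_2 > ... > x_n of P_n^{(alpha,beta)} and the matching Gauss-Jacobi weights."""
    x, w = roots_jacobi(n, alpha, beta)
    order = np.argsort(x)[::-1]          # enforce the decreasing ordering used in the text
    return x[order], w[order]

n, alpha, beta = 12, 0.3, -0.6
x, w = gauss_jacobi_decreasing(n, alpha, beta)
# sum_j w_j should equal int_{-1}^{1} (1 - x)^alpha (1 + x)^beta dx
mass = 2.0 ** (alpha + beta + 1) * gamma(alpha + 1) * gamma(beta + 1) / gamma(alpha + beta + 2)
print(x[:3], w.sum(), mass, np.max(np.abs(eval_jacobi(n, alpha, beta, x))))
```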

Lemma 2

For j = 1, 2, …, n, we have

where \(\sigma_n=+1\) for even n and \(\sigma_n=-1\) for odd n.

Proof

Let \(z_n=\int _{-1}^1(1-x)^{\alpha }(1+x)^{\beta }[P_n^{(\alpha ,\beta )}(x)]^2dx\), and let \(K_n\) denote the leading coefficient of \(P_n^{(\alpha ,\beta )}(x)\). From Abramowitz and Stegun [1], we have

Furthermore, by Szegö [12, (15.3.1)] (also see Wang et al. [15]), we obtain

which implies the desired result (15). □
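The two classical quantities used in this proof have standard closed forms (see Abramowitz and Stegun [1], Chapter 22):

- \(K_n=\frac{\Gamma(2n+\alpha+\beta+1)}{2^n n!\,\Gamma(n+\alpha+\beta+1)}\);
- \(z_n=\frac{2^{\alpha+\beta+1}}{2n+\alpha+\beta+1}\frac{\Gamma(n+\alpha+1)\Gamma(n+\beta+1)}{n!\,\Gamma(n+\alpha+\beta+1)}\).

The sketch below (SciPy assumed; function names hypothetical, parameters arbitrary) checks both independently. It recovers \(K_n\) from one evaluation of \(P_n\) away from its zeros, and \(z_n\) by Gauss-Jacobi quadrature with n + 1 nodes, which is exact for the degree-2n integrand.

```python
import numpy as np
from scipy.special import roots_jacobi, eval_jacobi, gammaln

def leading_coeff(n, a, b):
    """K_n = Gamma(2n+a+b+1) / (2^n n! Gamma(n+a+b+1)), evaluated in log form."""
    return np.exp(gammaln(2 * n + a + b + 1) - gammaln(n + 1) - gammaln(n + a + b + 1)) / 2.0 ** n

def norm_sq(n, a, b):
    """z_n = 2^(a+b+1)/(2n+a+b+1) * Gamma(n+a+1) Gamma(n+b+1) / (n! Gamma(n+a+b+1))."""
    return 2.0 ** (a + b + 1) / (2 * n + a + b + 1) * np.exp(
        gammaln(n + a + 1) + gammaln(n + b + 1) - gammaln(n + 1) - gammaln(n + a + b + 1))

n, a, b = 7, 0.4, -0.7
roots, _ = roots_jacobi(n, a, b)
# P_n(x0) = K_n * prod_i (x0 - x_i) for any x0, so one evaluation off the zeros determines K_n.
K_check = eval_jacobi(n, a, b, 1.5) / np.prod(1.5 - roots)
# (n+1)-point Gauss-Jacobi quadrature integrates [P_n]^2 (degree 2n) exactly.
xq, wq = roots_jacobi(n + 1, a, b)
z_check = np.sum(wq * eval_jacobi(n, a, b, xq) ** 2)
print(K_check, leading_coeff(n, a, b), z_check, norm_sq(n, a, b))
```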

Lemma 3

For j = 1, 2, …, n, we have

Proof

From \( w_j=O\left ( \frac {2^{\alpha +\beta +1}\pi }{n}\left (\sin \frac {\theta _j}{2}\right )^{2\alpha +1}\left (\cos \frac {\theta _j}{2}\right )^{2\beta +1}\right ) \) (Szegö [12, (15.3.10)]) and \(1-x_j^2=4\sin^2\frac{\theta_j}{2}\cos^2\frac{\theta_j}{2}\) for \(x_j=\cos \theta _j\), we see that \( (1-x_j^2)w_j=O\left (\frac {2^{\alpha +\beta +3}\pi }{n}\left (\sin \frac {\theta _j}{2}\right )^{2\alpha +3}\left (\cos \frac {\theta _j}{2}\right )^{2\beta +3}\right ) \), which yields the desired result. □
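The weight asymptotics quoted from Szegö can be inspected numerically. The sketch below (SciPy assumed; `weight_ratio` is a hypothetical helper) divides each \(w_j\) by the model \(\frac{2^{\alpha+\beta+1}\pi}{n}(\sin\frac{\theta_j}{2})^{2\alpha+1}(\cos\frac{\theta_j}{2})^{2\beta+1}\). For α = β = −1/2 all weights equal π/n, so the ratio is exactly 1. For the other pairs printed, one can watch the ratio stay within a fixed band as n grows, which is all an O-estimate requires.

```python
import numpy as np
from scipy.special import roots_jacobi

def weight_ratio(n, a, b):
    """w_j / [(2^(a+b+1) pi / n) sin(theta_j/2)^(2a+1) cos(theta_j/2)^(2b+1)], x_j = cos(theta_j)."""
    x, w = roots_jacobi(n, a, b)
    theta = np.arccos(x)
    model = (2.0 ** (a + b + 1) * np.pi / n) * np.sin(theta / 2) ** (2 * a + 1) \
        * np.cos(theta / 2) ** (2 * b + 1)
    return w / model

for a, b in [(-0.5, -0.5), (0.0, 0.0), (0.5, -0.3), (1.5, 0.8)]:
    for n in (50, 200, 800):
        r = weight_ratio(n, a, b)
        print(f"a={a:+.1f} b={b:+.1f} n={n:4d}  min={r.min():.3f}  max={r.max():.3f}")
```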

Lemma 4 ([10, 16])

For t ∈ [−1, 1], let \(x_m\) be the root of the Jacobi polynomial \(P_n^{(\alpha ,\beta )}\) closest to t. Then for k = 1, 2, …, n, we have

Lemma 5 (Szegö [12, Theorem 8.1.2])

Let α, β be real (not necessarily greater than −1) and \(x_{k}=\cos \theta _{k}\). Then for each fixed k,

$$\displaystyle \begin{aligned} \lim_{n\rightarrow\infty} n\theta_k=j_k, \end{aligned} $$

where \(j_k\) is the kth positive zero of the Bessel function \(J_\alpha\).
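Lemma 5 can be observed numerically for the largest zero (k = 1). The sketch below (SciPy assumed; the helper name is hypothetical) restricts to integer α so that `scipy.special.jn_zeros` can supply \(j_1\). It compares \(n\theta_1\) with the first positive zero of \(J_\alpha\); the gap shrinks roughly like 1/n.

```python
import numpy as np
from scipy.special import roots_jacobi, jn_zeros

def scaled_first_angle(n, alpha, beta):
    """n * theta_1, where x_1 = cos(theta_1) is the largest zero of P_n^{(alpha,beta)}."""
    x, _ = roots_jacobi(n, alpha, beta)
    return n * np.arccos(np.max(x))

for alpha in (0, 1):                        # integer orders, so jn_zeros can supply j_1
    j1 = jn_zeros(alpha, 1)[0]              # first positive zero of J_alpha
    approx = [scaled_first_angle(n, float(alpha), 0.0) for n in (50, 200, 800)]
    print(alpha, j1, approx)
```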

Lemma 6

For k = 1, 2, …, n, we have

Proof

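Note that \(P_n^{(\alpha ,\beta )}(x)\) satisfies the second-order linear homogeneous Sturm-Liouville differential equation [12, (4.2.1)]

$$\displaystyle \begin{aligned} (1-x^2)y''+[\beta-\alpha-(\alpha+\beta+2)x]y'+n(n+\alpha+\beta+1)y=0. \end{aligned} $$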

Since \(\omega _n(x)=\frac {P_n^{(\alpha ,\beta )}(x)}{K_n}\), we get

In addition, by Lemma 5 with \(x_j=\cos \theta _j\), we see that \(\theta _1\sim \frac {1}{n}\). Similarly, since \(P_n^{(\alpha ,\beta )}(-x)=(-1)^nP_n^{(\beta ,\alpha )}(x)\), we have \(\pi -\theta _n\sim \frac {1}{n}\). These together yield

and then (20) yields the desired result. □
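The step of this proof that passes from the differential equation to \(\omega_n''/\omega_n'\) at the nodes can be made explicit. At a zero \(x_k\) of \(P_n^{(\alpha,\beta)}\) the equation reduces to \(\omega_n''(x_k)/\omega_n'(x_k)=[\alpha-\beta+(\alpha+\beta+2)x_k]/(1-x_k^2)\). Independently, any monic \(\omega_n\) with simple zeros satisfies \(\omega_n''(x_k)/\omega_n'(x_k)=2\sum_{i\ne k}1/(x_k-x_i)\). The sketch below (SciPy assumed; parameter values arbitrary) compares the two expressions numerically.

```python
import numpy as np
from scipy.special import roots_jacobi

n, a, b = 20, 0.3, -0.4
x, _ = roots_jacobi(n, a, b)

diff = x[:, None] - x[None, :]                   # diff[k, i] = x_k - x_i
np.fill_diagonal(diff, np.inf)                   # 1/inf = 0 drops the i == k term
ratio_generic = 2.0 * np.sum(1.0 / diff, axis=1)  # w''(x_k)/w'(x_k) for w = prod_i (x - x_i)
ratio_ode = (a - b + (a + b + 2.0) * x) / (1.0 - x**2)
print(np.max(np.abs(ratio_generic - ratio_ode) / np.abs(ratio_ode)))
```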

Theorem 1

Suppose \(\{x_j\}_{j=1}^n\) are the roots of \(P_n^{(\alpha ,\beta )}(x)\) with α, β > −1. Then, for f analytic in \(\mathcal {E}_{\rho }\) with |f(z)| ≤ M, the Hermite-Fejér interpolation (5) at \(\{x_j\}_{j=1}^n\) has the convergence rate (9).

Proof

Since the Chebyshev series expansion of f(x) converges uniformly under these assumptions, and the Hermite-Fejér interpolation (5) reproduces polynomials of degree at most 2n − 1, so that \(E(T_{j},x)=T_j(x)-H^*_{2n-1}(T_{j},x)=0\) for j = 0, 1, …, 2n − 1, it follows that

Furthermore, \(|E(T_{j},x)|=|T_j(x)-\sum _{i=1}^nT_j(x_i)h_i(x)-\sum _{i=1}^nT_j^{\prime }(x_i)b_i(x)|\). In what follows, we focus on estimating \(|E(T_j,x)|\) for j ≥ 2n.

In the case γ ≤ 0, the pointsystem is normal, which implies that \(h_i(x)\ge 0\) for all i = 1, 2, …, n and all x ∈ [−1, 1],

Then we have

In addition, by Lemma 2, we obtain for j = 2n, 2n + 1, … that

(\(U_{j-1}\) is the Chebyshev polynomial of the second kind of degree j − 1), since \(\sqrt {\frac {n!\Gamma (n+\alpha +\beta +1)} {\Gamma (n+\alpha +1)\Gamma (n+\beta +1)}}\) is uniformly bounded in n for α, β > −1 due to

which shows that \(\frac {n!\Gamma (n+\alpha +\beta +1)} {\Gamma (n+\alpha +1)\Gamma (n+\beta +1)}\) is uniformly bounded in n, and hence so is its square root. Here \(\Lambda _n=\max _{x\in [-1,1]}\sum _{i=1}^n|\ell _i(x)|\) is the Lebesgue constant. Then from

(see Szegö [12, pp. 168, 354]) and

we have

Then by (22) and (23), we find \(|E(T_j,x)|\le 2+j\tau_n<2j\tau_n\) for j ≥ 2n, and consequently

which, directly following [18], leads to the desired result.
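A worked version of this final summation step is given below. It is a sketch that assumes, as in the proof, that \(E(T_j,x)=0\) for j ≤ 2n − 1 and \(|E(T_j,x)|<2j\tau_n\) for j ≥ 2n. Combining these with (13) and summing the arithmetico-geometric tail yields exactly the γ ≤ 0 case of (9):

```latex
\begin{aligned}
|f(x)-H^*_{2n-1}(f,x)|
  &\le \sum_{j=2n}^{\infty}|c_j|\,|E(T_j,x)|
   \le \sum_{j=2n}^{\infty}\frac{2M}{\rho^{j}}\cdot 2j\tau_n
   = 4M\tau_n\sum_{j=2n}^{\infty}\frac{j}{\rho^{j}},\\
\sum_{j=m}^{\infty} j\,q^{j}
  &= \frac{q^{m}\,[\,m-(m-1)q\,]}{(1-q)^{2}},\qquad 0<q<1,\\
\sum_{j=2n}^{\infty}\frac{j}{\rho^{j}}
  &= \frac{2n\rho^{2}+(1-2n)\rho}{(\rho-1)^{2}\rho^{2n}}
  \qquad (q=1/\rho,\ m=2n).
\end{aligned}
```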

In the case γ > 0, starting from \(|E(T_{j},x)|=|T_j(x)-\sum _{i=1}^nT_j(x_i)h_i(x)-\sum _{i=1}^nT_j^{\prime }(x_i)b_i(x)|\), Lemmas 3 and 6 give

and

These, together with

give \(|E(T_{j},x)|=O\left (j^{2+2\gamma }\right )\) for j ≥ 2n, which, by the same argument as in the case γ ≤ 0, implies the desired result. □
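The uniform boundedness of the Gamma-function ratio \(\frac{n!\,\Gamma(n+\alpha+\beta+1)}{\Gamma(n+\alpha+1)\Gamma(n+\beta+1)}\), used in the case γ ≤ 0, also follows from Stirling's formula \(\Gamma(n+a)/\Gamma(n+b)\sim n^{a-b}\). By that formula the ratio tends to 1; a second-order expansion gives \(\log(\text{ratio})\approx\alpha\beta/n\). The sketch below (SciPy's `gammaln` assumed; parameter pairs illustrative) evaluates the ratio in log form to avoid overflow.

```python
import numpy as np
from scipy.special import gammaln

def gamma_ratio(n, a, b):
    """n! Gamma(n+a+b+1) / (Gamma(n+a+1) Gamma(n+b+1)), computed in log form to avoid overflow."""
    return np.exp(gammaln(n + 1) + gammaln(n + a + b + 1)
                  - gammaln(n + a + 1) - gammaln(n + b + 1))

ns = np.array([1.0, 10.0, 1e2, 1e4, 1e6])
for a, b in [(-0.9, -0.9), (-0.5, 0.7), (2.0, 3.0)]:
    print(a, b, gamma_ratio(ns, a, b))
```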

From the definition of \(\tau_n\), we see that the power of n in \(\tau_n\), and hence in the bound (9), is smallest when \(\alpha =\beta =-\frac {1}{2}\). For functions of limited regularity, we use the following result.

Lemma 7 (Vértesi [14])

Suppose \(\{x_j\}_{j=1}^n\) are the roots of \(P_n^{(\alpha ,\beta )}(x)\). Then for every continuous function f(x) we have

where w(f;t) = w(t) is the modulus of continuity of f(x), and \({\bar \gamma }=\max \left (\alpha , \beta , -\frac {1}{2}\right )\).

Theorem 2

Suppose \(\{x_j\}_{j=1}^n\) are the roots of \(P_n^{(\alpha ,\beta )}(x)\) (α, β > −1), and f(x) has an absolutely continuous (r − 1)st derivative \(f^{(r-1)}\) on [−1, 1] for some r ≥ 3 and an rth derivative \(f^{(r)}\) of bounded variation \(V_r<\infty\). Then the Hermite-Fejér interpolation (5) at \(\{x_j\}_{j=1}^n\) has the convergence rate (11).

Proof

Consider the linear functional \(L(g)=E_n(g,x)\), where, for \(g\in C^1[-1,1]\), \(E_n(g,x)\) is defined by

By the Peano kernel theorem (see Peano [9] or Kowalewski [8]), for n ≥ r, \(E_n(f,x)\) can be represented as

with \(K_{r}(t) = \frac {1}{(r-1)!}L\left ((x-t)^{r-1}_{+}\right )\) for r = 3, 4, ⋯, that is

where

Moreover, noting that

we get the following identity

where \(K_2(t)\) is defined by

In addition, it is easily verified that \(K_s(-1)=K_s(1)=0\) for s = 2, 3, ….
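The Peano representation can be checked numerically for one instance. The sketch assumes \(E_n(g,x)=g(x)-H^*_{2n-1}(g,x)\), which is how the error is used throughout, so \(K_r(t)=\frac{1}{(r-1)!}L\big((\cdot-t)_+^{r-1}\big)\). Then \(L(f)\) should equal \(\int_{-1}^1K_r(t)f^{(r)}(t)\,dt\) whenever \(H^*_{2n-1}\) reproduces polynomials of degree r − 1.

The sketch below (NumPy/SciPy assumed) compares both sides for r = 3 and n = 3. The helper names, the Jacobi parameters, the point x = 0.37 and the test function \(f(t)=e^{2t}\) are all arbitrary choices. The integral is computed piecewise, with breakpoints at the nodes and at x. The sketch also checks \(K_3(\pm1)=0\). Note that r ≥ 3 is needed here, because the derivative of the truncated power is used.

```python
import numpy as np
from math import factorial
from scipy.integrate import quad
from scipy.special import roots_jacobi

def hf_star_basis(nodes, x):
    """h_k(x) and b_k(x), k = 1..n, of the Hermite-Fejer interpolant (5) at a scalar x."""
    n = nodes.size
    h, b = np.empty(n), np.empty(n)
    for k in range(n):
        others = np.delete(nodes, k)
        ell = np.prod((x - others) / (nodes[k] - others))   # l_k(x)
        ratio = 2.0 * np.sum(1.0 / (nodes[k] - others))     # w''(x_k) / w'(x_k)
        h[k] = (1.0 - (x - nodes[k]) * ratio) * ell**2      # v_k(x) l_k(x)^2
        b[k] = (x - nodes[k]) * ell**2
    return h, b

def peano_kernel(t, nodes, h, b, x, r):
    """K_r(t) = L((. - t)_+^{r-1}) / (r-1)!, with L(g) = g(x) - H*_{2n-1}(g, x); requires r >= 3."""
    g = lambda s: np.maximum(s - t, 0.0) ** (r - 1)              # truncated power (s - t)_+^{r-1}
    dg = lambda s: (r - 1) * np.maximum(s - t, 0.0) ** (r - 2)   # its derivative in s
    return (g(x) - h @ g(nodes) - b @ dg(nodes)) / factorial(r - 1)

alpha, beta, n, r, x = 0.3, -0.2, 3, 3, 0.37
nodes, _ = roots_jacobi(n, alpha, beta)
h, b = hf_star_basis(nodes, x)
f = lambda t: np.exp(2 * t)
df = lambda t: 2 * np.exp(2 * t)
d3f = lambda t: 8 * np.exp(2 * t)                   # f^{(r)} for r = 3

lhs = f(x) - (h @ f(nodes) + b @ df(nodes))         # E_n(f, x) computed directly
rhs, _ = quad(lambda t: peano_kernel(t, nodes, h, b, x, r) * d3f(t), -1.0, 1.0,
              points=np.sort(np.append(nodes, x)).tolist())
print(lhs, rhs)
```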

Since \(f^{(r)}\) is of bounded variation, applying the techniques used for Theorem 2 and Lemma 4 in [16], we get

and

respectively. Then from (27) and (28), we obtain

In addition, by Lemma 7, we have

Combining (30) and (31), we obtain the desired results by using

Finally, Fig. 3 uses the analytic function \(f(x)=\frac {1}{1+25x^2}\) and the function of limited regularity \(f(x)=|x|^5\) to show that the convergence rate of \(\|f(x)-H^*_{2n-1}(f,x)\|{ }_{\infty }\) depends on α and β.
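A small experiment in the spirit of Fig. 3 can be reproduced as follows. This is not the authors' script: the grid, the values of n and the (α, β) pairs are illustrative choices, and `hf_star` and `max_error` are hypothetical helpers. It measures \(\max|f-H^*_{2n-1}(f)|\) on a grid for the Runge function and for \(|x|^5\) at Gauss-Jacobi nodes (SciPy's `roots_jacobi` assumed).

```python
import numpy as np
from scipy.special import roots_jacobi

def hf_star(f, df, nodes, xs):
    """H*_{2n-1}(f, xs) from (5): sum_k l_k(x)^2 [f(x_k) v_k(x) + f'(x_k)(x - x_k)]."""
    out = np.zeros_like(xs)
    for k in range(nodes.size):
        others = np.delete(nodes, k)
        ell = np.prod((xs[:, None] - others) / (nodes[k] - others), axis=1)  # l_k(x)
        ratio = 2.0 * np.sum(1.0 / (nodes[k] - others))                     # w''(x_k)/w'(x_k)
        dx = xs - nodes[k]
        out += (f(nodes[k]) * (1.0 - dx * ratio) + df(nodes[k]) * dx) * ell**2
    return out

def max_error(f, df, n, alpha, beta, xs):
    """max over xs of |f - H*_{2n-1}(f)| with nodes at the zeros of P_n^{(alpha,beta)}."""
    nodes, _ = roots_jacobi(n, alpha, beta)
    return np.max(np.abs(f(xs) - hf_star(f, df, nodes, xs)))

runge = lambda t: 1.0 / (1.0 + 25.0 * t**2)
drunge = lambda t: -50.0 * t / (1.0 + 25.0 * t**2) ** 2
f5 = lambda t: np.abs(t) ** 5
df5 = lambda t: 5.0 * t * np.abs(t) ** 3

xs = np.linspace(-1.0, 1.0, 2001)
for name, f, df in [("Runge", runge, drunge), ("|x|^5", f5, df5)]:
    for alpha, beta in [(-0.5, -0.5), (0.0, 0.0), (1.0, 1.0)]:
        errs = [max_error(f, df, n, alpha, beta, xs) for n in (10, 20, 40)]
        print(name, alpha, beta, ["%.2e" % e for e in errs])
```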

Notes

- 1.

In fact, Grünwald [7] considered the more general case of an arbitrary vector \(\{d_k^{(n)}\}\) in place of \(\{f'(x_k^{(n)})\}\).

References

Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions. National Bureau of Standards, Washington (1964)

Bernstein, S.: Sur l’ordre de la meilleure approximation des fonctions continues par les polynômes de degré donné. Mem. Cl. Sci. Acad. Roy. Belg. 4, 1–103 (1912)

Faber, G.: Über die interpolatorische Darstellung stetiger Funktionen. Jahresber. Deut. Math. Verein. 23, 192–210 (1914)

Fejér, L.: Über Interpolation. Nachrichten der Gesellschaft der Wissenschaften zu Göttingen, Mathematisch-physikalische Klasse, 66–91 (1916)

Fejér, L.: Lagrangesche interpolation und die zugehörigen konjugierten Punkte. Math. Ann. 106, 1–55 (1932)

Fejér, L.: Bestimmung derjenigen Abszissen eines Intervalles, für welche die Quadratsumme der Grundfunktionen der Lagrangeschen Interpolation im Intervalle ein Möglichst kleines Maximum Besitzt. Ann. della Sc. Norm. Super. di Pisa 1, 263–276 (1932)

Grünwald, G.: On the theory of interpolation. Acta Math. 75, 219–245 (1942)

Kowalewski, G.: Interpolation und Genäherte Quadratur. Teubner-Verlag, Leipzig (1932)

Peano, G.: Resto nelle formule di quadrature, espresso con un integrale definito. Rom. Acc. L. Rend. 22, 562–569 (1913)

Sun, X.: Lagrange interpolation of functions of generalized bounded variation. Acta Math. Hungar. 53, 75–84 (1989)

Szabados, J.: On the order of magnitude of fundamental polynomials of Hermite interpolation. Acta Math. Hungar. 61, 357–368 (1993)

Szegö, G.: Orthogonal polynomials, vol. 23. Colloquium Publications, Providence (1939)

Trefethen, L.N.: Approximation Theory and Approximation Practice. SIAM, Philadelphia (2012)

Vértesi, P.: Notes on the Hermite-Fejér interpolation based on the Jacobi abscissas. Acta Math. Acad. Sci. Hung. 24, 233–239 (1973)

Wang, H., Huybrechs, D., Vandewalle, S.: Explicit barycentric weights for polynomial interpolation in the roots or extrema of classical orthogonal polynomials. Math. Comput. 290, 2893–2914 (2012)

Xiang, S.: On interpolation approximation: convergence rates for interpolation for functions of limited regularity. SIAM J. Numer. Anal. 54, 2081–2113 (2016)

Xiang, S., He, G.: The fast implementation of higher order Hermite-Fejér interpolation. SIAM J. Sci. Comput. 37, A1727–A1751 (2015)

Xiang, S., Chen, X., Wang, H.: Error bounds for approximation in Chebyshev points. Numer. Math. 116, 463–491 (2010)

Acknowledgements

Shuhuang Xiang was partly supported by the NSF of China (No. 11771454). Guo He was partly supported by the Fundamental Research Funds for the Central Universities (No. 21618333) and by the Opening Project at Sun Yat-sen University (No. 2018010).

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2020 The Author(s)

About this paper

Cite this paper

Xiang, S., He, G. (2020). On the Convergence Rate of Hermite-Fejér Interpolation. In: Sherwin, S.J., Moxey, D., Peiró, J., Vincent, P.E., Schwab, C. (eds) Spectral and High Order Methods for Partial Differential Equations ICOSAHOM 2018. Lecture Notes in Computational Science and Engineering, vol 134. Springer, Cham. https://doi.org/10.1007/978-3-030-39647-3_50

DOI: https://doi.org/10.1007/978-3-030-39647-3_50

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-39646-6

Online ISBN: 978-3-030-39647-3

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)