Abstract

We present a spectral numerical scheme for solving Helmholtz and Laplace problems with Dirichlet boundary conditions on an unbounded non-Lipschitz domain \(\mathbb {R}^2 \backslash \overline {\Gamma }\), where Γ is a finite collection of open arcs. Through an indirect method, a first kind formulation is derived whose variational form is discretized using weighted Chebyshev polynomials. This choice of basis allows for exponential convergence rates under smoothness assumptions. Moreover, by implementing a simple compression algorithm, we are able to efficiently account for large numbers of arcs as well as a wide wavenumber range.


1 Introduction

We seek solutions of Helmholtz and Laplace equations in a two-dimensional plane after removing a finite collection of open finite curves, also called arcs. This setting can be found in areas such as structural and mechanical engineering [2], and biomedical imaging [12]. Such problems pose the following challenges: (1) unbounded domains, which call for boundary integral methods with carefully chosen radiation conditions; (2) singular behaviors of solutions near arc endpoints; and (3) a large number of degrees of freedom when the wavenumber or the number of arcs increases.

Our approach is to recast the problem as a system of boundary integral equations defined on the arcs, so as to obtain an integral representation of the volume solution. Well-posedness for a single arc was proven in [10], with an extension to the multiple arcs case given in [6]. We will consider numerical approximations of the resulting surface densities based on Galerkin-Bubnov discretizations of the corresponding system of boundary integral equations.

In the present note, we start by briefly introducing a spectral scheme to account for general arcs as well as for a wide wavenumber range. We show that significant reduction in both memory consumption and computational work can be achieved by an ad hoc matrix compression algorithm. Moreover, we establish detailed interdependencies between compression parameters and accuracy. Numerical experiments validate our claims and point out further improvements.

2 Continuous Model Problem

Let the canonical domain (−1, 1) × {0} be denoted by \(\widehat{\Gamma}\). We say that \(g : \widehat{\Gamma} \rightarrow \mathbb{C}\) is ρ-analytic if the function \(t \mapsto g(t, 0)\) can be extended to an analytic function on the Bernstein ellipse of parameter ρ > 1 (cf. [11, Chapter 8]). We say that \(\Lambda \subset \mathbb{R}^2\) is a regular Jordan arc of class \(\mathcal{C}^m\), for \(m \in \mathbb{N}\), if it is the image of a bijective parametrization \(\mathbf{r} = (r_1, r_2) : \overline{\widehat{\Gamma}} \rightarrow \overline{\Lambda}\) whose components are \(\mathcal{C}^m(\widehat{\Gamma})\)-functions and which satisfies \(\lVert \mathbf{r}'(t) \rVert_2 > 0\) for all \(t \in \overline{\widehat{\Gamma}}\), where \(\lVert \cdot \rVert_2\) is the Euclidean norm. Similarly, we define ρ-analytic arcs as those whose parametrization components are ρ-analytic. Throughout, we assume that any regular Jordan arc Λ admits an extension \(\tilde{\Lambda}\) that is a closed curve of the same regularity.

Consider a finite number \(M \in \mathbb{N}\) of arcs of class at least \(\mathcal{C}^1\), written \(\{\Gamma_i\}_{i=1}^M\), such that their closures are mutually disjoint. Moreover, we assume that there are disjoint domains \(\Omega_i\) whose boundaries are given by extensions \(\partial \Omega_i = \widetilde{\Gamma}_i\), for \(i \in \{1, \ldots, M\}\). Let us define

\[\Gamma := \bigcup_{i=1}^M \Gamma_i, \qquad \Omega := \mathbb{R}^2 \setminus \overline{\Gamma}.\]

We say that Γ is of class \(\mathcal{C}^m\), \(m \in \mathbb{N}\), if each arc \(\Gamma_i\) is of class \(\mathcal{C}^m\), and analogously for the ρ-analytic case. For i ∈ {1, …, M}, let \(\mathbf{r}_i : \widehat{\Gamma} \rightarrow \Gamma_i\) and \(g_i : \overline{\Gamma}_i \rightarrow \mathbb{C}\). We say that \(\mathbf{g} = (g_1, \ldots, g_M)\) is of class \(\mathcal{C}^m(\Gamma)\) if \(g_i \circ \mathbf{r}_i \in \mathcal{C}^m(\widehat{\Gamma})\) for i ∈ {1, …, M}, and analogously for the ρ-analytic case.

Let \(G \subseteq \mathbb{R}^d\), d = 1, 2, be an open domain. For \(s \in \mathbb{R}\), we denote by \(H^s(G)\) the standard Sobolev spaces, by \(H_{loc}^s(G)\) their locally integrable counterparts [9, Section 2.3], and by \(\widetilde{H}^{-s}(G)\) the corresponding dual spaces. The corresponding duality product (when the dual space of \(L^2(G)\) is identified with itself) is denoted \(\langle \cdot, \cdot \rangle_G\). Finally, \(\widetilde{H}_{\langle 0 \rangle}^{s}(G)\) refers to mean-zero spaces [6, Section 2.3]. We will also make use of the following Hilbert space in \(\mathbb{R}^2\):

where \(\mathcal{D}^*(G)\) is the dual space of \(\mathcal{C}^\infty(G) = \cap_{n > 1} \mathcal{C}^n(G)\). For \(s \in \mathbb{R}\) and for the finite union of disjoint open arcs Γ, we define piecewise spaces as

\[\mathbb{H}^s(\Gamma) := H^s(\Gamma_1) \times \cdots \times H^s(\Gamma_M).\]

Spaces \(\widetilde{\mathbb{H}}^s(\Gamma)\) and \(\widetilde{\mathbb{H}}_{\langle 0 \rangle}^s(\Gamma)\) are defined similarly. Also, \(\mathbb{H}^s(\widehat{\Gamma})\) is to be understood as the Cartesian product \(\prod_{i=1}^M H^s(\widehat{\Gamma})\). Finally, given an open bounded neighborhood \(G_i\) such that \(\Gamma_i \subset \partial G_i\), Dirichlet traces are defined as extensions to \(H^s(G_i)\), for s > 1∕2, of the following operator (applied to smooth functions):

\[\gamma_i^{\pm} u(\mathbf{y}) := \lim_{\epsilon \downarrow 0} u(\mathbf{y} \pm \epsilon\, \mathbf{n}_i(\mathbf{y})), \qquad \mathbf{y} \in \Gamma_i,\]

where \(\mathbf{n}_i(\mathbf{y})\) is the unit vector with direction \((r^{\prime}_{i,2}(t), -r^{\prime}_{i,1}(t))\) and t is such that \(\mathbf{r}_i(t) = \mathbf{y}\). For a function u defined in an open neighborhood of \(\Gamma_i\) such that \(\gamma^+_i u = \gamma^-_i u\), we denote \(\gamma_i u := \gamma_i^\pm u\).

Problem 1 (Volume Problem)

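Let \(\mathbf{g} \in \mathbb{H}^{\frac{1}{2}}(\Gamma)\) and κ ≥ 0. We seek \(U \in H^1_{loc}(\Omega)\) such that

\[\begin{aligned} -\Delta U - \kappa^2 U &= 0 && \text{in } \Omega, && (1)\\ \gamma_i^{\pm} U &= g_i && \text{on } \Gamma_i,\ i \in \{1, \ldots, M\}, && (2)\\ &\ \text{a condition at infinity depending on } \kappa. && && (3) \end{aligned}\]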

The behavior at infinity (3) depends on κ in the following way: if κ > 0, we employ the classical Sommerfeld condition [9, Section 3.9]; if κ = 0, we seek solutions \(U \in W(\Omega)\). This last condition was discussed in detail in [6, Remarks 3.9, 4.2 and 4.5], with uniqueness proofs for κ ≥ 0 provided in [6, Propositions 3.8 and 3.10].

For κ ≥ 0, we can express the solution U of Problem 1 as

where

denotes the single layer potential generated at a curve \(\Gamma_i\), with \(G_\kappa\) the corresponding fundamental solution, defined as in [9, Section 3.1]. It follows directly from (4) that U solves (1)–(2) in Ω (see [9, Theorem 3.1.1]). Also, it displays the desired behavior at infinity as long as each \(\lambda_i\) lies in the right functional space [6, Section 4]. In order to find the surface densities \(\lambda_i\), we take Dirichlet traces \(\gamma^\pm_i\) of the \(SL_j\) and impose boundary conditions (2). This naturally leads to the definition of weakly singular boundary integral operators:

and an equivalent boundary integral equation problem.
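For orientation, the objects named above can be written out under the usual conventions. The block below is only a sketch: it assumes the standard fundamental solution of \(-\Delta - \kappa^2\) in two dimensions and a trace-of-potential definition of the operators, so the normalization and notation used in [6, 9] may differ.

```latex
% Assumed convention (not taken from the source): fundamental solution in R^2
\begin{align*}
G_\kappa(\mathbf{x},\mathbf{y}) &=
\begin{cases}
  \dfrac{\imath}{4}\, H_0^{(1)}\!\left(\kappa \lVert \mathbf{x}-\mathbf{y} \rVert_2\right), & \kappa > 0,\\[1ex]
  -\dfrac{1}{2\pi} \log \lVert \mathbf{x}-\mathbf{y} \rVert_2, & \kappa = 0,
\end{cases}\\
(SL_i[\kappa]\lambda_i)(\mathbf{x}) &= \int_{\Gamma_i} G_\kappa(\mathbf{x},\mathbf{y})\,\lambda_i(\mathbf{y})\,\mathrm{d}\Gamma_{\mathbf{y}},
  \qquad \mathbf{x} \in \Omega,\\
U &= \sum_{i=1}^{M} SL_i[\kappa]\lambda_i,
  \qquad \mathcal{L}_{ij}[\kappa] := \gamma_i\, SL_j[\kappa].
\end{align*}
```

Here \(H_0^{(1)}\) is the Hankel function of the first kind of order zero. Since the single layer potential is continuous across the arc, both one-sided traces coincide and \(\gamma_i\) is well defined.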

Problem 2 (Boundary Integral Problem)

Let \(\mathbf{g} \in \mathbb{H}^{\frac{1}{2}}(\Gamma)\). For κ > 0, we seek \(\boldsymbol{\lambda} = (\lambda_1, \ldots, \lambda_M) \in \widetilde{\mathbb{H}}^{-\frac{1}{2}}(\Gamma)\) such that

\[\boldsymbol{\mathcal{L}}[\kappa]\, \boldsymbol{\lambda} = \mathbf{g},\]

where \(\boldsymbol{\mathcal{L}}[\kappa] : \widetilde{\mathbb{H}}^{-\frac{1}{2}}(\Gamma) \rightarrow \mathbb{H}^{\frac{1}{2}}(\Gamma)\) is a matrix operator with entries \(\boldsymbol{\mathcal{L}}[\kappa]_{ij} = \mathcal{L}_{ij}[\kappa]\), for \(i, j \in \{1, \ldots, M\}\). If κ = 0, we seek \(\boldsymbol{\lambda} \in \widetilde{\mathbb{H}}^{-\frac{1}{2}}_{\langle 0 \rangle}(\Gamma)\), given g in the dual space of the aforementioned space.

Theorem 1 (Theorem 4.13 in [6])

For κ > 0, Problem 2 has a unique solution \(\boldsymbol{\lambda} \in \widetilde{\mathbb{H}}^{-\frac{1}{2}}(\Gamma)\), whereas for κ = 0 a unique solution exists in the subspace \(\widetilde{\mathbb{H}}^{-\frac{1}{2}}_{\langle 0 \rangle}(\Gamma)\). Also, the following continuity estimate holds

3 Spectral Discretization

We present a family of finite dimensional subspaces in \(\widetilde{\mathbb{H}}^{-\frac{1}{2}}(\Gamma)\) that can be used to approximate the solution of Problem 2 (cf. [5, 7]). Let \(\mathbb{T}_N(\widehat{\Gamma})\) denote the space spanned by first kind Chebyshev polynomials \(\{T_n\}_{n=0}^N\) of degree at most N on \(\widehat{\Gamma}\), which are orthogonal with respect to the \(L^2(-1, 1)\) inner product with weight \(w^{-1}\), where \(w(t) := \sqrt{1 - t^2}\). Now, let us construct elements \(p^i_n = T_n \circ \mathbf{r}_i^{-1}\) over each arc \(\Gamma_i\), spanning the space \(\mathbb{T}_N(\Gamma_i)\). For practical reasons, we define the normalized space:

We account for edge singularities by multiplying the basis \(\{\bar {p}_n^i\}_{n=0}^N\) by a suitable weight:

wherein \(w_i := w \circ \mathbf{r}^{-1}_i\). The corresponding basis for \(\mathbb{Q}_N(\Gamma_i)\) will be denoted \(\{q^i_n\}_{n=0}^N\). By Chebyshev orthogonality, we can easily define the mean-zero subspace \(\mathbb{Q}_{N, \langle 0 \rangle}(\Gamma_i) := \mathbb{Q}_N(\Gamma_i) \setminus \mathbb{Q}_0(\Gamma_i)\), spanned by \(\{q^i_n\}^N_{n=1}\). With these definitions, we set the discretization space for a Galerkin-Bubnov solution of Problem 2 as

Problem 3 (Linear System)

For κ > 0, let \(N \in \mathbb{N}\) and \(\mathbf{g} \in \mathbb{H}^{\frac{1}{2}}(\Gamma)\) be the same as in Problem 2. Then, we seek coefficients \(\boldsymbol{\mathfrak{u}} = (\boldsymbol{\mathfrak{u}}_1, \ldots, \boldsymbol{\mathfrak{u}}_M) \in \mathbb{C}^{M(N+1)}\), such that

\[\boldsymbol{\mathsf{L}}[\kappa]\, \boldsymbol{\mathfrak{u}} = \boldsymbol{\mathfrak{g}}.\]

Therein, we have defined the Galerkin matrix \(\boldsymbol {\mathsf {L}}[\kappa ] \in \mathbb {C}^{M(N+1) \times M(N+1)}\) composed of matrix blocks \(\mathsf {L}_{ij}[\kappa ]\in \mathbb {C}^{(N+1) \times (N+1)}\) whose entries are

There, \(\widehat{\mathcal{L}}_{ij}[\kappa]\) is the weakly-singular operator whose kernel is parametrized by \(\mathbf{r}_i\), \(\mathbf{r}_j\), and the right-hand side is \(\boldsymbol{\mathfrak{g}} = (\boldsymbol{\mathfrak{g}}_1, \ldots, \boldsymbol{\mathfrak{g}}_M) \in \mathbb{C}^{M(N+1)}\) with components

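where \(\widehat{g}_i = g_i \circ \mathbf{r}_i\). The approximation \(\boldsymbol{\lambda}_N \in \mathbb{H}_N[\kappa]\) is constructed as

\[(\boldsymbol{\lambda}_N)_i := \sum_{m=0}^N (\boldsymbol{\mathfrak{u}}_i)_m\, q_m^i \quad \text{in } \Gamma_i, \qquad i \in \{1, \ldots, M\}.\]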

For κ = 0 we need g as in Problem 2; we also have \(\boldsymbol{\mathfrak{u}} \in \mathbb{C}^{MN}\) and \(\boldsymbol{\mathsf{L}}[0] \in \mathbb{C}^{MN \times MN}\), since the approximation space is \(\mathbb{H}_N[0]\). By conformity and density of these spaces in \(\widetilde{\mathbb{H}}^{-\frac{1}{2}}(\Gamma)\), one derives the following result:

Theorem 2 (Theorem 4.23 in [5])

Let κ ≥ 0, \(m \in \mathbb{N}\) with m > 2, \(\Gamma \in \mathcal{C}^m\), \(\mathbf{g} \in \mathcal{C}^m(\Gamma)\), and let \(\boldsymbol{\lambda}\) be the unique solution of Problem 2. Then, there exists \(N_0 \in \mathbb{N}\) such that for every \(N \in \mathbb{N}\) with \(N > N_0\) there is a unique solution \(\boldsymbol{\lambda}_N \in \mathbb{H}_N[\kappa]\) of Problem 3. Moreover, the following convergence rates hold

Moreover, if Γ and g are ρ-analytic with ρ > 1, we have the following super-algebraic convergence rates

where \(C(\Gamma, \kappa)\) is a positive constant, which does not depend on N.

Remark 1

Observe that the constants \(C(\Gamma, \kappa)\) and \(N_0\) depend on the geometry and frequency. To the best of our knowledge, previous convergence results for 2D arcs are somewhat limited. For intervals, the result was established in [7], whereas for more general arcs results are only available for the Laplace case [1]. Super-algebraic convergence rates can be achieved by the method detailed in [3], though their scheme is limited to intervals and to the case of elliptic problems (\(N_0 = 0\)). More complex cases remain an open problem.

4 Numerical Implementation and Compression Algorithm

Before fleshing out our proposed compression technique, we explain how L[κ] and \(\boldsymbol {\mathfrak {g}}\) of Problem 3 are computed. For the right-hand side, one must compute integrals of the form:

which correspond to Fourier-Chebyshev coefficients of \(\widehat{g}(t)\) and can be approximated using the Fast Fourier Transform [11]. Computations for the matrix terms \(\mathsf{L}_{ij}[\kappa]\) are split into two groups: (a) cross-interactions, where the supports of the test and trial functions lie along curves \(\Gamma_i\), \(\Gamma_j\) with i ≠ j; and (b) self-interactions, where both trial and test functions are defined on the same curve. Since the integral kernel is smooth for cross-interactions, the same computational procedure as for the right-hand side is used.
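The FFT-based evaluation of such weighted integrals can be sketched as follows. This is an illustrative example, not the authors' implementation: it assumes the integrals have the form \(\int_{-1}^{1} \widehat{g}(t)\, T_n(t) / \sqrt{1-t^2}\, \mathrm{d}t\), and the function name `chebyshev_moments` and the oversampling parameter `K` are hypothetical.

```python
import numpy as np

def chebyshev_moments(g, N, K=None):
    """Approximate I_n = int_{-1}^{1} g(t) T_n(t) / sqrt(1 - t^2) dt for n = 0..N.

    With t = cos(theta) the integral becomes int_0^pi g(cos theta) cos(n theta) dtheta,
    a cosine-series coefficient, so one FFT of an even extension yields all n at once.
    """
    if K is None:
        K = 2 * (N + 1)                       # oversampling factor: an assumption
    theta = np.pi * np.arange(K + 1) / K      # Chebyshev points in angle, 0..pi
    v = g(np.cos(theta))
    V = np.concatenate([v, v[K - 1:0:-1]])    # even, 2K-periodic extension
    F = np.real(np.fft.fft(V))                # F[n] = sum_j V_j cos(pi n j / K)
    return (np.pi / (2 * K)) * F[:N + 1]      # trapezoid rule on [0, 2 pi], halved

# Analytic right-hand side: the moments decay very quickly with n.
moments = chebyshev_moments(np.exp, 12)
```

For an analytic function such as the exponential, the computed moments decay geometrically, which is the behavior exploited later by the compression step.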

For self-interactions, the kernel function has a singularity that can be characterized as

for \(t, s \in \widehat{\Gamma}\), where \(J_0\) is the Bessel function of the first kind of order zero, and \(G_r\) is a regular function. Thus, integration of the regular part is done as in the cross-interaction case, while integrals with the first term as kernel are obtained by convolution, since the integrals for \(\log|t-s|\) are known (see [7, Remark 4.2]).
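A classical identity of this kind states that weighted Chebyshev polynomials are eigenfunctions of the logarithmic kernel on (−1, 1). It is recalled below as a reference sketch; the normalization in [7, Remark 4.2] may differ by constant factors.

```latex
\[
\int_{-1}^{1} \log\lvert t-s\rvert \, \frac{T_n(s)}{\sqrt{1-s^2}}\,\mathrm{d}s =
\begin{cases}
 -\pi \log 2, & n = 0,\\[0.5ex]
 -\dfrac{\pi}{n}\, T_n(t), & n \geq 1,
\end{cases}
\qquad t \in (-1,1).
\]
```

Combined with the orthogonality of the \(T_n\) under the weight \(w^{-1}\), this identity shows why the logarithmic kernel on the canonical segment produces a diagonal Galerkin matrix in the weighted Chebyshev basis.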

Yet, as κ increases, larger values of N are required, hence the need to compress the resulting matrix terms. As stated in [11, Chapters 7 and 8], the regularity of a function controls the decay of its Fourier-Chebyshev coefficients. Since the entries of the matrix \(\boldsymbol{\mathsf{L}}[\kappa]\) are precisely such coefficients, one observes fast decaying terms whenever the kernel is smooth. This implies that we can select small blocks to approximate the matrix and obtain a sparse approximation by discarding the remaining entries, based on a predetermined tolerance 𝜖 > 0. Specifically, the kernel function is smooth when we compute cross-interactions. Let the routine Quadrature(l,m) compute the term (l, m) of this interaction matrix using a 2D Gauss-Chebyshev quadrature. Given a tolerance 𝜖 > 0, we minimize the number of computations needed by performing the following binary search:

The algorithm returns the minimum number of columns required, \(N_{cols}\), by searching the first row for the smallest index at which the absolute value of the matrix entry falls below 𝜖. The binary search is restricted to a depth \(L_{\max} \in \mathbb{N}\). The same procedure is used to estimate the number of rows, \(N_{rows}\), by executing a binary search in the first column. Once \(N_{cols}\) and \(N_{rows}\) are selected, we define \(N_\epsilon := \max\{N_{rows}, N_{cols}\}\) and compute the block of size \(N_\epsilon \times N_\epsilon\) as in the full implementation.
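The search described above might be sketched as follows. This is one possible reading of the procedure, not the authors' listing: the callable `entry` is a hypothetical stand-in for Quadrature(0, m) (or Quadrature(l, 0) for rows), the entries are assumed to decay roughly monotonically, and the synthetic decay rates below are illustrative only.

```python
def truncation_index(entry, n_max, eps, max_depth):
    """Bisect for the smallest index m in [0, n_max] with |entry(m)| < eps.

    At most `max_depth` bisection steps are taken, so the returned index may
    overestimate the true minimum when the depth budget is exhausted.
    Returns n_max + 1 if even the last entry is not below eps.
    """
    if abs(entry(n_max)) >= eps:
        return n_max + 1              # nothing can be discarded
    lo, hi = 0, n_max                 # invariant: |entry(hi)| < eps
    depth = 0
    while lo < hi and depth < max_depth:
        mid = (lo + hi) // 2
        if abs(entry(mid)) < eps:
            hi = mid                  # mid is already small enough
        else:
            lo = mid + 1              # the threshold is crossed after mid
        depth += 1
    return hi

rho = 1.5
first_row = lambda m: rho ** (-m)         # synthetic stand-in for Quadrature(0, m)
first_col = lambda l: 0.5 * rho ** (-l)   # synthetic stand-in for Quadrature(l, 0)
n_cols = truncation_index(first_row, 200, 1e-8, 10)
n_rows = truncation_index(first_col, 200, 1e-8, 10)
n_eps = max(n_rows, n_cols)               # size of the block actually computed
```

Each probe costs one quadrature, so the depth limit caps the search cost at the price of a possibly larger block, which is consistent with the dependence of the compression rate on the search depth.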

The matrix compression percentage will strongly depend on the regularity of the arcs involved. For ρ-analytic arcs, using [11, Theorem 8.1] we can prove the lower bound:

where Υ is an upper bound of the absolute value of the kernel in the corresponding Bernstein ellipse. However, since compression is done by a binary search, the bound for the compression rate depends on \(L_{\max}\) as

Compression of self-interaction blocks does not follow the same ideas. In fact, these blocks can be characterized as two perturbations over the canonical case, \(\Gamma = \widehat {\Gamma }\) for κ = 0, leading to a diagonal matrix. Namely, these are

1. A low frequency perturbation caused by the mapping \(\mathbf{r}_i : \widehat{\Gamma} \to \Gamma_i\), similar to the cross-interaction case.

2. A frequency perturbation that creates banded matrices.

In order to reduce memory consumption—though not computational time—we discard the entries of the self-interaction matrices lower than the given tolerance.
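Entrywise thresholding of a self-interaction block can be illustrated as below. The sketch is a minimal one under stated assumptions: the test block is synthetic (dominant diagonal with decaying off-diagonal entries), the helper name `threshold` is hypothetical, and in practice the result would be kept in a sparse storage format rather than as a dense array with zeros.

```python
import numpy as np

def threshold(block, eps):
    """Zero out entries with absolute value below eps; return the result and %NNZ."""
    kept = np.abs(block) >= eps
    compressed = np.where(kept, block, 0.0)
    return compressed, 100.0 * np.count_nonzero(kept) / block.size

# Synthetic stand-in for a self-interaction block (illustrative only).
n = 64
idx = np.arange(n)
dist = np.abs(idx[:, None] - idx[None, :])
block = np.exp(-0.5 * dist) / (1.0 + idx[:, None] + idx[None, :])
compressed, pct_nnz = threshold(block, 1e-6)
```

Every entry still has to be computed before it can be compared with the tolerance, which is why this step saves memory but not computational time.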

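As expected, matrix compression induces an extra error as it perturbs the original linear system solved by \(\boldsymbol{\lambda}_N\) in Problem 3. We denote by \(\boldsymbol{\mathsf{L}}_\epsilon[\kappa]\) the matrix generated by the compression algorithm with tolerance 𝜖, and define the matrix difference \(\Delta \boldsymbol{\mathsf{L}}_\epsilon[\kappa] := \boldsymbol{\mathsf{L}}_\epsilon[\kappa] - \boldsymbol{\mathsf{L}}[\kappa]\). We seek to control the solution \(\boldsymbol{\mathfrak{u}}^\epsilon = \boldsymbol{\mathfrak{u}} + \Delta \boldsymbol{\mathfrak{u}}\) of

\[\boldsymbol{\mathsf{L}}_\epsilon[\kappa]\, \boldsymbol{\mathfrak{u}}^\epsilon = \boldsymbol{\mathfrak{g}},\]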

where \(\boldsymbol{\mathfrak{u}}\) and \(\boldsymbol{\mathfrak{g}}\) are the same as in Problem 3. In order to bound this error, we will assume that, for every pair of indices (i, j) in the matrix \(\boldsymbol{\mathsf{L}}[\kappa]\), we have

Theorem 3

Let \(N \in \mathbb{N}\) be such that Problem 3 has a unique solution \(\boldsymbol{\lambda}_N\). Then, there is a constant \(C(\Gamma, \kappa) > 0\), not depending on N, such that

Proof

By [8, Section 1.13.2] we have that

and thus, we need to estimate \(\left\lVert \Delta \boldsymbol{\mathsf{L}}_{\epsilon}[\kappa] \right\rVert_{2}\) and \(\left\lVert (\boldsymbol{\mathsf{L}}[\kappa])^{-1} \right\rVert_{2}\). The bound for the first term is direct from (5) and matrix norm definitions. For the term \(\left\lVert (\boldsymbol{\mathsf{L}}[\kappa])^{-1} \right\rVert_{2}\), by [9, Theorem 4.2.9], it holds

In particular, set \(\mathbf{g} \in \mathbb{H}^{\frac{1}{2}}(\Gamma)\) with only its first N Chebyshev coefficients different from zero, and let \(\boldsymbol{\lambda}_N\) be the solution of Problem 3 for this choice of g. By the above estimate, we have \(\|\boldsymbol{\lambda}_N\|_{\widetilde{\mathbb{H}}^{-\frac{1}{2}}(\Gamma)} \leq C \|\mathbf{g}\|_{\mathbb{H}^{\frac{1}{2}}(\Gamma)}\). On the other hand, by [4, Proposition 3.1], one retrieves

Therefore, we can bound \(\boldsymbol{\mathsf{L}}[\kappa]^{-1} \boldsymbol{\mathfrak{g}}\) as

as stated. □
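The first step of the proof relies on a perturbation estimate for linear systems. As a numerical illustration, the sketch below checks the classical bound \(\lVert \Delta \mathfrak{u} \rVert_2 / \lVert \mathfrak{u} \rVert_2 \leq \theta / (1 - \theta)\) with \(\theta = \lVert \mathsf{L}^{-1} \rVert_2 \lVert \Delta \mathsf{L} \rVert_2 < 1\), which is the standard form of such results and not necessarily the exact statement used in [8]. The matrices are random stand-ins, not Galerkin matrices, and the entrywise bound on the perturbation is an assumption about the role of the tolerance.

```python
import numpy as np

rng = np.random.default_rng(0)
n, eps = 40, 1e-6

# Well-conditioned stand-in for L[kappa] and an entrywise-bounded perturbation.
A = np.eye(n) + 0.1 * rng.standard_normal((n, n)) / np.sqrt(n)
dA = eps * rng.uniform(-1.0, 1.0, (n, n))      # |dA_ij| <= eps (assumed)
g = rng.standard_normal(n)

u = np.linalg.solve(A, g)                      # unperturbed solution
u_eps = np.linalg.solve(A + dA, g)             # solution of the compressed system

inv_norm = np.linalg.norm(np.linalg.inv(A), 2)
dA_norm = np.linalg.norm(dA, 2)
theta = inv_norm * dA_norm
rel_err = np.linalg.norm(u_eps - u) / np.linalg.norm(u)
bound = theta / (1.0 - theta)
```

The entrywise assumption gives \(\lVert \Delta \mathsf{L} \rVert_2 \leq n\,\epsilon\) through the Frobenius norm, so the relative error is controlled by the tolerance, the matrix size, and the norm of the inverse.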

We can also estimate the error introduced by the compression algorithm in terms of the energy norm. In order to do so, define \((\boldsymbol{\lambda}_N^\epsilon)_i := \sum_{m=0}^N (\boldsymbol{\mathfrak{u}}_i^\epsilon)_m q_m^i\) in \(\Gamma_i\). By the same arguments as in the above proof, we obtain

where g is the same as in Problem 2.

Remark 2

Our compression algorithm produces a faster and less memory-demanding implementation of the spectral Galerkin method at the cost of some accuracy loss, similar to fast multipole or hierarchical matrix methods. Moreover, once we have compressed the matrix, we can implement a fast matrix-vector product.
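A matrix-vector product that exploits the truncated blocks could look like the following sketch. It assumes that each block (i, j) is stored only through its leading sub-block and that the discarded entries are treated as zero; the storage layout and the name `compressed_matvec` are hypothetical.

```python
import numpy as np

def compressed_matvec(blocks, x, n_per_arc):
    """Compute y = L_eps x when blocks[i][j] holds only the leading part of block (i, j)."""
    M = len(blocks)
    y = np.zeros(M * n_per_arc, dtype=complex)
    for i in range(M):
        for j in range(M):
            B = blocks[i][j]
            r, c = B.shape                     # retained rows and columns
            y[i * n_per_arc:i * n_per_arc + r] += B @ x[j * n_per_arc:j * n_per_arc + c]
    return y

# Illustrative data: full diagonal blocks, 4 x 4 truncated off-diagonal blocks.
rng = np.random.default_rng(1)
M, n = 3, 16
blocks = [[rng.standard_normal((n, n)) if i == j else rng.standard_normal((4, 4))
           for j in range(M)] for i in range(M)]
x = rng.standard_normal(M * n)
y = compressed_matvec(blocks, x, n)
```

The cost is proportional to the number of stored entries rather than to the full matrix size, which is where the savings for iterative solvers would come from.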

5 Numerical Results

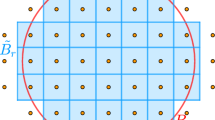

To illustrate the above claims, Fig. 1 presents convergence results for different wavenumbers, κ = 0, 25, 50, 100 for a configuration of M = 28 arcs. As the chosen geometry and excitation are given by analytic functions, Theorem 2 predicts exponential rate of convergence as observed numerically.

Fig. 1 (a) Smooth geometry with M = 28 open arcs parametrized as \(\mathbf{r}_i(t) = (a_i t, c_i \sin(b_i t) + d_i)\), with \(a_i \in [0.14, 0.25]\), \(b_i \in [0, 0.2]\), \(c_i \in [1, 2]\), \(d_i \in [0, 20]\), \(t \in [-1, 1]\). (b) Convergence results for different wavenumbers and a planewave excitation along (1, 1). Errors computed against an overkill solution using N = 660 per arc

Table 1 provides matrix compression results for κ = 100 and for the same geometry of Fig. 1. It presents the percentage of non-zero entries (%NNZ) and relative errors as bounded in Theorem 3 as functions of the maximum level of binary search (L max), tolerances (𝜖), and polynomial order per arc (Order). For low orders (Order < 60), relative errors are quite large, and therefore, most of the matrix terms are kept. This is due to an insufficient number of matrix entries to solve the problem with good accuracy (see Fig. 1), rendering compression pointless. On the other hand, once convergence is achieved, the compression error drastically decreases along with the percentage of matrix terms stored.

References

1. Atkinson, K.E., Sloan, I.H.: The numerical solution of first-kind logarithmic-kernel integral equations on smooth open arcs. Math. Comput. 56(193), 119–139 (1991)

2. Costabel, M., Dauge, M.: Crack singularities for general elliptic systems. Math. Nachr. 235(1), 29–49 (2002)

3. Hewett, D.P., Langdon, S., Chandler-Wilde, S.N.: A frequency-independent boundary element method for scattering by two-dimensional screens and apertures. IMA J. Numer. Anal. 35(4), 1698–1728 (2014)

4. Jerez-Hanckes, C., Nédélec, J.-C.: Explicit variational forms for the inverses of integral logarithmic operators over an interval. SIAM J. Math. Anal. 44(4), 2666–2694 (2012)

5. Jerez-Hanckes, C., Pinto, J.: High-order Galerkin method for Helmholtz and Laplace problems on multiple open arcs. Technical Report 2018-49, Seminar for Applied Mathematics, ETH Zürich (2018)

6. Jerez-Hanckes, C., Pinto, J.: Well-posedness of Helmholtz and Laplace problems in unbounded domains with multiple screens. Technical Report 2018-45, Seminar for Applied Mathematics, ETH Zürich (2018)

7. Jerez-Hanckes, C., Nicaise, S., Urzúa-Torres, C.: Fast spectral Galerkin method for logarithmic singular equations on a segment. J. Comput. Math. 36(1), 128–158 (2018)

8. Saad, Y.: Iterative Methods for Sparse Linear Systems. Computer Science Series. PWS Publishing Company, Boston (1996)

9. Sauter, S., Schwab, C.: Boundary Element Methods. Springer Series in Computational Mathematics. Springer, Berlin (2010)

10. Stephan, E.P.: A boundary integral equation method for three-dimensional crack problems in elasticity. Math. Methods Appl. Sci. 8(4), 609–623 (1986)

11. Trefethen, L.: Approximation Theory and Approximation Practice. Other Titles in Applied Mathematics. SIAM, Philadelphia (2013)

12. Verrall, G., Slavotinek, J., Barnes, P., Fon, G., Spriggins, A.: Clinical risk factors for hamstring muscle strain injury: a prospective study with correlation of injury by magnetic resonance imaging. Br. J. Sports Med. 35(6), 435–439 (2001)


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2020 The Author(s)

About this paper

Cite this paper

Jerez-Hanckes, C., Pinto, J. (2020). Spectral Galerkin Method for Solving Helmholtz and Laplace Dirichlet Problems on Multiple Open Arcs. In: Sherwin, S.J., Moxey, D., Peiró, J., Vincent, P.E., Schwab, C. (eds) Spectral and High Order Methods for Partial Differential Equations ICOSAHOM 2018. Lecture Notes in Computational Science and Engineering, vol 134. Springer, Cham. https://doi.org/10.1007/978-3-030-39647-3_30

DOI: https://doi.org/10.1007/978-3-030-39647-3_30

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-39646-6

Online ISBN: 978-3-030-39647-3

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)