Abstract

In this paper, we consider a minimalistic and behavioristic view of adaptive instructional systems (AIS) that enables a standardizable mapping of the behavior of both the system and the learner. In this model, the learners interact with the learning resources in a given learning environment following preset steps of learning processes. From this foundation, we make two subsequent arguments. (1) All intelligent digital resources, such as intelligent tutoring systems (ITS), need to be well documented with a standardized metadata scheme. We propose a learning science extension of IEEE Learning Object Metadata (LOM). Specifically, we need to consider the cognitive learning principles that were used in creating the intelligent digital resources. (2) We need to consider the AIS as a whole when we record system behavior. Specifically, we need to record all four components delineated above (the learners, the resources, the environments, and the processes). We point to selected learning principles from the literature as examples for implementing this approach, and we concretize the approach using AutoTutor, a conversation-based ITS that serves as a typical intelligent digital resource.


1 Introduction

xAPI (the Experience API) [1] was initially established as a generic framework for storing human learners’ activities in both formal and informal learning environments. Each xAPI statement contains elements that record a learner’s behavior, answering the questions Who, Did, What, Where, Result, and When. For example, the current xAPI specification for Who (tag name actor) is designed to uniquely identify the human learner by an identifier such as an email address. Likewise, the collection of verbs for Did (tag name verb) consists entirely of human learner actions, such as attempted, learned, completed, and answered (see the list of xAPI verbs [2]).
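To make the Who/Did/What/Where/Result/When mapping concrete, here is a minimal sketch of a learner-centered statement built as a Python dict. The field names (actor, verb, object, context, result, timestamp) and the ADL verb IRI pattern follow the xAPI specification; the learner, email address, activity IRI, and platform are made-up placeholders, and sending the statement to an LRS is omitted.

```python
import json
from datetime import datetime, timezone


def make_learner_statement(name, email, verb, activity_id, activity_name,
                           platform=None, success=None):
    """Build a minimal, learner-centered xAPI statement as a plain dict."""
    statement = {
        # Who: the human learner, identified by an email (mbox) identifier
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        # Did: an ADL verb IRI plus a human-readable display label
        "verb": {
            "id": f"http://adlnet.gov/expapi/verbs/{verb}",
            "display": {"en-US": verb},
        },
        # What: the activity the learner acted on
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
        # When: an ISO 8601 timestamp
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    if platform is not None:
        # Where: the platform on which the experience took place
        statement["context"] = {"platform": platform}
    if success is not None:
        # Result: the outcome of the learner's action
        statement["result"] = {"success": success}
    return statement


stmt = make_learner_statement(
    "John Doe", "john.doe@example.com", "answered",
    "https://example.org/activities/main-question-1", "Main Question",
    platform="example LMS", success=True,
)
print(json.dumps(stmt, indent=2))
```

Note that every element here describes the learner: nothing in this structure has a slot for what the tutoring system itself did.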

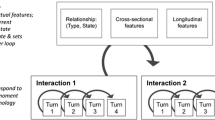

The xAPI specifications for actor and verb are appropriate and work well for traditional e-learning or distributed learning environments, where the learning content and resources are mostly static and support only limited types of interaction. For adaptive instructional systems (AIS) such as intelligent tutoring systems (ITS), however, the existing specifications for actor and verb may need to be extended. In fact, ITS implementations use a computer to mimic a human tutor’s interactions with a human learner. To fully capture the mixed-initiative interaction between the human learner and anthropomorphic computer tutors, we propose to establish an xAPI profile for AIS in which the behaviors of both the human learners and the anthropomorphic AIS cast members are captured in the same learning record store. More importantly, the xAPI profile for AIS provides a standardized description of the behavior of anthropomorphic AIS.

In this paper, we will demonstrate the feasibility of such an xAPI profile for AIS by analyzing the AutoTutor Conversation Engine (ACE) for a conversation-based AIS called AutoTutor [3]. We will then extrapolate from this case to propose a general guideline for creating xAPI profiles for AIS. We argue that creating this kind of xAPI profile specifically for AIS constitutes a concrete and appropriate step toward establishing standards in AIS.

2 Adaptive Instructional Systems

Taken from the context of e-learning, the following definition of adaptivity fits well in the context of AIS:

“Adaptation in the context of e-learning is about creating a learner experience that purposely adjusts to various conditions (e.g. personal characteristics and interests, instructional design knowledge, the learner interactions, the outcome of the actual learning processes, the available content, the similarity with peers) over a period of time with the intention of increasing success for some pre-defined criteria (e.g. effectiveness of e-learning: score, time, economical costs, user involvement and satisfaction).”

Peter Van Rosmalen et al., 2006 [4]

In this definition, if “e-learning” is replaced with “learning”, the definition covers the entire educational system. In fact, the way we create curricula for different student populations (such as ages and grades), build schools and learning communities, train teachers, and implement educational technologies all deliberately work toward adaptive learning environments for students. Broadly defined, the concept and framework of AIS date back more than 40 years [5] and have been studied by generations of learning scientists [6,7,8].

3 A Four-Component Model of AIS

For the purpose of this paper, we take a minimalistic, behavioristic view of AIS that contains only four components in the following fashion:

The learners interact with the learning resources in a given learning environment following preset steps of learning processes.

The four components are learners, resources, environments, and processes. In most learning systems, human learners are at the center (learner-centered design [9]). There are several types of resources in AIS: schools, classrooms, etc. are physical learning resources; teachers, librarians, etc. are human resources; static online content, audio/video, etc. are digital resources; and computer tutors such as AutoTutor constitute intelligent digital resources. Types of environments and processes are classified according to the learning theories implemented in the given AIS.

In the context of this paper, we specifically consider AIS that include intelligent digital resources. For example, a conversation-based ITS such as AutoTutor [10, 11] delivers learning in a constructive learning environment that engages learners in a process of expectation-misconception tailored dialog in natural language. It is reasonable to assume that most existing AIS with intelligent digital resources were created according to certain guiding principles of learning.
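The four-component model can be read as a simple data structure. The sketch below is one possible encoding using Python dataclasses; the class names, fields, and the three process steps are illustrative choices of ours, not part of any standard or of AutoTutor’s implementation.

```python
from dataclasses import dataclass, field
from enum import Enum


class ResourceType(Enum):
    PHYSICAL = "physical"                        # schools, classrooms
    HUMAN = "human"                              # teachers, librarians
    DIGITAL = "digital"                          # static online content, audio/video
    INTELLIGENT_DIGITAL = "intelligent digital"  # computer tutors such as AutoTutor


@dataclass
class Learner:
    name: str


@dataclass
class Resource:
    name: str
    kind: ResourceType


@dataclass
class Environment:
    name: str
    learning_theory: str  # environments are classified by the learning theory they implement


@dataclass
class Process:
    name: str
    steps: list[str] = field(default_factory=list)  # the preset steps the learner follows


@dataclass
class AIS:
    """Learners interact with resources in an environment following a process."""
    learners: list[Learner]
    resources: list[Resource]
    environment: Environment
    process: Process

    def has_intelligent_resource(self) -> bool:
        return any(r.kind is ResourceType.INTELLIGENT_DIGITAL for r in self.resources)


autotutor_session = AIS(
    learners=[Learner("John Doe")],
    resources=[Resource("AutoTutor", ResourceType.INTELLIGENT_DIGITAL)],
    environment=Environment("AutoTutor session", learning_theory="constructive"),
    process=Process("expectation-misconception tailored dialog",
                    ["ask main question", "evaluate answer", "deliver feedback"]),
)
print(autotutor_session.has_intelligent_resource())  # True
```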

4 Guiding Principles of Learning Systems

Numerous “learning principles” have been proposed for different levels of learning. For example, psychologists provide twenty key principles [12, 13] that answer five fundamental questions of teaching and learning (Appendix A). An IES report identified seven cognitive principles of learning and instruction (Appendix B) as being supported by scientific research with a sufficient amount of replication and generality [14]. Further, the 25 Learning Principles to Guide Pedagogy and the Design of Learning Environments [15] (Appendix C) summarize what we know about learning and suggest how the teaching-learning interaction can be improved. The list provides details for each principle to foster understanding and guide implementation.

For example, the Deep Questions principle (principle 18, Appendix C) states that

“deep explanations of material and reasoning are elicited by questions such as why, how, what-if-and what-if not, as opposed to shallow questions that require the learner to simply fill in missing words, such as who, what, where, and when. Training students to ask deep questions facilitates comprehension of material from text and classroom lectures. The learner gets into the mindset of having deeper standards of comprehension and the resulting representations are more elaborate.”

Graesser and Person, 1994 [16]

Specifically, Graesser and Person [16] classify questions into 3 categories comprising 16 types (Appendix D). Nielsen et al. [17] extended this taxonomy into five categories of question types (Appendix E).
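The classification in Appendix D can be kept as a lookup table, which also makes it easy to check whether a given main question is deep. This is a minimal sketch; the dictionary keys and the is_deep helper are our own illustrative names.

```python
# Graesser and Person (1994) question categories, as listed in Appendix D
QUESTION_TAXONOMY = {
    "simple_or_shallow": ["verification", "disjunctive", "concept completion", "example"],
    "intermediate": ["feature specification", "quantification", "definition", "comparison"],
    "deep_or_complex": [
        "interpretation", "causal antecedent", "causal consequence", "goal orientation",
        "instrumental/procedural", "enablement", "expectation", "judgmental",
    ],
}

# Invert the mapping so that each question type points to its category
CATEGORY_OF = {qtype: cat for cat, qtypes in QUESTION_TAXONOMY.items() for qtype in qtypes}


def is_deep(question_type: str) -> bool:
    """True if the question type belongs to the deep-or-complex category."""
    return CATEGORY_OF.get(question_type.lower()) == "deep_or_complex"


print(len(CATEGORY_OF))                # 16
print(is_deep("Causal consequence"))  # True
```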

5 Learning Science Extension of LOM

Since the early 2000s, the e-learning industry has benefited greatly from IEEE LOM [18] and the Advanced Distributed Learning (ADL) SCORM [19, 20]. More recently, further effort has been devoted to enriching metadata for learning objects, for example, through the Learning Resources Metadata Initiative (LRMI) [21]. Although IEEE LOM and LRMI focus almost exclusively on the learning objects (resources), they have placeholders for other components, such as typicalAgeRange (Note 1) for learners, interactivityType (Note 2) for processes, and context (Note 3) for environments. However, researchers have noticed their limitations as metadata for learning objects in AIS such as ITS, and it has been suggested that metadata for learning content should consider pedagogical identifiers [22].
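The three placeholders can be illustrated with Python’s standard xml.etree.ElementTree module. This is a simplified sketch: the IEEE LOM XML binding wraps vocabulary values in source/value pairs and age ranges in language-tagged strings, and those wrappers are omitted here; the values “18-”, “active”, and “higher education” are examples only.

```python
import xml.etree.ElementTree as ET


def lom_educational(age_range: str, interactivity: str, context: str) -> ET.Element:
    """Build a simplified LOM <educational> element, one placeholder per AIS component."""
    edu = ET.Element("educational")
    # learners: the typical age range of the target learners
    ET.SubElement(edu, "typicalAgeRange").text = age_range
    # processes: how the learner interacts with the resource
    ET.SubElement(edu, "interactivityType").text = interactivity
    # environments: the principal setting in which the resource is used
    ET.SubElement(edu, "context").text = context
    return edu


xml = ET.tostring(lom_educational("18-", "active", "higher education"), encoding="unicode")
print(xml)
```

The resulting element carries one value each for learners, processes, and environments, but says nothing about the learning principles behind the resource.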

It is almost true by definition that all well-designed, effective AIS with intelligent digital resources are based on some aspects of learning science. For example, in AutoTutor, one can readily identify the use of the following learning principles:

- (6) in Appendix A: Clear, explanatory and timely feedback to students is important for learning.
- (7) in Appendix B: Ask deep explanatory questions.
- (18) in Appendix C: Deep Questions.

In any specific AutoTutor module, expectation-misconception tailored dialog works best if a deep or complex question (category 3 in Appendix D) is asked as the main question that starts the dialog.

Unfortunately, detailed documentation at the level of foundational learning science is rarely available for existing AIS applications; only a few AIS implementations document each of the four components (learners, resources, environments, and processes) at that level. Our proposed approach is to first extend the existing metadata standards (such as IEEE LOM) with learning-science-relevant metadata for each of the four components, and then make recommendations to enhance the xAPI statement. As a first, intuitive step, we have “extended” the IEEE LOM with a “learning science extension” that can include a specific set of learning principles and the associated implementation details (see Appendix F).
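The shape of such an extension can be sketched with the same standard library module. The element and attribute names below (learningScience, learningPrinciples, principle, questionType, source, taxonomy) are hypothetical, since the exact schema used in the AutoTutor authoring tool is not reproduced in this paper; the values “Deep_Questions” and “Causal consequence” are the ones reported for the module in Appendix F.

```python
import xml.etree.ElementTree as ET


def learning_science_extension(principles: list[str], question_type: str) -> ET.Element:
    """Hypothetical <learningScience> element to be appended to a LOM record."""
    ext = ET.Element("learningScience")
    lp = ET.SubElement(ext, "learningPrinciples")
    for p in principles:
        # each principle names its source list (here, the 25 Learning Principles)
        ET.SubElement(lp, "principle", source="25 Learning Principles").text = p
    # the type of the seed (main) question, from the Graesser and Person taxonomy
    ET.SubElement(ext, "questionType", taxonomy="Graesser and Person 1994").text = question_type
    return ext


lom = ET.Element("lom")
lom.append(learning_science_extension(["Deep_Questions"], "Causal consequence"))
print(ET.tostring(lom, encoding="unicode"))
```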

6 xAPI for AIS Behavior

Intuitively, xAPI is a way to record the process and result of a learner’s interaction with the learning resources in a given learning environment. Technically, xAPI is a “specification for learning technology that makes it possible to collect data about the wide range of experiences a person has (online and offline)” [23]. Functionally, xAPI

(1) lets applications share data about human performance.
(2) lets you capture (big) data on human performance, along with associated instructional content or performance context information.
(3) applies human (and machine) readable “activity streams” to tracking data and provides sub-APIs to access and store information about state and content.
(4) enables nearly dynamic tracking of activities from any platform or software system—from traditional Learning Management Systems (LMSs) to mobile devices, simulations, wearables, physical beacons, and more.

Experience xAPI - ADL Initiative [24]

The most important components of an xAPI statement are the actor, the verb, and the activity (the statement’s object). xAPI has been learner-centered: the actor is always the learner, the verb is the learner’s action, and the activity is relatively flexible but can carry only limited information about the learning environment and process.

As we have pointed out earlier, most AIS derive their design and functionality from learning science theory. For each interaction between the learner and the system, sophisticated computations involving the other three components (resources, environments, and processes) govern the progression. For example, in AutoTutor, one turn of expectation-misconception tailored dialog involves only one learner action (the input), but multiple processes and components of the AIS are involved. Some of the steps enumerated below (steps 1.3, 2.2, and 3.1) are based on theories of learning.

1. Evaluate the learner’s input. The result is a function of
   1.1. stored expectations,
   1.2. stored misconceptions,
   1.3. the semantic space, etc.
2. Construct feedback. The feedback is a function of
   2.1. the evaluation outcome,
   2.2. the dialog rule, etc.
3. Deliver feedback. The delivery of feedback is a function of
   3.1. the delivery agent (teacher agent or student agent),
   3.2. the type of delivery technology,
   3.3. the timing (latency) of the feedback, etc.
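The three steps can be sketched as a small pipeline. This is not AutoTutor’s implementation: AutoTutor compares the input against expectations and misconceptions in a semantic space, whereas this sketch swaps in a plain bag-of-words cosine similarity. The 0.6 threshold, the three feedback labels, and the delivery fields are assumptions made for illustration.

```python
import math
from collections import Counter


def cosine(a: str, b: str) -> float:
    """Bag-of-words cosine similarity (a simple stand-in for a semantic space, step 1.3)."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[w] * vb[w] for w in va)
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(sum(c * c for c in vb.values()))
    return dot / norm if norm else 0.0


def evaluate(answer, expectations, misconceptions):
    """Step 1: best match against stored expectations (1.1) and misconceptions (1.2)."""
    return {
        "expectation": max((cosine(answer, e) for e in expectations), default=0.0),
        "misconception": max((cosine(answer, m) for m in misconceptions), default=0.0),
    }


def construct_feedback(evaluation, threshold=0.6):
    """Step 2: a toy dialog rule (2.2) applied to the evaluation outcome (2.1)."""
    if evaluation["misconception"] >= threshold:
        return "correction"
    if evaluation["expectation"] >= threshold:
        return "positive feedback"
    return "hint"


def deliver(move, agent="teacher agent", channel="text", latency_s=1.0):
    """Step 3: attach the delivery agent (3.1), technology (3.2), and latency (3.3)."""
    return {"agent": agent, "channel": channel, "latency_s": latency_s, "move": move}


ev = evaluate("the force is equal and opposite",
              expectations=["the forces are equal and opposite"],
              misconceptions=["the heavier object exerts more force"])
print(ev)  # expectation = 4/6, misconception = 2/6
print(deliver(construct_feedback(ev)))
```

Each intermediate value here (the similarity scores, the chosen dialog move, the delivery settings) is system behavior that a learner-only record would not contain.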

Unfortunately, when the learning record store (LRS) records learner behavior for such an AIS, only the input and the result (or feedback) are recorded, and none of the system behavior is. To capture the holistic behavior of an AIS, we need to consider all system behavior. We therefore extend the current xAPI behavior data specification such that

- actor includes all components of the AIS: not just the learner, but also digital resources such as an ITS.
- verb includes the actions of the AIS: not just the learner’s actions, but also the actions of the system.
- activity includes the extended LOM, which provides detailed documentation of the AIS at the level of learning science.
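To illustrate these extensions, the following sketch builds an xAPI-like statement corresponding to “TR_DR_Q2_Basics follow_rule_StartExpectation John Doe” from the sample that follows. It uses only structures the xAPI specification already defines (an account identifier for the actor, an Agent as the object, and context extensions), but the example.org IRIs, the account home page, and the extension key are placeholders; the exact format the authors’ LRS used is not given in this paper.

```python
import json


def make_system_statement(resource_name, home_page, rule, learner_email, principles):
    """Hypothetical xAPI-like statement whose actor is the intelligent digital resource."""
    return {
        # actor: the ITS itself, identified through an account on its home page
        "actor": {
            "objectType": "Agent",
            "name": resource_name,
            "account": {"homePage": home_page, "name": resource_name},
        },
        # verb: a system action; this verb IRI namespace is a placeholder, not an ADL verb
        "verb": {
            "id": f"https://example.org/xapi/ais/verbs/follow_rule_{rule}",
            "display": {"en-US": f"follow_rule_{rule}"},
        },
        # object: the learner the rule was applied to (xAPI permits an Agent as object)
        "object": {"objectType": "Agent", "mbox": f"mailto:{learner_email}"},
        # context: a pointer to the learning science extension of the resource's LOM
        "context": {
            "extensions": {
                "https://example.org/xapi/ais/extensions/learning-science": {
                    "learningPrinciples": principles,
                }
            }
        },
    }


stmt = make_system_statement("TR_DR_Q2_Basics", "https://example.org/autotutor",
                             "StartExpectation", "john.doe@example.com", ["Deep_Questions"])
print(json.dumps(stmt, indent=2))
```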

As an example, when we sent system behavior to the LRS, we obtained the following sample list of statements, shown in reverse chronological order, where statement #13 marks the start of tutoring. Here, John Doe is the human learner, TR_DR_Q2_Basics is the intelligent digital resource, and Steve is the agent that represents the intelligent digital resource.

1. John Doe listen Steve TR_DR_Q2_Basics on Hint/Prompt
2. TR_DR_Q2_Basics follow_rule_StartExpectation John Doe
3. TR_DR_Q2_Basics follow_rule_FB2MQMetaCog John Doe
4. TR_DR_Q2_Basics Evaluate John Doe on TutoringPack Q1
5. TR_DR_Q2_Basics follow_rule_TutorHint John Doe
6. TR_DR_Q2_Basics transition John Doe on TutoringPack Q1
7. John Doe answer Steve TR_DR_Q2_Basics on Main Question
8. John Doe listen Steve TR_DR_Q2_Basics on Main Question
9. TR_DR_Q2_Basics follow_rule_Start John Doe
10. TR_DR_Q2_Basics follow_rule_StartTutoring John Doe
11. TR_DR_Q2_Basics follow_rule_AskMQbyTutor John Doe
12. TR_DR_Q2_Basics follow_rule_Opening John Doe
13. TR_DR_Q2_Basics transition John Doe on Tutor Start
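Because learner and system statements share one store, a single pass can separate them. The sketch below parses the thirteen rendered statements (as plain strings, not real LRS output), restores chronological order, and tallies actions per actor; it assumes the actor is always one of the two known names at the start of each line.

```python
from collections import Counter

# Statements #1-#13 from the sample above, newest first (reverse chronological order)
LOG = [
    "John Doe listen Steve TR_DR_Q2_Basics on Hint/Prompt",
    "TR_DR_Q2_Basics follow_rule_StartExpectation John Doe",
    "TR_DR_Q2_Basics follow_rule_FB2MQMetaCog John Doe",
    "TR_DR_Q2_Basics Evaluate John Doe on TutoringPack Q1",
    "TR_DR_Q2_Basics follow_rule_TutorHint John Doe",
    "TR_DR_Q2_Basics transition John Doe on TutoringPack Q1",
    "John Doe answer Steve TR_DR_Q2_Basics on Main Question",
    "John Doe listen Steve TR_DR_Q2_Basics on Main Question",
    "TR_DR_Q2_Basics follow_rule_Start John Doe",
    "TR_DR_Q2_Basics follow_rule_StartTutoring John Doe",
    "TR_DR_Q2_Basics follow_rule_AskMQbyTutor John Doe",
    "TR_DR_Q2_Basics follow_rule_Opening John Doe",
    "TR_DR_Q2_Basics transition John Doe on Tutor Start",
]

ACTORS = ("John Doe", "TR_DR_Q2_Basics")


def parse(line):
    """Return (actor, verb) for a rendered statement whose actor is a known prefix."""
    for actor in ACTORS:
        if line.startswith(actor + " "):
            verb = line[len(actor) + 1:].split()[0]
            return actor, verb
    raise ValueError(f"unknown actor in: {line}")


# Reverse so that #13 (the start of tutoring) comes first
timeline = [parse(line) for line in reversed(LOG)]
counts = Counter(actor for actor, _ in timeline)
learner_verbs = [verb for actor, verb in timeline if actor == "John Doe"]

print(counts["TR_DR_Q2_Basics"], counts["John Doe"])  # 10 3
print(learner_verbs)  # ['listen', 'answer', 'listen']
```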

There are three actions for the learner (John Doe): he first listens to Steve (#8) and then answers Steve (#7) when the Main Question is delivered, and finally listens to Steve (#1) as Steve delivers a hint. By contrast, there are ten actions for the AIS: five actions (#13 to #9) to prepare the delivery of the Main Question, and five actions (#6 to #2) to prepare the delivery of the hint after John Doe answered the Main Question.

7 Discussion

When we consider AIS within a simple four-component model that involves the learners, resources, environments, and processes, we need a way to document the implementation in as much detail as possible. This is especially true when we assume that an AIS with intelligent digital resources is built under the guidance of learning science. To do this, we propose to extend the IEEE LOM of the intelligent digital resource with a learning science extension. This approach closely parallels the practice in the health sciences, where every medicine seeking approval from the Food and Drug Administration (even over-the-counter medicine) must include detailed information such as its chemical compounds, potential side effects, and the best way to administer it. The information associated with a medicine is intended for physicians and patients; likewise, the proposed learning science extension of LOM for the intelligent digital resources in AIS is intended for teachers and learners. Furthermore, when it is stored in the LRS, this information can be used for both post-hoc and real-time analysis of AIS.

In addition to the learning science extension of LOM for intelligent digital resources, we have also proposed extending xAPI statements beyond their current limitations to include all behaviors of AIS. More importantly, we need to treat the intelligent digital resource as a legitimate actor and record its actions together with the standard descriptions of environments and processes. With the learning science extension of LOM for intelligent digital resources in place, and with the LRS storing the actions of an intelligent digital resource in the same way as a human learner’s actions, we are in effect proposing a “symmetric” view of AIS: the human learner and the intelligent digital resource are interchangeable in the stored AIS behavior data (in xAPI). The only difference is that the actions of human learners are observed behavior, whereas the actions of intelligent digital resources are programmed.

8 Conclusions

We advocate a simple four-component model of AIS that includes learners, resources, environments, and processes. We consider this approach particularly valuable for AIS that include intelligent digital resources. We assume that intelligent digital resources are created under the guidance of learning science (such as learning principles), and consequently we proposed a learning science extension of IEEE LOM to document the intelligent digital resources as metadata. We further argue that behavior data records for AIS with intelligent digital resources should include the behaviors of all AIS components, including both the human learners and the intelligent digital resources. The proposed learning science extension of LOM for intelligent digital resources and the modified xAPI for AIS were tested in a conversation-based AIS in which AutoTutor is the intelligent digital resource.

Notes

1. <educational> <typicalAgeRange/> </educational> takes values such as the target learners’ age range.

2. <educational> <interactivityType/> </educational> takes values such as “Active”, “Expositive”, etc.

3. <educational> <context/> </educational> takes values such as “Training”, “Higher Education”, etc.

References

[1] ADL Initiative Homepage. https://adlnet.gov/. Accessed 9 Feb 2019

[2] xAPI Vocabulary & Profile Publishing Server. http://xapi.vocab.pub/. Accessed 9 Feb 2019

[3] Graesser, A.C., Chipman, P., Haynes, B.C., Olney, A.: AutoTutor: an intelligent tutoring system with mixed-initiative dialogue. IEEE Trans. Educ. 48(4), 612–618 (2005)

[4] Van Rosmalen, P., Vogten, H., Van Es, R., Passier, H., Poelmans, P., Koper, R.: Authoring a full life cycle model in standards-based, adaptive e-learning. J. Educ. Technol. Soc. 9(1), 72–83 (2006)

[5] Atkinson, R.C.: Adaptive Instructional Systems: Some Attempts to Optimize the Learning Process. Stanford University, Palo Alto (1974)

[6] Park, O.-C., Lee, J.: Adaptive instructional systems. Educ. Technol. Res. Dev. 25, 651–684 (2003)

[7] Durlach, P.J., Spain, R.D.: Framework for instructional technology: Methods of implementing adaptive training and education. Defense Technical Information Center, January 2014

[8] Shute, V.J., Psotka, J.: Intelligent tutoring systems: Past, present, and future. Armstrong Lab Brooks AFB TX Human Resources Directorate (1994)

[9] Soloway, E., Guzdial, M., Hay, K.E.: Learner-centered design: the challenge for HCI in the 21st century. Interactions 1(2), 36–48 (1994)

[10] Nye, B.D., Graesser, A.C., Hu, X.: AutoTutor and family: a review of 17 years of natural language tutoring. J. Artif. Intell. Educ. 24(4), 427–469 (2014)

[11] Graesser, A.C., Wiemer-Hastings, K., Wiemer-Hastings, P., Kreuz, R.: AutoTutor: a simulation of a human tutor. Cogn. Syst. Res. 1(1), 35–51 (1999)

[12] Lucariello, J.M., Nastasi, B.K., Dwyer, C., Skiba, R., DeMarie, D., Anderman, E.M.: Top 20 psychological principles for PK–12 education. Theory Pract. 55(2), 86–93 (2016)

[13] Top 20 Principles for Pre-K to 12 Education. https://www.apa.org/ed/schools/teaching-learning/principles/index.aspx. Accessed 31 Jan 2019

[14] Pashler, H., et al.: Organizing instruction and study to improve student learning. National Center for Education Research, Institute of Education Sciences, US Department of Education, Washington, DC (2007)

[15] Graesser, A.C., Halpern, D.F., Hakel, M.: 25 principles of learning. Task Force on Lifelong Learning at Work and at Home, Washington, DC (2008)

[16] Graesser, A.C., Person, N.K.: Question asking during tutoring. Am. Educ. Res. J. 31(1), 104 (1994)

[17] Nielsen, R.D., Buckingham, J., Knoll, G., Marsh, B., Palen, L.: A taxonomy of questions for question generation. In: Workshop on the Question Generation Shared Task and Evaluation Challenge (2008)

[18] Duval, E., Hodgins, W., Sutton, S., Weibel, S.L.: Metadata principles and practicalities. D-Lib Mag. 8(4) (2002)

[19] Poltrack, J., Hruska, N., Johnson, A., Haag, J.: The next generation of SCORM: innovation for the global force. In: The Interservice/Industry Training, Simulation & Education Conference, I/ITSEC (2012)

[20] Fletcher, J.D., Tobias, S., Wisher, R.A.: Learning anytime, anywhere: advanced distributed learning and the changing face of education. Educ. Res. 36(2), 96–102 (2007)

[21] LRMI Homepage. http://lrmi.dublincore.org/. Accessed 7 Feb 2019

[22] DeFalco, J.A.: Proposed standard for an AIS LOM model using pedagogical identifiers. In: Rosé, C.P., et al. (eds.) Artificial Intelligence in Education, London, United Kingdom, p. 43 (2018)

[23] xAPI Homepage. https://xapi.com/overview/. Accessed 10 Feb 2019

[24] Experience xAPI - ADL Initiative. https://adlnet.gov/research/performance-tracking-analysis/experience-api/. Accessed 10 Feb 2019

Acknowledgment

The research was supported by the National Science Foundation (DRK-12-0918409, DRK-12 1418288), the Institute of Education Sciences (R305C120001), Army Research Lab (W911INF-12-2-0030), and the Office of Naval Research (N00014-12-C-0643; N00014-16-C-3027). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of NSF, IES, or DoD. The Tutoring Research Group (TRG) is an interdisciplinary research team composed of researchers from psychology, computer science, and other departments at the University of Memphis (visit http://www.autotutor.org).


Appendix


A. Top 20 Principles for Pre-K to 12 Education, Divided into Five Types [12]

How Do Students Think and Learn?

(1) Students’ beliefs or perceptions about intelligence and ability affect their cognitive functioning and learning.
(2) What students already know affects their learning.
(3) Students’ cognitive development and learning are not limited by general stages of development.
(4) Learning is based on context, so generalizing learning to new contexts is not spontaneous but instead needs to be facilitated.
(5) Acquiring long-term knowledge and skill is largely dependent on practice.
(6) Clear, explanatory and timely feedback to students is important for learning.
(7) Students’ self-regulation assists learning, and self-regulatory skills can be taught.
(8) Student creativity can be fostered.

What Motivates Students?

(9) Students tend to enjoy learning and perform better when they are more intrinsically than extrinsically motivated to achieve.
(10) Students persist in the face of challenging tasks and process information more deeply when they adopt mastery goals rather than performance goals.
(11) Teachers’ expectations about their students affect students’ opportunities to learn, their motivation and their learning outcomes.
(12) Setting goals that are short-term (proximal), specific and moderately challenging enhances motivation more than establishing goals that are long-term (distal), general and overly challenging.

Why Are Social Context, Interpersonal Relationships, and Emotional Well-Being Important to Student Learning?

(13) Learning is situated within multiple social contexts.
(14) Interpersonal relationships and communication are critical to both the teaching-learning process and the social-emotional development of students.
(15) Emotional well-being influences educational performance, learning and development.

How Can the Classroom Best Be Managed?

(16) Expectations for classroom conduct and social interaction are learned and can be taught using proven principles of behavior and effective classroom instruction.
(17) Effective classroom management is based on (a) setting and communicating high expectations, (b) consistently nurturing positive relationships and (c) providing a high level of student support.

How to Assess Student Progress?

(18) Formative and summative assessments are both important and useful but require different approaches and interpretations.
(19) Students’ skills, knowledge and abilities are best measured with assessment processes grounded in psychological science with well-defined standards for quality and fairness.
(20) Making sense of assessment data depends on clear, appropriate and fair interpretation.

B. Seven Principles from an IES Report [14]

(1) Space learning over time.
(2) Interleave worked example solutions with problem-solving exercises.
(3) Combine graphics with verbal descriptions.
(4) Connect and integrate abstract and concrete representations of concepts.
(5) Use quizzing to promote learning.
(6) Help students manage study.
(7) Ask deep explanatory questions.

C. 25 Learning Principles to Guide Pedagogy and the Design of Learning Environments [15]

(1) Contiguity Effects
(2) Perceptual-motor Grounding
(3) Dual Code and Multimedia Effects
(4) Testing Effect
(5) Spaced Effects
(6) Exam Expectations
(7) Generation Effect
(8) Organization Effects
(9) Coherence Effect
(10) Stories and Example Cases
(11) Multiple Examples
(12) Feedback Effects
(13) Negative Suggestion Effects
(14) Desirable Difficulties
(15) Manageable Cognitive Load
(16) Segmentation Principle
(17) Explanation Effects
(18) Deep Questions
(19) Cognitive Disequilibrium
(20) Cognitive Flexibility
(21) Goldilocks Principle
(22) Imperfect Metacognition
(23) Discovery Learning
(24) Self-regulated Learning
(25) Anchored Learning

D. Graesser and Person [16] Classification of Questions

(1) Simple or shallow
   (a) Verification: Is X true or false? Did an event occur?
   (b) Disjunctive: Is X, Y, or Z the case?
   (c) Concept completion: Who? What? When? Where?
   (d) Example: What is an example or instance of a category?
(2) Intermediate
   (a) Feature specification: What qualitative properties does entity X have?
   (b) Quantification: What is the value of a quantitative variable? How much?
   (c) Definition questions: What does X mean?
   (d) Comparison: How is X similar to Y? How is X different from Y?
(3) Deep or complex
   (a) Interpretation: What concept/claim can be inferred from a pattern of data?
   (b) Causal antecedent: Why did an event occur?
   (c) Causal consequence: What are the consequences of an event or state?
   (d) Goal orientation: What are the motives or goals behind an agent’s action?
   (e) Instrumental/procedural: What plan or instrument allows an agent to accomplish a goal?
   (f) Enablement: What object or resource allows an agent to accomplish a goal?
   (g) Expectation: Why did some expected event not occur?
   (h) Judgmental: What value does the answerer place on an idea or advice?

E. Nielsen et al. [17] Taxonomy of Question Types

1. Description Questions
   1.1. Concept Completion: Who, what, when, where?
   1.2. Definition: What does X mean?
   1.3. Feature Specification: What features does X have?
   1.4. Composition: What is the composition of X?
   1.5. Example: What is an example of X?
2. Method Questions
   2.1. Calculation: Compute or calculate X.
   2.2. Procedural: How do you perform X?
3. Explanation Questions
   3.1. Causal Antecedent: What caused X?
   3.2. Causal Consequence: What will X cause?
   3.3. Enablement: What enables the achievement of X?
   3.4. Rationale Questions
      3.4.1. Goal Orientation: What is the goal of X?
      3.4.2. Justification: Why is X the case?
4. Comparison Questions
   4.1. Concept Comparison: Compare X to Y.
   4.2. Judgment: What do you think of X?
   4.3. Improvement: How could you improve upon X?
5. Preference Questions
   5.1. Free Creation: requires a subjective creation.
   5.2. Free Option: select from a set of valid options.

F. Example of Learning Science Extension of IEEE LOM for AutoTutor

Editing interface for the learning science extension in the AutoTutor authoring tool. The current version considers only the 25 learning principles (Appendix C).

The XML for learning principles. For this specific AutoTutor module, only “Deep_Questions” is explicitly relevant.

Editing interface for the learning science extension in the AutoTutor authoring tool, used to specify the type of seed question asked in a given AutoTutor module.

The XML for the question type of this specific AutoTutor module. The question type implemented in this module is Causal consequence.


Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Hu, X. et al. (2019). Capturing AIS Behavior Using xAPI-like Statements. In: Sottilare, R., Schwarz, J. (eds) Adaptive Instructional Systems. HCII 2019. Lecture Notes in Computer Science, vol 11597. Springer, Cham. https://doi.org/10.1007/978-3-030-22341-0_17


DOI: https://doi.org/10.1007/978-3-030-22341-0_17


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22340-3

Online ISBN: 978-3-030-22341-0

eBook Packages: Computer Science, Computer Science (R0)