Abstract

Color is a primary feature in the field of Art and Cultural Heritage, and it can also help in defining the conservation state of an artwork. Color identification by means of a digital camera is a non-destructive methodology that uses an inexpensive, portable device and enables a spatial analysis that is not possible with a colorimeter. The present study compares an original method for camera characterization with two approaches reported in the literature. The comparison is based on parameters such as the Pearson correlation coefficient and the ΔE00 colorimetric difference, computed according to the CIEDE2000 formula. The data sets used for both the “training” and the “validation” processes are (a) the 24 tiles of the X-Rite ColorChecker Passport Photo color scale and (b) 30 oil-paint samples laid down on a canvas prepared according to the indications of Giorgio Vasari in his renowned “Le Vite”. The data available so far show that our original method leads to results that are similar to or better than those of the literature methods.

1 Introduction

Color is one of the most important properties of artworks, and color changes provide an indication of an art object’s age and conservation state. As a consequence, monitoring color changes over time is a useful preventive diagnostic tool for Cultural Heritage preservation. A color change is usually quantified by dedicated color-measuring devices, such as colorimeters and spectrophotometers, and analyzed in a device-independent color space such as CIE L*a*b* [1,2]. Such devices analyze only a small spot of the artwork surface, so measurements of large, heterogeneous surfaces yield non-homogeneous values; this limitation can be overcome with a digital camera, i.e., a device acquiring color information over a much wider surface, limited only by the illuminated area [3]. Moreover, a digital camera is less expensive than a dedicated color-measuring spectrophotometric device.

A major problem when using a digital camera for measuring color is that consumer-level sensors (of either CCD or CMOS type) are typically uncalibrated. As a consequence, even though the raw camera output is declared to be converted to the sRGB representation, it is not color-accurate according to the sRGB standard definition. To address this problem, a color calibration algorithm is required to transform the digital color values into L*a*b* values in agreement with spectrophotometric measurements.

León et al. [4] have considered different calibration algorithms, viz. (a) linear and quadratic models for L*a*b* regression starting from RGB values; (b) a direct model where RGB values are transformed into XYZ values, in turn used to derive L*a*b* values; (c) a gamma model which linearizes sRGB data before applying method (b) (transformation into XYZ values and then calculation of L*a*b* values); and (d) a neural network predicting L*a*b* values from RGB ones. Cheung et al. [5] have considered the gamma correction approach to calibrate digital photos, evaluating three different techniques for the linearization of sRGB values: spectral sensitivities, luminance and mean reflectance.

The aim of the present study is to compare the two most effective camera calibration methods among those outlined above (i.e., the regression method and the approach in which a gamma correction precedes the regression) with an original method we have specifically designed and implemented.

2 Materials and Approaches

Three methods have been tested and compared with the purpose of achieving the best calibration of camera pictures, taking colorimetric measurements as reference values.

They are all supervised methods, which means that the calibration parameters are optimized on a training set, where each digital-camera image is labelled with the reference color parameters obtained with the colorimeter.

The comparison of the methods' performance is based on the error computed when applying each method to the samples of the training set (to evaluate precision) or to a validation set (to evaluate robustness).

2.1 Materials

The training set and the validation set are made of color samples (see Figs. 1 and 2 below), each measured with both the colorimeter and the camera.

X-Rite ColorChecker Passport Photo is a photographic color scale made of 24 tiles for maintaining uniformity and color control in digital photography. Along with color patterns, it also contains gray patches shading from white to black.

Oil Painting Samples.

A linen canvas has been purposely prepared according to the indications provided by the Italian painter, architect and art historian Giorgio Vasari in his renowned historiographical text “Le Vite” [6]. Accordingly, the linen texture was treated with a few layers of rabbit glue, a layer of dough (made of walnut oil and flour), three further layers of rabbit glue, to reach a homogeneous uniform thickness on the linen surface, and finally with the preparatory layer, i.e. a mix of linseed oil, white lead [biacca: (PbCO3)2·Pb(OH)2] and different ochres [mixed Fe(II) and Fe(III) oxides, generally in the presence of Mn(IV), Al(III) and Si(IV) oxides, with different amounts of hydration water molecules]. Once the preparatory layer had dried, thirty color samples were laid down with the oil painting technique, using different pigments (mainly ochres), each pigment dispersion being prepared in 3 or 4 different w/w compositions. The pigments were: ombra naturale, ombra bruciata, terra di Siena naturale, terra di Siena bruciata, white lead, malachite, cinnabar and a pink pigment (obtained by mixing white lead and cinnabar). The color measurements on the paint samples were repeated monthly, with both the spectrophotometer and the camera.

2.2 Data and Image Acquisition Tools

The data were acquired with both a colorimeter and a digital camera; MATLAB and Excel were used for the mathematical treatment.

Colorimeter.

Reference measurements were made with the Konica Minolta CM2600d spectrophotometer, a handheld, portable instrument designed to estimate the color parameters. The settings are summarized in Table 1.

Digital Camera.

The digital images were taken with the following image acquisition system:

- A Panasonic Lumix DMC-FZ200 camera was placed vertically at 46.5 cm from the samples. The angle between the axis of the lens and the sources of illumination was approximately 45°.
- Illumination was achieved with two OSRAM Natural Daylight 23 W fluorescent lights, color temperature 6500 K.
- The photos were shot in a dark room.
- The settings of the camera are summarized in Table 2.

Table 2. Camera setup.

2.3 Training and Validation Sets

The training set consisted of the 24 tiles of the X-Rite ColorChecker Passport Photo (reference set) together with the 30 painting samples on canvas; the data (colorimetric measurements and digital-camera acquisitions) were collected in March 2018.

The validation set is represented herein by the painting samples on canvas measured with the colorimeter and acquired with the camera in April 2018.

The colorimetric (Konica Minolta CM2600d) value of each painting sample is the average of 5 spot areas and refers to the device-independent L*a*b* space. Likewise, the colorimetric value of each tile of the X-Rite scale is the average of 4 spot areas.

The color measurements taken with the digital camera refer to the sRGB values of the digital image. In this case, the color value of each sample (either the X-Rite tile or the canvas paint samples) is the average of 5 different areas of 16 × 16 pixels for each of 9 successive camera acquisitions.
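The averaging described above can be sketched as follows. This is a minimal illustration, not the authors' code: the image layout (`image[y][x]` holding an `(R, G, B)` tuple), the function names and the choice of patch origins are all assumptions.

```python
from statistics import mean

PATCH = 16  # patch side in pixels, as stated in the text


def patch_mean(image, top, left, size=PATCH):
    """Mean (R, G, B) of a size x size patch; image[y][x] is an (R, G, B) tuple."""
    pixels = [image[y][x]
              for y in range(top, top + size)
              for x in range(left, left + size)]
    return tuple(mean(p[c] for p in pixels) for c in range(3))


def sample_color(acquisitions, origins):
    """Average the patch means over all patch origins and all acquisitions.

    acquisitions: images of the same sample (9 in the text).
    origins: (top, left) corners of the patches (5 in the text); the
    positions themselves are hypothetical and chosen by the caller.
    """
    means = [patch_mean(img, top, left)
             for img in acquisitions
             for (top, left) in origins]
    return tuple(mean(m[c] for m in means) for c in range(3))
```

With 9 acquisitions and 5 origins, each channel value is the mean of 45 patch means.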

3 Calibration Methods

The main purpose of the methods is to estimate the transformation from the camera output given in sRGB space to the L*a*b* space which minimizes the error with respect to the colorimetric L*a*b* values.

Method 1: Matrix-Based Method Through Polynomial Modelling

According to Hong et al. [7] and to Johnson [8], the most appropriate transformation from the sRGB space to the L*a*b* space requires a polynomial regression with least-squares fitting.

The applied polynomial, P[11], is as follows:
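Assuming that P[11] denotes the 11-term expansion listed by Hong et al. [7] (an assumption, since other term sets are possible), the polynomial and the associated mapping can be written as below. The symbol M for the 11 × 3 coefficient matrix is introduced here only for illustration.

```latex
P_{[11]} =
\begin{bmatrix}
R & G & B & RG & RB & GB & R^2 & G^2 & B^2 & RGB & 1
\end{bmatrix},
\qquad
\begin{bmatrix} L_c & a_c & b_c \end{bmatrix} = P_{[11]}\, M
```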

The best matrix is estimated through the pseudo-inverse methodology by using the training set values; R, G, and B refer to the color channels as acquired by the camera on the training set; the target values are the Ls, as, bs values read by the spectrophotometer on the same training set.

Once the matrix elements are estimated, the transformation is applied independently to the training set and the validation set thus obtaining the calibrated values Lc, ac, bc.
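A minimal sketch of this procedure is given below, using only the Python standard library. It assumes the 11-term expansion of Hong et al. [7] and solves the normal equations, which gives the same least-squares solution as the pseudo-inverse when the design matrix has full column rank. All function names are hypothetical; the original work used MATLAB.

```python
def poly11(r, g, b):
    """11-term polynomial expansion (assumed form, after Hong et al.)."""
    return [r, g, b, r * g, r * b, g * b, r * r, g * g, b * b, r * g * b, 1.0]


def solve_linear(A, B):
    """Solve A X = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + list(B[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(M[i][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system: not enough independent samples")
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for i in range(n):
            if i != col:
                f = M[i][col]
                M[i] = [vi - f * vc for vi, vc in zip(M[i], M[col])]
    return [row[n:] for row in M]


def fit_polynomial(rgb, lab, expand=poly11):
    """Least-squares matrix W (terms x 3) with expand(rgb) . W ~ lab."""
    P = [expand(*c) for c in rgb]
    k = len(P[0])
    PtP = [[sum(p[i] * p[j] for p in P) for j in range(k)] for i in range(k)]
    PtY = [[sum(p[i] * y[ch] for p, y in zip(P, lab)) for ch in range(3)]
           for i in range(k)]
    return solve_linear(PtP, PtY)


def apply_polynomial(W, rgb, expand=poly11):
    """Calibrated (Lc, ac, bc) for each camera (R, G, B) triple."""
    out = []
    for c in rgb:
        p = expand(*c)
        out.append(tuple(sum(pi * W[i][ch] for i, pi in enumerate(p))
                         for ch in range(3)))
    return out
```

The matrix is fitted once on the training pairs and then passed unchanged to `apply_polynomial` for both the training and the validation samples. At least 11 linearly independent samples are needed for the 11-term fit.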

Method 2: Method Based on the Gamma-Correction Technique

This method consists of two parts:

1. estimation of the non-linearity of digital data (gamma correction);
2. application of a polynomial modelling.

The gamma correction was performed through the luminance-based technique as both Valous et al. [9] and Cheung et al. [10] have described.

The gray tiles of the ColorChecker Passport Photo (A4, B4, C4, D4, E4, and F4) were considered to calculate the non-linearity parameter.

The calculated gamma factor was applied to the camera responses for the remaining X-Rite colors so that their sRGB values were corrected for non-linearity. Polynomial modelling was applied to corrected values using the vector P[11] above.
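The gamma estimation step can be illustrated with the sketch below. The exact formulation of the luminance-based technique is not given in this text, so the sketch assumes a simple power law, response = luminance^(1/γ), with both quantities normalized to (0, 1], and fits γ on the gray patches by least squares in log-log space. Function names are hypothetical.

```python
import math


def estimate_gamma(responses, luminances):
    """Gamma for the assumed model response = luminance ** (1 / gamma).

    responses: normalized camera values of the gray patches, in (0, 1].
    luminances: normalized reference luminances of the same patches, in (0, 1].
    The fit is a line through the origin in log-log space.
    """
    xs = [math.log(y) for y in luminances]
    ys = [math.log(d) for d in responses]
    slope = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
    return 1.0 / slope


def linearize(value, gamma):
    """Undo the assumed non-linearity of one normalized camera value."""
    return value ** gamma
```

Once γ has been estimated on the six gray tiles, `linearize` would be applied to each channel of the remaining colors before the polynomial fit.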

Method 3: Our Original Approach

An original, alternative approach has been devised and implemented in this study. The initial idea is that a proper calibration must lead to an ideal y = x linear correlation, with unitary slope and without offset between photographic and spectrophotometric data (i.e. null intercept).

Starting from the training set, a general-purpose transform from the sRGB to the L*a*b* space was applied to the digital camera output, thus obtaining Lp, ap, bp.
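A general-purpose sRGB to L*a*b* transform of this kind can be sketched as follows. The text does not state which white point was used, so the D65 reference white and the standard sRGB primaries are assumptions here, as are the function names.

```python
# Assumed reference white: D65 (2 degree observer), normalized to Y = 1.
XN, YN, ZN = 0.95047, 1.0, 1.08883


def _linear(c):
    """Inverse sRGB transfer function for a channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t):
    """CIELAB companding function."""
    delta = 6.0 / 29.0
    return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3 * delta ** 2) + 4.0 / 29.0


def srgb_to_lab(rgb8):
    """Convert an 8-bit sRGB triple to (L*, a*, b*) under the D65 assumption."""
    r, g, b = (_linear(v / 255.0) for v in rgb8)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    fx, fy, fz = _f(x / XN), _f(y / YN), _f(z / ZN)
    return (116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz))
```

Applied to the averaged camera value of each sample, this yields the uncalibrated triple (Lp, ap, bp).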

In Figs. 3, 4 and 5, the scatter plots of uncalibrated digital color data vs. spectrophotometric values are shown.

The parameters to be used for camera calibration are then estimated according to the linear regression analysis applied separately to L*, a*, and b* data:

where (αL, βL), (αa, βa), and (αb, βb) are the regression coefficients, the β’s being the intercepts representing the systematic errors to be corrected by the calibration process.

Since the target is to find calibrated L*, a*, and b* values which better reproduce the colorimeter values it holds that:

Then the proposed transformation is:
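One reading consistent with the description above is given below. It is offered as an assumption: the direction of the regression (camera values regressed on spectrophotometric values) is inferred from the scatter plots, where the uncalibrated camera data are plotted against the spectrophotometric ones.

```latex
\begin{aligned}
&\text{regression:} & L_p &= \alpha_L L_s + \beta_L, & a_p &= \alpha_a a_s + \beta_a, & b_p &= \alpha_b b_s + \beta_b \\
&\text{target:} & L_c &\approx L_s, & a_c &\approx a_s, & b_c &\approx b_s \\
&\text{transformation:} & L_c &= \frac{L_p - \beta_L}{\alpha_L}, & a_c &= \frac{a_p - \beta_a}{\alpha_a}, & b_c &= \frac{b_p - \beta_b}{\alpha_b}
\end{aligned}
```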

As shown in Figs. 3, 4 and 5, the scatter plots of the uncalibrated digital color data versus the spectrophotometric values show a strong linear correlation: the Pearson coefficients are 0.9797, 0.9832, and 0.9596 for L*, a*, and b*, respectively.

In addition, the coefficient of determination is very close to one for L* and a* (0.9675 and 0.9693, respectively) proving that a linear relation exists between uncalibrated and calibrated data.
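The per-channel regression and the correlation measure can be sketched as below. The sketch assumes that the camera values are regressed on the spectrophotometric ones (p = α·s + β) and that the calibration inverts this line; this direction is an assumption, and the function names are hypothetical.

```python
import math


def linear_fit(s, p):
    """Ordinary least squares for p = alpha * s + beta; returns (alpha, beta)."""
    n = len(s)
    ms, mp = sum(s) / n, sum(p) / n
    sxx = sum((si - ms) ** 2 for si in s)
    sxy = sum((si - ms) * (pi - mp) for si, pi in zip(s, p))
    alpha = sxy / sxx
    return alpha, mp - alpha * ms


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


def calibrate(p, alpha, beta):
    """Invert the fitted line so that the calibrated value approximates s."""
    return (p - beta) / alpha
```

The fit would be run three times, once each for L*, a* and b*, on the training pairs; the resulting (α, β) pairs are then reused unchanged on the validation set.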

By contrast, considering the b* scatter plot and the related coefficient of determination (0.9193), the independent calibration of the b* parameter is expected to be of lower quality. As a consequence, a calibration step in the (a, b) space is proposed, applying the matrix method only to these two chromaticity features. In this case, the terms of the polynomial P[6] were found experimentally to be sufficient:

The mapping can now be represented by:

4 Results

After the reliability of the measurements was analyzed through a test-retest evaluation, the three methods were applied. Their performance evaluation is based on statistical measures (such as correlation coefficients) as well as on color-distance measures.

The analysis of the Pearson coefficients shows a general improvement when comparing the values before and after calibration.

As far as the training set is concerned (Table 3), the best outcome is achieved by method 2, but this same method clearly performs very poorly on the validation set (third line of Table 4).

One can also notice that, on the training set, methods 1 and 3 improve or preserve the correlation for L* and a*, while b* is less improved by method 3: on the whole, method 1 is better than method 3.

On the validation set, by contrast, the situation is reversed: method 1 improves b* but worsens the other components; method 3 improves b*, preserves the correlation coefficient for L* (as expected by design), and performs better than method 1 on the a* component.

The same behavior is evident when analyzing the errors in the L*, a*, and b* components (∆L*, ∆a*, and ∆b*) between the spectrophotometric data and the calibrated camera values.

On the training set (Table 5), method 2 achieves the smallest error, while method 3 shows the largest ∆L*, ∆a*, and ∆b* values, confirming its lower accuracy with respect to the other methods.

On the validation set (Table 6), on the other hand, method 2 has the smallest ∆L* and ∆a* values, but the b* error is dramatically increased. Method 1 has the largest ∆L* but a negligible ∆b*. Method 3 performs better than method 1 in terms of the L*, a*, and root-mean-square errors.

As a color metric, the color distance ∆E00, calculated according to the CIEDE2000 formula, is applied. The equation used is based on the mathematical observations and implementations analyzed by Sharma et al. [11], and the final formula is:

$$\Delta E_{00} = \sqrt{\left(\frac{\Delta L}{K_L S_L}\right)^2 + \left(\frac{\Delta C}{K_C S_C}\right)^2 + \left(\frac{\Delta H}{K_H S_H}\right)^2 + R_T \, \frac{\Delta C}{K_C S_C} \, \frac{\Delta H}{K_H S_H}}$$

where ∆L, ∆C and ∆H are the lightness, chroma and hue differences, respectively; KL, KC and KH are parametric weighting factors, which we set to unity; and RT, SL, SC and SH are terms computed from the lightness, chroma and hue values.
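For illustration, a compact implementation of the CIEDE2000 difference is sketched below, following the step sequence described by Sharma et al. [11]. It is an independent sketch, not the authors' MATLAB code, and the function name is hypothetical.

```python
import math


def ciede2000(lab1, lab2, kL=1.0, kC=1.0, kH=1.0):
    """CIEDE2000 color difference between two (L*, a*, b*) triples."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2
    rad, deg = math.radians, math.degrees

    # Step 1: rescale a* and compute modified chroma and hue angle (degrees).
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2.0
    g = 0.5 * (1.0 - math.sqrt(c_bar ** 7 / (c_bar ** 7 + 25.0 ** 7)))
    a1p, a2p = (1.0 + g) * a1, (1.0 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = deg(math.atan2(b1, a1p)) % 360.0 if c1p else 0.0
    h2p = deg(math.atan2(b2, a2p)) % 360.0 if c2p else 0.0

    # Step 2: lightness, chroma and hue differences.
    dLp = L2 - L1
    dCp = c2p - c1p
    if c1p * c2p == 0.0:
        dhp = 0.0
    else:
        dhp = h2p - h1p
        if dhp > 180.0:
            dhp -= 360.0
        elif dhp < -180.0:
            dhp += 360.0
    dHp = 2.0 * math.sqrt(c1p * c2p) * math.sin(rad(dhp / 2.0))

    # Step 3: means, weighting functions and rotation term.
    L_bar = (L1 + L2) / 2.0
    c_barp = (c1p + c2p) / 2.0
    if c1p * c2p == 0.0:
        h_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180.0:
        h_bar = (h1p + h2p) / 2.0
    elif h1p + h2p < 360.0:
        h_bar = (h1p + h2p + 360.0) / 2.0
    else:
        h_bar = (h1p + h2p - 360.0) / 2.0
    t = (1.0 - 0.17 * math.cos(rad(h_bar - 30.0))
         + 0.24 * math.cos(rad(2.0 * h_bar))
         + 0.32 * math.cos(rad(3.0 * h_bar + 6.0))
         - 0.20 * math.cos(rad(4.0 * h_bar - 63.0)))
    d_theta = 30.0 * math.exp(-(((h_bar - 275.0) / 25.0) ** 2))
    r_c = 2.0 * math.sqrt(c_barp ** 7 / (c_barp ** 7 + 25.0 ** 7))
    s_l = 1.0 + 0.015 * (L_bar - 50.0) ** 2 / math.sqrt(20.0 + (L_bar - 50.0) ** 2)
    s_c = 1.0 + 0.045 * c_barp
    s_h = 1.0 + 0.015 * c_barp * t
    r_t = -math.sin(rad(2.0 * d_theta)) * r_c

    tl, tc, th = dLp / (kL * s_l), dCp / (kC * s_c), dHp / (kH * s_h)
    return math.sqrt(tl * tl + tc * tc + th * th + r_t * tc * th)
```

The default arguments correspond to the unit weighting factors used in this study.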

When referring to the evaluation on the training set, the values reported in Table 7 have been found. The ∆E00 between the reference colorimetric values and the digital data without calibration is 8.8 for X-Rite gray tiles and 13.5 for colored X-Rite tiles and pigments. After applying each of the three methods a smaller distance is achieved, and the improvement is even more evident for the colored tiles.

In general, method 1 achieves a better calibration when compared with method 3. Such a result means that method 1 is very precise and accurate in calibrating the same dataset used for the training phase.

When evaluating performance on the validation set, the values in Table 8 are obtained. After acquisition, without any calibration, ∆E00 is close to 13. After applying the calibration methods, the minimum ∆E00 (7.3) is achieved by our method; the ∆E00 values of method 1 and method 2 are 7.9 and 9.8, respectively.

Even though the results are not yet in line with the recommendation for the Cultural Heritage field, where the accepted limit is ∆E00 = 3 [12], it is interesting to notice that the new method 3 compares favorably with the other two methods.

This result suggests that our method is more robust than method 1 and method 2. In particular, the very high precision of method 1 on the training set may be a symptom of overfitting.

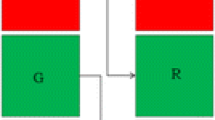

Finally, Fig. 6 reports a color display of two data samples, to provide a visual comparison of the different methods.

Fig. 6. Sample color display; from left to right: colorimetric value, uncalibrated camera output, camera output calibrated with method 1, 2, and 3. (a) Sample within the training set; (b) sample within the validation set: in this case the color display calibrated with method 2 is not available because the validation set used for this method is different from that used for the other two methods.

5 Conclusions

Despite the intrinsic differences between the samples used in the present work (i.e., the ColorChecker Passport Photo tiles and the paintings on canvas), it has been possible to gain interesting insights into supervised calibration methods and their performance: while method 1 proved to be the most precise and accurate on the same sample set used during the training phase, method 3 proved more effective in its capability to generalize (robustness).

Robustness is indeed a major desired property of a calibration method, especially when it must be applied to cultural heritage problems. In fact, when artworks cannot be measured with a colorimeter but their image can be acquired by a color camera, such an approach could represent a crucial analytical method for color, provided that the camera data can be confidently deemed precise and reliable enough for the identification and characterization of pigments.

Optimization of our method can still be pursued. For example, calibration errors can also be related to the photographic elaboration of reflections on different surfaces. In this context, a database with a larger quantity of samples will be used in future developments, taking surface properties into proper consideration. In order to prove how the proposed approach can be generalized, the results for different cameras will be considered in future works.

References

1. Sanmartín, P., Chorro, E., Vázquez-Nion, D., Martínez-Verdú, F.M., Prieto, B.: Conversion of a digital camera into a non-contact colorimeter for use in stone cultural heritage: the application case to Spanish granites. Measurement 56, 149–220 (2014)

2. Ibraheem, N.A., Hasan, M.M., Khan, R.Z., Mishra, P.K.: Understanding color models: a review. ARPN J. Sci. Technol. 2, 265–275 (2012)

3. Jackman, P., Sun, D.W., ElMasry, G.: Robust colour calibration of an imaging system using a colour space transform and advanced regression modelling. Meat Sci. 91, 402–407 (2012)

4. León, K., Mery, D., Pedreschi, F., León, J.: Color measurement in L*a*b* units from RGB digital images. Food Res. Int. 39, 1084–1091 (2006)

5. Cheung, V., Westland, S., Thomson, M.: Accurate estimation of the nonlinearity of input/output response for color cameras. Color Res. Appl. 29, 406–412 (2004)

6. Vasari, G.: Le Vite de’ più eccellenti pittori, scultori et architettori (often more simply referred to as “Le Vite”), 1st edn. 1550 (Torrentino, Florence), 2nd edn. 1568 (Giunti, Florence)

7. Hong, G., Luo, M.R., Rhodes, P.A.: A study of digital camera colorimetric characterization based on polynomial modelling. Color Res. Appl. 26, 76–84 (2001)

8. Johnson, T.: Methods for characterizing colour scanners and digital cameras. Displays 16, 183–191 (1996)

9. Valous, N.A., Mendoza, F., Sun, D.W., Allen, P.: Colour calibration of a laboratory computer vision system for quality evaluation of pre-sliced hams. Meat Sci. 81, 132–141 (2009)

10. Cheung, T.V.L., Westland, S.: Accurate estimation of the non-linearity of input-output response for color digital cameras. In: The Science and Systems of Digital Photography Conference, Rochester, pp. 366–369 (2003)

11. Sharma, G., Wu, W., Dalal, E.N.: The CIEDE2000 color-difference formula: implementation notes, supplementary test data, and mathematical observations. Color Res. Appl. 30, 21–30 (2005)

12. Barbu, O.H., Zaharide, A.: Noninvasive in situ study of pigments in artworks by means of Vis, IRFC image analysis and X-Ray fluorescence spectrometry. Color Res. Appl. 41(3), 321–324 (2016)

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Manfredi, E., Petrillo, G., Dellepiane, S. (2019). A Novel Digital-Camera Characterization Method for Pigment Identification in Cultural Heritage. In: Tominaga, S., Schettini, R., Trémeau, A., Horiuchi, T. (eds) Computational Color Imaging. CCIW 2019. Lecture Notes in Computer Science(), vol 11418. Springer, Cham. https://doi.org/10.1007/978-3-030-13940-7_15

DOI: https://doi.org/10.1007/978-3-030-13940-7_15

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-13939-1

Online ISBN: 978-3-030-13940-7

eBook Packages: Computer Science (R0)