Abstract

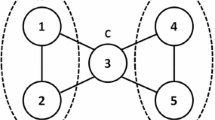

This paper presents a novel approach, the cross estimation network (CEN), for fitting datasets obtained from psychological or educational tests and estimating the parameters of item response theory (IRT) models. The CEN consists of two subnetworks: the person network (PN) and the item network (IN). The PN takes the response pattern of an individual respondent and outputs an estimate of that respondent's underlying ability. The IN takes the response pattern of an individual item and outputs estimates of that item's parameters. Four simulation studies and an empirical study were conducted to investigate how well CEN estimates the parameters of the two-parameter logistic (2PL) model under various testing scenarios. Results showed that CEN fit the training data well and produced accurate estimates of both person and item parameters. The trained PN and IN also generalized beyond the training data. Acting as intelligent agents, they produced sound estimates even for previously unseen response patterns of new respondents and items.
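A minimal forward-pass sketch in Python/NumPy can illustrate how the two subnetworks divide the work. Everything about the network internals is an assumption for illustration and does not describe the authors' architecture. The sketch assumes one tanh hidden layer per subnetwork, an exponential link that keeps the discrimination \(a\) positive, and the 2PL form \(P(x_{ij}=1)=1/\{1+\exp[-a_j(z_i-b_j)]\}\) with no scaling constant. The helper names `init_mlp`, `mlp`, and `cen_forward` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def init_mlp(n_in, n_hidden, n_out):
    """Random weights for a hypothetical one-hidden-layer perceptron."""
    return (rng.normal(0.0, 0.1, (n_in, n_hidden)), np.zeros(n_hidden),
            rng.normal(0.0, 0.1, (n_hidden, n_out)), np.zeros(n_out))


def mlp(x, params):
    """tanh hidden layer followed by a linear output layer."""
    w1, b1, w2, b2 = params
    return np.tanh(x @ w1 + b1) @ w2 + b2


def cen_forward(X, pn_params, in_params):
    """X is an N x M binary matrix: rows are respondents, columns are items."""
    z = mlp(X, pn_params)[:, 0]        # PN: each row pattern -> one ability
    item_out = mlp(X.T, in_params)     # IN: each column pattern -> (a, b)
    a = np.exp(item_out[:, 0])         # exp() keeps discrimination positive (assumption)
    b = item_out[:, 1]
    # 2PL: P(X_ij = 1) = 1 / (1 + exp(-a_j * (z_i - b_j)))
    P = 1.0 / (1.0 + np.exp(-a[None, :] * (z[:, None] - b[None, :])))
    return z, a, b, P


N, M = 200, 30
X = rng.integers(0, 2, size=(N, M)).astype(float)
pn_params = init_mlp(M, 16, 1)   # PN input length = number of items M
in_params = init_mlp(N, 16, 2)   # IN input length = number of respondents N
z, a, b, P = cen_forward(X, pn_params, in_params)
print(z.shape, a.shape, b.shape, P.shape)  # (200,) (30,) (30,) (200, 30)
```

Only the forward pass is shown. In training, the weights of both subnetworks would be updated jointly to minimize the negative log-likelihood of the observed responses under `P`, typically with automatic differentiation in a framework such as TensorFlow. After training, each subnetwork can be applied on its own to new row or column patterns. In this sketch the IN's input length is tied to the number of respondents.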


Notes

We emphasize that \(-2LL\) is a relative fit index, not an absolute one. It has no predefined threshold indicating acceptable model–data fit and is instead used to compare alternative models. Here, \(-2LL\) helps determine which of the four CEN variants fits best.
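The sketch below assumes the usual definition of \(-2LL\) for binary responses: \(-2\sum_{i,j}[x_{ij}\log P_{ij} + (1-x_{ij})\log(1-P_{ij})]\), where \(P_{ij}\) is the model-implied probability of a correct response. It shows why the index is only meaningful comparatively. The value depends on the data set, so the example compares two hypothetical sets of predictions on the same responses, and the lower value indicates the better-fitting one. The function name `neg2ll` and the clipping constant are illustrative choices.

```python
import numpy as np


def neg2ll(X, P, eps=1e-12):
    """-2 times the Bernoulli log-likelihood of binary responses X under probabilities P."""
    P = np.clip(P, eps, 1.0 - eps)  # guard against log(0)
    return -2.0 * np.sum(X * np.log(P) + (1.0 - X) * np.log(1.0 - P))


X = np.array([[1, 0], [1, 1]], dtype=float)
P_variant_1 = np.full_like(X, 0.5)                 # uninformative predictions
P_variant_2 = np.array([[0.9, 0.2], [0.8, 0.7]])   # predictions that track X more closely
print(neg2ll(X, P_variant_1), neg2ll(X, P_variant_2))
```

The uninformative predictions give \(8\ln 2\) on this \(2\times 2\) matrix, and the second variant gets the smaller value. Neither number on its own says whether the fit is acceptable.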

Visualizations of the trained CEN models (i.e., the PN and IN) are provided in the Supplementary material.

As the benchmark for comparison with CEN, the MMLE-EM algorithm was used to estimate the item parameters \(\varvec{a}\) and \(\varvec{b}\), and the EAP method was used to estimate the person parameters \(\varvec{z}\). Both person and item parameters were unknown in this task, so estimation proceeded in two stages. First, MMLE-EM estimated the item parameters by integrating out the person parameters. Then, EAP estimated the person parameters conditional on the resulting item parameter estimates.
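The first stage can be sketched as a Bock–Aitkin style marginal maximum likelihood EM for the 2PL. The sketch rests on several assumptions that are not stated in the source. The ability distribution is \(N(0,1)\) on a fixed grid of quadrature nodes. Each item's logit is parameterized as \(a_j\theta + c_j\) with \(b_j=-c_j/a_j\). The M-step takes a single Newton step per item per cycle (a generalized-EM shortcut), and convergence checks are omitted. The function name `mmle_em_2pl` is hypothetical and is not the software used in the study.

```python
import numpy as np


def mmle_em_2pl(X, n_iter=100, n_nodes=41):
    """Generalized-EM sketch of MMLE for the 2PL with a N(0, 1) ability distribution.
    X is an N x M binary matrix; returns discrimination a and difficulty b."""
    N, M = X.shape
    nodes = np.linspace(-4.0, 4.0, n_nodes)
    weights = np.exp(-0.5 * nodes**2)
    weights /= weights.sum()                            # discrete N(0, 1) quadrature weights
    a, c = np.ones(M), np.zeros(M)                      # slope a and intercept c = -a * b
    design = np.column_stack([nodes, np.ones(n_nodes)])  # rows [theta_q, 1]
    for _ in range(n_iter):
        # E-step: posterior weight of each quadrature node for each respondent
        P = 1.0 / (1.0 + np.exp(-(np.outer(nodes, a) + c)))          # (Q, M)
        loglik = X @ np.log(P).T + (1.0 - X) @ np.log(1.0 - P).T      # (N, Q)
        post = np.exp(loglik - loglik.max(axis=1, keepdims=True)) * weights
        post /= post.sum(axis=1, keepdims=True)
        n_q = post.sum(axis=0)          # expected number of respondents at each node
        r_qj = post.T @ X               # expected number correct at each node, per item
        # M-step: one Newton step per item on the expected complete-data log-likelihood
        for j in range(M):
            p = P[:, j]
            grad = design.T @ (r_qj[:, j] - n_q * p)
            info = design.T @ (design * (n_q * p * (1.0 - p))[:, None])
            a[j], c[j] = np.array([a[j], c[j]]) + np.linalg.solve(info, grad)
    return a, -c / a                    # convert intercepts to difficulties
```

Integrating over the quadrature nodes is what "integrating out person parameters" amounts to here. No individual ability is estimated during calibration. The returned `a` and `b` would then be passed to an EAP step to obtain the person estimates.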

For comparison with CEN, the EAP method was used to estimate new person parameters based on the item parameters calibrated in the EvalTrain task, which were then treated as known. The MLE method was used to estimate new item parameters based on the person parameters estimated in that same task, which were likewise treated as known.
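EAP scoring with fixed item parameters can be sketched as follows. The sketch assumes a standard normal prior evaluated on an equally spaced grid of quadrature nodes, which is a common choice but not necessarily the one used in the study. The function name `eap_2pl` is hypothetical. Each estimate is the posterior mean of ability given the respondent's response pattern, and the posterior standard deviation is returned as a by-product.

```python
import numpy as np


def eap_2pl(X, a, b, n_nodes=61):
    """EAP ability estimates for binary responses X (N x M) given 2PL item
    parameters a, b (length M), using a N(0, 1) prior on a fixed grid."""
    nodes = np.linspace(-4.0, 4.0, n_nodes)
    log_prior = -0.5 * nodes**2                                 # N(0, 1), unnormalized
    P = 1.0 / (1.0 + np.exp(-a * (nodes[:, None] - b)))         # (Q, M)
    loglik = X @ np.log(P).T + (1.0 - X) @ np.log(1.0 - P).T     # (N, Q)
    log_post = loglik + log_prior
    post = np.exp(log_post - log_post.max(axis=1, keepdims=True))
    post /= post.sum(axis=1, keepdims=True)                     # normalize per respondent
    eap = post @ nodes                                          # posterior means
    psd = np.sqrt(post @ nodes**2 - eap**2)                     # posterior SDs
    return eap, psd


a = np.array([1.0, 1.5, 0.8, 1.2])
b = np.array([-1.0, 0.0, 0.5, 1.0])
X = np.array([[1, 1, 1, 1], [1, 1, 0, 0], [0, 0, 0, 0]], dtype=float)
eap, psd = eap_2pl(X, a, b)
```

In the EvalTest task described above, `a` and `b` would be the calibrated estimates from EvalTrain. For the reference EAP, they would be the actual item parameters. Because the prior shrinks estimates toward 0, all-correct and all-incorrect patterns still receive finite estimates, unlike with MLE.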

In both tasks, the EAP method, serving as the reference method, was implemented using the actual item parameters.

Because the actual person parameters were assumed to be known in this study, the MLE method was used to estimate the item parameters from the known person parameters in both the EvalTrain and EvalTest tasks.
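When person parameters are treated as known, MLE of a single 2PL item reduces to a logistic regression of the item's responses on ability. The sketch below assumes that reduction. It fits the logit \(a z + c\) by Newton–Raphson and converts the intercept to \(b=-c/a\). The function name `mle_item_2pl`, the starting values, and the tolerance are illustrative assumptions.

```python
import numpy as np


def mle_item_2pl(x, z, n_iter=50, tol=1e-10):
    """MLE of 2PL parameters (a, b) for one item, treating abilities z as known.
    Fits logit P = a * z + c by Newton-Raphson, then returns b = -c / a."""
    design = np.column_stack([z, np.ones_like(z)])
    beta = np.array([1.0, 0.0])                      # starting values for (a, c)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(design @ beta)))
        grad = design.T @ (x - p)                    # score vector
        info = design.T @ (design * (p * (1.0 - p))[:, None])  # Fisher information
        step = np.linalg.solve(info, grad)
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    a, c = beta
    return a, -c / a


rng = np.random.default_rng(2)
z = rng.normal(size=5000)
x = (rng.random(5000) < 1.0 / (1.0 + np.exp(-1.3 * (z - 0.4)))).astype(float)
a_hat, b_hat = mle_item_2pl(x, z)
```

Each item is fitted independently, so the procedure scales linearly with the number of items. The MLE does not exist when an item's responses are perfectly separated by ability (for example, when all responses are correct). A production implementation would need to detect that case.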

These results should not be regarded as a fair comparison between MLE and CEN. MLE uses the actual person parameters and performs a “fitting” to the new response patterns. CEN, which consists of the trained subnetworks, does not use that information directly and does not fit the new patterns when estimating the new item parameters. The MLE results are included for reference only, and readers should interpret them accordingly.

The third column presents the MSE and bias of the MLE/EAP methods. As expected, it shows no significant difference among the scenarios in the estimation of any parameter.
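For reference, the sketch below implements the usual definitions of bias (mean signed error) and MSE (mean squared error) between estimates and generating values. It assumes these standard definitions. The study may additionally average over replications, and the function names are hypothetical. The toy example shows why both indices are reported: errors of opposite sign can cancel in the bias while still contributing to the MSE.

```python
import numpy as np


def bias(est, true):
    """Mean signed error: positive values indicate overestimation on average."""
    return np.mean(np.asarray(est, dtype=float) - np.asarray(true, dtype=float))


def mse(est, true):
    """Mean squared error between estimates and generating values."""
    return np.mean((np.asarray(est, dtype=float) - np.asarray(true, dtype=float)) ** 2)


true_b = [0.0, 2.0, 4.0]
est_b = [1.0, 2.0, 3.0]
print(bias(est_b, true_b), mse(est_b, true_b))  # bias 0.0 (errors cancel), MSE 2/3
```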

References

Abadi, M., Barham, P., Chen, J., Chen, Z., Davis, A., Dean, J., ..., Zheng, X. (2016). TensorFlow: A system for large-scale machine learning. In: 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16) (pp. 265–283).

Abiodun, O. I., Jantan, A., Omolara, A. E., Dada, K. V., Mohamed, N. A., & Arshad, H. (2018). State-of-the-art in artificial neural network applications: A survey. Heliyon, 4(11), e00938.

Abu-Naser, S. S., Zaqout, I. S., Abu Ghosh, M., Atallah, R. R., & Alajrami, E. (2015). Predicting student performance using artificial neural network. In: The faculty of engineering and information technology.

Agrawal, P., Girshick, R., & Malik, J. (2014). Analyzing the performance of multilayer neural networks for object recognition. In: Computer Vision – ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6–12, 2014, Proceedings, Part VII (pp. 329–344).

Birnbaum, A. (1968). Some latent trait models and their use in inferring an examinee’s ability. Statistical Theories of Mental Test Scores.

Bock, R. D., & Aitkin, M. (1981). Marginal maximum likelihood estimation of item parameters: Application of an EM algorithm. Psychometrika, 46(4), 443–459.

Bock, R. D., & Lieberman, M. (1970). Fitting a response model for n dichotomously scored items. Psychometrika, 35(2), 179–197.

Briot, J.-P. (2021). From artificial neural networks to deep learning for music generation: History, concepts and trends. Neural Computing and Applications, 33(1), 39–65.

Cai, L. (2010). Metropolis–Hastings Robbins–Monro algorithm for confirmatory item factor analysis. Journal of Educational and Behavioral Statistics, 35(3), 307–335.

Chen, P., & Wang, C. (2016). A new online calibration method for multidimensional computerized adaptive testing. Psychometrika, 81, 674–701.

Cheng, S., Liu, Q., Chen, E., Huang, Z., Huang, Z., Chen, Y., ..., Hu, G. (2019). DIRT: Deep learning enhanced item response theory for cognitive diagnosis. In: Proceedings of the 28th ACM International Conference on Information and Knowledge Management (pp. 2397–2400).

Collobert, R., & Weston, J. (2008). A unified architecture for natural language processing: Deep neural networks with multitask learning. In: Proceedings of the 25th International Conference on Machine Learning (pp. 160–167).

Converse, G., Curi, M., Oliveira, S., & Templin, J. (2021). Estimation of multidimensional item response theory models with correlated latent variables using variational autoencoders. Machine Learning, 110(6), 1463–1480.

Crumbaugh, J. C., & Maholick, L. T. (1964). An experimental study in existentialism: The psychometric approach to Frankl’s concept of noogenic neurosis. Journal of Clinical Psychology, 20(2), 200–207.

Curi, M., Converse, G. A., Hajewski, J., & Oliveira, S. (2019). Interpretable variational autoencoders for cognitive models. In: 2019 International Joint Conference on Neural Networks (pp. 1–8).

Dempster, A. P., Laird, N. M., & Rubin, D. B. (1977). Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society: Series B (Methodological), 39(1), 1–22.

Elzamly, A., Hussin, B., Abu-Naser, S. S., Shibutani, T., & Doheir, M. (2017). Predicting critical cloud computing security issues using Artificial Neural Network (ANNs) algorithms in banking organizations.

Ioffe, S., & Szegedy, C. (2015). Batch normalization: Accelerating deep network training by reducing internal covariate shift. In: International Conference on Machine Learning (pp. 448–456).

Kingma, D. P., & Welling, M. (2013). Auto-encoding variational Bayes. arXiv:1312.6114.

Li, C., Ma, C., & Xu, G. (2022). Learning large Q-matrix by restricted Boltzmann machines. Psychometrika, 87(3), 1010–1041.

Liang, M., & Hu, X. (2015). Recurrent convolutional neural network for object recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 3367–3375).

Lord, F. M. (1952). A theory of test scores. Psychometric Monographs.

Lord, F. M., Novick, M. R., & Birnbaum, A. (1968). Statistical theories of mental test scores. Addison-Wesley.

Meijer, R. R., & Nering, M. L. (1999). Computerized adaptive testing: Overview and introduction. Applied Psychological Measurement, 23(3), 187–194.

Muraki, E. (1992). A generalized partial credit model: Application of an EM algorithm. ETS Research Report Series, 1992(1), i–30.

Murphy, K. P. (2012). Machine learning: A probabilistic perspective. MIT Press.

Otter, D. W., Medina, J. R., & Kalita, J. K. (2020). A survey of the usages of deep learning for natural language processing. IEEE Transactions on Neural Networks and Learning Systems, 32(2), 604–624.

Patz, R. J., & Junker, B. W. (1999). Applications and extensions of MCMC in IRT: Multiple item types, missing data, and rated responses. Journal of Educational and Behavioral Statistics, 24(4), 342–366.

Patz, R. J., & Junker, B. W. (1999). A straightforward approach to Markov chain Monte Carlo methods for item response models. Journal of Educational and Behavioral Statistics, 24(2), 146–178.

Paule-Vianez, J., Gutiérrez-Fernández, M., & Coca-Pérez, J. L. (2020). Prediction of financial distress in the Spanish banking system: An application using artificial neural networks. Applied Economic Analysis, 28(82), 69–87.

Peterson, J. C., Bourgin, D. D., Agrawal, M., Reichman, D., & Griffiths, T. L. (2021). Using large-scale experiments and machine learning to discover theories of human decision-making. Science, 372(6547), 1209–1214.

Pramerdorfer, C., & Kampel, M. (2016). Facial expression recognition using convolutional neural networks: State of the art. arXiv:1612.02903.

Rasch, G. (1960). Probabilistic models for some intelligence and attainment tests. Copenhagen: Danish Institute for Educational Research.

Rezende, D. J., Mohamed, S., & Wierstra, D. (2014). Stochastic backpropagation and approximate inference in deep generative models. In: International Conference on Machine Learning (pp. 1278–1286).

Samejima, F. (1969). Estimation of latent ability using a response pattern of graded scores. Psychometrika Monograph Supplement.

Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., & Salakhutdinov, R. (2014). Dropout: A simple way to prevent neural networks from overfitting. The Journal of Machine Learning Research, 15(1), 1929–1958.

Stocking, M. L. (1988). Scale drift in on-line calibration. ETS Research Report Series, 1988(1), i–122.

Suhara, Y., Xu, Y., & Pentland, A. (2017). DeepMood: Forecasting depressed mood based on self-reported histories via recurrent neural networks. In: Proceedings of the 26th International Conference on World Wide Web (pp. 715–724).

Szegedy, C., Toshev, A., & Erhan, D. (2013). Deep neural networks for object detection. Advances in Neural Information Processing Systems, 26.

Tsutsumi, E., Kinoshita, R., & Ueno, M. (2021). Deep item response theory as a novel test theory based on deep learning. Electronics, 10(9), 1020.

Urban, C. J., & Bauer, D. J. (2021). A deep learning algorithm for high-dimensional exploratory item factor analysis. Psychometrika, 86(1), 1–29.

van der Linden, W. J. (2016). Handbook of item response theory, volume two: Statistical tools. CRC Press.

van der Linden, W. J., & Glas, C. A. (2000). Computerized adaptive testing: Theory and practice. Springer.

Wainer, H., & Mislevy, R. J. (1990). Item response theory, item calibration, and proficiency estimation. In H. Wainer (Ed.), Computerized adaptive testing: A primer (pp. 65–102). Hillsdale, NJ: Erlbaum.

Wang, D., He, H., & Liu, D. (2017). Intelligent optimal control with critic learning for a nonlinear overhead crane system. IEEE Transactions on Industrial Informatics, 14(7), 2932–2940.

Woodruff, D. J., & Hanson, B. A. (1996). Estimation of item response models using the em algorithm for finite mixtures.

Yadav, S. S., & Jadhav, S. M. (2019). Deep convolutional neural network based medical image classification for disease diagnosis. Journal of Big Data, 6(1), 1–18.

Yeung, C.-K. (2019). Deep-IRT: Make deep learning based knowledge tracing explainable using item response theory. arXiv:1904.11738.

Young, T., Hazarika, D., Poria, S., & Cambria, E. (2018). Recent trends in deep learning based natural language processing. IEEE Computational Intelligence Magazine, 13(3), 55–75.

Funding

This work was partially supported by the National Natural Science Foundation of China (grant no. 32071092) and the Research Program Funds of the Collaborative Innovation Center of Assessment for Basic Education Quality (grant nos. 2022-01-082-BZK01 and BJZK-2021A1-20019).

Author information

Authors and Affiliations

Contributions

Longfei Zhang: conceptualization, methodology, software, formal analysis, investigation, validation, visualization, writing – original draft, writing – review and editing. Ping Chen: resources, supervision, writing – review and editing.

Corresponding author

Ethics declarations

Conflicts of interest

All authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information


Appendices

Appendix A: Reference methods for CEN

Appendix B: Parameter-estimate mappings in Simulation Study 4

The parameter-estimate mappings of CEN_1H_NL (first row) and CEN_3H_NL (second row) are illustrated under various scenarios in the test setting with \(N=1000\) and \(M=90\) (EvalTest task, Simulation Study 4). Blue points represent underestimates of the parameters, and red points represent overestimates. Straight lines in the corresponding colors connect parameter-estimate pairs whose absolute difference exceeds the threshold of 0.4.
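The coloring and line-drawing rule described in this caption can be sketched as a simple classification of parameter-estimate pairs. The function name `flag_mappings` is hypothetical. The "gray" label for exact agreement is an assumption, because the caption only defines under- and overestimation. The default threshold of 0.4 matches this figure, and the Appendix C figure uses 0.3.

```python
import numpy as np


def flag_mappings(true, est, threshold=0.4):
    """Classify parameter-estimate pairs: color by error sign, and draw a
    connecting line only when |est - true| exceeds the threshold."""
    true, est = np.asarray(true, dtype=float), np.asarray(est, dtype=float)
    diff = est - true
    # blue = underestimation, red = overestimation, gray = exact match (assumption)
    colors = np.where(diff < 0, "blue", np.where(diff > 0, "red", "gray"))
    draw_line = np.abs(diff) > threshold
    return colors, draw_line


colors, lines = flag_mappings([0.5, -1.0, 1.2, 0.0], [0.3, -0.4, 1.9, 0.0])
```

Here only the second and third pairs, with errors of about 0.6 and 0.7, would be connected by lines.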

Appendix C: Parameter-estimate mappings in Simulation Study 1

The parameter-estimate mappings of CEN_1H_NL (a) and CEN_3H_NL (b) are illustrated in the test setting with \(N=1000\) and \(M=90\) (EvalTrain task, Simulation Study 1). Note that the threshold for depicting differences is set at 0.3, which is more stringent than the threshold used in Fig. 14. Lines for the person parameters \(\varvec{z}\) are intentionally omitted to avoid visual clutter.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, L., Chen, P. A neural network paradigm for modeling psychometric data and estimating IRT model parameters: Cross estimation network. Behav Res (2024). https://doi.org/10.3758/s13428-024-02406-3


DOI: https://doi.org/10.3758/s13428-024-02406-3