Abstract

Images of emotional facial expressions are often used in emotion research, which has promoted the development of different databases. However, most of these standardized sets of images do not include images from infants under 2 years of age, which is relevant for psychology research, especially for perinatal psychology. The present study aims to validate the edited version of the Tromsø Infant Faces Database (E-TIF) in a large sample of participants. The original set of 119 pictures was edited. The pictures were cropped to remove nonrelevant information, fitted in an oval window, and converted to grayscale. Four hundred and eighty participants (72.9% women) took part in the study, rating the images on five dimensions: depicted emotion, clarity, intensity, valence, and genuineness. Valence scores were useful for discriminating between positive, negative, and neutral facial expressions. Results revealed that women were more accurate at recognizing emotions in children. Regarding parental status, parents, in comparison with nonparents, rated neutral expressions as more intense and genuine. They also rated sad, angry, disgusted, and fearful faces as less negative, and happy expressions as less positive. The editing and validation of the E-TIF database offers a useful tool for basic and experimental research in psychology.

Introduction

Processing of external stimuli is conceived as a key factor in the evolutionary success of our species (Carretié et al., 2004; Pascalis & Kelly, 2009). Cognitive studies on this topic (Öhman, 1997; Öhman et al., 2000; Sokolov, 1963) have observed that our nervous system automatically reorients its processing resources towards two kinds of important stimulation: novel stimuli (i.e., unknown or unexpected) and emotional and motivational stimuli (i.e., important for the individual, such as food, danger, partners, social hierarchy). Specifically, the processing of emotional stimuli (e.g., emotional expressions) is essential for successful social interaction (Jack & Schyns, 2015; Keltner et al., 2019), as the human processing of social behavior is based on the constant practice of attributing mental states from emotional expressions (e.g., facial, verbal) in what has been widely called Theory of Mind (Brüne & Brüne-Cohrs, 2006; Molenberghs et al., 2016). Social affective stimuli are preferentially processed by the nervous system of the human species (Eslinger et al., 2021) because the satisfaction of most human needs involves social interaction, especially in children (Brown & Brown, 2015).

Some studies found that newborns and 3-, 6-, and 9-month-old infants show attentional preference for faces over distractors (Frank et al., 2009; Johnson et al., 1991; Libertus et al., 2017), indicating the evolutionary importance of face processing in socio-cognitive and social relationship development. Indeed, problems with selecting socially relevant versus irrelevant information have been associated with worse social interactions. For instance, these problems have often been observed in individuals with autism spectrum disorder (ASD; Frazier et al., 2017; Vacas et al., 2021, 2022).

Moreover, correct attentional orienting and a realistic understanding of children's facial expressions are particularly important for parenthood. Parents experience certain psychological changes, which help them better adapt to their new roles and enhance their responsiveness to children (Zhang et al., 2020). Reciprocally, infants communicate their needs and mental states mainly through vocalizations and facial expressions, which convey salient information that elicits affection and nurturing from adults (Liszkowski, 2014). A parent’s ability to accurately comment on their infant’s mental state is pivotal for the development of a secure attachment relationship and adaptive social functioning in children (Katznelson, 2014; Meins et al., 2001).

Images of emotional facial expressions are often used in emotion research. In recent years, one of the most important and frequently used facial picture sets has been the Karolinska Directed Emotional Faces database (KDEF) developed by Lundqvist et al. (1998). The KDEF includes 490 pictures showing 70 individuals (35 women and 35 men) displaying seven different emotional expressions (happy, sad, angry, fearful, disgusted, surprised, and neutral) from five different angles. The KDEF has been used in a wide range of research topics, especially in cognitive (Blanco et al., 2021; Calvo & Lundqvist, 2008; Kuehne et al., 2019) and clinical studies (Calvo & Avero, 2005; Duque & Vázquez, 2015; Sanchez et al., 2013; Unruh et al., 2020). Nevertheless, the KDEF has some limitations, such as not including emotional expressions from different age groups. For instance, this database does not include children’s pictures, which are especially relevant in research areas such as perinatal psychology. One study found that, compared with pregnant women in a control group, depressed pregnant women disengaged their gaze faster from children's faces showing negative emotions (Pearson et al., 2010). However, this finding was not observed when negative emotional expressions from adults were displayed (Pearson et al., 2010). A recent study found that attentional biases towards infant faces are only present in expecting parents (women and men) with depressive symptoms but not in women with major depression (Bohne et al., 2021), revealing the importance of emotional self-relevant material when examining cognitive processes. However, for most of these studies, infant faces were sourced from publicly available data online due to the absence of standardized sets of emotional expressions for this purpose.

Numerous studies have found that infant stimuli, especially if they display emotional content, are prioritized in the attentional system of adults (Brosch et al., 2007; Thompson-Booth et al., 2014). This attentional preference elicits caregiving behaviors in adults which are essential for infant survival (Lorenz, 1943; Stern, 2002). Furthermore, previous research observed gender differences in the processing of children's emotional faces. Women were faster at processing both positive and negative infant faces (Proverbio et al., 2006), showed more decoding accuracy (Proverbio et al., 2007), and rated all images as more arousing and clearer than men did (Maack et al., 2017).

In addition to gender differences, some studies have addressed whether parenthood has differential effects on the processing of infant faces. For example, a review conducted by Parsons et al. (2010) reported that parents showed more arousing activity than nonparents in the reward-related mesolimbic dopamine system in response to images or videos of children. Similarly, Proverbio et al. (2006) observed that parents’ brain activity was significantly higher than that of nonparents during the processing of infant facial expressions that varied in valence and intensity.

To conduct these types of studies, several databases with facial expressions of children have been developed, for example, the Child Affective Facial Expression (CAFE) database (LoBue & Thrasher, 2015), the NIMH Child Emotional Faces Picture Set (NIMH-ChEFS) (Egger et al., 2011) or the Dartmouth Database of Children Faces (Dalrymple et al., 2013). These sets include pictures with different emotional expressions (happy, sad, angry, etc.) from children between 2 and 16 years old, but they do not contain emotional faces of infants under 2 years of age. To solve this limitation, Maack et al. (2017) created the Tromsø Infant Faces database (TIF), a standardized and freely available set containing full-color images of the emotional expressions of 18 Caucasian infants aged 4 to 12 months old. Another set of affective facial pictures of infants is the City Infant Faces Database (Webb et al., 2018), which consists of 154 facial stimuli expressed by babies aged between 0 and 12 months old. However, the use of this set is limited because the children’s parents took the pictures, and therefore, the images are too heterogeneous in terms of image quality, head position, etc.

According to some authors, to maximize the emotional salience of facial expressions, it is recommended to limit the displayed image to the facial area, removing nonrelevant information such as hair, neck, and other surrounding parts (Calvo & Lundqvist, 2008). Also, previous studies have used black and white or grayscale stimuli to reduce distracting elements such as color and brightness of the pictures (Blanco et al., 2017; Calvo et al., 2008). Following these guidelines, the main objective of this study was to edit the photographs contained in the TIF database and validate them in a large sample of participants. Following the original study (Maack et al., 2017), the images were assessed on five subjective scales: (a) depicted expression, (b) clarity, (c) intensity, (d) valence, and (e) genuineness of the expression.

Additionally, our second purpose was to explore differences in the processing of infant faces between men and women, and parents and nonparents. Therefore, considering prior findings, the following was expected:

1) Compared with men, women would show more decoding accuracy (Proverbio et al., 2007).
2) Compared with men, women will rate all types of facial expressions as clearer and more intense. No differences were expected regarding valence and genuineness (Maack et al., 2017).
3) In comparison with nonparents, parents will show greater decoding accuracy due to their life experience (Proverbio et al., 2006).
4) In comparison with nonparents, parents will rate neutral and sad expressions as clearer and more intense. No differences were expected regarding valence and genuineness (Maack et al., 2017).

Methods

Participants

A sample of 480 participants (350 women) voluntarily took part in the study after providing informed consent. Participants who rated less than 80% of the facial expressions in each block were excluded from the data analysis. No differences were found between blocks in the number of participants excluded [χ²(3) = 2.104, p = .551]. Sixty-three participants were excluded from Block 1, 53 from Block 2, 55 from Block 3, and 57 from Block 4.
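The 80% completion rule can be illustrated with a minimal sketch. The data layout (a block number plus a list of ratings, with `None` for unrated images), the participant ids, and the function names are hypothetical and are not taken from the study's materials; only the threshold and the block sizes come from the text.

```python
# Hypothetical sketch of the 80% completion rule; the data layout is assumed.
def completion_rate(ratings, block_size):
    """Share of a block's images that a participant actually rated."""
    rated = sum(1 for r in ratings if r is not None)
    return rated / block_size


def apply_exclusion(participants, block_sizes, threshold=0.80):
    """Keep participants who rated at least `threshold` of their block."""
    kept = {}
    for pid, (block, ratings) in participants.items():
        if completion_rate(ratings, block_sizes[block]) >= threshold:
            kept[pid] = (block, ratings)
    return kept


block_sizes = {1: 30, 2: 30, 3: 30, 4: 29}  # block sizes reported in the Procedure
participants = {
    "p01": (1, [3] * 30),               # rated all 30 images
    "p02": (1, [3] * 23 + [None] * 7),  # 23/30 is below the 80% cutoff
    "p03": (4, [4] * 24 + [None] * 5),  # 24/29 is above the 80% cutoff
}
kept = apply_exclusion(participants, block_sizes)
```

In this toy example only the second participant would be dropped.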

All participants were aged between 18 and 77 (mean = 40.88, SD = 14.94). Similar to prior studies (Moltó et al., 2013), the sample had a distribution ratio of women to men of 2:1. Regarding parental status, 276 participants (57.5%) reported being parents.

Sample size was calculated a priori using G*Power 3.1.9.4 for a repeated-measures analysis of variance (ANOVA) with a within-between interaction. To detect a small effect size (.15) with an alpha level of .01, four groups (women–men, parents–nonparents), seven measures (one for each type of emotional expression), a statistical power of .95, and a correlation among repeated measures of .20, G*Power indicated that a minimum sample of 196 participants would be necessary. However, since previous validation studies have used samples with over 200 participants (Grimaldos et al., 2021; Goeleven et al., 2008; Kurdi et al., 2017; Lang et al., 2008), we decided to increase the number of participants.

Materials

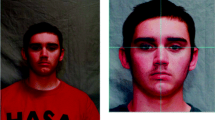

The pictures were obtained from the Tromsø Infant Faces database. We obtained permission to edit and validate the images from the corresponding author (Gerit Pfuhl) of the original version. The TIF database is composed of emotional facial expressions from 18 infants (10 girls and 8 boys) ranging in age from 4 to 12 months. For each infant there are two images of happy expressions, one of a sad expression, one of a disgusted expression, one of a neutral expression, and one depicting surprise, fear, or anger. This set of 119 original TIF pictures was edited for this study. First, following Calvo & Lundqvist (2008), the pictures were cropped to remove nonrelevant information (every child was wearing a white cap tied under her/his chin) and fitted in an oval window. Then, all images were converted to grayscale for the purpose of homogenizing visual aspects such as brightness (Fig. 1).
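As an illustration of the two editing steps (oval window and grayscale conversion), the following sketch operates on a tiny image stored as nested lists. It is not the procedure used to produce the E-TIF images: the software used is not stated here, the ITU-R BT.601 luma weights are an assumed conversion, and all function names are hypothetical.

```python
# Illustrative only: a tiny RGB "image" as nested lists, no imaging library.
def to_gray(pixel):
    """Luma from RGB using ITU-R BT.601 weights (an assumed conversion)."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def inside_oval(x, y, width, height):
    """True if the pixel lies inside the ellipse inscribed in the frame."""
    cx, cy = (width - 1) / 2, (height - 1) / 2
    a, b = width / 2, height / 2
    return ((x - cx) / a) ** 2 + ((y - cy) / b) ** 2 <= 1.0


def oval_grayscale(image, background=0):
    """Convert to grayscale and blank everything outside the oval window."""
    height, width = len(image), len(image[0])
    return [
        [to_gray(image[y][x]) if inside_oval(x, y, width, height) else background
         for x in range(width)]
        for y in range(height)
    ]


image = [[(200, 150, 100)] * 5 for _ in range(7)]  # 5 x 7 uniform test image
edited = oval_grayscale(image)
```

Pixels in the corners fall outside the inscribed ellipse and take the background value, while the central region keeps its grayscale intensity.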

Procedure

The study was set up using Qualtrics (Provo, UT, 2017) and distributed through social media. The study was launched in Spanish.

To avoid cognitive overload and fatigue, the 119 images were divided into four blocks. The first block consisted of 30 pictures and was rated by 110 participants. The second block consisted of 30 pictures and was rated by 128 participants. The third block consisted of 30 pictures and was rated by 124 participants. The fourth block consisted of 29 images and was rated by 118 participants. Images were randomly assigned to each block.
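A random split of the 119 images into blocks of 30, 30, 30, and 29 could be sketched as follows. The image identifiers, the seed, and the function name are hypothetical; the actual randomization procedure is not described beyond the block sizes.

```python
import random


def assign_blocks(image_ids, block_sizes, seed=None):
    """Shuffle image ids and cut the shuffled list into consecutive blocks."""
    if sum(block_sizes) != len(image_ids):
        raise ValueError("block sizes must add up to the number of images")
    rng = random.Random(seed)
    shuffled = list(image_ids)
    rng.shuffle(shuffled)
    blocks, start = [], 0
    for size in block_sizes:
        blocks.append(shuffled[start:start + size])
        start += size
    return blocks


image_ids = [f"img_{i:03d}" for i in range(1, 120)]  # 119 hypothetical ids
blocks = assign_blocks(image_ids, [30, 30, 30, 29], seed=1)
```

Shuffling once and slicing guarantees that every image appears in exactly one block.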

Emotion, clarity, intensity, valence, and genuineness ratings were obtained for each picture. Participants completed the survey on their computers or mobile devices. Each facial expression and the five rating scales were presented on the same screen. First, participants were asked to choose the emotion displayed by the infant (i.e., “What emotion do you think the baby is experiencing? Happy, sad, surprised, disgusted, fearful, angry, or neutral”). Then, clarity, intensity, valence, and genuineness were measured for each image (i.e., “How would you rate the emotion expressed by the baby according to the following characteristics?”). A five-point Likert scale was used, ranging from “0 = very ambiguous” to “5 = very clear” (clarity), “0 = very weak” to “5 = very strong” (intensity), “0 = very negative” to “5 = very positive” (valence), and “0 = not real” to “5 = very real” (genuineness). Each emotional expression was rated by a minimum of 109 and a maximum of 128 participants (M = 120, SD = 7.10).

The mean duration of the survey was 23 minutes. Participation was voluntary and participants did not receive any reward. The study was approved by the university ethics committee and was conducted in compliance with the Declaration of Helsinki.

Statistical analyses were performed using SPSS 25 and G*Power 3.1.9.4.

Data analysis

Descriptive statistics (mean and standard deviation) were obtained for each picture.

To analyze differences in accuracy, clarity, intensity, valence, and genuineness, we conducted one-way ANOVAs using the different emotional expressions as independent variables. Post hoc tests (Games-Howell) were used to further analyze significant differences.

To explore differences between women and men, and between parents and nonparents, linear mixed models (LMMs) were fitted using maximum likelihood estimation. LMMs cope with missing data more easily than repeated-measures ANOVA. In this dataset, because the pictures were randomly assigned to the different blocks, some participants did not rate facial expressions of every emotion and would have been excluded from a repeated-measures ANOVA. For this reason, an LMM approach was most appropriate.

We included accuracy, clarity, intensity, valence, and genuineness as the dependent variables and added emotion, gender, parental status, and multiple interactions as fixed effects. We included Participant as a random effect. Age was used as a covariate. To explore significant differences, Bonferroni-corrected post hoc tests were used.
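One plausible way to write the model for a given dependent variable is shown below. This is a sketch under assumptions rather than the exact specification that was fitted: it assumes a random intercept for each participant and a full factorial set of fixed effects, and all symbols are introduced here for illustration. The index i denotes the participant and j the emotion category; because emotion has seven levels, each coefficient involving emotion stands for a set of coefficients.

```latex
y_{ij} = \beta_0
       + \beta_{E}\,\mathrm{Emotion}_{j}
       + \beta_{G}\,\mathrm{Gender}_{i}
       + \beta_{P}\,\mathrm{Parent}_{i}
       + \beta_{EG}\,(\mathrm{Emotion}_{j} \times \mathrm{Gender}_{i})
       + \beta_{EP}\,(\mathrm{Emotion}_{j} \times \mathrm{Parent}_{i})
       + \beta_{GP}\,(\mathrm{Gender}_{i} \times \mathrm{Parent}_{i})
       + \beta_{EGP}\,(\mathrm{Emotion}_{j} \times \mathrm{Gender}_{i} \times \mathrm{Parent}_{i})
       + \beta_{A}\,\mathrm{Age}_{i}
       + u_i + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2), \quad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```

The random intercept u_i captures the correlation among ratings given by the same participant, which is why participants with incomplete sets of emotion categories can still contribute data.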

Results

Sample characteristics

Sample characteristics are summarized in Table 1. No differences in age [F(3, 479) = .189, p = .904], gender [χ²(3) = 5.958, p = .114], or parental status distributions [χ²(3) = 1.156, p = .764] were found between the four blocks. However, we found differences in age regarding gender (t(200.582) = 2.906, p = .004, g = 2.37, 95% CI = [1.54, 8.02]) and parental status (t(478) = −17.163, p < .001, d = .16, 95% CI = [−20.77, −16.50]). The mean age for women and men was 39.59 (SD = 14.04) and 44.37 (SD = 16.69) years, respectively. The mean age for parents and nonparents was 48.80 (SD = 10.91) and 30.17 (SD = 12.83) years, respectively.

Accuracy, clarity, intensity, valence, and genuineness analyses

To explore differences between the original version of the TIF and the edited version (E-TIF), accuracy rates were calculated for each picture as the proportion of participants who correctly identified the original TIF target emotion. The mean accuracy rate was 59.53% (SD = 24.63, 95% CI = [55.05, 64.00]). The one-way ANOVA reached marginal significance [F(6, 112) = 2.123, p = .056, η² = .10]; however, Bonferroni-corrected post hoc tests did not show statistical differences between different emotions in accuracy (all ps > .10). Mean accuracy rates were 69.98% (SD = 26.28, 95% CI = [60.17, 79.79]) for happy expressions, 67.15% (SD = 26.68, 95% CI = [44.85, 89.44]) for fearful expressions, 64.25% (SD = 16.70, 95% CI = [51.42, 77.09]) for angry expressions, 61.18% (SD = 24.95, 95% CI = [46.10, 76.26]) for surprised expressions, 52.94% (SD = 26.16, 95% CI = [42.37, 63.51]) for neutral expressions, 52.88% (SD = 24.29, 95% CI = [36.57, 69.20]) for disgusted expressions, and 50.70% (SD = 17.68, 95% CI = [42.86, 58.53]) for sad expressions.
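The per-picture accuracy rate described above amounts to a simple proportion. The sketch below uses made-up responses and a hypothetical function name; it only illustrates the definition.

```python
def accuracy_rate(responses, target):
    """Percentage of responses that match the picture's target emotion."""
    if not responses:
        raise ValueError("no responses for this picture")
    hits = sum(1 for r in responses if r == target)
    return 100.0 * hits / len(responses)


# Hypothetical responses from 10 raters to one picture whose TIF label is "sad".
responses = ["sad"] * 6 + ["angry"] * 3 + ["neutral"]
rate = accuracy_rate(responses, target="sad")
```

Averaging these per-picture rates within each target emotion would give emotion-level means of the kind reported above.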

Additional analyses were conducted to compare ratings of clarity, intensity, and valence (see Notes) for the overall set of images with those found in the original version. On average, images from the original version of the TIF were rated as clearer (t(118) = 8.780, p < .001, r = .62), more intense (t(118) = 10.941, p < .001, r = .71), and more positive (t(118) = 8.466, p < .001, r = .61) than the edited ones. Results are shown in Table 2.

To explore clarity, intensity, valence and genuineness of emotional expressions, the highest-rated emotion (i.e., highest percentage of agreement) was used as the valid emotion for every edited picture. Of the original 119 images, 25 expressions were rated as happy, 17 as sad, 17 as angry, 12 as surprised, 8 as disgusted, 12 as fearful, and 25 as neutral. Three expressions were similarly rated as different emotions, so we labelled these faces as ambiguous.
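The labelling rule (most frequently chosen emotion, with near-ties marked as ambiguous) could be sketched as follows. The text does not state how close two agreement rates had to be for a face to count as ambiguous, so the `min_margin` cutoff is a hypothetical parameter, as is the function name.

```python
from collections import Counter


def label_picture(responses, min_margin=0.05):
    """Return the most-chosen emotion, or "ambiguous" if the top two are close.

    `min_margin` is a hypothetical cutoff on the gap in agreement proportions.
    """
    if not responses:
        raise ValueError("no responses for this picture")
    counts = Counter(responses).most_common()
    if len(counts) == 1:
        return counts[0][0]
    (top, n_top), (_, n_second) = counts[0], counts[1]
    if (n_top - n_second) / len(responses) < min_margin:
        return "ambiguous"
    return top


clear = label_picture(["happy"] * 8 + ["surprised"] * 2)
tied = label_picture(["fearful"] * 5 + ["sad"] * 5)
```

Here the first picture is labelled by its plurality emotion, while the evenly split picture is flagged as ambiguous.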

A one-way ANOVA revealed that there were statistically significant differences in clarity [F(6, 109) = 9.489, p < .001, η² = .34], intensity [F(6, 109) = 17.561, p < .001, η² = .49], valence [F(6, 109) = 83.628, p < .001, η² = .82], and genuineness [F(6, 109) = 5.997, p < .001, η² = .25] between the different emotional expressions (Table 3). Post hoc analyses (Bonferroni corrected) revealed that neutral expressions were rated as less clear than happy, sad, angry, surprised, and fearful expressions (all ps < .009). No differences were found between neutral and disgusted expressions (p = .817).
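For a one-way between-groups ANOVA, η² can be recovered from the F statistic and its degrees of freedom through the identity η² = (F × df1) / (F × df1 + df2). The sketch below applies this identity to the F values reported above as a consistency check; the function name is hypothetical.

```python
def eta_squared(f_value, df_between, df_within):
    """Recover eta squared from a one-way ANOVA F statistic and its dfs."""
    ss_ratio = f_value * df_between  # equals SS_between / MS_within
    return ss_ratio / (ss_ratio + df_within)


reported = {  # F(6, 109) values taken from the text
    "clarity": 9.489,
    "intensity": 17.561,
    "valence": 83.628,
    "genuineness": 5.997,
}
effect_sizes = {name: eta_squared(f, 6, 109) for name, f in reported.items()}
```

Rounded to two decimals, the recovered values agree with the reported effect sizes of .34, .49, .82, and .25.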

Regarding intensity, neutral expressions were rated as less intense than the other emotional expressions (all ps < .035). However, no differences were found among the other emotional expressions (all ps > .122).

Regarding valence, post hoc analyses showed that happy faces were rated as more positive than the rest of expressions (all ps < .028). Sad expressions were evaluated as the most negative, and statistically significant differences were observed in comparison to surprised (p < .001) and neutral expressions (p < .001). No differences were observed between sad expressions and other negative emotions like disgust (p = .096), anger (p = .446) and fear (p = .999). Neutral expressions were rated as more positive than sad, angry, disgusted, and fearful expressions (all ps < .001), but no differences were found in comparison with surprised images (p = .363).

Finally, neutral expressions were rated as less genuine than happy, sad, angry, surprised, and fearful expressions (all ps < .026). Neutral pictures did not differ from the ones depicting disgust (p = .894) in genuineness. No differences were shown among other emotional expressions in genuineness (all ps > .364).

Gender and parental status differences

A linear mixed model (LMM) was fitted using accuracy as the dependent variable. Emotion, gender, parental status, and multiple interactions were added as fixed effects. A significant main effect of gender was found [F(1, 463.185) = 5.368, p = .021, η² = .011], showing that women were more accurate than men at identifying emotions across all types of expressions (Table 4). Mean accuracy ratings for women and men were 52.4% (SD = .70, 95% CI = [51.10, 53.70]) and 49.5% (SD = 1.10, 95% CI = [47.40, 51.60]), respectively. A marginally significant parental status × emotion interaction effect was found [F(6, 460.490) = 2.088, p = .053, η² = .026]. Bonferroni-corrected post hoc tests showed that parents tended to be more accurate than nonparents at identifying neutral expressions (p = .004, Table 5). No interaction effects between gender and emotion [F(6, 460.494) = 1.248, p = .281, η² = .016] or gender × parental status × emotion [F(6, 460.492) = .470, p = .830, η² = .006] were found.

The LMM for clarity showed a significant interaction between gender and emotion [F(6, 466.883) = 4.767, p < .001, η² = .057]. Post hoc analyses (Table 4) revealed that women rated disgusted (p = .003) and surprised expressions (p = .024) as clearer, while men rated expressions of anger (p = .040) as clearer. Happy, sad, fearful, and neutral expressions were similarly rated in clarity by women and men (all ps > .30).

The parental status × emotion interaction also reached significance [F(6, 466.883) = 3.359, p = .003, η² = .041]; however, Bonferroni-corrected post hoc tests did not show statistical differences between parents and nonparents in clarity (all ps > .067). The gender × parental status × emotion three-way interaction was not significant [F(6, 466.883) = .982, p = .437, η² = .005].

Regarding intensity, the interaction between gender and emotion from the LMM was significant [F(6, 479.162) = 3.447, p = .002, η² = .041]. Bonferroni-corrected post hoc tests showed that women evaluated sad (p = .001) and disgusted expressions (p = .040) as more intense in comparison with men (Table 4). Moreover, the interaction between parental status and emotion was also significant [F(6, 479.162) = 5.885, p < .001, η² = .068]. Bonferroni-corrected post hoc tests (Table 5) showed that parents, in comparison with nonparents, rated neutral expressions as more intense (p = .011) and angry faces as less intense (p = .003). The gender × parental status × emotion three-way interaction was not significant [F(6, 479.162) = 1.019, p = .412, η² = .012].

Regarding valence, the LMM showed a significant gender × emotion interaction [F(6, 455.662) = 2.699, p = .014, η² = .034]. Bonferroni-corrected post hoc tests showed that women rated happy expressions more positively (p = .030) and fearful faces more negatively (p = .029) in comparison with men (Table 4). Moreover, a significant parental status × emotion interaction was found [F(6, 455.662) = 5.694, p < .001, η² = .070]. Post hoc analyses showed that parents, in comparison with nonparents (Table 5), rated sad, angry, disgusted, fearful, and neutral expressions less negatively (all ps < .013) and happy expressions less positively (p = .001). No differences were found in surprised faces (p = .917). No significant gender × parental status × emotion three-way interaction was found [F(6, 455.662) = .869, p = .517, η² = .011].

The LMM for genuineness showed a significant main effect for gender [F(1, 480.075) = 12.453, p < .001, η² = .025]. Specifically, women rated all types of expressions as more genuine in comparison with men (Table 4). Mean genuineness ratings for women and men were 3.84 (SD = .03, 95% CI = [3.78, 3.90]) and 3.63 (SD = .05, 95% CI = [3.53, 3.73]), respectively. The gender × emotion interaction was not significant [F(6, 469.849) = 1.769, p = .104, η² = .022]. On the other hand, the parental status × emotion interaction reached significance [F(6, 469.849) = 2.679, p = .014, η² = .033]. Bonferroni-corrected post hoc tests (Table 5) showed that parents rated neutral expressions as more genuine (p = .011) in comparison with nonparents. The gender × parental status × emotion three-way interaction was not significant [F(6, 469.849) = .745, p = .613, η² = .009].

Discussion

The main objective of the present research was to edit and validate the E-TIF database in a large sample of participants. We edited and validated a total of 119 infant pictures to make them suitable for experimental research, especially in perinatal psychology. Images were edited, removing nonrelevant information, and converted to grayscale following the guidelines used in previous studies (Calvo & Lundqvist, 2008). Then, each infant face was assessed according to five dimensions: depicted emotion, clarity, intensity, valence, and genuineness. Overall, results showed that neutral expressions were less clear, intense, and genuine than emotional expressions. These results are consistent with the original version of the TIF (Maack et al., 2017). In addition, valence scores were useful to discriminate between positive, negative, and neutral facial expressions. Therefore, the E-TIF database offers a valid resource for future emotion and cognitive research.

Our second purpose was to explore differences in the processing of infant faces between men and women, and parents and nonparents. Regarding these objectives, our first hypothesis (i.e., we expected that women would show more decoding accuracy across all types of expressions) was confirmed. We found a main effect of gender that revealed that women were more accurate at recognizing emotions in children.

Regarding clarity, our second hypothesis was only partially supported. Women rated disgusted and surprised expressions as clearer compared to men. These results are in line with previous studies that found that women are better at recognizing emotions of disgust and surprise (Baptista Menezes et al., 2017), perhaps because both emotions are classified by some authors as ambiguous (Palermo & Coltheart, 2004; Pochedly et al., 2012). Similar results were obtained regarding intensity. Women rated emotions of sadness and disgust as more intense. However, when exploring valence and genuineness, unexpected results were observed: women rated happy expressions more positively, fearful faces more negatively, and all types of expressions as more genuine compared to men.

This female advantage in the processing of emotional facial expressions of infants has been explained through some evolutionary hypotheses such as the “primary caretaker hypothesis” (Babchuk et al., 1985; Baptista Menezes et al., 2017; Hampson et al., 2006; Parsons et al., 2021). These authors hypothesized that gender differences in identifying infant emotional expressions are related to selective pressures pertinent to the caretaking role. That is, the gender that has predominantly taken the responsibility of infant caretaking through evolutionary time showed better skills that are important for the survival of offspring, regardless of prior personal caretaking experience. In most mammals, this function falls on females (Trivers, 1972). On the other hand, some authors argue that these differences could be explained by gender-typed socialization experiences, where women are provided more opportunities to assume caretaking roles (Hall, 1984; Henley, 1977; Weitz, 1974).

Our third hypothesis was not confirmed. We did not observe a general greater accuracy in the recognition of emotions in the parent group. Although this result was unexpected, it is in line with the original version of the TIF (Maack et al., 2017). Previous studies found that there is a familiarity effect on the accuracy of emotion recognition, i.e., familiar faces are more likely to be correctly identified (Huynh et al., 2010; Li et al., 2019; Liccione et al., 2014). Since the TIF stimuli used in our study were unfamiliar to our participants, unfamiliarity could explain why parents did not show greater accuracy at emotion recognition in comparison with nonparents. Considering these findings, it could be hypothesized that the parents’ advantage would be visible only when the stimuli used for emotion recognition were from their own children (Ranote et al., 2004; Swain et al., 2007).

Our fourth hypothesis was only partially confirmed. We found that parents rated neutral expressions as more intense, but not sad expressions, as we hypothesized. Some authors suggest that parents’ range of intensity may be wider because they are frequently exposed to the intense emotions of their children. As a consequence, they are likely to assign lower intensity to infant emotional faces (Arteche et al., 2016). Our results were consistent with the idea of overexposure. We also found that parents, in comparison with nonparents, rated neutral expressions as more genuine. They also rated sad, angry, disgusted, and fearful faces as less negative, and happy expressions as less positive.

The edited E-TIF database has some strengths but also some limitations. Regarding strengths, we believe that the editing of the images (i.e., removing nonrelevant information and converting to grayscale) is very relevant for studies that assess attentional biases in facial emotions because nonrelevant information (e.g., hair, ears, etc.) and color variations can affect visual attentional deployment. Moreover, this editing allows researchers to use these stimuli in combination with others from similar databases, for example, the edited KDEF (Goeleven et al., 2008; Sanchez & Vazquez, 2013). Finally, a key strength of the present study was the large sample used to validate this edited version of the TIF. One of the most important limitations in the validation of emotional facial expressions is the low participation of men (Goeleven et al., 2008; Sanchez & Vazquez, 2013; Webb et al., 2018), which limits the use of stimuli in this population. In our study, the sample had a distribution ratio of women to men of 2:1, not unlike previous large studies on image validation (Grimaldos et al., 2021; Moltó et al., 2013). Moreover, the size of the sample allowed for the validation of infant facial expressions with parents, unlike previous studies that created and validated similar stimuli (Webb et al., 2018).

Some limitations need to be acknowledged. First, the edited E-TIF pictures were rated as less clear, intense, and positive than the original ones. This finding could be explained by the modifications performed on the images, suggesting that the removal of nonrelevant information and the conversion to grayscale could decrease the clarity and intensity of expressions. Nevertheless, this result could also be explained by cross-cultural differences between the Norwegian and Spanish samples. In fact, cultural variation in emotion intensity perception has been well documented (Ekman et al., 1987; Engelmann & Pogosyan, 2013; Matsumoto, 1990).

Second, the physical characteristics of the stimuli (e.g., size, resolution, and brightness) were not consistent between participants because the survey was completed on their own computers or mobile devices. While online studies facilitate participant recruitment, their main limitation is the lack of control over the conditions in which the study is carried out. Nevertheless, during the last 2 years, in which travel restrictions and lockdowns were frequent, information and communications technology (ICT) tools were the only means available for research. On the other hand, some authors consider that the use of ICT tools (mainly smartphones) for psychological research has some advantages, such as a more naturalistic approach (Wang & He, 2015).

The third limitation is that the TIF database only contains Caucasian infants, which could limit its use on non-Caucasian samples. Although there are some databases with images of infants from different ethnicities (Cheng et al., 2015), none of them include images of infants under 2 years of age from different ethnicities. Further research is necessary to develop databases that are able to close this gap.

Despite these limitations, the editing and validation of the E-TIF database offers a useful tool for basic and experimental research in psychology. Although these edited and validated images can be used in studies with different samples, perinatal psychology studies could benefit greatly from this resource. Available studies in this field (e.g., on attentional biases in perinatal depression) have used stimuli (i.e., infant faces) collected from public online sources because of the absence of standardized sets of emotional expressions for this purpose. We hope our validated edition can help researchers conduct future empirical studies to improve knowledge of perinatal depression, as well as available psychological treatments.

Availability of data and materials

The datasets generated during the current study are available in the OSF repository, https://osf.io/qm8zy/?view_only=44cd89982d6944acada30e6ae8a8eb9d. However, the edited images of the E-TIF database are not publicly available because they contain sensitive material (images of infants). They are available upon request for research purposes by e-mailing aduquesa@upsa.es.

Code availability

Not applicable

Notes

Genuineness ratings were not reported in the original version of the TIF.

References

Arteche, A. X., Vivian, F. A., Dalpiaz, B. P. Y., & Salvador-Silva, R. (2016). Effects of sex and parental status on the assessment of infant faces. Psychology and Neuroscience, 9(2), 176–187.

Babchuk, W. A., Hames, R. B., & Thompson, R. A. (1985). Sex differences in the recognition of infant facial expressions of emotion: The primary caretaker hypothesis. Ethology and Sociobiology, 6(2), 89–101.

Baptista Menezes, C., Corrêa, J., Ely, F., Flores, P., Foletto, J., & Lemos, S. J. (2017). Gender and the capacity to identify facial emotional expressions. Estudos de Psicologia, 22(1), 1–9.

Blanco, I., Nieto, I., & Vazquez, C. (2021). Emotional SNARC: Emotional faces affect the impact of number magnitude on gaze patterns. Psychological Research, 85(5), 1885–1893.

Blanco, I., Serrano-Pedraza, I., & Vazquez, C. (2017). Don’t look at my teeth when I smile: Teeth visibility in smiling faces affects emotionality ratings and gaze patterns. Emotion, 17(4), 640.

Bohne, A., Nordahl, D., Lindahl, Å. A. W., Ulvenes, P., Wang, C. E. A., & Pfuhl, G. (2021). Emotional infant face processing in women with major depression and expecting parents with depressive symptoms. Frontiers in Psychology, 12, 1–15.

Brosch, T., Sander, D., & Scherer, K. R. (2007). That baby caught my eye... attention capture by infant faces. Emotion, 7(3), 685–689.

Brown, S. L., & Brown, R. M. (2015). Connecting prosocial behavior to improved physical health: Contributions from the neurobiology of parenting. Neuroscience and Biobehavioral Reviews, 55, 1–17.

Brüne, M., & Brüne-Cohrs, U. (2006). Theory of mind: Evolution, ontogeny, brain mechanisms and psychopathology. Neuroscience and Biobehavioral Reviews, 30(4), 437–455.

Calvo, M. G., & Avero, P. (2005). Time course of attentional bias to emotional scenes in anxiety: Gaze direction and duration. Cognition & Emotion, 19(3), 433–451.

Calvo, M. G., & Lundqvist, D. (2008). Facial expressions of emotion (KDEF): Identification under different display-duration conditions. Behavior Research Methods, 40(1), 109–115.

Calvo, M. G., Nummenmaa, L., & Avero, P. (2008). Visual search of emotional faces: Eye-movement assessment of component processes. Experimental Psychology, 55(6), 359–370.

Carretié, L., Hinojosa, J. A., Martín-Loeches, M., Mercado, F., & Tapia, M. (2004). Automatic attention to emotional stimuli: Neural correlates. Human Brain Mapping, 22(4), 290–299.

Cheng, G., Zhang, D.-J., Guan, Y.-S., & Chen, Y.-H. (2015). Preliminary establishment of the standardized Chinese Infant Facial Expression of Emotion. Chinese Mental Health Journal, 29(6), 406–412.

Dalrymple, K. A., Gomez, J., & Duchaine, B. (2013). The Dartmouth database of children’s faces: Acquisition and validation of a new face stimulus set. PLoS ONE, 8(11), e79131.

Duque, A., & Vázquez, C. (2015). Double attention bias for positive and negative emotional faces in clinical depression: Evidence from an eye-tracking study. Journal of Behavior Therapy and Experimental Psychiatry, 46, 107–114.

Egger, H. L., Pine, D. S., Nelson, E., Leibenluft, E., Ernst, M., Towbin, K. E., & Angold, A. (2011). The NIMH Child Emotional Faces Picture Set (NIMH-ChEFS): A new set of children’s facial emotion stimuli. International Journal of Methods in Psychiatric Research, 20(3), 145–156.

Ekman, P., Friesen, W. V., O’Sullivan, M., Chan, A., Diacoyanni-Tarlatzis, I., Heider, K., et al. (1987). Universals and cultural differences in the judgments of facial expressions of emotion. Journal of Personality and Social Psychology, 53, 712–717.

Engelmann, J. B., & Pogosyan, M. (2013). Emotion perception across cultures: The role of cognitive mechanisms. Frontiers in Psychology, 4, 1–10.

Eslinger, P. J., Anders, S., Ballarini, T., Boutros, S., Krach, S., Mayer, A. V., Moll, J., Newton, T. L., Schroeter, M. L., Oliveira-Souza, R., Raber, J., Sullivan, G. B., Swain, J. E., Lowe, L., & Zahn, R. (2021). The neuroscience of social feelings: Mechanisms of adaptive social functioning. Neuroscience and Biobehavioral Reviews, 128, 592–620.

Frank, M. C., Vul, E., & Johnson, S. P. (2009). Development of infants’ attention to faces during the first year. Cognition, 110(2), 160–170.

Frazier, T. W., Strauss, M., Klingemier, E. W., Zetzer, E. E., Hardan, A. Y., Eng, C., & Youngstrom, E. A. (2017). A meta-analysis of gaze differences to social and nonsocial information between individuals with and without autism. Journal of the American Academy of Child and Adolescent Psychiatry, 56(7), 546–555.

Goeleven, E., De Raedt, R., Leyman, L., & Verschuere, B. (2008). The Karolinska directed emotional faces: A validation study. Cognition and Emotion, 22(6), 1094–1118.

Grimaldos, J., Duque, A., Palau-Batet, M., Pastor, M. C., Bretón-López, J., & Quero, S. (2021). Cockroaches are scarier than snakes and spiders: Validation of an affective standardized set of animal images (ASSAI). Behavior Research Methods, 53(6), 2338–2350.

Hall, J. A. (1984). Nonverbal sex differences: Communication accuracy and expressive style. Johns Hopkins University Press.

Hampson, E., van Anders, S. M., & Mullin, L. I. (2006). A female advantage in the recognition of emotional facial expressions: Test of an evolutionary hypothesis. Evolution and Human Behavior, 27(6), 401–416.

Henley, N. (1977). Body politics: Power, sex, and nonverbal communication. Prentice Hall.

Huynh, C., Vicente, G. I., & Peissig, J. (2010). The effects of familiarity on genuine emotion recognition. Journal of Vision, 10(7), 628.

Jack, R. E., & Schyns, P. G. (2015). The human face as a dynamic tool for social communication. Current Biology, 25(14), R621–R634.

Johnson, M. H., Dziurawiec, S., Ellis, H., & Morton, J. (1991). Newborns’ preferential tracking of face-like stimuli and its subsequent decline. Cognition, 40(1–2), 1–19.

Katznelson, H. (2014). Reflective functioning: A review. Clinical Psychology Review, 34(2), 107–117.

Keltner, D., Sauter, D., Tracy, J., & Cowen, A. (2019). Emotional expression: Advances in basic emotion theory. Journal of Nonverbal Behavior, 43, 133–160.

Kuehne, M., Schmidt, K., Heinze, H. J., & Zaehle, T. (2019). Modulation of emotional conflict processing by high-definition transcranial direct current stimulation (HD-TDCS). Frontiers in Behavioral Neuroscience, 13, 224.

Kurdi, B., Lozano, S., & Banaji, M. R. (2017). Introducing the open affective standardized image set (OASIS). Behavior Research Methods, 49(2), 457–470.

Lang, P. J., Bradley, M. M., & Cuthbert, B. N. (2008). International Affective Picture System (IAPS): Affective ratings of pictures and instruction manual. Technical Report A-7. The Center for Research in Psychophysiology, University of Florida.

Li, J., He, D., Zhou, L., Zhao, X., Zhao, T., Zhang, W., & He, X. (2019). The effects of facial attractiveness and familiarity on facial expression recognition. Frontiers in Psychology, 10, 2496–2508.

Libertus, K., Landa, R. J., & Haworth, J. L. (2017). Development of attention to faces during the first 3 years: Influences of stimulus type. Frontiers in Psychology, 8, 1976.

Liccione, D., Moruzzi, S., Rossi, F., Manganaro, A., Porta, M., Nugrahaningsih, N., Caserio, V., & Allegri, N. (2014). Familiarity is not notoriety: Phenomenological accounts of face recognition. Frontiers in Human Neuroscience, 8, 672–682.

Liszkowski, U. (2014). Two sources of meaning in infant communication: Preceding action contexts and act-accompanying characteristics. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, 369, 20130294.

LoBue, V., & Thrasher, C. (2015). The Child Affective Facial Expression (CAFE) set: Validity and reliability from untrained adults. Frontiers in Psychology, 5, 1532.

Lorenz, K. (1943). Die angeborenen Formen möglicher Erfahrung. Zeitschrift für Tierpsychologie, 5(2), 235–409.

Lundqvist, D., Flykt, A., & Öhman, A. (1998). The Karolinska Directed Emotional Faces—KDEF. Karolinska Institute, Department of Clinical Neuroscience, Psychology Section.

Maack, J. K., Bohne, A., Nordahl, D., Livsdatter, L., Lindahl, Å. A., Øvervoll, M., ... & Pfuhl, G. (2017). The Tromso Infant Faces Database (TIF): Development, validation and application to assess parenting experience on clarity and intensity ratings. Frontiers in Psychology, 8, 409.

Matsumoto, D. (1990). Cultural similarities and differences in display rules. Motivation and Emotion, 14, 195–214.

Meins, E., Fernyhough, C., Fradley, E., & Tuckey, M. (2001). Rethinking maternal sensitivity: Mothers’ comments on infants’ mental processes predict security of attachment at 12 months. Journal of Child Psychology and Psychiatry, and Allied Disciplines, 42(5), 637–648.

Molenberghs, P., Johnson, H., Henry, J. D., & Mattingley, J. B. (2016). Understanding the minds of others: A neuroimaging meta-analysis. Neuroscience and Biobehavioral Reviews, 65, 276–291.

Moltó, J., Segarra, P., López, R., Esteller, À., Fonfría, A., Pastor, M. C., & Poy, R. (2013). Adaptación española del "International Affective Picture System" (IAPS). Tercera parte. Anales de Psicología/Annals of Psychology, 29(3), 965–984.

Öhman, A. (1997). As fast as the blink of an eye: Evolutionary preparedness for preattentive processing of threat. In P. J. Lang, R. F. Simons, & M. Balaban (Eds.), Attention and orienting. Sensory and motivational processes (pp. 165–184). Lawrence Erlbaum Associates.

Öhman, A., Hamm, A., & Hugdahl, K. (2000). Cognition and the autonomic nervous system: Orienting, anticipation, and conditioning. In J. T. Cacioppo, L. G. Tassinary, & G. G. Berntson (Eds.), Handbook of psychophysiology (pp. 533–575). Cambridge University Press.

Palermo, R., & Coltheart, M. (2004). Photographs of facial expression: Accuracy, response times, and ratings of intensity. Behavior Research Methods, Instruments, & Computers, 36(4), 634–638.

Parsons, C. E., Young, K., Murray, L., Stein, A., & Kringelbach, M. L. (2010). The functional neuroanatomy of the evolving parent-infant relationship. Progress in Neurobiology, 91(3), 220–241.

Parsons, C. E., Nummenmaa, L., Sinerva, E., Korja, R., Kajanoja, J., Young, K. S., Karlsson, H., & Karlsson, L. (2021). Investigating the effects of perinatal status and gender on adults’ responses to infant and adult facial emotion. Emotion, 21(2), 337–349.

Pascalis, O., & Kelly, D. J. (2009). The origins of face processing in humans: Phylogeny and ontogeny. Perspectives on Psychological Science, 4(2), 200–209.

Pearson, R. M., Cooper, R. M., Penton-Voak, I. S., Lightman, S. L., & Evans, J. (2010). Depressive symptoms in early pregnancy disrupt attentional processing of infant emotion. Psychological Medicine, 40(4), 621–631.

Pochedly, J. T., Widen, S. C., & Russell, J. A. (2012). What emotion does the “facial expression of disgust” express? Emotion, 12(6), 1315–1319.

Proverbio, A. M., Brignone, V., Matarazzo, S., Del Zotto, M., & Zani, A. (2006). Gender differences in hemispheric asymmetry for face processing. BMC Neuroscience, 7, 44.

Proverbio, A. M., Matarazzo, S., Brignone, V., Del Zotto, M., & Zani, A. (2007). Processing valence and intensity of infant expressions: The roles of expertise and gender. Scandinavian Journal of Psychology, 48, 477–485.

Qualtrics. (2017). Qualtrics. UT.

Ranote, S., Elliott, R., Abel, K. M., Mitchell, R., Deakin, J. F., et al. (2004). The neural basis of maternal responsiveness to infants: An fMRI study. Neuroreport, 15, 1825–1829.

Sanchez, A., & Vazquez, C. (2013). Prototypicality and intensity of emotional faces using an anchor-point method. Spanish Journal of Psychology, 16, E7.

Sanchez, A., Vazquez, C., Marker, C., LeMoult, J., & Joormann, J. (2013). Attentional disengagement predicts stress recovery in depression: An eye tracking study. Journal of Abnormal Psychology, 122, 303–313.

Sokolov, E. N. (1963). A probabilistic model of perception. Soviet Psychology and Psychiatry, 1(2), 28–36.

Stern, D. N. (2002). The first relationship: Infant and mother. Harvard University Press.

Swain, J. E., Lorberbaum, J. P., Kose, S., & Strathearn, L. (2007). Brain basis of early parent-infant interactions: Psychology, physiology, and in vivo functional neuroimaging studies. Journal of Child Psychology and Psychiatry, 48, 262–287.

Thompson-Booth, C., Viding, E., Mayes, L. C., Rutherford, H. J., Hodsoll, S., & McCrory, E. J. (2014). Here’s looking at you, kid: Attention to infant emotional faces in mothers and non-mothers. Developmental Science, 17(1), 35–46.

Trivers, R. (1972). Parental investment and sexual selection. In B. Campbell (Ed.), Sexual selection and the descent of man (pp. 139–179). Aldine Press.

Unruh, K. E., Bodfish, J. W., & Gotham, K. O. (2020). Adults with autism and adults with depression show similar attentional biases to social-affective images. Journal of Autism and Developmental Disorders, 50(7), 2336–2347.

Vacas, J., Antolí, A., Sánchez-Raya, A., Pérez-Dueñas, C., & Cuadrado, F. (2021). Visual preference for social vs. non-social images in young children with autism spectrum disorders. An eye tracking study. PLoS ONE, 16(6), e0252795.

Vacas, J., Antolí, A., Sánchez-Raya, A., Pérez-Dueñas, C., & Cuadrado, F. (2022). Social attention and autism in early childhood: Evidence on behavioral markers based on visual scanning of emotional faces with eye-tracking methodology. Research in Autism Spectrum Disorders, 93, 101930.

Wang, W., & He, J. (2015). Smartphones: A game changer for psychological research. Journal of Ergonomics, 5, e136.

Webb, R., Ayers, S., & Endress, A. (2018). The City Infant Faces Database: A validated set of infant facial expressions. Behavior Research Methods, 50(1), 151–159.

Weitz, S. (1974). Nonverbal communication: Readings with commentary. Oxford U. Press.

Zhang, K., Rigo, P., Su, X., Wang, M., Chen, Z., Esposito, G., Putnick, D. L., Bornstein, M. H., & Du, X. (2020). Brain responses to emotional infant faces in new mothers and nulliparous women. Scientific Reports, 10(1), 9560–9570.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. This research was funded by the Ministerio de Ciencia e Innovación (Spain) (Programa Estatal I+D+I, PID2020-116030GA-I00, PGC Tipo A).

Author information

Contributions

Conception and design of the study: AD & CC

Data collection: GP & GS

Data analysis and interpretation: AD, GP & GS

Drafting the article: AD, AS & BP

Critical revision of the article: AS & CC

Final approval of the version to be published: AD, GP, GS, AS, BP & CC.

Ethics declarations

Ethics approval

The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Ethics Committee of Pontifical University of Salamanca (Acta 20/7/20).

Consent to participate

All subjects provided informed consent for inclusion before they participated in the study.

Consent for publication

Participants signed informed consent forms regarding the publication of their data.

Conflict of interest

All authors declare that they have no conflicts of interest.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Duque, A., Picado, G., Salgado, G. et al. Validation of the Edited Tromsø Infant Faces Database (E-TIF): A study on differences in the processing of children's emotional expressions. Behav Res 56, 2507–2518 (2024). https://doi.org/10.3758/s13428-023-02163-9
