Abstract

Many aspects of humans’ dynamic decision-making (DDM) behaviors have been studied with computer-simulated games called microworlds. However, most microworlds emphasize only specific elements of DDM and are inflexible in generating a variety of environments and experimental designs. Moreover, despite the ubiquity of gridworld games in Artificial Intelligence (AI) research, only a few tools exist to aid in the development of browser-based gridworld environments for studying the dynamics of human decision-making behavior. To address these issues, we introduce Minimap, a dynamic interactive game for examining DDM in search and rescue missions, which incorporates all the essential characteristics of DDM and offers a wide range of flexibility regarding experimental setups and the creation of experimental scenarios. Specifically, Minimap allows customization of dynamics, complexity, opaqueness, and dynamic complexity when designing a DDM task. Minimap also enables researchers to visualize and replay recorded human trajectories for the analysis of human behavior. To demonstrate the utility of Minimap, we present a behavioral experiment that examines the impact of different degrees of structural complexity, coupled with the opaqueness of the environment, on human decision-making performance under time constraints. We discuss the potential applications of Minimap in improving productivity and enabling transparent replications in research on human behavior and human-AI teaming. Minimap is open source and freely available at https://github.com/DDM-Lab/MinimapInteractiveDDMGame.

Notes

Demo of the game: http://janus.hss.cmu.edu:5701/demo/

FastAPI: a modern, high-performance web framework for building APIs with Python 3.6+ (https://fastapi.tiangolo.com/).

jQuery: a JavaScript library designed to simplify client-side manipulation of an HTML page (https://jquery.com/).

p5.js: a JavaScript library providing drawing functionality on the HTML5 canvas element (https://p5js.org/).

Socket.IO client library (https://socket.io/).

https://osf.io/5gmsc/?view_only=b7b13bcae1da448e8c3a5d58ad976e34. As specified in the preregistration, we also manipulated an additional level of structural complexity, in which five extra blocks were added and critical victims were distributed across ten rooms. Because no results in this condition stood out compared with the complex setting, we do not report them in this work.

Multiplayer version of Minimap: https://github.com/DDM-Lab/team-minimap-ted

Acknowledgements

This research was sponsored by the Defense Advanced Research Projects Agency and the Army Research Office and was accomplished under Grant Number W911NF-20-1-0006 (DARPA-ASIST program).

Appendix

A Minimap: Generic instructions

B Customizing experiments with Minimap

This section is an introductory tutorial on setting up an experiment with Minimap, and it describes the web service API methods available in the current version of Minimap.

B.1 Preparation

Running the Minimap server requires a MySQL database.

- Install MySQL for your operating system (Windows, macOS, or Linux).

- Check that MySQL is installed: open a terminal or a command prompt and run:

- To connect to the MySQL server, run:

  Here, user is the user name of your MySQL account; substitute values appropriate for your setup. MySQL will then prompt for the corresponding password.

- From the mysql prompt, create a database:

- In a command shell, import the SQL script named script.sql provided in the project folder:
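For readers who prefer to script the database setup, the following is a minimal Python sketch that wraps the standard mysql command-line client. It assumes the client is on the PATH; the function names, the user name, and the database name are hypothetical placeholders, since the exact commands of the original tutorial are not reproduced here.

```python
import shutil
import subprocess
from pathlib import Path


def mysql_available() -> bool:
    """Return True if the `mysql` command-line client is on the PATH."""
    return shutil.which("mysql") is not None


def import_command(user: str, database: str) -> list[str]:
    """Build `mysql -u <user> -p <database>`; -p makes mysql prompt for the password."""
    return ["mysql", "-u", user, "-p", database]


def import_script(user: str, database: str, script: Path) -> None:
    """Feed an SQL file to the client, like `mysql -u user -p db < script.sql`."""
    with script.open("rb") as sql_file:
        # check=True raises CalledProcessError if the import fails.
        subprocess.run(import_command(user, database), stdin=sql_file, check=True)
```

For example, `import_script("user", "minimap_db", Path("script.sql"))` would import the provided script into a (hypothetically named) `minimap_db` database; the password prompt still appears in the terminal.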

B.2 Installation/Quick start

-

In a command shell, go to the main folder that contains the requirements.txt file.

-

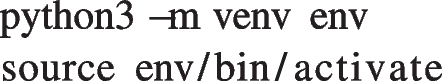

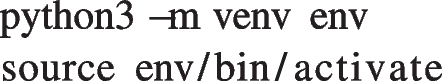

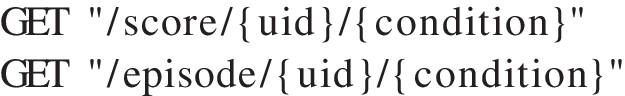

Create a virtual Python Environment is recommended by running the following commands in your shell. (This may be different on your machine. If it does not work, look at how to install a virtual python env based on your system):

-

Install the required python libraries by running this command in your shell:

-

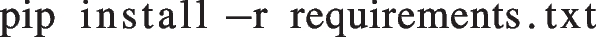

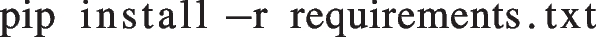

In the file mission/db.py, update the database configuration according to the database setup in the local machine, as in the following example:

-

To start the server, run:

-

While the server is running, navigate to: http://0.0.0.0:5700

-

To stop the server, run Ctrl+C
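The contents of mission/db.py are not reproduced here. As a hedged illustration only, a FastAPI project often keeps its MySQL connection settings in one place and builds a SQLAlchemy-style connection URL from them. The class name `DBSettings`, the `pymysql` driver in the URL, and the field names below are assumptions, not Minimap's actual code.

```python
from dataclasses import dataclass
from urllib.parse import quote_plus


@dataclass(frozen=True)
class DBSettings:
    """Hypothetical container for the values one edits in mission/db.py."""
    user: str
    password: str
    database: str
    host: str = "localhost"
    port: int = 3306  # MySQL's default port

    def url(self) -> str:
        # Percent-encode credentials so characters such as '@' or '/' stay URL-safe.
        return (
            f"mysql+pymysql://{quote_plus(self.user)}:{quote_plus(self.password)}"
            f"@{self.host}:{self.port}/{self.database}"
        )
```

Keeping host, port, user, password, and database name together makes it easy to check that they match the MySQL setup from B.1 before starting the server.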

B.3 Running an experiment with minimap

-

After the server is started, launch the default search and rescue mission by connecting to:

The port number can be configured in the server.py file.

-

To check the replay interface, visit:

-

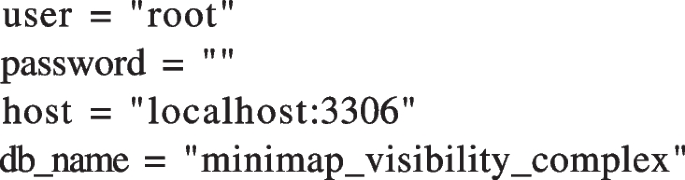

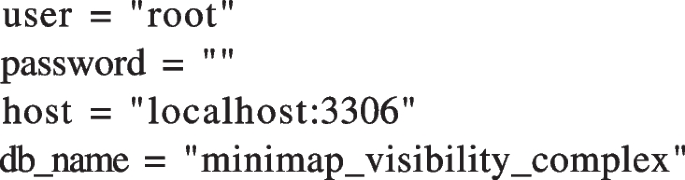

Web service APIs for getting information about the playing score and the current game episode:
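The exact endpoint URLs are not reproduced here, so the sketch below uses hypothetical paths (e.g., `score`) and a hypothetical query parameter (`uid`). It only illustrates how an analysis script could query a JSON web service on the local Minimap server with Python's standard library; port 5700 follows B.2.

```python
import json
from urllib.parse import urlencode, urljoin
from urllib.request import urlopen

# A server bound to 0.0.0.0 on this machine is reachable via localhost.
BASE_URL = "http://localhost:5700/"


def api_url(path: str, **params: str) -> str:
    """Join a (hypothetical) endpoint path onto the base URL, adding a query string."""
    url = urljoin(BASE_URL, path.lstrip("/"))
    return f"{url}?{urlencode(params)}" if params else url


def get_json(path: str, timeout: float = 5.0, **params: str):
    """GET an endpoint and decode its JSON body; raises URLError if the server is down."""
    with urlopen(api_url(path, **params), timeout=timeout) as response:
        return json.load(response)
```

With the server running, a call such as `get_json("score", uid="42")` would return the decoded response, provided the real endpoint returns JSON.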

B.4 Directory structure of an experiment

The experiment folder called mission contains the following fundamental sub-folders and files:

The mission/static folder contains all the static files the game serves. In particular, the mission/static/data folder includes all the base and newly created map template designs, while the mission/static/js folder comprises all the files defining the behavior of the game. The mission/templates folder contains all the HTML files that define the game’s interface.

The database configuration is declared in the mission/db.py file, while the mission/main.py file controls the web service APIs available for use. Importantly, as described earlier, all essential DDM features are fully customizable in Minimap. One can configure the experiment settings by changing the appropriate values in the mission/config.json file.
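Before launching a modified experiment, it can help to confirm that the mission folder still has the layout described above. The following is a minimal sketch that checks only the paths named in this section; the full directory tree in the original may contain more, and `missing_paths` is a hypothetical helper.

```python
from __future__ import annotations

from pathlib import Path

# Entries named in the text of B.4 (relative to the mission folder).
EXPECTED = [
    "static",
    "static/data",
    "static/js",
    "templates",
    "db.py",
    "main.py",
    "config.json",
]


def missing_paths(mission_dir: str | Path) -> list[str]:
    """Return the expected entries that are absent from a mission folder."""
    root = Path(mission_dir)
    return [rel for rel in EXPECTED if not (root / rel).exists()]
```

For example, `missing_paths("mission")` returns an empty list when every listed entry is present.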

B.5 User modifiable configuration parameters

We now describe how experimenters can customize the game settings through the Minimap configuration to meet the requirements of their study.

The experiment settings are specified in the file mission/config.json. This file contains the variables needed to define the functioning of the experiment as follows:

Condition Name

Because an experiment is likely to be run under multiple conditions, the parameter “cond_name” is used to identify each experimental condition. One can change the default condition name; a short, descriptive name is recommended.

Interface Modification

By default, Minimap features the environment illustrated in Fig. 1, but the environment design is customizable. To create different environments, one can customize the Minimap layouts, including the location and number of objects on the map (e.g., victims, walls, obstacles, and doors). This requires no specialized technical knowledge: modify the base map designed in the Excel file (mission/static/map_design.xlsx) and then save it as a CSV file. Next, specify in the config.json file that the newly designed layout is used for the game (“load_new_map”: 1; 0 otherwise), together with the name of the new map CSV file (“map_file”: “map_design.csv”). The width of each tile (cell) in the gridworld is set by the variable “tile_width”; one can increase or decrease this value to adjust the size of the map. Detailed instructions on how to modify a task interface are presented in B.7.
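To make the CSV step concrete, here is a minimal sketch that reads an exported map and computes the resulting drawing area. It assumes each CSV cell holds one integer object code (as listed in Table 3) and that “tile_width” is measured in pixels; both are assumptions, and the function names are hypothetical. Cells left empty in Excel would need a default code, which this sketch does not guess.

```python
import csv
from collections.abc import Iterable


def load_map(lines: Iterable[str]) -> list[list[int]]:
    """Parse a map exported from Excel as CSV: one row per grid row, one code per cell."""
    grid = [[int(cell) for cell in row] for row in csv.reader(lines) if row]
    widths = {len(row) for row in grid}
    if len(widths) > 1:
        raise ValueError(f"ragged map: row lengths {sorted(widths)}")
    return grid


def canvas_size(grid: list[list[int]], tile_width: int) -> tuple[int, int]:
    """(width, height) of the drawing area if every cell is tile_width pixels square."""
    return len(grid[0]) * tile_width, len(grid) * tile_width
```

Usage: `with open("mission/static/map_design.csv", newline="", encoding="utf-8-sig") as f: grid = load_map(f)`. The `utf-8-sig` encoding strips the byte-order mark that Excel may write at the start of a UTF-8 CSV file.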

Time Constraints

The variable “game_duration” controls the duration of playing time within an episode. The value is in seconds, so for a 5-minute mission, the duration is set to 300.

Complexity

Minimap provides two levels of structural complexity: simple and complex. For example, setting “complexity”: “complex” in the configuration file makes the game environment complex. Structural complexity is determined by the number of obstacles and the arrangement of critical victims (see Fig. 2 and “Complexity” for more details). One can freely create new maps with different layouts and numbers of objects by following the steps described under Interface Modification.

Visibility

Minimap includes three levels of visibility, namely field of view (fov), map view (map), and full view (full). The degree of visibility is specified by “visibility” in the config.json file. With the field of view, the individual can observe only the part of the environment within their restricted vision area. With the map view, they can additionally see the overall structure of the map beyond their vision area (see Fig. 3 for more details). Finally, the full view gives the player a complete picture of the environment, including where the victims are.

Time Victims Die

The time at which victims die in the search and rescue task can be varied through the variable “victim_die”. For example, with “victim_die”: 120, critical victims die (i.e., disappear from the building) two minutes (120 seconds) into the game.

Delayed Time

The delay in the individual’s actions, associated with dynamic complexity, is integrated into Minimap through the variable “delayed_time”, measured in seconds. The affected actions are victim saving and door opening. For example, with a delay of one second (“delayed_time”: 1), after the player executes an action to rescue a victim, it takes one second for the action to take effect. With a delayed time of zero, actions take effect immediately.
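The effect of “delayed_time” can be pictured as a queue of pending actions, each due a fixed number of seconds after the key press. The following Python sketch illustrates that idea only; Minimap's actual game logic lives in the JavaScript files under mission/static/js, and the class and action names here are hypothetical.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class PendingAction:
    due: float                        # game time (s) at which the action takes effect
    name: str = field(compare=False)  # e.g., "save_victim" or "open_door"


class DelayedActions:
    """Schedule actions so they take effect `delayed_time` seconds after the key press."""

    def __init__(self, delayed_time: float) -> None:
        self.delayed_time = delayed_time
        self._queue: list[PendingAction] = []

    def request(self, name: str, now: float) -> None:
        heapq.heappush(self._queue, PendingAction(now + self.delayed_time, name))

    def due(self, now: float) -> list[str]:
        """Pop and return every action whose delay has elapsed by time `now`."""
        ready = []
        while self._queue and self._queue[0].due <= now:
            ready.append(heapq.heappop(self._queue).name)
        return ready
```

With `DelayedActions(1.0)`, a rescue requested at 10.0 s is still pending at 10.5 s and takes effect at 11.0 s; with a delay of zero, it takes effect in the same step it is requested.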

Perturbation Time

The variable “perturbation_time” controls when, in seconds, a perturbation (a sudden change within an episode) occurs. For example, if in a 5-minute mission the perturbation should take place after 2 minutes of gameplay, the value is set to 120.

Perturbation Map

The variable “perturbation_map” specifies the file associated with the new scenario layout once a perturbation occurs. The file is in CSV format as in the map design file.
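Taken together, “game_duration”, “perturbation_time”, “perturbation_map”, and “victim_die” define a timeline within each episode. The sketch below shows which timed events would apply at a given elapsed time; it is illustrative only, and the choice of inclusive boundaries (an event applies from exactly its configured second) is an assumption.

```python
from typing import Any


def episode_state(elapsed: float, cfg: dict[str, Any]) -> dict[str, Any]:
    """Which timed events apply `elapsed` seconds into an episode (illustrative only)."""
    perturbed = elapsed >= cfg["perturbation_time"]
    return {
        "over": elapsed >= cfg["game_duration"],
        # After the perturbation, the layout switches to the perturbation map.
        "map": cfg["perturbation_map"] if perturbed else cfg["map_file"],
        # Which victims disappear depends on the scenario (e.g., critical ones).
        "victims_expired": elapsed >= cfg["victim_die"],
    }
```

For the 5-minute example above (perturbation and victim death both at 120 s), the state changes once, at the two-minute mark, and the episode ends at 300 s.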

Number of Playing Episodes

The number of times an individual performs the task is also configurable. The variable “max_episode” in the config.json file controls the number of playing episodes (the default is “max_episode”: 8). This feature allows experimenters to have participants play the task repeatedly to examine the human learning process.
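The parameters above can be collected into a single config.json. The following sketch shows an illustrative configuration together with a sanity check. Values stated in this section are used where available; “cond_name”, “tile_width”, and “perturbation_map” are hypothetical placeholders. The check treats every parameter described here as required, which may be stricter than Minimap itself, and its range checks are conveniences rather than rules imposed by Minimap.

```python
import json
from pathlib import Path

EXAMPLE_CONFIG = {
    "cond_name": "example",                         # placeholder name
    "load_new_map": 1,
    "map_file": "map_design.csv",
    "tile_width": 15,                               # placeholder value
    "game_duration": 300,                           # 5-minute mission
    "complexity": "complex",
    "visibility": "full",
    "victim_die": 120,
    "delayed_time": 1,
    "perturbation_time": 120,
    "perturbation_map": "map_perturbation.csv",     # placeholder file name
    "max_episode": 8,
}


def validate(cfg: dict) -> list[str]:
    """Return human-readable problems; an empty list means the config looks sane."""
    problems = [f"missing key: {k}" for k in EXAMPLE_CONFIG if k not in cfg]
    if problems:
        return problems
    if cfg["complexity"] not in {"simple", "complex"}:
        problems.append("complexity must be 'simple' or 'complex'")
    if cfg["visibility"] not in {"fov", "map", "full"}:
        problems.append("visibility must be 'fov', 'map' or 'full'")
    if cfg["load_new_map"] not in (0, 1):
        problems.append("load_new_map must be 0 or 1")
    for key in ("victim_die", "perturbation_time"):
        if not 0 <= cfg[key] <= cfg["game_duration"]:
            problems.append(f"{key} should fall within game_duration")
    if cfg["delayed_time"] < 0:
        problems.append("delayed_time must be non-negative")
    if not (isinstance(cfg["max_episode"], int) and cfg["max_episode"] >= 1):
        problems.append("max_episode must be a positive integer")
    return problems


def load_config(path: Path) -> dict:
    """Read config.json and refuse to continue if validation reports problems."""
    cfg = json.loads(path.read_text(encoding="utf-8"))
    problems = validate(cfg)
    if problems:
        raise ValueError("; ".join(problems))
    return cfg
```

For example, `load_config(Path("mission/config.json"))` either returns the parsed settings or raises an error that lists every problem at once.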

B.6 Reproducing the experiments on complexity and opaqueness with Minimap

To reproduce each experimental condition described in “Proof of concept: Experiment on complexity and opaqueness with minimap”, one can configure the corresponding parameters in the config.json file in the mission folder as follows.

Condition 1. Simple FoV:

Condition 2. Simple Map:

Condition 3. Complex FoV:

Condition 4. Complex Map:

Start the server and launch the new task scenario by connecting to:
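The four conditions form a 2 × 2 design (simple or complex structure, field of view or map view). The exact parameter listings for each condition are not reproduced here, so the sketch below varies only “complexity” and “visibility” on top of a base configuration; any other settings (e.g., game duration) must be taken from the experiment description. The condition names and helper functions are hypothetical.

```python
import itertools
import json
from pathlib import Path


def condition_configs(base: dict) -> dict[str, dict]:
    """Derive the four complexity x visibility conditions from a base config."""
    configs = {}
    for complexity, visibility in itertools.product(("simple", "complex"), ("fov", "map")):
        name = f"{complexity}_{visibility}"  # hypothetical naming scheme
        configs[name] = {**base, "cond_name": name,
                         "complexity": complexity, "visibility": visibility}
    return configs


def write_condition(base: dict, name: str, mission_dir: Path) -> None:
    """Overwrite mission/config.json with one condition before starting the server."""
    cfg = condition_configs(base)[name]
    (mission_dir / "config.json").write_text(json.dumps(cfg, indent=2), encoding="utf-8")
```

For example, `write_condition(base, "complex_fov", Path("mission"))` prepares the server for Condition 3.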

B.7 An example of creating a task scenario

This section provides a step-by-step guide to creating a task scenario in Minimap. Suppose that in this new scenario, a player is instructed to navigate a \(19 \times 19\) environment to save three types of victims: critical (red), serious (yellow), and minor (green). The player plays 10 episodes of a 3-minute game. A perturbation occurs after one minute of the game, which entails the sudden appearance of rubble and an increased number of serious and critical victims. The serious and critical victims vanish when two minutes have elapsed.

B.7.1 Step 1: Creating the environment layout

We create a map design file in Excel (e.g., “map_design_c.xlsx”, as illustrated in Fig. 10a). We then save it as a CSV file (“map_design_c.csv”) and place it in the folder mission/static/ (see Fig. 10b).

Table 3 lists the code number of each object in the environment, together with its associated action and reward. The number of keystrokes required to obtain each object and the reward for doing so are defined in the columns “Press” and “Reward”, respectively, and both can be adjusted easily. For instance, to change the number of key presses needed to save green victims and the reward received for saving them, change the values of the “Press” and “Reward” columns in the third row of the table.
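The interaction between the “Press” and “Reward” columns can be sketched as a per-object press counter: the reward is granted on the press that reaches the required count. The numbers below are hypothetical placeholders because Table 3 is not reproduced here, and the class names are illustrative rather than Minimap's own.

```python
from dataclasses import dataclass


@dataclass
class ObjectRule:
    press: int   # key presses needed (column "Press")
    reward: int  # points granted on completion (column "Reward")


# Hypothetical values for illustration only; use the numbers from Table 3.
RULES = {
    "green_victim": ObjectRule(press=5, reward=10),
    "yellow_victim": ObjectRule(press=10, reward=30),
}


class Rescue:
    """Counts key presses on one object and reports the reward once it is obtained."""

    def __init__(self, rule: ObjectRule) -> None:
        self.rule = rule
        self.presses = 0

    def press(self) -> int:
        """Register one key press; return the reward on the completing press, else 0."""
        if self.presses >= self.rule.press:
            return 0  # already obtained; further presses earn nothing
        self.presses += 1
        return self.rule.reward if self.presses == self.rule.press else 0
```

Raising “Press” for an object makes it slower to obtain without changing its value, which is one way to vary task difficulty independently of the reward structure.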

B.7.2 Step 2: Adding perturbations

We can intensify the task dynamics with a sudden perturbation in the middle of gameplay (within an episode). To do so, we design in Excel a new setting that takes effect when the perturbation happens: when one minute elapses, the player suddenly experiences the appearance of rubble and an increase in critical and serious victims. We name the file, e.g., “map_perturbation_c.xlsx”, as illustrated in Fig. 11a. We also save the design file in CSV format and place it in the folder mission/static/ (see Fig. 11b).

B.7.3 Step 3: Configuring experiment parameters

For the task described above, we adjust the file config.json in the folder mission as follows:

Since we use the newly designed interface for the task, the “load_new_map” variable is set to 1 (true). We also increase the value of the “tile_width” variable to 20 to expand the size of the grid environment. By default, the visibility (opaqueness) is set to full view (“full”). We can set the variable “visibility” to “fov” or “map” to display the task with the field of view or the map structure, respectively (Fig. 12).
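The scenario in B.7 translates into a handful of configuration values, and the arithmetic is easy to get wrong when converting minutes to seconds. The sketch below collects the values stated in this section; “cond_name” is a hypothetical placeholder, “map_perturbation_c.csv” assumes the CSV keeps the Excel file's base name, and keys not mentioned here (e.g., “complexity”, “delayed_time”) are assumed to keep their existing values in config.json.

```python
import json

# Values from the B.7 scenario; "cond_name" is a hypothetical placeholder.
scenario = {
    "cond_name": "scenario_c",
    "load_new_map": 1,
    "map_file": "map_design_c.csv",
    "perturbation_map": "map_perturbation_c.csv",
    "tile_width": 20,
    "visibility": "full",
    "game_duration": 3 * 60,      # 3-minute episodes: 180 s
    "perturbation_time": 1 * 60,  # rubble and extra victims appear after 60 s
    "victim_die": 2 * 60,         # critical and serious victims vanish after 120 s
    "max_episode": 10,
}

# If tile_width is in pixels (an assumption), a 19 x 19 map spans 380 x 380 pixels.
GRID_SIZE = 19
canvas_px = GRID_SIZE * scenario["tile_width"]


def apply_scenario(existing: dict) -> dict:
    """Overlay the scenario values on an existing config, keeping all other keys."""
    return {**existing, **scenario}


config_text = json.dumps(scenario, indent=2)  # text one could merge into config.json
```

Merging rather than replacing the file keeps any settings the scenario does not mention unchanged.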

B.7.4 Step 4: Running the task

We start the server by typing:

Once the server is started, launch the new task scenario by connecting to:

The interface of the new task with the full view and map view is illustrated in Fig. 13.

Fig. 13a illustrates the environment when the disruption happens (i.e., rubble appears, and the number of critical (red) and serious (yellow) victims grows). Figure 13b shows the setting after the time in “victim_die” variable elapses (i.e., the critical and serious victims disappear).

B.7.5 Step 5: Replaying recorded data files

We can replay recorded data files by entering:

Then select the data file to display the playback illustrated in Fig. 14.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Nguyen, T.N., Gonzalez, C. Minimap: An interactive dynamic decision making game for search and rescue missions. Behav Res 56, 2311–2332 (2024). https://doi.org/10.3758/s13428-023-02149-7

DOI: https://doi.org/10.3758/s13428-023-02149-7