Abstract

Virtual reality (VR) has been shown to be a potential research tool, yet the gap between traditional and VR behavioral experiment systems poses a challenge for many behavioral researchers. To address the challenge posed, the present study first adopted a modularity design strategy and proposed a five-module architectural framework for a VR behavioral experiment system that aimed to reduce complexity and costs of development. Applying the five-module architectural framework, the present study developed the SkyrimVR-based behavioral experimental framework (SkyBXF) module, a basic experimental framework module that adopted and integrated the classic human behavior experiment structure (i.e., session-block-trial model) with the modifiable VR massive gaming franchise The Elder Scrolls V: Skyrim VR. A modified version of previous behavioral research to investigate the effects of masked peripheral vision on visually-induced motion sickness in an immersive virtual environment was conducted as a proof of concept to showcase the feasibility of the proposed five-module architectural framework and the SkyBXF module developed. Behavioral data acquired through the case study were consistent with those from previous behavioral research. This indicates the viability of the proposed five-module architectural framework and the SkyBXF module developed, and provides proof that future behavioral researchers with minimal programming proficiency, 3D environment development expertise, time, personnel, and resources may reuse ready-to-go resources and behavioral experiment templates offered by SkyBXF to swiftly establish realistic virtual worlds that can be further customized for experimental need on the go.

Virtual reality (VR) has enormous potential as a research tool and has steadily made its way into mainstream psychology over the last two decades (Loomis et al., 1999). As a research tool, VR can present stimuli that are not readily available or easily presented in natural contexts, and researchers can manipulate virtual environments to perform in ways that natural environments do not (Bohil et al., 2011). It can also improve the reproducibility of psychological research because code-defined virtual environments can be repeated with greater precision than traditional laboratory surroundings (Pan & Hamilton, 2018). Hence, VR is a unique tool that enables effective experimental control, high ecological validity, and repeatability, and thus has a unique appeal in the field of experimental psychology (Casasanto & Jasmin, 2018; de la Rosa & Breidt, 2018; Kourtesis et al., 2020; Pan & Hamilton, 2018; Peeters, 2019).

Although VR has been shown to be a research tool with great potential, the significant gap between traditional and VR behavioral experiment systems makes it challenging to design VR behavioral experiments in the same manner that conventional behavioral experiments are designed. In psychological research, conventional psychological experimental software is frequently employed to present different stimuli to participants and record behavioral data, such as accuracy, reaction times, behavioral responses, and so on (Garaizar & Vadillo, 2014; Mathôt et al., 2012). For instance, commercial psychological experimental software tools such as E-Prime, DirectRT, Inquisit, and SuperLab are available (Stahl, 2006), as are open-source or open-access software tools such as DMDX (Forster & Forster, 2003; Garaizar & Reips, 2015), Psychology Experiment Building Language (PEBL) (Mueller & Piper, 2014), Psychophysics Toolbox (Brainard & Vision, 1997), and PsychoPy (Peirce et al., 2019). To bridge the gap between traditional and VR behavioral experiment systems, it is essential to implement core functions/features of previously successful psychological experimental software into current VR behavioral experiment systems. Furthermore, numerous challenges must be acknowledged and addressed.

VR behavioral experiment system architecture

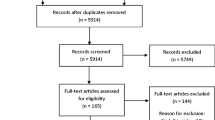

The numerous distinctions between conventional and VR behavioral experiments have created a huge gap for those interested in conducting or implementing a VR behavioral experiment. Building realistic virtual environments remains a complicated, expensive, and time-consuming process for many research groups (Trenholme & Smith, 2008). To reduce the complexity and costs of designing and creating a VR behavioral experiment, researchers should adopt a modularity design strategy that takes full advantage of the various mature components and applications that are already available on the market to build VR behavioral experiment systems, thereby reducing the required workload and increasing software reusability (Vasawade et al., 2015). The present study merged the typical architectural framework for game engines (Gregory, 2018; Zarrad, 2018) and human behavioral experiments (Brookes et al., 2020) to propose the following architectural framework for a VR behavioral experiment system (see Fig. 1). Based on the proposed architectural framework, the present study introduces the five fundamental modules (Hardware module, Interface module, 3D Engine module, Experimental Content module, and Experimental Framework module) required for a VR behavioral experiment system and briefly discusses new findings, as well as existing solutions for each module, below. Finally, the current study tested the proposed VR behavioral experiment system architecture by creating the SkyrimVR-based behavioral experimental framework (hereafter referred to as SkyBXF), an experimental framework module built upon a modifiable VR massive gaming franchise that aims to provide future behavioral researchers with ready-to-go templates to establish realistic virtual worlds that can be further customized for experimental need.
Furthermore, we employed SkyBXF to improve the data collection procedure of a VR behavioral experiment in a previous study (Liu & Chen, 2019) as a proof of concept to showcase the feasibility of the proposed VR behavioral experiment system architecture.

Hardware modules

Hardware modules include VR displays that render virtual scenes and motion tracking systems that continuously track the user’s body position and head movements in order to update the camera in the virtual environment and change the user’s point of view. They also include VR-ready computer hardware such as processors, graphics cards, and other components required for three-dimensional (3D) rendering and VR displays. Over the last decade, a new generation of VR head-mounted displays (HMDs) has started to gain popularity, such as the HTC Vive and Oculus Rift for PC VR, as well as Cardboard and Gear VR for mobile VR (Tynes & Seo, 2019). While cost-effective HMD devices for mobile VR exist, high-end PC-based HMDs such as HTC Vive (Niehorster et al., 2017) and Oculus Rift (Chessa et al., 2019) integrate VR displays and highly accurate real-time motion tracking, as well as six degrees of freedom (DoF) controllers, to provide a high level of immersion and interactivity (Kourtesis et al., 2020), and have been evaluated/validated as suitable for research purposes. Thus, both HTC Vive and Oculus Rift can serve as excellent hardware platforms for developing VR behavioral experiments.

Interface modules

Interface modules are a collection of application programming interfaces (APIs), including both device-oriented interfaces (device layer) and software-oriented interfaces (application interface). For experimenters developing VR behavioral experiments, Interface module APIs are the interfaces between the VR application runtime and the system and hardware for executing necessary tasks such as accessing the controller status, obtaining the current user’s tracking location, and so on.

Previously, various VR devices, development environments, and runtime systems required a complex assortment of APIs to facilitate communication between interfaces, significantly increasing development difficulty. The recent emergence of OpenXR (Khronos Group, 2019) unified numerous technical standard systems, achieving broad cross-platform application and device interoperability across VR, augmented reality (AR), and mixed reality (MR). OpenXR, which is now supported by major VR hardware manufacturers and development engines, significantly alleviates the issue of idiosyncrasies between underlying VR devices, development environments, and runtime systems, thereby reducing development difficulty.

3D engine module

The 3D Engine module contains a collection of essential components for creating virtual environments, such as the creation and rendering of virtual objects, virtual world collision and physics engines, and 3D audio. Additionally, it serves as a hub for other modules, such as handling computer graphics processing units, receiving motion tracking data, and updating and re-rendering the virtual scene’s state.

A wide range of software packages is available for the 3D Engine module, including currently popular gaming engines such as Unity3D and Unreal Engine (Spanlang et al., 2014) and numerous other engines. The advantage of game engines is that they typically have a robust assets library in addition to 3D engine functionality, such as 3D model resources, character animation libraries, and so on. For example, Unity3D and Unreal feature an extensive library of tutorials and online documentation, as well as an active user community (Kourtesis et al., 2020; Starrett et al., 2021). Other 3D gaming engines may possess built-in 3D model resources, character animation libraries, and/or unique rendering/physics engines that enable them to stand out amongst the numerous 3D engines developed to date. All of these features help flatten the user’s learning curve for creating VR environments and reduce the complexity and costs of developing VR scenes.

Experimental content module

The Experimental Content module is the virtual scene that the experimental developer must construct in order to display 3D stimuli. This module typically includes the required collection of 3D models, character data, character models, textures, and scripts for executing the scene, as well as UI components and other components necessary to present a virtual scene.

Realistic virtual environments are time-consuming and expensive to create and maintain (Trenholme & Smith, 2008). Examples of what is required include high-definition models and textures for infrastructure, people, landscapes, flora and fauna, and weather systems; support for user interaction and UI design; feedback on the environment and object behavior, such as collision detection; and nonvisual features of the virtual scene, such as audio cues (Smith & Trenholme, 2009; Trenholme & Smith, 2008). While many game engines today provide the rich resource packages and toolkits necessary to create virtual scenes, creating realistic virtual world content still requires a high level of programming proficiency and expertise, as well as a significant investment of time, personnel, and resources.

The video game industry has undergone technological advancements in recent years that coincide with the development of virtual reality technology, and many video games can now be played in a 3D or head-mounted display-based virtual reality environment, rather than the traditional 2D virtual environment (Pallavicini et al., 2019; Roettl & Terlutter, 2018). There are numerous advantages to using massive gaming franchises that support VR as the basis for developing content for VR behavioral experiments. Massive gaming franchises are often extensively tested, have a high level of usability and stability, and are easily compatible with existing operating systems. Furthermore, most massive gaming franchises possess large development teams and ample budgets, and therefore are capable of delivering extremely realistic, state-of-the-art virtual scenes. Most importantly, massive gaming franchises tend to release editing/modding tools to the user community to increase their video game franchise’s sustainability and longevity. Such editing/modding tools tend to come with a simplified GUI, online tutorials, and many features to aid users who have little or no programming proficiency to create and develop their own content and virtual scenes. These tools could also be used to develop experimental content for VR behavioral experiments.

Experimental framework module

In addition to realistic virtual environments and scenes, it is necessary to integrate the fundamental framework of experimental design and the experimental structure into the virtual scene. For instance, the structure of conventional behavioral experiments includes (but is not limited to) components such as sessions, blocks, and trials. It also requires the definition, operationalization, and manipulation of independent variables, as well as means to measure/record dependent variables.
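As a minimal sketch of the session-block-trial structure described above, the nesting can be written as plain data classes. All class and field names here (`Trial`, `Block`, `Session`, `condition`, `responses`) are hypothetical illustrations and are not taken from any particular toolkit.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Trial:
    """One trial: a level of the independent variable plus recorded measures."""
    number: int
    condition: str  # level of the manipulated independent variable (hypothetical field)
    responses: Dict[str, Any] = field(default_factory=dict)  # dependent variables


@dataclass
class Block:
    """A block groups consecutive trials."""
    number: int
    trials: List[Trial] = field(default_factory=list)


@dataclass
class Session:
    """A session groups blocks run in one sitting."""
    number: int
    blocks: List[Block] = field(default_factory=list)


def build_session(number: int, conditions_per_block: List[List[str]]) -> Session:
    """Create one block per inner list and one trial per listed condition."""
    blocks = []
    for block_no, conditions in enumerate(conditions_per_block, start=1):
        trials = [Trial(number=trial_no, condition=cond)
                  for trial_no, cond in enumerate(conditions, start=1)]
        blocks.append(Block(number=block_no, trials=trials))
    return Session(number=number, blocks=blocks)


# Example: one session, two blocks of two trials each (conditions "A" and "B").
session = build_session(1, [["A", "B"], ["B", "A"]])
# Record a dependent variable for the first trial of the first block.
session.blocks[0].trials[0].responses["reaction_time_ms"] = 512
```

Keeping the structure independent of the experimental content, as in this sketch, is what allows the same framework to be reused across different virtual scenes.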

There exist several behavioral experiment framework toolkits that are completely independent of experimental content and are freely available to researchers. These toolkits and solutions frequently integrate the framework for behavioral experiments into existing 3D game engines, such as the Unity Experiment Framework (Brookes et al., 2020), a Unity project that provides the core framework for behavioral experiments to develop VR behavioral experiments. Similarly, Landmarks (Starrett et al., 2021) and the Experiments in Virtual Environments (EVE) framework (Grübel et al., 2017) are both Unity projects that primarily focus on the investigation of spatial cognition and navigation. Toggle toolkit (Ugwitz et al., 2021) provides a collection of simple-to-use triggers and toggles that facilitate the creation and recording of interactions in behavioral experiments. Beyond Unity (and Unity Inspector)-based projects, there is vexptoolbox (Schuetz et al., 2022), which provides a behavioral experimentation framework toolkit for the Vizard VR platform capable of creating trial-based experimental designs and recording experimental data.

In summary, due to advances in hardware, interfaces, and the underlying development tools, as well as the simplification of VR environment creation tools over the past decades (Bierbaum et al., 2001), various options are now available for the creation and development of a VR behavioral experiment. Nevertheless, behavioral research employing VR remains a rarity, either because developing a VR behavioral experiment requires a certain level of proficiency in programming and experience in developing game engine software (Kourtesis et al., 2020) or simply because it is too costly to outsource and create. This conundrum parallels that of digital presentation software (such as Forethought PowerPoint, later acquired and known as Microsoft PowerPoint) that revolutionized and replaced traditional methods of “communication” over the past three decades. Despite the plethora of powerful tools/functions integrated into the latest version of PowerPoint and its relative ease of use, users with both little and abundant experience in PowerPoint presentation design opt to use built-in or downloadable templates to create slides rather than starting from scratch, highlighting the fact that presentation tools (including VR environment presentation tools) should acknowledge the need for ready-to-go templates. The same is true in the field of virtual scene development. The majority of small research groups lack professional experience and skills in virtual scene design and development, which makes it difficult for them to create adequately realistic virtual scenes from scratch. For VR scenes, the most attractive templates are naturally the existing virtual reality digital games.
To this end, the present study proposes the use of modifiable VR games for academic research purposes, specifically massive gaming franchises that support VR and further modding, as the basis to establish an experimental framework module for behavioral research, with the aim of creating a VR behavioral experimental system capable of implementing and reusing ready-to-go resources and behavioral experiment templates.

Modifiable VR games

Massive gaming franchises that support VR gameplay often invest huge amounts of manpower and resources to create immersive and realistic 3D environments that are virtually impossible for individual labs and groups to achieve. Digital games have long been used as stimulus material in experiments (Washburn, 2003). To address issues of experimental controllability and the selection and control of experimental stimuli, game modifications (aka "modding") can be performed to alter the game content and make it usable for research purposes (Elson & Quandt, 2016). Thus, massive gaming franchises that support VR gameplay and modding can be readily used to establish realistic virtual worlds that can be further customized for experimental needs. An example of a modification tool that is compatible across two massive gaming franchises that support VR is Creation Kit (Bethesda Game Studios, 2012), a robust modification tool that enables extensive customization of The Elder Scrolls V: Skyrim VR and Fallout 4 VR.

Creation kit

Creation Kit is a modification tool developed by Bethesda Game Studios as the editing software used to create content for the Elder Scrolls series modding community (Bethesda Game Studios, 2012). It enables modifications to games created using the Creation Engine, including the addition or manipulation of objects, graphics effects, character behavior, dialogue scenes, missions and events, and internal and external environments. Via the graphical user interface that Creation Kit offers, experimenters can use practically any existing resources or create new content/resources in games created using the Creation Engine to build simple virtual environments with little to no programming or scripting expertise.

The Elder Scrolls V: Skyrim VR

The Elder Scrolls V: Skyrim is a Bethesda Game Studios action role-playing video game that was released worldwide on November 11, 2011. The Elder Scrolls V: Skyrim VR (subsequently referred to as SkyrimVR in this study) is a virtual reality version of The Elder Scrolls V: Skyrim that debuted on Steam in April 2018 (Bethesda Game Studios, 2018). It belongs to a massive gaming franchise and has been employed in previous research (Cierro et al., 2020).

SkyrimVR is an open-world game created with the Creation Engine. Its massive map is filled with varied terrain and scenery, ranging from desert, tropical rainforest, frozen tundra/mountains, grassy plains, and lush meadows to various other landscapes, set loosely in a fantasy medieval Scandinavian culture. These landscapes are brought to life with SkyrimVR’s large library of resources, characters, and 3D objects, ranging from more than 15,000 inanimate everyday objects such as pots, pans, tables, drawers, houses, and citadels to more than 400 animate models of characters/animals/plants/non-player characters available for users to edit and mod. SkyrimVR also offers a well-developed weather system that changes over time, as well as a large modding community that provides a plethora of downloadable items with various features for refining or modifying the game’s functionality. However, SkyrimVR (not including mods and user-generated content) lacks resources, characters, and 3D objects from the modern era (no skyscrapers, modern buildings, cars, electrical appliances, etc.), an issue that could be alleviated by accessing the resources in another VR game created with the Creation Engine (Fallout 4 VR). These distinct characteristics make games created via the Creation Engine, such as SkyrimVR, an ideal scenario template for VR behavioral experiments and an excellent experimental content module.

This study proposes to build VR behavioral experiment scenarios based on the modifiable massive gaming franchise SkyrimVR, a concept that has been proposed in previous literature (Elson & Quandt, 2016) and employed in recent oculometric research (Cierro et al., 2020). According to the VR behavioral experiment system architectural framework the present study proposed, SkyrimVR intrinsically includes four out of the five fundamental modules required for a VR behavioral experiment, namely the Experimental Content module, 3D Engine module, Interface module, and the Hardware module. Furthermore, it supports popular HMD hardware systems such as HTC Vive, Oculus Rift, and Windows Mixed Reality. Moreover, SkyrimVR comes with the modification tool, Creation Kit, which provides experimenters with access to practically all existing in-game resources and/or the ability to create new content/resources, so that a VR behavioral experiment can be built according to a specific behavioral experiment design and experimental needs. In comparison to previous VR behavioral experiment systems, The Elder Scrolls V: Skyrim and Creation Kit together offer significant resource-richness and ease-of-use advantages.

A SkyrimVR-based behavioral experimental framework (SkyBXF)

To assist researchers in developing virtual reality behavioral research using SkyrimVR, the present study developed the SkyBXF module, which aims to significantly reduce the complexity and costs of development. SkyBXF provides a basic experimental framework for developing VR behavioral experiments. It is an experimental framework module created to work on SkyrimVR that adopts the classic human behavior experiment structure: the session-block-trial model (Brookes et al., 2020). This architecture enables researchers to easily create behavioral experiments. Additionally, it enables researchers to import questionnaires, surveys, tests, scales, and/or inventories into the virtual environment of SkyrimVR for the purpose of participant assessment and evaluation. All settings are configured via a simple-to-use graphical user interface. At the end of each block, SkyBXF exports participant information and questionnaire data in JSON format. Based on successful conventional behavioral experiment software (e.g., E-Prime, PsychoPy), the authors devised a data merge program that allows researchers to easily export experimental data (JSON format) to CSV files on a per-participant basis, enabling researchers to import the data into conventional data analysis software for analysis.

SkyBXF requires minimal to no programming skills to operate, providing a solution for behavioral studies that plan to employ VR environments by leveraging SkyrimVR’s extensive resource collection as well as providing simple experimental design and control via SkyBXF’s graphical user interface. In comparison to other tools to date, SkyBXF further reduces the complexity and costs of development and features a lower entry point for users. SkyBXF thus completes the last mile, allowing experimenters without programming experience to create simple VR behavioral experiments. A human-computer interface (HCI) model of SkyBXF is provided in Fig. 2.

HCI model of the SkyBXF framework. The left panel (boxed in orange) shows the experimental settings and parameters that need to be set using the user interface before the experiment starts, while the right panel (boxed in blue) depicts the experimental process as it is experienced by the participants.

Simultaneously, researchers with more advanced coding skills and a desire for further development may look into further online resources that the SkyrimVR community has to offer. The Creation Kit enables preprocessing of the stimulus material, namely the content utilized in the experiment, as well as direct creation of custom sceneries, non-player characters, and scripting, allowing those with sufficient mastery of the Creation Kit greater freedom of customization.

Graphical user interface

SkyBXF provides a simple graphical user interface (GUI) that allows researchers to easily set up behavioral experiments and enter participant information with minimal to no coding required (see Fig. 3). The interface consists of two pages: a settings page and a page for participant information. The settings page allows the researcher to declare the starting and ending positions in a virtual environment and to define the structure of the VR behavioral experiment (e.g., session number, block number, trial number, and the duration of rest between trials). The start and finish line of a virtual task or virtual walk are set by moving the player-controlled in-game avatar/character to the preferred location in-game and then clicking the corresponding option, “Set Start Position” or “Set End Position”, in SkyBXF. Participant information mainly includes demographic information such as the ID number assigned to each participant and the participant’s gender and age. Participants’ demographic information and session-block-trial settings will be automatically exported after the experiment. For greater software reusability, users may also directly reuse the configuration file (config.json) from a previous experiment to quickly reinstate experimental settings.

Questionnaire implementation

SkyBXF offers a questionnaire import function for researchers to import questionnaires/surveys/tests into SkyrimVR. When existing behavioral questionnaires/surveys/tests are reproduced in the JSON file included in SkyBXF (namely, the Questionnaire.json file) according to the predetermined format/template (see Fig. 4), SkyBXF will automatically import the questionnaire into SkyrimVR with the correct mod settings (for more details on SkyBXF implementation see Availability section below). Researchers can check whether there are content errors in the questionnaire through the user interface in SkyrimVR. Each question will appear as a floating message window in SkyrimVR, with the corresponding options/answers/choices for each questionnaire item shown slightly below the question. Participants respond to each question through a controller of their choice (HTC Vive controller, Xbox 360 controller, mouse, keyboard, or any other input device SkyrimVR supports), so the questionnaire can be completed whilst the headset is donned. SkyBXF can administer questionnaires/tests/assessments/evaluations at the beginning and end of each trial, namely pre-trial and post-trial in the behavioral experiment, which can be set by checking the corresponding option in the user interface. The end of each trial in the experiment is currently triggered by the location that the researcher designates with the “Set End Position” function in the SkyBXF settings page.

As illustrated in Fig. 4, SkyBXF includes a Simulator Sickness Questionnaire (SSQ) template, and the $ sign inserted in front of each string marks it as a token to be translated. Translation files are titled after the plugin, and the language it is in follows the file name, for example: "uiextensions_english.txt" and "uiextensions_chinese.txt." The file format is shown in Fig. 5; each line contains a token, followed by a tab, and then the translation that will be used to replace the token, i.e., $token string[TAB]Token String.
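The token-tab-translation line format described above can be illustrated with a short parsing sketch. The function names `parse_translations` and `translate` are hypothetical and not part of SkyBXF; the sketch works on text that has already been read into memory and makes no assumption about how the files are encoded on disk.

```python
from typing import Dict


def parse_translations(text: str) -> Dict[str, str]:
    """Map each $token to its translation.

    Lines that lack a tab separator or do not start with '$' are skipped.
    """
    table: Dict[str, str] = {}
    for line in text.splitlines():
        token, separator, translation = line.partition("\t")
        if separator and token.startswith("$"):
            table[token] = translation.strip()
    return table


def translate(label: str, table: Dict[str, str]) -> str:
    """Return the translation for a $token, or the label unchanged if none exists."""
    return table.get(label, label)


# Example using the line format quoted in the text: $token string[TAB]Token String
sample = "$token string\tToken String\nthis line has no token and is ignored\n"
table = parse_translations(sample)
```

Falling back to the untranslated label when a token is missing is a design choice of this sketch; it keeps a questionnaire readable even when a translation file is incomplete.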

Data merge

At the end of each trial, experimental data will be exported and recorded into separate JSON files, such that a behavioral research design consisting of multiple sessions, blocks, and trials will generate multiple corresponding JSON files to record experimental data in a session, block, and trial format. To aid researchers in extracting experimental data and further analyzing experimental results, a Data Merge program (see Fig. 6) was created using C# and integrated into the proposed SkyBXF framework (executable files are provided in the SkyBXF downloadable package; for details see Availability section below). The Data Merge program organizes data extracted from the multiple JSON files created by SkyBXF and exports all session, block, and trial data of any given participant, or of several/all participants simultaneously, into CSV, a file format that can be easily imported into Excel, SPSS, and other widely used data analysis software.
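The general idea of merging many per-trial JSON files into one CSV file can be sketched as follows. This is not the authors' C# Data Merge program: the function name `merge_json_to_csv`, the file names, and the field names (`participant`, `trial`, `ssq_total`) are all hypothetical, and the sketch assumes each JSON file holds one flat object.

```python
import csv
import json
import tempfile
from pathlib import Path
from typing import Dict, List


def merge_json_to_csv(data_dir: Path, csv_path: Path) -> int:
    """Write one CSV row per JSON file found under data_dir; return the row count."""
    rows: List[Dict[str, object]] = []
    for json_file in sorted(data_dir.rglob("*.json")):
        with json_file.open(encoding="utf-8") as handle:
            record = json.load(handle)
        if not isinstance(record, dict):
            continue  # this sketch only handles flat JSON objects
        row: Dict[str, object] = {"source_file": json_file.name}
        row.update(record)
        rows.append(row)

    # Use the union of all keys as columns, in first-seen order.
    columns: List[str] = []
    for row in rows:
        for key in row:
            if key not in columns:
                columns.append(key)

    with csv_path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=columns, restval="")
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)


# Demonstration with two made-up trial files in a temporary folder.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for trial, score in ((1, 3), (2, 5)):
        payload = {"participant": "P01", "trial": trial, "ssq_total": score}
        (root / f"trial_{trial}.json").write_text(json.dumps(payload), encoding="utf-8")
    output = root / "merged.csv"
    n_rows = merge_json_to_csv(root, output)
    merged_lines = output.read_text(encoding="utf-8").splitlines()
```

Nested JSON values would need to be flattened before writing; that step is omitted here because the actual file layout is shown only in the figures.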

The typical structure of each participant’s experimental result data folder and the typical JSON file structure of the questionnaire evaluation results are shown in Fig. 7.

Case study: The effect of masked peripheral vision on visually-induced motion sickness in immersive virtual environment

The use of VR technology has gradually made its way into mainstream psychological research in the last two decades (Diemer et al., 2015). However, there are barriers, such as visually induced motion sickness (VIMS). Many studies have postulated theories on why VIMS occurs; one of the most commonly cited is the sensory conflict theory (Reason & Brand, 1975). This theory postulates that visual information specifying self-motion conflicts with the lack of self-motion specified by physical cues, leading to a sensory mismatch that many posit is the dominant cause of motion sickness-like symptoms (Keshavarz et al., 2015). Previous literature suggested that this type of sensory mismatch may be alleviated by either increasing physical movement or decreasing the visually mediated experience of self-motion (Rebenitsch & Owen, 2016). It was posited that peripheral vision played a key role in evoking the visually mediated experience of self-motion in the absence of physical movement through space (aka vection). The present study aimed to employ SkyBXF to improve a VR behavioral experiment reported in a previous study (Liu & Chen, 2019) as a proof of concept to showcase the feasibility of the proposed VR behavioral experiment system architecture. The experiment tests the hypothesis that masking a portion of peripheral vision may reduce the degree of sensory mismatch between visual and somatosensory inputs, thereby reducing reported VIMS symptoms.

Method

Participants

Twenty-three undergraduate volunteers (17 female; mean age = 21.9 ± 1.53) participated. All participants recruited had normal or corrected-to-normal vision and had normal cognitive and motor function.

The sample size was determined based on effect sizes reported in a previous virtual walk study (Liu & Chen, 2019). Prior to beginning this study, a priori power calculations (effect size = 0.67; alpha error = 0.05; power > 0.80) were done using G*Power 3.1.9.7, and the results revealed a minimum sample size of 20.
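For readers who want to see how such a figure can arise, the following sketch applies a normal approximation with a small-sample correction term. It rests on assumptions that the text does not state: a two-tailed test on within-subject differences (paired or one-sample t-test) with the effect size interpreted as a standardized mean difference. G*Power itself uses the noncentral t distribution, so results from the two approaches can differ slightly. The function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def approx_paired_sample_size(effect_size: float,
                              alpha: float = 0.05,
                              power: float = 0.80) -> int:
    """Approximate N for a two-tailed paired/one-sample t-test.

    Uses n = ((z_(1-alpha/2) + z_power) / d)^2 + z_(1-alpha/2)^2 / 2,
    i.e. the normal approximation plus a correction for using t instead of z.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    n = ((z_alpha + z_power) / effect_size) ** 2 + z_alpha ** 2 / 2
    return ceil(n)


# Parameters reported in the text: effect size 0.67, alpha 0.05, power 0.80.
n_required = approx_paired_sample_size(0.67)
print(n_required)  # prints 20
```

Under these assumptions the approximation yields 20, which agrees with the reported minimum sample size.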

Materials and apparatus

Based on The Elder Scrolls V: Skyrim VR, Creation Kit-SSE (version v1.1.50) and SkyBXF were used to create the two virtual environments employed in the following behavioral experiment. Walking speed as well as game time and game speed were fixed throughout the entire experiment. Clear visual indicators (green arrows) and corresponding triggers (detecting player presence within a certain distance/boundary as indicated in red squares enveloping the green arrow in Fig. 8) guided participants along the virtual route that took approximately 2 minutes to complete. SkyrimVR settings (see Footnote 1) were manipulated to fix the field of view to either 110° (no-masked peripheral vision condition) or 82.5° (masked peripheral vision condition). Experimental materials, stimuli, and procedure were identical to those in a previous study (Liu & Chen, 2019) except for data recording. Previously, behavioral data were recorded manually, whilst all behavioral data in the present study were recorded through SkyBXF.

An overhead view of the virtual walk route in the formal experiment created via Creation Kit based on The Elder Scrolls V: Skyrim VR. The route begins from DragonBridgeExterior01 (X-Position: −25, Y-Position: 20), and ends at (X-Position: −22, Y-Position: 18), on the Tamriel continent. The green arrows in the center of the red boxes represent the visual indicators used to guide users through the designated virtual route. The red boxes indicate the distance/boundary to detect player presence and trigger the removal of the current green arrow and the appearance of the next green arrow.
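The arrow-and-trigger guidance described above can be sketched as a simple waypoint sequence: when the player enters the boundary around the currently visible arrow, that arrow is removed and the next one appears. This is only an illustration of the logic, not the in-game implementation; the class names `TriggerBox` and `Route`, the square boundary shape, and the coordinates are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Position = Tuple[float, float]


@dataclass(frozen=True)
class TriggerBox:
    """Square detection boundary centered on a guidance arrow."""
    center: Position
    half_size: float

    def contains(self, position: Position) -> bool:
        """True if the position lies inside or on the edge of the square."""
        return (abs(position[0] - self.center[0]) <= self.half_size
                and abs(position[1] - self.center[1]) <= self.half_size)


class Route:
    """Ordered trigger boxes; only the arrow at the active index is visible."""

    def __init__(self, boxes: List[TriggerBox]) -> None:
        self.boxes = list(boxes)
        self.active = 0

    def update(self, position: Position) -> Optional[int]:
        """Advance when the player enters the active box.

        Returns the index of the arrow now visible, or None once the
        last trigger has been reached.
        """
        if self.active < len(self.boxes) and self.boxes[self.active].contains(position):
            self.active += 1
        return self.active if self.active < len(self.boxes) else None


# Two arrows placed 5 units apart, each with a 1-unit detection margin.
route = Route([TriggerBox((0.0, 0.0), 1.0), TriggerBox((5.0, 0.0), 1.0)])
visible = [route.update(p) for p in [(3.0, 0.0), (0.5, 0.0), (5.0, 0.5)]]
print(visible)  # prints [0, 1, None]
```

Showing one arrow at a time, as in this sketch, keeps every participant on the same route, which helps hold route length and visual flow comparable across trials.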

Procedure

The experiment consisted of two virtual environments: a tutorial virtual environment and a formal experiment virtual environment. All participants were briefed on the virtual walk experiment before they signed an informed consent form, put on the HTC Vive VR headset, and completed the brief tutorial virtual environment. They were reminded that they could terminate the experiment at any time and that they would be given breaks between trials during the formal experiment. The formal experiment used a within-subject design consisting of four trials (A denotes masked peripheral vision trials and B denotes no-masked peripheral vision trials; for details see Fig. 9). Half of the participants were randomly assigned to the ABBA trial sequence and the remaining half to the BAAB trial sequence. Prior to each trial, the 16-item SSQ (Kennedy et al., 1993) was administered to assess participants’ motion sickness symptoms. Participants were then instructed to follow the green arrows within the virtual environment and completed a virtual walk task while standing, walking, or a combination of both within the 2×2-meter room-scale play area (calibrated and determined by SteamVR Room Setup). In both the tutorial and the formal virtual environments, the experimenter advised participants to stand still during the virtual walk and to rely solely on the Xbox 360 controller to move around (and make turns) in the virtual environment. However, participants at times reflexively walked a few steps or turned while standing; the experimenter reminded them to stand still after the trial finished, and most if not all participants then stood still for the remainder of the experiment.
Upon completion of each virtual walk task, the SSQ was administered again to assess post-trial motion sickness symptoms, followed by a rest break in which participants removed the headset, sat down on a sofa, closed their eyes, and listened to 1 minute of ambient music (Bandari: Snowdream). Participants were given as much time as they needed to recuperate before they stood up and put the HTC Vive VR headset back on for the next trial. The experimental procedure was approved by the institutional review board (IRB) and was in accordance with the sixth revision of the Declaration of Helsinki (2008).
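The counterbalanced assignment to the two trial sequences can be sketched as follows. This is a minimal illustration rather than the procedure actually used; the function name, participant IDs, and seed are hypothetical.

```python
import random

# A = masked peripheral vision trial, B = no-masked peripheral vision trial
SEQUENCES = ("ABBA", "BAAB")


def assign_sequences(participant_ids, seed=None):
    """Randomly split participants between the two counterbalanced sequences."""
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    # Alternate over the shuffled list so group sizes differ by at most one.
    return {pid: SEQUENCES[i % 2] for i, pid in enumerate(ids)}


assignment = assign_sequences(range(1, 24), seed=1)  # 23 hypothetical IDs
for pid in sorted(assignment):
    print(pid, assignment[pid])
```

Shuffling first and then alternating keeps the groups as balanced as possible; with an odd number of participants, one sequence necessarily receives one more participant than the other.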

Analyses and results

All data from the 23 participants (92 trials in total) were recorded via SkyBXF. The data merge application provided within the SkyBXF module was used to organize the data extracted from the 92 JSON data files, one of which was created for each trial. Session, block, and trial data for one, several, or all participants were then exported together into a CSV file, which was imported into SPSS 18 for analysis.
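The merge step amounts to flattening one JSON file per trial into a single table. The sketch below is not the SkyBXF data merge application; it assumes each file holds one flat JSON object, and the function name and any field names are hypothetical because the actual SkyBXF schema is not described here.

```python
import csv
import json
from pathlib import Path


def merge_trials(json_dir, csv_path):
    """Write every *.json file in json_dir as one row of a CSV; return row count.

    Assumes each file contains a single flat JSON object (one trial).
    """
    rows = []
    for path in sorted(Path(json_dir).glob("*.json")):
        with path.open(encoding="utf-8") as fh:
            rows.append(json.load(fh))
    # Union of keys in first-seen order, so files with extra fields still fit.
    fieldnames = list(dict.fromkeys(key for row in rows for key in row))
    with open(csv_path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=fieldnames, restval="")
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

A call such as `merge_trials("data/", "all_trials.csv")` (paths hypothetical) would produce a file that statistical packages can import directly.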

The Total Severity (TS), Disorientation (D), Oculomotor (O), and Nausea (N) scores were calculated from the SSQ item ratings obtained before and after each trial. Pre-trial scores were then subtracted from post-trial scores to obtain the magnitude of change in perceived motion sickness symptom ratings between the start of the virtual walk (baseline) and its end (post-trial). All subsequent analyses were conducted on this magnitude of change.
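The scoring and change-score computation can be sketched as follows. The item-to-subscale mapping and weights follow the standard SSQ scoring of Kennedy et al. (1993); that the present analysis used exactly this scoring, and that items were presented in the original questionnaire order, are assumptions. Function names are hypothetical.

```python
# Items (numbered 1-16 in questionnaire order) loading on each subscale,
# following the standard SSQ scoring of Kennedy et al. (1993).
SUBSCALE_ITEMS = {
    "N": (1, 6, 7, 8, 9, 15, 16),
    "O": (1, 2, 3, 4, 5, 9, 11),
    "D": (5, 8, 10, 11, 12, 13, 14),
}
WEIGHTS = {"N": 9.54, "O": 7.58, "D": 13.92}
TOTAL_WEIGHT = 3.74


def ssq_scores(ratings):
    """Return weighted N, O, D, and TS scores from 16 item ratings (0-3)."""
    if len(ratings) != 16:
        raise ValueError("the SSQ has 16 items")
    raw = {name: sum(ratings[i - 1] for i in items)
           for name, items in SUBSCALE_ITEMS.items()}
    scores = {name: raw[name] * WEIGHTS[name] for name in raw}
    # Total Severity is the sum of the three raw subscale sums, then weighted.
    scores["TS"] = sum(raw.values()) * TOTAL_WEIGHT
    return scores


def change_scores(pre, post):
    """Post-trial minus pre-trial score for each of N, O, D, and TS."""
    before, after = ssq_scores(pre), ssq_scores(post)
    return {name: after[name] - before[name] for name in after}
```

Because some items load on two subscales, Total Severity is computed from the raw subscale sums rather than from the 16 ratings directly.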

Four separate paired-samples t-tests were conducted with peripheral vision (masked vs. no-masked) as the within-subjects independent variable and the magnitude of change in perceived motion sickness symptom ratings for Total Severity, Nausea, Oculomotor, and Disorientation as the dependent variables. Results revealed a significant difference between the masked and no-masked peripheral vision conditions in the magnitude of change for Total Severity, t(22) = 4.19, p < .001; Nausea, t(22) = 4.37, p < .001; Oculomotor, t(22) = 3.49, p = .002; and Disorientation, t(22) = 2.48, p = .021. On all four measures, the magnitude of change in perceived motion sickness symptom ratings was significantly smaller in the masked peripheral vision condition than in the no-masked peripheral vision condition. Mean and SEM are shown in Fig. 10.
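For readers unfamiliar with the test, the paired-samples t statistic is the mean of the within-participant differences divided by its standard error. The sketch below computes the statistic and its degrees of freedom only; the numbers are made-up illustrative values, not data from this study, and the function name is hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(cond_a, cond_b):
    """Return (t, df) for a paired-samples t-test on two equal-length lists."""
    if len(cond_a) != len(cond_b):
        raise ValueError("each participant needs a score in both conditions")
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    # t = mean difference / standard error of the mean difference
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Illustrative values only (hypothetical change scores for three participants).
no_masked = [2.0, 4.0, 6.0]
masked = [1.0, 2.0, 3.0]
t, df = paired_t(no_masked, masked)
print(round(t, 3), df)
```

The p value is then read from a t distribution with n − 1 degrees of freedom, which is what a statistics package reports alongside t.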

Summary

The present study adopted a modularity design strategy and proposed a five-module architectural framework for a VR behavioral experiment system. A review of the literature identified the issues affecting each of the modules as well as the solutions proposed. Although existing solutions facilitate the creation and development of VR behavioral experiments, building realistic virtual environments remains a complicated, expensive, and time-consuming process for many research groups (Trenholme & Smith, 2008). Furthermore, the need for programming abilities and experience with game engine software (Kourtesis et al., 2020) further increases development difficulty. One solution proposed in the literature was the use of digital games as stimulus material in experiments (Washburn, 2003). However, digital games raise issues of experimental controllability and of the selection and control of experimental stimuli, and the game modifications ("modding") needed to make a game usable for research purposes may not exist (Elson & Quandt, 2016). Thus, the present study created the SkyBXF module, an experimental framework module that aims to provide future behavioral researchers with ready-to-go templates for establishing realistic virtual worlds that can be further customized for various experimental needs.

A previous study on the effect of masked peripheral vision on visually-induced motion sickness in an immersive virtual environment (Liu & Chen, 2019) was repeated in the present study as a proof of concept to showcase the feasibility of establishing a VR behavioral experiment using the proposed five-module architectural framework in tandem with the SkyBXF module. The results are consistent with laboratory measurements from previous studies (Lin et al., 2002; Zhang et al., 2021), suggesting that narrowing the field of view (FOV) by masking the peripheral visual area helps reduce motion sickness symptoms. In addition, the three SSQ subscale scores showed the same D>N>O profile as a prior study (Stanney et al., 1997). According to previous studies, the scores of the three SSQ subscales (Nausea, Oculomotor, and Disorientation) may help distinguish between different sources of motion sickness. For example, Stanney et al. (1997) reported that a profile in which Disorientation scores are higher than Nausea scores and Oculomotor scores are the lowest of the three subscales (i.e., the D>N>O profile) resembles that of VR sickness and distinguishes it from other forms of motion sickness. The present study developed the SkyBXF module with the aim of reducing the complexity and costs of development and promoting replication and reusability, such that users only need to install SkyrimVR, Creation Kit, and SkyBXF to obtain a ready-to-go VR behavioral experiment template for conducting or replicating research on visually-induced motion sickness. This template can be further customized for various experimental needs with minimal requirements for programming proficiency, 3D environment development expertise, personnel, time, and resources. The results obtained were consistent with those reported in previous research, indicating the viability of SkyBXF in behavioral research.

Our future efforts include (but are not limited to) maintaining the program to ensure compatibility with SkyrimVR updates and developing SkyBXF to accommodate another Bethesda VR game built on the Creation Engine: Fallout 4 VR (which has a different but equally huge resource library relative to SkyrimVR). Features may be added or modified if a clear need arises. The project is open-source, thus allowing researchers in the field to implement and share such additions.

Data availability

SkyBXF is freely available to download as a package via GitHub (https://github.com/ZeminL/SkyBXF/releases). Following the readme.md document, SkyBXF can be installed and integrated into The Elder Scrolls V: Skyrim VR on PCs running Windows 7 or later. Documentation and support are available on the SkyBXF forum webpage (https://github.com/ZeminL/SkyBXF/wiki). The package is open-source under the GNU General Public License 3.0. The related packages and mods required by SkyBXF are listed in the requirements.txt file and are available via the same GitHub webpage.

Notes

Masked/no-masked peripheral vision is a function of SkyrimVR which could be enabled/disabled in the SkyrimVR SETTINGS Menu under the SYSTEM subpage by selecting/unselecting the “FOV filter while turning” and “FOV filter while moving” options respectively.

References

Bethesda Game Studios. (2012). Creation Kit Wiki. Retrieved October 7, 2022, from https://www.creationkit.com/index.php?title=Landing_page

Bethesda Game Studios. (2018). The Elder Scrolls V: Skyrim VR. Retrieved October 7, 2022, from https://www.creationkit.com/index.php?title=Landing_page

Bierbaum, A., Just, C., Hartling, P., Meinert, K., Baker, A., & Cruz-Neira, C. (2001, March). VR Juggler: A virtual platform for virtual reality application development. In: Proceedings IEEE Virtual Reality 2001 (pp. 89–96). IEEE.

Bohil, C. J., Alicea, B., & Biocca, F. A. (2011). Virtual reality in neuroscience research and therapy. Nature Reviews Neuroscience, 12(12), 752–762. https://doi.org/10.1038/nrn3122

Brainard, D. H. (1997). The psychophysics toolbox. Spatial Vision, 10(4), 433–436.

Brookes, J., Warburton, M., Alghadier, M., Mon-Williams, M., & Mushtaq, F. (2020). Studying human behavior with virtual reality: The Unity Experiment Framework. Behavior Research Methods, 52(2), 455–463. https://doi.org/10.3758/s13428-019-01242-0

Casasanto, D., & Jasmin, K. M. (2018). Virtual reality. In A. M. De Groot & P. Hagoort (Eds.), Research methods in psycholinguistics and the neurobiology of language (pp. 174–189). John Wiley & Sons.

Chessa, M., Maiello, G., Borsari, A., & Bex, P. J. (2019). The perceptual quality of the oculus rift for immersive virtual reality. Human–Computer Interaction, 34(1), 51–82.

Cierro, A., Philippette, T., Francois, T., Nahon, S., & Watrin, P. (2020, June). Eye-tracking for Sense of Immersion and Linguistic Complexity in the Skyrim Game: Issues and Perspectives. In: ACM Symposium on Eye Tracking Research and Applications (pp. 1–5).

de la Rosa, S., & Breidt, M. (2018). Virtual reality: A new track in psychological research. British Journal of Psychology, 109(3), 427–430. https://doi.org/10.1111/bjop.12302

Diemer, J., Alpers, G. W., Peperkorn, H. M., Shiban, Y., & Mühlberger, A. (2015). The impact of perception and presence on emotional reactions: A review of research in virtual reality. Frontiers in Psychology, 6, 26. https://doi.org/10.3389/fpsyg.2015.00026

Elson, M., & Quandt, T. (2016). Digital games in laboratory experiments: Controlling a complex stimulus through modding. Psychology of Popular Media Culture, 5(1), 52. https://doi.org/10.1037/ppm0000033

Forster, K. I., & Forster, J. C. (2003). DMDX: A Windows display program with millisecond accuracy. Behavior Research Methods, Instruments, & Computers, 35(1), 116–124. https://doi.org/10.3758/BF03195503

Garaizar, P., & Reips, U. D. (2015). Visual DMDX: A web-based authoring tool for DMDX, a Windows display program with millisecond accuracy. Behavior Research Methods, 47(3), 620–631. https://doi.org/10.3758/s13428-014-0493-8

Garaizar, P., & Vadillo, M. A. (2014). Accuracy and precision of visual stimulus timing in PsychoPy: No timing errors in standard usage. PloS One, 9(11), e112033. https://doi.org/10.1371/journal.pone.0112033

Gregory, J. (2018). Game engine architecture (3rd ed.). A K Peters/CRC Press.

Grübel, J., Weibel, R., Jiang, M. H., Hölscher, C., Hackman, D. A., & Schinazi, V. R. (2017). EVE: A Framework for Experiments in Virtual Environments. In T. Barkowsky, H. Burte, C. Hölscher, & H. Schultheis (Eds.), Spatial Cognition X (pp. 159–176). Springer.

Kennedy, R. S., Lane, N. E., Berbaum, K. S., & Lilienthal, M. G. (1993). Simulator Sickness Questionnaire: An Enhanced Method for Quantifying Simulator Sickness. The International Journal of Aviation Psychology, 3(3), 203–220. https://doi.org/10.1207/s15327108ijap0303_3

Keshavarz, B., Riecke, B. E., Hettinger, L. J., & Campos, J. L. (2015). Vection and visually induced motion sickness: How are they related? Frontiers in Psychology, 6, 472. https://doi.org/10.3389/fpsyg.2015.00472

Khronos Group. (2019). OpenXR (Version 1.0). The Khronos Group Inc. Retrieved October 7, 2022, from http://www.khronos.org/openxr/

Kourtesis, P., Korre, D., Collina, S., Doumas, L. A., & MacPherson, S. E. (2020). Guidelines for the development of immersive virtual reality software for cognitive neuroscience and neuropsychology: The development of virtual reality everyday assessment lab (VR-EAL), a neuropsychological test battery in immersive virtual reality. Frontiers in Computer Science, 1, 12. https://doi.org/10.3389/fcomp.2019.00012

Lin, J. W., Duh, H. B. L., Parker, D. E., Abi-Rached, H., & Furness, T. A. (2002, March). Effects of field of view on presence, enjoyment, memory, and simulator sickness in a virtual environment. In: Proceedings IEEE Virtual Reality 2002 (pp. 164–171). IEEE.

Liu, Z. M., & Chen, Y. H. (2019). The effect of masked peripheral vision on visually-induced motion sickness in immersive virtual environment. Advances in Psychological Science, 27(suppl.), 16–16. https://journal.psych.ac.cn/xlkxjz/EN/Y2019/V27/Isuppl./16

Loomis, J. M., Blascovich, J. J., & Beall, A. C. (1999). Immersive virtual environment technology as a basic research tool in psychology. Behavior Research Methods, Instruments, & Computers, 31(4), 557–564. https://doi.org/10.3758/bf03200735

Mathôt, S., Schreij, D., & Theeuwes, J. (2012). OpenSesame: An open-source, graphical experiment builder for the social sciences. Behavior Research Methods, 44(2), 314–324. https://doi.org/10.3758/s13428-011-0168-7

Mueller, S. T., & Piper, B. J. (2014). The psychology experiment building language (PEBL) and PEBL test battery. Journal of Neuroscience Methods, 222, 250–259. https://doi.org/10.1016/j.jneumeth.2013.10.024

Niehorster, D. C., Li, L., & Lappe, M. (2017). The accuracy and precision of position and orientation tracking in the HTC vive virtual reality system for scientific research. i-Perception, 8(3), 2041669517708205.

Pallavicini, F., Pepe, A., & Minissi, M. E. (2019). Gaming in Virtual Reality: What Changes in Terms of Usability, Emotional Response and Sense of Presence Compared to Non-Immersive Video Games? Simulation & Gaming, 50(2), 136–159. https://doi.org/10.1177/1046878119831420

Pan, X., & Hamilton, A. F. D. C. (2018). Why and how to use virtual reality to study human social interaction: The challenges of exploring a new research landscape. British Journal of Psychology, 109(3), 395–417. https://doi.org/10.1111/bjop.12290

Peeters, D. (2019). Virtual reality: A game-changing method for the language sciences. Psychonomic Bulletin & Review, 26(3), 894–900. https://doi.org/10.3758/s13423-019-01571-3

Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., Kastman, E., & Lindeløv, J. K. (2019). PsychoPy2: Experiments in behavior made easy. Behavior Research Methods, 51(1), 195–203. https://doi.org/10.3758/s13428-018-01193-y

Reason, J. T., & Brand, J. J. (1975). Motion sickness. Academic Press.

Rebenitsch, L., & Owen, C. (2016). Review on cybersickness in applications and visual displays. Virtual Reality, 20(2), 101–125. https://doi.org/10.1007/s10055-016-0285-9

Roettl, J., & Terlutter, R. (2018). The same video game in 2D, 3D or virtual reality – How does technology impact game evaluation and brand placements? PLOS ONE, 13(7), e0200724. https://doi.org/10.1371/journal.pone.0200724

Schuetz, I., Karimpur, H., & Fiehler, K. (2022). vexptoolbox: A software toolbox for human behavior studies using the Vizard virtual reality platform. Behavior Research Methods, 1–13. https://doi.org/10.3758/s13428-022-01831-6

Smith, S. P., & Trenholme, D. (2009). Rapid prototyping a virtual fire drill environment using computer game technology. Fire Safety Journal, 44(4), 559–569. https://doi.org/10.1016/j.firesaf.2008.11.004

Spanlang, B., Normand, J.-M., Borland, D., Kilteni, K., Giannopoulos, E., Pomés, A., González-Franco, M., Perez-Marcos, D., Arroyo-Palacios, J., Muncunill, X. N., & Slater, M. (2014). How to Build an Embodiment Lab: Achieving Body Representation Illusions in Virtual Reality. Frontiers in Robotics and AI, 1, 9. https://doi.org/10.3389/frobt.2014.00009

Stahl, C. (2006). Software for Generating Psychological Experiments. Experimental Psychology, 53(3), 218–232. https://doi.org/10.1027/1618-3169.53.3.218

Stanney, K. M., Kennedy, R. S., & Drexler, J. M. (1997, October). Cybersickness is not simulator sickness. In: Proceedings of the Human Factors and Ergonomics Society annual meeting (vol. 41, no. 2, pp. 1138–1142). SAGE Publications. https://doi.org/10.1177/107118139704100292

Starrett, M. J., McAvan, A. S., Huffman, D. J., Stokes, J. D., Kyle, C. T., Smuda, D. N., ... & Ekstrom, A. D. (2021). Landmarks: A solution for spatial navigation and memory experiments in virtual reality. Behavior Research Methods, 53(3), 1046–1059. https://doi.org/10.3758/s13428-020-01481-6

Trenholme, D., & Smith, S. P. (2008). Computer game engines for developing first-person virtual environments. Virtual Reality, 12(3), 181–187. https://doi.org/10.1007/s10055-008-0092-z

Tynes, C., & Seo, J. H. (2019, July). Making Multi-platform VR Development More Accessible: A Platform Agnostic VR Framework. In: International Conference on Human-Computer Interaction (pp. 440–445). Springer. https://doi.org/10.1007/978-3-030-23528-4_59

Ugwitz, P., Šašinková, A., Šašinka, Č., Stachoň, Z., & Juřík, V. (2021). Toggle toolkit: A tool for conducting experiments in unity virtual environments. Behavior Research Methods, 53(4), 1581–1591. https://doi.org/10.3758/s13428-020-01510-4

Vasawade, R., Deshmukh, B., & Kulkarni, V. (2015, February). Modularity in design: A review. In: 2015 International Conference on Technologies for Sustainable Development (ICTSD) (pp. 1–4). https://doi.org/10.1109/ICTSD.2015.7095892

Washburn, D. A. (2003). The games psychologists play (and the data they provide). Behavior Research Methods, Instruments, & Computers, 35(2), 185–193. https://doi.org/10.3758/BF03202541

Zarrad, A. (2018). Game engine solutions. Simulation and Gaming (pp. 75–87). https://doi.org/10.5772/intechopen.71429

Zhang, Q., Yamamura, H., Baldauf, H., Zheng, D., Chen, K., Yamaoka, J., & Kunze, K. (2021). Tunnel Vision – Dynamic Peripheral Vision Blocking Glasses for Reducing Motion Sickness Symptoms. In: 2021 International Symposium on Wearable Computers (pp. 48–52). https://doi.org/10.1145/3460421.3478824

Author’s note

The establishment of the College of Liberal Arts Virtual Reality Lab (Wenzhou-Kean University) was coordinated by both authors with the help of two WKU colleagues, Ruiyao Gao and Menglu Xu. We are grateful to them for their help. We also thank Shu-jie Chen, our senior experimental technician at Wenzhou University, for sharing her knowledge of virtual learning environments, which provided us with valuable insight and guidance in the conceptualization of the work. Both authors contributed to the conceptualization of the work. Both authors are responsible for all the statistical analyses. The article was written by LZM and edited by CYH. LZM is responsible for carrying out the literature review and the work described in Introduction, VR behavioral experimental system architecture, SkyrimVR-based behavioral experimental framework, Case-study, and Conclusion. We have no conflicts of interest to disclose.

Funding

This research is supported by the Zhejiang Provincial Higher-Education Teaching Reform Project (JG20190471); the Scientific and Technological Innovation Activity Plan of College Students in Zhejiang Province (New Seedling Talents Program, 2021R429043); and the Zhejiang Provincial Natural Science Foundation of China (LY20F020031).

Ethics declarations

Ethics approval

The experiment was approved by the research ethics board at the College of Education, Wenzhou University, and was run in accordance with the sixth revision of the Declaration of Helsinki (2008).

Consent to participate

Informed consent was obtained from all individual participants included in the study.

Consent for publication

All individual participants consented to the publication of their anonymized data.

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Portions of the findings of this study were presented as a poster at The 4th China Vision Science Conference (CVSC2019), Chengdu, Sichuan Province, China.

Supplementary information

ESM 1 (CSV, 1 kb)

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liu, ZM., Chen, YH. A modularity design approach to behavioral research with immersive virtual reality: A SkyrimVR-based behavioral experimental framework. Behav Res 55, 3805–3819 (2023). https://doi.org/10.3758/s13428-022-01990-6

DOI: https://doi.org/10.3758/s13428-022-01990-6