Abstract

Continuous flash suppression (CFS) is a popular masking technique used to manipulate visual awareness. By presenting a rapidly changing stimulus to one eye (the ‘mask’), a static image viewed by the other (the ‘target’) may remain invisible for many seconds. This effectiveness affords a means to assess unconscious visual processing, leading to the widespread use of CFS in several basic and clinical sciences. However, the lack of principled stimulus selection has impeded generalization of conclusions across studies, as the strength of interocular suppression is dependent on the spatiotemporal properties of the CFS mask and target. To address this, we created CFS-crafter, a point-and-click, open-source tool for creating carefully controlled CFS stimuli. The CFS-crafter provides a streamlined workflow to create, modify, and analyze mask and target stimuli, requiring only a rudimentary understanding of image processing that is well supported by help files in the application. Users can create CFS masks ranging from classic Mondrian patterns to those comprising objects or faces, or they can create, upload, and analyze their own images. Mask and target images can be custom-designed using image-processing operations performed in the frequency domain, including phase-scrambling and spatial/temporal/orientation filtering. By providing the means for the customization and analysis of CFS stimuli, the CFS-crafter offers controlled creation, analysis, and cross-study comparison. Thus, the CFS-crafter—with its easy-to-use image processing functionality—should facilitate the creation of visual conditions that allow a principled assessment of hypotheses about visual processing outside of awareness.

Introduction

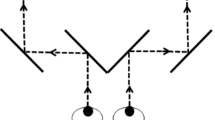

The past decades have witnessed continued interest in the question of the effects of unconscious visual stimuli on human sensory perception and behavior (Erdelyi, 1974; Eriksen, 1960; Dixon, 1971; Holender, 1986; Kihlstrom, 1987; Merikle & Daneman, 1998; Logothetis, 1998; Breitmeyer, 2015). Attempts to answer that question have deployed a variety of strategies to manipulate visual awareness, including visual masking, attentional distraction, ambiguous figural information, motion-induced blindness, and visual crowding, to name a few (see reviews by Kim & Blake, 2005; Lin & He, 2009). Paramount among those strategies has been binocular rivalry (BR), a very popular technique for transiently abolishing visual awareness (for an overview of work on and ideas about BR, see reviews by Walker, 1978; Wolfe, 1986; Alais & Blake, 2005, 2015; Blake et al., 2014). During BR, a visual stimulus viewed by one eye is temporarily suppressed from awareness by simultaneous presentation of a dissimilar stimulus viewed by the other eye. Variants of BR that entail interocular suppression have also been introduced, including flash suppression (Wolfe, 1984), eye-swap BR (e.g., Logothetis et al., 1996), and generalized flash suppression (Wilke et al., 2003).

A new, highly potent form of interocular suppression, dubbed continuous flash suppression (CFS), burst on the scene 17 years ago (Fang & He, 2005; Tsuchiya & Koch, 2005), gaining pre-eminence as a tool for manipulating awareness (see Fig. 1A). In its original instantiation, CFS was induced by presenting to one eye a dynamic sequence of complex, colorful images (successive arrays of Mondrian-like geometric figures) updated at a rate of 10 Hz pitted against a single, smaller, weaker target image presented to the corresponding retinal area of the other eye. The list of stimuli that successfully generate CFS has since expanded to include dynamic arrays comprising random sequences of binary visual noise (Fang & He, 2005), random sequences of natural scene images (Kim et al., 2017), pointillist natural images (Cha et al., 2019), overlapping letters (Eo et al., 2016), and temporally and spatially filtered noise images (Han et al., 2016; Han & Alais, 2018). Regardless of the type of image sequence used to induce CFS, this form of interocular competition has the potential to suppress the target stimulus from awareness for many seconds at a time. Indeed, one of the strengths of CFS is the prolonged duration of visual suppression it produces – up to several tens of seconds, compared to just several seconds with BR (Blake et al., 2019). Another benefit is the ability to dictate the initially dominant percept, which is always the dynamic sequence. This control over awareness at onset allows immediate assessment of the impact of the suppressed target viewed by the other eye (e.g., Rothkirch et al., 2012). In addition, CFS tends to uniformly suppress relatively large targets, whereas during BR, large stimuli tend to be experienced in piecemeal fashion, comprising intermingled portions of each eye’s view (Blake et al., 1992).

Summary of CFS publications. To assess impact, we compiled a database of abstracts, dissertations, review articles and empirical studies from the period 2004 to 2019. Publications were extracted from Google Scholar and were only included if CFS was a main topic or was used as a technique. A The number of CFS publications per year. There is a monotonic increase in the number of CFS publications per year, projected to reach approximately 100 CFS publications within the next 2–3 years. B The proportion of CFS publications sorted by disciplines (bottom panel), estimated from publication titles using MATLAB’s inbuilt clustering and word cloud algorithms (top panel). Although CFS was first developed within the basic vision sciences to manipulate visual awareness, it has been adopted in a variety of research domains (e.g., multisensory perception, affective neuroscience, eye-movement control, contrast gain-control, attention) and in diverse subject populations (young healthy adults, developmentally challenged people, individuals diagnosed with psychosis)

These benefits afforded by CFS for the study of awareness and visual suppression have spawned a flood of papers that have deployed the technique to study problems within diverse fields of basic and clinical science, as summarized in Fig. 1B. This growing popularity of CFS has also created something of a hodgepodge of results, including replication failures that lead to conflicting conclusions. This situation arises, in part, from the lack of systemized procedures for creating CFS which, in turn, introduces variability in CFS suppression strength and, hence, the incidence of target awareness. Although CFS is generally more effective than BR, its strength of suppression as gauged by the average duration of target invisibility varies substantially among individuals (e.g., Blake et al., 2019; Stein et al., 2011). It is therefore not unusual for studies to exclude participants or trials with insufficient suppression periods or partial suppression when viewing CFS, but such post-hoc data selection can adversely impact the generality of CFS results. A review of the CFS literature reveals exclusion rates ranging from 14 % (Korisky et al., 2019) to 50 % of tested participants (Sklar et al., 2012), which is a liability that compromises experimental efficiency and external validity of results. In addition, post hoc data selection may underestimate the true level of awareness in the remaining trials or participants (an effect referred to as regression bias), which in turn may lead to spurious claims of ‘vision without awareness’ in this subsample (Rothkirch et al., 2022; Shanks, 2017). It is worth noting that, besides suppression duration, there are alternative ways to define CFS effectiveness and target awareness. 
For example, CFS effectiveness can be gauged using forced-choice judgment tasks where performance requires registration of some aspect of the target stimulus such as a brief increase in its contrast (Tsuchiya et al., 2006) or its spatial location relative to a fixation mark (e.g., Sklar et al., 2012). In a similar vein, oculomotor reflexes have been used to gauge residual effectiveness of targets suppressed during CFS (e.g., Rothkirch et al., 2012). While these measures are more objective, they do not mitigate concerns arising from post hoc data selection.

The typical motivation for using CFS is to render a target invisible, so a preferable alternative to post hoc data selection would be to optimize procedures to generate effective suppression from the outset. Although a seemingly simple solution, it is not always clear what steps are sufficient to achieve that optimization, and brute-force strategies that have been used in earlier work introduce problems of their own. Part of the variability in CFS efficacy stems from its sensitivity to eye dominance, where stronger target suppression is typically obtained when the target is presented to the non-dominant eye (Yang et al., 2010). Studies keen on exploiting this characteristic would want to assess eye dominance prior to CFS testing, but there is currently no consensus or guideline about which eye dominance test to utilize (see also Ding et al., 2018). Compounding this difficulty is the variability in CFS potency when attempting to suppress certain categories of target stimuli. For example, some studies (Moors et al., 2016; Yang et al., 2007) have found that suppression durations for images of faces tend to be relatively brief (e.g., around 2–3 s). Similarly, contrast thresholds for perceiving a moving target are more strongly impacted when the CFS masker itself incorporates motion and not just flicker as characteristic of a standard Mondrian sequence (Moors et al., 2014). In fact, some features of multidimensional targets, including color (Hong & Blake, 2009) and flicker (Zadbood et al., 2011), may resist CFS suppression even when form information defining the appearance of those targets is erased from awareness. This kind of differential susceptibility of stimulus qualities during CFS, dubbed ‘fractionation’ (Moors et al., 2017; Zadbood et al., 2011), complicates interpretation of results from CFS studies putatively assessing unconscious visual processing.
As an aside, one strategy used to induce more potent CFS suppression is to reduce target contrast to ensure greater robustness of target invisibility. But without appropriate control conditions (Blake et al., 2006), this manipulation may overly degrade the target signal and exaggerate the apparent strength of suppression, to an extent that null effects are inevitable (cf. Sterzer et al., 2014). Finally, even when effective CFS is induced initially, its strength tends to wane over successive trials and sessions (Blake et al., 2019; Kim et al., 2017; Ludwig et al., 2013), limiting the amount of testing that can be conducted for each participant.

These challenges in optimizing suppression effectiveness complicate existing concerns about how one defines “unawareness” when using CFS (Moors, 2019; Moors et al., 2016; Moors & Hesselmann, 2018), and whether or not observed “unconscious” effects can be attributed to low-level or high-level stimulus properties (Moors et al., 2017). Work aimed at elucidating the underlying mechanisms of interocular suppression reports distributed processes along early and late stages of visual processing (Nguyen et al., 2003; Sterzer et al., 2014), including those underlying BR (Tong et al., 2006) and CFS (Han & Alais, 2018; Yang & Blake, 2012; Yuval-Greenberg & Heeger, 2013). These distributed processes may contribute to involvement of both general (e.g., high-level) and feature-selective (i.e., low-level) components of interocular suppression (Han & Alais, 2018; Stuit et al., 2009). Such distributed involvement, in turn, may suppress certain stimulus features or components to a lesser extent than others, potentially driving the conclusions of unconscious visual processing and stimulus preservation in CFS. In other words, these differences may affect the residual extent of unconscious visual processing (Sklar et al., 2012) and the type of stimulus properties that survive suppression, which may explain why some CFS findings, such as dorsal stream preservation (Almeida et al., 2008, 2010; Fang & He, 2005), are not supported by some behavioral (Rothkirch & Hesselmann, 2018) and neuroimaging studies (Hesselmann & Malach, 2011). Differential interference along hierarchically organized processing streams may also explain why certain stimuli or stimulus properties are difficult to suppress, e.g., fearful facial expressions (Hedger et al., 2015) and temporal content (Zadbood et al., 2011), and why high-level priming effects can occur in certain CFS regimes (Gelbard-Sagiv et al., 2016).

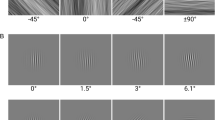

From the above considerations emerges a clear lesson: our understanding of the consequences of unconscious processing during CFS would benefit from a standardized procedure for the assessment and statistical analysis of awareness (Rothkirch & Hesselmann, 2017), and a more principled, standardized design of stimulus content and mode of presentation for effective CFS suppression. With regard to the latter, some key spatiotemporal parameters have been shown to affect CFS effectiveness (Han & Alais, 2018; Moors et al., 2014; Yang & Blake, 2012; see also review by Pournaghdali & Schwartz, 2020) and interocular suppression (Alais & Parker, 2012; Fahle, 1982; O’Shea et al., 1997; Stuit et al., 2009; Yang & Blake, 2012); these are summarized briefly here. In sum, processes engaged by the target need to be given due consideration when making decisions on the mask properties. Within the spatial domain, more effective suppression is obtained when the mask has a similar spatial frequency (Yang & Blake, 2012), chromatic (Hong & Blake, 2009) and orientation (Han & Alais, 2018) content to the target. The presence of sharp edges in discretely updated CFS images potentiates masking effectiveness (Baker & Graf, 2009; Han et al., 2018; Han et al., 2021), and more structurally complex targets are better suppressed by masks with higher image entropy, e.g., using pattern elements with textured, pink noise fill (Han et al., 2021). Smaller mask sizes are recommended when narrow orientation bandwidths are used (Han et al., 2019), because, as in BR (Blake et al., 1992), the increased collinearity is likely to produce more perceptual mixtures with larger mask sizes. Temporally, the mask should have a similar temporal frequency to temporally modulating targets (Han et al., 2018; Han & Alais, 2018), or a lower frequency when paired with static targets (Han et al., 2016).

Manipulating and matching target-mask spatiotemporal properties allows us to use a higher target contrast while still achieving robust suppression. However, fine control of these properties requires an involved knowledge of image processing, spectral analysis, and spectral filtering. Currently, there are available resources for generating Mondrian noise patterns (Hebart, n.d.) and for building CFS experiments (Nuutinen et al., 2018). Critically lacking, however, are the means for systematically creating CFS test and masking stimuli tailored to selectively engage neural mechanisms with specific spatiotemporal properties. To promote standardization of this crucial ingredient of CFS studies, we introduce in this paper CFS-crafter, an open-source app that allows novice users and experts to analyze and manipulate the spatiotemporal content of CFS stimuli with a rudimentary knowledge of spectral analysis and spectral filtering. In disseminating this app, we envision that its use will facilitate more effective stimulus choices, better matching of targets and masks in practice, and a means to quantify CFS stimuli and thus clarify the implications of suppression of those stimuli for unconscious visual processing.

Description of the CFS-crafter

The image processing toolbox in MATLAB provides a means to obtain fine control over the spatiotemporal attributes of any image sequence. However, usage of the toolbox requires detailed knowledge of image processing techniques such as filtering procedures and frequency domain operations, which may present an obstacle to novice users and experts interested in precisely controlling or standardizing CFS stimuli (for example, to match the spatial or temporal content of target and mask). The CFS-crafter app simplifies this challenge. Designed to be as streamlined as possible, the app is a point-and-click interface that incorporates spectral and non-spectral image processing strategies. Not to be confused with a tool for presenting CFS stimuli or running CFS experiments, it can be used to generate animated CFS masks, to customize their properties and to conduct analyses on targets (i.e., monocularly viewed test stimuli to be suppressed) and CFS masks (animations viewed by the other eye). Although effective usage of the app requires a rudimentary understanding of spectral analysis and spectral filtering, help files are provided in the app to support novice users and experts to achieve stimulus design and analysis that allow them to tailor stimuli for their specific experimental question.

The open-source app can be downloaded from GitHub (https://github.com/guandongwang/cfs_crafter), available as a MATLAB application (file named “cfs_MATLAB.mlappinstall”) used with later versions of MATLAB (e.g., R2020a) or as a standalone application (file named “CFS-crafter Windows Installer.exe” for PC users and “CFS-crafter Mac Installer.app” for Mac users). The process of installation is straightforward for both versions of the CFS-crafter. For the MATLAB application, an installation prompt is provided within MATLAB when the installation file is loaded (Fig. 2A, left panel). The installed program is then loaded onto MATLAB’s Apps menu, providing easy access to the app’s functionalities. In the standalone version, users will be prompted to install MATLAB Runtime (Fig. 2A, left panel), a freely available library that allows users to operate compiled MATLAB applications without a license. When the installation process for MATLAB Runtime is complete, the standalone version will be in a CFS_Crafter folder under Applications on a Macintosh operating system (or Mac OS) and under the Program Files folder on a Windows operating system.

Setting up the CFS-crafter. A Once the application file is loaded, the user can install the CFS-crafter onto MATLAB’s App tab. The standalone version prompts the installation of MATLAB runtime, which allows the CFS-crafter to be used as a regular program, without a MATLAB license. As a standalone program, the CFS-crafter appears in the Applications directory on a Mac OS. B After the users have entered the display screen information in the homepage, they can choose to create a mask sequence (Creation), construct a sequence from a pre-selected set of images (Conversion), customize their existing sequence (Modification) and/or analyze their CFS stimuli set for spatial, temporal or orientation content. Information about these modules is also provided in the help button

Figure 2B describes the homepage of the CFS-crafter. Here, the user is given the option to specify the properties of the testing display (e.g., screen resolution and refresh rate), the properties of the CFS mask (e.g., duration and size) and the viewing distance from observer to testing display. Although the CFS-crafter can extract and prefill some of the current display properties (e.g., resolution), the user should review these parameters before performing any tasks with the app, especially if the experiment is being conducted with a different display from that used with the CFS-crafter. The homepage allows the user to indicate the type of task required and, depending on the selection submitted, a different interface will be initiated for mask generation, stimulus conversion, customization, and stimulus analyses. To demonstrate the use of these functionalities, we now walk through three ways an experimenter can match or evaluate the spatiotemporal content of targets and CFS masks. As several technical terms will be used in this paper, a glossary explaining each of these terms will be provided in Appendix 1.
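The link between these display parameters and the angular size of a mask follows from basic trigonometry. The sketch below is only an illustration written in plain Python, not code taken from the CFS-crafter; the function names, the physical screen width, and the example values are all assumptions introduced here.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (in degrees) subtended by an extent of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

def mask_size_deg(mask_px: int, screen_px: int, screen_cm: float,
                  distance_cm: float) -> float:
    """Angular width of a mask spanning mask_px pixels on a display that is
    screen_px pixels and screen_cm centimetres wide."""
    size_cm = mask_px * screen_cm / screen_px  # pixels -> centimetres
    return visual_angle_deg(size_cm, distance_cm)

# Hypothetical setup: 1920-px-wide display, 53 cm wide, viewed from 57 cm.
angle = mask_size_deg(mask_px=256, screen_px=1920, screen_cm=53.0, distance_cm=57.0)
print(f"A 256-px mask subtends about {angle:.2f} deg of visual angle")
```

Because the result depends on both the pixel density of the display and the viewing distance, the same image file can subtend quite different angles on different setups, which is why these values should be reviewed whenever the testing display changes.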

The temporal content of the dynamic sequence

Suppose an experimenter needs to suppress a static, chromatic target from visual awareness. To match the processes engaged by the chromatic target, which are likely to be more parvocellular in nature (Derrington & Lennie, 1984; Shapley et al., 1981), the experimenter could use a mask with low temporal frequency content. A common and straightforward method of generating a low temporal frequency mask is to vary the number of pattern updates in each second (Tsuchiya & Koch, 2005; Zhan et al., 2019; Zhu et al., 2016). This captures the number of changes in the mask’s spatial pattern per second, and is usually quoted in units of hertz, but it is not equivalent to temporal frequency. Pattern update rate is a coarse temporal metric that usually overstates the true temporal frequency value because it ignores the variation in luminance over time. To illustrate this point, Fig. 3A tracks the real-time luminance changes of a single pixel in a greyscale, dynamic Mondrian sequence with a pattern update rate of 10 Hz. Instead of modulating at a rate of 10 Hz, the pixel’s luminance varies randomly with each new pattern configuration, occasionally trending in the same direction for several frames (low frequency change) or reversing direction from frame to frame (high frequency), or even having a constant value between one pattern and the next. The temporal frequency spectrum is therefore complex and variable. Moreover, it varies with the number of grey levels used in the Mondrian pattern. Including more grey levels increases the proportion of smaller luminance changes, resulting in lower modulation rates (Fig. 3B). Decreasing the number of grey levels, on the other hand, increases the proportion of luminance reversals between frames and increases the effective modulation rate.
A particular pattern update rate, when quoted in pattern changes per second, is therefore not necessarily comparable across studies and may explain the inconsistency in reported ‘optimal’ update rates for CFS, e.g., 3–12 Hz (Tsuchiya & Koch, 2005), 6 Hz (Zhu et al., 2016) or 4, 6, and 8 Hz (Zhan et al., 2019). These values also likely overestimate the optimal luminance modulation. Indeed, using CFS animations composed of pink noise images (patterns with spatial frequency spectra more closely approximating spectra comprising natural images), peak CFS strength occurs around 1 Hz (Han et al., 2016). Understanding the underlying luminance modulation rate of CFS animations is important for inferring likely neural mechanisms, because high and low modulation frequencies more strongly activate magnocellular and parvocellular neurons, respectively (Skottun & Skoyles, 2008).
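The distinction between pattern update rate and temporal frequency can be illustrated with a small simulation. The sketch below is a hedged illustration in plain Python, not the app's own analysis: it assumes a pixel timeline sampled once per pattern update, uses a naive discrete Fourier transform instead of an optimized FFT, and all names and parameter values are hypothetical.

```python
import cmath
import random

def amplitude_spectrum(timeline, update_rate_hz):
    """Return (frequencies in Hz, amplitudes) for a pixel timeline that holds
    one luminance value per pattern update. The mean (DC) term is removed."""
    n = len(timeline)
    mean = sum(timeline) / n
    freqs, amps = [], []
    for k in range(1, n // 2 + 1):  # from the lowest bin up to the Nyquist bin
        coeff = sum((v - mean) * cmath.exp(-2j * cmath.pi * k * t / n)
                    for t, v in enumerate(timeline))
        freqs.append(k * update_rate_hz / n)
        amps.append(abs(coeff) / n)
    return freqs, amps

UPDATE_RATE_HZ = 10  # pattern updates per second
N_UPDATES = 40       # 4 s of updates

# A pixel that reverses between black and white at every single update.
alternating = [float(t % 2) for t in range(N_UPDATES)]

# A pixel that takes a random grey level at every update (Mondrian-like).
rng = random.Random(0)
mondrian_like = [rng.choice([0.0, 0.2, 0.4, 0.6, 0.8, 1.0]) for _ in range(N_UPDATES)]

for name, timeline in [("alternating", alternating), ("random", mondrian_like)]:
    freqs, amps = amplitude_spectrum(timeline, UPDATE_RATE_HZ)
    power = [a * a for a in amps]
    share = power[-1] / sum(power)
    print(f"{name}: highest frequency {freqs[-1]} Hz, "
          f"share of power at that frequency {share:.2f}")
```

In this sketch the highest frequency available at a 10-Hz update rate is 5 Hz, and only the strictly alternating pixel concentrates its power there; the randomly updated pixel spreads its power across lower frequencies as well.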

The difference between pattern update rate and temporal frequency. A Pixel luminance timeline. A pattern that updates every 100 ms would achieve a maximum temporal frequency of 5 Hz if, and only if, there were a luminance reversal every update. This rarely occurs, however, as consecutive luminance values will sometimes trend in the same direction, producing a complex, unpredictable step-function in luminance that creates a waveform modulating at a lower temporal frequency (right panel). The pixel outlined in red in successive animation Patterns 1 and 2 transitions from very dark to very light, as indicated by the first two bars in the histogram in the left-hand part of Panel A, but as shown by the luminance variations in that pixel over the next eight changes, the magnitude and polarity of luminance change is unpredictable within this 1-s period. B The temporal profile of pixel timelines varies with the number of grey levels used to generate the Mondrian sequence. Decreasing the number of grey levels increases the proportion of larger luminance changes between pattern updates (right panel). This broadens the temporal frequency spectrum and raises the likelihood of a maximum 5-Hz frequency modulation being achieved (although it remains a low probability). In contrast, increasing the number of grey levels increases the likelihood of slower, trending changes in luminance. This biases the temporal frequency spectrum to low frequencies and makes the likelihood of 5-Hz modulation exceedingly rare. C To accurately represent the color and temporal information of a mask sequence, all sequences processed by the CFS-crafter must be in a 4D matrix. The FFT is used in spectral analyses, where the spatial frequency of each image is represented by the x and y-axes, and the temporal frequency of each pixel timeline is represented by the z-axis.

One goal of the CFS-crafter is to allow users to quantify and manipulate spatial luminance modulations and, thereby, create effective, well-defined stimuli that, in turn, will yield data that allow more definitive conclusions about the mechanisms promoting CFS masking. To serve this purpose, the CFS-crafter uses spectral filtering and spectral analytic techniques. Based on Fourier’s theorem that any complex time series can be decomposed into a sum of sinusoids (Brigham & Morrow, 1967), the periodic luminance content of a pixel in a CFS animation can be extracted with the fast Fourier transform (FFT). This transforms the time series (i.e., the animation sequence) into a frequency representation, and averaging the FFTs of a selection of pixels provides a good estimate of the animation sequence’s temporal frequency content. This temporal frequency spectrum can be analyzed or manipulated with temporal filters (i.e., to boost or to attenuate certain frequencies) and then back-transformed with an inverse Fourier transform to a time series in which the animation has a known temporal frequency content. The CFS-crafter represents mask sequences as four-dimensional (4D) matrices in MATLAB’s .mat file format; these matrices represent the spatial (x, y), temporal (z) and color content (red, blue, and green layers) of the sequences. Spectral content is extracted from the sequence using a 3D FFT, where temporal information is represented by the z-axis and the spatial information of each image in the mask sequence is represented by the x and y-axes (Fig. 3C).
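The transform, filter, and back-transform cycle described above can be sketched for the temporal dimension alone. The following is a minimal illustration in plain Python and makes several assumptions that are not taken from the app: a greyscale sequence indexed as `frames[t][y][x]` rather than the 4D matrix, a naive discrete Fourier transform in place of an optimized FFT, an ideal (hard-edged) low-pass filter, and hypothetical function names and parameter values.

```python
import cmath
import random

def dft(x):
    """Naive forward discrete Fourier transform of a sequence."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spectrum):
    """Naive inverse transform; returns the real part of each sample."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n)
                for k in range(n)).real / n
            for t in range(n)]

def lowpass_timeline(timeline, frame_rate_hz, cutoff_hz):
    """Ideal temporal low-pass: zero every bin above cutoff_hz, then invert."""
    n = len(timeline)
    spectrum = dft(timeline)
    for k in range(n):
        # Bins k and n - k hold the positive and negative copies of one frequency.
        freq = min(k, n - k) * frame_rate_hz / n
        if freq > cutoff_hz:
            spectrum[k] = 0
    return idft(spectrum)

def lowpass_sequence(frames, frame_rate_hz, cutoff_hz):
    """Filter every pixel timeline of a sequence indexed as frames[t][y][x]."""
    n_t, n_y, n_x = len(frames), len(frames[0]), len(frames[0][0])
    out = [[[0.0] * n_x for _ in range(n_y)] for _ in range(n_t)]
    for y in range(n_y):
        for x in range(n_x):
            timeline = [frames[t][y][x] for t in range(n_t)]
            for t, value in enumerate(lowpass_timeline(timeline, frame_rate_hz, cutoff_hz)):
                out[t][y][x] = value
    return out

# Hypothetical example: 1 s of 4 x 4 white noise at 60 frames per second,
# low-pass filtered at 2 Hz.
random.seed(1)
frames = [[[random.random() for _ in range(4)] for _ in range(4)] for _ in range(60)]
smooth = lowpass_sequence(frames, frame_rate_hz=60, cutoff_hz=2)
```

The filtered values are not guaranteed to stay within the original luminance range, so a practical pipeline would rescale or clip the result before display.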

There are two ways to create a temporally low-pass CFS animation with the CFS-crafter. In the first approach (illustrated in Fig. 4), one starts with a ready-made dynamic sequence that the user wishes to customize into a temporally low-pass sequence. This sequence can be provided as a 4D matrix (in the same format as the sequences generated by the CFS-crafter) in the Modification interface (Fig. 4A), accessed by selecting the Start button on the homepage. Here, the user specifies the location of the 4D matrix (choose mask directory) and selects the mask property to be manipulated. Modification options include spatial frequency and temporal frequency of the CFS sequence, as well as orientation and the phase of the spatial patterns (e.g., scrambling phase to remove edges and form). The user can also provide a set of pre-selected pattern images, but this will require constructing the 4D matrix with the CFS-crafter in the Conversion interface (Fig. 4B), before performing any modification procedures. In this interface, the user can specify the image input directory and desired mask parameters such as pattern update rate and RMS contrast (defined as the average standard deviation of pixel luminance across the whole sequence). Note that the CFS-crafter scales all pixel luminance values within a range of 0 to 1, and clipping would occur for sequences with larger RMS contrasts (e.g., > 30 %). The user is advised to evaluate the resulting images in the preview page (Fig. 4C), which will load when the conversion process is completed. The CFS-crafter also resizes the input images according to the desired width and height, allowing the user to submit images of any size. However, to avoid unwanted changes in image aspect ratio, we recommend reviewing and cropping input images where necessary. In the preview page, the user is allowed to review individual frames of the mask sequence and to obtain basic mask information.
Once satisfied with the converted mask sequence, the experimenter can save the sequence as a .mat file, close the conversion interface, and proceed to the Modification interface.
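The interaction between RMS contrast and the 0 to 1 luminance range can be made concrete with a short sketch. It is written in plain Python under stated assumptions: RMS contrast is read here as the mean, over frames, of each frame's standard deviation of pixel luminance; frames are rescaled about a mean luminance of 0.5; and the function names and noise parameters are hypothetical rather than taken from the CFS-crafter.

```python
import random
import statistics

def rms_contrast(frames):
    """Mean over frames of the per-frame standard deviation of pixel
    luminance (luminance on a 0-1 scale, each frame a flat list)."""
    return statistics.fmean([statistics.pstdev(frame) for frame in frames])

def set_rms_contrast(frames, target, mean_lum=0.5):
    """Rescale each frame about mean_lum to reach the target RMS contrast,
    then clip to [0, 1]. Returns (new_frames, fraction_of_pixels_clipped)."""
    gain = target / rms_contrast(frames)
    clipped = total = 0
    out = []
    for frame in frames:
        mu = statistics.fmean(frame)
        new_frame = []
        for value in frame:
            scaled = mean_lum + gain * (value - mu)
            if scaled < 0.0 or scaled > 1.0:
                clipped += 1
            new_frame.append(min(1.0, max(0.0, scaled)))
            total += 1
        out.append(new_frame)
    return out, clipped / total

# Hypothetical input: ten frames of Gaussian luminance noise.
rng = random.Random(0)
frames = [[rng.gauss(0.5, 0.1) for _ in range(1024)] for _ in range(10)]

for target in (0.15, 0.35):
    _, fraction = set_rms_contrast(frames, target)
    print(f"target RMS contrast {target:.2f}: {fraction:.1%} of pixels clipped")
```

Once clipping occurs, the contrast actually achieved falls below the requested value, which is one reason to inspect the preview after conversion.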

Modifying existing image sequences with the CFS-crafter. A The Modification interface provides four types of spatiotemporal manipulations. In this example, the user is opting to conduct a low-pass temporal filter on a pre-saved 4D matrix representing the mask sequence. Filter options include log-Gaussian, Butterworth, Gaussian, or ideal filter. B Users can generate a 4D matrix using the Conversion interface. To do so, add a pre-selected set of images, enter the desired parameters such as the mask update rate and initiate the conversion process with the Start button. C A preview page will be loaded after every major operation (e.g., completion of customization or conversion process). The page contains details about the generated sequence (e.g., angular size), and the user can screen the individual image frames in the sequence. Depending on the type of operation performed, the user can choose to save the sequence as a .mat file, image files or as an mp4 video clip. As the current example previews the results of converting image files into a CFS sequence, the option for exporting image files is disabled. D To demonstrate the effect of a Gaussian filter and an ideal filter, we compare a low-pass, Gaussian spatial filter (𝜎 = 3) with a low-pass, ideal spatial filter. Although both filters have a cut-off frequency at 5 cycles per degree (cpd), the ideal filter has a sharper transition between filtered and unfiltered frequencies. This sharper transition comes with a cost: compared to the Gaussian filter, images processed by an ideal filter have a higher probability of ringing artefacts (left panel). Ideal filters will produce no artefacts with noise images as those images contain no structured phase information (i.e., they are phase incoherent). In the temporal domain, large changes in pixel luminance (e.g., black to white) may occur between patterns. When processed with an ideal filter, spurious temporal modulations in the form of ringing artefacts will occur.

The following steps will generate a temporally low-pass mask sequence using the Modification interface. Users first select the Temporal option, after which they can opt to extract a range of temporal frequencies (bandpass), high frequencies (high-pass), or in this example, low frequencies (low-pass). These operations can be performed using either an ideal filter that fully preserves the amplitudes of selected frequencies (passband) and excludes out-of-range content (van Drongelen, 2018), or smooth-edged filters such as the Gaussian or Butterworth filter (see examples in Fig. 4D). In practice, smooth-edged filters are recommended for all but noise images as they reduce the probability of ringing artefacts that tend to occur with ideal filters, although they do blur the demarcation between filtered and unfiltered frequencies, as these filters are gradually ramped on and off over a defined range of frequencies. The user is therefore advised to determine the choice of filter type according to the needs of the experiment. To help facilitate this decision, information is provided for each type of property manipulation (i.e., spatial, temporal) and these details are accessible through the help button in the Modification interface. After entering the desired parameter selections, the user can initiate the customization process by selecting the Start button. A preview page will load once the customization process is complete, and the user can review the individual images of the mask sequence by manually entering specific frame numbers into a dialog box, using the slider or by selecting the Play button. Basic mask information, such as the image’s angular size (expressed in units of degrees of visual angle subtended at the retina) and its duration, is also provided in the preview page. The user can choose to save the temporally low-pass filtered mask sequence as a .mat file or as an mp4 video clip (Fig. 4C).
To represent the modified temporal information accurately, every frame in the sequence needs to be exported. Image formats such as .jpeg are therefore disabled for temporally filtered sequences to avoid performance issues, but they remain available for spatially manipulated sequences.
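The trade-off between ideal and smooth-edged filters can be illustrated by comparing their gain profiles. The following is a minimal Python sketch, not the CFS-crafter's own MATLAB code: the function names, the 5-Hz cut-off, the Butterworth order, and the convention of scaling the Gaussian so that its gain is 0.5 at the cut-off are all assumptions made for illustration.

```python
import math

def ideal_lowpass(freq, cutoff):
    """Ideal filter: unit gain up to the cut-off, zero gain above it."""
    return 1.0 if freq <= cutoff else 0.0

def gaussian_lowpass(freq, cutoff):
    """Gaussian roll-off, scaled so the gain is 0.5 at the cut-off (assumed convention)."""
    sigma = cutoff / math.sqrt(2.0 * math.log(2.0))
    return math.exp(-(freq ** 2) / (2.0 * sigma ** 2))

def butterworth_lowpass(freq, cutoff, order=2):
    """Butterworth amplitude response; the gain is 1/sqrt(2) at the cut-off."""
    return 1.0 / math.sqrt(1.0 + (freq / cutoff) ** (2 * order))

cutoff_hz = 5.0  # hypothetical temporal cut-off, chosen only for this example
print("freq   ideal  gaussian  butterworth")
for freq in [0.0, 2.5, 4.9, 5.0, 5.1, 7.5, 10.0]:
    print(f"{freq:4.1f}   {ideal_lowpass(freq, cutoff_hz):5.2f}  "
          f"{gaussian_lowpass(freq, cutoff_hz):8.3f}  "
          f"{butterworth_lowpass(freq, cutoff_hz):11.3f}")
```

The ideal filter jumps from full gain to zero across the cut-off, whereas the two smooth-edged filters attenuate frequencies gradually on either side of it. This gradual ramp is what blurs the boundary between filtered and unfiltered frequencies while reducing the likelihood of ringing.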

In the second approach, the user does not have ready-made sequences or pre-selected pattern images (see Fig. 5). Mask creation is a supported functionality in the CFS-crafter, and it can be accessed by selecting the Start button from the homepage. This selection will load the mask generation interface (Fig. 5A), where the user is provided with several mask options, including patterns composed of geometric shapes, spatial noise images (e.g., white or pink noise) and patterns composed of natural object elements. The creation of the former two types of pattern sequences is straightforward; select the desired mask type, pattern characteristics, mask parameters (e.g., mask update rate) and click the Start button. In general, patterned images are composed of randomly sized elements located randomly around the mask area (method from Hebart, n.d.). Each element is also set to one of five colors (i.e., black, red, green, blue, or yellow) or one of six different grey levels (i.e., 0, 20, 40, 60, 80 and 100% of the maximum luminance).
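The pattern-generation logic described above (randomly sized elements at random locations, each set to one of six grey levels) can be sketched as follows. This is an illustrative Python sketch rather than the CFS-crafter's implementation: the rectangular element shape, the size limits, the mid-grey background, and all function names are assumptions.

```python
import random

# Six grey levels from the text: 0, 20, 40, 60, 80 and 100% of maximum luminance.
GREY_LEVELS = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
BACKGROUND = 0.5  # assumed mid-grey background for pixels not covered by any element

def make_pattern(size, n_elements, min_side, max_side, rng):
    """Draw randomly sized rectangles at random positions; later ones occlude earlier ones."""
    frame = [[BACKGROUND] * size for _ in range(size)]
    for _ in range(n_elements):
        width = rng.randint(min_side, max_side)
        height = rng.randint(min_side, max_side)
        x0 = rng.randint(-width + 1, size - 1)   # elements may be clipped by the border
        y0 = rng.randint(-height + 1, size - 1)
        level = rng.choice(GREY_LEVELS)
        for y in range(max(0, y0), min(size, y0 + height)):
            for x in range(max(0, x0), min(size, x0 + width)):
                frame[y][x] = level
    return frame

def make_sequence(n_patterns, size=64, n_elements=200, min_side=4, max_side=16, seed=0):
    """Generate a list of independent patterns, one per mask update."""
    rng = random.Random(seed)
    return [make_pattern(size, n_elements, min_side, max_side, rng)
            for _ in range(n_patterns)]

# Example: a 1-s mask updated at 10 Hz needs 10 distinct patterns.
sequence = make_sequence(n_patterns=10, size=32)
print(len(sequence), "patterns of", len(sequence[0]), "x", len(sequence[0][0]), "pixels")
```

Seeding the random number generator makes a given sequence reproducible, which is convenient when the same mask needs to be regenerated for a later analysis.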

Creating new masks with the CFS-crafter. A The Creation interface allows the synthesis of several types of masks according to desired mask parameters (e.g., mask duration). In this example, the user has opted to make a masking pattern comprising face elements. B A tracing interface will load when the user clicks on the Start Tracing button. Here, the user can trace up to five face images with an elliptical tracing method. Completed traces will appear as a row in this interface, and the user is free to delete any unwanted traces before submitting them with the Finish button. C The mask generation process is initiated when the user selects the Start button on the mask generation interface. Upon completion, a mask (leftmost panel) with varying face sizes, spatial locations, and contrast would be obtained. To illustrate the variety offered by the CFS-crafter, other forms of possible mask types are shown, and these include colored geometric patterns and spatially pink noise

Unlike geometric shapes, there is no simple mathematical equation that allows the generation of natural objects in the CFS-crafter. Therefore, an extra step of object extraction is required to generate patterns with object elements. Using MATLAB’s assisted tracing methods, face elements are extracted with an elliptical trace and other forms of objects are extracted using freehand tracing. A user wishing to create a dynamic pattern sequence composed of facial elements (akin to Han et al., 2021) would select Traced Item > Face > Start Tracing to load the tracing interface (Fig. 5B), which permits up to five image traces. To trace a face image (.jpg, .jpeg, .png or .tiff formats), the user must specify the input directory under Choose source image and select the Start Tracing button. The face image would be presented as an interactive object, and the user is now able to position an elliptical mask over the desired portion of the face image. Completed traces are submitted by double-clicking on the interactive object, and the user is allowed to delete and replace these extracted objects. Traces that have yet to be submitted can be deleted by Right Click > Delete Ellipse or by pressing the Esc button on the keyboard. When ready to generate the face element mask, the user selects the Finish button which closes the tracing interface. The user is now free to initiate mask generation with the Start button on the mask generation page. As before, a preview page would be loaded when the mask generation process is completed, and the user would need to save the mask sequence as a .mat file before proceeding with temporal manipulation in the Modification interface.
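To make the elliptical extraction step concrete, the sketch below shows how an elliptical region can be cut out of an image once its centre and radii are known. It is written in Python for illustration and does not reproduce MATLAB's interactive tracing tools; the function names, the greyscale list-of-lists image format, and the fill value are assumptions.

```python
def elliptical_mask(height, width, cx, cy, rx, ry):
    """Boolean mask that is True for pixels inside the ellipse centred on (cx, cy)."""
    return [[((x - cx) / rx) ** 2 + ((y - cy) / ry) ** 2 <= 1.0
             for x in range(width)]
            for y in range(height)]

def extract_element(image, mask, fill=None):
    """Keep pixels inside the mask and replace everything else with `fill`."""
    return [[pixel if inside else fill
             for pixel, inside in zip(image_row, mask_row)]
            for image_row, mask_row in zip(image, mask)]

# Toy 9 x 7 greyscale "image" whose pixel values encode their position.
image = [[10 * y + x for x in range(7)] for y in range(9)]
mask = elliptical_mask(height=9, width=7, cx=3, cy=4, rx=2.5, ry=3.5)
element = extract_element(image, mask)

for row in element:
    print(" ".join("  ." if value is None else f"{value:3d}" for value in row))
```

Pixels outside the ellipse are marked as empty so that the extracted element can later be resized and pasted at random locations without carrying its original background along.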

The spatial content of the dynamic sequence

The spatial qualities of a Mondrian sequence can be quantified with its spatial frequency content. Like temporal frequency, spatial patterns of pixel luminance across an image can be decomposed into sinusoidal components with an FFT. The spatial information of each image is represented by x and y dimensions in the 4D spectrum (Fig. 3C), which describe the distribution of phase structure of different spatial frequencies at different spatial orientations. As higher and lower spatial frequencies are typically linked to edges and solid areas, respectively, the spectral spatial content of mask images presents opportunities for fine-tuned spatial manipulations. This motivates the use of FFT techniques as the mainstay strategy for spatial manipulation in the CFS-crafter.

Following our example with the chromatic static target, one may also wish to enhance suppression effectiveness by matching the spatial qualities of the mask and target. Assuming a narrowband, high spatial frequency target, one can remove the low spatial frequency components of the Mondrian images to create a spatially high-pass mask. This is achieved by selecting the Spatial frequency component in the customization interface which, like the temporal frequency manipulation, will present different filter options. The user can now choose the type of filter (e.g., Gaussian, Butterworth) and the range of frequencies to preserve (e.g., high-pass or a band of higher spatial frequencies). Alternatively, the user may be interested in how mask pattern edges affect the suppression of a spatially broadband chromatic target. Here, the user would preserve the mask’s broadband spatial frequency content (to match the target’s spatially broadband content) and would manipulate the spatial coherence of the mask images using the Phase Scramble component of the customization interface. Under this selection, the user can specify a range of spatial frequencies for the randomization operation or opt to randomize the phase structure of all frequency components. If the user specifies a range of frequencies, the phase structure of frequencies outside the selected range is preserved, allowing the user to selectively manipulate the structural integrity of the image in different spatial frequency bands. In our current example, the user would choose a low-pass frequency range and specify the cut-off spatial frequency and the extent of phase scrambling, i.e., a scrambling index of 0 for no randomization and an index of 1 for maximum randomization (Fig. 6A, top panel). The resulting mask image (Fig. 6A, bottom left panel) would contain intact edges and a randomized spatial layout for the solid areas. Conversely, to preserve solid areas (Fig. 6A, bottom right panel), the user would choose a high-pass frequency range for the phase-scrambling process.
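The idea of band-limited phase scrambling can be sketched on a one-dimensional luminance profile. The Python example below is an illustration only: the CFS-crafter operates on two-dimensional images, and the way the scrambling index is applied here (scaling a random phase offset drawn from −π to π) is an assumption, as are the function names and the toy profile.

```python
import cmath
import math
import random

def dft(signal):
    """Naive discrete Fourier transform (adequate for short illustrative signals)."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft_real(spectrum):
    """Inverse DFT, returning the real part of each sample."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]

def phase_scramble_lowpass(signal, cutoff_index, scramble_index, rng):
    """Randomise the phase of frequency bins 1..cutoff_index; leave amplitudes untouched."""
    n = len(signal)
    spectrum = dft(signal)
    highest = min(cutoff_index, (n - 1) // 2)  # skip the DC and Nyquist bins
    for k in range(1, highest + 1):
        offset = scramble_index * rng.uniform(-math.pi, math.pi)  # assumed index scaling
        spectrum[k] *= cmath.exp(1j * offset)
        spectrum[n - k] = spectrum[k].conjugate()  # conjugate symmetry keeps the output real
    return idft_real(spectrum)

profile = [0.2, 0.2, 0.9, 0.9, 0.9, 0.4, 0.4, 0.1,
           0.1, 0.1, 0.7, 0.7, 0.3, 0.3, 0.3, 0.6]
scrambled = phase_scramble_lowpass(profile, cutoff_index=3, scramble_index=1.0,
                                   rng=random.Random(1))
print([round(value, 3) for value in scrambled])
```

Because only the phase of the selected bins is altered, the amplitude spectrum of the scrambled profile matches that of the original, and the phase structure above the cut-off is left intact. A scrambling index of 0 returns the original profile.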

Controlling spatiotemporal content with the CFS-crafter. A The CFS-crafter allows users to phase scramble specific ranges of spatial frequencies. In this example, the user chooses to phase scramble frequencies below 3 cpd (low-pass scrambling). Example images of low-pass and high-pass scrambling are illustrated in the bottom panel. B A user keen on evaluating the modification results can analyze the stimuli with the Analysis interface, which allows the user to input mask sequences (4D matrices in .mat file format) and single image files (e.g., .png). Users can input target and mask images/sequences into the interface and compare the properties of these stimuli. Users are also free to edit their selections, choose the appropriate analysis and save the results as a .mat file. For example, a user interested in edge density selects the Canny method and leaves the threshold entry blank. In response, MATLAB would use the default threshold for the Canny method. C To allow easy visual comparison, results for each type of stimulus are color coded and represented by a letter symbol. Analyses are also automatically matched to the content of the stimulus. For example, despite selecting spatial and temporal frequency analyses in the Analysis interface (B), the CFS-crafter only outputs temporal frequency results for Stimulus B, a greyscale mask sequence. D Users can evaluate the performance of their chosen edge detection method by selecting Preview Edges in the Analysis interface. Here, they can review a sample frame of each mask sequence or individual image. The edge detection parameters and computed edge density values for each type of stimulus are provided in this review page

Our descriptions thus far have presented temporal and spatial manipulations separately, but it is possible to simultaneously customize multiple spatiotemporal properties with the CFS-crafter. This capability does come with some practical limitations built into the program. For example, the option for phase scrambling will be disabled if the user has chosen to conduct orientation filtering, as orientation depends on phase coherence over frequency. In contrast, a user who opts to filter the spatial frequency components of a Mondrian sequence without altering the phase structure would still be able to conduct orientation filtering. Similarly, a user interested in conducting both spatial and temporal filtering would be able to do so by selecting the Spatial frequency and Temporal components. As spatial and temporal content are independent parameters, choices in the spatial domain will not affect or disable options within the temporal domain. Users are advised to review the information provided by the help button before manipulating multiple parameters.

The spatiotemporal similarity between target and mask

We have shown how the CFS-crafter can be used to generate compatible spatiotemporal properties between the target and mask. In general, masks (i.e., the CFS animation viewed by one eye) and targets (i.e., the weaker stimulus viewed by the other eye) sharing similar spatiotemporal properties produce more effective CFS suppression, e.g., longer suppression periods, larger target contrast thresholds (Han & Alais, 2018; Moors et al., 2014; Yang & Blake, 2012). However, there is also evidence suggesting that the similarity between target and mask may have little effect on some stimulus types, e.g., movement (Ananyev et al., 2017). The reasons for this discrepancy are unclear, although it is possible that CFS suppression may involve both feature and non-feature selective processes. Depending on the type of stimulus and task requirements, either process may have a larger or smaller effect on CFS task performance. Therefore, it is useful to quantify the spatiotemporal properties of CFS mask and target stimuli, as this would not only indicate the amount of target/mask overlap and potential low-level explanations for CFS results, but also provide information on how CFS suppression works with different types of stimuli.

Returning to our chromatic target example, suppose the user has collected data with two types of chromatic masks and obtained different patterns of results. In addition to comparing participant characteristics and data collection procedures between the two datasets, the user could also evaluate the spatiotemporal properties of the two mask sequences and their respective compatibility with the target. The Analysis interface (Fig. 6B) can be launched by selecting the Analysis button on the homepage. Here, the user can add the directories of the mask and target stimuli, and the user is free to edit their choices or refresh the list by clicking on the Reset button. Note that while mask sequences are required to be in a 4D matrix, target images can be loaded as .jpg, .png or .tiff files. Three main analysis categories are provided in the interface, namely, Basic Information, Spatial/Temporal Frequency and Color. Properties grouped under Basic Information provide a summary description of the stimulus and are presented in table format in the analysis output (Fig. 6C). These statistics include RMS contrast, image entropy, and edge density. RMS contrast is the main image contrast measure in the CFS-crafter, as it does not depend on the spatial distributions of contrast in the image, unlike other indices of contrast such as Michelson contrast (Kukkonen et al., 1993). This independence is well suited for the more complex images typically used in CFS, such as Mondrian patterns or natural object images.
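The difference between the two contrast indices can be illustrated with a short Python sketch. It assumes one common definition of RMS contrast (the standard deviation of pixel luminance divided by the mean luminance), which may differ from the exact normalization used in the CFS-crafter; the function names and the two toy images are likewise illustrative.

```python
import math

def rms_contrast(pixels):
    """Standard deviation of luminance divided by mean luminance (one common definition)."""
    mean = sum(pixels) / len(pixels)
    variance = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    return math.sqrt(variance) / mean

def michelson_contrast(pixels):
    """(Lmax - Lmin) / (Lmax + Lmin): depends only on the two extreme luminance values."""
    lowest, highest = min(pixels), max(pixels)
    return (highest - lowest) / (highest + lowest)

# Image 1: a grating that alternates between two luminance levels everywhere.
grating = [0.25, 0.75] * 50
# Image 2: a uniform grey field containing a single dark and a single bright pixel.
sparse = [0.5] * 98 + [0.25, 0.75]

for name, pixels in [("grating", grating), ("sparse", sparse)]:
    print(f"{name:8s} Michelson = {michelson_contrast(pixels):.3f}  "
          f"RMS = {rms_contrast(pixels):.3f}")
```

Both images share the same luminance extremes and therefore the same Michelson contrast, yet the RMS values differ because RMS contrast takes every pixel into account. This is why a summary of the whole luminance distribution is better suited to complex images such as Mondrian patterns.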

The metrics of image entropy and edge density quantify image texture, and these measures have been linked to CFS suppression durations (Han et al., 2021). By definition, image entropy is the level of noise or randomness in an image’s pixel intensity distribution (Gonzalez et al., 2004). Entropy can distinguish images with uniform pattern elements from more textured images. For example, a white noise image will produce a higher entropy value than a square wave grating, as the latter has a more ordered arrangement of pixel intensity and only two grey levels (black and white). Edge density estimates the prevalence of edges in an image or image sequence. Although high spatial frequencies are often associated with edges in an image, edge density is a more direct measure that can capture the absence of edges in phase scrambled images. A major component of the edge density calculation is edge detection, which is typically achieved by considering the gradient changes in pixel intensity (Muthukrishnan & Radha, 2011). The CFS-crafter provides four different detection methods, of which the Canny edge detection method is default. This is because the Canny method uses two thresholds to detect strong and weak edges, and as a result, it is less susceptible to noise (Muthukrishnan & Radha, 2011). The Approximate Canny method is similar to Canny edge detection, except that it has faster execution times and lower edge detection accuracy. To aid the choice of detection methods, users are advised to review the information buttons before selecting a particular edge detection method. In addition, they can choose to evaluate the results of edge detection with the Preview Edges option (Fig. 6B), which loads a preview page and allows them to save these results as a .mat file (Fig. 6D).
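The entropy comparison between a white noise image and a square wave grating can be checked with a small Python sketch. It uses the Shannon entropy of the grey-level histogram, in bits; the image size, the grating period, and the function name are assumptions chosen for illustration, and the code is not taken from the CFS-crafter.

```python
import math
import random
from collections import Counter

def image_entropy(pixels):
    """Shannon entropy (in bits) of the distribution of pixel intensities."""
    counts = Counter(pixels)
    total = len(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

size = 64
rng = random.Random(0)

# White noise: every pixel drawn independently from 256 grey levels.
white_noise = [rng.randrange(256) for _ in range(size * size)]

# Square wave grating: vertical bars, 8 pixels wide, alternating black and white.
grating = [0 if (x // 8) % 2 == 0 else 255
           for y in range(size) for x in range(size)]

print(f"white noise entropy: {image_entropy(white_noise):.2f} bits")
print(f"grating entropy:     {image_entropy(grating):.2f} bits")
```

The grating contains only two equally frequent grey levels, so its entropy is 1 bit, whereas the noise image spreads its pixels over many grey levels and approaches the 8-bit maximum for 256 levels.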

The Spatial/Temporal Frequency analysis extracts the spatial frequency and temporal frequency amplitude spectra from the input stimuli. Both measures are computed over the entire mask area, because this provides spatial coverage for all possible target sizes and locations (e.g., smaller targets that are presented in randomized locations or targets with the same size as the mask images). Unlike the Modification interface, spatial frequency detail can be extracted either from 4D mask sequences generated by the CFS-crafter or from single image files, which allows the user to compare spatial frequency information between target and masks. (Note: all images are converted to greyscale before conducting any spatiotemporal analyses. This increases processing efficiency and does not drastically alter the spatiotemporal results.) As temporal frequency information can only be extracted from mask sequences, temporal analyses will not be conducted for single image files. As illustrated in Fig. 6C, each input stimulus is plotted as a separate line for each parameter, allowing easy comparison among the analyzed stimuli. Users who wish to save the graphical results would place their cursor over each graph and select the save icon (Fig. 6C). One can also save the spectrum data with the Save Results to File button, which produces a structure variable in the .mat file format. The spectrum data can be retrieved from this variable using MATLAB coding norms, e.g., analysis_results.frequency_results.spatial_frequency. Finally, basic color statistics can be computed using the Color option. Selecting this option will output the hue, saturation, and value (HSV) colormap as separate layers (i.e., third dimension) of a 3D matrix. Summary statistics for the stimulus’ HSV content are presented as separate tables (e.g., mean hue) and the distributions of HSV values are presented as separate plots (Fig. 6C). As with the other types of analyses, HSV results can also be saved to a .mat file for further use.
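The HSV decomposition can be illustrated with Python's standard colorsys module. This is a sketch under stated assumptions rather than the CFS-crafter's MATLAB routine: the image is represented as rows of (R, G, B) tuples scaled from 0 to 1, the function name is hypothetical, and the mean hue is computed as a plain arithmetic mean even though hue is a circular quantity.

```python
import colorsys
from statistics import mean

def hsv_layers(rgb_image):
    """Split an RGB image (rows of (r, g, b) tuples in 0..1) into H, S and V layers."""
    hue, saturation, value = [], [], []
    for row in rgb_image:
        converted = [colorsys.rgb_to_hsv(r, g, b) for r, g, b in row]
        hue.append([h for h, _, _ in converted])
        saturation.append([s for _, s, _ in converted])
        value.append([v for _, _, v in converted])
    return hue, saturation, value

def layer_mean(layer):
    """Mean over all pixels of one layer."""
    return mean(pixel for row in layer for pixel in row)

# Toy 2 x 2 image: red, green, blue and white pixels.
image = [[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
         [(0.0, 0.0, 1.0), (1.0, 1.0, 1.0)]]

hue, saturation, value = hsv_layers(image)
print("mean hue:       ", round(layer_mean(hue), 3))
print("mean saturation:", round(layer_mean(saturation), 3))
print("mean value:     ", round(layer_mean(value), 3))
```

Keeping the three layers separate mirrors the layered output described above and makes it straightforward to summarize or plot each property on its own.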

Continuing with the discussion of similarity between mask and target, imagine a scenario where a given chromatic mask sequence contains more low spatial frequency content than does a target that portrays semantic content (e.g., an angry face). The user now faces two possible explanations for the observed results: 1) the high-level, semantic information of the target, or 2) the low-level spatial frequency differences between the target and mask. To evaluate these possibilities, the user can customize their target stimuli to maximize target/mask spatial compatibility. This is performed in the Modification interface, where the target stimulus can be submitted as a single image in typical image file formats (e.g., .jpg, .jpeg, .png), or as a 4D matrix (in the same file and structure format as those created with the CFS-crafter) if the chromatic target is also temporally modulating. Like mask sequences, temporally modulating targets that are not in the appropriate format can be converted into a 4D matrix with the Conversion interface. The same practical limitations in customization apply to target stimuli. For example, phase scrambling is not permitted with orientation filtering in the Modification interface, and selecting temporal filtering on a single image file would prompt an error message.

Recommendations when using the CFS-crafter

Processing efficiency

The code supporting the capabilities of the CFS-crafter is programmed to be as streamlined as possible. Nevertheless, it is useful to discuss the performance limits of the application and how to use it more efficiently. Test runs on a Mac with an Intel Core i7 processor and an Iris Plus Graphics 655 graphics card required approximately 5 s to conduct a full analysis (i.e., all analysis options selected) on a sequence comprising 120 images (200 by 200 pixels each). A similar period is required to generate a face element mask sequence with the same dimensions. However, we expect processing times to increase with larger image sizes and longer durations, especially since 4D matrices are used to represent mask sequences. For example, designing a 3-min colored mask sequence for a 120-Hz screen would require a total of 21,600 RGB frames. This rate of increase is slower on more efficient systems, and users are advised to consider their system specifications when processing large image sequences with the CFS-crafter. If a more efficient system is not available, the user may consider conducting the image processing in chunks or looping a shorter mask sequence during the experiment.
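The frame count quoted above, and the storage it implies, can be worked through with a few lines of Python. The 200 by 200 pixel frame size is borrowed from the test runs described in this section, and the two storage types (1 byte per value for 8-bit integers, 8 bytes per value for double precision) are assumptions about how such a 4D matrix might be held in memory rather than a statement of what the CFS-crafter does internally.

```python
def frame_count(duration_s, refresh_hz):
    """Number of frames needed when every screen refresh shows its own frame."""
    return round(duration_s * refresh_hz)

def sequence_bytes(height, width, channels, n_frames, bytes_per_value):
    """Storage needed for a height x width x channels x frames matrix."""
    return height * width * channels * n_frames * bytes_per_value

frames = frame_count(duration_s=3 * 60, refresh_hz=120)
print(f"frames required: {frames:,}")

for label, bytes_per_value in [("8-bit integers", 1), ("double precision", 8)]:
    total = sequence_bytes(200, 200, 3, frames, bytes_per_value)
    print(f"{label:17s}: {total / 1e9:.2f} GB")
```

Even at this modest image size, a full-length sequence occupies several gigabytes, which is why processing in chunks or looping a shorter sequence can be a practical alternative.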

Conclusions and discussion

Continuous flash suppression (CFS) is a highly effective technique for erasing a normally visible target from visual awareness for several seconds at a time. Despite its purported effectiveness, the lack of standard procedures has presented challenges in data interpretation and effective CFS usage. To address these issues, we designed the CFS-crafter, an open-source MATLAB application that allows fine-control manipulation and analyses of CFS stimuli with no prior expertise in image processing. We hope that the increased accessibility to finer stimulus control and analyses will facilitate more effective usage of the technique among novice users and experts and promote more in-depth discussions about CFS mechanisms and findings. Lastly, the app and its source code are made available at GitHub, a publicly accessible, online platform (https://github.com/guandongwang/cfs_crafter), along with a discussion forum to encourage collaborative communication. GitHub users may even use the platform’s pull request feature to submit modifications or additional functionalities, which we will review and, if approved, incorporate into the CFS-crafter. We hope that the CFS-crafter will be useful to the research community, and that the open-source nature of the CFS-crafter will inspire additional resources for the effective use and study of CFS.

Notes

A possible solution is to assess eye dominance using the same target and mask stimuli in a pretest. Counterbalance the stimulus presentation between the eyes to learn which eye/mask combination is most effective. Alternatively, make the eye of origin a factor in the experimental design by testing both combinations of stimulus conditions and eyes in the main experiment.

References

Alais, D., & Blake, R. (Eds.). (2005). Binocular rivalry. MIT Press.

Alais, D., & Blake, R. (2015). Binocular rivalry and perceptual ambiguity. In J. Wagemans (Ed.), Oxford Handbook of Perceptual Organization. Oxford University Press.

Alais, D., & Parker, A. (2012). Binocular rivalry produced by temporal frequency differences. Frontiers in Human Neuroscience, 6. https://doi.org/10.3389/fnhum.2012.00227

Almeida, J., Mahon, B. Z., Nakayama, K., & Caramazza, A. (2008). Unconscious processing dissociates along categorical lines. Proceedings of the National Academy of Sciences, 105(39), 15214–15218. https://doi.org/10.1073/pnas.0805867105

Almeida, J., Mahon, B. Z., & Caramazza, A. (2010). The Role of the Dorsal Visual Processing Stream in Tool Identification. Psychological Science, 21(6), 772–778. https://doi.org/10.1177/0956797610371343

Ananyev, E., Penney, T. B., & Hsieh, P.-J. (2017). Separate requirements for detection and perceptual stability of motion in interocular suppression. Scientific Reports, 7(1), 7230. https://doi.org/10.1038/s41598-017-07805-5

Baker, D. H., & Graf, E. W. (2009). Natural images dominate in binocular rivalry. Proceedings of the National Academy of Sciences, 106(13), 5436–5441. https://doi.org/10.1073/pnas.0812860106

Blake, R., O’Shea, R. P., & Mueller, T. J. (1992). Spatial zones of binocular rivalry in central and peripheral vision. Visual Neuroscience, 8(5), 469–478. https://doi.org/10.1017/S0952523800004971

Blake, R., Tadin, D., Sobel, K. V., Raissian, T. A., & Chong, S. C. (2006). Strength of early visual adaptation depends on visual awareness. Proceedings of the National Academy of Sciences, 103(12), 4783–4788. https://doi.org/10.1073/pnas.0509634103

Blake, R., Brascamp, J., & Heeger, D. J. (2014). Can binocular rivalry reveal neural correlates of consciousness? Philosophical Transactions of the Royal Society B: Biological Sciences, 369(1641), 20130211. https://doi.org/10.1098/rstb.2013.0211

Blake, R., Goodman, R., Tomarken, A., & Kim, H.-W. (2019). Individual differences in continuous flash suppression: Potency and linkages to binocular rivalry dynamics. Vision Research, 160, 10–23. https://doi.org/10.1016/j.visres.2019.04.003

Breitmeyer, B. G. (2015). Psychophysical “blinding” methods reveal a functional hierarchy of unconscious visual processing. Consciousness and Cognition: An International Journal, 35, 234–250. https://doi.org/10.1016/j.concog.2015.01.012

Brigham, E. O., & Morrow, R. E. (1967). The fast Fourier transform. IEEE Spectrum, 4(12), 63–70. https://doi.org/10.1109/MSPEC.1967.5217220

Cha, O., Son, G., Chong, S. C., Tovar, D. A., & Blake, R. (2019). Novel procedure for generating continuous flash suppression: Seurat meets Mondrian. Journal of Vision, 19(14), 1. https://doi.org/10.1167/19.14.1

Derrington, A. M., & Lennie, P. (1984). Spatial and temporal contrast sensitivities of neurones in lateral geniculate nucleus of macaque. The Journal of Physiology, 357(1), 219–240. https://doi.org/10.1113/jphysiol.1984.sp015498

Ding, Y., Naber, M., Gayet, S., Van der Stigchel, S., & Paffen, C. L. E. (2018). Assessing the generalizability of eye dominance across binocular rivalry, onset rivalry, and continuous flash suppression. Journal of Vision, 18(6), 6. https://doi.org/10.1167/18.6.6

Dixon, N. F. (1971). Subliminal perception: The nature of a controversy. McGraw-Hill.

Eo, K., Cha, O., Chong, S. C., & Kang, M.-S. (2016). Less Is More: Semantic Information Survives Interocular Suppression When Attention Is Diverted. Journal of Neuroscience, 36(20), 5489–5497. https://doi.org/10.1523/JNEUROSCI.3018-15.2016

Erdelyi, M. H. (1974). A new look at the new look: Perceptual defense and vigilance. Psychological Review, 81(1), 1–25. https://doi.org/10.1037/h0035852

Eriksen, C. W. (1960). Discrimination and learning without awareness: A methodological survey and evaluation. Psychological Review, 67(5), 279–300. https://doi.org/10.1037/h0041622

Fahle, M. (1982). Binocular rivalry: Suppression depends on orientation and spatial frequency. Vision Research, 22(7), 787–800. https://doi.org/10.1016/0042-6989(82)90010-4

Fang, F., & He, S. (2005). Cortical responses to invisible objects in the human dorsal and ventral pathways. Nature Neuroscience, 8(10), 1380–1385. https://doi.org/10.1038/nn1537

Gelbard-Sagiv, H., Faivre, N., Mudrik, L., & Koch, C. (2016). Low-level awareness accompanies “unconscious” high-level processing during continuous flash suppression. Journal of Vision, 16(1), 3. https://doi.org/10.1167/16.1.3

Gonzalez, R. C., Woods, R. E., & Eddins, S. L. (2004). Digital Image processing using MATLAB. Pearson.

Han, S., & Alais, D. (2018). Strength of continuous flash suppression is optimal when target and masker modulation rates are matched. Journal of Vision, 18(3), 3. https://doi.org/10.1167/18.3.3

Han, S., Lunghi, C., & Alais, D. (2016). The temporal frequency tuning of continuous flash suppression reveals peak suppression at very low frequencies. Scientific Reports, 6(1), 35723. https://doi.org/10.1038/srep35723

Han, S., Blake, R., & Alais, D. (2018). Slow and steady, not fast and furious: Slow temporal modulation strengthens continuous flash suppression. Consciousness and Cognition, 58, 10–19. https://doi.org/10.1016/j.concog.2017.12.007

Han, S., Lukaszewski, R., & Alais, D. (2019). Continuous flash suppression operates in local spatial zones: Effects of mask size and contrast. Vision Research, 154, 105–114. https://doi.org/10.1016/j.visres.2018.11.006

Han, S., Alais, D., & Palmer, C. (2021). Dynamic face mask enhances continuous flash suppression. Cognition, 206, 104473. https://doi.org/10.1016/j.cognition.2020.104473

Hebart, M. (n.d.). Resources and Toolbox. Retrieved June 29, 2022, from http://martin-hebart.de/webpages/code/stimuli.html

Hedger, N., Adams, W. J., & Garner, M. (2015). Fearful faces have a sensory advantage in the competition for awareness. Journal of Experimental Psychology: Human Perception and Performance, 41(6), 1748–1757. https://doi.org/10.1037/xhp0000127

Hesselmann, G., & Malach, R. (2011). The link between fMRI-BOLD activation and perceptual awareness is stream-invariant in the human visual system. Cerebral Cortex, 21(12), 2829–2837. https://doi.org/10.1093/cercor/bhr085

Holender, D. (1986). Semantic activation without conscious identification in dichotic listening, parafoveal vision, and visual masking: A survey and appraisal. Behavioral and Brain Sciences, 9(1), 1–66. https://doi.org/10.1017/S0140525X00021269

Hong, S. W., & Blake, R. (2009). Interocular suppression differentially affects achromatic and chromatic mechanisms. Attention, Perception & Psychophysics, 71(2), 403–411. https://doi.org/10.3758/APP.71.2.403

Kihlstrom, J. F. (1987). The cognitive unconscious. Science, 237(4821), 1445–1452. https://doi.org/10.1126/science.3629249

Kim, C.-Y., & Blake, R. (2005). Psychophysical magic: Rendering the visible ‘invisible’. Trends in Cognitive Sciences, 9(8), 381–388. https://doi.org/10.1016/j.tics.2005.06.012

Kim, H.-W., Kim, C.-Y., & Blake, R. (2017). Monocular Perceptual Deprivation from Interocular Suppression Temporarily Imbalances Ocular Dominance. Current Biology, 27(6), 884–889. https://doi.org/10.1016/j.cub.2017.01.063

Korisky, U., Hirschhorn, R., & Mudrik, L. (2019). “Real-life” continuous flash suppression (CFS)-CFS with real-world objects using augmented reality goggles. Behavior Research Methods, 51(6), 2827–2839. https://doi.org/10.3758/s13428-018-1162-0

Kukkonen, H., Rovamo, J., Tiippana, K., & Näsänen, R. (1993). Michelson contrast, RMS contrast and energy of various spatial stimuli at threshold. Vision Research, 33(10), 1431–1436. https://doi.org/10.1016/0042-6989(93)90049-3

Lin, Z. C., & He, S. (2009). Seeing the invisible: The scope and limits of unconscious processing in binocular rivalry. Progress in Neurobiology, 87, 17. https://doi.org/10.1016/j.pneurobio.2008.09.002

Logothetis, N. K. (1998). Single units and conscious vision. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences, 353(1377), 1801–1818. https://doi.org/10.1098/rstb.1998.0333

Logothetis, N. K., Leopold, D. A., & Sheinberg, D. L. (1996). What is rivalling during binocular rivalry? Nature, 380(6575), 621–624. https://doi.org/10.1038/380621a0

Ludwig, K., Sterzer, P., Kathmann, N., Franz, V. H., & Hesselmann, G. (2013). Learning to detect but not to grasp suppressed visual stimuli. Neuropsychologia, 51(13), 2930–2938. https://doi.org/10.1016/j.neuropsychologia.2013.09.035

Merikle, P. M., & Daneman, M. (1998). Psychological investigations of unconscious perception. Journal of Consciousness Studies, 5(1), 5–18.

Moors, P. (2019). What’s Up with High-Level Processing During Continuous Flash Suppression? In G. Hesselmann (Ed.), Transitions between Consciousness and Unconsciousness (1st ed., pp. 39–70). Routledge. https://doi.org/10.4324/9780429469688-2

Moors, P., & Hesselmann, G. (2018). A critical reexamination of doing arithmetic nonconsciously. Psychonomic Bulletin & Review, 25(1), 472–481. https://doi.org/10.3758/s13423-017-1292-x

Moors, P., Wagemans, J., & de-Wit, L. (2014). Moving Stimuli Are Less Effectively Masked Using Traditional Continuous Flash Suppression (CFS) Compared to a Moving Mondrian Mask (MMM): A Test Case for Feature-Selective Suppression and Retinotopic Adaptation. PLoS ONE, 9(5), e98298. https://doi.org/10.1371/journal.pone.0098298

Moors, P., Wagemans, J., & de-Wit, L. (2016). Faces in commonly experienced configurations enter awareness faster due to their curvature relative to fixation. PeerJ, 4, e1565. https://doi.org/10.7717/peerj.1565

Moors, P., Hesselmann, G., Wagemans, J., & van Ee, R. (2017). Continuous Flash Suppression: Stimulus Fractionation rather than Integration. Trends in Cognitive Sciences, 21(10), 719–721. https://doi.org/10.1016/j.tics.2017.06.005

Muthukrishnan, R., & Radha, M. (2011). Edge detection techniques for image segmentation. International Journal of Computer Science and Information Technology, 3(6), 259–267. https://doi.org/10.5121/ijcsit.2011.3620

Nguyen, V. A., Freeman, A. W., & Alais, D. (2003). Increasing depth of binocular rivalry suppression along two visual pathways. Vision Research, 43(19), 2003–2008. https://doi.org/10.1016/S0042-6989(03)00314-6

Nuutinen, M., Mustonen, T., & Häkkinen, J. (2018). CFS MATLAB toolbox: An experiment builder for continuous flash suppression (CFS) task. Behavior Research Methods, 50(5), 1933–1942. https://doi.org/10.3758/s13428-017-0961-z

O’Shea, R. P., Sims, A. J. H., & Govan, D. G. (1997). The effect of spatial frequency and field size on the spread of exclusive visibility in binocular rivalry. Vision Research, 37(2), 175–183. https://doi.org/10.1016/S0042-6989(96)00113-7

Pournaghdali, A., & Schwartz, B. L. (2020). Continuous flash suppression: Known and unknowns. Psychonomic Bulletin & Review, 27(6), 1071–1103. https://doi.org/10.3758/s13423-020-01771-2

Rothkirch, M., & Hesselmann, G. (2017). What We Talk about When We Talk about Unconscious Processing - A Plea for Best Practices. Frontiers in Psychology, 8, 835. https://doi.org/10.3389/fpsyg.2017.00835

Rothkirch, M., & Hesselmann, G. (2018). No evidence for dorsal-stream-based priming under continuous flash suppression. Consciousness and Cognition, 64, 84–94. https://doi.org/10.1016/j.concog.2018.05.011

Rothkirch, M., Stein, T., Sekutowicz, M., & Sterzer, P. (2012). A direct oculomotor correlate of unconscious visual processing. Current Biology, 22(13), R514–R515. https://doi.org/10.1016/j.cub.2012.04.046

Rothkirch, M., Shanks, D. R., & Hesselmann, G. (2022). The Pervasive Problem of Post Hoc Data Selection in Studies on Unconscious Processing: A reply to Sklar, Goldstein and Hassin (2021). Experimental Psychology. https://doi.org/10.1027/1618-3169/a000541

Shanks, D. R. (2017). Regressive research: The pitfalls of post hoc data selection in the study of unconscious mental processes. Psychonomic Bulletin & Review, 24(3), 752–775. https://doi.org/10.3758/s13423-016-1170-y

Shapley, R., Kaplan, E., & Soodak, R. (1981). Spatial summation and contrast sensitivity of X and Y cells in the lateral geniculate nucleus of the macaque. Nature, 292(5823), 543–545. https://doi.org/10.1038/292543a0

Sklar, A. Y., Levy, N., Goldstein, A., Mandel, R., Maril, A., & Hassin, R. R. (2012). Reading and doing arithmetic nonconsciously. Proceedings of the National Academy of Sciences, 109(48), 19614–19619. https://doi.org/10.1073/pnas.1211645109

Skottun, B. C., & Skoyles, J. R. (2008). Temporal Frequency and the Magnocellular and Parvocellular Systems. Neuro-Ophthalmology, 32(2), 43–48. https://doi.org/10.1080/01658100701555121

Stein, T., Hebart, M. N., & Sterzer, P. (2011). Breaking Continuous Flash Suppression: A New Measure of Unconscious Processing during Interocular Suppression? Frontiers in Human Neuroscience, 5. https://doi.org/10.3389/fnhum.2011.00167

Sterzer, P., Stein, T., Ludwig, K., Rothkirch, M., & Hesselmann, G. (2014). Neural processing of visual information under interocular suppression: A critical review. Frontiers in Psychology, 5. https://doi.org/10.3389/fpsyg.2014.00453

Stuit, S. M., Cass, J., Paffen, C. L. E., & Alais, D. (2009). Orientation-tuned suppression in binocular rivalry reveals general and specific components of rivalry suppression. Journal of Vision, 9(11), 17–17. https://doi.org/10.1167/9.11.17

Tong, F., Meng, M., & Blake, R. (2006). Neural bases of binocular rivalry. Trends in Cognitive Sciences, 10(11), 502–511. https://doi.org/10.1016/j.tics.2006.09.003

Tsuchiya, N., & Koch, C. (2005). Continuous flash suppression reduces negative afterimages. Nature Neuroscience, 8(8), 1096–1101. https://doi.org/10.1038/nn1500

Tsuchiya, N., Koch, C., Gilroy, L. A., & Blake, R. (2006). Depth of interocular suppression associated with continuous flash suppression, flash suppression, and binocular rivalry. Journal of Vision, 6(10), 6. https://doi.org/10.1167/6.10.6

van Drongelen, W. (2018). Signal Processing for Neuroscientists. Elsevier Academic Press. https://ebookcentral.proquest.com/lib/qut/detail.action?docID=5357879

Walker, P. (1978). Binocular rivalry: Central or peripheral selective processes? Psychological Bulletin, 85(2), 376–389. https://doi.org/10.1037/0033-2909.85.2.376

Wilke, M., Logothetis, N. K., & Leopold, D. A. (2003). Generalized Flash Suppression of Salient Visual Targets. Neuron, 39(6), 1043–1052. https://doi.org/10.1016/j.neuron.2003.08.003

Wolfe, J. M. (1984). Reversing ocular dominance and suppression in a single flash. Vision Research, 24(5), 471–478. https://doi.org/10.1016/0042-6989(84)90044-0

Wolfe, J. M. (1986). Stereopsis and binocular rivalry. Psychological Review, 93(3), 269–282. https://doi.org/10.1037/0033-295X.93.3.269

Yang, E., & Blake, R. (2012). Deconstructing continuous flash suppression. Journal of Vision, 12(3), 8–8. https://doi.org/10.1167/12.3.8

Yang, E., Zald, D. H., & Blake, R. (2007). Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion, 7(4), 882–886. https://doi.org/10.1037/1528-3542.7.4.882

Yang, E., Blake, R., & McDonald, J. E. (2010). A New Interocular Suppression Technique for Measuring Sensory Eye Dominance. Investigative Ophthalmology & Visual Science, 51(1), 588. https://doi.org/10.1167/iovs.08-3076

Yuval-Greenberg, S., & Heeger, D. J. (2013). Continuous Flash Suppression Modulates Cortical Activity in Early Visual Cortex. Journal of Neuroscience, 33(23), 9635–9643. https://doi.org/10.1523/JNEUROSCI.4612-12.2013

Zadbood, A., Lee, S.-H., & Blake, R. (2011). Stimulus Fractionation by Interocular Suppression. Frontiers in Human Neuroscience, 5. https://doi.org/10.3389/fnhum.2011.00135

Zhan, M., Engelen, T., & de Gelder, B. (2019). Influence of continuous flash suppression mask frequency on stimulus visibility. Neuropsychologia, 128, 65–72. https://doi.org/10.1016/j.neuropsychologia.2018.05.012

Zhu, W., Drewes, J., & Melcher, D. (2016). Time for Awareness: The Influence of Temporal Properties of the Mask on Continuous Flash Suppression Effectiveness. PLOS ONE, 11(7), e0159206. https://doi.org/10.1371/journal.pone.0159206

Data availability

The study did not collect or analyze human subject data and was not pre-registered. Data sharing is therefore not applicable for experimental data regarding human subjects. However, the list of extracted citations and codes used to assess the popularity of CFS is available at the Open Science Framework (DOI: 10.17605/OSF.IO/9JQ8D). The open-source software developed in this study, CFS-crafter, is available at GitHub (https://github.com/guandongwang/cfs_crafter).

Funding

Author SH is funded by an International Fellowship granted by the Agency for Science, Technology and Research (A*STAR) of Singapore.

Author information

Contributions

SH conceived of the initial idea and developed it with DA, RB, and GW. GW programmed the CFS-crafter and developed the user interface in discussions with SH and DA. SH wrote an initial draft of the manuscript and all authors worked on the manuscript to shape it to its final form.

Ethics declarations

Conflicts of interest

The authors have no known conflict of interest to disclose.

Ethics approval

No approval of research ethics committees was required because the collection of human subject data and use of open-source human subject datasets were not required to develop the CFS-crafter.

Consent to participate and/or publish

Not applicable

Additional information

Open practice statement

This study was not preregistered and all materials are freely available online. Specifically, the CFS-crafter is accessible at GitHub (https://github.com/guandongwang/cfs_crafter), and the list of extracted citations and codes used to assess the popularity of CFS can be found at the Open Science Framework (DOI: https://doi.org/10.17605/OSF.IO/9JQ8D).

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix 1

Supplemental Glossary

1. Continuous flash suppression (CFS): a form of dichoptic visual presentation where one eye views a rapidly changing image sequence (‘mask’) and the other views a weaker, smaller, static image (‘target’), producing robust suppression of the target for extended periods of time.

2. Dorsal-stream preservation: based on the notion of parallel pathways, the observation that the neural response related to the suppressed target may remain active in the dorsal stream whereas activity in the ventral stream is weakened or abolished.

3. MATLAB©: a widely used programming platform designed by MathWorks© for computational tasks performed using matrix manipulations. In vision research, those manipulations include data analysis, signal processing (e.g., FFT) and construction of user interfaces for specific applications such as the CFS-crafter.

4. Screen resolution: the number of pixels available within a video screen’s width and height for displaying visual images. This property of a display monitor places an upper bound on the spatial detail that can be portrayed in a visual image. For vision research, it is most usefully expressed in terms of pixels per degree of visual angle.

5. Visual angle: the angular subtense of the image of an object formed on the retina, expressed in units of degrees, minutes and seconds. Visual angle is governed jointly by the size of the object and the distance from which it is viewed: for an object of fixed size, visual angle decreases as viewing distance increases.
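The relationship between object size, viewing distance, and visual angle, and the conversion of screen resolution to pixels per degree, can be illustrated with a short calculation. The following is a minimal sketch rather than code from the CFS-crafter; the function names and the example monitor dimensions are hypothetical, and the pixels-per-degree value is computed for the center of a flat screen viewed head-on.

```python
import math

def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an object of a given size
    viewed head-on from a given distance."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

def pixels_per_degree(screen_width_px, screen_width_cm, distance_cm):
    """Approximate number of pixels spanning 1 degree at the screen center."""
    # Physical extent on the screen that subtends 1 degree at this distance.
    cm_per_degree = 2 * distance_cm * math.tan(math.radians(0.5))
    return (screen_width_px / screen_width_cm) * cm_per_degree

# Hypothetical setup: 1920-pixel-wide monitor, 53 cm wide, viewed from 57.3 cm.
angle = visual_angle_deg(1.0, 57.3)          # about 1 degree for a 1 cm object
ppd = pixels_per_degree(1920, 53.0, 57.3)    # about 36 pixels per degree
```

Doubling the viewing distance roughly halves the visual angle of a small object and roughly doubles the number of pixels per degree.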

6. Refresh rate: the number of times per second (Hertz) that a visual image can be redrawn on a video display (typically around 100 Hz). This property of a display monitor places an upper bound on the temporal fidelity with which dynamic displays can be portrayed.

7. Visual noise: Noise images are widely used because they are salient visual stimuli but contain no identifiable form or object. Traditionally, white noise was used as it is easy to make but another variety known as pink noise has very useful properties that make it the preferred type of noise.

8. White noise: Setting every pixel in an image to a random luminance value creates white noise (binary noise is sometimes used, with each pixel randomly assigned black or white). Such images are broadband in spatial frequency: in principle, white noise contains all spatial frequencies at equal amplitude (true if the image is very large). Perceptually, however, white noise is high-frequency dominant because the visual system’s transfer function is characterized by strong ‘whitening’ (boosting of high frequencies). Inspecting the stimulus confirms this: there are no obvious low frequencies in white noise, only high-frequency texture.

9. Pink noise: By converting a white noise image to the frequency domain using an FFT, its amplitude spectrum can be manipulated so that it declines with increasing frequency (amplitude proportional to 1/f). High frequencies are thus attenuated to compensate for the visual system’s whitening effect. The result is that pink noise is perceived as white: all frequencies from low to high appear equally present. This is evident at a glance: pink noise contains fine, medium and coarse texture (see Fig. 5C). The key advantage of pink noise is that its power spectrum corresponds closely with the power spectra found in images of natural or built environments and images of objects and faces, etc. Because such images typically have an approximately pink spectrum, pink noise makes an excellent masking pattern by providing a matching distribution of energy over frequency. In CFS applications, pink noise is important for achieving effective suppression as white-noise masks may not completely suppress low-frequency target components.
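The construction of pink noise from white noise described above can be sketched in a few lines. This is an illustrative example using NumPy, not the CFS-crafter's own MATLAB implementation; the function name `make_pink_noise`, its parameters, and the final rescaling to the range 0 to 1 are assumptions made for the illustration.

```python
import numpy as np

def make_pink_noise(size=256, exponent=1.0, seed=0):
    """Shape a white-noise image so its amplitude spectrum falls as 1/f**exponent."""
    rng = np.random.default_rng(seed)
    white = rng.standard_normal((size, size))     # white noise: flat spectrum

    spectrum = np.fft.fft2(white)                 # to the frequency domain

    # Radial spatial frequency of every FFT coefficient (cycles per pixel).
    fy = np.fft.fftfreq(size)[:, None]
    fx = np.fft.fftfreq(size)[None, :]
    radius = np.hypot(fx, fy)
    radius[0, 0] = 1.0                            # avoid dividing by zero at DC

    shaped = spectrum / radius ** exponent        # attenuate high frequencies
    shaped[0, 0] = 0.0                            # zero-mean image

    pink = np.real(np.fft.ifft2(shaped))          # back to the image domain
    # Rescale to 0..1 for display (an assumption; other contrast
    # normalizations are equally valid).
    return (pink - pink.min()) / (pink.max() - pink.min())

pink = make_pink_noise(size=128)
```

Because the 1/f gain is radially symmetric, the filtered spectrum keeps the conjugate symmetry of a real image, so the inverse transform is real apart from rounding error.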

10. Temporal frequency: For a sine wave, the number of luminance modulations per second, measured in Hertz. For sequences of natural images or noise patterns such as a CFS masker, temporal frequency spectra are broadband and do not relate simply to the update rate. A Fourier analysis can be used to reveal the temporal spectrum of CFS maskers, which typically contain most energy at temporal frequencies much lower than the masker update rate (Han et al., 2016). The temporal frequency of a CFS masker also depends on the number of grey/color levels used (see Fig. 3).
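To illustrate why the temporal spectrum of a masker does not relate simply to its update rate, the sketch below builds a hypothetical random-noise masker updated at 10 Hz on a 60-Hz display and computes its temporal power spectrum pixel by pixel. The function name and all parameter values are assumptions chosen for illustration, and the random-noise frames stand in for whatever mask patterns are actually used.

```python
import numpy as np

def masker_temporal_spectrum(update_hz=10, refresh_hz=60, duration_s=2,
                             size=32, seed=0):
    """Temporal power spectrum of a random-noise masker, averaged over pixels."""
    rng = np.random.default_rng(seed)
    hold = refresh_hz // update_hz                 # frames each pattern is shown
    n_patterns = duration_s * update_hz
    patterns = rng.random((n_patterns, size, size))

    # Repeat each pattern for `hold` display frames: shape (n_frames, size, size).
    frames = np.repeat(patterns, hold, axis=0)
    frames = frames - frames.mean(axis=0)          # remove each pixel's mean (DC)

    # FFT along the time axis, one spectrum per pixel, then average.
    power = np.abs(np.fft.rfft(frames, axis=0)) ** 2
    freqs = np.fft.rfftfreq(frames.shape[0], d=1.0 / refresh_hz)
    return freqs, power.mean(axis=(1, 2))

freqs, power = masker_temporal_spectrum()
# Share of temporal power at frequencies below the 10-Hz update rate.
fraction_below_update = power[freqs < 10].sum() / power.sum()
```

For this kind of sample-and-hold sequence, most of the power falls below the update rate, with the spectrum peaking at the lowest frequencies.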

11. Spatial frequency: For a sine wave, the number of luminance modulations per unit space, measured in cycles per degree. For natural images or noise patterns, spatial frequency spectra are broadband and must be quantified with a Fourier analysis.

12. Fourier analysis/fast Fourier transform (FFT): Fourier analysis entails the decomposition of a complex signal into its sinusoidal components, most conveniently computed using the FFT algorithm. Note that the FFT returns complex coefficients from which two spectra are derived: an amplitude spectrum and a phase spectrum (see below). In vision research, the signal for an FFT is usually an image varying in luminance over space and the FFT reveals the amplitude of the signal’s components, as well as their orientation and phase. For more detail, see Georgeson (1979). Fourier analysis can also be computed in three dimensions (x, y, t) to reveal the spatiotemporal spectra of image sequences such as CFS maskers.

13. Spectral analysis: a method to examine how the components of an image or sequence of images vary over spatial or temporal frequency by visualizing the amplitude spectrum of a Fourier analysis.

14. Amplitude spectrum: representation of the magnitude (contrast) of the sinusoidal components derived using Fourier analysis. As visual images are two dimensional, the amplitude spectrum is also two-dimensional and thus it also indicates the orientation of the image’s components. The dominant orientations in an image can usually be seen when inspecting a 2-D plot of the amplitude spectrum.

15. Phase spectrum: The phase spectrum of an image shows the spatial position (relative to its wavelength) of every component in the amplitude spectrum. Unlike the amplitude spectrum, it is difficult to imagine how an image would appear based on its phase spectrum. Phase, however, is the critical determinant of an image’s spatial structure. For example, an edge is formed at the point where a series of spatial frequencies are present with the same (zero) phase.

16. Phase scrambling: Because image structure arises from particular phase relationships among sinusoidal components (see Phase Spectrum), adding random offsets to the values in a phase spectrum will destroy the appearance of structure in the image (see Fig. 6A). This manipulation is useful as it removes any identifiable objects and structure in the image while maintaining the spatial frequency content and aggregate contrast (because the amplitude spectrum is separate and independent of the phase spectrum). Phase scrambling is maximal for random offsets in the range of 0–2π, or partial scrambling can be achieved by reducing the range (e.g., 0–π/2).
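Phase scrambling as described above can be sketched as follows. This is an illustrative NumPy example and not the CFS-crafter's implementation; the function name `phase_scramble` and its parameters are hypothetical. One detail is an assumption of this sketch: the random offsets are taken from the phase spectrum of a white-noise image, which makes them antisymmetric so that the scrambled image stays real-valued, and they are centered on zero (spanning plus or minus half of `max_offset`) rather than running from 0 upward.

```python
import numpy as np

def phase_scramble(image, max_offset=2 * np.pi, seed=0):
    """Add random phase offsets while leaving the amplitude spectrum intact.

    max_offset = 2*pi gives full scrambling; smaller values scramble partially.
    """
    rng = np.random.default_rng(seed)
    spectrum = np.fft.fft2(image)
    amplitude = np.abs(spectrum)      # amplitude spectrum (kept unchanged)
    phase = np.angle(spectrum)        # phase spectrum (to be perturbed)

    # Phase of a real white-noise image: random values in [-pi, pi] with the
    # antisymmetry needed for the output image to remain real.
    offsets = np.angle(np.fft.fft2(rng.standard_normal(image.shape)))
    offsets *= max_offset / (2 * np.pi)   # shrink the range for partial scrambling
    offsets[0, 0] = 0.0                   # leave the mean luminance (DC) alone

    scrambled = amplitude * np.exp(1j * (phase + offsets))
    return np.real(np.fft.ifft2(scrambled))

# Hypothetical test image: a bright square on a dark background.
image = np.zeros((64, 64))
image[24:40, 24:40] = 1.0
full = phase_scramble(image)                         # structure destroyed
partial = phase_scramble(image, max_offset=np.pi / 2)  # structure degraded
```

With full scrambling the output has the same amplitude spectrum and mean luminance as the input but no recognizable structure; with partial scrambling a small residual imaginary component is discarded, so the amplitude spectrum is preserved only approximately at a handful of frequencies.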

17. Spatial filtering: an image processing technique whereby a subset of spatial frequency components (or orientation components: see Orientation Filtering) in an image can be attenuated or removed entirely. Typically, spatial filtering takes one of three forms:

a. Low-pass: Medium and high frequencies are attenuated; image appears blurred.

b. High-pass: Medium and low frequencies are attenuated; image appears sharpened.

c. Band-pass: A narrow frequency range is passed and the frequencies outside the range are attenuated.

In all filter types, parameters can be set to control how steeply the transition occurs from passed to attenuated frequencies, and where the transition begins on the frequency axis.
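The three filter types, together with the two parameters that set where the transition begins and how steep it is, can be illustrated with a frequency-domain filter. The sketch below uses a Butterworth gain profile as one possible choice; this profile, the function names, and the parameter names `cutoff` and `order` are assumptions of the example and are not taken from the CFS-crafter. Cutoffs are given in cycles per pixel (0 to 0.5); multiplying by the display's pixels per degree converts them to cycles per degree.

```python
import numpy as np

def butterworth_lowpass(shape, cutoff, order=2):
    """Low-pass gain for every FFT coefficient of an image of this shape.

    cutoff: frequency (cycles/pixel) at which the gain has fallen to 0.5.
    order:  higher values give a steeper transition.
    """
    fy = np.fft.fftfreq(shape[0])[:, None]
    fx = np.fft.fftfreq(shape[1])[None, :]
    radius = np.hypot(fx, fy)                 # radial spatial frequency
    return 1.0 / (1.0 + (radius / cutoff) ** (2 * order))

def spatial_filter(image, kind, cutoff, order=2):
    """Filter an image in the frequency domain.

    kind: "low", "high", or "band"; for "band", cutoff is (low_cut, high_cut).
    """
    if kind == "low":
        gain = butterworth_lowpass(image.shape, cutoff, order)
    elif kind == "high":
        gain = 1.0 - butterworth_lowpass(image.shape, cutoff, order)
    elif kind == "band":
        low_cut, high_cut = cutoff
        gain = ((1.0 - butterworth_lowpass(image.shape, low_cut, order))
                * butterworth_lowpass(image.shape, high_cut, order))
    else:
        raise ValueError("kind must be 'low', 'high', or 'band'")
    return np.real(np.fft.ifft2(np.fft.fft2(image) * gain))

# Hypothetical input: a white-noise image.
noise = np.random.default_rng(0).random((128, 128))
blurred = spatial_filter(noise, "low", cutoff=0.05)
edges = spatial_filter(noise, "high", cutoff=0.05)
band = spatial_filter(noise, "band", cutoff=(0.05, 0.15), order=4)
```

In this design the high-pass gain is defined as one minus the low-pass gain, so the low-pass and high-pass outputs at the same cutoff sum back to the original image, and the high-pass and band-pass outputs have zero mean.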


-

18.