Abstract

Risky-choice and attribute framing effects are well-known cognitive biases, where choices are influenced by the way information is presented. To assess susceptibility to these framing types, the Resistance to Framing scale is often used, although its performance has rarely been extensively tested. In an online survey among university students from Bulgaria (N = 245) and North America (N = 261), we planned to examine the scale’s psychometric properties, structural validity, and measurement invariance. However, some of these examinations were not possible because the scale displayed low and mostly non-significant inter-item correlations as well as low item-total correlations. Subsequent exploratory item response theory analyses indicated that the scale’s reliability was low, especially for high levels of resistance to framing. This suggests problems with the scale at a basic level of conceptualization, namely that the items may not represent the same content domain. Overall, the scale in its current version is of limited use, at least in university student samples, due to the identified problems. We discuss potential remedies for these problems and provide open code and data (https://osf.io/j5n6f) to facilitate testing the scale in other samples (e.g., general population, different languages and countries) and thereby obtain a comprehensive picture of its performance.

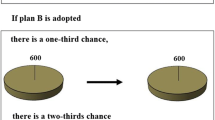

We face many choices every day, each of which may be guided by subtle but powerful forces. One such force lies in the way information is presented. Consider the following thought experiment: Suppose you have invested $6,000 in stocks of a green energy company. As the economy is currently experiencing a downturn, you have two alternatives: one will save $2,000 of your investment, while the other will save everything with a 33% chance and nothing with a 67% chance. Which of these strategies would you prefer?

Now, consider that you instead learned that one strategy will make you lose $4,000 of your investment, while the other one makes you lose nothing with a 33% chance and everything with a 67% chance (adapted from Bruine de Bruin et al., 2007). How would you choose based on this information?

Although the two descriptions are objectively equivalent, individuals typically choose the safe option (i.e., saving $2,000) when the options are framed as gains, and the risky option (i.e., losing nothing with a 33% chance and everything with a 67% chance) when they are framed as losses. This well-established phenomenon is known as the risky-choice framing effect, whereby systematically different responses are elicited by the way the risk (gains vs. losses) is presented (Levin et al., 1998; Tversky & Kahneman, 1981). A related phenomenon occurs when characteristics of objects (e.g., a treatment) are described in a positive (e.g., 50% success rate) or negative (e.g., 50% failure rate) way, which is known as the attribute framing effect (Levin et al., 1998; Levin & Gaeth, 1988).
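The equivalence of the gain and loss descriptions in the investment example can be checked with simple arithmetic. The sketch below is only an illustration: it assumes that the stated 33% and 67% are rounded values of 1/3 and 2/3, and all variable names are our own.

```python
from fractions import Fraction

# Assumption: the 33% / 67% in the scenario stand for exactly 1/3 and 2/3.
investment = 6000
p_save_all = Fraction(1, 3)
p_lose_all = Fraction(2, 3)

# Gain frame: amounts saved.
sure_saved = 2000
risky_saved_expected = p_save_all * investment

# Loss frame: amounts lost.
sure_lost = 4000
risky_lost_expected = p_lose_all * investment

# Expressed as money kept, both frames describe the same pair of options.
sure_kept_in_loss_frame = investment - sure_lost
risky_kept_in_loss_frame = investment - risky_lost_expected

print(sure_saved == sure_kept_in_loss_frame)             # True
print(risky_saved_expected == risky_kept_in_loss_frame)  # True
```

Under this assumption, the sure and the risky option also share the same expected outcome ($2,000 kept), so the two frames differ only in wording.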

Resistance to risky-choice and attribute framing

The framing effect and its underlying mechanisms have been studied in various experimental contexts (e.g., Bloomfield, 2006; Fagley & Miller, 1997; Finn, 2008; Levin & Gaeth, 1988; Whitney et al., 2008) since it was first demonstrated (Tversky & Kahneman, 1981). However, competing claims about the framing effect and its mechanisms (e.g., Chick et al., 2016; Mandel & Kapler, 2018) are often based on a single task (e.g., variations of the Disease problem, Tversky & Kahneman, 1981; see Piñon & Gambara, 2005), limiting the reliability and validity of the findings. Different framing tasks in different domains (e.g., money or lives) are used to support these claims, which makes it difficult to compare the evidence for or against the competing viewpoints because the framing effect is sensitive to task content (Fagley & Miller, 1997). In addition, framing tasks are typically designed for between-participants administration. This does not allow for calculating individual difference scores and thus for investigating individual differences in framing effects (but see Mandel & Kapler, 2018).

The Resistance to Framing scale, a subscale of the Adult Decision-Making Competence Index (A-DMC; Bruine de Bruin et al., 2007), addresses the above problems by using 14 positively and negatively framed tasks for both types of framing which vary by topic (e.g., money, human and animal lives, health). The scale thus potentially provides a unified (standard) measure of Resistance to Framing that is relatively content-independent. The within-participants administration of the scale allows for investigating individual differences by calculating individual scores. This within-participants administration is often used to identify cognitive predictors of Resistance to Framing, such as numeracy (Del Missier et al., 2012), cognitive ability (e.g., Raven’s standard progressive matrices; Bruine de Bruin et al., 2007), working memory (Hoffmann et al., 2020), cognitive and executive functions (e.g., cognitive flexibility and inhibitory control; Mäntylä et al., 2012; Piryaei et al., 2017), as well as cognitive reflection (Allred, 2018).

Although the scale is widely used, its performance has rarely been extensively tested. Previous research has exclusively tested the scale’s performance in terms of internal consistency and predictive validity in a US-American sample (Bruine de Bruin et al., 2007). Besides this research, several studies have validated the A-DMC as a whole and reported the internal consistency of the Resistance to Framing scale, which varied from Cronbach’s α = .30 to .72 (Bavolar, 2013; Del Missier et al., 2012; Feng et al., 2013, as cited in Liang & Zou, 2018; Parker & Fischhoff, 2005). From this previous work, researchers have concluded that the Resistance to Framing scale as part of the A-DMC is a valid and reliable instrument without extensively investigating its performance.

The factor structure underlying the Resistance to Framing scale remains subject to debate. When using the scale, researchers classically average over the absolute difference of the ratings in the positive and negative frame in each scenario (Bruine de Bruin et al., 2007). They thereby implicitly assume that the scale is unidimensional. This assumption is supported by evidence showing that risky-choice framing can be reduced to attribute framing, as systematically different choices in risky-choice framing scenarios result from framing effects of the riskless rather than the risky option (Kühberger & Gradl, 2013). Contrasting with this view, the original typology introduced by Levin et al. (1998) posits that risky-choice and attribute framing are distinct types based on what is framed (options with varying levels of risk vs. characteristics of an object), what is affected (risk preference vs. object evaluation), and how the effect is assessed (comparing choices of options vs. attractiveness of an object)—all of which are expected to influence the effect. Our reanalyses of previous data (Bavolar, 2013; Bruine de Bruin et al., 2007) before conducting this study supported two distinct types (Supplement 1).

The factor structure underlying the scale has not yet been investigated, although such an investigation is important because the standard practice of averaging over all absolute differences is only warranted as long as a one-factor model is empirically supported.

The present study

The present study extensively tested the performance of the Resistance to Framing scale in samples from Bulgaria and North America. This additional assessment contributes to existing studies in three key ways: First, it goes beyond the internal consistency of the overall scale by using exploratory item response theory models. These models allow us to examine each item’s performance and the scale’s measurement precision across different levels of susceptibility to framing. Second, the present study examines the structural and cross-country validity of the scale using confirmatory factor analyses, as recommended in guidelines for evaluating measurement instruments (Chan, 2014). Last, the current results suggest that the scale performs poorly in university student samples and should not be used in its current version—a conclusion that challenges previous assumptions that the scale is valid and reliable.

Method

To assess the performance of the Resistance to Framing scale, we conducted an online survey among Bulgarian and North American university students. We preregistered our research questions, sampling plan, study design, and data analyses on the Open Science Framework (https://osf.io/uj5rz, September 11, 2020) before data collection. We report how we determined our sample size, all data exclusions (if any), all manipulations, and all measures in the study (as suggested by Simmons et al., 2012). Deviations from the preregistration are reported in Supplement 2.

Sampling plan

Our preregistered target sample size was 250 complete responses per site after excluding responses categorized as duplicate, inattentive, or too fast (see Data exclusions). The data were collected as part of a larger research project to evaluate the dual-process account in Bulgaria and North America (Rachev et al., 2022). The sample size was determined based on the fit and misfit of the confirmatory factor analysis (CFA) models and expected effect sizes used within this larger project. A priori power analyses for the present study with the R package semTools (Version 0.5-3; Jorgensen et al., 2020) indicated that 250 participants per site allowed us to detect fit and misfit of a one-factor (Resistance to Framing) and a two-factor (risky-choice and attribute framing) model with more than 95% power (Supplement 1). [Footnote 1]

Participants

Participants were required to be at least 18 years old, citizens and residents of either Bulgaria or North America, native Bulgarian or English speakers, and university students with a non-psychology major. Characteristics of both samples are shown in Table 1.

Materials

The Resistance to Framing scale (Bruine de Bruin et al., 2007) consists of seven risky-choice and seven attribute framing items, with one positively and one negatively framed version per item. An example item of risky-choice framing is deciding between two investment strategies, as in the introduction of this paper. Participants indicate the strength of their preference for either strategy on a six-point scale ranging from 1 (Definitely would choose A) to 6 (Definitely would choose B). Resistance to Framing is then assessed by the absolute difference between the ratings for the positive and negative frame of every item (Bruine de Bruin et al., 2007), whereby lower scores represent lower susceptibility to framing. [Footnote 2] Compared to the original scale, we slightly modified the phrasing of the instruction and two scenarios to make their meaning clearer.
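As a minimal sketch of this scoring rule, the following Python snippet averages the absolute differences between paired ratings for one participant. The function name and the ratings are hypothetical and serve only as an illustration; the analysis code actually used in this study is the R code on the OSF.

```python
def susceptibility_score(positive, negative):
    """Mean absolute difference between paired frame ratings (1-6 scale).

    Ranges from 0 (identical ratings in both frames) to 5 (maximal shift).
    """
    if len(positive) != len(negative):
        raise ValueError("Each item needs one rating per frame.")
    differences = [abs(p - n) for p, n in zip(positive, negative)]
    return sum(differences) / len(differences)


# Hypothetical ratings of one participant on the 14 item pairs.
positive_frame = [2, 3, 1, 4, 5, 2, 3, 6, 5, 4, 3, 2, 5, 4]
negative_frame = [3, 3, 2, 4, 3, 2, 4, 6, 4, 4, 3, 1, 5, 5]

score = susceptibility_score(positive_frame, negative_frame)
print(round(score, 2))  # 0.57

# Reversed variant in which higher values mean higher resistance
# (subtracting from the potential maximum of 5).
resistance = 5 - score
```

With this coding, a participant who gives the same rating in both frames of every item obtains a susceptibility score of 0.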

The Bulgarian version of the Resistance to Framing scale was created from the original English version by five (risky-choice framing) and four (attribute framing) independent translators using forward and backward translation. In particular, three (risky-choice framing) and two (attribute framing) experienced translators translated the original items to Bulgarian and discussed discrepancies to arrive at a joint forward translation. After the same procedure was followed for the back translation with two additional translators, all translators and an additional adjudicator (the third author) compared the original version to the back translation and resolved discrepancies. Pilot-testing before data collection did not reveal any concerns about the items or response format.

Procedure

Participants in both sites were recruited from November 2020 to January 2021 via social media, emails, and universities. They were informed about the confidentiality and anonymity of their responses and provided written informed consent before starting the online survey. All individuals participated voluntarily and could terminate their participation at any time. The study was approved by both local research ethics committees.

Participants completed the 30-min Qualtrics survey in their native language. They first created a withdrawal code and provided basic demographic information (i.e., age, gender, education, field and year of study, country of residence, citizenship, and native languages). Participants were then presented with either the positive or negative version of the risky-choice followed by the attribute framing block before being presented with the remaining versions. The framing problems within a block were presented in randomized order and forced-response format. Between the positive and the negative version, participants took approximately 10 min to complete the Bullshit Receptivity scale (Pennycook et al., 2015), the Actively Open-Minded Thinking scale (Baron & High II, 2019), and an attention check item (“Please select the option ‘neutral’ and proceed to the following question.”), all of which were part of the larger project. The survey ended with a debriefing and redirection to a separate survey where participants’ email addresses were recorded to enter a random draw of 165 (160 × $3 and 5 × $20) gift cards for Bulgarian participants and 90 (85 × $5 and 5 × $30) gift cards for North American participants.

Statistical analysis

All analyses were conducted using R (Version 4.0.2; R Core Team, 2020) with the statistical significance level set to α = .05.

Data exclusions

Participants were included in the analysis if they answered all items, provided informed consent, and completed the survey only once (i.e., unique combination of IP address, withdrawal code, and demographics including age, gender, major, and year of studies; Koo & Skinner, 2005). Participants were further included if they passed the attention check and did not complete the survey in less than 10 min. [Footnote 3] In the Bulgarian and North American samples, 30 and 29 multivariate outliers, respectively, were excluded based on the generalized Cook’s distance using the R package influence.SEM (Version 2.2; Altoé & Pastore, 2018). Analyses including outliers are in Supplement 3.

Main analysis

We first inspected the descriptive statistics of each item, including mean, standard deviation, range, skewness, kurtosis, and inter-item as well as item-total correlations. To evaluate the factor structure, we fit a one-factor and a two-factor CFA model in each site using the R package lavaan (Version 0.6-6; Rosseel, 2012). [Footnote 4] The fit of each model was assessed using several fit indices (Table 5) and their preregistered standard cut-off values. [Footnote 5] However, we refrained from comparing the models and from testing measurement invariance between sites, due to the low inter-item correlations (see Results). We used McDonald’s ω to assess the scale’s reliability as well as exploratory, non-preregistered item response theory (IRT) to gain further insight into the scale’s measurement precision and the performance of each item. The IRT models were fit with the mirt package in R (Version 1.33.2; Chalmers, 2012) and full-information maximum likelihood estimation to account for the relatively small sample sizes (Forero & Maydeu-Olivares, 2009). We fit a graded response (Samejima, 1969) and a generalized partial credit model (Muraki, 1992), two commonly used polytomous models that apply to the data at hand. These models were compared based on the AIC and BIC as well as their sample-size adjusted counterparts (AICc and SABIC), and their fit was assessed based on multiple indices (Table 6).
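For readers less familiar with the four information criteria, the sketch below computes them from a model's log-likelihood, number of free parameters, and sample size, using their common textbook definitions. Whether a given software package uses exactly these forms is an assumption here, and the log-likelihood and parameter count in the example are made up for illustration.

```python
import math


def information_criteria(log_lik, n_params, n_obs):
    """AIC, AICc, BIC, and SABIC in their common textbook forms."""
    deviance = -2.0 * log_lik
    aic = deviance + 2 * n_params
    return {
        "AIC": aic,
        # Small-sample correction of the AIC.
        "AICc": aic + (2 * n_params * (n_params + 1)) / (n_obs - n_params - 1),
        "BIC": deviance + n_params * math.log(n_obs),
        # Sample-size adjusted BIC: n is replaced by (n + 2) / 24.
        "SABIC": deviance + n_params * math.log((n_obs + 2) / 24),
    }


# Hypothetical values: log-likelihood of -2500 with 84 free parameters, N = 245.
criteria = information_criteria(log_lik=-2500.0, n_params=84, n_obs=245)
for name, value in criteria.items():
    print(name, round(value, 2))
```

For all four criteria, lower values indicate the preferred model when two models are fit to the same data.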

Results

Descriptive statistics

Table 2 shows the descriptive statistics for both samples. Susceptibility to framing was generally low, especially for the attribute framing items. This was also supported by the skewness, which indicated that all items were highly positively skewed (skewness > 1). Most inter-item correlations were small and non-significant (Tables 3 and 4). Item-total correlations (i.e., correlations between each item and the total score of all items excluding this item) ranged from .09 to .23 in Bulgaria and .04 to .32 in North America, and thus fell almost entirely below the recommended .30 (Boateng et al., 2018).
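The item-total correlations described above (each item correlated with the sum of the remaining items) can be sketched as follows. The snippet uses only the Python standard library; the helper names and the toy data are hypothetical and much smaller than the actual data set.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equally long lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def corrected_item_total(scores):
    """Correlate each item with the total of all other items.

    scores: one row per participant, one column per item.
    """
    n_items = len(scores[0])
    correlations = []
    for j in range(n_items):
        item = [row[j] for row in scores]
        rest = [sum(row) - row[j] for row in scores]  # total excluding item j
        correlations.append(pearson(item, rest))
    return correlations


# Hypothetical absolute-difference scores: 5 participants x 3 items.
toy_scores = [[0, 1, 0], [1, 1, 2], [2, 3, 1], [0, 0, 1], [3, 2, 2]]
item_total = corrected_item_total(toy_scores)

# Items falling below the .30 guideline mentioned in the text.
flagged = [j for j, r in enumerate(item_total) if r < 0.30]
```

Excluding the item from the total avoids inflating the correlation through the item's overlap with itself.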

The network plots in Fig. 1 additionally visualize that few inter-item correlations are larger than r = .10. Moreover, we would expect either that all items of the scale are substantially positively correlated (which would point toward a one-factor model) or that the items form two clusters, risky-choice and attribute framing (which would point toward a two-factor model). However, neither pattern seems to be supported.

Fig. 1 Network plots of the bivariate correlations between the items of the Resistance to Framing scale for (A) Bulgaria and (B) North America. Only bivariate correlations larger than r = .10 are displayed. Red edges indicate a negative correlation between two items, whereas green edges indicate a positive correlation.

Structural validity

Inspecting the bivariate correlations already revealed that there may be problems with the structure of the scale. We nevertheless proceeded with the preregistered plan and fit one- and two-factor CFA models. The models’ structure was determined based on theory (see introduction) and the classification of the items into risky-choice and attribute framing proposed in the original article (Bruine de Bruin et al., 2007).

The hypothesized one- and two-factor models fit the Bulgarian data acceptably, based on the robust χ²-test, the robust RMSEA, and robust incremental fit indices (Table 5). However, in the one-factor model, two of 14 items (i.e., risky-choice: income tax and attribute framing: cheating) did not load significantly onto the factor. This problem persisted in the two-factor model where the attribute framing item cheating still did not load significantly onto its corresponding factor. More worryingly, all standardized factor loadings (Table 2) were low and below the recommended cut-off of .45 (Tabachnick et al., 2007).

The hypothesized one-factor model did not fit the North American data acceptably (Table 5), and five of the 14 items (i.e., risky choice: cancer, attribute framing: condom, beef, exams, and cancer) did not load significantly onto the factor. While the two-factor model fit well (Table 5), four of the 14 items (i.e., risky-choice framing: cancer, attribute framing: condom, budget, and cancer) still did not load significantly onto their corresponding factor. Once again, the factor loadings were small, and only three out of 14 met the recommended criterion. As such small factor loadings indicate problems at a basic level of conceptualization and may explain the very good model fit (reliability paradox; McNeish & Wolf, 2021), we refrained from comparing the models in each site and from testing measurement invariance between sites.

Reliability

The Bulgarian one-factor model indicated poor reliability (McDonald’s ω = .46), similar to the two-factor model with risky-choice (McDonald’s ω = .31) and attribute (McDonald’s ω = .35) framing. This corresponds to the scale’s reliability in the North American sample (McDonald’s ω = .51) and the two separate scales, risky choice (McDonald’s ω = .50) and attribute framing (McDonald’s ω = .34).
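To illustrate how low factor loadings translate into low reliability, the following sketch computes McDonald’s ω from standardized loadings. It assumes a one-factor model with standardized items and uncorrelated residuals, so that each residual variance equals one minus the squared loading; the loadings are hypothetical and are not the estimates reported in Table 2.

```python
def mcdonald_omega(loadings):
    """McDonald's omega from standardized loadings of a one-factor model.

    Assumes standardized items with uncorrelated residuals, so the
    residual variance of each item is 1 - loading**2.
    """
    common = sum(loadings) ** 2
    unique = sum(1 - loading ** 2 for loading in loadings)
    return common / (common + unique)


# Hypothetical case: 14 items that all load .25 on a single factor.
omega = mcdonald_omega([0.25] * 14)
print(round(omega, 2))  # 0.48
```

Under these assumptions, even 14 items yield poor reliability when the loadings are uniformly low.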

Non-preregistered IRT models

Assumption checks

To get more detailed insights into the performance of each item, we fit an exploratory polytomous graded response and generalized partial credit model. Polytomous IRT models were used, as the Resistance to Framing scale may not be unidimensional (see CFA results). In the Bulgarian sample, both assumptions for fitting IRT models were met: local independence (i.e., items are uncorrelated when conditioning on the latent traits), as evidenced by relatively low discrimination parameters and residual covariances, and monotonicity (i.e., increased probability of endorsing an item constantly goes hand in hand with an increase in the latent trait; Nguyen et al., 2014). In the North American sample, the assumption of monotonicity was violated, with serious violations for the risky-choice item Dropout (crit = 88), unclear violations for five items (40 ≤ crit ≤ 80; attribute framing: condom, beef, cheating, exams, and cancer), and no violations for the other eight items (Molenaar & Sijtsma, 2000, as cited in Crișan et al., 2019). The graphical analyses corroborated these statistical results. As monotonicity was likely violated, no IRT models were fit to the North American data.

Model comparison

Comparing both models showed that the graded response model fit the Bulgarian data better than the generalized partial credit model. As displayed in Table 6, AIC and BIC as well as their sample-size adjusted analogs were lower for the graded response model. The other fit statistics indicated that both models fit the data well, as shown by a non-significant M₂* value, a low RMSEA and SRMSR, as well as a high TLI (except for the graded response model) and CFI. The S−χ² statistic indicated no misfit for any of the items of the graded response model but misfit for the risky-choice dropout item (S−χ²(40) = 59.741, p = .023) when using the generalized partial credit model. We thus selected the graded response model to examine the reliability, test information, and standard errors of the scale.

In line with the reliability based on classical test theory, the IRT reliability was poor for risky-choice (0.52) and attribute framing (0.52). The test information in Fig. 2 shows how accurately the two subscales measure the latent traits, susceptibility to risky-choice (θ1) and attribute (θ2) framing. More test information thereby means higher measurement precision and lower standard errors of the underlying trait estimate (Nguyen et al., 2014). The test information was high for high levels of susceptibility to risky-choice and attribute framing, whereas it was very low for low levels (panel A). This means that high levels of susceptibility to framing can be measured with low standard errors and thus high precision, whereas low levels cannot be measured accurately (panel B). The test information of single items showed similar patterns: high test information and low standard errors for high levels of susceptibility to risky-choice and attribute framing, while for some items (e.g., risky-choice framing: disease), the test information decreased, and the standard error increased for extremely high levels of the underlying trait. The individual analysis of the test information function curves showed that among all items, the test information was highest for the two risky-choice framing items Disease (panel C) and Soldiers as well as the attribute framing item Parking. The test information and discriminating power (as indicated by a flat slope) were the lowest for the attribute framing item Cheating (panel D).

Fig. 2 Test information function curve and test standard error for risky-choice (θ1) and attribute (θ2) framing (Bulgaria). Panel A displays the test information function curve for all items combined. Panel B displays the corresponding test standard error. Panels C and D display the test information function curves for the risky-choice framing item Disease and the attribute framing item Cheating, respectively.
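The link between test information and standard errors can be made concrete with a simplified sketch. The code below computes the information of graded response items and the resulting standard error, 1/√I(θ), for a single latent trait. It is not the two-dimensional model reported above: it assumes a unidimensional model in the a(θ − b) parameterization, and the discrimination and threshold values are hypothetical.

```python
import math


def logistic(z):
    return 1.0 / (1.0 + math.exp(-z))


def grm_item_information(theta, a, thresholds):
    """Information of one graded response item at trait level theta.

    a: discrimination; thresholds: ascending category boundaries b_1..b_{K-1}.
    """
    # Cumulative probabilities P(X >= k), padded with 1 and 0 at the ends.
    cum = [1.0] + [logistic(a * (theta - b)) for b in thresholds] + [0.0]
    info = 0.0
    for k in range(len(cum) - 1):
        p_k = cum[k] - cum[k + 1]  # probability of responding in category k
        # Derivative of the category probability with respect to theta.
        dp_k = a * (cum[k] * (1 - cum[k]) - cum[k + 1] * (1 - cum[k + 1]))
        if p_k > 0:
            info += dp_k ** 2 / p_k
    return info


def scale_information(theta, items):
    """Sum of item informations; items is a list of (a, thresholds) pairs."""
    return sum(grm_item_information(theta, a, b) for a, b in items)


def standard_error(theta, items):
    return 1.0 / math.sqrt(scale_information(theta, items))


# Hypothetical items: low discrimination, thresholds located at high trait levels.
items = [(0.8, [0.5, 1.5, 2.5, 3.0, 3.5])] * 3

for theta in (-2.0, 0.0, 2.0):
    print(theta, round(scale_information(theta, items), 3),
          round(standard_error(theta, items), 3))
```

With thresholds concentrated at high trait levels, as would be expected for strongly positively skewed items, the information is larger and the standard error smaller at high than at low values of θ.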

Discussion

This study tested the performance of the Resistance to Framing scale. In an online survey among Bulgarian (N = 245) and North American (N = 261) university students, we planned to examine the scale’s psychometric properties, structural validity, and measurement invariance between sites. However, some of these examinations were not possible because the scale displayed low and mostly non-significant inter-item correlations as well as low item-total correlations. This suggests problems with the scale at a basic level of conceptualization, namely that the items may not represent the same content domain (Piedmont, 2014).

Confirmatory factor analyses suggested that the one-factor (one Resistance to Framing factor) and the two-factor (resistance to risky-choice framing and resistance to attribute framing) models fit the data. However, this good fit may be caused by the low inter-item correlations, low factor loadings, and low reliability (reliability paradox; McNeish & Wolf, 2021). We, therefore, cannot draw any conclusions about the factor structure, except that based on the inter-item correlations neither of the structures seemed to be supported.

The reliability was low in both sites, meaning that the individual items are only weakly related to each other and that participants’ observed scores do not reflect their true susceptibility to framing. The scale’s reliability in the present study was especially low compared to previous estimates. This could have resulted from the homogeneous nature of our student samples which displayed relatively low susceptibility to framing, thereby restricting variability and reducing reliability (Hedge et al., 2018). It could also have resulted from the short time interval between the two framing blocks, which may have made it easy for participants to recognize that the task pairs are objectively equivalent and respond consistently across frames (but see Aczel et al., 2018). Exploratory item response theory analyses in the Bulgarian sample suggested that the scale can measure high levels of susceptibility to framing relatively accurately, while it is inaccurate for low levels. This means that the scale should be used with caution, especially in samples where low susceptibility to framing is expected, such as, presumably, university students.

In addition, low reliability may result in biased correlation estimates between Resistance to Framing and other measures (Nimon et al., 2012). To correct for measurement error, we recommend computing disattenuated correlations or using latent variable modeling when using the scale. However, we would like to emphasize that this solution does not capture the full effect of measurement error on results. The best approach would be to improve the scale’s reliability, particularly since poor reliability has been a pervasive problem of the Resistance to Framing scale and also of similar measures (e.g., Stanovich et al., 2016). Recently, a new risky-choice framing scale has become available (Berthet, 2021). Berthet was able to improve the scale’s reliability (Cronbach’s α = .15 in Study 1 vs. .74 in Study 2) by increasing the number of items from four to eight and by slightly tweaking the cover stories in each pair of framing tasks (e.g., an accident caused by an autonomous vehicle vs. a train), while keeping the numbers and overall topic identical. The same approach may be applied to improve the reliability of both the risky-choice and the attribute framing items of the Resistance to Framing scale. A positive result may not only represent good news for framing researchers but also indicate that using identical tasks is suboptimal for within-participants designs.
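The disattenuation mentioned above follows the classical correction for attenuation, in which an observed correlation is divided by the square root of the product of the two reliabilities. A minimal sketch is given below; the observed correlation and the reliability of the second measure are hypothetical, whereas .46 corresponds to the Bulgarian one-factor ω reported above.

```python
import math


def disattenuated_correlation(r_xy, reliability_x, reliability_y):
    """Classical correction for attenuation: r_xy / sqrt(rel_x * rel_y)."""
    if not (0 < reliability_x <= 1 and 0 < reliability_y <= 1):
        raise ValueError("Reliabilities must lie in the interval (0, 1].")
    return r_xy / math.sqrt(reliability_x * reliability_y)


# Hypothetical observed correlation of .20 with a second measure (reliability .80).
corrected = disattenuated_correlation(0.20, 0.46, 0.80)
print(round(corrected, 2))  # 0.33
```

With reliabilities this low, the corrected estimate can differ markedly from the observed one and may even exceed 1 in extreme cases, which is one reason to interpret such corrections cautiously.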

An additional problem concerns the scoring of the scale. The original scoring system, also adopted in similar framing measures (Stanovich et al., 2016), is to average over the absolute differences in ratings of paired framing tasks. However, the framing effect is directional (see also Berthet, 2021), that is, higher risk-seeking is expected in the loss versus the gain frame, and higher approval is expected when an object is described in positive versus negative terms. [Footnote 6] Based on theory, it is reasonable to use directional rather than absolute differences. Directional scoring is problematic, however, because inconsistencies in opposite directions cancel each other out, which underestimates susceptibility to framing in individuals who display “negative” framing effects on certain problems. Not surprisingly, Berthet (2021) reported that absolute scoring increased the internal consistency of his risky-choice framing scale relative to directional scoring. This paradox of better theoretical justification leading to less optimal measurement should be kept in mind when using the scale. As LeBoeuf and Shafir (2003) noted, within-participants administration of the same framing tasks measures the ability to stay consistent across frames, not the ability to resist the initial influence of the frame. The choice of the scoring system may, therefore, depend on what should be measured, and which question should be answered.
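The cancellation problem of directional scoring can be seen in a small numerical sketch. The signed differences below are hypothetical and are assumed to be coded such that positive values reflect a shift in the theoretically expected direction.

```python
def directional_score(signed_differences):
    """Mean of signed differences; opposite shifts cancel each other out."""
    return sum(signed_differences) / len(signed_differences)


def absolute_score(signed_differences):
    """Mean of absolute differences; every inconsistency counts."""
    return sum(abs(d) for d in signed_differences) / len(signed_differences)


# Hypothetical participant: two shifts in the expected direction and
# two equally large "negative" framing effects.
differences = [2, -2, 1, -1]

print(directional_score(differences))  # 0.0
print(absolute_score(differences))     # 1.5
```

Under directional scoring, this participant appears fully resistant to framing, although the ratings differed between frames on every item.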

Limitations

This study has several limitations. First, we found no clear answers to the questions concerning the scale’s structure and measurement invariance, due to low inter-item correlations. Second, we did not assess the convergent and discriminant validity of the scale, which would have allowed for a more comprehensive assessment, especially of the psychometric properties. Last, generalizability may be limited due to homogeneous samples including highly educated, young, and in Bulgaria mainly female individuals. Generalizability may be further limited due to the short time frame between the two administrations and the relatively high dropout rate, although such rates are common for online surveys (Galesic, 2006).

Summary

In sum, this study points to three potential problems with the Resistance to Framing scale. First, low inter-item and item-total correlations suggest that the items of the scale may not represent the same content domain. Second, due to these low correlations, it remains unclear whether the scale’s factor structure is one- or two-dimensional. Last, the low reliability of the scale may prevent accurate measurement of susceptibility to framing, especially among highly resistant individuals. By providing open code and data (https://osf.io/j5n6f), we support researchers in testing the scale with other samples (e.g., general population, different languages and countries) to obtain a comprehensive picture of its performance.

Data availability

This work was preregistered on the Open Science Framework (OSF; https://osf.io/uj5rz). The datasets generated during and/or analyzed during the current study are available in the OSF (https://osf.io/j5n6f).

Code Availability

The code used to prepare and analyze the data can be found on the OSF (https://osf.io/j5n6f). We used R (Version 4.0.2; R Core Team, 2020) and the R packages base (R Core Team, 2020), dplyr (Wickham, François, et al., 2020), ggplot2 (Wickham, 2016), grateful (Rodriguez-Sanchez, 2018), influence.SEM (Altoé & Pastore, 2018), lavaan (Rosseel, 2012), mirt (Chalmers, 2012), mokken (Van der Ark, 2007), MVN (Korkmaz et al., 2014), parameters (Lüdecke et al., 2020), pastecs (Grosjean & Ibanez, 2018), psych (Revelle, 2019), purrr (Henry & Wickham, 2020), readxl (Wickham & Bryan, 2019), Routliers (Delacre & Klein, 2019), sem (Fox et al., 2020), semPlot (Epskamp, 2019), semTools (Jorgensen et al., 2020), stringi (Gagolewski, 2020), tidyr (Wickham et al., 2020), tidyverse (Wickham et al., 2019), and viridis (Garnier, 2018).

Notes

Footnote 2. In most studies, the mean absolute difference is subtracted from the potential maximum (5), so that lower scores represent lower Resistance to Framing rather than lower susceptibility to framing.

Footnote 3. We deviated from our preregistered criterion of 3.5 median absolute deviations of the overall time (Leys et al., 2019) because many participants completed the survey faster than 10 min (n = 51 of 341 in North America and n = 7 of 282 in Bulgaria). Keeping the preregistered criterion would have resulted in a low median and thus in the inclusion of many responses with an unreasonably short completion time.

Footnote 4. We used robust maximum-likelihood estimation, as multivariate normality was violated according to the Henze-Zirkler test (MVN, Version 5.8, Korkmaz et al., 2014; Bulgaria: HZ = 1.43, p < .001, North America: HZ = 1.58, p < .001).

Footnote 5. We planned to evaluate model fit based on dynamic fit indices (McNeish & Wolf, 2021), as models based on a scale with low reliability attain better fit than models based on a highly reliable scale when the standard fixed cutoff values are used (Hu & Bentler, 1999), the so-called reliability paradox (Hancock & Mueller, 2011). Dynamic fit indices were, however, not available for these models (https://www.dynamicfit.app/cfa/), so we used the standard cut-off values, which were preregistered as a backup plan.

Footnote 6. This point was also raised by Jonathan Baron in a personal communication.

References

Aczel, B., Szollosi, A., & Bago, B. (2018). The effect of transparency on framing effects in within-subject designs. Journal of Behavioral Decision Making, 31(1), 25–39. https://doi.org/10.1002/bdm.2036

Allred, G. A. (2018). Examining the influence of executive resources and mathematical abilities on framing biases [Doctoral dissertation, University of Nevada, Las Vegas]. UNLV. https://digitalscholarship.unlv.edu/cgi/viewcontent.cgi?article=4210&context=thesesdissertations

Baron, J., & High II, D. (2019). People who endorse actively open-minded thinking (AOT) are sensitive to cues indicating AOT of sources [Poster presentation]. Society for Judgment and Decision-Making Annual Meeting, Montréal, Canada. http://www.sjdm.org/presentations/2019-Poster-Baron-Jonathan-endorse-AOT-cues.pdf

Bavolar, J. (2013). Validation of the adult decision-making competence in Slovak students. Judgment & Decision Making, 8(3), 386–392.

Berthet, V. (2021). The measurement of individual differences in cognitive biases: A review and improvement. Frontiers in Psychology, 12, Article 630177. https://doi.org/10.3389/fpsyg.2021.630177

Bloomfield, A. N. (2006). Group size and the framing effect. Memory & Cognition, 34(4), 929–937. https://doi.org/10.3758/BF03193438

Boateng, G. O., Neilands, T. B., Frongillo, E. A., Melgar-Quiñonez, H. R., & Young, S. L. (2018). Best practices for developing and validating scales for health, social, and behavioral research: A primer. Frontiers in Public Health, 6, 149. https://doi.org/10.3389/fpubh.2018.00149

Bruine de Bruin, W., Parker, A. M., & Fischhoff, B. (2007). Individual differences in adult decision-making competence. Journal of Personality and Social Psychology, 92(5), 938–956. https://doi.org/10.1037/0022-3514.92.5.938

Chan, E. K. H. (2014). Standards and guidelines for validation practices: Development and evaluation of measurement instruments. In B. D. Zumbo & E. K. H. Chan (Eds.), Validity and validation in social, behavioral, and health sciences (pp. 9–24). Springer.

Chick, C. F., Reyna, V. F., & Corbin, J. C. (2016). Framing effects are robust to linguistic disambiguation: A critical test of contemporary theory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 42(2), 238–256. https://doi.org/10.1037/xlm0000158

Cieciuch, J., & Davidov, E. (2015). Establishing measurement invariance across online and offline samples: A tutorial with the software packages Amos and Mplus. Studia Psychologica: Theoria et Praxis, 2(15), 83–99. https://doi.org/10.21697/sp.2015.14.2.06

Crișan, D. R., Tendeiro, J., & Meijer, R. (2019). The crit value as an effect size measure for violations of model assumptions in Mokken scale analysis for binary data. PsyArxiv. https://doi.org/10.31234/osf.io/8ydmr

Del Missier, F., Mäntylä, T., & Bruine de Bruin, W. (2012). Decision-making competence, executive functioning, and general cognitive abilities. Journal of Behavioral Decision Making, 25(4), 331–351. https://doi.org/10.1002/bdm.731

Fagley, N. S., & Miller, P. M. (1997). Framing effects and arenas of choice: Your money or your life? Organizational Behavior and Human Decision Processes, 71(3), 355–373. https://doi.org/10.1006/obhd.1997.2725

Finn, B. (2008). Framing effects on metacognitive monitoring and control. Memory & Cognition, 36(4), 813–821. https://doi.org/10.3758/MC.36.4.813

Forero, C. G., & Maydeu-Olivares, A. (2009). Estimation of IRT graded response models: limited versus full information methods. Psychological Methods, 14(3), 275–299. https://doi.org/10.1037/a0015825

Galesic, M. (2006). Dropouts on the web: Effects of interest and burden experienced during an online survey. Journal of Official Statistics, 22(2), 313–328.

Hancock, G. R., & Mueller, R. O. (2011). The reliability paradox in assessing structural relations within covariance structure models. Educational and Psychological Measurement, 71(2), 306–324. https://doi.org/10.1177/0013164410384856

Hedge, C., Powell, G., & Sumner, P. (2018). The reliability paradox: Why robust cognitive tasks do not produce reliable individual differences. Behavior Research Methods, 50(3), 1166–1186. https://doi.org/10.3758/s13428-017-0935-1

Hoffmann, J. A., Bareuther, L., Schmidt, R., & Dettmers, C. (2020). The relation between memory and decision-making in multiple sclerosis patients. Multiple Sclerosis and Related Disorders, 37, Article e101433. https://doi.org/10.1016/j.msard.2019.101433

Hu, L. T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6(1), 1–55. https://doi.org/10.1080/10705519909540118

Koo, M., & Skinner, H. (2005). Challenges of internet recruitment: A case study with disappointing results. Journal of Medical Internet Research, 7(1), Article e6. https://doi.org/10.2196/jmir.7.1.e6

Kühberger, A., & Gradl, P. (2013). Choice, rating, and ranking: Framing effects with different response modes. Journal of Behavioral Decision Making, 26(2), 109–117. https://doi.org/10.1002/bdm.764

LeBoeuf, R. A., & Shafir, E. (2003). Deep thoughts and shallow frames: On the susceptibility to framing effects. Journal of Behavioral Decision Making, 16(2), 77–92. https://doi.org/10.1002/bdm.433

Levin, I. P., & Gaeth, G. J. (1988). How consumers are affected by the framing of attribute information before and after consuming the product. Journal of Consumer Research, 15(3), 374–378. https://www.jstor.org/stable/2489471

Levin, I. P., Schneider, S. L., & Gaeth, G. J. (1998). All frames are not created equal: A typology and critical analysis of framing effects. Organizational Behavior and Human Decision Processes, 76(2), 149–188. https://doi.org/10.1006/obhd.1998.2804

Leys, C., Delacre, M., Mora, Y. L., Lakens, D., & Ley, C. (2019). How to classify, detect, and manage univariate and multivariate outliers, with emphasis on pre-registration. International Review of Social Psychology, 32(1), 1–10. https://doi.org/10.5334/irsp.289

Liang, S., & Zou, Y. (2018). Validation of adult decision-making competence in Chinese college students. Judgment & Decision Making, 13(4), 393–400.

Mandel, D. R., & Kapler, I. V. (2018). Cognitive style and frame susceptibility in decision-making. Frontiers in Psychology, 9, Article e1461. https://doi.org/10.3389/fpsyg.2018.01461

Mäntylä, T., Still, J., Gullberg, S., & Del Missier, F. (2012). Decision making in adults with ADHD. Journal of Attention Disorders, 16(2), 164–173. https://doi.org/10.1177/1087054709360494

McNeish, D., & Wolf, M. G. (2021, October 25). Dynamic fit index cutoffs for confirmatory factor analysis models. Psychological Methods. Advance online publication. https://doi.org/10.1037/met0000425

Molenaar, I. W., & Sijtsma, K. (2000). MSP5 for Windows [Computer program]. Groningen, The Netherlands: ProGAMMA.

Muraki, E. (1992). A generalized partial credit model: Application of an EM algorithm. ETS Research Report Series, 1992(1), 1–35. https://doi.org/10.1002/j.2333-8504.1992.tb01436.x

Nguyen, T. H., Han, H. R., Kim, M. T., & Chan, K. S. (2014). An introduction to item response theory for patient-reported outcome measurement. The Patient - Patient-Centered Outcomes Research, 7(1), 23–35. https://doi.org/10.1007/s40271-013-0041-0

Nimon, K., Zientek, L. R., & Henson, R. K. (2012). The assumption of a reliable instrument and other pitfalls to avoid when considering the reliability of data. Frontiers in Psychology, 3, Article e102. https://doi.org/10.3389/fpsyg.2012.00102

Parker, A. M., & Fischhoff, B. (2005). Decision-making competence: External validation through an individual-differences approach. Journal of Behavioral Decision Making, 18(1), 1–27. https://doi.org/10.1002/bdm.481

Pennycook, G., Cheyne, J. A., Barr, N., Koehler, D. J., & Fugelsang, J. A. (2015). On the reception and detection of pseudo-profound bullshit. Judgment and Decision Making, 10(6), 549–563.

Piedmont, R. L. (2014). Inter-item correlations. In A. C. Michalos (Ed.), Encyclopedia of quality of life and well-being research. Springer. https://doi.org/10.1007/978-94-007-0753-5_1493

Piñon, A., & Gambara, H. (2005). A meta-analytic review of framing effect: Risky, attribute and goal framing. Psicothema, 17(2), 325–331.

Piryaei, S., Khademi Ashkzari, M., Nejati, V., Arshadi, N., & Talkhabi, M. (2017). Cognitive functions and the model of decision-making competence: The specific case of organizational setting. International Journal of Behavioral Sciences, 11(2), 67–73. http://www.behavsci.ir/article_67975.html

Rachev, N. R., Geiger, S. J., Vintr, J., Bühler, V., Kirilova, D., Nabutovsky, A., & Nelsson, J. (2022). Actively open-minded thinking, bullshit receptivity, and susceptibility to framing: Evaluating the dual process account in North America and Bulgaria. European Journal of Psychological Assessment. https://doi.org/10.1027/1015-5759/a000685

Samejima, F. (1969). Estimation of latent ability using a response pattern of graded scores. Psychometrika, 34(1), 1–97.

Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2012). A 21 word solution. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2160588

Stanovich, K. E., West, R. F., & Toplak, M. E. (2016). The rationality quotient: Toward a test of rational thinking. MIT Press.

Tabachnick, B. G., Fidell, L. S., & Ullman, J. B. (2007). Using multivariate statistics (Vol. 5, pp. 481–498). Pearson.

Tversky, A., & Kahneman, D. (1981). The framing of decisions and the psychology of choice. Science, 211(4481), 453–458. https://doi.org/10.1126/science.7455683

Whitney, P., Rinehart, C. A., & Hinson, J. M. (2008). Framing effects under cognitive load: The role of working memory in risky decisions. Psychonomic Bulletin & Review, 15(6), 1179–1184. https://doi.org/10.3758/PBR.15.6.1179

R Package Citations

Altoé, G., & Pastore, M. (2018). influence.SEM: Case influence in Structural Equation Models (Version 2.2) [Computer software]. https://CRAN.R-project.org/package=influence.SEM

Chalmers, R. P. (2012). mirt: A multidimensional item response theory package for the R environment (Version 1.33.2) [Computer software]. Journal of Statistical Software, 48(6), 1–29. https://doi.org/10.18637/jss.v048.i06

Delacre, M., & Klein, O. (2019). Routliers: Robust outliers detection (Version 0.0.0.3) [Computer software]. https://CRAN.R-project.org/package=Routliers

Epskamp, S. (2019). semPlot: Path diagrams and visual analysis of various SEM packages’ output (Version 1.1.2) [Computer software]. https://CRAN.R-project.org/package=semPlot

Fox, J., Nie, Z., & Byrnes, J. (2020). sem: Structural Equation Models (Version 3.1-11) [Computer software]. https://CRAN.R-project.org/package=sem

Gagolewski, M. (2020). R package stringi: Character string processing facilities (Version 1.5.3) [Computer software]. http://www.gagolewski.com/software/stringi/

Garnier, S. (2018). viridis: Default color maps from ’Matplotlib’ (Version 0.5.1) [Computer software]. https://CRAN.R-project.org/package=viridis

Grosjean, P., & Ibanez, F. (2018). pastecs: Package for analysis of space-time ecological series (Version 1.3.21) [Computer software]. https://CRAN.R-project.org/package=pastecs

Henry, L., & Wickham, H. (2020). purrr: Functional programming tools (Version 0.3.4) [Computer software]. https://CRAN.R-project.org/package=purrr

Jorgensen, T. D., Pornprasertmanit, S., Schoemann, A. M., Rosseel, Y., Miller, P., Quick, C., Garnier-Villarreal, M., Selig, J., Boulton, A., Preacher, K., Coffman, D., Rhemtulla, M., Robitzsch, A., Enders, C., Arslan, R., Clinton, B., Panko, P., Merkle, E., Chesnut, S., … Mansolf, M. (2020). semTools: Useful tools for Structural Equation Modeling (Version 0.5-3) [Computer software]. https://CRAN.R-project.org/package=semTools

Korkmaz, S., Goksuluk, D., & Zararsiz, G. (2014). MVN: An R package for assessing multivariate normality. The R Journal, 6(2), 151–162. https://journal.r-project.org/archive/2014-2/korkmaz-goksuluk-zararsiz.pdf

Lüdecke, D., Ben-Shachar, M. S., Patil, I., & Makowski, D. (2020). parameters: Extracting, computing and exploring the parameters of statistical models using R. Journal of Open Source Software, 5(53), Article e2445. https://doi.org/10.21105/joss.02445

R Core Team (2020). R: A language and environment for statistical computing (Version 4.0.2) [Computer software]. R Foundation for Statistical Computing. https://www.R-project.org/

Revelle, W. (2019). psych: Procedures for psychological, psychometric, and personality research (Version 1.9.12.31) [Computer software]. https://CRAN.R-project.org/package=psych

Rodriguez-Sanchez, F. (2018). grateful: Facilitate citation of R packages (Version 0.0.2) [Computer software]. https://github.com/Pakillo/grateful

Rosseel, Y. (2012). lavaan: An R package for Structural Equation Modeling. Journal of Statistical Software, 48(2), 1–36. http://www.jstatsoft.org/v48/i02/

Van der Ark, L. A. (2007). Mokken scale analysis in R. Journal of Statistical Software, 20(11), 1–19. https://www.jstatsoft.org/article/view/v020i11

Wickham, H. (2016). ggplot2: Elegant graphics for data analysis. Springer-Verlag. https://ggplot2.tidyverse.org

Wickham, H., & Bryan, J. (2019). readxl: Read Excel files (Version 1.3.1) [Computer software]. https://CRAN.R-project.org/package=readxl

Wickham, H., Averick, M., Bryan, J., Chang, W., D’Agostino McGowan, L., François, R., Grolemund, G., Hayes, A., Henry, L., Hester, J., Kuhn, M., Pedersen, T. L., Miller, E., Milton Bache, S., Müller, K., Ooms, J., Robinson, D., Paige Seidel, D., Spinu, V., … Yutani, H. (2019). Welcome to the tidyverse. Journal of Open Source Software, 4(43), Article e1686. https://doi.org/10.21105/joss.01686

Wickham, H., François, R., Henry, L., & Müller, K. (2020). dplyr: A grammar of data manipulation (Version 1.0.2) [Computer software]. https://CRAN.R-project.org/package=dplyr

Acknowledgments

We thank Vanessa Bühler, Desislava Kirilova, Anna Nabutovsky, and Joakim Nelsson for assisting with the data collection.

Funding

Open access funding provided by University of Vienna. This research was partially supported by grants from the Faculty of Philosophy at Sofia University St. Kliment Ohridski and the University of Toronto.

Author information

Contributions

Sandra J. Geiger: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Visualization, Writing-original draft preparation

Jáchym Vintr: Investigation, Methodology, Validation, Writing-review & editing

Nikolay R. Rachev: Funding acquisition, Investigation, Methodology, Supervision, Writing-original draft preparation

Ethics declarations

Conflict of interest

We have no conflicts of interest to disclose.

Ethics Approval

This study was performed in line with the principles of the Declaration of Helsinki. Approval was granted by the Ethics Committee of Sofia University St. Kliment Ohridski and the University of Toronto.

Consent to participate

Informed consent was obtained from all individual participants included in the study.

Consent for publication

Not applicable.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Geiger, S.J., Vintr, J. & Rachev, N.R. A reassessment of the Resistance to Framing scale. Behav Res 55, 2320–2332 (2023). https://doi.org/10.3758/s13428-022-01876-7

DOI: https://doi.org/10.3758/s13428-022-01876-7