Abstract

Brain and behavioural asymmetries have been documented in various taxa. Many of these asymmetries involve preferential left and right eye use. However, measuring eye use through manual frame-by-frame analyses from video recordings is laborious and may lead to biases. Recent progress in technology has allowed the development of accurate tracking techniques for measuring animal behaviour. Amongst these techniques, DeepLabCut, a Python-based tracking toolbox using transfer learning with deep neural networks, offers the possibility to track different body parts with unprecedented accuracy. Building on the capabilities of DeepLabCut, we developed Visual Field Analysis, an additional open-source application for extracting eye use data. To our knowledge, this is the first application that can automatically quantify left–right preferences in eye use. Here we test the performance of our application in measuring preferential eye use in young domestic chicks. The comparison with manual scoring methods revealed a near-perfect correlation with the measures of eye use obtained by Visual Field Analysis. With our application, eye use can be analysed reliably, objectively and at a fine scale in different experimental paradigms.


Introduction

Accurate quantification of animal behaviour is crucial to understanding its underlying mechanisms. Historically, behavioural measurements were collected manually. However, with technological progress, automated data collection and analyses have expanded (Anderson & Perona, 2014), making behavioural analyses more precise, reliable and effortless for the experimenter (Lemaire et al., 2021; Versace et al., 2020a, b; Wood & Wood, 2019). Thus, computational ethology constitutes a promising research avenue, especially for research on laterality (Vallortigara, 2021).

Automated data collection may allow finer and more objective behavioural analyses than the ones provided by manual coding. However, many of the currently available tracking techniques and software can be complex to use and even inaccurate in some experimental conditions (such as in poor and changing illumination, low contrast, etc.). The open-source toolbox DeepLabCut copes with these limitations (Mathis et al., 2018; Nath et al., 2019). DeepLabCut exploits deep learning techniques to track animals’ movements with unprecedented accuracy, without the need to apply any marker on the body of the animal (Labuguen et al., 2019; Mundorf et al., 2020; Worley et al., 2019; Wu et al., 2019), opening a new range of possibilities for measuring animal behaviour such as behavioural asymmetry data or preferential eye use. While different markerless software can track animals’ body parts (e.g. EthoVision XT, ANY-maze), we focus on DeepLabCut because it is open-source, accurate, does not require a pre-specified experimental setting and is widespread in behavioural research.

It is now clear that structural and functional asymmetries, once believed to be unique to humans, are widespread among vertebrates (Halpern et al., 2005; Rogers, 2015; Rogers et al., 2013; Vallortigara & Versace, 2017; Versace & Vallortigara, 2015) and invertebrates (Frasnelli, 2013; Frasnelli et al., 2012; Rogers, 2015). The study of sensory and perceptual asymmetries is a powerful tool for understanding functional lateralization, especially in animals with laterally placed eyes. For instance, in non-mammalian models, researchers can take advantage of anatomical features causing most of the information coming from each eye system to be processed by the contralateral brain hemisphere (Vallortigara & Versace, 2017). For example, birds have an almost complete decussation of the fibres at the optic chiasma (Cowan et al., 1961) and limited connections between the hemispheres due to a lack of corpus callosum (Andrew, 1991; Mihrshahi, 2006). Therefore, the information entering the left eye is mainly processed by the right hemisphere, and vice versa. With such anatomical structure, the preferential use of one eye most likely reflects the hemisphere in action. To date, temporary occlusion of one eye has been the main method used for behavioural investigation of eye asymmetries (Andrew, 1991; Chiandetti, 2017; Chiandetti et al., 2014; Chiandetti & Vallortigara, 2019; Güntürkün, 1985; Vallortigara, 1992; Vallortigara et al., 1999). However, studying spontaneous eye use without monocular occlusions is important to shed light on the lateralization of naturalistic behaviours. Indeed, a considerable amount of evidence has shown that animals actively use one or the other visual hemifield depending on the task (De Santi et al., 2001; Güntürkün & Kesch, 1987; Prior et al., 2004; Rogers, 2014; Santi et al., 2002; Schnell et al., 2018; Sovrano et al., 1999; Tommasi et al., 2000; Vallortigara et al., 1996) and/or motivational/emotional state (Andrew, 1983; Bisazza et al., 1998; De Boyer Des Roches et al., 2008; Larose et al., 2006).

A widely used method for testing preferences in eye use is the frame-by-frame analysis of video recordings (Fagot et al., 1997; Rogers, 2019). A drawback of this procedure is that it is tedious and may introduce errors and biases (Anderson & Perona, 2014). Moreover, in animals with two foveae (or a ramped fovea) like birds, it is necessary to distinguish the use of frontal and lateral visual fields, making manual coding of these data even more complicated (Lemaire et al., 2019; Vallortigara et al., 2001). To address these issues, we developed an application for the automatic recording of eye use preferences for comparative neuroethological research. This application, named Visual Field Analysis, is based on DeepLabCut tracking (Nath et al., 2019) and enables eye use scoring as well as other behavioural measurements (see Josserand & Lemaire, 2020 for more details).

Measuring eye use is particularly relevant for species that have laterally placed eyes and therefore use their frontal and lateral visual fields differently (e.g., among birds, domestic chicks and king penguins, Vallortigara et al., 2001; Lemaire et al., 2019). The current study aims to experimentally validate the main function of Visual Field Analysis: scoring preferential eye use in animals. To do so, we assessed the accuracy of Visual Field Analysis in scoring preferential eye use of domestic chicks (Gallus gallus), while looking at an unfamiliar stimulus, in comparison with traditional manual scoring (an approach that has been used, for instance, in Dharmaretnam & Andrew, 1994; De Santi et al., 2001; Vallortigara et al., 2001; Rogers et al., 2004; Dadda & Bisazza, 2016; Schnell et al., 2016). Note that our application can also be used to measure other variables such as the level of locomotor activity (a measurement used in open field or runway tests, e.g., Gallup & Suarez, 1980; Gould et al., 2009; Ogura & Matsushima, 2011), and the time spent by an animal in different areas of a test arena (a measure widely used in recognition, generalization and spontaneous preference tests, which measure animals’ preferences between two or more stimuli, Wood, 2013; Rosa-Salva et al., 2016; Versace et al., 2016, Versace et al. 2020). For further details on the functioning and current limitations of this application, please see the full protocol published by Josserand and Lemaire (2020).

Methods

Subjects

The experimental procedures were approved by the Ethical Committee of the University of Trento and licenced by the Italian Health Ministry (permit number 53/2020). We used 10 chicks of undetermined sex (strain Ross 308). The eggs were obtained from a commercial hatchery (Azienda Agricola Crescenti) and incubated at the University of Trento under controlled conditions (37.7 °C and 40% humidity). Three days before hatching, we moved the eggs into a hatching chamber (37.7 °C and 60% humidity). Soon after hatching, the chicks were housed together in a rectangular cage (150 × 80 × 40 cm) in standard environmental conditions (30 °C and homogeneous illumination, adjusted to follow a natural day/night cycle) and in groups of a maximum of 40 individuals. Food (chick starter crumbs) and water were available ad libitum. The animals were maintained in these conditions for three days, until the test was performed. After the test, all animals were donated to local farmers.

Test

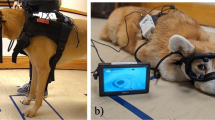

The test took place on the third day post-hatching. Each chick was moved into an adjacent room and placed in a smaller experimental cage (45 × 20 × 30 cm) to begin the pretest habituation phase, which usually lasted about 30 minutes. The cage had a round opening (4 cm), and during the habituation phase the animal could pass its head through it at will, to inspect an additional empty compartment (20 × 20 × 30 cm, see Fig. 1). Young chicks tend to spontaneously perform this behaviour when given the opportunity. Once a subject was confidently passing its head through the opening, the test phase proper began, and a red cylinder (5 cm high, 2 cm in diameter) was placed in the additional compartment (20 cm away). The subject’s head was then gently placed through the round opening by the experimenter and the animal was manually kept in this position for 30 seconds (Fig. 1). The behaviour of each animal was recorded throughout these 30 seconds with an overhead camera (GoPro Hero 5, 1290 × 720, ~ 25–30 fps). Each animal was tested only once.

Fig. 1 Schematic representation of the testing condition for Experiment 1 (top view). In chicks, eyes are placed laterally on the head’s side, creating wide monocular visual fields often used for visual exploration of objects, and a small binocular overlap (see also Fig. 3). Due to the structure of the visual field of chicks (and other birds with laterally placed eyes), the right monocular visual field is projected on the right eye and the left visual field is projected on the left eye

Data acquisition using Visual Field Analysis

Data acquisition

To perform data acquisition, Visual Field Analysis requires three main inputs for each analysed subject (Fig. 2). The first input is the video recording of the animal’s behaviour. The second input is a file containing information about the tracking (x, y coordinates) of specific body parts (output file provided by DeepLabCut). Visual Field Analysis focuses on three points located on the head: the closest points to the left eye, the right eye and the top of the head. For the current experiment, the positions of these three points were manually labelled on 100 frames so that DeepLabCut could accurately generalize each point of interest on all video recordings. The third input corresponds to a spreadsheet where the experimenter manually enters specific information about the observed animal. Further information is provided in our protocol (Josserand & Lemaire, 2020).

To proceed with data acquisition, the extent of the frontal and lateral visual fields for the species under investigation must also be defined. In the current study, we subdivided the visual field as follows: two frontal visual fields (each 15° wide from the midline, Fig. 3), two lateral visual fields (each 135° wide starting from the frontal visual field line, Fig. 3) and the blind visual field (30° wide starting from the lateral visual field line, Fig. 3).
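The subdivision described above can be sketched as a small lookup. This is only an illustration, not the code of Visual Field Analysis: the function name `classify_angle`, the constant names and the sign convention (angles measured from the head midline, negative to the left, positive to the right) are assumptions introduced here.

```python
# Angular widths (degrees) used in the current study, per side of the head.
FRONTAL_DEG = 15.0   # frontal field: from the midline to 15 degrees
LATERAL_DEG = 135.0  # lateral field: from 15 to 150 degrees
BLIND_DEG = 30.0     # blind field: from 150 to 180 degrees


def classify_angle(angle_deg: float) -> str:
    """Return the visual field that contains a given direction.

    Assumed convention (not taken from the protocol): the angle is measured
    from the head midline, negative values to the left, positive to the right.
    """
    # Wrap any angle into the interval [-180, 180).
    a = (angle_deg + 180.0) % 360.0 - 180.0
    # A direction exactly on the midline is arbitrarily assigned to the right.
    side = "left" if a < 0 else "right"
    magnitude = abs(a)
    if magnitude <= FRONTAL_DEG:
        return f"frontal_{side}"
    if magnitude <= FRONTAL_DEG + LATERAL_DEG:
        return f"lateral_{side}"
    return "blind"


print(classify_angle(-10))   # a direction 10 degrees left of the midline
print(classify_angle(100))   # a direction 100 degrees right of the midline
```

The three widths sum to 180° on each side, so every direction around the head falls in exactly one region. For another species, only the three constants would need to change.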

Fig. 3 Schematic representation of a chick and its visual fields defined for the experiments. The yellow midline separates the left visual field from the right visual field. The green lines show the borders of the frontal vision (from the midline, 15° on each side), and the blue lines show the blind spot of the chick. Each angle can be manually chosen in the Visual Field Analysis program

Using the visual fields previously defined and the location of the stimuli, the program assesses in which portion (frontal or lateral) of the hemifield (left or right) each stimulus falls in each frame. For each frame, if a stimulus is located entirely within a visual field, a value of 1 is attributed to that visual field (see the light blue dashed line in Fig. 4a). If the stimulus is straddling two visual fields, the proportion of the object located within each visual field is attributed to each one of them (see the light green dashed line in Fig. 4b). The output through which Visual Field Analysis provides eye use data varies depending on the location and number of stimuli. In this experiment, Visual Field Analysis computed eye use for one stimulus.
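The scoring rule can be illustrated with a minimal sketch. It assumes that the stimulus is summarised by the angular interval it spans relative to the head midline and that the proportion is measured over that angular extent. The text does not specify how the proportion is computed internally, so the function `field_scores` and the `FIELDS` table are hypothetical.

```python
# Angular borders (degrees from the midline, left negative) of each scored field,
# using the subdivision of the current study. Anything outside is the blind field.
FIELDS = {
    "lateral_left": (-150.0, -15.0),
    "frontal_left": (-15.0, 0.0),
    "frontal_right": (0.0, 15.0),
    "lateral_right": (15.0, 150.0),
}


def field_scores(stim_start_deg: float, stim_end_deg: float) -> dict:
    """Share of the stimulus' angular extent that falls within each visual field."""
    low, high = sorted((stim_start_deg, stim_end_deg))
    extent = high - low
    if extent <= 0:
        raise ValueError("the stimulus must span a non-zero angular extent")
    scores = {}
    for name, (field_low, field_high) in FIELDS.items():
        # Length of the overlap between the stimulus interval and the field interval.
        overlap = max(0.0, min(high, field_high) - max(low, field_low))
        scores[name] = overlap / extent
    return scores


# Stimulus entirely inside the right lateral field: that field scores 1.
print(field_scores(20.0, 40.0))
# Stimulus straddling the frontal/lateral border on the right side.
print(field_scores(10.0, 30.0))
```

In the second call, a quarter of the stimulus lies in the right frontal field and three quarters in the right lateral field. The sketch ignores stimuli that wrap around the back of the head (across ±180°), which fall in the blind region in this setup.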

Fig. 4 Visualization of the projection lines defining each region of the visual field. The yellow line indicates the midline and delimits each hemifield, providing the nasal margin of the frontal visual fields. The green lines delimit the frontal visual fields from the lateral visual fields. The blue lines delimit the lateral visual fields from the blind spot. In these pictures, referring to the setup of the current experiment, the visual field used to look at the stimulus is shown, which can be compared to the information reported on the left side of the pictures (within the dark rectangles on the top and bottom left corners of the images). Since our application allows the recording of visual field use for up to two simultaneously presented objects, in these images we can see two grey rectangles (reporting information on the eye use for each of the two objects). In the current example, where only one stimulus was present, the relevant information is presented in the grey rectangle in the upper part of the image (referring to the top stimulus), while the other can be ignored. A value is assigned to every visual field, indicating whether the stimulus was located inside it. A value of 1 for a given visual field indicates that the stimulus is located entirely within that visual field, such as in Fig. 4a. However, the stimulus can be straddling two visual fields, such as in Fig. 4b. Consequently, the program attributes different values depending on the portion of the stimuli extent (light blue dashed lines) located in a visual field (the stimulus extent is here defined by its borders)

Error threshold setting

As a strategy to identify and exclude frames inaccurately tracked by DeepLabCut, we implemented an error threshold approach. For each video, the experimenter can set an error threshold that specifies the acceptable range of distances between the three points tracked on the head of the animal. The error threshold is based on the average distance between each pair of body parts tracked by DeepLabCut and excludes frames in which this distance deviates too far from the average. As an example, the average distance between the ‘leftHead’ and ‘rightHead’ points was 58 pixels for subject 5 of the current experiment. For this animal, we chose a threshold of 3. This threshold corresponds to the number of standard deviations above which a frame is considered an outlier; thus, the absolute value of the threshold in terms of pixels is variable. With a threshold set at 3, 1.86% of the frames were preliminarily labelled as outliers. These frames were then manually inspected to assess the accuracy of this process and could be removed from our analysis if visual observation confirmed them to be outliers (Fig. 5). The threshold used for each subject of the current experiment and the percentage of frames manually checked and excluded from the analyses at these different steps are reported in Table 1.
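A minimal sketch of this error threshold logic is given below. It is not the implementation used by Visual Field Analysis: the function `flag_outlier_frames`, the use of all three pairwise distances and the use of the population standard deviation are assumptions made for illustration.

```python
from itertools import combinations
from math import dist
from statistics import mean, pstdev


def flag_outlier_frames(frames, threshold=3.0):
    """Return indices of frames whose inter-label distances are outliers.

    frames: list of dicts mapping a label name (e.g. 'leftHead') to (x, y) pixels.
    threshold: number of standard deviations beyond which a frame is flagged.
    """
    labels = sorted(frames[0])
    flagged = set()
    for a, b in combinations(labels, 2):
        # Distance between the two labels in every frame of the video.
        distances = [dist(frame[a], frame[b]) for frame in frames]
        average, sd = mean(distances), pstdev(distances)
        if sd == 0:
            continue  # no variation for this pair, nothing to flag
        for index, d in enumerate(distances):
            if abs(d - average) > threshold * sd:
                flagged.add(index)
    return sorted(flagged)


# Toy data: 49 plausible frames (eyes 58 px apart) and one mistracked frame.
good = {"leftHead": (0, 0), "rightHead": (58, 0), "topHead": (29, 20)}
bad = {"leftHead": (0, 0), "rightHead": (300, 0), "topHead": (29, 20)}
frames = [good] * 10 + [bad] + [good] * 39
print(flag_outlier_frames(frames, threshold=3.0))
```

Because the threshold is expressed in standard deviations, the same value of 3 corresponds to a different number of pixels in each video, as noted above. The flagged frames are candidates only; in the procedure described here they were inspected visually before exclusion.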

Fig. 5 Two of the frames considered outliers in our example (from subject 5 of the current experiment), with a threshold of 3. The red circles on the images highlight the position of the labels. In image 5A, the chick has not placed its head inside the round opening yet, but DeepLabCut incorrectly placed the ‘leftHead’ (blue dot), ‘topHead’ (green dot) and ‘rightHead’ (red dot) on an empty portion of the screen, close to the stimulus. In image 5B, the chick started to insert its head in the round opening, but most of it is still invisible. DeepLabCut incorrectly located the ‘leftHead’, ‘topHead’ and ‘rightHead’ labels on the animal’s beak.

To help users choose an appropriate threshold and check the program’s accuracy, Visual Field Analysis has a built-in function to visualize frames. It is possible to visualize the frames removed from the analysis given the chosen error threshold, in addition to a set of randomly selected frames which are provided by the program to assess its accuracy.

Manual coding of the data

To assess the reliability of the eye use data provided by Visual Field Analysis, we manually checked all the frames analysed by Visual Field Analysis (7639 frames in total). The frames were checked and saved using a built-in function provided by Visual Field Analysis. Each frame was inspected by two coders, each of whom coded all the frames independently of the other. Then, the two coders compared their output, re-inspected all the frames to which they had assigned different codings and agreed on a final labelling of these frames.

The manual scores were attributed using the same score attribution method and the same visual field subdivisions as Visual Field Analysis. On each frame, the coders superimposed a transparent sheet, on which five lines originating from a central point represented the subdivisions of the chick visual field (see Fig. 3). For each frame, if the visual field delimitation lines of the transparent sheet used for manual scoring overlapped perfectly with the lines used by Visual Field Analysis for the attribution of the scoring (as visible in Fig. 4), the original automated scoring was considered accurate. In this case, the original scoring provided by Visual Field Analysis was also reported as the manual score. If there was not a perfect overlap, the original scoring was considered inaccurate and the frame was ‘relabelled’, providing new values for manual scoring. In this case, the same criteria as described above were followed: if the stimulus fell entirely within a visual field, a value of 1 was attributed to it. If the stimulus straddled two visual fields, the proportion of the stimulus located within each visual field was attributed to each one of them (e.g., 0.75 and 0.25).

Statistical analyses

The scores obtained for each visual field (frontal left, frontal right, lateral left and lateral right) were compared between scoring methods (Visual Field Analysis vs manual coding) using correlation tests (Pearson’s test) overall and per individual. An estimation of the number of frames needed to achieve sufficient statistical power for the correlation analysis was run prior to the study, assuming an r value of 0.9 (given the high accuracy of the tracking methodologies). According to this estimation, it would be sufficient to code only seven frames to achieve a statistically significant correlation. However, we deemed this to be insufficient to provide a reliable validation of our software. Thus, in order to provide the most precise estimation possible of the accuracy of our method, we decided to code all the frames available, greatly surpassing the minimum number required to achieve sufficient statistical power. As some videos were better tracked by DeepLabCut than others, we report the reliability of our program in relation to the tracking accuracy (measured as the percentage of frames that received identical scoring in the manual and automated scoring). The statistical tests were performed using RStudio version 4.0.2 (RStudio Team, 2020).
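The text does not report which procedure or parameters were used for the sample size estimation. As a plausibility check only, the sketch below applies the common Fisher z approximation with a two-sided α of 0.05 and a power of 0.80 (both values are assumptions, as is the function name `frames_needed`). Under these assumptions, an expected r of 0.9 yields seven observations.

```python
from math import atanh, ceil
from statistics import NormalDist


def frames_needed(r: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size needed to detect a Pearson correlation r.

    Uses the Fisher z approximation: n = ((z_alpha + z_beta) / atanh(r))**2 + 3.
    alpha is two-sided; both default values are assumptions, not from the study.
    """
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)
    z_beta = standard_normal.inv_cdf(power)
    return ceil(((z_alpha + z_beta) / atanh(r)) ** 2 + 3)


print(frames_needed(0.9))
```

Weaker expected correlations require far more observations under the same formula, which illustrates why a very high expected r leads to such a small minimum.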

Results

Eye use data reliability

The Pearson’s correlation tests revealed an almost perfect, and highly significant, correlation between scoring methods, both when the data for the whole sample were taken into consideration and when the analysis was run at the single subject level. This was true for each visual field (see Table 1 for statistics).

The number of frames for which the manual scoring of the human coders differed from that assigned by Visual Field Analysis (relabelled frames) is detailed for each subject in Table 1. No discrepancy between the manual coding and the automated coding was found for subjects 8 and 10. Thus, for these subjects, no frames were relabelled, and a perfect correlation was, of course, found between manual and automated scoring. One should note that the reliability of our application is directly dependent on the DeepLabCut tracking accuracy, which differs across conditions (different video settings) and individuals (different behaviours). When the DeepLabCut tracking was 100% accurate, the output produced by Visual Field Analysis perfectly matched the manual scoring done by the human coders.

For the eight remaining subjects, the tracking accuracy (i.e., the percentage of frames that received identical scoring in the manual and automated scoring) fluctuated from 65.4 to 99.9%. Nonetheless, the reliability of the program for scoring eye use remained relatively high in all conditions (see Table 1 for statistics). Even when the tracking accuracy was at its lowest (65.4% for subject 9), the correlation between the coding provided by Visual Field Analysis and the manual coding remained strong for most visual fields (Pearson’s r ranging from 0.77 to 0.97), although it decreased in the frontal right visual field (Pearson’s r = 0.63).
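The two summary measures used in this section can be sketched as follows. The original analysis was run in R; the Python functions `tracking_accuracy` and `pearson_r` and the toy scores below are illustrative assumptions, not the authors’ scripts or data.

```python
from math import sqrt


def tracking_accuracy(automated, manual):
    """Percentage of frames that received identical automated and manual scores."""
    identical = sum(a == m for a, m in zip(automated, manual))
    return 100.0 * identical / len(automated)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Made-up scores for one visual field over five frames.
automated = [1.0, 1.0, 0.0, 0.75, 0.0]
manual = [1.0, 1.0, 0.0, 0.25, 0.0]
print(tracking_accuracy(automated, manual))
print(pearson_r(automated, manual))
```

The toy example shows why the two measures can diverge: a relabelled frame lowers the accuracy regardless of how large the change is, whereas the correlation is affected in proportion to the size of the discrepancy. Note that `pearson_r` is undefined when either list is constant.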

Discussion

Automated and reliable assessments of visual field use can support the investigation of behavioural lateralization. Our results show that Visual Field Analysis can be reliably used to automatically assess eye use behaviour in animals with laterally placed eyes, replacing manual coding methods. The comparison between the manual and the automated scoring revealed a nearly perfect correlation between manual score and Visual Field Analysis score.

Under optimal tracking conditions, the results provided by the application can be 100% reliable (i.e., identical to those obtained with manual scoring). Visual Field Analysis excludes from the analyses frames with a low degree of likelihood (i.e., frames that DeepLabCut considered as being unlikely to be well tracked), thus keeping only frames with a level of confidence of being well tracked above 95%. It also excludes frames where the distance between DeepLabCut’s labels is higher than a given threshold. Note that the two measures are often correlated: when DeepLabCut tracking is imperfect, both the number of frames considered as unlikely to be well tracked and the number of frames considered as outliers with the threshold method are high. Therefore, most of the frames that could be wrongly tracked are excluded from the analysis. Moreover, using a built-in function of our application, the user can manually visualize a certain number of random frames of a video to check the program performance. We suggest visualizing at least 100 frames per individual and checking that the tracking accuracy exceeds 90%, in order to achieve a performance similar to what is described in the current study. If the tracking accuracy is lower than 90%, we suggest training DeepLabCut again using a new set of frames or a different labelling method.
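The likelihood-based exclusion can be sketched in a few lines. DeepLabCut reports a likelihood value for each tracked label in each frame. Whether Visual Field Analysis applies the 95% cutoff to every label or to a combined value is not stated here, so the sketch assumes that all three head labels must exceed it; the function name `keep_confident_frames` is hypothetical.

```python
def keep_confident_frames(likelihoods, cutoff=0.95):
    """Return indices of frames in which every label exceeds the likelihood cutoff.

    likelihoods: list of dicts mapping a label name to its likelihood (0 to 1).
    """
    return [
        index
        for index, frame in enumerate(likelihoods)
        if min(frame.values()) > cutoff
    ]


# Toy values for three frames; the second frame has one poorly tracked label.
likelihoods = [
    {"leftHead": 0.99, "rightHead": 0.98, "topHead": 0.99},
    {"leftHead": 0.99, "rightHead": 0.40, "topHead": 0.97},
    {"leftHead": 0.96, "rightHead": 0.97, "topHead": 0.99},
]
print(keep_confident_frames(likelihoods))
```

In practice this filter would be applied before the distance-based error threshold, so that the mean and standard deviation of the inter-label distances are not distorted by frames already known to be poorly tracked. That ordering is a design suggestion, not a documented behaviour of the application.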

Our application opens a new range of possibilities for laterality research. It can be adapted to different species, when tested in controlled setups, and provides fast and reliable data acquisition. To date, we have run preliminary tests of our application with honeybees and zebrafish (unpublished data), in addition to domestic chicks, revealing good tracking performance with all these species. From the technical point of view, therefore, Visual Field Analysis offers sufficient flexibility to be employed in any species, as long as the visual field dimensions are known and the position of the eyes can be reliably estimated when it is video-recorded from above. We thus consider this application as also highly suited to studies that compare the performance of different species, especially among animals with laterally placed eyes and relatively weak inter-hemispheric connections (such as many non-mammalian vertebrates). In animals with frontally placed eyes and stronger inter-hemispheric coupling, eye use studies are less straightforward to interpret and thus less common. However, this is more reflective of the biological constraints of these species than a specific limitation of the current software, which in principle could be adapted to a variety of vertebrate species. For instance, even in the case of animals with frontally placed eyes, Visual Field Analysis can be used to measure the total amount of time spent looking toward a stimulus, regardless of the visual field used. This method could hence address issues of subjective coding on human and non-human animal playback experiments in which the position of the head is scored.

One of the advantages of Visual Field Analysis is that it allows the accurate processing of vast amounts of data, which would normally be unfeasible to process with manual coding methods. For instance, this kind of data can be easily gathered in cross-sectional studies in which the behaviour of the animals is continuously observed for protracted periods of time (rather than sampled at periodic intervals), as has been recently done in filial imprinting studies in chicks (Lemaire et al., 2021). Moreover, longitudinal studies investigating the ontogenesis and development of the same individuals over extended periods of their life span could also benefit from the ability to effortlessly process large quantities of data, as allowed by Visual Field Analysis. Note that a large amount of data can be processed even for short-duration tasks by recording the animal behaviour with high frame rates. This is particularly relevant to species moving their heads at a very high speed such as birds (Kress et al., 2015) and insects (Boeddeker et al., 2010; Hateren & Schilstra, 1999). The use of our application to encode visual field data could be thus particularly beneficial for these kinds of research.

Moreover, Visual Field Analysis not only assesses simple preferential eye use (whether the left or right eye is preferred); it can also investigate the use of sub-regions within each hemifield (frontal visual field vs lateral visual field), allowing the analysis of eye use behaviour at a fine level, which is very time-consuming if done manually, as performed by Lemaire and collaborators (2019) in king penguins. Alongside measuring eye use, the application allows the recording of other relevant behavioural measurements, such as the activity level of an animal’s head, while keeping track of its positions in different areas of a testing environment (for this last function, the performance of Visual Field Analysis was already validated in a previous study, showing once again very high correlation with the measurements obtained by traditional manual coding methods; Santolin et al., 2020). In addition, Visual Field Analysis can extract data on the movements of the heads of the animals, quantifying the amount of motion of this body part. This can be informative about changes in eye use and head saccades, a behaviour present in birds which is correlated with arousal (Golüke et al., 2019; Kjærsgaard et al., 2008). These other behavioural measurements provide additional information that can be analysed in relation to eye use behaviour or independently from it, allowing for richer behavioural assessments and more flexible use in different experimental designs.

However, our data acquisition technique is entirely dependent on tracking accuracy: a key component most tracking software still struggles with. To provide optimal results, in most tracking software the video recording quality has to be pretested and adjusted, while the experimental settings must be similar throughout the whole data acquisition phase. By using deep learning techniques, DeepLabCut started to overcome those limitations. For instance, DeepLabCut can be trained to recognize animal body parts in a wide range of scenarios as long as the video recordings are of sufficient quality. Moreover, DeepLabCut even allows the tracking of multiple animals at the same time (Lauer et al., 2021). In its current version, however, Visual Field Analysis allows the tracking of only one animal at a time, within an orthogonal arena. Moreover, the current version of this application provides only limited flexibility in the shape of the experimental arena and in the placement of the stimuli within it (see Josserand & Lemaire, 2020 for further details). Since this represents potentially the greatest limitation of this software, we are actively working to update Visual Field Analysis so that it can be used in a greater variety of experimental designs, both in terms of stimuli placement and in the shape of the experimental arena.

Given the numerous practical advantages offered by automated behaviour tracking methods compared to manual ones, we believe that automated methods should be chosen to ensure reproducible data analysis. Visual Field Analysis offers an important resource for research on behavioural lateralization, enabling the collection and analysis of a richer set of data, in a less time-consuming and more unbiased way.

References

Anderson DJ, Perona P. 2014. Toward a science of computational ethology. Neuron 84(1):18–31. https://doi.org/10.1016/j.neuron.2014.09.005. https://linkinghub.elsevier.com/retrieve/pii/S0896627314007934

Andrew RJ. 1983. Lateralization of emotional and cognitive function in higher vertebrates, with special reference to the domestic chick. In: Ewert JP, Capranica RR, Ingle DJ, editors. Advances in Vertebrate Neuroethology. NATO Advanced Science Institutes Series (Series A: Life Sciences), vol 56. Springer, Boston, MA. https://doi.org/10.1007/978-1-4684-4412-4_22

Andrew RJ. 1991. Neural and behavioural plasticity: the use of the domestic chick as a model. Oxford University Press.

Bisazza A, Facchin L, Pignatti R, Vallortigara G. 1998. Lateralization of detour behaviour in poeciliid fish: the effect of species, gender and sexual motivation. Behavioural Brain Research 91(1–2):157–164. https://doi.org/10.1016/S0166-4328(97)00114-9. https://linkinghub.elsevier.com/retrieve/pii/S0166432897001149

Boeddeker N, Dittmar L, Stürzl W, Egelhaaf M. 2010. The fine structure of honeybee head and body yaw movements in a homing task. Proceedings of the Royal Society B 277:1899–1906. https://doi.org/10.1098/rspb.2009.2326

Chiandetti C. 2017. Manipulation of strength of cerebral lateralization via embryonic light stimulation in birds. p. 611–631. https://doi.org/10.1007/978-1-4939-6725-4_19

Chiandetti C, Vallortigara G. 2019. Distinct effect of early and late embryonic light-stimulation on chicks’ lateralization. Neuroscience 414:1–7. https://doi.org/10.1016/j.neuroscience.2019.06.036. https://linkinghub.elsevier.com/retrieve/pii/S0306452219304634

Chiandetti C, Pecchia T, Patt F, Vallortigara G. 2014. Visual hierarchical processing and lateralization of cognitive functions through domestic chicks’ eyes. Bingman VP, editor. PLoS One 9(1):e84435. https://doi.org/10.1371/journal.pone.0084435

Cowan WM, Adamson L, Powell TP. 1961. An experimental study of the avian visual system. Journal of Anatomy 95(Pt 4):545–563. https://pubmed.ncbi.nlm.nih.gov/13881865

Dadda M, Bisazza A. 2016. Early visual experience influences behavioral lateralization in the guppy. Animal Cognition 19(5):949–958. https://doi.org/10.1007/s10071-016-0995-0

De Boyer Des Roches A, Richard-Yris M-A, Henry S, Ezzaouïa M, Hausberger M. 2008. Laterality and emotions: visual laterality in the domestic horse (Equus caballus) differs with objects’ emotional value. Physiology & Behavior 94(3):487–490. https://doi.org/10.1016/j.physbeh.2008.03.002. https://linkinghub.elsevier.com/retrieve/pii/S0031938408000759

De Santi A, Sovrano VA, Bisazza A, Vallortigara G. 2001. Mosquitofish display differential left- and right-eye use during mirror image scrutiny and predator inspection responses. Animal Behaviour 61(2):305–310. https://doi.org/10.1006/anbe.2000.1566. https://linkinghub.elsevier.com/retrieve/pii/S0003347200915665

Dharmaretnam M, Andrew RJ. 1994. Age- and stimulus-specific use of right and left eyes by the domestic chick. Animal Behaviour 48(6):1395–1406. https://doi.org/10.1006/anbe.1994.1375. https://linkinghub.elsevier.com/retrieve/pii/S0003347284713753

Fagot J, Rogers L, Ward J. 1997. Hemispheric specialisation in animals and humans: introduction. Laterality Asymmetries Body, Brain Cognition 2(3–4):177–178. https://doi.org/10.1080/713754272. http://www.tandfonline.com/doi/abs/10.1080/713754272

Frasnelli E. 2013. Brain and behavioral lateralization in invertebrates. Frontiers in Psychology 122:153–208. https://doi.org/10.1007/978-1-4939-6725-4_6

Frasnelli E, Vallortigara G, Rogers LJ. 2012. Left–right asymmetries of behaviour and nervous system in invertebrates. Neuroscience and Biobehavioral Reviews 36(4):1273–1291. https://doi.org/10.1016/j.neubiorev.2012.02.006. https://linkinghub.elsevier.com/retrieve/pii/S0149763412000322

Gallup GG, Suarez SD. 1980. An ethological analysis of open-field behaviour in chickens. Animal Behaviour 28(2):368–378. https://doi.org/10.1016/S0003-3472(80)80045-5. https://linkinghub.elsevier.com/retrieve/pii/S0003347280800455

Golüke S, Bischof H-J, Engelmann J, Caspers BA, Mayer U. 2019. Social odour activates the hippocampal formation in zebra finches (Taeniopygia guttata). Behavioural Brain Research 364:41–49. https://doi.org/10.1016/j.bbr.2019.02.013. https://linkinghub.elsevier.com/retrieve/pii/S0166432819301007

Gould TD, Dao DT, Kovacsics CE. 2009. The open field test. In: Gould TD, editor. Mood and anxiety related phenotypes in mice: characterization using behavioral tests. Humana Press. p. 1–20. https://doi.org/10.1007/978-1-60761-303-9_1

Güntürkün O. 1985. Lateralization of visually controlled behavior in pigeons. Physiology & Behavior 34(4):575–577. https://doi.org/10.1016/0031-9384(85)90051-4. https://linkinghub.elsevier.com/retrieve/pii/0031938485900514

Güntürkün O, Kesch S. 1987. Visual lateralization during feeding in pigeons. Behavioral Neuroscience 101(3):433–435. https://doi.org/10.1037/0735-7044.101.3.433

Halpern ME, Güntürkün O, Hopkins WD, Rogers LJ. 2005. Lateralization of the vertebrate brain: taking the side of model systems. The Journal of Neuroscience 25(45):10351–10357. https://doi.org/10.1523/JNEUROSCI.3439-05.2005

Hateren JH, Schilstra C. 1999. Blowfly flight and optic flow. II. Head movements during flight. The Journal of Experimental Biology 202(11):1491–1500. https://doi.org/10.1242/jeb.202.11.1491

Josserand M, Lemaire BS. 2020. A step by step guide to using Visual Field Analysis. https://doi.org/10.17504/protocols.io.bicvkaw6

Kjærsgaard A, Pertoldi C, Loeschcke V, Witzner Hansen D. 2008. Tracking the gaze of birds. Journal of Avian Biology 39(4):466–469. https://doi.org/10.1111/j.0908-8857.2008.04288.x

Kress K, van Bokhorst E, Lentink D. 2015. How lovebirds maneuver rapidly using super-fast head saccades and image feature stabilization. PLoS One 10(7):e0133341. https://doi.org/10.1371/journal.pone.0133341

Labuguen R, Bardeloza DK, Negrete SB, Matsumoto J, Inoue K, Shibata T. 2019. Primate markerless pose estimation and movement analysis using DeepLabCut. In: 2019 Joint 8th International Conference on Informatics, Electronics & Vision (ICIEV) and 2019 3rd International Conference on Imaging, Vision & Pattern Recognition (icIVPR). IEEE. p. 297–300. https://ieeexplore.ieee.org/document/8858533/

Larose C, Richard-Yris M-A, Hausberger M, Rogers LJ. 2006. Laterality of horses associated with emotionality in novel situations. Laterality Asymmetries Body, Brain Cognition 11(4):355–367. https://doi.org/10.1080/13576500600624221

Lauer J, Zhou M, Ye S, Menegas W, Nath T, Rahman MM, Di Santo V, Soberanes D, Feng G, Murthy VN, et al. 2021. Multi-animal pose estimation and tracking with DeepLabCut. bioRxiv. https://doi.org/10.1101/2021.04.30.442096

Lemaire BS, Viblanc VA, Jozet-Alves C. 2019. Sex-specific lateralization during aggressive interactions in breeding king penguins. Rutz C, editor. Ethology. 125(7):439–449. https://doi.org/10.1111/eth.12868

Lemaire BS, Rucco D, Josserand M, Vallortigara G, Versace E. 2021. Stability and individual variability of social attachment in imprinting. Scientific Reports 11(1):7914. https://doi.org/10.1038/s41598-021-86989-3. http://www.nature.com/articles/s41598-021-86989-3

Mathis A, Mamidanna P, Cury KM, Abe T, Murthy VN, Mathis MW, Bethge M. 2018. DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nature Neuroscience 21(9):1281–1289. https://doi.org/10.1038/s41593-018-0209-y

Mihrshahi R. 2006. The corpus callosum as an evolutionary innovation. Journal of Experimental Zoology Part B: Molecular and Developmental Evolution 306B(1):8–17. https://doi.org/10.1002/jez.b.21067

Mundorf A, Matsui H, Ocklenburg S, Freund N. 2020. Asymmetry of turning behavior in rats is modulated by early life stress. Behavioural Brain Research 393:112807. https://doi.org/10.1016/j.bbr.2020.112807. https://linkinghub.elsevier.com/retrieve/pii/S0166432820305064

Nath T, Mathis A, Chen AC, Patel A, Bethge M, Mathis MW. 2019. Using DeepLabCut for 3D markerless pose estimation across species and behaviors. Nature Protocols 14(7):2152–2176. https://doi.org/10.1038/s41596-019-0176-0. https://www.biorxiv.org/content/early/2018/11/24/476531

Ogura Y, Matsushima T. 2011. Social facilitation revisited: increase in foraging efforts and synchronization of running in domestic chicks. Frontiers in Neuroscience 5. https://doi.org/10.3389/fnins.2011.00091. http://journal.frontiersin.org/article/10.3389/fnins.2011.00091/abstract

Prior H, Wiltschko R, Stapput K, Güntürkün O, Wiltschko W. 2004. Visual lateralization and homing in pigeons. Behavioural Brain Research 154(2):301–310. https://doi.org/10.1016/j.bbr.2004.02.018

Rogers LJ. 2014. Asymmetry of brain and behavior in animals: its development, function, and human relevance. genesis 52(6):555–571. https://doi.org/10.1002/dvg.22741

Rogers LJ. 2015. Brain and behavioral lateralization in animals. In: Wright JD, editor. International Encyclopedia of the Social & Behavioral Sciences. 2nd ed. Vol 2. Oxford: Elsevier. p. 799–805.

Rogers LJ. 2019. Left versus right asymmetries of brain and behaviour. MDPI. http://www.mdpi.com/books/pdfview/book/1889

Rogers LJ, Zucca P, Vallortigara G. 2004. Advantages of having a lateralized brain. Proceedings of the Royal Society B: Biological Sciences 271(SUPPL. 6):420–422. https://doi.org/10.1098/rsbl.2004.0200

Rogers LJ, Vallortigara G, Andrew RJ. 2013. Divided Brains. Cambridge University Press. http://ebooks.cambridge.org/ref/id/CBO9780511793899

Rosa-Salva O, Grassi M, Lorenzi E, Regolin L, Vallortigara G. 2016. Spontaneous preference for visual cues of animacy in naïve domestic chicks: The case of speed changes. Cognition 157:49–60. https://doi.org/10.1016/j.cognition.2016.08.014. https://linkinghub.elsevier.com/retrieve/pii/S0010027716302049

RStudio Team. 2020. RStudio: Integrated Development Environment for R. RStudio, PBC, Boston, MA. http://www.rstudio.com/

Santi A, Bisazza A, Vallortigara G. 2002. Complementary left and right eye use during predator inspection and shoal-mate scrutiny in minnows. Journal of Fish Biology 60(5):1116–1125. https://doi.org/10.1111/j.1095-8649.2002.tb01708.x

Santolin C, Rosa-Salva O, Lemaire BS, Regolin L, Vallortigara G. 2020. Statistical learning in domestic chicks is modulated by strain and sex. Scientific Reports 10(1):15140. https://doi.org/10.1038/s41598-020-72090-8. http://www.nature.com/articles/s41598-020-72090-8

Schnell AK, Hanlon RT, Benkada A, Jozet-Alves C. 2016. Lateralization of eye use in cuttlefish: opposite direction for anti-predatory and predatory behaviors. Frontiers in Physiology 7:620. https://doi.org/10.3389/fphys.2016.00620

Schnell AK, Bellanger C, Vallortigara G, Jozet-Alves C. 2018. Visual asymmetries in cuttlefish during brightness matching for camouflage. Current Biology 28(17):R925–R926. https://doi.org/10.1016/j.cub.2018.07.019. https://linkinghub.elsevier.com/retrieve/pii/S0960982218309187

Sovrano VA, Rainoldi C, Bisazza A, Vallortigara G. 1999. Roots of brain specializations: preferential left-eye use during mirror-image inspection in six species of teleost fish. Behavioural Brain Research 106(1–2):175–180. https://doi.org/10.1016/S0166-4328(99)00105-9. https://linkinghub.elsevier.com/retrieve/pii/S0166432899001059

Tommasi L, Andrew RJ, Vallortigara G. 2000. Eye use in search is determined by the nature of task in the domestic chick (Gallus gallus). Behavioural Brain Research 112(1–2):119–126. https://doi.org/10.1016/S0166-4328(00)00167-4. https://linkinghub.elsevier.com/retrieve/pii/S0166432800001674

Vallortigara G. 1992. Right hemisphere advantage for social recognition in the chick. Neuropsychologia 30(9):761–768. https://doi.org/10.1016/0028-3932(92)90080-6. https://linkinghub.elsevier.com/retrieve/pii/0028393292900806

Vallortigara G. 2021. Laterality for the next decade: computational ethology and the search for minimal condition for cognitive asymmetry. Laterality. https://doi.org/10.1080/1357650X.2020.18701.

Vallortigara G, Versace E. 2017. Laterality at the neural, cognitive, and behavioral levels. APA Handb Comp Psychol. 1. Basic C:557–577.

Vallortigara G, Regolin L, Bortolomiol G, Tommasi L. 1996. Lateral asymmetries due to preferences in eye use during visual discrimination learning in chicks. Behavioural Brain Research 74(1–2):135–143. https://doi.org/10.1016/0166-4328(95)00037-2. https://linkinghub.elsevier.com/retrieve/pii/0166432895000372

Vallortigara G, Regolin L, Pagni P. 1999. Detour behaviour, imprinting and visual lateralization in the domestic chick. Cognitive Brain Research 7(3):307–320. https://doi.org/10.1016/S0926-6410(98)00033-0. https://linkinghub.elsevier.com/retrieve/pii/S0926641098000330

Vallortigara G, Cozzutti C, Tommasi L, Rogers LJ. 2001. How birds use their eyes: opposite left-right specialization for the lateral and frontal visual hemifield in the domestic chick. Current Biology 11(1):29–33. https://doi.org/10.1016/S0960-9822(00)00027-0.

Versace E, Vallortigara G. 2015. Forelimb preferences in human beings and other species: multiple models for testing hypotheses on lateralization. Frontiers in Psychology 6. https://doi.org/10.3389/fpsyg.2015.00233

Versace E, Schill J, Nencini AMM, Vallortigara G. 2016. Naïve chicks prefer hollow objects. Rogers LJ, editor. PLoS One 11(11):e0166425. https://doi.org/10.1371/journal.pone.0166425

Versace E, Damini S, Stancher G. 2020a. Early preference for face-like stimuli in solitary species as revealed by tortoise hatchlings. Proceedings of the National Academy of Sciences of the United States of America 117(39):24047–24049. https://doi.org/10.1073/pnas.2011453117

Versace E, Caffini M, Werkhoven Z, de Bivort BL. 2020b. Individual, but not population asymmetries, are modulated by social environment and genotype in Drosophila melanogaster. Scientific Reports 10(1):4480. https://doi.org/10.1038/s41598-020-61410-7. http://www.nature.com/articles/s41598-020-61410-7

Wood JN. 2013. Newborn chickens generate invariant object representations at the onset of visual object experience. Proceedings of the National Academy of Sciences 110(34):14000–14005. https://doi.org/10.1073/pnas.1308246110

Wood SMW, Wood JN. 2019. Using automation to combat the replication crisis: a case study from controlled-rearing studies of newborn chicks. Infant Behavior & Development 57:101329. https://doi.org/10.1016/j.infbeh.2019.101329. https://linkinghub.elsevier.com/retrieve/pii/S0163638318301437

Worley NB, Djerdjaj A, Christianson JP. 2019. DeepLabCut analysis of social novelty preference. bioRxiv.:736983. https://doi.org/10.1101/736983. http://biorxiv.org/content/early/2019/08/15/736983.abstract

Wu H, Mu J, Da T, Xu M, Taylor RH, Iordachita I, Chirikjian GS. 2019. Multi-mosquito object detection and 2D pose estimation for automation of PfSPZ malaria vaccine production. In: 2019 IEEE 15th International Conference on Automation Science and Engineering (CASE). IEEE. p. 411–417. https://ieeexplore.ieee.org/document/8842953/

Acknowledgements

We thank Anastasia Morandi-Raikova for helping with the manual coding of the frames. We also thank Prof. Giorgio Vallortigara for funding MJ during her stay at CIMeC and for supporting this project. Finally, we thank Lise Lecroq for sharing the illustration in Fig. 1 with us.

Funding

Open access funding provided by Università degli Studi di Trento within the CRUI-CARE Agreement. This work was supported by the Fondazione Caritro. This project has also received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No 833504 SPANUMBRA).

Author information

Contributions

BL implemented DeepLabCut. MJ designed and coded VFA. BL did part of the manual coding. EV contributed to the experimental design and suggested ways to validate the VFA tool. BL wrote the first draft with critical edits from ORS and EV. All authors contributed to the manuscript.

Open Practices Statement

Visual Field Analysis is open-source and available in full on our GitHub. Videos and data are available on figshare (https://figshare.com/s/ecfc65e0bf4d562a0fc5). A step-by-step guide to running our program is available on protocols.io (Josserand and Lemaire 2020).

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Josserand, M., Rosa-Salva, O., Versace, E. et al. Visual Field Analysis: A reliable method to score left and right eye use using automated tracking. Behav Res 54, 1715–1724 (2022). https://doi.org/10.3758/s13428-021-01702-6

DOI: https://doi.org/10.3758/s13428-021-01702-6