Abstract

Psychology faces a measurement crisis, and mind-wandering research is not immune. The present study explored the construct validity of probed mind-wandering reports (i.e., reports of task-unrelated thought [TUT]) with a combined experimental and individual-differences approach. We examined laboratory data from over 1000 undergraduates at two U.S. institutions, who responded to one of four different thought-probe types across two cognitive tasks. We asked a fundamental measurement question: Do different probe types yield different results, either in terms of average reports (average TUT rates, TUT-report confidence ratings), or in terms of TUT-report associations, such as TUT rate or confidence stability across tasks, or between TUT reports and other consciousness-related constructs (retrospective mind-wandering ratings, executive-control performance, and broad questionnaire trait assessments of distractibility–restlessness and positive-constructive daydreaming)? Our primary analyses compared probes that asked subjects to report on different dimensions of experience: TUT-content probes asked about what they’d been mind-wandering about, TUT-intentionality probes asked about why they were mind-wandering, and TUT-depth probes asked about the extent (on a rating scale) of their mind-wandering. Our secondary analyses compared thought-content probes that did versus didn’t offer an option to report performance-evaluative thoughts. Our findings provide some “good news”—that some mind-wandering findings are robust across probing methods—and some “bad news”—that some findings are not robust across methods and that some commonly used probing methods may not tell us what we think they do. Our results lead us to provisionally recommend content-report probes rather than intentionality- or depth-report probes for most mind-wandering research.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

A common view is that any study finding an effect under noisy conditions provides evidence that the underlying effect is particularly strong and robust. Yet, statistical significance conveys very little information when measurements are noisy. In noisy research settings, poor measurement can contribute to exaggerated estimates of effect size. This problem and related misunderstandings are key components in a feedback loop that perpetuates the replication crisis in science (Loken & Gelman, 2017, p. 584).

…[P]erhaps, there are even some who are of the opinion that things are actually going rather well in psychological measurement. This is not the case. The daily practice of psychological measurement is plagued by highly questionable interpretations of psychological test scores, which are directly related to the lack of integration between psychometrics and psychology. (Borsboom, 2006, p. 426)

Studies of mind-wandering, therefore, highlight one of the fundamental paradoxes in studying conscious experience: without the capacity for metacognitive access to our experiences, studies of conscious experience would be almost impossible; however, our access to our own experience means that the method of inquiry as part of an experiment may fundamentally alter the conscious experience itself (Konishi & Smallwood, 2016, p. 5)

Psychology is striving to improve its methodological practices to increase the information value of its empirical literature and the soundness of its theory. Journals and other institutions (such as open-science archiving platforms and granting agencies) now support well-powered designs, preregistration of hypotheses and analysis plans, alternative statistical approaches, replication of important findings, and open sharing of code and data. Yet these solutions to questionable research practices deal only indirectly with questionable measurement practices that impede scientific progress (e.g., Borsboom, 2006). Meta-scientific research on psychology, particularly in domains using self-reports, highlights a history of such poor measurement that Flake and Fried (2020) suggest we are “plagued by a measurement schmeasurement attitude.”

Most self-report measures in counseling psychology, for example, were reported without any psychometric properties and the modal number of items per scale was one (Meier & Davis, 1990). In seven health journals (2007–2010), 40–93% of scales were reported with no validation evidence and 35–80% with no reliability statistics (Barry et al., 2014). Articles published on psychological scales in Emotion, from 2001–2011, mostly described non-validated, one-item measures of unknown reliability (Weidman et al., 2017). In the Journal of Personality and Social Psychology, 80% of self-report scales published in 2014 included reliability data, but many reliabilities were inadequate, and most articles provided no additional psychometric information (Flake et al., 2017). Even more concerning than weak reporting practices is that validity information may be missing systematically from the literature. Hussey and Hughes (2020) analyzed a large dataset including 15 published psychological scales (ns ≈ 6700 per scale) and found that scales with less published validity evidence showed poorer psychometric properties. Many self-report instruments in psychology appear to be of poor or unknown quality.

Measurement of mind wandering

In 2006, Smallwood and Schooler published their seminal review of the emerging scientific literature on mind wandering. The next decade and a half saw rapid growth in mind-wandering research, particularly within cognitive psychology and neuroscience (see Callard et al., 2013), but also across such diverse contexts as aeronautics and astronautics (e.g., Casner & Schooler, 2014; Gontier, 2017), education (e.g., Wammes, Boucher, et al., 2016; Wammes, Seli, et al., 2016), human factors (e.g., Burdett et al., 2019; Walker & Trick, 2018), lifespan development (e.g., Jackson & Balota, 2012; Soemer et al., 2019; Stawarczyk et al., 2014), personality (e.g., Perkins et al., 2015; Robison et al., 2017), philosophy (e.g., Irving, 2016; Metzinger, 2013), and psychopathology (e.g., Chen et al., 2021; Hoffmann et al., 2018; Lanier et al., 2021; Makovac et al., 2019). And, despite a research pace and impact that has supported numerous reviews and theoretical commentaries (e.g., Christoff & Fox, 2018; Klinger, 2013; Mildner & Tamir, 2019; Mittner et al., 2016; Smallwood & Andrews-Hanna, 2013; Smallwood & Schooler, 2015), little published work has focused on the validity of subjects’ self-reported mind-wandering experiences. This gap is surprising, given psychologists’ general caution regarding introspective methods. In 2018, however, Head and Helton published a critique of mind-wandering research practices of probing subjects’ thought content within tasks. Weinstein (2018) followed with a review identifying nearly 70 ways in which mind wandering had been assessed in thought-probe studies, all without considering potential consequences for valid measurement. These critiques reminded mind-wandering researchers of the potential perils of self-reports and the value of skeptically validating introspective data.

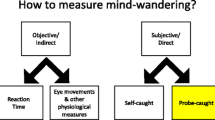

Psychological studies assess mind wandering in numerous ways that may vary in validity. Subjects might complete retrospective questionnaires about their mind-wandering frequency, or they might signal every time they realize they are mind-wandering during a task. The most common method, however, engages subjects in an activity that is unpredictably interrupted with thought probes to classify their immediately preceding thoughts (as an open-ended question, a continuous rating scale, or a forced choice among options). Such probed reports of task-unrelated thoughts (TUTs) should provide higher fidelity reports than other methods because they don’t require retrospection about ephemeral experiences (and aggregating them over minutes, hours, or weeks), and they don’t ask subjects to continuously monitor their subjective experiences.Footnote 1 The present study assesses the validity of such minimally retrospective, probed TUT reports.

General concerns about introspective self-reports

Psychological scientists may be among the most reliant on—and skeptical of—self-report data (Haeffel & Howard, 2010). That skepticism is long-standing, commonly understood to arise from the demise of Titchener’s structuralism (founded upon “systematic introspection”) and the ascendance of Watson’s behaviorism in the early 20th century.

Introspection and the imageless-thought controversy

As undergraduates learn in introductory courses (e.g., Anderson, 2005; Jahnke & Nowaczyk, 1998; Reisberg, 2016), early disagreements across laboratories about the existence of imageless thought could not be resolved from subjects’ first-person reports, thus leading the fledgling scientific field to abandon introspection as a central method. The problem with this narrative (see Hurlburt & Heavey, 2001), is that disagreements about imageless thought were not in the self-reports, but rather in theorists’ explanations for them. Monson and Hurlburt (1993) revisited the original investigations and found that it wasn’t only the subjects tested by the Külpe-Würzburg (pro-imageless-thought) school who described non-sensory, non-symbolic thoughts. Subjects tested in the anti-imageless-thought laboratories did, too, but their reports were explained away by the investigators. It was the theorists—not the subjects—who disagreed. Using modern methods, Hurlburt and colleagues regularly obtain reports of imageless, unsymbolized thinking (e.g., Heavey & Hurlburt, 2008; Hurlburt & Akhter, 2008). Irresolvable disagreements about introspections are thus not a likely barrier to valid TUT reports.

Faulty introspections biased by implicit causal theories

Hurlburt and Heavey (2001) noted a second concern about introspection: Self-reports are frequently biased and demonstrably incorrect (Nisbett & Wilson, 1977). People misreport reasons for their behaviors and experiences because: (a) many mental processes are consciously unavailable, yet (b) we have implicit theories to draw upon for “explanation” (see also Haidt et al., 2000; T. D. Wilson & Stone, 1985). These introspective errors are especially likely when subtle, surprising, or temporally distant events influence behavior.

Many skeptics of introspection forget, however, that Nisbett and Wilson (1977) distinguished the frequently erroneous self-reports about causal cognitive processes from those about the contents of consciousness (see also T. D. Wilson, 1994, 2002):

The individual…knows the focus of his attention at any given point in time; he knows what his current sensations are and has what almost all psychologists and philosophers would assert to be “knowledge” at least quantitatively superior to that of observers concerning his emotions, evaluations, and plans. (Nisbett & Wilson, 1977, p. 255)

Although people frequently err about the reasons why they feel, think, or do something, this does not imply that they frequently err about what they feel, think, or do. If thought reports focus on what is experienced rather than on why the experience came to be, then they should avoid biases from implicit causal theories. But introspective reports about consciousness can go awry in many ways, so obtaining maximally valid self-reports of mind-wandering is not trivial.

Descriptive experience sampling and careful reflections on valid self-reports

Hurlburt (1990, 1993, 2011) developed “Descriptive Experience Sampling” (DES) to study consciousness in daily life. Subjects wear an earpiece that beeps unpredictably; at each beep, subjects take stock (and written notes) of their momentary experience. Later, subjects engage in a collaborative interview with the investigator about each beep to clarify the experience. Perhaps because most DES reports can’t be corroborated by objective evidence (but see Hurlburt, 1993; Kühn et al., 2014), DES’s development has focused intensely on optimizing validity. Following Nisbett and Wilson (1977), subjects report experiences but not inferences about them to avoid bias from folk theories. Subjects report only experiences immediately preceding the signal to minimize forgetting and confabulation. To limit influence of implicit theories, subjects report on randomly selected episodes rather than on generalities or self-selected contexts. The introspection signal (i.e., beep) is clear and unambiguous to prevent interference from extraneous thoughts. DES minimizes demand characteristics by encouraging subjects to report all kinds of subjective experiences, to not lead the witness. Finally, the investigator and subject collaborate to clarify the subject’s vocabulary for describing experiences; subjects iteratively learn to communicate these experiences via feedback.

Whether or not DES is a “gold standard” self-report method, it is instructive to compare other methods to one that has taken validity so seriously. In fact, most studies that assess TUTs with thought probes share many DES features: Subjects typically report what they were thinking in the instant before the probe, and clearly signaled probes appear unpredictably. But some mind-wandering studies don’t meet these criteria, and most fall short of other DES principles. Let’s briefly consider three examples:

-

a)

Killingsworth and Gilbert (2010) probed over 2000 subjects for TUTs in daily life via a smartphone app. People receive many signals from their phones (e.g., calls, texts, notifications), so subjects likely didn’t always attend to their fleeting thought content at the signal. Moreover, subjects responded to a happiness question before reporting thought content, allowing further forgetting and, perhaps, reactivity to the mood question. Together, these design choices may have caused the higher-than-typical TUT rate found in this study (~50% vs. ~25–35%; Franklin, Mrazek, et al., 2013; Kane et al., 2007, Kane, Gross et al., 2017; Marcusson-Clavertz et al., 2016; McVay et al., 2009; Seli, Beaty et al., 2018; Song & Wang, 2012).

-

b)

Seli, Smilek, and colleagues have distinguished intentional and unintentional mind wandering (e.g., Seli et al., 2015; Seli, Risko, & Smilek, 2016a, 2016b), which is potentially critical for theory and practice (but see Murray and Krasich 2021). Probes ask whether thoughts were on-task, intentionally off-task, or unintentionally off-task. The potential concern is that subjects must convey not only the “what” of experience (i.e., whether thoughts were about the task), but also the “why” (i.e., whether mind-wandering was deliberate). Subjects’ reports might be influenced by implicit causal theories or by forgetting and confabulating how a TUT episode began or was maintained.

-

c)

Christoff, Irving, and colleagues argue that unconstrained thought movement is the defining feature of mind wandering (Christoff et al., 2016; Irving, 2016), and so Mills et al. (2018) asked subjects to report at each probe not only whether their thoughts were off-task but also whether they were freely moving. Although they instructed subjects to take a “mental snapshot” of the instant of the probe, thought movement cannot be determined from an instant, it must be monitored then recalled over time. Thus, probes for unconstrained, freely moving thought require retrospection over seconds, or minutes, and subjects may vary in how far back and how accurately they retrospect.

Although none of these examples is certain to reflect low fidelity self-reports, methods like these should prompt a skeptical consideration of validity.

Moreover, most mind-wandering studies using probed self-reports fail other DES criteria. They don’t consider demand characteristics of focusing subjects’ reports on, and repeatedly asking about, TUTs. Few studies describe how their instructions defined mind wandering, and so subjects may interpret the term or its connotations differently, biasing their reporting (see Seli, Kane, Smallwood, et al., 2018). Most investigators assume subjects comply with instructions to report only on immediately preceding thoughts, but Hurlburt and Heavey (2015) observe that many DES subjects only do so after iterative practice. Notably, few studies provide any thought-probe practice (Hu et al., 2012; Kane et al., 2016). Given the potential for bias and error, psychologists should critically examine the evidence for thought-report validity.

Evidence for probed TUT report validity

Construct validation involves the building and testing of a nomological net and the specification of causal processes for experiences and behaviors via inference from empirical findings (e.g., Borsboom et al., 2004; Cronbach & Meehl, 1955; Embretson, 1983). Researchers can thus find evidence in the mind-wandering literature that probed thought reports are valid. Even studies that were not conducted to assess the validity of TUT reports can inform whether they capture the construct.

We first consider measurement reliability as a condition for validity. In fact, individual differences in TUT rates are reliable across task and temporal contexts. Latent-variable studies that probe thoughts in multiple tasks across occasions find that TUT rates elicit a unitary factor (e.g., Hollis & Was, 2016; Kane et al., 2016; McVay & Kane, 2012b; Robison & Unsworth, 2017, 2018; Unsworth & McMillan, 2014; Unsworth & Robison, 2017b). People who mind-wander more in one task (and lab session) tend to mind-wander more in other tasks (and sessions), although TUT rates within laboratory tasks correlate only modestly-to-weakly with those from daily-life experience sampling (Kane, Gross et al. 2017; McVay et al., 2009). Measurable reliability of probed TUT rates does not guarantee the validity of thought reports, of course, because reliability might partially reflect consistency in implicit theories, (dis)honesty, or reactivity to task performance.

Substantive validity evidence comes from TUT rates varying in predictable ways with experimental manipulations of, and natural variation in, the activity context. Subjects report fewer TUTs during difficult than easy tasks (e.g., Rummel & Boywitt, 2014; Teasdale et al., 1993), during faster- than slower-paced tasks (e.g., Antrobus, 1968; Giambra, 1995), during less- than more-practiced tasks (e.g., Mason et al., 2007; Teasdale et al., 1995), and during earlier than later trials within a task (e.g., Antrobus et al., 1967; McVay & Kane, 2009; Thomson et al., 2014), even when some of these are manipulated between subjects, effectively blinding subjects to comparison of interest. TUTs also predict performance: Task error rates are higher, and RTs are more variable, immediately preceding TUT than on-task reports (e.g., Bastian & Sakur, 2013; McVay & Kane, 2012a; Seli, Cheyne, & Smilek, 2013; Smallwood et al., 2007; Wammes, Seli, et al., 2016), and subjects who report more TUTs also tend to perform worse (e.g., McVay & Kane, 2012b; Wammes, Seli, et al., 2016).

Of importance to validity, TUT–performance associations arise even: (a) for non-introspectable aspects of performance, such as intrasubject RT variability (e.g., Bastian & Sakur, 2013; Kam et al., 2012; McVay & Kane, 2012a); (b) in tasks without overt cues to performance, such as reading and lecture comprehension (e.g., Hollis & Was, 2016; Smallwood et al., 2008) and implicit learning (Franklin et al., 2016), and; (c) following errors that subjects have not detected and for subjects who detect few errors (Allen et al., 2013). Thus, TUT reports predict overt markers of inattention, even without performance feedback.

Finally, TUT reports covary with external indicators that are independent of subjects’ folk theories or performance reactivity. Psychophysiological and neuroscience methods demonstrate associations between TUT reports and pupil dilation (e.g., Franklin, Broadway, et al., 2013; Konishi et al., 2017; Unsworth & Robison, 2016), EEGs (e.g., Baird et al., 2014; Compton et al., 2019; Kam et al., 2011), and default-network activity (e.g., Christoff et al., 2009; Kucyi, 2018; Mittner et al., 2016). Ability constructs, such as working memory capacity and intelligence, predict TUT rates in lab tasks and daily-life contexts (e.g., Kane & McVay, 2012; Mrazek et al., 2012; Unsworth & Robison, 2017a). Subjects cannot intuit their neurophysiological responses, and they don’t know their standing on many cognitive constructs (and don’t have folk-theoretical commitments about their relations to TUTs), thus supporting the construct validity of TUT reports.

Together, these findings suggest that probed TUT reports are valid measures of mind wandering. We limited our discussion to results that were relatively immune to subjects’ beliefs, causal theories, or reactions to performance, thus providing compelling tests of construct validity. These studies, however, were not designed to skeptically evaluate validity and they didn’t compare probing methods to maximize validity or assess relative validity. We thus turn to recent research designed to interrogate the validity of TUT reports.

Explicit validation studies and concerns about probed mind-wandering reports

Few studies have rigorously evaluated the construct validity of probed thought reports. We first consider whether probes might alter subjects’ experiences or reports (Konishi & Smallwood, 2016). Next, we evaluate whether TUT reports may be contaminated by subjects’ awareness of task accuracy, explaining errors by inferring TUTs (Head & Helton, 2018). We then review the potentially biasing effects of different probe framings (Weinstein, 2018). Finally, we assess whether demand characteristics influence probed TUT reports.

Reactivity to probing

The reactivity of TUT reports to probing was assessed by four studies. Three tested whether probe rate affected TUT rates: Robison et al. (2019) probed subjects after 7% or 13% of task trials, Schubert et al. (2020) probed after 3% or 6% of trials, and Seli, Carriere, et al. (2013) presented 5–25 probes in a 15-min task . Results varied. Robison et al. (2019) found no probing effect on TUT rates, but both other studies found that higher probe rates yielded lower TUT rates. Regarding individual differences, Schubert et al. (2020) found no interactions of probe rate with theoretically informed covariates (such as working memory capacity) in predicting TUT rate. Varao-Sousa and Kingstone (2019) assessed whether students’ rate of self-caught TUTs during three lectures varied by including probes in only one of them. Self-caught TUTs (and their correlations with motivation and interest) did not differ across lectures. Thus, the few relevant studies provide inconsistent evidence that probe rate can alter reports, and none show probe rate to affect individual differences in TUTs.

Reactivity to performance

Head and Helton (2018) presented a go/no-go “SART” task with digits as the imperative stimuli; subjects in different between-subject conditions also saw incidental words or control screens between the digits, including prior to the final “catch trial” of the task. After the catch trial, subjects were asked whether they had just seen a word, then tried to recognize it among foils, and then reported their preceding thought content. Catch-trial TUT reports varied with catch-trial go/no-go accuracy, but not with memory performance. The authors thus argued that when probes follow no-go trials that frequently produce errors, performance appraisals bias subjects to report TUTs. In contrast, when performance accuracy is not salient, such as noticing an incidental word, TUT reports are not affected by performance. Unfortunately, Head and Hilton’s catch-trial method wasn’t a fair test because it virtually guaranteed invalid self-reports: It inserted multiple unexpected memory questions between the experience and report—thus interfering with access to the targeted conscious state; moreover, subjects made only the one thought report in the entire task, without forewarning, and only after reading multiple sentences defining mind wandering following the catch-trial memory tasks (D. Helton, personal communication, April 9, 2016).

Taking a more straightforward approach, however, Schubert et al. (2020) found evidence for reactivity by presenting probes after no-go and go SART trials, the former of which elicit salient errors. Subjects reported more TUTs following no-go than go trials, and more TUTs following no-go errors than accurate no-go responses. Both effects suggest some reactivity to performance. At the same time, go/no-go trial type did not interact with most variables of theoretical interest to predict TUTs, such as working memory capacity. The Schubert et al. (2020) findings thus indicate that TUT reports can be somewhat reactive to errors, affecting mean TUT rates, but they don’t convincingly demonstrate that mind wandering’s associations with other constructs reflect performance reactivity.Footnote 2

Biases from thought-probe framing

Four studies addressed Weinstein’s (2018) concern that the blithe proliferation of probing methods, without careful validation or consultation of self-report research (e.g., Krosnick, 1999; Schwarz, 1999), may hinder cumulative science. In two separate experiments, Robison et al. (2019) examined whether the instructional framing of mind-wandering as more positive or negative, or whether the number of thought-report options in each probe, would affect TUT reports. Instructional framing had no measurable effects. However, when probes offered only two response options (on-task vs. TUT), TUT rates were higher than with three response options (including “off-task” thoughts about one’s performance), and these were higher than with five options (including externally-oriented distractions and mind-blanking). Thus, subjects’ classification of TUTs may depend to some degree on the response options available.

Both Weinstein et al. (2018) and Schubert et al. (2020) tested whether subtle differences in probe framing influence TUT rates: Probes asked subjects whether they had just been mind-wandering (yes/no), or whether they had just been on-task (yes/no) in Weinstein et al. (2018), and probes presented the response options “on-task” on the left and “off-task” on the right—or vice versa—in Schubert et al. (2020). In Weinstein et al. (2018), mind-wandering-framed probes elicited higher TUT rates (M ± SD = 34 ± 23%) than did on-task-framed probes (23 ± 21%). In contrast, in Schubert et al. (2020), left–right framed probes had no effect on TUTs and no interactions with performance, probe rate, or working memory (a few higher-order, unpredicted interactions involving probe framing should be replicated before conclusions are warranted). In summary, probe framing and probe response options may have some modest effects on mean TUT rates, but we don’t yet have evidence for their effects in relation to experimental manipulations or individual differences.

Biases from demand characteristics

Two studies tested for demand characteristics. Zedelius et al. (2015) compared a control group to those told that an eyetracker would monitor their eyes while reading to verify their TUT reports. Probed TUT rates didn’t differ among groups. In contrast, Vinski and Watter (2012) primed honesty in half their subjects with a synonym task (Rasinski et al., 2005). Mean TUT rate during a subsequent SART was higher for the control than the honesty group, suggesting that controls over-reported TUTs. However, only the honesty group showed a prototypical RT effect, with faster go-trial RTs preceding TUTs than on task-reports, and it’s unclear why the control group did not. Given this odd RT pattern and the fact that, like many goal-priming studies, the original Rasinski honesty-prime finding has failed direct replication attempts (Pashler et al., 2013), we are skeptical of the findings. We see little compelling evidence for demand effects on TUT reports.

The present study: Goals, questions, and approach

Mind-wandering research has largely assumed that different methods yield comparably valid results (Weinstein, 2018). Recent studies of probe methods, however, suggest validity threats: TUT rates may be influenced by probing rate, reactivity to errors, and probe framing. The present study further assessed the construct validity of TUT reports. The primary aim was to test whether TUT-rate correlations, as well as mean TUT rates, were affected by probing subjects for three different report types used frequently in the literature.

Although it is of limited interest that probe types affect mean TUT rates (Robison et al., 2019; Weinstein et al., 2018), theoretical claims about mind wandering hinge on variation in TUT rates in response to experimental manipulations or in association with individual-differences constructs. Only Schubert et al. (2020) tested competing probes by correlating TUT rates with other variables. The present study further does so by probing in two tasks and by testing correlations with numerous theoretically motivated variables.

In contrast to subtle probe variations investigated previously (Robison et al., 2019; Schubert et al., 2020; Weinstein et al., 2018), which may underestimate probe-framing effects, the present study’s three primary probe types asked for reports about distinct thought dimensions: (a) TUT content, or the “what” of subjects’ experience (thinking about the task versus thinking about one’s current state, worries, everyday things, external distractions, or daydreams; e.g., McVay & Kane, 2009; Smallwood et al., 2011); (b) TUT intentionality, or the “why” of subjects’ experience (thinking about the task versus intentionally or unintentionally mind-wandering; e.g., Forster & Lavie, 2009; Seli, Risko, & Smilek (2016a); or, (c) TUT depth, or the “how much” of subjects’ experience (the graded extent to which their thoughts were on-task versus off-task; Christoff et al., 2009; Franklin et al., 2011; Mrazek et al., 2012). Each of these distinct probe types has been used frequently in the literature and so our conclusions should apply directly to prior (and future) mind-wandering research. Our main analytic approach collapsed over all response options reflecting TUTs in each probe-type and then compared TUT rates and TUT-rate associations across methods. Subsequent fine-grained analyses explored whether TUT-intentionality and TUT-depth reports were vulnerable to validity threats commonly associated with self-reports of intention (e.g., Nisbett & Wilson, 1977) and numerical rating scales (e.g., DuBois & Burns, 1975; Schwarz, 1999).

Our secondary aim was to explore the consequences of thought probes not including an option to report evaluative thoughts about performance (“task-related interference” [TRI]; Matthews et al., 1999; Smallwood, Obansawin, & Heim 2003). Subjects report TRI in response to both closed-ended (e.g., Jordano & Touron, 2017; Kane, Smeekens, et al., 2017; Mrazek et al., 2011; Stawarczyk et al., 2011) and open-ended probes (Jordano, 2018). Most studies of TUT, however, do not include a TRI response option, so TRI must be reported as another category (perhaps as on-task, as it’s task-related). Robison et al. (2019) used probes that included a TRI option or not. Although TUT rates changed somewhat across probe groups, on-task reports changed dramatically, suggesting that most TRI reports in no-TRI conditions are reported as on-task. The present study conceptually replicates and extends Robison et al.

To address all study aims about TUT measurement, we tested a large subject sample from two U.S. universities in two tasks with embedded thought probes, manipulating probe types between subjects (ns > 260 per group), following each thought probe with a confidence rating prompt, and following each probed task with a retrospective questionnaire assessment of off-task thinking. Across probe-type conditions, our analyses will compare TUT rate means and consistency across probed tasks, TUT-report confidence, associations with in-the-moment RT variability, associations with in-the-moment response accuracy, correlations with retrospective off-task reports, correlations with a composite measure of executive-control performance, correlations with a composite questionnaire measure of distractibility and restlessness, and correlations with a composite questionnaire measure of positive-constructive daydreaming. Thus, the study primarily takes a combined experimental–individual-differences approach to rigorously assess the construct validity of probed TUT rates (and TRI rates) elicited by different thought probes.

Method

Below we report how we determined our sample size and all data exclusions, manipulations, and measures in the study (Simmons et al., 2012). IRBs at the University of North Carolina at Greensboro (UNCG) and Western Carolina University (WCU) approved the study.

Subjects

We tested 760 UNCG and 348 WCU students (total N = 1108), from 2015 to 2018. UNCG and WCU are comprehensive state universities in North Carolina, USA, with UNCG in a more urban and WCU in a more rural setting; UNCG is a Minority-Serving Institution for African American students. Eligible subjects were 18–35 years old. We provide demographics for subjects from whom we analyzed data under Results.

Our stopping rule was the end of the semester in which we reached 210 subjects in each of four probe conditions who had completed the cognitive and questionnaire measures (exceeding the sample size to detect correlations ≥ .20 with two-tailed tests, an α-level of .05, and 80% power). We reached this mark early in the final semester and continued testing at UNCG to approximate 250 subjects per condition, allowing more precise correlation effect-size estimates in the .10–.20 range (Schönbrodt & Perugini, 2013). During the first two semesters at WCU (N = 173), subjects completed only the cognitive measures.

Apparatus and materials

We programmed all measures in E-Prime 1.2 or 2.0 software (Psychology Software Tools, Pittsburgh, PA 2012). Mac Mini computers with Acer V226WL 22″ widescreen LCD monitors (at UNCG), and Dell OptiPlex 9020 minitower computers with Dell P2214H 22″ widescreen LCD monitors (at WCU) presented all stimuli and recorded all responses.

Measures

We describe the cognitive tasks and self-report questionnaires in the order in which subjects completed them, followed by descriptions of the thought probes that appeared within two tasks.

Antisaccade letters (ANTI-LET)

On each of 90 trials, subjects directed attention away from a salient flashing cue to identify a masked letter (B, P, or R) at opposite side of the screen. Trials began with a central fixation array (***) for 200–1800 ms (in 400 ms increments). A flashing cue (=) then appeared 8.6 cm to the left or right for 100 ms, disappeared for 50 ms, appeared for 100 ms, then disappeared for 50 ms. The target letter then appeared 8.6 cm from fixation in the cue-opposite direction for 100 ms before being masked by an “H” (50 ms) then “8” (until response, or a maximum of 10 s). All stimuli appeared in Courier New 12 pt font. Subjects identified letters using keys labeled B, P, or R by stickers; a 400-ms blank screen followed each response. The task began with 36 trials of practice (12 trials for each letter) with masked target letters presented at central fixation, and then 12 practice trials with antisaccade cuing, all with visual accuracy feedback after each trial. Accuracy rate was the dependent variable. Due to a programming error, stimuli were presented slightly differently between the two sites. At WCU, the flashing cues and target arrows appeared 7.1 cm from fixation instead of 8.6 cm.

Semantic Sustained Attention to Response Task (SART)

On each trial, subjects pressed the space bar (“go”) when they saw an animal name (89% of trials) and withheld response (“no-go”) when they saw a vegetable name (11%). Each word appeared at fixation for 300 ms and was masked by 12 Xs for 1500 ms. The program divided trials into five seamless blocks of 135, each comprising three mini blocks that each presented 40 unique animals (“go” trials) and five unique vegetables (“no-go” trials). Probes appeared after three of the five no-go trials in each mini block, for 45 total.

Subjects first practiced 10 trials presenting boys’ names for “go” and girls’ names for “no-go;” the real task began with 10 unanalyzed buffer trials and then 675 critical trials. Dependent measures were a d′ accuracy score and intraindividual RT variability (i.e., the SD of each subject’s go-trial RTs); we subtracted each subject’s RTsd from the maximum RTsd value in the dataset, so higher scores meant better performance.

Dundee Stress State Questionnaire 1 (DSSQ1)

Immediately following the SART, subjects answered 12 questions (in random order) about their experiences, drawn from the Thinking Content subscale of the DSSQ (dropping items 2, 6, 10, and 12; Matthews et al., 1999). Each item asked about thought frequency of various topics during the SART; subjects responded by clicking their choice along a 1–5 scale labeled, “Never,” “Once,” “A Few Times,” “Often,” and “Very Often.” Each question remained onscreen until response. Subjects could skip a question by clicking a “Submit” icon without having clicked on a response choice. A small pop-up box then asked, “Did you mean to skip this question?” along with a “Yes” and a “No” option; if the subject clicked “Yes” the program moved to the next item, but if the subject clicked “No” the program re-presented the item. We separately coded six questions related to thoughts about task performance as a “TRI” subscale, and six questions related to TUTs as a “TUT” subscale. We used mean ratings for TRI and TUT items as dependent variables.

Antisaccade arrows (ANTI-ARO)

This antisaccade task presented 72 trials presenting masked target arrows pointing left, right, up, or down. Trials began with a central fixation array (***) for 250–2250 ms (in 500 ms increments). A flashing cue (=) then appeared 17.0 cm to the left or right of fixation for 80 ms, disappeared for 50 ms, appeared for 80 ms, then disappeared for 50 ms. The target then appeared 17.0 cm from fixation in the cue-opposite direction for 100 ms before being masked by a “+” (50 ms) and a “❖” symbol (until response, or a maximum of 10 s). Subjects responded with the 2, 4, 8, and 6 keys on the number keypad for down, left, up, and right arrows, respectively. Twenty practice trials (5 trials for each direction) presented masked arrows at fixation and with visual accuracy feedback after each trial. We used accuracy rate as the dependent variable.

Due to a programming error, stimuli were presented differently between the two sites. At WCU, the cues and arrows appeared only 14.4 cm from fixation. Because of this large difference between sites (which produced a 13% accuracy improvement from ANTI-LET to ANTI-ARO for WCU subjects but a 1% drop for UNCG subjects), we z-scored accuracy from this task separately by site before combining data across sites.

Arrow flanker (FLANKER)

We used this task only as a secondary source of thought-probe data. On each of 192 trials (divided into two seamless blocks of 96), subjects reported the direction of an arrow at fixation (“<” vs. “>”) flanked by four distractors. After a 500 ms blank screen, a fixation cross (“+”) appeared for 350 ms, followed by the stimulus array, which presented either neutral flankers (“•”; 48 trials), congruent flankers pointing the same direction as the target (48 trials), incongruent flankers pointing the opposite direction as the target (48 trials), or incongruent flankers pointing upward (48 trials), until response. Subjects pressed the “z” key (labeled with an “L” sticker) for left-pointing targets and the “/” key (labeled with an “R” sticker) for right-pointing targets.

The task began with 10 practice trials without flankers, then 10 practice trials with flankers. Thought probes appeared after four of the first 96 test trials and after 16 of the second 96 trials, for 20 total; half the probes followed trials presenting incongruent flankers pointing the opposite direction of the target and half followed trials presenting flankers pointing upwards.

Dundee Stress State Questionnaire 2 (DSSQ2)

Immediately following the flanker task, subjects again completed the DSSQ, here about thoughts during the flanker task. We analyzed mean ratings for the six TRI items and for the six TUT items.

Questionnaire battery

Following the cognitive tasks, subjects completed a 202-item self-report battery made up of items from several scales. Items appeared in a random order for each subject, except for 20 items at fixed positions to assess careless responding. All items presented a 1–5 response scale, labeled from left to right as, “Strongly Disagree,” “Disagree,” “Neutral,” “Agree,” and “Strongly Agree,” although not all the original questionnaires used this response format. As with the DSSQ, subjects could choose to skip individual questions and were prompted with a confirmation pop-up if they did so. We first describe the scales of interest from which we drew items (in alphabetical order), and then the scales that assessed inattentive and careless responding. For all scales of interest, we computed a mean score after reverse-scoring appropriate items.

AD/HD Rating Scale IV–Self-Report Version

We included the first 18 of 20 items (DuPaul et al., 1998), none of which explicitly mentioned AD/HD; nine items asked about inattentiveness symptoms (e.g., making careless mistakes) and 9 about hyperactivity (e.g., talking excessively). We modified all items to ask about childhood symptoms by beginning them with either, “During childhood…,” “As a child…,” or “When I was young…” We derived separate inattentiveness and hyperactivity scores from the scale.

Cognitive Failures Questionnaire–Memory and Attention Lapses (CFQ–MAL)

Based on principal components analysis from McVay and Kane (2009), we selected the top 12 loading items (all > .60) not mentioning mind wandering or daydreaming. Items asked about failures such as forgetting things at home and leaving a step out of a task (two questions were related to mind wandering: keeping one’s mind on a job and failing to notice one hasn’t been attending to an ongoing activity). Because the original subscale used frequencies (e.g., “never”, “very often”) as response options, we revised some items to fit a disagree–agree scale (e.g., we revised “Are you unable to find something that you put away only a couple of days ago?” to “I’m often unable to find something that I put away only a couple of days ago.”); these revisions yielded seven regularly scored items (higher ratings = more failures) and five reverse-scored items.

Creative Achievement Scale (CAS)

We included 12 of the original CAS items, about music, visual arts, or writing (four items each; Carson et al., 2005). In each domain, items asked about progressively more significant accomplishments. For example, in visual art, questions asked about taking art lessons, winning a prize at a juried art show, selling a piece of art, and having artwork critiqued in a significant publication.

Imaginal Process Inventory (IPI) Boredom scale

This IPI (Singer & Antrobus, 1970) subscale presented 12 items about being easily bored (with reverse-scored items about interest in everyday things).

IPI–Daydreaming Frequency scale

This IPI subscale presented 12 items about being lost in thought. Because the original subscale used frequencies as response options, we revised some items to fit a disagree–agree scale (e.g., we revised “I daydream” to “I daydream frequently”); these revisions yielded 10 regularly scored items (higher ratings = more daydreaming) and 2 reverse-scored items.

IPI–Mentation Rate scale

This IPI subscale presented 12 items about experiencing racing and active thoughts (with reverse-scored items about slow thoughts and mind-blanking).

IPI–Mind Wandering scale

This IPI subscale presented 12 items about mind-wandering frequency and concentration difficulties (with reverse-scored items about easily focusing).

IPI–Problem Solving Daydreams scale

This IPI subscale presented 12 items about solving problems, and seeing importance, in daydreams (with reverse-scored items about non-pragmatic daydreams).

Schizotypy–Magical Ideation scale

We used all 15 items from the short form of the Magical Ideation scale (Winterstein et al., 2011). This measure, designed to assess a dimension of positive schizotypy, asked about beliefs and experiences reflecting paranormal, superstitious, or bizarre influences on thought and behavior, or about everyday events having referential meaning (e.g., “I have sometimes felt that strangers were reading my mind;” “I have occasionally had the silly feeling that a TV or radio broadcaster knew I was listening to him.”); one reverse-scored item denied a common superstition.

Metacognitive Prospective Memory Battery

The questionnaire included only the seven “internal” items from the measure from Rummel et al. (2019), asking about strategies for fulfilling intentions not involving external aids (e.g., “In my mind, I make a list of things that I still have to complete.”).

Mind Wandering–Deliberate scale

The four items from Carriere et al. (2013) asked about intentional mind wandering (e.g., “I find mind-wandering is a good way to cope with boredom;” “I allow my thoughts to wander on purpose.”).

Mind Wandering–Spontaneous scale

The four items from Carriere et al. (2013) asked about propensity for unintentional mind wandering (e.g., “It feels like I don’t have control over when my mind wanders;” “I find my thoughts wandering spontaneously.”).

NEO Conscientiousness scale

We used nine NEO-FFI-3 items (McCrae & Costa Jr., 2010), with three items each representing Dutifulness, Achievement, and Self-Discipline facets. Items asked about dependability, goal striving, and being productive and efficient.

NEO Openness scale

We included all 12 NEO-FFI-3 items, with two items reflecting the Fantasy facet, one reflecting Actions, three reflecting Aesthetics, two reflecting Feelings, three reflecting Ideas, and one reflecting Values; it also included the remaining six Fantasy items from the NEO-PI-R (McCrae & Costa Jr., 2010). Items asked about imaginativeness and enjoying daydreams, learning new activities, aesthetic chills and pattern seeking, experiencing and noticing emotions, intellectual curiosity, and exposure to controversial viewpoints.

Spontaneous Activity Questionnaire scale (SAQ)

The eight SAQ items from Carriere et al. (2013) asked about fidgeting (e.g., “I often fidget when I am planning ahead for something”). Because the original subscale used frequencies as response options, we revised all items to fit a disagree–agree scale (e.g., we revised “I fidget” to “I fidget a lot”); these revisions yielded five regularly scored items (higher ratings = more fidgeting) and three reverse-scored items.

White Bear Suppression Inventory

The 15 items from Wegner and Zanakos (1994) asked about uncontrollable thoughts and avoiding unwanted thoughts (e.g., “I have thoughts that I cannot stop;” “I always try to put problems out of mind”).

Attentive Responding Scale (ARS) Infrequency subscale

Instructions for the questionnaire battery forewarned the inclusion of attention-check items, to minimize backfire effects from odd questions:

In this task, you will answer various questions about your everyday thoughts and experiences. For each question, please rate how strongly you disagree or agree with each statement. A few questions will be odd or silly, as a way to be sure you’re paying attention. Please just answer each question carefully and honestly.

The questionnaire included the six infrequency items (three reverse scored) from the 18-item ARS scale (Maniaci & Rogge, 2014), appearing here for all subjects as questions 31, 41, 71, 81, 131, and 141. These items were created to yield the same answers for all subjects (e.g., “I don’t like getting speeding tickets;” “I enjoy the music of Marlene Sandersfield;” “I’d rather be hated than loved”). In addition, we included two questions that directed subjects to select a response, inspired by the Directed Question Scale (Maniaci & Rogge, 2014): “I will show I am paying attention by selecting choice Strongly Agree;” “To show I’m reading this item carefully, I will select choice Strongly Disagree.” For all subjects, the directed items appeared as questions 101 and 121. From the six infrequency and two directed items we created an 8-item Infrequency scale.

For each of the original infrequency items, we scored it as a 0 if the subject selected a response on the appropriate side of neutral; we scored it as one point if the subject selected response “3” (Neither agree or disagree) and as two points if the subject selected either response on the inappropriate side of neutral. For each directed question item, we scored it as a 0 if the subject selected the correct response (1 or 5), but as a 1–4 for any erroneous response, reflecting the number of choices away from the correct response. For any skipped item in the Infrequency scale, the subject earned two points. Extrapolating from the cut-offs from Maniaci and Rogge (2014), who used more infrequency items and a slightly different scoring scheme, we dropped all questionnaire data for subjects scoring > 4.

ARS Inconsistency subscale

The questionnaire included the six inconsistency pairs from the 18-item ARS, with the paired-items appearing for all subjects as questions 11 and 151, 21 and 161, 51 and 171, 61 and 181, 91 and 191, and 111 and 201. Both members from each pair should yield consistent responses for a given subject, whether or not they endorse the items (e.g., “I enjoy relaxing in my free time” and “In my time off I like to relax”). We scored each item pair by taking the absolute numerical difference between the two responses; for example, identical choices = 0 and maximally discrepant choices (e.g., Strongly agree to Strongly disagree) = 4. Any skipped item earned two points for that pair. Extrapolating from the endorsed cut-offs (based on more inconsistency items), we dropped all questionnaire data for subjects scoring > 5.

Demographic Questionnaire

Subjects reported their sex/gender (via free response), age (via free response), ethnicity ( “Hispanic or Latino” or “not Hispanic or Latino”), race (“Asian,” “Black: African or Caribbean descent,” “Native American or Alaskan Native,” “Native Hawaiian or Pacific Islander,” “White: European or Middle Eastern descent,” or “Multiracial”) and college major (via free response; we did not analyze these data). A final question asked whether subjects had previously participated in a thought-probe study.

Thought probes

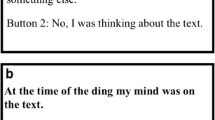

Subjects saw one of four probe types in both SART and flanker tasks (i.e., probe type repeated across tasks). All probes asked subjects to characterize their immediately preceding thoughts—in the instant before the probe—via forced-choice response. Our three primary probe types differed in the dimensions of thought assessed: Content probes asked subjects about the topics of their mind-wandering (“What”), intentionality probes asked about the reasons for their mind-wandering (“Why”), and depth probes asked about the extent or extremity of their mind-wandering (“How Much”). A secondary, fourth probe type also assessed thought content, but did not include a content category for thoughts about their task performance (“task-related interference;” TRI).

A second screen asked subjects to rate their confidence in their preceding thought report on a vertically oriented 1–5 scale, labeled on-screen, from top to bottom, as: 1. Not at all confident; 2. Somewhat confident; 3. Confident; 4. Very confident; 5. Extremely confident. Subjects pressed the keyboard key corresponding to their choice.

Subjects responded to each probe by pressing the key corresponding to one of the numbered options. RTs were recorded but we imposed no time limits. After explaining how probes worked and would appear, the experimenter read these instructions aloud (subjects saw “category” in the bracketed text below for content and intentionality probes, and “response” in the bracketed text for depth probes):

Remember, when you see a screen like this, please respond based on what you were thinking *just before* the screen appeared. Do not try to reconstruct what you were thinking during the preceding words on the screen, and please select the [category/response] that best describes your thoughts as accurately as you can. Remember that it is quite normal to have any of these kinds of thoughts during an ongoing task.

Content probes

Our primary content (“What”) probes, with TRI included, provided eight response options, numbered in the following order in a vertically oriented list. The italicized text appeared at each probe, and the experimenter explained the choices via instructions at the beginning of the task: (1) The task, for thoughts about task stimuli, required responses, or task goals; (2) Task experience/performance, for thoughts about one’s performance or task difficulty (TRI); (3) Everyday things, for thoughts about routine events in the recent or distant past or future; (4) Current state of being, for thoughts about physical or emotional states; (5) Personal worries, for thoughts about life concerns; (6) Daydreams, for fantasies or thoughts disconnected from reality; (7) External environment, for thoughts about objects or task-unrelated events in the room; (8) Other, for any thoughts not captured by the other choices.

Our secondary content probes, with TRI excluded, presented probes exactly like those above, but without response option 2. Otherwise, each of the response options above was numbered from 1 to 7. In both content-probe conditions, the experimenter read these instructions before explaining the thought probes:

It is perfectly normal to think about things that are not related to the task. We will give you several categories of things that people might think about during tasks like these. Please try your best to honestly assess your thoughts and choose a category that best describes your thoughts at the time when we ask.

Intentionality probes

Our intentionality (“Why”) probes asked whether subjects had been mentally on- or off-task and, if off-task, whether they’d been so intentionally or unintentionally. The experimenter explained TUTs and intentionality by reading these on-screen instructions:

It is perfectly normal to think about things that are not related to the task. For instance, you may think about off-task things such as something you did recently or will be doing later, your current emotional or physical state, personal worries, daydreams, or your external environment. Please try your best to honestly assess your thoughts and choose a response that best describes your thoughts at the time when we ask.

There are generally two paths to off-task thinking. INTENTIONAL: Sometimes when you’re working on a task, you deliberately or intentionally think about things unrelated to the task. This is what we refer to as intentional off-task thinking. That is, when you deliberately think about something other than the task. UNINTENTIONAL: Other times when you’re working on a task, you unintentionally think about things unrelated to the task. This is what we refer to as unintentional off-task thinking. That is, when you spontaneously think about something other than the task.

Each probe presented three numbered response options on-screen, appearing in a vertically oriented list: (1) The task; (2) Off-task: Intentional (on purpose); (3) Off-task: Unintentional (spontaneous).

Depth probes

Our depth (“How Much”) probes asked subjects to rate the extent to which their immediately preceding thoughts were on- or off-task, using a 1–5 scale. The experimenter explained TUTs by reading these on-screen instructions:

It is perfectly normal to think about things that are not related to the task. For instance, you may think about off-task things such as something you did recently or will be doing later, your current emotional or physical state, personal worries, daydreams, or your external environment. Please try your best to honestly assess your thoughts and choose a response that best describes your thoughts at the time when we ask.

Each probe presented five numbered response options on-screen, appearing in a vertically oriented list: (1) Completely on-task; (2) Mostly on-task; (3) Both on the task and off-task; (4) Mostly off-task; (5) Completely off-task (e.g., Franklin et al., 2011; Mrazek et al., 2012).

General procedures

Subjects completed a 100–120 min session in groups of 1–4, each at their own workstation (except during two semesters at WCU, where subjects completed only the cognitive tasks in a 60-min session). An experimenter read aloud on-screen instructions and remained to answer questions and monitor behavior. “Wait” screens appeared at the end of each task to prevent subjects from advancing until all subjects finished.

Results

This study presented a challenge for defining an analytic approach. It was exploratory insofar as we didn’t entertain theoretically derived hypotheses about which probe types might elicit discrepant effects. The study asked a specific question, however—whether commonly used probe types, which assess distinct dimensions of subjective experience, yield different results—and a set of outcomes we took to be most important (although not preregistered, we describe most outcomes and analyses in a rudimentary analysis-plan document finalized after data collection but before we analyzed the full dataset, posted along with our data at https://osf.io/vs2u5/.

We took a frequentist approach to analyses—while considering point estimates, confidence intervals, and effect sizes—to provisionally answer our validity questions about probe-types and to prioritize findings as worthy of future replications. In keeping with the exploratory aspect of the study, we erred toward false positives by not applying a correction for multiple comparisons. (Moreover, our analyses addressed many related questions that were not clearly separable to guide familywise error correction.) However, because we conducted many analyses, we exerted some modest control over Type I error by adopting an alpha level of .005 throughout (and we report, where applicable, 99.5% confidence intervals and exact p values > .001).

We supplemented some of our frequentist analyses with Bayes factors (BFs). BFs allowed us to compare predictive performance of competing models (Kass & Raftery, 1995), providing a continuous measure of evidence. Our null model reflected a Cauchy distribution (50% of the distribution was between d = −.707 and d = .707). We interpreted BFs < 0.33 as providing modest evidence for the null and BFs > 3.0 as providing modest evidence for the alternative hypothesis, and BFs < .10 as providing strong evidence for the null and BFs > 10 providing strong evidence for the alternative hypothesis.

We originally intended to analyze all four thought-probe conditions together but modified the plan upon preparing a presentation on these data (Kane et al., 2018), which suggested a question-focused organization. Thus, we first report analyses for the three probe conditions addressing our primary questions about valid TUT probing (content probes with TRI included, intentionality probes, and depth probes), and then report analyses for our two content probe conditions to address our secondary questions about TRI.

Data analysis exclusions

UNCG and WCU students were eligible to enroll if English was their native language, they were 18–35 years old, they were enrolled in introductory psychology, and they had not participated in a thought-probe study. We followed the conservative post-enrollment data exclusion criteria used by Kane et al. (2016) wherever possible. The Appendix describes the criteria used to exclude data based on experimenter reports, missing tasks, outlying task scores, and questionnaire responses, and it specifies the number of subjects whose data were dropped at each stage.

Final sample demographics

Our final sample of 1067 subjects had a M age of 19.0 (SD = 1.9; n reporting = 1066). Regarding gender, 63.7% self-identified as female, 36.0% as male, and 0.1% identified in some way as non-binary (n reporting = 1065). The racial composition of the sample (n reporting = 1041) was Asian = 3.4%, Black (African or Caribbean descent) = 31.4%, Native American or Alaskan Native = 1.1%, Native Hawaiian or Pacific Islander = 0.4%, White (European or Middle Eastern descent) = 55.8%, and multiracial = 6.8%; self-reported ethnicity, asked separately, was 7.2% Latino/a or Hispanic (n reporting = 1065).

Content versus intentionality versus depth probe reports

To allow TUT-rate comparisons across probe types, we translated the 1–5 ratings from the depth condition into a categorical TUT definition that was comparable to the other conditions (subsequent analyses will treat depth ratings as continuous). We established a point along the scale above which a thought should be considered a TUT. The cut-point was the value producing M TUT rates from the first task (SART) closest to those from the content and intentionality conditions—these were both close to 50%. Figure 1 shows that defining a TUT as any rating of ≥ 3 (both on the task and off-task) yielded approximately a 50% TUT rate average. Subjects in the depth condition thus seemed to treat an evenly split focus of attention (or, perhaps, any probe response choices that had “off-task” in the label) in the same way that subjects treated TUT experiences in the content and intentionality probe conditions. Of note, a daily-life study that probed subjects with either 1–5 depth probes or categorical on/off-task probes similarly found that a cut-point of ≥ 3 on depth probes produced matching M TUT rates to the categorical condition (37 vs. 40%; Seli, Beaty, et al., 2018).

Percent task-unrelated thought (TUT) reports in the Sustained Attention to Response Task (SART) from the depth probe condition, as a function of defining TUTs as thought ratings of ≥ 2, ≥ 3, ≥ 4, or 5. Box plots present the 25th, 50th, and 75% percentiles; whiskers extend to the smallest and largest values within 1.5 times the inter-quartile range. Means are presented as triangles; circles represent individual subjects’ TUT rates

Comparing means and consistency across probe types and tasks

Our first analyses tested whether TUT rates or confidence in TUT reports differed by probe type. Our analyses also tested for any differential consistency of TUT reports and TUT confidence ratings across SART and flanker tasks.

Mean TUT rates

Our most basic questions were whether different probes yielded different TUT rates and whether these showed differential cross-task stability. The data in Fig. 2 suggest that TUT rates were similar across probe types in the SART. TUT rates diverged, however, in the subsequent flanker task, dropping less from the SART to flanker in the content (“What”; n = 266) condition than in the intentionality (“Why”; n = 263) and depth (“How Much”; n = 269) conditions.

Percentage of task-unrelated thoughts (TUTs) reported in the Sustained Attention to Response Task (SART) and the flanker task, for subjects in the content (“What”), intentionality (“Why”), and depth (“HowMuch”) probe conditions. Box plots present the 25th, 50th, and 75% percentiles; whiskers extend to the smallest and largest values within 1.5 times the inter-quartile range. Means are presented as triangles

We tested these impressions with a linear mixed model (LMM) approach using the lme4 package (Bates et al., 2015). Starting broad then narrowing, we first examined an ANOVA table, then parameter estimates for each level of the factor, and finally all pairwise critical comparisons (made with the Least Square Means package [Lenth, 2016]). We computed p values for parameter estimates (i.e., at the second stage of this inferential approach) using the Satterthwaite approximation contained in the lmerTest package (Kuznetsova et al., 2017), which produces p values in line with actual false positive rates (Luke, 2017). The model predicted TUT rate with task and probe-type condition as fixed-effect predictors and subjects as the random effect. The ANOVA results indicated that TUT rates were higher in the SART than the flanker task, F(1, 795) = 242.71, p < .001, TUT rates differed across probe types, F(2, 795) = 10.69, p < .001, and probe-type interacted with task, F(2, 795) = 15.54, p < .001.

At the parameter level, we explored the probe-type × task interaction with TUT rate from content probes in the flanker task set as the reference condition. The difference in TUT rates between content and intentionality probe conditions in the flanker task was significantly larger than that in the SART, b = .09, SE = .02, t(795) = 4.29, p < .001, as was the difference between the content and depth conditions, b = .10, SE = .02, t(795) = 5.23, p < .001 (although the cross-task changes in TUT rate were significant for all probe-type conditions; for all paired contrasts, ts > 4.51, ps < .001).

Moreover, in the SART, TUT rates did not differ significantly across probe types (Ms = .52, .49, .48 for content, intentionality, and depth probes, respectively); for all paired contrasts from the linear mixed effects model, ts < 1.65, ps > .09. To aid interpretation of these null effects, we calculated BFs from t tests for these comparisons using the BayesFactor package (Morey & Rouder, 2018); these analyses yielded BF10 = .10, .21, and .30, each indicating data more in favor of the null model than the alternative model.

In the flanker task, however, TUT rates were higher in the content (M = .45) than intentionality (M = .34) condition, b = 0.12, SE = 0.02, t(1142.1) = 5.06, p < .001, and depth (M = .31) condition, b = .14, SE = .02, t(1142.1) = 6.17, p < .001; the latter two did not differ, b = 0.02, SE = 0.02, t(1142.1) = 1.08, p = .283. BFs for the contrasts of content versus intentionality probes (BF10 = 3173.37), and content versus depth probes (BF10 = 197,320.30) yielded strong evidence favoring the alternative over the null model; for intentionality versus depth probes, however, BF10 = .16, providing more support for the null than the alternative model. So, although probe types did not measurably affect TUT rates in the SART, they did in the flanker task (with TUT rates remaining more stable for the content condition across tasks than for the other conditions), our first indication that not all probed assessments of mind-wandering are created equal.

TUT rate correlations

We next assessed whether individual differences showed similar cross-task reliability across probe types by correlating SART TUTs with flanker TUTs. All correlations were substantial and significant, with nearly identical effect sizes and 99.5% confidence intervals. For content probes, r(264) = .61 [.49, .71], for intentionality probes, r(261) = .64 [.53, .73], and for depth probes, r(267) = .65 [.54, .74]. We find no evidence that probe type influenced variation in, or cross-task stability of, TUT rates.

Mean TUT confidence ratings

We next assessed whether confidence in TUT reports varied with probe type, and whether confidence differed in cross-task stability among probe types. We used data for all subjects with at least one TUT report. Figure 3 (which also presents confidence in on-task reports, discussed later) suggests that M confidence in TUT reports increased from the SART to flanker task, and subjects more confidently reported the content of TUTs than intentionality or depth. The ANOVA table results indicated that TUT confidence ratings were higher in the flanker than the SART, F(1, 711.7) = 99.45, p < .001, and confidence differed across probe types, F(2, 779.0) = 16.17, p < .001; they did not, however, produce a significant probe-type × task interaction, F(1, 711.5) = 0.42, p = .658.

Mean confidence ratings for task-unrelated thought (TUT) and on-task (OnTask) reports in the SART and the flanker task, for subjects in the content (“What”), intentionality (“Why”), and depth (“HowMuch”) probe conditions. Box plots present the 25th, 50th, and 75% percentiles; whiskers extend to the smallest and largest values within 1.5 times the inter-quartile range. Means are presented as triangles

Paired contrasts from the LMM indicated that, in the SART, TUT confidence was significantly greater for content than intentionality probes, b = 0.29, SE = 0.09, t(1010.2) = 3.11, p = .002, and depth probes, b = 0.45, SE = 0.09, t(1008.9) = 4.90, p < .001; the latter two did not differ significantly, b = 0.16, SE = 0.09, t(1010.3) = 1.77, p = .076. Again, to strengthen interpretation of these effects, we calculated BFs from t tests for these comparisons. BFs for the contrasts of content versus intentionality probes (BF10 = 18.93), and content versus depth probes (BF10 = 25,566.20) indicated strong evidence favoring the alternative over the null model; for intentionality versus depth probes, however, BF10 = .54, providing weak support for the null over the alternative model.

Similarly, in the flanker task, TUT confidence was significantly greater for content than intentionality probes, b = 0.30, SE = 0.09, t(1064.2) = 3.24, p = .001, and depth probes, b = 0.52, SE = 0.09, t(1089.1) = 5.45, p < .001; the latter two did not differ significantly from each other, b = 0.21, SE = 0.10, t(1108.3) = 2.21, p = .028. BFs for the contrasts of content versus intentionality probes (BF10 = 32.01), and versus depth probes (BF10 = 27,204.33) indicated strong evidence favoring the alternative over the null model; for intentionality versus depth probes, however, BF10 = .40, providing weak support for the null over the alternative model. Like TUT rates, then, confidence ratings suggest some differences among probe types, here with consistently higher confidence in TUT reports for the content condition than the other conditions.

We next considered confidence in TUT versus on-task thought reports. Figure 3 suggests that the content condition yielded more similar confidence ratings between on-task and TUT reports than did intentionality or depth conditions. We tested these impressions by creating a difference score for each subject in each task, subtracting TUT from on-task confidence, and submitting these difference scores to a LMM with the flanker content condition as the reference level. Compared to the content condition, the confidence difference scores in the flanker task were significantly larger in the intentionality condition, b = 0.29, SE = 0.09, t(1203) = 3.24, p = .001, and in the depth condition, b = 0.78, SE = .09, t(1247) = 8.45, p < .001; somewhat similarly, in the SART, the content condition yielded a (non-significantly) smaller difference score than did the intentionality condition, b = 0.22, SE = .09, t(1129) = 2.60, p = .009, and a significantly smaller difference score than did the depth condition, b = 0.71, SE = .09, t(1140) = 8.14, p < .001. The depth condition produced still larger confidence difference scores than did the intentionality condition in both the flanker, b = 0.49, SE = 0.09, t(1238) = 5.35, p < .001, and SART, b = 0.48, SE = 0.08, t(1130) = 5.59, p < .001. Overall, then, subjects were more confident about their content-based TUT reports than their intentionality- or depth-based TUT reports, and they were more similarly confident in TUT and on-task reports in the content condition than the other conditions; the largest discrepancies between on- and off-task confidence reports were produced in the depth condition.

Confidence ratings correlations

We next tested whether confidence ratings showed similar cross-task consistency for the three probe types by correlating SART with flanker TUT confidence. Again, all correlations were similar. For content probes, r(247) = .74 [.65, .81], for intentionality probes, r(227) = .72 [.62, .80], and for depth probes, r(215) = .67 [.55, .76]. We found no support for probe types measurably affecting individual differences in, or reliability of, confidence reports for TUT experiences.

Summary of cross-task comparisons

Within the limits of our methods and analytic approaches, we found no statistical evidence for probe-type differences in SART TUT rates (note the depth probe TUT rate in the SART was set to match those from the content and intentionality rates), in TUT-rate correlations between SART and flanker tasks, or in TUT confidence correlations between tasks. We did find, however, statistical evidence for the following differences: 1) TUT rates for intentionality and depth probes dropped more from the SART to flanker task than they did for content probes; in the flanker task, content probes yielded more than 10% higher TUT rates than the intentionality or depth probes. 2) In both tasks, subjects reported TUTs with more confidence in the content condition (reporting on the “what” of their experience) than in the intentionality condition (reporting on the “why”) and in the depth condition (reporting on “how much”), with depth confidence ratings in TUT about a half point lower (on a five-point scale) than in the content condition; confidence reports in the content condition were also more similar between on- and off-task reports than were those in the intentionality or depth conditions.

Comparing within-person SART performance correlates of TUTs across probe types

To test whether in-the-moment performance differentially correlated with in-the-moment TUT reports across probe conditions, we focused on the SART because it presented enough trials to allow within-person analyses and presented only one trial type for RTs. We first examined RT variability (“RTsd”)— the standard deviation across the four “go” trials preceding each no-go trial and thought report—to test whether the predicted increase in RTsd before TUTs versus on-task reports differed across probe types. We next examined no-go trial accuracy preceding each thought report to test whether the predicted increase in no-go errors preceding TUTs versus on-task reports differed across probe types.

RT variability

Figure 4 suggests that our study replicated prior findings (e.g., Bastian & Sakur, 2013; Seli, Cheyne, & Smilek, 2013): Subjects’ go-trial RTs were slightly more variable preceding TUT reports than on-task reports. Notably, this TUT-related increase in RTsd was similar across probe conditions. For the content-probe condition, Ms = 106 ms (SD = 39) vs. 118 ms (SD = 48) preceding on-task versus TUT reports, respectively; for the intentionality condition, Ms = 100 ms (SD = 45) vs. 113 ms (SD = 49), and for the depth condition, Ms = 99 ms (SD = 42) vs. 115 ms (SD = 62).

Standard deviations in reaction times (RTsd) for the four go-trials preceding task-unrelated thought (TUT) reports versus on-task (Task) reports in the SART, for subjects in the content (“What”), intentionality (“Why”), and depth (“HowMuch”) probe conditions. Box plots present the 25th, 50th, and 75% percentiles; whiskers extend to the smallest and largest values within 1.5 times the inter-quartile range. Means are presented as triangles. Each dot represents an individual subject’s RTsd preceding TUT or on-task reports

A LMM with on-task reports in the content-probe condition as the reference level indicated that RTsd was greater preceding TUTs than on-task reports there, b = 8 ms, SE = 2 ms, t(20686.3) = 3.66, p < .001. This small RTsd difference did not differ significantly for the content versus intentionality condition, b = 3 ms, SE = 3 ms, t(20794.4) = 0.91, p = .361, or depth condition, b = 7 ms, SE = 3 ms, t(20483.2) = 1.98, p = .047. The RTsd difference preceding TUT vs. on-task reports was significant in each probe condition; all ts > 3.65, ps < .001. (Fig. 4 indicates some outliers; conservatively, we did not delete them because: (a) we already dropped data from 13 subjects with outlying SART RTsd; (b) Fig. 4 shows that the pattern held across intentionality and depth conditions despite their having outliers in opposite cells (on-task vs. TUT) and; (c) Fig. 4 shows increased RTsd preceding TUT reports throughout the tails of the TUT distributions.)

No-go trial accuracy

The data presented in Fig. 5 show clearly that no-go trial accuracy was poorer preceding TUT than on-task reports (replicating, e.g., McVay & Kane, 2009, 2012a). In contrast to what we found in RTsd, however, the difference between on-task and TUT-reported trials varied dramatically across probe conditions; the different distributions clearly pass the “interocular trauma test” (hitting one between the eyes). For the content-probe condition, Ms = 59.9% (SD = 23.3) vs. 48.8% (SD = 24.8) preceding on-task versus TUT reports, respectively; this large effect was nonetheless dwarfed by that in the intentionality condition, Ms = 69.6% (SD = 24.8) and 42.4% (SD = 25.8), and the depth condition, Ms = 67.7% (SD = 26.5) and 39.2% (SD = 25.2). We analyzed accuracy using a generalized linear mixed model (GLMM) in the lme4 package (Bates et al., 2015) to account for the binomial distribution of trial-level accuracy (Dixon, 2008). A GLMM with on-task reports in the content-probe condition set as the reference level indicated that no-go trial accuracy was significantly lower preceding TUTs than on-task reports there, b = −.56, SE = .05, Z = −12.45, p < .001. This large accuracy difference between TUT and on-task reports was nonetheless significantly smaller than in the intentionality-probe condition, b = −.93, SE = .07, Z = −13.88, p < .001, and depth-probe condition, b = −.90, SE = .07, Z = −13.40, p < .001.

Accuracy (“Accy”) rates for no-go trials preceding task-unrelated thought (TUT) reports versus on-task (Task) reports in the SART, for subjects in the content (“What”), intentionality (“Why”), and depth (“HowMuch”) probe conditions. Box plots present the 25th, 50th, and 75% percentiles; whiskers extend to the smallest and largest values within 1.5 times the inter-quartile range. Means are presented as triangles. Each dot represents an individual subject’s M no-go accuracy rate preceding TUT or on-task reports

Summary of within-person performance correlates