Abstract

This paper describes normative data for newly collected exemplar responses to 70 semantic categories described in previous norming studies (Battig & Montague, Journal of Experimental Psychology, 80(3, pt.2): 1–46, 1969; Van Overschelde, Rawson, & Dunlosky, Journal of Memory and Language, 50(3): 289–335, 2004; Yoon et al., Psychology and Aging, 19(3), 379–393, 2004). These categories were presented to 246 young (18–39 years), middle (40–59 years), and older (60 years and older) English-speaking adults living in the United States who were asked to generate as many category exemplars as possible for each of the 70 categories. In order to understand differences in normative responses, we analyzed these responses a) between age groups within the current sample and b) in comparison to three previously published sets of norms. Experimental studies using such norms typically assume invariance of normative likelihoods across age and historical time. We replicate previous findings such that exemplar frequency correlations suggest moderate stability in generated category members between age groups and cohorts for many, but not all, categories. Further, analyses of rank-order correlations highlight that the traditional measure of typicality may not capture all aspects of typicality, namely that for some categories there is high consistency in the frequency of exemplars across age groups and/or norms, but the ordering of those exemplars differs significantly. We include a cluster analysis to aid in grouping categories based on relative stability across time, cohort, and age groups. These results emphasize the importance of maintaining and updating age-differentiated category norms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Common nouns (e.g., apple and pear) can be classified as members (exemplars) of a superordinate taxonomic category (e.g., fruit; Rosch & Mervis, 1975). Historically, categorizable stimuli have played and continue to play an important role in research on memory, cognition, and language (e.g., Brainerd, Gomes, & Nakamura, 2015; Hertzog, Cooper, & Fisk, 1996; Hunt, Davidson, & Lansman, 1981; Hunt, Smith, & Toth, 2016; Lewellen, Goldinger, Pisoni, & Greene, 1993; Maxfield & Zelinsky, 2012; McCloskey & Glucksber, 1979; Smith, Shoben, & Rips, 1974). Scientific use of verbal stimuli from noun categories requires normative data on the exemplars and their typicality as representatives of a given category. For instance, research has shown that exemplar differences in category typicality affect response times when people are asked to verify category membership or whether pairs of stimuli are drawn from the same or different categories, although other variables like category dominance also play a role (Casey, 1992; Hunt et al., 1981).

Over 60 years have passed since the first category norm dataset was published by Cohen, Bousfield, and Whitmarsh (1957). Given their importance for experimental psychology, these norms have since been updated and expanded (Battig & Montague, 1969; Van Overschelde et al., 2004; Yoon et al., 2004). The common method for collecting normative data is to use a generation task, in which individuals are asked to name exemplars belonging to a provided category label (e.g., animals, vegetables, fruits). Exemplar typicality is then scaled to capture the aggregate frequency of exemplar productions in a sample of respondents. Additionally, in all of the three published norming studies (Battig & Montague, 1969; Van Overschelde et al., 2004; Yoon et al., 2004), university student participants provided the responses, with known limitations in the chosen sample (e.g., geographical influences on category exemplar generation).

From early on, there has been recognition that category representations can change with historical time given the dynamic nature of popular language usage and cultural change. Battig and Montague (1969) reported that their update was driven by Cohen’s insistence for an update given that 8 years had passed since the Cohen et al. (1957) publication. The Van Overschelde et al. (2004) norms greatly expanded the number of categories relative to earlier efforts, including taxonomic labels such as “a football penalty” or “a type of car”, to capture changes in normative taxonomic membership over time and cohorts (e.g., “In 1965, undergraduates wore rubbers on their feet, they did not listen to rap, and they did not drive SUVs”; p. 290). In addition, other researchers have extended category norms into other languages, cultures, and age groups (Brown & Davies, 1976; Hasselhorn, Jaspers, & Hernando, 1990; Howard, 1980; Marful, Díez, & Fernandez, 2015; Posnansky, 1978; Yoon et al., 2004). Thus, continuing to update the category norms is essential for maintaining a current basis of exemplars, and their typicality, for use in studying human memory and language.

One of the opportunities that has arisen due to the continued updating and expansion of the category norms is the ability to better understand the effect of aging on semantic category representation. Indeed, there remains a debate as to what effect, if any, aging has on semantic representations, and whether any observed age-related changes are actually due to aging processes or due to cohort or historical effects. On the one hand, researchers argue that semantic representations remain consistent across age groups (Bowles, Williams, & Poon, 1983; Burke & Peters, 1986; Howard, 1980; Yoon et al., 2004). In particular, Howard (1980) replicated Battig and Montague’s (1969) procedure with the same stimuli in a sample of older adults, finding similar proffered exemplars and similar typicality rankings, a finding subsequently replicated by Yoon et al. (2004). However, this consistency might be most likely when examining basic categories of language concepts (e.g., animals, fruits, vegetables) that are less likely to change over historical time–and hence more likely to be invariant over birth cohorts. Invariance might be less likely with other categories, such as weapons or toys. For example, it is relatively unlikely that a ‘phaser’ or ‘laser gun’ would have been a generated exemplar prior to 1960. Furthermore, category fluency tests that present a taxonomic category label and request production of category exemplars (e.g., a verbal fluency task) typically find age differences and age changes in the number of valid exemplars produced (e.g., Bolla, Gray, Resnick, Galante, & Kawas, 1998; Brickman et al., 2005; Gordon, Young, & Garcia, 2018; Troyer, Moscovitch, & Winocur, 2000), even for categories like ‘animals’. This method is isomorphic with the typical exemplar-generation method for norming categorizable stimuli. It is possible that older adults’ lower levels of exemplar production, which could be due in part to perseveration of exemplars (Henry & Phillips, 2006), could affect estimated typicality of less-common taxonomic exemplars in norming studies. Alzheimer’s disease also appears to affect category fluency above and beyond declines seen under normal cognitive aging (Aschenbrenner et al., 2015; Brandt & Manning, 2009; Venneri et al., 2019). Furthermore, additional evidence suggests that older adults may not access or use category exemplars as efficiently, or in the same manner, as younger adults (Kintz & Wright, 2017; White, Storms, Malt, & Verheyen, 2018). For all these reasons, further evaluation of age differences in category norms seems important.

We provide another update of the category norms using adults in the United States. The last major updates based on young adult responses was published in 2004, as was the most recent study with older adults (Van Overschelde et al., 2004; Yoon et al., 2004). However, the current study goes beyond mere norm updating by engaging in an analysis of how category norms have changed, if any, with the passing of historical time. By capturing category norms across decades and across cohorts, researchers can better assess how semantic representations are influenced by cohort, age, and historical effects. Figure 1 provides a representation of the data available for comparisons across age groups and across cohorts at different measurements. Specifically, with the use of the Battig & Montague (1969) norms (henceforth called the “1969 norms”), the Van Overschelde, et al. (2004) norms (henceforth called the “v2004 norms”), the Yoon et al. (2004) norms (henceforth called the “y2004 norms”) and the present norms (henceforth called the “2018 norms"), we can assess longitudinal, cross-sectional, and time-lag data. In particular, we focus on those comparisons where at least one parameter (i.e., time of measurement, cohort, or age at test) is maintained between groups. From Fig. 1, we can see four possible comparisons across groups: comparisons where participants are the same age at test (1969-YA to v2004-YA; 1969-YA to y2004-YA; 1969-YA to 2018-YA; y2004-OA to 2018-OA), are from the same cohort (1969-OA to 2018-OA), are the same age at test and from the same cohort (v2004-YA to y2004-YA; v2004-YA to 2018-YA; y2004-YA to 2018-YA), and are tested at the same time point (v2004-YA to y2004-OA; y2004-YA to y2004-OA; 2018-YA to 2018-OA).

Knowing which categories remain consistent and which do not across time, cohort, and age will be particularly useful for stimulus selection and research inquiry. For example, if a study aims to assess a process theory of cognitive aging (e.g., working memory deficits and/or inhibition deficits with linguistic stimuli), having a category representation that is consistent across time and age is important so as not to build in a history-graded confound in stimulus properties into the cognitive task, creating a rival explanation for age differences in inhibitory function. On the other hand, if the research aims to assess how historical and developmental changes in semantic representation influence process theories of cognitive aging, then it may be particularly useful to select categories that are known to differ in normative properties across time and/or age. Thus, the present study provides an update of the category norms from a cross-sectional sample of adults in the United States, compares the new norms to previous datasets, and assesses the influence of cohort, age, and historical effects on categories.

Method

To keep as much consistency in methodology as possible, we modeled the current 2018 norms on the v2004 norms, which in turn modeled their norms on the 1969 norms. Table 1 provides a snapshot of the similarities and differences in methods between each of the norms. We elaborate here on the methodology of the current 2018 norms and refer the readers to the previous norms for more details on those. All of the data presented in this manuscript are publicly available here: categorynorms.psychology.gatech.edu.

Participants

We aimed to collect data from a heterogeneous sample of 250 adults, divided into three approximately equivalent age groups: young adults (18–39 years old), middle-aged adults (40–59 years old), and older adults (60 years and older). Participants were recruited using Amazon Mechanical Turk during the spring semester of 2018, with the criteria that participants were native English speakers and located in the United States. Data from four participants were excluded due to failure to follow task instructions (n = 3) and an error in response recording (n = 1). The final sample consisted of 83 young adults (M = 31, SD = 4.8), 79 middle-aged adults (M = 49, SD = 5.3), and 84 older adults (M = 67, SD = 4.6), varying in gender, education, and location within the United States. Specifically, the young adult group was comprised of 48 females (35 males), ranged in education from elementary school to a doctorate degree (M = 15.1 years, SD = 2.1), and represented 29 different states. The middle-aged adult group was comprised of 57 females (22 males), ranged in education from high school to a master’s degree (M = 15.2 years, SD – 2.2), and represented 34 different states. Lastly, the older adult group was comprised of 50 females (34 males), ranging in education from high school to a doctorate degree (M = 16.0 years, SD = 2.9), and represented 30 different states.

Procedure

The procedure was similar to that used in the v2004 norms but used Qualtrics and Amazon Mechanical Turk as the collection vehicle. The Qualtrics survey consisted of informed consent, demographic questions, and the norming task. All 70 categories from the v2004 norms, which overlaps in 56 categories with the 1969 norms and 47 categories with the y2004 norms, were shown to each participant in a random order one at a time. During the norming task, a category label was presented on the screen for 2 s and remained on the screen with a text box for input. Participants had 30 s to provide as many responses as possible by typing into a text box, and a timer was displayed for convenience. Moving forward before the 30 s expired was not allowed.

Analyses

Responses from participants were checked for spelling and corrected when possible (e.g., sargent corrected to sergeant). Any remaining unintelligible responses were removed (less than 1% of the data). In addition, similar responses were combined under one superordinate response (e.g., responses of boa, boa constrictor, and boa constrictors were combined to create the reported superordinate response of boa constrictor), as done in the previous norms.

We conducted similar analyses as previous norming studies investigating age group differences in our sample. First, we assessed the potency, or average number of responses by participants, of each category by age group following the 1969 and v2004 norms. Then we conducted assessments of between-subject variability following Howard (1980), specifically by assessing the type-to-token ratio and the idiosyncratic type-to-total type ratio for each category by age group.

Additionally, we analyzed differences in our dataset from previous norms to consider cohort and time period effects, in addition to age-related effects. Recall that we will focus on those comparisons across norm datasets where at least one of the following parameters is maintained: time of measurement, cohort, or age at test (see Fig. 1). Note that we could only conduct correlations for categories tested by both norms; thus, not all comparisons have the same number of categories included in their correlation calculation. For example, the category “a drug” was only tested in the v2004 and 2018 norms. Thus, there are only two possible correlations calculated (i.e., v2004-YA to 2018-YA; 2018-YA to 2018-OA). There were 600 (out of 770) correlations possible for analysis. Specifically, we considered the frequency of exemplars in each category across norm datasets following the v2004 norms and extended that analysis to also consider the rank order of exemplars in each category across norm datasets.

Finally, for each set of correlations (i.e., frequency and rank order), we also conducted a cluster analysis on the 47 categories where all norm comparisons could be made to provide initial data-driven insight into how groups of categories may begin to emerge based on patterns of frequency or rank-order correlations. We used a hierarchical clustering approach using the Ward method and the Mclust() function in R (Scrucca, Fop, Murphy, & Raftery, 2016) to determine the number of clusters (i.e., groups of categories) for each set of correlation analyses. We used standard parameters, did not set any priors, and report the results based on the best fitting model in an initial attempt to begin identifying groups of similar categories.

Results

Category norm dataset

The Appendix contains response data for each category as a separate table. In each table, we provide data for the overall sample (i.e., all age groups combined), along with the data broken apart by age group. The first column (Total) provides the proportion of participants that provided each response. We only list those responses that were given by at least 5% of the corresponding sample (overall and by specific age group), following the v2004 norms. The second column (First) provides the proportion of participants that produced the exemplar as their first response. The third column (Rank) provides the average output position and standard deviation of each exemplar. For example, in the category “a bird”, the response of blue jay was given as a response by 57% of participants overall (60% of young adults, 62% of middle-aged adults, and 51% of older adults), was given as the first response by 8% of participants overall (10% of young adults, 9% of middle-aged adults, and 5% of older adults), and had an average rank of 3.82 overall (3.96 for young adults, 3.73 for middle-aged adults, and 3.76 for older adults).

Category potency

Potency was calculated by dividing the number of responses given for a category by the total number of participants. Table 2 provides the mean and standard deviation of each category’s potency for the overall data, as well as for each age group. For example, the category “a bird” had an overall mean potency of 7.15 (SD = 2.69), with a mean potency of 7.08 (SD = 7.08) for young adults, 7.63 (SD = 7.63) for middle-aged adults, and 6.75 (SD = 2.30) for older adults. This indicates that participants, on average, responded to the category cue “bird” by generating, on average, 7.15 exemplars treated as category-appropriate responses, with young adults providing 7.08 responses, middle-aged adults providing 7.63 responses, and older adults providing 6.75 responses. Overall, category potency ranged from 2.67 to 10.91, with similar ranges for each age group (young adults: 2.66–11.52, middle-aged adults: 2.53–11.14, and older adults: 2.81–10.10).

We planned a priori to examine the interaction of age group and category, and to conduct pairwise comparisons of age groups (young adults–middle-aged adults; young adults–older adults; middle-aged adults–older adults) for each category. To do so, we conducted a repeated measures ANOVA (age group by category) to assess age differences in the mean potency. We found no significant main effect of age group, F(2, 243) = 1.062, p = .347, but a main effect of category, F(69, 16551) = 197.478, p < .0001. However, these main effects should be considered in the context of a significant age group by category interaction, F(138, 16551) = 2.66, p < .0001. Using a Tukey’s HSD corrected post hoc analyses, we found that 14 categories had at least one significant pairwise comparison between age groups (see Table 2). For example, there was a significant pairwise comparison between the middle-aged and older adult groups for the category “a bird”, such that the middle-aged adult group had a higher mean potency than the older adult group, p = .03. For those categories with at least one significant pairwise comparison between age groups, the numerical difference in mean potency was small, ranging from 0.81 to 1.58.

Between-subject variability

To examine other aspects of between-subject variability, we considered the type-to-token and idiosyncratic type-to-total type ratios (Table 3). “Type” refers to a response provided by at least one person (e.g., “robin”) and “token” refers to the number of times that type was provided (e.g., 31 tokens would indicate that 31 participants provided the type “robin”). In contrast, “idiosyncratic type” refers to a response provided by only one person. We considered both ratios for each age group by each category to determine how similar participant responses were within a given age group.

The type-to-token ratio reflects how common a particular type is within an age group. The more individuals that produce the same type for a given category, the lower the ratio value (i.e., the less between-subject variability). For example, using the category “a bird,” young adults provided 71 different types with a total of 588 tokens (a value of 0.12), middle-aged adults provided 85 different types with a total of 603 tokens (a value of 0.14), and older adults provided 77 different types with a total of 567 tokens (a value of 0.14). Thus, for this specific category, the young-adult group had numerically lower between-subject variability than middle-aged and older adult groups.

The idiosyncratic type-to-total type ratio reflects the proportion of unique responses generated in each age group. Lower ratio values indicate fewer unique responses for a given category (i.e., less between-subject variability). For example, using the same category “a bird,” young adults provided 17 idiosyncratic types with a total of 71 types (a value of 0.24), middle-aged adults provided 30 idiosyncratic types with a total of 85 types (a value of 0.35), and older adults provided 27 idiosyncratic types with a total of 77 types (a value of 0.35). Again, this specific category reflects numerically lower between-subject variability in the young adult group than the middle-aged and older adult groups.

To statistically assess between-subject variability in age groups across all categories, a Friedman’s test was conducted for each of the ratios, as done by Howard (1980). First, a Friedman’s test indicated that there was a significant effect of age group on the type-to-token ratio, χ2(2) = 12.72, p = .002. Using a symmetry test with corrected p values, the post hoc analysis indicated that the older adult group had a lower type-to-token ratio than in the middle-aged adult, p = .03, and younger adult, p = .002, groups, with no difference between the middle-aged and younger-adult groups, p = .69. Second, a Friedman’s test indicated no significant effect of age group on the idiosyncratic type-to-total type ratio, χ2(2) = 4.43, p = .108. Thus, when considering all categories together, these ratio tests provide evidence of limited between-subject variability within age groups, namely that older adults had, on average, lower type-to-token ratios (i.e., more consistent responses) than the middle-aged and younger-adult groups, with no differences in the rate of idiosyncratic responses across age groups.

Exemplar frequency correlations

Van Overschelde et al. (2004) reported an analysis of exemplar frequency by conducting Pearson correlations between their v2004 norms and the 1969 norms, that they labeled a “generational correlation.” They found significant variability in how stable exemplar frequencies were across generations/cohorts. In other words, there were several categories where the exemplar frequencies remained highly consistent between the 1969 and v2004 norms (e.g., “a color”), as well as several categories with significant changes in exemplar frequencies across norms (e.g., “a type of dance”; Van Overschelde et al., 2004). Table 4 provides a similar analysis of exemplar frequency correlations across norms, allowing for consideration of generational, historical, and age effects.

To compute the Pearson correlations, we obtained the frequency of each exemplar in each category for each norm dataset. We included all exemplars provided by at least 5% of the sample (rather than using a raw frequency count cutoff as done in the v2004 norms) so that consideration of low typicality responses would be included, while also reducing the influence of idiosyncratic responses and controlling for the issue in varying sample sizes across norms. Of the 600 correlations performed, over half (n = 321) had a Pearson’s r > .9 and over 80% (n = 498) had a Pearson’s r > .75, indicating high similarity in exemplar frequency across norm datasets (i.e., high-frequency exemplars tend to be consistently high frequency, low-frequency exemplars tend to be consistently low frequency). For example, the exemplar frequency correlations across all group comparisons for the category “a precious stone” were extremely high (r range, 0.93–0.99). However, it is important to point out that the Pearson r values ranged from 0.01 to 0.99, which replicates the similarly substantial range in the generational correlation reported in the v2004 norms. For example, young adults’ response frequencies for “a type of dance” from the current 2018 norms are highly correlated with those from young adults in the v2004 norms (r = 0.91); however, the same response frequency correlation between young adults from the current 2018 norms and those from the 1969 norms is practically zero (r = 0.03). This finding continues to harken the need to use up-to-date norms in psychological research, particularly for those categories where there may be significant influence of social and historical factors (e.g., “a disease”, “a toy”, “a weapon”, “ a type of fuel”).

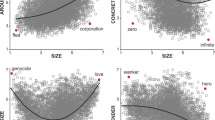

A cluster analysis was also conducted to identify if some categories were more similar in their correlation patterns across norm comparisons than other categories. Using standard parameters and no priors, the Mclust() function (Scrucca et al., 2016) identified two clusters, or groups of categories (see Fig. 2), best fitted by the VEE model. Cluster 1 (Fig. 2, pink) consisted of ten categories (e.g., a type of dance, a disease, a toy, a type of music). Cluster 2 (Fig. 2, teal) consisted of the remaining 37 categories (e.g., a bird, a science, a vegetable, a relative, a color). We assessed the average frequency correlations for each norm comparison within clusters as an initial attempt at describing the clusters (see Table 5). Higher r values indicate greater consistency between the frequencies of exemplars produced within a category between the two norms compared. This simple assessment indicated that Cluster 1 represents categories that were more variable across norms (r values ranging from 0.465 to 0.871), while Cluster 2 represents categories that were more stable across norms (r values ranging from 0.849 to 0.952).

Cluster analysis of 47 categories across norms using frequency correlations. From left to right, the pink cluster (Cluster 1) represents categories with moderate frequency correlations across norms, while the teal cluster (Cluster 2) represents categories with high-frequency correlations across norms

Exemplar rank-order correlations

We also evaluated Spearman’s rank-order correlations (Table 6) using the average rank position of each exemplar in each category for each norm dataset. We again included all exemplars provided by at least 5% of the sample. Of the 600 correlations performed, only 5% (n = 32) had a Spearman’s r > .9, with the range of Spearman r values from – 0.38 to 0.99. These results stood in stark contrast to the previous exemplar frequency correlations. Specifically, here the rank-order correlations indicated that there was much less stability in the ordering of exemplars across norm datasets, even if the frequency of those exemplars was stable. For example, responses for the category “a four-footed animal” seem to be highly stable with regard to exemplar frequency correlations (r range, 0.90–0.98). However, differences in rank-order correlations were evident in key group comparisons: adults within the same cohort showed moderate stability in rank-order correlation (e.g., “2018 OA – 1969 YA”: r = 0.68), but adults from different age groups tested at the same time point did not (e.g., “y2004 OA – y2004 YA”: r = 0.33). In other words, the overall rates in which adults reported exemplars for “a four-footed animal” were highly similar across groups, but the order in which they reported these exemplars differed as a function of the idiosyncrasies inherent to different age and cohort groups.

Again, a cluster analysis was conducted to identify if some categories were more similar in their correlation patterns across norm comparisons than other categories. Using standard parameters and no priors, the Mclust() function (Scrucca et al., 2016) identified five clusters, or groups of categories (see Fig. 3), best fitted by the EII model. Cluster 1 (Fig. 3, red) consisted of nine categories (e.g., a bird, a vegetable, a chemical element). Cluster 2 (Fig. 3, gold) consisted of 11 categories (e.g., a snake, an occupation or profession, a sport). Cluster 3 (Fig. 3, green) consisted of 15 categories (e.g., a crime, a metal, a unit of time). Cluster 4 (Fig. 3, blue) consisted of three categories (i.e., a type of dance, a disease, a science). Cluster 5 (Fig. 3, purple) consisted of nine categories (e.g., a toy, a weapon, a building for religious services). We also assessed the average frequency correlations for each norm comparison within clusters as an initial attempt at describing the clusters (see Table 7). Higher r values indicate greater consistency between the rank orders of exemplars produced within a category between the norms compared. While we initially found that there was much more variability in rank-order correlation r values across norm comparisons than exemplar frequency correlation r values, this clustering analysis provided a more fine-grained examination, allowing patterns to emerge. Clusters 1 and 3 had the most consistent average rank-order correlations across norm comparisons, with Cluster 3 having higher r values than Cluster 1. Thus, these clusters represent categories that were more stable across norms. Cluster 2 had low r values when comparisons were made including the Yoon et al. (2004) data, indicating that these norms were perhaps qualitatively different in their rank order as compared to the other norms analyzed. Cluster 4 represents categories that were highly influenced by age, cohort, and historical effects, as indicated by the low r values across the majority of norm comparisons. Finally, Cluster 5 had only very low r values for the norm comparison between younger and older adults in the present 2018 norms, perhaps indicating an age-related effect for the current period of time.

Cluster analysis of 47 categories across norms using rank-order correlations. From left to right, the red cluster (Cluster 1) had moderate rank-order correlations, the gold cluster (Cluster 2) had low rank-order correlations, the green cluster (Cluster 3) had high rank-order correlations, the blue cluster (Cluster 4) had very low rank-order correlations, and the purple cluster (Cluster 5) had moderate rank-order correlations

Based on the frequency and rank-order correlation clusters, we can begin to label categories on whether they are more or less stable across norms. Although we cannot be certain as to why some categories are more variable across norms (e.g., is variance due to age, cohort, or historical effects, or some combination), these labels may serve as a starting point for determining which categories need further investigation. Table 8 shows how categories might be labeled in regard to stability, from very low to high. Indeed, there are several categories which were quite stable across norms, in terms of both frequency and rank order of exemplars (e.g., “a crime”, “a fruit”, “a relative”). In contrast, there are some categories which were more variable across norms, particularly in regard to rank order. For example, while the category “a science” tended to have high consistency in exemplar frequency across norms, the ordering of the exemplars was highly variable.

Discussion

The present data set provides updated category norms using a heterogeneous sample of adults in the United States. There are two main differences of the present category norms in contrast to prior norms (Battig & Montague, 1969; Van Overschelde et al., 2004; Yoon et al., 2004): 1) a sampling of participants with no restriction on geographical location and 2) a sampling of participants from across adulthood who are not necessarily tied to a specific university. Despite these differences, we provide strong evidence that the frequency of exemplars within a category tend to remain consistent, while also providing new insight regarding the variability in ranking of exemplars within categories. These updated norms will be useful for continuing investigations of language, memory, and cognition, particularly as it relates to cognitive aging.

One major impact that these data will have in cognitive aging research is the ability to have accurate exemplar typicality estimates that are specific to different age groups. Recall that exemplar typicality is commonly obtained by scaling the mean number of times an exemplar is produced in a sample of participants, but previous norms usually rely only on young, college adult samples. This is a critical limitation of previous norms given that there is evidence to support change in the structure of semantic memory, or at least change in how words are retrieved from semantic memory, with increasing age. For example, Dubossarsky, De Deyne, and Hills (2017) provide evidence that a once tightly connected semantic memory structure begins to fragment into smaller clusters with increasing age (Dubossarsky et al., 2017), which is likely due in part to the degradation of exemplars on the periphery of categories (e.g., Borge-Holthoefer, Moreno, & Arenas, 2011). Although these changes in semantic structure could be accounted for by a change in higher-order cognition, like executive function and cognitive control (see reviews Siew, Wulff, Beckage, & Kenett, 2019; Wulff, De Deyne, Jones, & Mata, 2019), such that exemplars on the periphery are avoided in search tasks. Regardless of the underlying mechanism (i.e., change in structure or process), the fact that periphery exemplars are less accessible puts into question how exemplar typicality is determined, particularly for older adult samples. For example, a smaller category (i.e., fewer accessible exemplars) may impact the calculation of typicality (e.g., typicality will be larger for an exemplar if there are relatively few other exemplars to choose than if there are many other exemplars) and the rank ordering of exemplars (e.g., if there are many exemplars, variability in ordering may also increase). Using the common method of determining exemplar typicality, the current norms now provide a way to obtain exemplar typicality that is relative to younger (18–39 years), middle-aged (40–59 years), and older (60 years and older) adults (with publicly available access to the data for researchers to determine even more fine-grained age-associated typicality estimates), which may begin to address issues of age-related differences in semantic memory structure.

Although exemplar frequency correlations were moderate to high across norms, perhaps reflecting limited influence of age, cohort, and historical effects, our results on rank-order correlations indicated significant variability across norms, which points to a critical limitation of the current method in calculating exemplar typicality. That is, although an exemplar may reside in one’s semantic memory (and be accessible to the extent of production during the category fluency task), the importance of that exemplar to the category may change over time and with age (Brosseau & Cohen, 1996; Verheyen, Droeshout, & Storms, 2019). Our clustering results suggest that the ordering of exemplars may be more sensitive to capturing age, cohort, and historical effects. That is, although an exemplar might be produced in equal proportion between two groups, this does not necessarily reflect that the exemplar is as equally important to both groups. For example, in the category “a science”, the exemplar computer science had similar frequency proportions between young adults and older adults (5% and 6%, respectively), but differed in their average rank-order position (2.5 and 4.8, respectively). Here, we could make the suggestion that computer science has grown in recognition given the changing workforce demands and job opportunities, which are more relevant in the daily lives of younger adults who are seeking out their professions than older adults who are nearing retirement. In this case, computer science would have the same typicality estimate for the two age groups given current methods, even though these two groups differ in how important this exemplar is to their category. Thus, the traditional measure of exemplar typicality may not be sensitive enough. New methods for estimating exemplar typicality can be developed to take into consideration rank ordering, as well as other potential influences, like feature saliency (Montefinese, Ambrosini, Fairfield, & Mammarella, 2014). This is beyond the scope of the present paper, but these norms could be used by other researchers to vet out alternative methods of estimating exemplar typicality.

Additionally, we suggest that researchers selecting categorizable stimuli for age-comparative work should attend carefully to the information provided here to identify categories with sufficient stability to warrant assumptions of measurement invariance across age and time (Baltes, Reese, & Nesselroade, 1988; Labouvie, 1980), or at least make attempts to account for potential age, historical, and cohort differences in category representation. Notably, there were several categories, based on our cluster analysis, which could be deemed as relatively stable across norms (i.e., across age, time, and cohort). For example, the category “a type of reading material” had both relatively consistent frequency (r range, 0.70–0.94) and rank-order correlations (r range, 0.68–0.99) across norm comparisons. Thus, it is crucial that researchers continue to better disentangle which categories are more resilient to cognitive and societal factors, and which categories are not. Taken together, these findings are of crucial importance to researchers studying typicality effects on memory and language tasks, and harkens the need to better understand how our current method of estimating typicality, which does not take into rank ordering and has used norms from young adults, influences research findings, particularly in the context of cognitive aging research. Given the wide variety of research that is reliant on category norms and continuing concern regarding the replicability of psychological research (Arnett, 2016; Hanel & Vione, 2016; Henrich, Heine, & Norenzayan, 2010), it is critical that future norms continue to make attempts at capturing the variability of individuals, including across ages and cohorts.

The findings of this study also draw attention to some of its limitations, and more broadly, to the very idea that norms can be generated with broad generalizability that remains valid across epochs of historical time. Our data are based on a relatively small sample and it would be desirable to generate large-sample norms that are not limited to sampling university students. It would also be desirable to be able to evaluate subject-level moderator variables (e.g., education, verbal ability, and region of the country), item-level moderator variables (e.g., the number of unique exemplars possible in a given category and the distribution of psycholinguistic features of exemplars, like lexical frequency, typicality, feature distinctiveness, etc.), and the interaction of subject-level and item-level moderator variables on potency and rank ordering of exemplars in a given category. Finally, the finding of historical effects and age effects suggests that norms might need to be continuously updated, as might be contemplated by regular, recurring sampling or even open-ended sampling where age and time-point of responses are recorded to enable fine-grained analysis of historical trends. Longitudinal data might be collected to differentiate within-person consistency from historical change as has been done in studies of adult intellectual development (e.g., Schaie, 2013). Finally, evaluating how exemplar generation norms differ from norms collected with other methods (such as rankings or ratings of provided exemplars) might be critical for accurate assessment of typicality for exemplars found to have limited production frequencies.

In closing, the results presented here show that the very concept of normative typicality must be qualified to consider the dynamic influences of changing language usage within and between persons across historical time. The age-graded norms we provide here are a resource than can be effectively leveraged to construct age-appropriate stimuli for studies of age differences in sematic memory access, episodic memory, and language production that rely in some manner on access to exemplars from semantic categories.

References

Arnett, J. (2016). The neglected 95%: Why American psychology needs to become less American. In A. Kazdin (Ed.), Methodological issues and strategies in clinical research (pp. 115–132). American Psychological Association.

Aschenbrenner, A. J., Balota, D. A., Tse, C. S., Fagan, A. M., Holtzman, D. M., Benzinger, T. L. S., & Morris, J. C. (2015). Alzheimer disease biomarkers, attentional control, and semantic memory retrieval: Synergistic and mediational effects of biomarkers on a sensitive cognitive measure in non-demented older adults. Neuropsychology, 29(3), 368–381.

Baltes, P., Reese, H., & Nesselroade, J. (1988). Life-span developmental psychology: Introduction to research methods. Hillsdale, NJ: Erlbaum.

Battig, W., & Montague, W. (1969). Category norms of verbal items in 56 categories A replication and extension of the Connecticut category norms. Journal of Experimental Psychology, 80(3, pt.2), 1–46.

Bolla, K., Gray, S., Resnick, S., Galante, R., & Kawas, C. (1998). Category and letter fluency in highly educated older adults. The Clinical Neuropsychologist, 12(3), 330–338.

Borge-Holthoefer, J., Moreno, Y., & Arenas, A. (2011). Modeling abnormal priming in Alzheimer’s patients with a free association network. PLoS ONE, 6(8), e22651.

Bowles, N. L., Williams, D., & Poon, L. W. (1983). On the use of word association norms in aging research. Experimental Aging Research, 9(3), 175–177.

Brainerd, C., Gomes, C., & Nakamura, K. (2015). Dual recollection in episodic memory. Journal of Experimental Psychology: General, 144(4), 816–843.

Brandt, J., & Manning, K. (2009). Patterns of word-list generation in mild cognitive impairment and Alzheimer’s disease. The Clinical Neuropsychologist, 23(5), 870–879.

Brickman, A., Paul, R., Cohen, R., Williams, L., Macgregor, K., Jefferson, A., … Gordon, E. (2005). Category and letter verbal fluency across the adult lifespan: relationship to EEG theta power. Archives of Clinical Neuropsychology, 20(5), 561–573.

Brosseau, J., & Cohen, H. (1996). The representation of semantic categories in aging. Experimental Aging Research, 22(4), 381–391.

Brown, W., & Davies, G. (1976). Studies in Word Listing: Testing for Group Differences in Category Norms. The Irish Journal of Psychology, 3(2), 87–120.

Burke, D., & Peters, L. (1986). Word associations in old age: Evidence for consistency in semantic encoding during adulthood. Psychology and Aging, 1(4), 283–292.

Casey, P. (1992). A reexamination of the roles of typicality and category dominance in verifying category membership. Journal of Experimental Psychology: Learning, Memory, and Cognition, 18(4), 823–834.

Cohen, B., Bousfield, W., & Whitmarsh, G. (1957). Cultural norms for verbal items in 43 categories. (No. TR22). CONNECTICUT UNIV STORRS.

Dubossarsky, H., De Deyne, S., & Hills, T. (2017). Quantifying the structure of free association networks across the life span. Developmental Psychology, 53(8), 1560–1570.

Gordon, J., Young, M., & Garcia, C. (2018). Why do older adults have difficulty with semantic fluency? Aging, Neuropsychology, and Cognition, 25(6), 803–828.

Hanel, P. H. P., & Vione, K. C. (2016). Do student samples provide an accurate estimate of the general public? PLoS ONE, 11(12), e0168354.

Hasselhorn, M., Jaspers, A., & Hernando, M. (1990). Typizitätsnormen zu zehn Kategorien für Kinder von der Vorschule bis zur vierten Grundschulklasse [Category norms for verbal items in ten categories for children in kindergarten and in grades 1-4]. Sprache & Kognition, 9(2), 92–108.

Henrich, J., Heine, S. J., & Norenzayan, A. (2010). The weirdest people in the world? Behavioral and Brain Sciences, 33(2–3), 61–83.

Henry, J., & Phillips, L. (2006). Covariates of production and perseveration on tests of phonemic, semantic and alternating fluency in normal aging. Aging, Neuropsychology, and Cognition, 13(3–4), 529–551.

Hertzog, C., Cooper, B., & Fisk, A. (1996). Aging and individual differences in the development of skilled memory search performance. Psychology and Aging, 11(3), 497–520.

Howard, D. (1980). Category norms: A comparison of the Battig and Montague (1969) norms with the responses of adults between the ages of 20 and 80. Journal of Gerontology, 35(2), 225–231.

Hunt, E., Davidson, J., & Lansman, M. (1981). Individual differences in long-term memory access. Memory & Cognition, 9(6), 599–608.

Hunt, R., Smith, R., & Toth, J. (2016). Category cued recall evokes a generate-recognize retrieval process. Journal of Experimental Psychology: Learning, Memory, and Cognition, 42(3), 339–350.

Kintz, S., & Wright, H. H. (2017). Semantic knowledge use in discourse: Influence of age. Discourse Processes, 54(8), 670–681.

Labouvie, E. (1980). Identity versus equivalence of psychological measures and constructs. In L. W. Poon (Ed.), Aging in the 1980s: Psychological Issues (pp. 493–502). Washington, DC: American Psychological Association.

Lewellen, M., Goldinger, S., Pisoni, D., & Greene, B. (1993). Lexical familiarity and processing efficiency: Individual differences in naming, lexical decision, and semantic categorization. Journal of Experimental Psychology: General, 122(3), 316–330.

Marful, A., Díez, E., & Fernandez, A. (2015). Normative data for the 56 categories of Battig and Montague (1969) in Spanish. Behavior Research Methods, 47(3), 902–910.

Maxfield, J., & Zelinsky, G. (2012). Searching through the hierarchy: How level of target categorization affects visual search. Visual Cognition, 20(10), 1153–1163.

McCloskey, M., & Glucksber, S. (1979). Decision processes in verifying category membership statements: Implications for models of semantic memory. Cognitive Psychology, 11(1), 1–37.

Montefinese, M., Ambrosini, E., Fairfield, B., & Mammarella, N. (2014). Semantic significance: A new measure of feature salience. Memory and Cognition, 42(3), 355–369.

Posnansky, C. J. (1978). Category norms for verbal items in 25 categories for children in grades 2–6. Behavior Research Methods & Instrumentation, 10(6), 819–832.

Rosch, E., & Mervis, C. (1975). Family resemblances: Studies in the internal structure of categories. Cognitive Psychology, 7(4), 573–605.

Schaie, K. W. (2013). Developmental Influences on Adult Intelligence: The Seattle Longitudinal Study (2nd ed.). Oxford University Press.

Scrucca, L., Fop, M., Murphy, T., & Raftery, A. (2016). mclust 5: Clustering, classification and density estimation using Gaussian finite mixture models. The R Journal, 8(1), 289–317.

Siew, C., Wulff, D., Beckage, N., & Kenett, Y. (2019). Cognitive Network Science: A review of research on cognition through the lens of network representations, processes, and dynamics. Complexity, 2019, 2108423.

Smith, E., Shoben, E., & Rips, L. (1974). Structure and process in semantic memory: A featural model for semantic decisions. Psychological Review, 81(3), 214–241.

Troyer, A., Moscovitch, M., & Winocur, G. (2000). Normative data for clustering and switching on verbal fluency tasks. Journal of Clinical and Experimental Neuropsychology, 22(3), 370–378.

Van Overschelde, J., Rawson, K., & Dunlosky, J. (2004). Category norms: An updated and expanded version of the norms. Journal of Memory and Language, 50(3), 289–335.

Venneri, A., Mitolo, M., Beltrachini, L., Varma, S., Della Pietà, C., Jahn-Carta, C., … De Marco, M. (2019). Beyond episodic memory: Semantic processing as independent predictor of hippocampal/perirhinal volume in aging and mild cognitive impairment due to Alzheimer’s Disease. Neuropsychology, 33(4), 523–533.

Verheyen, S., Droeshout, E., & Storms, G. (2019). Age-related degree and criteria differences in semantic categorization. Journal of Cognition, 2(1), 17.

White, A., Storms, G., Malt, B., & Verheyen, S. (2018). Mind the generation gap: Differences between young and old in everyday lexical categories. Journal of Memory and Language, 98, 12–25.

Wulff, D., De Deyne, S., Jones, M., Mata, R., & Consortium, T. A. L. (2019). New perspectives on the aging lexicon. Trends in Cognitive Sciences, 23(8), 686–698.

Yoon, C., Feinberg, F., Hu, P., Gutchess, A., Hedden, T., Chen, H., … Park, D. (2004). Category norms as a function of culture and age: comparisons of item responses to 105 categories by American and Chinese adults. Psychology and Aging, 19(3), 379–393.

Acknowledgements

This work was supported by a Ruth L. Kirschsten NRSA Institutional Research Training Grant from the National Institute on Aging of the National Institutes of Health (5T32AG000175) awarded to NC and TC.

Open Practices Statement

The data generated and analyzed in the present study are available at categorynorms.psychology.gatech.edu.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

ESM 1

(DOCX 863 kb)

Rights and permissions

About this article

Cite this article

Castro, N., Curley, T. & Hertzog, C. Category norms with a cross-sectional sample of adults in the United States: Consideration of cohort, age, and historical effects on semantic categories. Behav Res 53, 898–917 (2021). https://doi.org/10.3758/s13428-020-01454-9

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13428-020-01454-9