Abstract

The Oriented Difference of Gaussians (ODOG) model of brightness (perceived intensity) by Blakeslee and McCourt (Vision Research 39:4361-4377, 1999), which is based on linear spatial filtering by oriented receptive fields followed by contrast normalization, has proven highly successful in parsimoniously predicting the perceived intensity (brightness) of regions in complex visual stimuli such as White's effect, which had been believed to defy filter-based explanations. Unlike competing explanations such as anchoring theory, filling-in, edge-integration, or layer decomposition, the spatial filtering approach embodied by the ODOG model readily accounts for the often overlooked but ubiquitous gradient structure of induction which, while most striking in grating induction, also occurs within the test fields of classical simultaneous brightness contrast and the White stimulus. Also, because the ODOG model does not require defined regions of interest, it is generalizable to any stimulus, including natural images. The ODOG model has motivated other researchers to develop modified versions (LODOG and FLODOG), and has served as an important counterweight and proof of concept to constrain high-level theories which rely on less well understood or justified mechanisms such as unconscious inference, transparency, perceptual grouping, and layer decomposition. Here we provide a brief but comprehensive description of the ODOG model as it has been implemented since 1999, as well as working Mathematica (Wolfram, Inc.) notebooks which users can employ to generate ODOG model predictions for their own stimuli.

Similar content being viewed by others

Introduction

A central question in the study of visual perception is how and under what circumstances the visual system is able to separate the physically invariant reflectance of a surface from its potentially changing illumination. The intensity distribution falling on the photoreceptor array is the product of these two sources and their independent recovery is thus an ill-posed problem in that there are a myriad of combinations of illumination and reflectance that can give rise to any particular intensity distribution, and in the absence of additional information there is no way to uniquely recover the physically correct solution. Much of the current debate surrounding brightness (perceived intensity) and lightness (perceived reflectance) perception, therefore, centers on the nature of the prior assumptions and processing strategies the visual system uses to parse (correctly or incorrectly) the intensity distribution at the retina into components of surface reflectance and illumination.

Blakeslee and McCourt (1999) developed the Oriented Difference of Gaussians (ODOG) model to assess the degree to which early visual processes sufficed to account for brightness (perceived intensity) in a set of canonical stimuli which included the White effect stimulus (White, 1979, 1981; White & White, 1985), the classical simultaneous brightness contrast (SBC) stimulus (Heinemann, 1972), and the grating induction (GI) stimulus (Blakeslee & McCourt, 1997; Foley & McCourt, 1985; McCourt, 1982, 1994; McCourt & Blakeslee, 1994; McCourt & Foley, 1985), including the variations introduced by Zaidi (1989). The defining features of the ODOG model are characteristics exhibited at early stages of cortical visual processing, e.g., spatial frequency selectivity, orientation selectivity, and contrast gain control. The ODOG model can account for the modulation in the strength of the White effect (Blakeslee & McCourt, 2004) and GI (Blakeslee & McCourt, 2011) with changes in the spatial frequency of the inducing gratings. The ODOG model has also been shown to account for brightness perception in a wide variety of additional displays including the Wertheimer-Benary Cross stimulus (Benary, 1924; Blakeslee & McCourt, 2001, 2003), the Hermann Grid stimulus (Blakeslee & McCourt, 2003), the Gelb Staircase stimulus (Blakeslee, Reetz, & McCourt, 2009; Cataliotti & Gilchrist, 1995), Howe's variations on White's stimulus (Blakeslee et al., 2005; Howe, 2001), Todorovic's (1997) and Williams, McCoy, & Purves’ (1998) variations on the SBC stimulus (Blakeslee & McCourt, 1999; 2012), the checkerboard induction stimulus (Blakeslee & McCourt, 2004; DeValois & DeValois, 1988), the shifted White stimulus (Blakeslee & McCourt, 2004; White, 1981), Adelson's Checker-Shadow stimulus (Adelson, 1993; Blakeslee & McCourt, 2012), Adelson's Corrugated Mondrian stimulus (Adelson, 1993; Blakeslee & McCourt, 2003) including Todorovic's (1997) variation (Blakeslee & McCourt, 2001, 2003), Adelson's Snake stimulus (Adelson, 2000; Blakeslee & McCourt, 2003, 2012; Somers & Adelson, 1997), Hillis and Brainard's (2007) Paint/Shadow stimulus (Blakeslee & McCourt, 2012), so-called “remote” brightness induction stimuli (Blakeslee & McCourt, 2003, 2005; Logvinenko, 2003; Shapley & Reid, 1985), and the mid-gray probes inserted into photographs by Cartier-Bresson (Blakeslee & McCourt, 2012; Gilchrist, 2006). A modified version of the ODOG model (LODOG) which replaces the image-based contrast normalization of ODOG with a local computation (Robinson, Hammon & de Sa, 2007) can explain illusory brightness effects in a somewhat wider variety of stimuli including the zig-zag White stimulus (Spehar & Clifford, 2015) and the radial White stimulus (Anstis, 2005).

Critically, unlike competing explanations for brightness perception such as anchoring theory (Gilchrist, 2006; Gilchrist, Kossyfidis, Bonato, Agostini, Cataliotti, Li, Spehar, Annan & Economou, 1999), filling-in (Grossberg & Todorovic, 1988), edge-integration (Land & McCann, 1971; Rudd & Zemach, 2004, 2007), or layer decomposition (Anderson, 1997), the spatial filtering approach embodied by the ODOG model readily accounts for the often overlooked but ubiquitous gradient (i.e., non-uniform) structure of induction which, while most striking in grating induction (Blakeslee & McCourt, 1999, 2013; Kingdom, 1999; McCourt, 1982; McCourt & Blakeslee, 2015), also occurs in the Hermann grid illusion (Hermann, 1870; Spillmann, 1994), the Chevreul staircase (Chevreul, 1890), Mach Bands (Mach, 1865), and within the test fields of classical simultaneous brightness contrast and the White stimulus (Blakeslee & McCourt, 1999, 2015)Footnote 1. Also, because the ODOG model does not require defined regions of interest it is generalizable to any stimulus, including natural images.

We acknowledge that the ODOG model is imperfect. It would, for example, benefit from modifications such as the replacement of ODOG filters with balanced Gabor functions (Cope, Blakeslee & McCourt, 2009) and the substitution of local contrast gain control (Cope, Blakeslee & McCourt, 2013; 2014) for the image-based (global) normalization procedure which is currently implemented. Nonetheless, the utility of the spatial filtering approach lies in the ODOG model’s success in accounting for brightness in a wide variety of stimuli, ranging from simple to complex, without the adjustment of any parameter values, and its parsimony, which acts as a scientifically necessary counterweight to high-level theories which posit only vaguely specified mechanisms such as unconscious inference, perceptual transparency, Gestalt grouping, intrinsic image layer decomposition, and the like.

Because of its rigor and simplicity the ODOG model has proven both influential and provocative (Kingdom, 2011). There are ten principal publications in which the ODOG model (or its earlier non-oriented DOG version) has been invoked to explain various aspects of brightness perception (Blakeslee & McCourt, 1997, 1999, 2001, 2003, 2004, 2005, 2012, 2013; Blakeslee et al., 2005, 2009). These papers have collectively been cited over 400 times (Google Scholar). In response to persistent requests for source code from colleagues desiring to test their psychophysical results and/or own model predictions against those of the ODOG model, we here provide fully annotated Wolfram Mathematica notebooks accompanied by a brief mathematical description of the ODOG model.

ODOG model filters

The ODOG model consists of 42 oriented difference-of-gaussians filters (i.e., receptive fields) taken over six orientations and seven octave-interval spatial scales. Input patterns are linearly processed by each filter and the filter outputs are combined by a particular nonlinear weighting which approximates the shallow low-frequency falloff of the suprathreshold contrast sensitivity function (Georgeson & Sullivan, 1975).

The ODOG filters are given by:

where σ 2 > σ 1 > 0 and y 1, y 2 are rotated variables given by:

The condition σ 2 > σ 1 ensures that regions of excitation and inhibition are aligned along the y1-axis. The filters are simple difference of unit volume gaussians and are thus perfectly balanced (i.e., total filter volume = 0).

The Fourier Transform of ODOG filters is given by:

where t 1, t 2 are rotated variables given by:

ODOG filters possess six orientations at 30o intervals:

and seven spatial scales arranged at octave intervals:

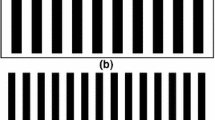

Figure 1(a) illustrates a space-domain representation of an ODOG filter in cross-section along the (oriented) y 1 -axis. Figure 1(b) illustrates the same filter in the spatial.

Input patterns

Input patterns p(x 1, x 2) are non-negative functions on the plane (i.e., images). The working region of the model is a square patch subtending 32o × 32o of visual angle, and the size of input patterns should be scaled accordinglyFootnote 2. The ODOG filters map input patterns p to output patterns q (which may be negative):

Note that the linear operator is a (reversed) convolution where the kernel has the form f(y − x)dy instead of f(x − y)dy.

The convolution form is exploited for computational efficiency. In practice, patterns are represented as 1024 × 1024 RGB pixel matrices, and the convolution is calculated using the Fast Fourier Transform.

Output patterns

Let q(σ 1, σ 2, α, x 1, x 2) be the output pattern produced by convolving an ODOG filter with spatial parameters σ 1, σ 2 and orientation α with a given input pattern p(x 1, x 2), as described in (Eq. B.1). The 42 output patterns undergo two additional stages of processing.

First, for each orientation α a weighted summation over filter size is taken:

where the weight function is \( w\left({\sigma}_1\right)={\left(\frac{8}{3}{\sigma}_1\right)}^{-1/10} \) with σ 1 in degrees. The integral is approximated by the sum:

where the values of σ 1 are given in (Eq. A.6) above.

The root mean square magnitude ‖Q(α; x 1, x 2)‖ of the output pattern at each orientation α is calculated by:

and is used as a contrast normalization factor.

The final output pattern R(x 1, x 2) is obtained by averaging the normalized output patterns over all orientations (0 ≤ α ≤ π rad):

The ODOG model approximates the integral by averaging over the six discrete orientations which are spaced at intervals of 30o:

Notes concerning implementation

It should be kept in mind that the model assumes a square region of space subtending 32o × 32o, which corresponds to an image size of 1,024 × 1,024 pixels (0.03125o/pixel). The space constant (σ) of the largest ODOG filter measures 6o (192 pixels), so the mapping of output patterns to input patterns which are restricted to the central 16o × 16o (512 × 512 pixel) region, and which are padded beyond this area with zeros (or with the pattern mean value) will be essentially free from distortion. In practice, input patterns larger than 512 × 512 pixels can be tolerated, although users may want to vary input pattern size and examine the output patterns to assess whether significant distortion is occurring.

Whereas input patterns are images (matrices of non-negative integers ranging from 0–255), the convolution of these patterns with the volume-balanced ODOG filters produces output patterns of positive and negative real numbers whose mean is zero. To display ODOG model output as images, and to compare it with psychophysical brightness matches (expressed as percent maximum luminance), output patterns are typically additively offset to possess a mean of 128, and are scaled to possess integer values between 0 and 255. The scaling factor is arbitrary, but is usually chosen to maximize the correlation between ODOG model output and brightness matching data.

Mathematica notebooks

Four executable Mathematica (.nb) notebooks are included with this paper. They are:

-

Blakeslee_Cope_&_McCourt_(Notebook_A_ODOG_Filter_FT_Generation).nb

-

Blakeslee_Cope_&_McCourt_(Notebook_B_File_Format_Conversion_TIF_to_DAT).nb

-

Blakeslee_Cope_&_McCourt_(Notebook_C_ODOG_Pattern_Processing).nb

-

Blakeslee_Cope_&_McCourt_(Notebook_D_Examine_Results).nb

These fully annotated Notebooks are written to step users through setting up appropriate directories, generating the library of ODOG filter files, processing a sample (image) pattern (White_Stimulus.tif) through the ODOG model, and examining the Input and Output patterns.

About Wolfram Mathematica

This implementation of the ODOG model uses Mathematica, a general purpose mathematical software platform by Wolfram Research, Inc. Extensive knowledge or experience with Mathematica is not required but the following background may be helpful:

-

Mathematica files are called Notebooks and the corresponding filenames have the extension .nb.

-

Notebooks are divided into cells which are identified by cell delimiters at the extreme right edge of the display.

-

To select a cell, click once on the cell delimiter, which highlights the selected cell.

-

Types of cells include Text cells (which contain text material), Input cells (which contain the executable Mathematica commands), and Output cells (which display the results of evaluating Input cells). Cell types can be identified by their different fonts. When Input cells are evaluated Output cells will appear. Another way to identify a cell type is to select the cell (click on the delimiter) and look under Format: Style in the Menu Bar where a check mark appears next to the cell type.

-

To evaluate an Input cell, select the cell (click on the delimiter) and press SHIFT+ENTER. This evaluates the commands in the Input cell. Text and Output cells cannot be evaluated. The delimiter of an Input cell is highlighted when the cell is evaluated and remains highlighted while the evaluation proceeds. In the ODOG model, some steps, such as generating the filter FT files in Notebook A, or the pattern processing stage in Notebook C, may take a few minutes for evaluation.

-

To stop an evaluation, click on Evaluation: Abort Evaluation in the Menu Bar.

-

To delete a cell (such as an Output cell), select the cell (click on the delimiter) and press the Delete key. After running the notebooks it is good practice to Delete All Output by selecting that option under Cell in the Menu Bar.

-

In this implementation of the ODOG model the only commands requiring interaction by the user are ones where directory or file names need to be set to specify storage locations. Automatic checks are provided to help.

-

The commands in an Input cell often end with semicolons which suppress the display of the output of evaluating the command. You can add or remove a semicolon at the end of a command without affecting the evaluation of a command, and you may find it helpful to remove one to see the result which is displayed. However, if the output is, say, a 1,024 × 1,024 matrix of complex-valued numerical data, the display may be too extensive to be helpful, although the experience will be memorable.

-

Mathematica is available for download on a 15-day trial basis on the Wolfram website (https://www.wolfram.com/mathematica/trial).

Notes

Researchers disagree about the conspicuity of such brightness gradients in some circumstances, but the existence of brightness gradients in physically homogeneous regions has been noted for over 100 years, has been measured experimentally, and is beyond dispute.

Because this implementation of the ODOG model performs a global contrast normalization of each orientation channel’s entire convolution image, and because the power of the ODOG model to explain test field brightness in White’s stimulus relies on the normalization of the unequal magnitudes of filter responses at different orientations, it is important that users bear this in mind when submitting stimuli to the model. For instance, the ODOG model accounts for test field appearance in the standard White stimulus such as supplied with this paper (White_Stimulus.tif) because the anisotropic stimulus occupies the entire image. The ODOG model will not account for the zig-zag version of this stimulus (Spehar & Clifford, 2015), or even a stimulus containing two White stimuli at orthogonal orientations, because these patterns, while possessing local orientation anisotropy, are globally isotropic, which causes the energy in each orientation channel’s convolution image to be nearly equal. Implementing local contrast normalization, as done by Robinson et al. (2007) in their LODOG version of the ODOG model, and which is characteristic of visual neurons (Carandini & Heeger, 1994; Cope et al, 2013; 2014), allows it to account for the zig-zag White stimulus.

References

Adelson, E. H. (1993). Perceptual organization and the judgment of brightness. Science, 262, 2042–2044.

Adelson, E. H. (2000). Lightness perception and lightness illusions. In M. Gazzaniga (Ed.), The New Cognitive Neurosciences (2nd ed., pp. 339–351). Cambridge, MA: MIT Press.

Anderson, B. L. (1997). A theory of illusory lightness and transparency in monocular and binocular images: The role of contour junctions. Perception, 26, 419–453.

Anstis, S. (2005). White’s Effect in color, luminance and motion. In L. Harris & M. Jenkin (Eds.), Seeing Spatial Form. Oxford: Oxford University Press.

Benary, W. (1924). Beobachtungen zu einem experiment uber helligkeitskontrast. Psychologische Forschung, 5, 131–142.

Blakeslee, B., & McCourt, M. E. (1997). Similar mechanisms underlie simultaneous brightness contrast and grating induction. Vision Research, 37, 2849–2869.

Blakeslee, B., & McCourt, M. E. (1999). A multiscale spatial filtering account of the White effect, simultaneous brightness contrast, and grating induction. Vision Research, 39, 4361–4377.

Blakeslee, B., & McCourt, M. E. (2001). A multiscale spatial filtering account of the Wertheimer-Benary effect and the corrugated Mondrian. Vision Research, 41, 2487–2502.

Blakeslee, B., & McCourt, M. E. (2003). A multiscale spatial filtering account of brightness phenomena. In L. Harris & M. Jenkin (Eds.), Levels of Perception. NY, New York: Springer.

Blakeslee, B., & McCourt, M. E. (2004). A unified theory of brightness contrast and assimilation incorporating oriented multiscale spatial filtering and contrast normalization. Vision Research, 44, 2483–2503.

Blakeslee, B., & McCourt, M. E. (2005). A multiscale spatial filtering account of grating induction and remote brightness induction effects: Reply to Logvinenko. Perception, 34, 793–802.

Blakeslee, B., & McCourt, M. E. (2011). Spatiotemporal analysis of brightness induction. Vision Research, 51, 1872–1879.

Blakeslee, B., & McCourt, M. E. (2012). When is spatial filtering enough? Investigations of lightness and brightness perception in stimuli containing a visible illumination component. Vision Research, 60, 40–50.

Blakeslee, B., & McCourt, M. E. (2013). Brightness induction magnitude declines with increasing distance from the inducing field edge. Vision Research, 78, 39–45.

Blakeslee, B., & McCourt, M. E. (2015). The White Effect. In Oxford compendium of visual illusions, A. Shapiro & D. Todorovic (Eds.). Oxford University Press (in press).

Blakeslee, B., Pasieka, W., & McCourt, M. E. (2005). Oriented multiscale spatial filtering and contrast normalization: A parsimonious model of brightness induction in a continuum of stimuli including White, Howe and simultaneous brightness contrast. Vision Research, 45, 607–615.

Blakeslee, B., Reetz, D., & McCourt, M. E. (2009). Spatial filtering versus anchoring accounts of brightness/lightness perception in staircase and simultaneous brightness/lightness contrast stimuli. Journal of Vision, 9(3), 22. doi:10.1167/9.3.22. 1–17, http://journalofvision.org/9/3/22/

Carandini, M., & Heeger, D. J. (1994). Summation and division by neurons in visual cortex. Science, 264, 1333–1336.

Cataliotti, J., & Gilchrist, A. (1995). Local and global processes in surface lightness perception. Perception & Psychophysics, 57, 125–135.

Chevreul, M. E. (1890). In Martel, C. (Translator), The principles of harmony and contrast of colours. London: Bell.

Cope, D., Blakeslee, B., & McCourt, M. E. (2009). Simple cell response properties imply receptive field structure: Balanced Gabor and/or bandlimited field functions. Journal of the Optical Society of America A: Optics, Image Science, and Vision, 26, 2067–2092.

Cope, D., Blakeslee, B., & McCourt, M. E. (2013). Modeling lateral geniculate nucleus response with contrast gain control. Part 1: Formulation. Journal of the Optical Society of America A: Optics, Image Science, and Vision, 30, 2401–2408.

Cope, D., Blakeslee, B., & McCourt, M. E. (2014). Modeling lateral geniculate nucleus response with contrast gain control. Part 2: Analysis. Journal of the Optical Society of America A: Optics, Image Science, and Vision, 31, 348–362.

DeValois, R. L., & DeValois, K. K. (1988). Spatial Vision. New York: Oxford University Press.

Foley, J. M., & McCourt, M. E. (1985). Visual grating induction. Journal of the Optical Society of America, A2, 1220–1230.

Georgeson, M. A., & Sullivan, G. D. (1975). Contrast constancy: Deblurring in human vision by spatial frequency channels. Journal of Physiology (London), 252, 627–656.

Gilchrist, A. L. (2006). Seeing Black and White. New York: Oxford University Press.

Gilchrist, A., Kossyfidis, C., Bonato, F., Agostini, T., Cataliotti, J., Li, X., Spehar, B., Annan, V., Economou,E. (1999). An anchoring theory of lightness perception. Psychological Review, 106, 795–834.

Grossberg, S., & Todorovic, D. (1988). Neural dynamics of 1-D and 2-D brightness perception: A unified model of classical and recent phenomena. Perception and Psychophysics, 43, 241–277.

Heinemann, E. G. (1972). Simultaneous brightness induction. In D. Jameson & L. M. Hurvich (Eds.), Handbook of Sensory Physiology, VII-4 Visual Psychophysics. Berlin: Springer-Verlag.

Hermann, L. (1870). Eine Erscheinung des simultanen Contrastes. Pflugers Archiv furs die gesamte Physiologie, 3, 13–15.

Hillis, J. M., & Brainard, D. H. (2007). Distinct mechanisms mediate visual detection and identification. Current Biology, 17, 1714–1719.

Howe, P. D. L. (2001). A comment on the Anderson (1997), the Todorovic (1997), and the Ross and Pessoa (2000) explanations of White s effect. Perception, 30, 1023–1026.

Kingdom, F. A. A. (1999). Old wine in new bottles? Some thoughts on Logvinenko's “Lightness induction revisited”. Perception, 28, 929–934.

Kingdom, F. A. A. (2011). Lightness, brightness and transparency: A quarter century of new ideas, captivating demonstrations and unrelenting controversy. Vision Research, 51, 652–673.

Land, E. H., & McCann, J. J. (1971). Lightness and retinex theory. Journal of the Optical Society of America, 61, 1–11.

Logvinenko, A. D. (2003). Does the bandpass linear filter response predict gradient lightness induction? A reply to Fred Kingdom. Perception, 32, 621–626.

Mach, E. (1865). On the effect of the spatial distribution of the light stimulus on the retina. In: Mach bands: quantitative studies on neural networks in the retina (1965), In F. Ratliff (Ed.) (253–271) San Francisco: Holden-Day.

McCourt, M. E. (1982). A spatial frequency dependent grating-induction effect. Vision Research, 22, 119–134.

McCourt, M. E. (1994). Grating induction: A new explanation for stationary visual phantoms. Vision Research, 34, 1609–1618.

McCourt, M. E., & Blakeslee, B. (1994). A contrast matching analysis of grating induction and suprathreshold contrast perception. Journal of the Optical Society of America, A, 11, 14–24.

McCourt, M. E., & Blakeslee, B. (2015). Grating Induction. In: Oxford compendium of visual illusions, A. Shapiro & D. Todorovic (Eds.), Oxford University Press (in press).

McCourt, M. E., & Foley, J. M. (1985). Spatial frequency interference on grating induction. Vision Research, 25, 1507–1518.

Robinson, A. E., Hammon, P. S., & de Sa, V. R. (2007). Explaining brightness illusions using spatial filtering and local response normalization. Vision Research, 47, 1631–1644.

Rudd, M. E., & Zemach, I. K. (2004). Quantitative properties of achromatic color induction: An edge integration analysis. Vision Research, 44, 971–981.

Rudd, M. E., & Zemach, I. K. (2007). Contrast polarity and edge integration in achromatic color perception. Journal of the Optical Society of America. A, 24, 2134–2156.

Shapley, R., & Reid, R. C. (1985). Contrast and assimilation in the perception of brightness. Proceedings of the National Academy of Science USA, 82, 5983–5986.

Somers, D. C., & Adelson, E. H. (1997). Junctions, transparency, and brightness. Investigative Ophthalmology and Visual Science, 38(Suppl), S453.

Spehar, B., & Clifford, C. W. G. (2015). The wedding cake illusion: Interaction of geometric and photometric factors in induced contrast and assimilation. In: Oxford compendium of visual illusions, A. Shapiro & D. Todorovic (Eds.). Oxford University Press (in press).

Spillmann, L. (1994). The Hermann grid illusion: A tool for studying human perceptive field organization. Perception, 23, 691–708.

Todorovic, D. (1997). Lightness and junctions. Perception, 26, 379–395.

White, M. (1979). A new effect of pattern on perceived lightness. Perception, 8, 413–416.

White, M. (1981). The effect of the nature of the surround on the perceived lightness of grey bars within square-wave test gratings. Perception, 10, 215–230.

White, M., & White, T. (1985). Counterphase lightness induction. Vision Research, 25, 1331–1335.

Williams, S. M., McCoy, A. N., & Purves, D. (1998). The influence of depicted illumination on perceived brightness. Proceedings of the National Academy of Sciences, 95, 13296–13300.

Zaidi, Q. (1989). Local and distal factors in visual grating induction. Vision Research, 29, 691–697.

Acknowledgments

This work was supported by grants NIH P20 GM103505 (MEM), NIH R01 EY014015 (BB, MEM), NSF IBN0212789 (BB), and NSF BCS1430503 (BB, MEM). The National Institute of General Medical Sciences (NIGMS) and the National Eye Institute (NEI) are components of the National Institutes of Health (NIH). The contents of this report are solely the responsibility of the authors and do not necessarily reflect the official views of the NIH, NIGMS, NEI, or NSF. The authors declare no conflicts of interest.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Blakeslee, B., Cope, D. & McCourt, M.E. The Oriented Difference of Gaussians (ODOG) model of brightness perception: Overview and executable Mathematica notebooks. Behav Res 48, 306–312 (2016). https://doi.org/10.3758/s13428-015-0573-4

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13428-015-0573-4