Abstract

Semantic coherence is a higher-order coherence benchmark that assesses whether a constellation of estimates—P(A), P(B), P(B | A), and P(A | B)—maps onto the relationship between sets implied by the description of a given problem. We present an automated method for evaluating semantic coherence in conditional probability estimates that efficiently reduces a large problem space into five meaningful patterns: identical sets, subsets, mutually exclusive sets, overlapping sets, and independent sets. It also identifies three theoretically interesting nonfallacious errors. We discuss unique issues in evaluating semantic coherence in conditional probabilities that are not present in joint probability judgments, such as errors resulting from dividing by zero and the use of a tolerance parameter to manage rounding errors. A spreadsheet implementing the methods described above can be downloaded as a supplement from www.springerlink.com.

The study of probability estimation has a long and distinguished history in cognitive science, with Tversky and Kahneman’s (1974) article being particularly influential. Much of that research has focused on reasoning biases and logical fallacies (e.g., Kahneman & Tversky, 1973) or the empirical accuracy of such judgments (Reyna & Adam, 2003; Yates, Lee, Shinotsuka, Patalano, & Sieck, 1998). However, Wolfe, Reyna, and colleagues have recently introduced a new benchmark for assessing the quality of joint probability and conditional probability estimates, called semantic coherence (Fisher & Wolfe, 2010; Wolfe & Fisher, 2010; Wolfe & Reyna, 2010a, 2010b), along with a method for its assessment in joint probability estimation (Wolfe & Reyna, 2010b). In the present article, the concept and method of evaluating semantic coherence are extended to conditional probability judgment.

The work presented here builds on research by Wolfe and Reyna (2010a, 2010b). Wolfe and Reyna (2010b) conducted three experiments on joint probability estimation. Participants received a number of story problems and estimated P(A), P(B), P(A and B), and P(A or B). These patterns of estimates were analyzed for semantic coherence and logical fallacies using formulae implemented in an Excel spreadsheet (see Wolfe & Reyna, 2010a). In those experiments, gist representations were manipulated with pedagogic analogies. The suboptimal tendency to ignore relevant denominators was addressed with training in the logic of 2 × 2 tables to clarify joint probability estimates. In all experiments using these interventions, analogies increased semantic coherence, and the 2 × 2 tables reduced fallacies and increased semantic coherence. The spreadsheet (also available as a supplemental download from the Behavior Research Methods Web site) automated and greatly facilitated the analysis of the internal consistency of these response sets.

The remainder of the article will proceed as follows. First, the concept of semantic coherence is introduced, as it applies to conditional probability estimation. Next, an automated method of evaluating semantic coherence using formulae in a spreadsheet is outlined. In the final section, issues that are unique to conditional probability estimation are addressed, including the evaluation of nonfallacious errors, divide-by-zero errors, and the use of a tolerance parameter to manage rounding errors in estimations.

Bayes’s theorem has been a longstanding benchmark for assessing the coherence of conditional probability estimates (Barbey & Sloman, 2007; Kahneman & Tversky, 1973). One problem, however, is that a constellation of conditional probability estimates can be consistent with Bayes’s theorem and nonsensical at the same time. To illustrate this point, consider the following probability estimates regarding two election outcomes. Let P(S) be the probability that Smith will win and P(J) be the probability that Johnson will win. The estimates P(S) = .60, P(J) = .40, P(J | S) = .33, and P(S | J) = .50 are internally consistent because P(S | J) can be inferred from the previous three estimates by applying Bayes’s theorem. However, since election outcomes are mutually exclusive, the previous estimates are nonsensical, as they imply that both candidates can win the same election. Assuming that one candidate has won precludes the other candidate from winning. In order to achieve semantic coherence, it must be the case that P(S | J) = P(J | S) = 0, which also satisfies Bayes’s theorem. Thus, semantic coherence imposes an additional, more stringent constraint, whereby a constellation of estimates must map onto the relationship between sets described in the problem.
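The election example can be checked numerically. The following is a minimal sketch, not the spreadsheet's formulae: the function names and the tolerance of .01 are assumptions chosen for illustration. It shows that the first constellation passes a Bayes check while failing the stricter mutually exclusive requirement.

```python
def bayes_consistent(p_a, p_b, p_b_given_a, p_a_given_b, tol=0.01):
    """True if P(A|B) matches P(B|A) * P(A) / P(B) within tol.

    Assumes P(B) > 0; tol is an illustrative tolerance, not a value
    prescribed by the article.
    """
    implied = p_b_given_a * p_a / p_b
    return abs(p_a_given_b - implied) <= tol


def exclusive_conditionals(p_b_given_a, p_a_given_b):
    """True if both conditionals are zero, as mutual exclusivity requires."""
    return p_b_given_a == 0 and p_a_given_b == 0


# S = Smith wins, J = Johnson wins (values from the example above).
p_s, p_j, p_j_given_s, p_s_given_j = 0.60, 0.40, 0.33, 0.50

print(bayes_consistent(p_s, p_j, p_j_given_s, p_s_given_j))   # Bayes check
print(exclusive_conditionals(p_j_given_s, p_s_given_j))       # semantic check
```

Setting both conditionals to zero satisfies both checks, which is the sense in which semantic coherence is the more stringent constraint.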

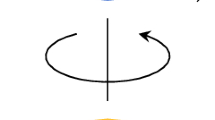

Only five qualitatively different relationships can hold between two sets: identical sets, in which all A are B and all B are A; mutually exclusive sets, in which no A are B; subsets, in which all A are B, but only some B are A; overlapping sets, in which some A are B and some B are A, but some A are not B and some B are not A; and independent sets. Independent sets represent a special case of overlapping sets in which the occurrence of A (or B) provides no additional information about the occurrence of B (or A). That is to say, A and B are orthogonal to one another: P(B | A) = P(B) and P(A | B) = P(A).

When estimating P(A), P(B), P(B | A), and P(A | B) using integers on a 0%–100% scale, there are over 100 million permutations. The formulae that we outline below reduce this large problem space into five meaningful response patterns, with the remaining responses classified as inconsistent with Bayes’s theorem. Based on the following definitions, the spreadsheet classifies a constellation of responses as matching a set relationship with a 1, or classifies with a 0 otherwise. The definitions produce a mutually exclusive and exhaustive classification system, with one rare exception that will be discussed further below. A constellation of estimates is semantically coherent with respect to identical sets if P(A) = P(B) and P(B | A) = P(A | B) = 1.00 (see Fig. 1). A constellation of estimates is semantically coherent with respect to mutually exclusive sets if P(A) + P(B) ≤ 1.00, P(A) > 0, P(B) > 0, and P(B | A) = P(A | B) = 0. The additional constraints that P(A) > 0 and P(B) > 0 are imposed because it is impossible to interpret a constellation when all four estimates equal zero. A constellation of estimates is semantically coherent with respect to subsets (with A as a subset of B) if 0 < P(A) < P(B), P(B | A) = 1.00, and P(A | B) = P(A)/P(B) (see Fig. 2). A constellation of estimates is semantically coherent with respect to independent sets if P(A) = P(A | B) > 0 and P(B) = P(B | A) > 0 (see Fig. 3).
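The four definitions in this paragraph can be sketched in a few lines. This is an illustrative approximation of the logic, not the spreadsheet itself. It assumes estimates are entered as integer percentages (0 to 100) so that equality tests are exact, and it uses a hypothetical function name and a hypothetical default tolerance of .01 for the one comparison that involves division.

```python
def classify_basic(a, b, b_a, a_b, tol=0.01):
    """Classify integer-percent estimates of P(A), P(B), P(B|A), P(A|B).

    Covers only identical, mutually exclusive, subset (A within B), and
    independent sets; returns None when none of these definitions matches.
    """
    # Identical is tested first, which mirrors the default the article
    # describes for the one response that fits two definitions.
    if a == b and b_a == 100 and a_b == 100:
        return "identical"
    if a > 0 and b > 0 and a + b <= 100 and b_a == 0 and a_b == 0:
        return "mutually exclusive"
    # P(A|B) must equal P(A)/P(B); only this test needs a tolerance.
    if 0 < a < b and b_a == 100 and abs(a_b / 100 - a / b) <= tol:
        return "subset"
    if a == a_b > 0 and b == b_a > 0:
        return "independent"
    return None


# Size of the problem space for four integer estimates on a 0-100 scale.
print(101 ** 4)  # 104060401

print(classify_basic(50, 50, 100, 100))
print(classify_basic(60, 40, 0, 0))
print(classify_basic(20, 40, 100, 50))
print(classify_basic(30, 50, 50, 30))
```

Working in integer percentages is a design choice for the sketch: it avoids floating-point surprises in tests such as P(A) + P(B) ≤ 1.00.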

To determine whether a set of estimates is consistent with overlapping sets or is inconsistent, an intermediate calculation is used to make the formulae more tractable. The result of this calculation is called total overlapping sets. It is true when a constellation of estimates does not match identical, mutually exclusive, subset, or independent sets. A constellation of estimates is semantically coherent with respect to overlapping sets if P(A | B) can be inferred from the other three estimates—that is, P(A), P(B), and P(B | A)—using Bayes’s theorem: P(A | B) = [P(B | A) * P(A)]/P(B). It must also avoid committing what we term a minimum overlapping error (described in detail below; see Fig. 4). Finally, a constellation of estimates is considered to be inconsistent if it does not match any of the previously defined set relationships.
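The overlapping-sets test can be sketched as follows, assuming the caller has already ruled out identical, mutually exclusive, subset, and independent sets. The function name, the integer-percent inputs, and the default tolerance are assumptions made for illustration. The minimum overlapping constraint used here is the one the article states, P(B | A) * P(A) ≥ P(A) + P(B) – 1.00.

```python
def overlapping_coherent(a, b, b_a, a_b, tol=0.01):
    """Check integer-percent estimates against the overlapping-sets rule.

    a, b, b_a, a_b are P(A), P(B), P(B|A), P(A|B) on a 0-100 scale.
    Assumes the other four set relationships were already excluded.
    """
    if a == 0 or b == 0:
        return False  # assumption: zero marginals are handled separately

    # Bayes's theorem: P(A|B) = P(B|A) * P(A) / P(B), within tolerance.
    implied_a_b = (b_a * a) / (100 * b)
    bayes_ok = abs(a_b / 100 - implied_a_b) <= tol

    # Minimum overlap, kept in integer arithmetic so the comparison is exact:
    # P(B|A) * P(A) >= P(A) + P(B) - 1.00
    min_overlap_ok = b_a * a >= 100 * (a + b - 100)

    return bayes_ok and min_overlap_ok


print(overlapping_coherent(50, 40, 40, 50))
print(overlapping_coherent(80, 80, 10, 10))
```

The second call satisfies Bayes's theorem but fails the minimum overlapping constraint, so it is not counted as overlapping.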

Inconsistent sets account for approximately 97% of the entire problem space, and we have identified three particular errors that may provide some insight regarding the processes involved in probability estimation. A conversion error is committed when P(A | B) = P(B | A) and P(A) ≠ P(B). According to fuzzy trace theory (FTT; Reyna & Brainerd, 2008), a conversion error results from the simplification of the hierarchical set relationship for overlapping sets. Another error occurs when P(A) < P(B) but P(B | A) < P(A | B), when in fact the smaller denominator in P(B | A) implies a larger probability. According to FTT, this error occurs as a result of the complexity of the hierarchical set relationship present in overlapping sets. A minimum overlapping error, mentioned earlier, occurs when the conjunctive probability implied by a constellation of estimates does not satisfy the following constraint: P(B | A) * P(A) ≥ P(A) + P(B) – 1.00. Otherwise, the implied probability of the disjunction, P(A) + P(B) – P(A and B), would exceed 1.00. It is important to note that a constellation of estimates can be consistent with Bayes’s theorem and yet violate this constraint, as the following demonstrates: P(A) = .80, P(B) = .80, P(B | A) = .10, P(A | B) = .10, but .80 * .10 = .08 < .80 + .80 – 1.00 = .60.
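The three errors can be flagged with simple comparisons. The sketch below is illustrative only. It assumes integer-percent inputs, computes each flag independently of any set classification, and uses the label "reversal" for the second error as a placeholder, since the text does not name that error.

```python
def error_flags(a, b, b_a, a_b):
    """Flag the three errors for integer-percent P(A), P(B), P(B|A), P(A|B)."""
    return {
        # Conversion error: equal conditionals despite unequal marginals.
        "conversion": a_b == b_a and a != b,
        # Second error (placeholder name): P(A) < P(B) yet P(B|A) < P(A|B).
        "reversal": a < b and b_a < a_b,
        # Minimum overlapping error: P(B|A) * P(A) < P(A) + P(B) - 1.00,
        # written in integer arithmetic to keep the comparison exact.
        "min_overlap": b_a * a < 100 * (a + b - 100),
    }


# The demonstration from the text: Bayes-consistent, yet too little overlap.
print(error_flags(80, 80, 10, 10))
print(error_flags(30, 60, 40, 40))
print(error_flags(30, 60, 20, 50))
```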

Unlike the assessment of semantic coherence in joint probability estimation (Wolfe & Reyna, 2010b), conditional probability estimation poses a unique challenge, because dividing by zero is implied when either P(A) or P(B) is estimated to be zero. The conditional implied by such an estimate is undefined. One approach is to classify such responses as inconsistent. Alternatively, the approach we adopt is to classify some of these responses as identical or mutually exclusive sets, based on semantics and context. To illustrate using the classic Linda problem (Tversky & Kahneman, 1983), consider the following estimates, in which P(F) is the probability that Linda is a feminist and P(B) is the probability that Linda is a bank teller: P(F) = .90, P(B) = 0, P(F | B) = .40, and P(B | F) = 0. The last conditional can be easily interpreted, because P(B | F) = [P(F | B) * 0]/.90 = 0 for all values of P(F | B). However, the conditional P(F | B) requires one to assume the truth of a statement previously said to be impossible. We argue that judges may bypass this contradiction by assuming an alternative state of affairs in which Linda is in fact a bank teller and providing an estimate on that basis, in which case P(F | B) can take any value. We interpret such a pattern as consistent with mutually exclusive sets, because the two events cannot occur simultaneously. Formally, a constellation with P(B) = 0 is classified as mutually exclusive if 0 < P(A) ≤ 1.00, P(B | A) = 0, and 0 ≤ P(A | B) ≤ 1.00. A similar argument is proposed for identical sets in which P(A) = P(B) = 0 and P(B | A) = P(A | B) = 1.00. Even though P(A) = P(B) = 0, when A is assumed to be true, B must also be true, and vice versa. It should be noted that such responses are very rare in our experience (0.5% of responses).
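The zero-marginal rules can be sketched as a separate step that runs before any formula involving division. This is an assumption-laden illustration rather than the spreadsheet's logic. The function name is hypothetical, inputs are integer percentages, the mirrored case with P(A) = 0 is added by symmetry, and any zero-marginal pattern not covered by the rules is labeled inconsistent.

```python
def classify_zero_marginal(a, b, b_a, a_b):
    """Handle constellations in which P(A) or P(B) is zero.

    a, b, b_a, a_b are P(A), P(B), P(B|A), P(A|B) on a 0-100 scale.
    Returns None when neither marginal is zero.
    """
    if a == 0 and b == 0:
        # Identical sets: assuming either event implies the other.
        if b_a == 100 and a_b == 100:
            return "identical"
        return "inconsistent"  # assumption: no other reading is offered
    if b == 0:
        # Mutually exclusive: P(B|A) = 0, while P(A|B) may take any value.
        if b_a == 0 and 0 <= a_b <= 100:
            return "mutually exclusive"
        return "inconsistent"
    if a == 0:
        # Mirror image of the rule above (assumed by symmetry).
        if a_b == 0 and 0 <= b_a <= 100:
            return "mutually exclusive"
        return "inconsistent"
    return None


# Linda problem with A = feminist (F) and B = bank teller:
# P(F) = 90, P(B) = 0, P(B|F) = 0, P(F|B) = 40.
print(classify_zero_marginal(90, 0, 0, 40))
```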

Another issue resulting from division in conditional probability estimation is that of rounding error. Excel, for example, stores numbers with a precision of 15 significant digits, a level of precision far too stringent to be expected of human judges. To remedy this problem, the formulae for subsets and overlapping sets compare the absolute difference between an estimated conditional and the expected conditional to an adjustable tolerance parameter, located in a separate tab of the spreadsheet. We recommend performing a sensitivity analysis by testing several tolerance levels. In our research, using tolerances of ±.005, ±.01, and ±.05 produced qualitatively similar results.
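A sensitivity analysis of this kind can be sketched by rerunning one tolerance-dependent test at several tolerance levels. The responses below are hypothetical values invented for illustration, not data from our research, and the function names are assumptions. Only the subset test is shown.

```python
def subset_coherent(a, b, b_a, a_b, tol):
    """Subset test (A within B) for integer-percent estimates.

    The estimated P(A|B) is compared with P(A)/P(B) within tol.
    """
    return 0 < a < b and b_a == 100 and abs(a_b / 100 - a / b) <= tol


def count_subsets(responses, tol):
    """Number of responses classified as subsets at a given tolerance."""
    return sum(subset_coherent(*r, tol) for r in responses)


# Hypothetical responses: (P(A), P(B), P(B|A), P(A|B)) in percent.
responses = [
    (20, 40, 100, 50),  # exact: 20/40 = .50
    (20, 60, 100, 33),  # rounded: 20/60 = .333...
    (20, 60, 100, 30),  # off by about .033
    (25, 70, 100, 40),  # off by about .043
]

for tol in (0.005, 0.01, 0.05):
    print(tol, count_subsets(responses, tol))
```

Comparing the counts across tolerance levels shows how many classifications depend on the chosen level of precision.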

Our final issue concerns the rare case in which a response is consistent with two set relationships (less than 0.5% of responses in our experience). When P(A) = P(B) = P(B | A) = P(A | B) = 1.00, the response is consistent with both identical and independent sets. Resolving this problem requires an a priori classification decision in service of the goals of the researcher. By default, the supplemental spreadsheet classifies such responses as identical sets. As Wolfe and Reyna (2010b, p. 379) note, “automating the process of categorizing patterns of responses does not substitute for inspecting one’s data.”
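The double classification and its a priori resolution can be sketched as follows. The function names and the `prefer` parameter are hypothetical. The default of "identical" follows the spreadsheet default described above, and inputs are assumed to be integer percentages.

```python
def is_identical(a, b, b_a, a_b):
    """Identical sets: equal marginals and both conditionals at 100%."""
    return a == b and b_a == 100 and a_b == 100


def is_independent(a, b, b_a, a_b):
    """Independent sets: each conditional equals its own marginal."""
    return a == a_b > 0 and b == b_a > 0


def resolve(a, b, b_a, a_b, prefer="identical"):
    """Return the matching label, applying `prefer` when both tests pass."""
    tests = (("identical", is_identical), ("independent", is_independent))
    matches = [name for name, test in tests if test(a, b, b_a, a_b)]
    if len(matches) == 2:
        return prefer  # decision made a priori by the researcher
    return matches[0] if matches else None


print(resolve(100, 100, 100, 100))
print(resolve(100, 100, 100, 100, prefer="independent"))
print(resolve(30, 50, 50, 30))
```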

Discussion

With only a few caveats, the method presented above automates the evaluation of semantic coherence in conditional probability estimation in a quick and effective manner. In addition to reducing a large problem space into five meaningful patterns, the spreadsheet identifies three nonfallacious errors that may be of theoretical interest. Unlike joint probability estimation, conditional probability estimation poses a unique challenge, because division by zero is implied by the conditionals when either of the marginal probabilities is estimated to be zero. Two solutions for dealing with this issue were proposed: classifying such responses as inconsistent, or classifying them as identical or mutually exclusive sets if certain constraints are satisfied. We find both solutions defensible, so long as one is adopted a priori. Since conditional probability estimation is more prone to rounding error as a result of division, a tolerance parameter can be adjusted to a desired level of precision and used to perform a sensitivity analysis.

Much of the early work on conditional probability estimation addressed bold questions such as “Are people irrational?” and “Are people intuitive Bayesians?” Perhaps most current research focuses on questions that are more subtle and nuanced, but arguably no less important. For example, some researchers are interested in understanding the cognitive processes that produce conditional probability judgments, in studying individual differences in conditional probability estimation, and in determining the consequences of theoretically motivated cognitive interventions (Fisher & Wolfe, 2010; Wolfe & Fisher, 2010). Extending the work on semantic coherence from joint probabilities (Wolfe & Reyna, 2010a, 2010b) to conditional probabilities may have implications for the Bayesian approach to reasoning (Evans, 2007; Oaksford & Chater, 2007; Over, 2009). To illustrate, it has been argued that people judge the proposition “if A then B” as the conditional probability P(B | A) (Evans, 2007). Thus, from this standpoint, probability heuristics are used in many forms of verbal reasoning. Assessing semantic coherence within this framework may help distinguish among models of reasoning (Oaksford & Chater, 2007), as well as models of probabilistic inference (Reyna & Brainerd, 2008; Wolfe & Reyna, 2010b). Semantic coherence offers a benchmark or yardstick for assessing a constellation of conditional probability estimates. This is particularly helpful because, unlike joint probabilities, no single conditional probability estimate can be considered fallacious in and of itself. Yet, like fallacies, semantic coherence is a measure of internal consistency. It thus provides potential insights into nonrandom inconsistencies in thinking of the sort that have long been of interest to psychologists.

References

Barbey, A., & Sloman, S. (2007). Base-rate respect: From ecological rationality to dual processes. Behavioral and Brain Sciences, 30, 241–254.

Evans, J. St. B. T. (2007). Hypothetical thinking: Dual processes in reasoning and judgment. Hove: Psychology Press.

Fisher, C. R., & Wolfe, C. R. (2010, November). Semantic coherence in conditional probability estimates: Euler circles and frequencies as pedagogic interventions. Paper presented at the 31st Annual Conference of the Society for Judgment and Decision Making, St. Louis, MO.

Kahneman, D., & Tversky, A. (1973). On the psychology of prediction. Psychological Review, 80, 237–251.

Oaksford, M., & Chater, N. (2007). Bayesian rationality: The probabilistic approach to human reasoning. Oxford: Oxford University Press.

Over, D. E. (2009). New paradigm psychology of reasoning. Thinking and Reasoning, 15, 431–438.

Reyna, V. F., & Adam, M. B. (2003). Fuzzy-trace theory, risk communication, and product labeling in sexually transmitted diseases. Risk Analysis, 23, 325–342.

Reyna, V. F., & Brainerd, C. J. (2008). Numeracy, ratio bias, and denominator neglect in judgments of risk and probability. Learning and Individual Differences, 18, 89–107.

Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185, 1124–1131.

Tversky, A., & Kahneman, D. (1983). Extensional versus intuitive reasoning: The conjunction fallacy in probability judgment. Psychological Review, 90, 293–315.

Wolfe, C. R., & Fisher, C. R. (2010, November). Semantic coherence in conditional probability estimates: 2 × 2 tables as pedagogic interventions. Paper presented at the 51st Annual Meeting of the Psychonomic Society, St. Louis, MO.

Wolfe, C. R., & Reyna, V. F. (2010a). Assessing semantic coherence and logical fallacies in joint probability estimates. Behavior Research Methods, 42, 366–372.

Wolfe, C. R., & Reyna, V. F. (2010b). Semantic coherence and fallacies in estimating joint probabilities. Journal of Behavioral Decision Making, 23, 203–223. doi:10.1002/bdm.650

Yates, J. F., Lee, J. W., Shinotsuka, H., Patalano, A. L., & Sieck, W. R. (1998). Cross-cultural variations in probability judgment accuracy: Beyond general knowledge overconfidence? Organizational Behavior and Human Decision Processes, 74, 89–117.

Electronic supplementary material

Below is the link to the electronic supplementary material.

ESM 1

(XLSX 52.3 kb)

Cite this article

Fisher, C.R., Wolfe, C.R. Assessing semantic coherence in conditional probability estimates. Behav Res 43, 999–1002 (2011). https://doi.org/10.3758/s13428-011-0099-3
