Abstract

While the neural bases of the earliest stages of speech categorization have been widely explored using neural decoding methods, there is still a lack of consensus on questions as basic as how wordforms are represented and in what way this word-level representation influences downstream processing in the brain. Isolating and localizing the neural representations of wordform is challenging because spoken words activate a variety of representations (e.g., segmental, semantic, articulatory) in addition to form-based representations. We addressed these challenges through a novel integrated neural decoding and effective connectivity design using region of interest (ROI)-based, source-reconstructed magnetoencephalography/electroencephalography (MEG/EEG) data collected during a lexical decision task. To identify wordform representations, we trained classifiers on words and nonwords from different phonological neighborhoods and then tested the classifiers' ability to discriminate between untrained target words that overlapped phonologically with the trained items. Training with word neighbors supported significantly better decoding than training with nonword neighbors in the period immediately following target presentation. Decoding regions included mostly right hemisphere regions in the posterior temporal lobe implicated in phonetic and lexical representation. Additionally, neighbors that aligned with target word beginnings (critical for word recognition) supported decoding, but equivalent phonological overlap with word codas did not, suggesting lexical mediation. Effective connectivity analyses showed a rich pattern of interaction between ROIs that support decoding based on training with lexical neighbors, especially driven by right posterior middle temporal gyrus. Collectively, these results evidence functional representation of wordforms in temporal lobes isolated from phonemic or semantic representations.

Introduction

Neural decoding analyses of activity in the posterior temporal gyri have provided important insight into the nature of neural sensitivity to segmental features in spoken language (Bhaya-Grossman & Chang, 2022; Mesgarani et al., 2014; Oganian & Chang, 2019; Yi et al., 2019). In contrast, it is less clear how neural activity reflects any aspects of specifically lexical knowledge (Poeppel & Idsardi, 2022). The goal of the present study is to isolate and localize neural representations of wordform – activity indexing sound patterns that differentiate individual words and mediate the mappings between acoustic-phonetic input and word-specific semantic, syntactic, and articulatory information.

Wordforms play a central role in lexically mediated language-dependent processes ranging from phonetic interpretation, word learning, lexical segmentation, and perceptual learning to sentence processing and rehearsal processes in working memory (Bresnan, 2001; Ganong, 1980; Gathercole et al., 1999; Merriman et al., 1989; Norris et al., 2003). However, questions as basic as how time is represented (Gwilliams et al., 2022; Hannagan et al., 2013), whether wordform representations are episodic or idealized (Pierrehumbert, 2016), morphologically decomposable or holistic (Pelletier, 2012), or fully specified versus underspecified (Lahiri & Marslen-Wilson, 1991) remain topics of vigorous debate. It is even unclear whether words and nonwords have overlapping representation above the segmental level. Nonword processing is strongly affected by form similarity to known words (Bailey & Hahn, 2001; Frisch et al., 2000; Gathercole, 1995). This could be a byproduct of word recognition processes, or a partial function of distributed word-level representations that can also capture overlapping nonword form patterns. Understanding the basis of these similarity effects would clarify the interpretation of results that depend on word-nonword contrasts.

Many studies have examined sensitivity to lexical wordform properties, including cohort size (Gaskell & Marslen-Wilson, 2002; Kocagoncu et al., 2017; Marslen-Wilson & Welsh, 1978; McClelland & Elman, 1986; Zhuang et al., 2014), phonological neighborhood density (Landauer & Streeter, 1973; Luce & Large, 2001; Luce & Pisoni, 1998; Peramunage et al., 2011), and lexical competitor environment (Prabhakaran et al., 2006). These lexical and sublexical effects are considered to play a crucial role in understanding the functional architecture of spoken word recognition and phonotactic constraints that shape wordform representation (Albright, 2009; Hayes & Wilson, 2008; Magnuson et al., 2003; Samuel & Pitt, 2003). An even larger literature has explored BOLD imaging contrasts between words and nonwords (see reviews by Binder et al., 2009; Davis & Gaskell, 2009). Several models (Gow, 2012; Hickok & Poeppel, 2007) have synthesized this research, hypothesizing that the bilateral posterior middle temporal gyrus and perhaps the bilateral supramarginal gyrus mediate the mapping between acoustic-phonetic and higher-level representations. While this work coarsely localizes the likely site containing such mediating wordform representations, it does not isolate individual wordforms, which would be an important first step towards characterizing their representation.

Isolating wordforms poses significant challenges. The phonological patterning of wordforms is confounded with the patterning of the phonemes that make up words, making it difficult to discriminate between lexical and segmental representation. This problem is compounded by evidence for the influence of lexical factors on phoneme processing and representation in the brain (Gow et al., 2021; Gow et al., 2008; Gwilliams et al., 2022; Leonard et al., 2016; Myers, 2007). Moreover, auditory words may evoke activation of semantic, articulatory, syntactic, and episodic representations in addition to stored representations of phonological wordform. Finally, evidence that both spoken words and phonotactically legal nonwords briefly activate multiple lexical candidates (Tanenhaus et al., 1995; Zhuang et al., 2014; Zwitserlood, 1989) suggests the need to separate the activation of a target word from that of its temporarily coactivated lexical candidates with overlapping phonology.

Neural decoding techniques provide powerful means for investigating the information content of neural signals (Haynes & Rees, 2006; Kriegeskorte & Diedrichsen, 2019; Kriegeskorte & Kievit, 2013). Several studies have used decoding to reconstruct latent information from human brain activity and to understand the relationship between neural representations and cognitive content (Anderson et al., 2019; Choi et al., 2021; Naselaris et al., 2009). The opportunities afforded by these methods have been tempered in part by a tendency to equate decodability with encoding or representation. Decoding analyses reveal the availability of information in neural activity to support a given classification but do not discriminate between latent information and functional representation – which must both capture contrast and influence downstream processing. In addition, functional representation should be localized in a neurally plausible brain region (e.g., an area independently identified as a lexical interface or wordform area). Following Dennett (1987) and Kriegeskorte and Diedrichsen (2019), we suggest that the concept of representation only becomes useful to theory if it can be demonstrated that a representation is plausibly localized and influences downstream processing. Several strategies have been proposed for demonstrating that putative neural representations affect downstream processing. Grootswagers et al. (2018) probed the relationship between neural activity and behavioral response time in tasks hypothesized to depend on those representations. While promising, this approach may be challenging to implement because it requires the identification of a task in which responses directly tap a specific representation, and reaction times are not significantly affected by post-representation metalinguistic processing demands. Goddard and colleagues (Goddard et al., 2016) used Granger causation analyses to explore downstream neural dependencies. Gow et al. (2023) used a variant of Goddard et al.’s strategy in which the same signals that were used to decode contrasting patterns of syllable repetition in individual brain regions using support vector machine analyses were shown to drive moment-by-moment decoding accuracy in other successful decoding regions using a Kalman filter implementation of Granger causation. The implicated regions were posterior temporal areas independently implicated in the processing of spoken words. Gow et al. (2023) suggested that these results demonstrate the propagation of representations of syllable repetitions.

In this study, we used a transfer-learning neural decoding and integrated effective connectivity approach, based on region of interest (ROI)-oriented source-reconstructed MEG/EEG activity, to identify and examine wordform representations. We isolated wordform representations by training classifiers to discriminate between activity evoked by sets of words and nonwords with overlapping phonology, for example, pick and pid (neighbors of pig) versus tote and tobe (neighbors of toad). We then tested the classifiers' ability to discriminate between untrained hub words, for example, pig versus toad. We reasoned that classifiers trained on activity prior to word recognition or nonword rejection would rely on the segmental overlap between neighbors and hub words, but that classification after word recognition would reflect similarity in global activation patterns associated with the consolidated representation of lexical neighbors. Because neighbors were defined by form similarity rather than semantic similarity, we reasoned that transfer performance would specifically depend on overlap in form representation between hub words and their neighbors. The inclusion of phonologically overlapping nonwords further allowed us to discriminate between lexically mediated classification (which should only occur for words) and sublexical influences (common to both words and nonwords). By comparing the alignment of overlap between neighbors and hub words (initial CV vs. final VC), we were further able to examine the role of word onsets and offsets in decoding. Word onsets play an out-sized role in spoken word recognition (see, e.g., Marslen-Wilson & Tyler, 1980), and so evidence of a decoding advantage for training sets that share an initial CV would be consistent with the claim that decoding taps lexical representation rather than simple phonological overlap. 
Finally, to determine whether decoded patterns had causal influences on downstream processing, we used the implementation of Granger causality analysis developed in Gow et al. (2023) to determine whether within-ROI activation patterns that support decoding in one area influenced decoding performance in downstream processing areas.

Methods

Participants

Twenty subjects (14 female) between the ages of 21 and 43 years (mean 29.5, SD = 7.1 years) participated. All were native speakers of American English and had no auditory, motor, or uncorrected visual impairments that could interfere with the task. Human participation was approved by the Human Subjects Review Board, and all procedures were conducted in compliance with the principles for ethical research established by the Declaration of Helsinki.

Stimuli

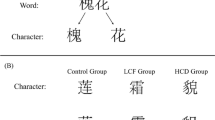

The stimuli consisted of spoken CVC words and nonwords. To limit the potential influence of gross low-level acoustic properties on classification, only stop consonants were used (/b,d,g,p,t,k/). Six words (pig, toad, cab, bike, dupe, gut) were chosen as hub words. These were each used to define a set of phonological neighbors. For each hub, we created three word and three nonword neighbors by changing only one phoneme, with the position of the changed phoneme counterbalanced across the three positions. Consonant changes involved either the voice or place feature. Changed vowels were selected to be in close proximity to the hub vowel in F1/F2 space, but this requirement was applied less strictly in order to generate word and nonword tokens. The full set of words and nonwords is included in the Online Supplementary Material (OSM; Table S1). The stimuli were recorded by two speakers, one male and one female. All final consonants were fully released, which provided a cue to item offset. After normalizing the duration to 350 ms and the intensity to 70 dB SPL, a Praat script was used to make additional versions of each token by scaling formant values and mean F0 to create eight discriminable virtual talkers with different vocal tract sizes (Darwin et al., 2003). This allowed us to both introduce spectral variability within the training set and increase the power of the design.

Procedure

Simultaneous MEG and EEG data were recorded while the subjects completed a lexical decision task using these stimuli. As the subjects listened to the recorded stimuli, the task was to determine whether or not the token they heard was a valid English word and signal their judgment via a left-handed keypress on a response pad. The subjects were asked to respond as accurately as possible. Stimuli were presented in 16 blocks, with two non-consecutive blocks assigned to each virtual talker. Within a block, each of the hub words was presented twice and each neighbor once, for a total of 48 trials. Trials were presented in a pseudo-randomized order, with the constraint that the second presentation of a hub word could not directly follow the first presentation. Stimulus presentation was controlled via PsychToolbox for Matlab (Kleiner et al., 2007).
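The block structure described above can be sketched in a few lines of Python. This is a minimal illustration only, not the PsychToolbox code used in the study: the function name `make_block`, the placeholder neighbor labels, and the use of rejection sampling to enforce the ordering constraint are all assumptions made for the example.

```python
import random

HUBS = ["pig", "toad", "cab", "bike", "dupe", "gut"]

def make_block(hubs, neighbors, rng):
    """Return one pseudo-randomized block: each hub twice, each neighbor once,
    with no item presented on two consecutive trials."""
    trials = list(hubs) * 2 + list(neighbors)
    while True:
        rng.shuffle(trials)
        # Only hubs occur twice, so rejecting any adjacent repeat enforces the
        # constraint that a hub's second presentation cannot follow its first.
        if all(a != b for a, b in zip(trials, trials[1:])):
            return list(trials)

# Hypothetical placeholder labels (3 word + 3 nonword neighbors per hub);
# the actual items are listed in Table S1 (OSM).
neighbors = [f"{hub}_n{i}" for hub in HUBS for i in range(1, 7)]
block = make_block(HUBS, neighbors, random.Random(0))
print(len(block))  # 12 hub trials + 36 neighbor trials
```

Repeating this for two non-consecutive blocks per virtual talker would give the 16 blocks of the design.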

The MEG/EEG data were collected using a Vectorview system (MEGIN, Finland) with 306 MEG channels, a 70-channel EEG cap, and vertical and horizontal electrooculograms. The EEG data were referenced to a nose electrode during the recording. All data were low-pass filtered at 330 Hz and sampled at 1,000 Hz. Prior to the testing, the locations of anatomical landmarks (nasion, and left and right preauricular points), four head-position indicator (HPI) coils, the EEG electrodes, and over 100 additional surface points on the scalp were digitized using a FASTRAK 3D digitizer (Polhemus, Colchester, VT). The head position with respect to the MEG sensor array was measured at the start of each block via the HPI coils and was tracked continuously during task performance. In a separate session, T1-weighted structural MRIs were collected from each subject on a 3T Siemens TIM Trio scanner using an MPRAGE sequence.

Behavioral analysis

Behavioral accuracy was analyzed using the lme4 (Bates & Bolker, 2012) and lmerTest (Kuznetsova et al., 2017) packages in R (R Core Team, 2023) to perform a logistic mixed-effects analysis of the relationship between accuracy and lexical class (two levels: Words vs. Nonwords). We ran the full model with word condition as the reference level. Lexical class was treated as a fixed effect. We used random intercepts and slopes for lexical class by participants and random intercepts for lexical class by items. We reported the model estimation of the change in accuracy rate (in log odds) from the reference category for each fixed effect (b), standard error of the estimate (SE), Wald z test statistic (z), and the associated p values.

MEG/EEG preprocessing and source reconstruction

MEG/EEG data were processed offline using MNE-C (Gramfort et al., 2014) via the Granger Processing Stream software (Gow & Caplan, 2012). Eyeblinks were identified manually, and a set of signal space projectors corresponding to eyeblinks and empty room noise were removed from the MEG data. Epochs from -100 to 1,000 ms time-locked to the onset of the auditory stimuli were extracted after low-pass filtering the data at 50 Hz. Epochs with high magnetometer (> 100 pT) or gradiometer (> 300 pT/cm) values were rejected. The remaining epochs were averaged across all trials with a correct response for each subject.

All decoding and effective connectivity analyses were based on MEG/EEG source estimates for a set of ROIs. Source estimation enables the interpretation of the results in terms of brain regions, and also allows connectivity analyses between regions, reducing confounds due to the spatial spread of signals over several MEG and EEG sensors (Schoffelen & Gross, 2009). Minimum Norm Estimates (MNEs) were calculated for each individual subject to reconstruct event-related electrical activity in the brain (Hämäläinen & Ilmoniemi, 1994). The MEG/EEG source space consisted of ~10,000 current dipoles located on the cortical surfaces reconstructed from the structural MRIs using Freesurfer (http://surfer.nmr.mgh.harvard.edu/). For the MEG/EEG forward model, a three-compartment (Leahy et al., 1998) boundary element model was used, with the skull and scalp boundaries obtained from the MRIs. The MRI and MEG/EEG data were co-registered using information from the digitizer and the HPI coils. The MNE inverse operator was constructed with free source orientation for the dipoles. Source estimates were obtained by multiplying the MEG/EEG sensor data with the inverse operator. The source estimates for each individual subject were then brought into a common space obtained by spherical morphing of the MRI data using Freesurfer (Fischl et al., 1999) and averaged to create the group average source reconstruction that was used to perform ROI generation. For the ROI source waveforms used in the decoding and effective connectivity analyses, we calculated noise-normalized MNE time courses for each ROI using dynamic statistical parametric mapping (dSPM).

ROIs were generated from the grand average evoked response using procedures previously described by Gow and Caplan (2012) designed to identify ROIs that meet the assumptions of Granger Causality analysis. Briefly, a set of potential centroid locations was generated consisting of the source space dipoles on the cortical surfaces with the highest activation in the time window from 100 to 500 ms after stimulus onset. From those centroids, neighboring dipoles were included into a growing ROI and distant dipoles were excluded from the final set of ROIs based on metrics of similarity, redundancy, and spatial weight. Because our decoding analyses rely on subdividing ROIs, it is beneficial to produce larger ROIs; we thus loosened our empirically determined similarity and redundancy constraints relative to previous studies (Gow & Nied, 2014; see OSM Fig. 1 for an example ROI set created using previous parameters). This adjustment may increase the rate of type II errors through the inclusion of otherwise redundant signals but would not increase the likelihood of false-positive results in Granger analyses. This process resulted in a total of 39 ROIs, which were then transformed to each individual subject's source space.

For decoding analyses, individual ROIs were split into eight approximately equal-sized subdivisions. dSPM source time courses were calculated for each subdivision by averaging the source time courses of all the source dipoles within the subdivision. The mean value over the 100-ms pre-stimulus baseline period was subtracted, and the data were vector normalized across subdivisions for each timepoint in each epoch.
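The baseline correction and vector normalization step can be illustrated with a short NumPy sketch. It assumes an array layout of (epochs, subdivisions, time points) with the baseline samples first, and it interprets "vector normalized" as scaling the eight-element subdivision vector to unit Euclidean length at each time point; both the layout and the choice of norm are assumptions for illustration, as is the function name.

```python
import numpy as np

def baseline_and_normalize(epochs, n_baseline):
    """Baseline-correct and unit-normalize subdivision time courses.

    epochs: array of shape (n_epochs, n_subdivisions, n_times) whose first
    n_baseline samples are the pre-stimulus period (assumed layout).
    """
    epochs = np.asarray(epochs, dtype=float)
    # Subtract each subdivision's mean over the pre-stimulus baseline.
    baseline = epochs[:, :, :n_baseline].mean(axis=2, keepdims=True)
    centered = epochs - baseline
    # Scale the vector of subdivisions to unit length at every time point.
    norms = np.linalg.norm(centered, axis=1, keepdims=True)
    norms[norms == 0] = 1.0  # guard against division by zero
    return centered / norms

# Simulated data: 4 epochs, 8 subdivisions, 1,100 samples (100 baseline).
rng = np.random.default_rng(0)
fake_epochs = rng.normal(size=(4, 8, 1100))
normalized = baseline_and_normalize(fake_epochs, n_baseline=100)
```

After this step only the pattern across subdivisions, and not the overall ROI amplitude, is available to a classifier.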

Source-space analyses of MEG and EEG significantly strengthen the interpretation of connectivity measures, compared with sensor-space analyses (Haufe et al., 2013). However, there will inevitably be some crosstalk between the estimated ROI source waveforms (Liu et al., 1998). Crosstalk can potentially lead to false-positive effects when a true effect in one ROI is falsely seen also in another ROI, or false-negative effects when the sensitivity to a true effect in one ROI is diminished due to the influence of crosstalk signal from another ROI without the effect. To minimize crosstalk effects, several steps were undertaken. First, we recorded simultaneous MEG and EEG, which can provide better spatial resolution than either method by itself (Sharon et al., 2007). Second, our data-driven algorithm for determining the ROIs was designed to maximize dissimilarity between the ROI source waveforms (Gow & Caplan, 2012). Third, effective connectivity analyses are based on prediction of future time points and thus less sensitive to the strictly spatial effects of crosstalk than zero-lag correlation-based connectivity measures (Nolte et al., 2004). Our previous studies of Granger causality among a relatively large number of ROIs (up to 68) have provided consistent results on speech and language-related processing (Avcu et al., 2023; Gow et al., 2008, 2023). Also, notably Michalareas et al. (2016) successfully analyzed effective connectivity among 26 human visual cortical areas using 275-channel MEG.

Neural decoding

Neural decoding analyses with a transfer learning design were conducted to evaluate phonological neighborhood representations using support vector machine (SVM) classifiers (Beach et al., 2021). The SVM classifiers were trained to discriminate between neighborhoods using only trials in which the neighbors were presented and were then tested on their ability to discriminate between the corresponding hub words. Pairwise classification was performed at each time point within the epoch for all neighborhood pairs.
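The logic of the transfer design (train on neighbors, test on untrained hubs) can be sketched on simulated data. To keep the example dependency-free, a nearest-centroid classifier stands in for the SVM used in the study; the activation patterns, noise level, trial counts, and function names are all hypothetical.

```python
import numpy as np

def fit_centroids(X, y):
    """Mean feature vector for each class label."""
    labels = np.unique(y)
    return labels, np.stack([X[y == lab].mean(axis=0) for lab in labels])

def predict(labels, centroids, X):
    """Assign each row of X to the class with the nearest centroid."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return labels[dists.argmin(axis=1)]

def transfer_accuracy(X_train, y_train, X_test, y_test):
    """Train on one item set (neighbors), test on another (hubs)."""
    labels, centroids = fit_centroids(X_train, y_train)
    return float((predict(labels, centroids, X_test) == y_test).mean())

# Hypothetical 8-subdivision patterns shared by each neighborhood and its hub.
pattern = {"pig": np.array([1.0, 0, 1, 0, 1, 0, 1, 0]),
           "toad": np.array([0.0, 1, 0, 1, 0, 1, 0, 1])}
rng = np.random.default_rng(1)

def simulate(hub, n_trials, noise=0.3):
    return pattern[hub] + noise * rng.normal(size=(n_trials, 8))

# Training data: neighbor trials only. Test data: hub trials only.
X_train = np.vstack([simulate("pig", 40), simulate("toad", 40)])
y_train = np.array(["pig"] * 40 + ["toad"] * 40)
X_test = np.vstack([simulate("pig", 20), simulate("toad", 20)])
y_test = np.array(["pig"] * 20 + ["toad"] * 20)
acc = transfer_accuracy(X_train, y_train, X_test, y_test)
```

In the actual analysis this procedure would be repeated at every time point and for every pair of neighborhoods.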

To increase the robustness of the ROI source waveforms as input to the SVM, bins of eight trials were randomly selected and averaged within each condition. The random bin assignment was repeated 100 times for each condition. Single timepoints from these bin averages were used as the input to the SVM, and the average classification accuracy across the 100 bin assignments was used as the measure of decoding accuracy for each time point. Performance of these classifiers was then averaged across all pairwise neighborhood contrasts. For statistical analysis, the decoding accuracy data were submitted to cluster-based permutation tests at the group level. Clusters were defined as consecutive time points of above chance performance (alpha = 0.05; chance performance = 50% for pairwise classification). The observed accuracy data for each subject were then randomly flipped with respect to chance accuracy (Beach et al., 2021) across 1,000 permutations, and the largest cluster within each permutation was taken to form a distribution of cluster sizes. The cluster statistic was Bonferroni-adjusted (for 39 ROIs) with p < 0.00128 needed to reach significance with a corrected alpha of 0.05.
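A sign-flip cluster permutation test of this kind can be sketched as follows. This is an illustrative reading of the description, not the authors' code: the one-sided critical value `t_crit = 1.729` assumes 20 subjects (19 degrees of freedom, alpha = 0.05), cluster size is taken to be the number of consecutive supra-threshold time points, and the `(1 + count) / (1 + n_perm)` p-value convention is an assumption.

```python
import numpy as np

def t_values(x):
    """One-sample t statistic against zero at each time point (rows = subjects)."""
    n = x.shape[0]
    return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(n))

def cluster_lengths(mask):
    """Lengths of runs of consecutive True values in a 1-D boolean sequence."""
    lengths, run = [], 0
    for flag in mask:
        if flag:
            run += 1
        elif run:
            lengths.append(run)
            run = 0
    if run:
        lengths.append(run)
    return lengths

def max_cluster_pvalue(acc, chance=0.5, t_crit=1.729, n_perm=1000, seed=0):
    """Largest observed cluster and its permutation p-value."""
    rng = np.random.default_rng(seed)
    centered = acc - chance
    observed = max(cluster_lengths(t_values(centered) > t_crit), default=0)
    null = np.empty(n_perm)
    for i in range(n_perm):
        # Flip each subject's whole time course around chance with p = 0.5.
        signs = rng.choice([-1.0, 1.0], size=(centered.shape[0], 1))
        flipped = t_values(centered * signs) > t_crit
        null[i] = max(cluster_lengths(flipped), default=0)
    p = (1 + np.sum(null >= observed)) / (1 + n_perm)
    return observed, p

# Simulated accuracies: 20 subjects, 200 time points, an effect at 80-119.
rng = np.random.default_rng(42)
acc = 0.5 + 0.05 * rng.normal(size=(20, 200))
acc[:, 80:120] += 0.06
observed, p = max_cluster_pvalue(acc)
bonferroni_alpha = 0.05 / 39  # threshold per ROI, approximately 0.00128
```

Under this convention the smallest attainable p-value with 1,000 permutations is 1/1,001, which lies just below the Bonferroni-adjusted threshold.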

Our primary decoding analyses contrasted the transfer discrimination of pairs of hub words based on training by exclusively word or nonword neighbors (Cheng et al., 2014). The purpose was to determine the degree to which decoding relied on stored representations of known words as opposed to sublexical overlap present in both neighboring words and nonwords. In addition, to further isolate the effects of sublexical overlap, we compared the decoding of hub word contrasts as a function of positional overlap between the neighbors and hub words. All nonwords in the study partially overlapped with real words, and any decoding based on nonword training could be due to this overlap. Given evidence for the relative importance of onsets in spoken word recognition (Marslen-Wilson & Tyler, 1980), we hypothesized that overlap between the initial CV- of words and nonwords in the training set and hub words (e.g., the neighbors pick, pid, and the hub word pig) would produce better decoding than overlap involving the final -VC of training words (e.g., big, tig, and pig). To compare between conditions, a second set of cluster permutation tests was performed with an additional constraint: timepoints had to both be above chance (uncorrected alpha < 0.05) and different than the comparator condition (uncorrected alpha < 0.05) to be included in clusters. Permutations randomly flipped the relationship of observed data with respect to both the chance accuracy and zero difference between conditions null values. Clusters with Bonferroni-adjusted (for 39 ROIs) p < 0.00128 were counted as significantly different between conditions (alpha = 0.05).

Effective connectivity analyses

Effective connectivity analyses follow our previously published Granger causality analysis approach (Gow & Caplan, 2012), with modifications to integrate the results of the decoding analysis as described in Gow et al. (2023). The goal of the modified Granger causality analyses was to identify whether the activation time courses in ROIs that supported decoding could predict (or "Granger cause") the SVM classifier accuracy time courses in other ROIs. The integration involved substituting the single source waveform activation time course for each ROI (normally used in our Granger analyses) with data relevant to the decoding analyses. When evaluating the influence of a given ROI on others, the single activation time course for that ROI was substituted by the eight subdivision dSPM time courses used in the decoding analysis. When evaluating how other ROIs influenced a given ROI, the single activation time course for that ROI was substituted by the within-subject neural decoding accuracy time course averaged across all pairwise conditions from the decoding analysis based on word neighbors as the training set. All 39 ROIs were included in the predictive models, but only relationships between ROIs that showed significant transfer decoding for words were analyzed.
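The core idea of predicting one ROI's decoding accuracy from another ROI's subdivision activity can be illustrated with a simplified Granger index. Note the substitution: the study used a Kalman filter implementation, whereas this sketch fits stationary lagged regressions by ordinary least squares. The model order, simulated signals, and function names are assumptions for illustration.

```python
import numpy as np

def lagged(series, order):
    """Columns holding lags 1..order of each signal in (n_signals, n_times)."""
    series = np.atleast_2d(series)
    n_times = series.shape[1]
    cols = [series[:, order - lag:n_times - lag].T for lag in range(1, order + 1)]
    return np.hstack(cols)

def residual_variance(design, target):
    """Residual variance of a least-squares fit with an intercept."""
    design = np.column_stack([np.ones(len(target)), design])
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return np.var(target - design @ coef)

def granger_index(source, target, order=5):
    """ln(restricted / full residual variance) for source -> target."""
    y = target[order:]
    own_past = lagged(target, order)                       # restricted model
    full = np.hstack([own_past, lagged(source, order)])    # adds source past
    return float(np.log(residual_variance(own_past, y) /
                        residual_variance(full, y)))

# Simulated example: eight subdivision time courses in a source ROI drive a
# decoding-accuracy time course in a target ROI at a one-sample lag.
rng = np.random.default_rng(0)
n_times = 500
source = rng.normal(size=(8, n_times))
target = np.zeros(n_times)
for t in range(1, n_times):
    target[t] = 0.5 * target[t - 1] + 0.8 * source[0, t - 1] + 0.25 * rng.normal()

forward = granger_index(source, target)      # subdivisions -> accuracy
reverse = granger_index(target, source[0])   # accuracy -> one subdivision
```

The index is large when the source's past improves prediction of the target beyond the target's own past, and close to zero otherwise.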

Representations, formally defined, require that the activity not only support decoding but also be related to a functional outcome (Dennett, 1987; Kriegeskorte & Diedrichsen, 2019). Our analysis thus focused on influences between regions that supported word-based transfer decoding to see how these activations reinforce each other when words were presented. Specifically, we examined the ability of the estimated activation time courses in a decoding ROI to predict the decoding accuracy in the other decoding ROIs. We set the window of analysis to 250–550 ms, following latencies used for the lexically conditioned N400 ERP (Kutas & Federmeier, 2011). The strength of Granger causality, quantified as the number of time points with a significant Granger causality index, was compared in the single word condition with that of a control pair of ROIs (Milde et al., 2010), L-cMFG1 and R-LOC1, which neither exhibited transfer decoding in our analyses nor have a plausible processing relationship in this paradigm, using binomial tests.
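One way to read this comparison is as a one-sided binomial test in which the proportion of significant time points in the control pair sets the null rate. The sketch below follows that reading; the counts, the 301-sample window (250 to 550 ms at 1 ms resolution, inclusive), and the function name are hypothetical.

```python
from math import comb

def binomial_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

n_timepoints = 301        # assumed number of samples in the analysis window
control_significant = 30  # hypothetical count for the control ROI pair
test_significant = 90     # hypothetical count for a pair of decoding ROIs

# Null rate taken from the control pair; test whether the decoding pair
# has more significant time points than that rate would predict.
null_rate = control_significant / n_timepoints
p_value = binomial_tail(test_significant, n_timepoints, null_rate)
```

A small `p_value` would indicate that the directed influence between the decoding ROIs is significant at more time points than expected from the control pair.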

Results

Behavioral results

Overall mean behavioral accuracy on the lexical decision was 90% (SD = 2.8%). Accuracy was higher for words (92%; SD = 4.4%) than for nonwords (86%; SD = 6.3%). This difference was statistically significant (b = 1.18, SE = 0.46, z = 2.58, p = .01).
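As a reading aid, the relationship between the reported estimate, its standard error, and the Wald statistic can be checked with a few lines of standard-library Python. The function name is an assumption, and because the inputs are the rounded values reported above, the recomputed z (about 2.57) differs slightly from the reported 2.58, which is within the range expected from rounding.

```python
from math import erfc, exp, sqrt

def wald_test(b, se):
    """Wald z statistic and two-sided p-value under a standard normal."""
    z = b / se
    p = erfc(abs(z) / sqrt(2))
    return z, p

z, p = wald_test(1.18, 0.46)  # rounded values from the text
odds_ratio = exp(1.18)        # log odds converted to an odds ratio
print(round(z, 2), round(p, 3), round(odds_ratio, 2))
```

The estimate of 1.18 log odds corresponds to odds of a correct response roughly three times higher for one lexical class than the other.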

Regions of interest

A set of 39 ROIs associated with overall task-related activation was identified through our process of grouping contiguous cortical source locations associated with activation peaks that share similar temporal activation patterns (Fig. 1, Table S2 (OSM)). These ROIs were used for neural decoding and effective connectivity analyses.

Fig. 1 Regions of interest (ROIs) visualized over an inflated averaged cortical surface. Lateral (top) and medial (bottom) views of the left and right hemisphere are shown. ROI names are generated based on the location of the centroid vertex for each ROI in the Desikan-Killiany atlas parcellation of the average subject brain. For further description of the ROIs, see Table S2 (Online Supplementary Material)

Neural decoding: Lexicality effects

Figure 2 shows results of the transfer decoding analysis in which SVM classifiers were trained using exclusively either word or nonword neighbors and then tested on their ability to classify the corresponding hub words. Within the entire 1,100-ms epoch window, significant clusters of decoding time points were found in five of the 39 ROIs (Fig. 2A): L-STG1, R-STG2, R-MTG2, R-ITG3, and R-postCG5. With the exception of R-postCG5, which shows a brief earlier period of decoding, all decoding begins after the point (~ 225 ms) at which Gwilliams et al. (2022) identify the earliest decoding of the third phoneme of words in connected speech. All five of these produced successful transfer decoding of hub words when trained with word neighbors. When trained with nonword neighbors, only L-STG1 produced successful transfer decoding and only prior to stimulus offset. This timing falls after the potential decodability of the phoneme that makes training items nonwords, but possibly before all phonemic information is integrated in lexical representations. Overall, training with word neighbors resulted in better decoding across more ROIs than training with nonword neighbors.

Fig. 2 Bonferroni-corrected significant (corrected alpha = 0.05) transfer decoding clusters after training with word or nonword neighbors. (A) Clusters of significant above-chance transfer decoding accuracy for words-only (blue solid) or nonwords-only (red dotted) conditions. (B) Clusters of transfer decoding accuracy that is above chance and differs significantly between training conditions. Better transfer decoding for the words-only condition is indicated by blue solid bars; no clusters of better transfer decoding for the nonwords-only condition were observed. The vertical dotted line in both panels indicates the offset of the auditory stimuli

Differences between conditions were observed in a total of six ROIs (Fig. 2B). All significant differences showed better decoding when trained with words than with nonwords. Prior to stimulus offset, two ROIs showed a difference between training conditions: L-ParaHip1 and R-postCG2. Four ROIs showed a difference during the post-offset period between 400 and 600 ms: L-MTG1, R-STG1, R-STG2, and R-ITG3. These differences are consistent with a lexically mediated effect.

Neural decoding: Positional effects

We also examined the role of onset overlap between neighbors in the training set and hub word decoding. We hypothesized that neighbors that share a common CV onset with hub words would support more accurate hub word decoding than neighbors that share the same amount of overlap at their VC offsets. Within the entire epoch window, significant clusters of decoding time points were found in six of the 39 ROIs (Fig. S3A (OSM)) when classifiers were trained by neighbors that shared initial CV- sequences: L-STG1, R-STG1, R-STG2, R-STG3, R-postCG5 and R-preCG2. No ROIs produced successful transfer decoding when trained only by neighbors that shared final -VC sequences. Significant differences were found prior to stimulus offset for 13 ROIs (Fig. S3B (OSM)), with better decoding observed for initial CV- overlap in L-STG1, L-MTG2, L-ParsOrb1, R-STG1, R-STG2, R-STG3, R-MTG2, R-MTG4, R-ITG1, R-postCG4, R-postCG5, R-preCG2, and R-ParsOrb1. L-STG1 also supported better decoding for final -VC overlap over initial CV- overlap, but this was late in the epoch after the period typically associated with automatic spoken word recognition (Kutas & Federmeier, 2011). As predicted, we found better decoding was achieved using training neighbors with initial than with final overlap. Across both analyses, decoding supported by initial CV overlap generally occurred before stimulus offset. We believe this is due to the inclusion of nonwords in the training sets that failed to support lexical representation after they became inconsistent with lexical candidates.

Effective connectivity analyses

To determine whether local patterns of neural activity influence downstream neural processing, we examined whether the event-related neural activity in any one of our decoding ROIs significantly influenced the decoding accuracy in the others within the 250- to 550-ms window associated with lexical processing indexed by the N400 component (Kutas & Federmeier, 2011). We focused on interactions among the five ROIs (L-STG1, R-STG2, R-MTG2, R-ITG3, and R-postCG5) that successfully discriminated hub words after training with real word neighbors of those hubs. FDR-corrected tests revealed 13 significant interactions (Fig. 3). These included reciprocal connections between R-MTG2 and L-STG1, R-STG2 and R-postCG5, as well as between L-STG1 and R-ITG3. We hypothesize that reciprocal relationships reflect a resonance dynamic that develops parity between representations, for example aligning wordform representations hypothesized to occur in posterior middle temporal gyrus/inferior temporal gyrus (MTG/ITG) (Gow, 2012; Hickok & Poeppel, 2007) with lower level segmental representations associated with superior temporal gyrus (STG) (Hickok & Poeppel, 2007; Mesgarani et al., 2014).
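The text does not specify which FDR procedure was applied. As an illustration only, the sketch below implements the widely used Benjamini-Hochberg step-up procedure, which could be applied to the p-values for the 20 directed pairs among five ROIs; the function name and the example p-values are hypothetical.

```python
def benjamini_hochberg(p_values, alpha=0.05):
    """Return booleans marking which p-values survive BH FDR control."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        # Step-up rule: keep the largest rank whose p-value is under its bound.
        if p_values[idx] <= alpha * rank / m:
            cutoff_rank = rank
    keep = [False] * m
    for idx in order[:cutoff_rank]:
        keep[idx] = True
    return keep

# Hypothetical p-values for ten directed ROI pairs.
example = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
significant = benjamini_hochberg(example)
print(sum(significant))
```

Because the rule is step-up, a p-value can survive even when a smaller one fails its own bound, provided some larger-ranked p-value passes.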

Effective connectivity analysis of transfer decoding regions of interest (ROIs). Effective connectivity between the five ROIs that supported reliable transfer decoding of hub words after training with word neighbors in the interval of 250–550 ms after the onset of the word. Lighter arrows indicate significant one-way directed Granger causation between ROIs. Darker green arrows indicate significant reciprocal connectivity. Significance is based on FDR-corrected binomial testing with an alpha of 0.05.
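The logic of FDR-corrected binomial testing over directed connections can be sketched as follows. This is an illustration under stated assumptions, not the GPS implementation. It assumes that each directed pair is summarized by a count of time points showing significant Granger influence out of `n` time points, that this count is tested against an assumed chance rate `p0`, and that the Benjamini-Hochberg procedure is the FDR method. The ROI labels and counts are hypothetical.

```python
from math import comb


def binom_sf(k, n, p):
    """Upper-tail probability P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def benjamini_hochberg(pvals, alpha=0.05):
    """Return one boolean per p-value: True where the null is rejected."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if pvals[idx] <= alpha * rank / m:
            max_rank = rank  # largest rank that passes its threshold
    reject = [False] * m
    for rank, idx in enumerate(order, start=1):
        reject[idx] = rank <= max_rank
    return reject


def classify_connections(counts, n, p0, alpha=0.05):
    """Split significant directed pairs into one-way and reciprocal links."""
    pairs = list(counts)
    pvals = [binom_sf(counts[pair], n, p0) for pair in pairs]
    keep = benjamini_hochberg(pvals, alpha)
    sig = {pair for pair, ok in zip(pairs, keep) if ok}
    reciprocal = {frozenset(pair) for pair in sig if (pair[1], pair[0]) in sig}
    one_way = {pair for pair in sig if (pair[1], pair[0]) not in sig}
    return one_way, reciprocal


# Hypothetical counts of significant time points (out of n = 60) per direction
counts = {("A", "B"): 30, ("B", "A"): 28, ("A", "C"): 25, ("C", "A"): 5}
one_way, reciprocal = classify_connections(counts, n=60, p0=0.05)
print("one-way:", one_way)
print("reciprocal:", reciprocal)
```

In this toy example, the A-B link is significant in both directions and would be drawn as a reciprocal (darker) arrow, whereas A to C is significant in one direction only and would be drawn as a one-way (lighter) arrow.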

Discussion

We undertook this study to isolate wordform representations and examine their neural basis. Wordforms were isolated from segmental overlap in time and from semantic overlap in feature space using phonological neighborhoods. We found significant decoding after training with either word or nonword neighbors prior to stimulus offset, consistent with incremental acoustic-phonetic processing before the presentation of the stimulus was complete. Indeed, when training was based on phonological overlap of the initial consonant, the same ROIs were able to decode, suggesting phonological overlap alone was sufficient to produce decoding in the word and/or nonword conditions early in the epoch. In contrast, post-stimulus offset decoding occurred mainly after training with word neighbors, suggesting lexical sensitivity in the wordform representation. This post-offset regime aligned with the well-characterized event-related potential evidence of lexical processing, including the N400 (Kutas & Federmeier, 2011). Additionally, phonological overlap alone did not produce transfer decoding in the immediate post-offset period, strengthening the case for lexical sensitivity. The lexically sensitive decoding occurred in a bilateral network of mostly temporal lobe regions. The effective connectivity analysis showed that the word-evoked activity in these regions preferentially influenced decoding accuracy in other decoding regions, with one region in right posterior middle temporal gyrus, R-MTG2, showing direct reciprocal influences on all but one of its fellow decoding regions (R-ITG3), and indirect influences on that area (mediated by interactions with R-STG2). Independent evidence that the bilateral posterior middle temporal gyrus is involved in lexical processing (see reviews by Gow, 2012, and Hickok & Poeppel, 2007) attests to the plausibility of this region as a site for wordform representation. To sum up, our results revealed a bilateral network of temporal lobe areas that were sensitive to both the phonology and lexicality of word-like stimuli and also affected downstream processing.

We achieved these results despite implementing a difficult decoding design. Rather than a leave-n-out cross-validation approach common to many decoding studies (Guggenmos et al., 2018; Hastie et al., 2009), we tested the classifiers in a transfer learning design with stimuli from multiple talkers. The SVMs were trained on one set of words or nonwords and tested on an untrained set of phonologically similar words, based on the assumption that phonologically similar words have overlapping patterns of distributed neural representation. Additionally, our ROI-based approach restricted the signals that the SVM could rely upon to classify epochs. We made these design choices to address qualities necessary to establish a representation. As formally defined by Dennett (1987) and Kriegeskorte and Diedrichsen (2019), for a signal to be a representation it must index the stimulus feature, affect downstream processing, and have a plausible localization. Our integrated approach allowed us to evaluate all three criteria: the decoding analyses tested for feature sensitivity; the effective connectivity analyses examined downstream effects; and the ROI-based approach addressed the location.
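The contrast between cross-validation and transfer decoding can be made concrete with a small simulation. The sketch below is a toy illustration under stated assumptions. It replaces the SVM with a nearest-centroid classifier so that it runs with the standard library alone, and it simulates ROI activity as a hub-specific prototype pattern plus Gaussian noise, with neighbors expressing a weaker version of the pattern of the hub they overlap with. All names and parameter values are hypothetical.

```python
import random


def make_trials(prototype, n_trials, signal, noise_sd, rng):
    """Simulate trials as a scaled prototype pattern plus Gaussian noise."""
    return [
        [signal * p + rng.gauss(0.0, noise_sd) for p in prototype]
        for _ in range(n_trials)
    ]


def centroid(trials):
    """Mean activity pattern across trials."""
    n = len(trials)
    return [sum(column) / n for column in zip(*trials)]


def sq_dist(a, b):
    """Squared Euclidean distance between two patterns."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def transfer_accuracy(train_a, train_b, test_a, test_b):
    """Fit on neighbor trials only, then score on untrained hub-word trials."""
    c_a, c_b = centroid(train_a), centroid(train_b)
    correct = sum(sq_dist(t, c_a) < sq_dist(t, c_b) for t in test_a)
    correct += sum(sq_dist(t, c_b) < sq_dist(t, c_a) for t in test_b)
    return correct / (len(test_a) + len(test_b))


rng = random.Random(0)
n_features = 20
proto_a = [rng.choice([-1.0, 1.0]) for _ in range(n_features)]  # hub A pattern
proto_b = [-p for p in proto_a]                                 # hub B pattern

# Neighbors carry a weaker copy (signal=0.5) of their hub's pattern
neighbors_a = make_trials(proto_a, 100, signal=0.5, noise_sd=1.0, rng=rng)
neighbors_b = make_trials(proto_b, 100, signal=0.5, noise_sd=1.0, rng=rng)
# Hub words are never seen during training
hubs_a = make_trials(proto_a, 50, signal=1.0, noise_sd=1.0, rng=rng)
hubs_b = make_trials(proto_b, 50, signal=1.0, noise_sd=1.0, rng=rng)

accuracy = transfer_accuracy(neighbors_a, neighbors_b, hubs_a, hubs_b)
print(f"transfer decoding accuracy: {accuracy:.2f}")
```

The key design property is that no hub-word trial contributes to training, so above-chance accuracy can arise only if neighbors and hubs share part of their activity pattern. In the simulation that sharing is built in by construction, whereas in the experiment it is the hypothesis under test.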

Most of the ROIs that decoded hub words based on training with words were in bilateral temporal lobe regions previously implicated in a variety of spoken language functions. Posterior middle temporal regions that decoded for words (combining results from the single condition and differences between condition analyses) include L-MTG1 and R-MTG2, located in regions that have been implicated in wordform representation. Posterior MTG and adjacent areas have been specifically implicated in wordform representations that mediate the mapping between sound and meaning (Gow, 2012; Hickok & Poeppel, 2007). Imaging studies have shown that activation in these posterior temporal regions is influenced by wordform properties such as word frequency, lexical neighborhood size, lexical enhancement/suppression, phonological similarity, and word-level structural properties (Biran & Friedmann, 2005; Gow et al., 2023; Graves et al., 2007; Prabhakaran et al., 2006; Righi et al., 2009). In addition, damage to posterior temporal regions has been shown to produce deficits in lexico-semantic processing (Axer et al., 2001; Coslett et al., 1987; Goldstein, 1948; Wernicke, 1970).

R-ITG3 is an anterior temporal ROI sensitive to wordform, located in a region that has been associated with word retrieval (Abrahams et al., 2003), attention to semantic relations (McDermott et al., 2003), and representing the semantic similarity among concepts (Patterson et al., 2007). Superior temporal ROIs included L-STG1 and R-STG1,2, in regions that have been shown to be sensitive to the processing and representation of the sound structure of language (Hickok & Poeppel, 2007; Mesgarani et al., 2014). Effective connectivity studies of phonotactic repair in phoneme categorization judgments (Gow & Nied, 2014), phonological acceptability judgments (Avcu et al., 2023), and phonotactic frequency effects on lexical decision (Gow & Olson, 2015, 2016; Gow & Segawa, 2009; Gow et al., 2008) suggest the possibility of "referred lexical sensitivity" arising from resonance between acoustic-phonetic representation in superior temporal regions and lexical representation in middle temporal regions and supramarginal gyrus.

Outside of the temporal lobe, a ventral sensorimotor ROI, R-postCG5, showed decoding for words after stimulus presentation. This specific ROI aligns with a segment of the sensorimotor cortex involved in oral movements (Pardo et al., 1997), sensitive to the frequency of articulatory patterns (Treutler & Sörös, 2021) and associated with the perception of spoken language (Schomers & Pulvermüller, 2016; Tremblay & Small, 2011).

The positional analysis (Figs. S3 and S4 (OSM)) showed that word-initial overlap between training and test items supported decoding by more ROIs than did word-final overlap. This aligns with behavioral results that have shown that spoken word recognition relies more heavily on word onsets than offsets (Allopenna et al., 1998; Marslen-Wilson & Tyler, 1980; Marslen-Wilson, 1987). Despite this onset-bias, more ROIs decoded in the word analysis than in the onset analysis specifically in the post-offset period, suggesting that overlap at each position contributed to classification performance in the word condition. While phonological representations appear to persist for up to hundreds of milliseconds after presentation (Gwilliams et al., 2022), we did not observe transfer decoding based on phonological overlap after stimulus presentation. This implies that the neural activation patterns reflected parallel activation of stored words with overlapping phonology. Evidence for overlapping neural representation of phonologically similar words suggests a neural basis for lexically mediated "gang effects" supporting a variety of speech and spoken word recognition effects where less activated lexical candidates provide cumulative top-down support based on phonological overlap with input representations. This interpretation is consistent with the claims of the TRACE model (McClelland & Elman, 1986), in which cohort size, length of the target word, and phonetic saliency can modulate the intensity of gang effects and their role in onset effects, phonotactic repair, and categorical speech perception (Hannagan et al., 2013).

The results of our integrated effective connectivity analyses strengthen the argument for functional representation of decodable properties. The results show a dense pattern of interaction between decoding regions, with activity that supports decoding of hub word contrasts based on training with their word neighbors influencing decoding accuracy in other ROIs. Significantly, most interactions are reciprocal and involve an ROI in lexically implicated right posterior middle temporal gyrus, R-MTG2 (Gow, 2012; Hickok & Poeppel, 2007). The reciprocal effective connectivity between this region and bilateral posterior STG (L-STG1 and R-STG2) is consistent with prior findings showing that behavioral evidence for lexical influences on speech perception coincides with increased Granger influences by posterior MTG on posterior STG (Gow & Nied, 2014; Gow & Olson, 2015; Gow et al., 2021). Whereas damage to posterior MTG is associated with lexical deficits, damage to posterior STG is not associated with specifically lexical deficits (Gow, 2012; Hickok & Poeppel, 2007). This implies that decoding of lexical neighbors by posterior STG reflects segmental representations (Gwilliams et al., 2022; Mesgarani et al., 2014) that are enhanced by resonance with word-level representations in posterior MTG. These representations may be further stabilized by reciprocal connections over a larger network of wordform-informed representations of articulation in inferior postcentral gyrus (R-postCG5) and representations integrating sensory, motor and linguistic representations in R-ITG3, a portion of anterior inferior temporal cortex with MNI coordinates consistent with the anterior temporal pole (Patterson et al., 2007; Small et al., 1997). These effective connectivity patterns demonstrate the propagation of wordform representation through a distributed network of regions involved in spoken word recognition.

Taken together, our results provide evidence of a functional neural representation of wordform in the right temporal cortex, with contributions from left STG. The convergence of decoding and effective connectivity results with an existing experimental literature implicating this region in form-based lexical processes satisfies the requirements for demonstrating representation identified by Dennett (1987) and Kriegeskorte and Diedrichsen (2019). This approach provides a potential template for future experimental exploration of neural representation. While the current results provide a limited window into the content of representations of wordform, the finding that representations evoked by phonologically similar words involve sufficiently similar patterns of neural activity to support transfer decoding demonstrates that wordform representations systematically encode aspects of phonological structure rather than simply individuating words or the mapping to syntactic or semantic information. We suggest that future work exploring the content of these representations may shed light on the role that lexical representation plays in listeners’ ability to discriminate phonemic minimal pairs, while still being able to recognize words despite systematic phonemic disruption resulting from phenomena including lawful phonological processes (e.g., assimilation), reduced speech, or dialectal variation.

Data Availability

The authors confirm that the data supporting the findings of this study are available within the article and/or its supplementary materials through the Harvard Dataverse (Gow, David, 2024, "Replication Data for Gang Effects Study", https://doi.org/10.7910/DVN/8FOV1J, Harvard Dataverse, V1). Code and documentation for our Granger Processing Stream (GPS) are available at https://www.nmr.mgh.harvard.edu/software/gps. GPS relies on FreeSurfer software for MRI analysis and automatic cortical parcellation (https://surfer.nmr.mgh.harvard.edu/) and MNE software for reconstruction of minimum norm estimates of source activity based on combined MRI, MEG and EEG data (https://pypi.org/project/mne/). Support vector machine code can be found at www.csie.ntu.edu.tw/~cjlin/libsvm.

References

Abrahams, S., Goldstein, L. H., Simmons, A., Brammer, M. J., Williams, S. C., Giampietro, V. P., ..., Leigh, P. N. (2003). Functional magnetic resonance imaging of verbal fluency and confrontation naming using compressed image acquisition to permit overt responses. Human Brain Mapping, 20(1), 29–40. https://doi.org/10.1002/hbm.10126

Albright, A. (2009). Feature-based generalization as a source of gradient acceptability. Phonology, 26(1), 9–41. https://doi.org/10.1017/S0952675709001705

Allopenna, P. D., Magnuson, J. S., & Tanenhaus, M. K. (1998). Tracking the time course of spoken word recognition using eye movements: Evidence for continuous mapping models. Journal of Memory and Language, 38(4), 419–439. https://doi.org/10.1006/jmla.1997.2558

Anderson, A. J., Binder, J. R., Fernandino, L., Humphries, C. J., Conant, L. L., Raizada, R. D., ..., & Lalor, E. C. (2019). An integrated neural decoder of linguistic and experiential meaning. Journal of Neuroscience, 39(45), 8969-8987. https://doi.org/10.1523/JNEUROSCI.2575-18.2019

Avcu, E., Newman, O., Ahlfors, S. P., & Gow, D. W., Jr. (2023). Neural evidence suggests phonological acceptability judgments reflect similarity, not constraint evaluation. Cognition, 230, 105322. https://doi.org/10.1016/j.cognition.2022.105322

Axer, H., Keyserlingk, A. G. V., Berks, G., & Keyserlingk, D. G. V. (2001). Supra-and infrasylvian conduction aphasia. Brain and Language, 76(3), 317–331. https://doi.org/10.1006/brln.2000.2425

Bailey, T. M., & Hahn, U. (2001). Determinants of wordlikeness: Phonotactics or lexical neighborhoods? Journal of Memory and Language, 44(4), 568–591. https://doi.org/10.1006/jmla.2000.2756

Bates, D., & Bolker, B. (2012). lme4.0: Linear mixed-effects models using S4 classes. R package version 09999–1/r1692 2012. http://CRAN.R-project.org/package=lme4

Beach, S. D., Ozernov-Palchik, O., May, S. C., Centanni, T. M., Gabrieli, J. D., & Pantazis, D. (2021). Neural decoding reveals concurrent phonemic and subphonemic representations of speech across tasks. Neurobiology of Language, 2(2), 254–279. https://doi.org/10.1162/nol_a_00034

Bhaya-Grossman, I., & Chang, E. F. (2022). Speech computations of the human superior temporal gyrus. Annual Review of Psychology, 73, 79–102. https://doi.org/10.1146/annurev-psych-022321-035256

Binder, J. R., Desai, R. H., Graves, W. W., & Conant, L. L. (2009). Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cerebral Cortex, 19(12), 2767–2796. https://doi.org/10.1093/cercor/bhp055

Biran, M., & Friedmann, N. (2005). From phonological paraphasias to the structure of the phonological output lexicon. Language and Cognitive Processes, 20(4). https://doi.org/10.1080/01690960400005813

Bresnan, J. (2001). Explaining morphosyntactic competition. In Mark Baltin & Chris Collins (Eds.), Handbook of Contemporary Syntactic Theory (pp. 1–44). Blackwell.

Cheng, X., Schafer, G., & Riddell, P. M. (2014). Immediate Auditory Repetition of Words and Nonwords: An ERP Study of Lexical and Sublexical Processing. PLoS One, 9(3), e91988. https://doi.org/10.1371/journal.pone.0091988

Choi, H. S., Marslen-Wilson, W. D., Lyu, B., Randall, B., & Tyler, L. K. (2021). Decoding the real-time neurobiological properties of incremental semantic interpretation. Cerebral Cortex, 31(1), 233–247. https://doi.org/10.1093/cercor/bhaa222

Coslett, H. B., Roeltgen, D. P., Gonzalez Rothi, L., & Heilman, K. M. (1987). Transcortical sensory aphasia: evidence for subtypes. Brain and Language, 32(2), 362–378. https://doi.org/10.1016/0093-934X(87)90133-7

Darwin, C. J., Brungart, D. S., & Simpson, B. D. (2003). Effects of fundamental frequency and vocal-tract length changes on attention to one of two simultaneous talkers. The Journal of the Acoustical Society of America, 114(5), 2913–2922. https://doi.org/10.1121/1.1616924

Davis, M. H., & Gaskell, M. G. (2009). A complementary systems account of word learning: neural and behavioural evidence. Philosophical Transactions of the Royal Society of London Series B, Biological Sciences, 364(1536), 3773–3800. https://doi.org/10.1098/rstb.2009.0111

Dennett, D. C. (1987). The intentional stance. The MIT Press.

Fischl, B., Sereno, M. I., Tootell, R. B. H., & Dale, A. M. (1999). High resolution intersubject averaging and a coordinate system for the cortical surface. Human Brain Mapping, 8(4), 272–284. https://doi.org/10.1002/(sici)1097-0193(1999)8:4

Frisch, S., Large, N., & Pisoni, D. (2000). Perception of wordlikeness: Effects of segment probability and length on the processing of nonwords. Journal of Memory and Language, 42(4), 481–496. https://doi.org/10.1006/jmla.1999.2692

Ganong, W. F., 3rd. (1980). Phonetic categorization in auditory word perception. Journal of Experimental Psychology: Human Perception and Performance, 6(1), 110–125. https://doi.org/10.1037/0096-1523.6.1.110

Gaskell, M. G., & Marslen-Wilson, W. (2002). Representation and competition in the perception of spoken words. Cognitive Psychology, 45(2), 220–266. https://doi.org/10.1016/S0010-0285(02)00003-8

Gathercole, S. E. (1995). Is nonword repetition a test of phonological memory or long-term knowledge? It all depends on the nonwords. Memory & Cognition, 23(1), 83–94.

Gathercole, S. E., Frankish, C. R., Pickering, S. J., & Peaker, S. (1999). Phonotactic influences on short-term memory. Journal of Experimental Psychology: Learning Memory and Cognition, 25(1), 84–95. https://doi.org/10.1037/0278-7393.25.1.84

Goddard, E., Carlson, T. A., Dermody, N., & Woolgar, A. (2016). Representational dynamics of object recognition: Feedforward and feedback information flows. Neuroimage, 128, 385–397. https://doi.org/10.1016/j.neuroimage.2016.01.006

Goldstein, K. (1948). Language and language disturbances; aphasic symptom complexes and their significance for medicine and theory of language. Grune & Stratton.

Gow, D. W. (2012). The cortical organization of lexical knowledge: A dual lexicon model of spoken language processing. Brain and Language, 121(3), 273–288. https://doi.org/10.1016/j.bandl.2012.03.005

Gow, D. W., Avcu, E., Schoenhaut, A., Sorensen, D. O., & Ahlfors, S. P. (2023). Abstract representations in temporal cortex support generative linguistic processing. Language, Cognition and Neuroscience, 38(6), 765–778. https://doi.org/10.1080/23273798.2022.2157029

Gow, D. W., & Caplan, D. N. (2012). New levels of language processing complexity and organization revealed by granger causation. Frontiers in Psychology, 3, 506. https://doi.org/10.3389/fpsyg.2012.00506

Gow, D. W., & Nied, A. (2014). Rules from words: Phonotactic biases in speech perception. PloS One, 9(1), 1–12. https://doi.org/10.1371/journal.pone.0086212

Gow, D. W., & Olson, B. B. (2015). Lexical mediation of phonotactic frequency effects on spoken word recognition: A Granger causality analysis of MRI-constrained MEG/EEG data. Journal of Memory and Language, 82, 41–55. https://doi.org/10.1016/j.jml.2015.03.004

Gow, D. W., & Olson, B. B. (2016). Sentential influences on acoustic-phonetic processing: A Granger causality analysis of multimodal imaging data. Language, Cognition and Neuroscience, 31(7), 841–855. https://doi.org/10.1080/23273798.2015.1029498

Gow, D. W., Schoenhaut, A., Avcu, E., & Ahlfors, S. (2021). Behavioral and neurodynamic effects of word learning on phonotactic repair. Frontiers in Psychology, 12, 590155. https://doi.org/10.3389/fpsyg.2021.590155

Gow, D. W., & Segawa, J. A. (2009). Articulatory mediation of speech perception: a causal analysis of multi-modal imaging data. Cognition, 110(2), 222–236. https://doi.org/10.1016/j.cognition.2008.11.011

Gow, D. W., Segawa, J. A., Ahlfors, S. P., & Lin, F.-H. (2008). Lexical influences on speech perception: A Granger causality analysis of MEG and EEG source estimates. NeuroImage, 43(3), 614–623. https://doi.org/10.1016/j.neuroimage.2008.07.027

Gramfort, A., Luessi, M., Larson, E., Engemann, D. A., Strohmeier, D., Brodbeck, C., ..., & Hamalainen, M. S. (2014). MNE software for processing MEG and EEG data. Neuroimage, 86, 446-460. https://doi.org/10.1016/j.neuroimage.2013.10.027

Graves, W. W., Grabowski, T. J., Mehta, S., & Gordon, J. K. (2007). A neural signature of phonological access: distinguishing the effects of word frequency from familiarity and length in overt picture naming. Journal of Cognitive Neuroscience, 19(4), 617–631. https://doi.org/10.1162/jocn.2007.19.4.617

Grootswagers, T., Cichy, R. M., & Carlson, T. A. (2018). Finding decodable information that can be read out in behaviour. NeuroImage, 179, 252–262. https://doi.org/10.1016/j.neuroimage.2018.06.022

Guggenmos, M., Sterzer, P., & Cichy, R. M. (2018). Multivariate pattern analysis for MEG: A comparison of dissimilarity measures. Neuroimage, 173, 434–447. https://doi.org/10.1016/j.neuroimage.2018.02.044

Gwilliams, L., King, J. R., Marantz, A., & Poeppel, D. (2022). Neural dynamics of phoneme sequences reveal position-invariant code for content and order. Nature Communications, 13(1), 6606. https://doi.org/10.1038/s41467-022-34326-1

Hämäläinen, M. S., & Ilmoniemi, R. J. (1994). Interpreting magnetic fields of the brain: minimum norm estimates. Medical & Biological Engineering & Computing, 32, 35–42.

Hannagan, T., Magnuson, J. S., & Grainger, J. (2013). Spoken word recognition without a TRACE. Frontiers in Psychology, 4. https://doi.org/10.3389/fpsyg.2013.00563

Hastie, T., Tibshirani, R., & Friedman, J. H. (2009). The elements of statistical learning: data mining, inference, and prediction (2nd ed.). Springer.

Haufe, S., Nikulin, V. V., Müller, K.-R., & Nolte, G. (2013). A critical assessment of connectivity measures for EEG data: a simulation study. Neuroimage, 64, 120–133. https://doi.org/10.1016/j.neuroimage.2012.09.036

Hayes, B., & Wilson, C. (2008). A maximum entropy model of phonotactics and phonotactic learning. Linguistic Inquiry, 39(3), 379–440. https://doi.org/10.1162/ling.2008.39.3.379

Haynes, J.-D., & Rees, G. (2006). Decoding mental states from brain activity in humans. Nature Reviews Neuroscience, 7(7), 523–534. https://doi.org/10.1038/nrn1931

Hickok, G., & Poeppel, D. (2007). The cortical organization of speech processing. Nature Reviews Neuroscience, 8(5), 393–402. https://doi.org/10.1038/nrn2113

Kleiner, M., Brainard, D., Pelli, D., Ingling, A., Murray, R., & Broussard, C. (2007). What's new in psychtoolbox-3. Perception, 36(14), 1–16. https://doi.org/10.1177/03010066070360S101

Kocagoncu, E., Clarke, A., Devereux, B. J., & Tyler, L. K. (2017). Decoding the cortical dynamics of sound-meaning mapping. Journal of Neuroscience, 37(5), 1312–1319. https://doi.org/10.1523/JNEUROSCI.2858-16.2016

Kriegeskorte, N., & Diedrichsen, J. (2019). Peeling the onion of brain representations. Annual Review of Neuroscience, 42, 407–432. https://doi.org/10.1146/annurev-neuro-080317-061906

Kriegeskorte, N., & Kievit, R. A. (2013). Representational geometry: integrating cognition, computation, and the brain. Trends in Cognitive Sciences, 17(8), 401–412. https://doi.org/10.1016/j.tics.2013.06.007

Kutas, M., & Federmeier, K. D. (2011). Thirty years and counting: finding meaning in the N400 component of the event-related brain potential (ERP). Annual Review of Psychology, 62, 621–647. https://doi.org/10.1146/annurev.psych.093008.131123

Kuznetsova, A., Brockhoff, P. B., & Christensen, R. H. (2017). lmerTest package: tests in linear mixed effects models. Journal of Statistical Software, 82, 1–26. https://doi.org/10.18637/jss.v082.i13

Lahiri, A., & Marslen-Wilson, W. (1991). The mental representation of lexical form: A phonological approach to the recognition lexicon. Cognition, 38(3), 245–294. https://doi.org/10.1016/0010-0277(91)90008-R

Landauer, T. K., & Streeter, L. A. (1973). Structural differences between common and rare words: Failure of equivalence assumptions for theories of word recognition. Journal of Verbal Learning and Verbal Behavior, 12(2), 119–131. https://doi.org/10.1016/S0022-5371(73)80001-5

Leahy, R. M., Mosher, J. C., Spencer, M. E., Huang, M. X., & Lewine, J. D. (1998). A study of dipole localization accuracy for MEG and EEG using a human skull phantom. Electroencephalography and Clinical Neurophysiology, 107(2), 159–173. https://doi.org/10.1016/S0013-4694(98)00057-1

Leonard, M. K., Baud, M. O., Sjerps, M. J., & Chang, E. F. (2016). Perceptual restoration of masked speech in human cortex. Nature Communications, 7(13619). https://doi.org/10.1038/ncomms13619

Liu, A. K., Belliveau, J. W., & Dale, A. M. (1998). Spatiotemporal imaging of human brain activity using functional MRI constrained magnetoencephalography data: Monte Carlo simulations. Proceedings of the National Academy of Sciences USA, 95(15), 8945–8950. https://doi.org/10.1073/pnas.95.15.8945

Luce, P. A., & Large, N. (2001). Phonotactics, density, and entropy in spoken word recognition. Language and Cognitive Processes, 16(5), 565–581. https://doi.org/10.1080/01690960143000137

Luce, P. A., & Pisoni, D. B. (1998). Recognizing spoken words: the neighborhood activation model. Ear and Hearing, 19(1), 1–36. https://doi.org/10.1097/00003446-199802000-00001

Magnuson, J. S., McMurray, B., Tanenhaus, M. K., & Aslin, R. S. (2003). Lexical effects on compensation for coarticulation: a tale of two systems? Cognitive Science, 27(5), 801–805. https://doi.org/10.1016/s0364-0213(03)00067-3

Marslen-Wilson, W. D. (1987). Functional parallelism in spoken word-recognition. Cognition, 25(1–2), 71–102. https://doi.org/10.1016/0010-0277(87)90005-9

Marslen-Wilson, W., & Tyler, L. K. (1980). The temporal structure of spoken language understanding. Cognition, 8(1), 1–71. https://doi.org/10.1016/0010-0277(80)90015-3

Marslen-Wilson, W. D., & Welsh, A. (1978). Processing interactions and lexical access during word recognition in continuous speech. Cognitive Psychology, 10(1), 29–63. https://doi.org/10.1016/0010-0285(78)90018-X

McClelland, J. L., & Elman, J. L. (1986). The TRACE model of speech perception. Cognitive Psychology, 18(1), 1–86. https://doi.org/10.1016/0010-0285(86)90015-0

McDermott, K. B., Petersen, S. E., Watson, J. M., & Ojemann, J. G. (2003). A procedure for identifying regions preferentially activated by attention to semantic and phonological relations using functional magnetic resonance imaging. Neuropsychologia, 41(3), 293–303. https://doi.org/10.1016/s0028-3932(02)00162-8

Merriman, W. E., Bowman, L. L., & MacWhinney, B. (1989). The mutual exclusivity bias in children's word learning. Monographs of the Society for Research in Child Development, i-129. https://doi.org/10.2307/1166130

Mesgarani, N., Cheung, C., Johnson, K., & Chang, E. F. (2014). Phonetic feature encoding in human superior temporal gyrus. Science, 343(6174), 1006–1010. https://doi.org/10.1126/science.1245994

Michalareas, G., Vezoli, J., Van Pelt, S., Schoffelen, J.-M., Kennedy, H., & Fries, P. (2016). Alpha-beta and gamma rhythms subserve feedback and feedforward influences among human visual cortical areas. Neuron, 89(2), 384–397. https://doi.org/10.1016/j.neuron.2015.12.018

Milde, T., Leistritz, L., Astolfi, L., Miltner, W. H., Weiss, T., Babiloni, F., & Witte, H. (2010). A new Kalman filter approach for the estimation of high-dimensional time-variant multivariate AR models and its application in analysis of laser-evoked brain potentials. NeuroImage, 50(3), 960–969. https://doi.org/10.1016/j.neuroimage.2009.12.110

Myers, E. B. (2007). Dissociable effects of phonetic competition and category typicality in a phonetic categorization task: an fMRI investigation. Neuropsychologia, 45(7), 1463–1473. https://doi.org/10.1016/j.neuropsychologia.2006.11.005

Naselaris, T., Prenger, R. J., Kay, K. N., Oliver, M., & Gallant, J. L. (2009). Bayesian reconstruction of natural images from human brain activity. Neuron, 63(6), 902–915. https://doi.org/10.1016/j.neuron.2009.09.006

Nolte, G., Bai, O., Wheaton, L., Mari, Z., Vorbach, S., & Hallett, M. (2004). Identifying true brain interaction from EEG data using the imaginary part of coherency. Clinical Neurophysiology, 115(10), 2292–2307. https://doi.org/10.1016/j.clinph.2004.04.029

Norris, D., McQueen, J. M., & Cutler, A. (2003). Perceptual learning in speech. Cognitive Psychology, 47(2), 204–238. https://doi.org/10.1016/S0010-0285(03)00006-9

Oganian, Y., & Chang, E. F. (2019). A speech envelope landmark for syllable encoding in human superior temporal gyrus. Science Advances (eaay6279). https://doi.org/10.1126/sciadv.aay6279

Pardo, J. V., Wood, T. D., Costello, P. A., Pardo, P. J., & Lee, J. T. (1997). PET study of the localization and laterality of lingual somatosensory processing in humans. Neuroscience Letters, 234(1), 23–26. https://doi.org/10.1016/s0304-3940(97)00650-2

Patterson, K., Nestor, P. J., & Rogers, T. T. (2007). Where do you know what you know? The representation of semantic knowledge in the human brain [Review]. Nature Reviews. Neuroscience, 8(12), 976–987. https://doi.org/10.1038/nrn2277

Pelletier, F. J. (2012). Holism And Compositionality. In M. Werning, W. Hinzen, & E. Machery (Eds.), The Oxford handbook of compositionality (pp. 149–174). Oxford University Press.

Peramunage, D., Blumstein, S. E., Myers, E. B., Goldrick, M., & Baese-Berk, M. (2011). Phonological neighborhood effects in spoken word production: an fMRI study. Journal of Cognitive Neuroscience, 23(3), 593–603. https://doi.org/10.1162/jocn.2010.21489

Pierrehumbert, J. B. (2016). Phonological representation: Beyond abstract versus episodic. Annual Review of Linguistics, 2, 33–52. https://doi.org/10.1146/annurev-linguistics-030514-125050

Poeppel, D., & Idsardi, W. (2022). We don’t know how the brain stores anything, let alone words. Trends in Cognitive Sciences, 26(12), 1054–1055. https://doi.org/10.1016/j.tics.2022.08.010

Prabhakaran, R., Blumstein, S. E., Myers, E. B., Hutchison, E., & Britton, B. (2006). An event-related fMRI investigation of phonological–lexical competition. Neuropsychologia, 44(12), 2209–2221. https://doi.org/10.1016/j.neuropsychologia.2006.05.025

R Core Team. (2023). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. URL https://www.R-project.org/

Righi, G., Blumstein, S. E., Mertus, J., & Worden, M. S. (2009). Neural systems underlying lexical competition: An eye tracking and fMRI study. Journal of Cognitive Neuroscience, 22(2), 213–224. https://doi.org/10.1162/jocn.2009.21200

Samuel, A. G., & Pitt, M. A. (2003). Lexical activation (and other factors) can mediate compensation for coarticulation. Journal of Memory and Language, 48(2), 416–434. https://doi.org/10.1016/S0749-596X(02)00514-4

Schoffelen, J. M., & Gross, J. (2009). Source connectivity analysis with MEG and EEG [Review]. Human Brain Mapping, 30(6), 1857–1865. https://doi.org/10.1002/hbm.20745

Schomers, M. R., & Pulvermüller, F. (2016). Is the sensorimotor cortex relevant for speech perception and understanding? An integrative review. Frontiers in Human Neuroscience, 10, 435. https://doi.org/10.3389/fnhum.2016.00435

Sharon, D., Hämäläinen, M. S., Tootell, R. B., Halgren, E., & Belliveau, J. W. (2007). The advantage of combining MEG and EEG: comparison to fMRI in focally stimulated visual cortex. NeuroImage, 36(4), 1225–1235. https://doi.org/10.1016/j.neuroimage.2007.03.066

Small, D. M., Jones-Gotman, M., Zatorre, R. J., Petrides, M., & Evans, A. C. (1997). A role for the right anterior temporal lobe in taste quality recognition. Journal of Neuroscience, 17(13), 5136–5142. https://doi.org/10.1523/JNEUROSCI.17-13-05136.1997

Tanenhaus, M. K., Spivey-Knowlton, M. J., Eberhard, K. M., & Sedivy, J. C. (1995). Integration of visual and linguistic information in spoken language comprehension. Science, 268(5217), 1632–1634. https://doi.org/10.1126/science.777786

Tremblay, P., & Small, S. L. (2011). From language comprehension to action understanding and back again. Cerebral Cortex, 21(5), 1166–1177. https://doi.org/10.1093/cercor/bhq189

Treutler, M., & Sörös, P. (2021). Functional MRI of native and non-native speech sound production in sequential German-English bilinguals. Frontiers in Human Neuroscience, 15, 683277. https://doi.org/10.3389/fnhum.2021.683277

Wernicke, C. (1970). The symptom complex of aphasia: A psychological study on an anatomical basis. Archives of Neurology, 22(3), 280–282. https://doi.org/10.1001/archneur.1970.00480210090013

Yi, H. G., Leonard, M. K., & Chang, E. F. (2019). The encoding of speech sounds in the superior temporal gyrus. Neuron, 102(6), 1096–1110. https://doi.org/10.1016/j.neuron.2019.04.023

Zhuang, J., Tyler, L. K., Randall, B., Stamatakis, E. A., & Marslen-Wilson, W. D. (2014). Optimally efficient neural systems for processing spoken language. Cerebral Cortex, 24(4), 908–918. https://doi.org/10.1093/cercor/bhs366

Zwitserlood, P. (1989). The locus of the effects of sentential-semantic context in spoken-word processing. Cognition, 32(1), 25–64. https://doi.org/10.1016/0010-0277(89)90013-9

Acknowledgements

This work was supported by the National Institute on Deafness and Other Communication Disorders (NIDCD) grant R01DC015455 (P.I. D.G.) and by NIH grants P41EB030006 and S10OD030469. We thank Adriana Schoenhaut and Olivia Newman for their help during data collection. We also thank Dimitrios Pantazis for sharing the code used to implement the SVM analyses, and the Harvard Statistical Consulting Service for advice on our statistical analyses.

Open Practices Statement

The data and materials for this experiment are available on request to gow@helix.mgh.harvard.edu pending permanent posting at the Harvard Dataverse (https://dataverse.harvard.edu/). This experiment was not preregistered.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sorensen, D.O., Avcu, E., Lynch, S. et al. Neural representation of phonological wordform in temporal cortex. Psychon Bull Rev (2024). https://doi.org/10.3758/s13423-024-02511-6

DOI: https://doi.org/10.3758/s13423-024-02511-6