Abstract

How people represent categories, and how those representations change over time, is a basic question about human cognition. Previous research has demonstrated that people categorize objects by comparing them to category prototypes in early stages of learning but consider the individual exemplars within each category in later stages. However, these results seem inconsistent with findings in the memory literature showing that, over time, representations of general knowledge become progressively easier to access than representations of specific items. Why would one rely more on exemplar-based representations in later stages of categorization when it is more difficult to access these exemplars in memory? To reconcile this incongruity, our study proposed that previous findings on categorization result from human participants adapting to a specific experimental environment, in which the probability of encountering an object stays uniform over time. In a more realistic environment, however, one is less likely to encounter the same object again after a long time has passed. Confirming our hypothesis, we demonstrated that under environmental statistics identical to those of typical categorization experiments, the advantage of exemplar-based categorization over prototype-based categorization increases over time, replicating previous research in categorization. In contrast, under the realistic environmental statistics simulated in our experiments, the advantage of exemplar-based categorization over prototype-based categorization decreases over time. A second set of experiments replicated our results, while additionally demonstrating that human categorization is sensitive to the category structure presented to the participants. These results provide converging evidence that human categorization adapts appropriately to environmental statistics.

Open Practices Statement

The data and analysis code for all experiments are available on GitHub (https://github.com/arjundevraj/rational-categorization, https://github.com/arjundevraj/word_categorization). This study was pre-registered (Experiment 1: https://aspredicted.org/yq984.pdf; Experiment 2: https://aspredicted.org/9me7s.pdf).

References

Anderson, J. R., & Schooler, L. J. (1991). Reflections of the environment in memory. Psychological Science, 2, 396–408.

Anderson, J. R. (1990). The adaptive character of thought. Psychology Press.

Anderson, J. R. (1991). The adaptive nature of human categorization. Psychological Review, 98(3), 409–429.

Ashby, F. G., & Maddox, W. T. (1993). Relations between prototype, exemplar, and decision bound models of categorization. Journal of Mathematical Psychology, 37, 372–400.

Briscoe, E., & Feldman, J. (2011). Conceptual complexity and the bias/variance tradeoff. Cognition, 118(1), 2–16.

Donkin, C., & Nosofsky, R. M. (2012). A power-law model of psychological memory strength in short- and long-term recognition. Psychological Science, 23(6), 625–634.

Elliott, S. W., & Anderson, J. R. (1995). Effect of memory decay on predictions from changing categories. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21(4), 815.

Estes, W. K. (1994). Classification and cognition. Oxford University Press.

Griffiths, T., Canini, K., Sanborn, A., & Navarro, D. (2007). Unifying rational models of categorization via the hierarchical Dirichlet process. In Proceedings of the 29th annual conference of the cognitive science society (pp. 323–328).

Hintzman, D. L. (1988). Judgments of frequency and recognition memory in a multiple-trace memory model. Psychological Review, 95(4), 528.

Homa, D., Sterling, S., & Trepel, L. (1981). Limitations of exemplar-based generalization and the abstraction of categorical information. Journal of Experimental Psychology: Human Learning and Memory, 7(6), 418–439.

Logan, G. D. (1988). Toward an instance theory of automatization. Psychological Review, 95(4), 492–527.

McKinley, S. C., & Nosofsky, R. M. (1995). Investigations of exemplar and decision bound models in large, ill-defined category structures. Journal of Experimental Psychology: Human Perception and Performance, 21, 128–148.

McKinley, S. C., & Nosofsky, R. M. (1996). Selective attention and the formation of linear decision boundaries. Journal of Experimental Psychology: Human Perception and Performance, 22, 294–317.

Medin, D. L. (1975). A theory of context in discrimination learning. In G. H. Bower (Ed.), The psychology of learning and motivation. Academic Press.

Medin, D. L., & Schaffer, M. M. (1978). Context theory of classification learning. Psychological Review, 85, 207–238.

Medin, D. L., & Smith, E. E. (1981). Strategies and classification learning. Journal of Experimental Psychology: Human Learning and Memory, 7, 241–253.

Medin, D. L., Dewey, G. I., & Murphy, T. D. (1983). Relationships between item and category learning: Evidence that abstraction is not automatic. Journal of Experimental Psychology: Learning, Memory, and Cognition, 9(4), 607–625.

Medin, D. L., & Schwanenflugel, P. J. (1981). Linear separability in classification learning. Journal of Experimental Psychology: Human Learning and Memory, 7(5), 355.

Mervis, C. B., & Rosch, E. (1981). Categorization of natural objects. Annual Review of Psychology, 32(1), 89–115.

Nosofsky, R. M. (1984). Choice, similarity, and the context theory of classification. Journal of Experimental Psychology: Learning, Memory, and Cognition, 10, 104–114.

Nosofsky, R. M. (1986). Attention, similarity, and the identification-categorization relationship. Journal of Experimental Psychology: General, 115, 39–57.

Nosofsky, R. M. (1987). Attention and learning processes in the identification and categorization of integral stimuli. Journal of Experimental Psychology: Learning, Memory, and Cognition, 13, 87–109.

Nosofsky, R. M. (1988). Exemplar-based accounts of relations between classification, recognition, and typicality. Journal of Experimental Psychology: Learning, Memory, and Cognition, 14, 700–708.

Nosofsky, R. M., Palmeri, T. J., & McKinley, S. C. (1994). Rule-plus-exception model of classification learning. Psychological Review, 101, 53–79.

Nosofsky, R. M., & Zaki, S. R. (2002). Exemplar and prototype models revisited: Response strategies, selective attention, and stimulus generalization. Journal of Experimental Psychology: Learning, Memory, and Cognition, 28, 924–940.

Nosofsky, R. M. (1991). Tests of an exemplar model for relating perceptual classification and recognition memory. Journal of Experimental Psychology: Human Perception and Performance, 17(1), 3.

Nosofsky, R. M., Cao, R., Cox, G. E., & Shiffrin, R. M. (2014a). Familiarity and categorization processes in memory search. Cognitive Psychology, 75, 97–129.

Nosofsky, R. M., Cox, G., Cao, R., & Shiffrin, R. (2014b). An exemplar-familiarity model predicts short-term and long-term probe recognition across diverse forms of memory search. Journal of Experimental Psychology: Learning, Memory, and Cognition, 40, 1524–1539.

Nosofsky, R. M., Gluck, M. A., Palmeri, T. J., McKinley, S. C., & Glauthier, P. (1994). Comparing modes of rule-based classification learning: A replication and extension of Shepard, Hovland, and Jenkins (1961). Memory & Cognition, 22(3), 352–369.

Nosofsky, R. M., Little, D. R., Donkin, C., & Fific, M. (2011). Short-term memory scanning viewed as exemplar-based categorization. Psychological Review, 118(2), 280.

Nosofsky, R. M., & Zaki, S. R. (2003). A hybrid-similarity exemplar model for predicting distinctiveness effects in perceptual old-new recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition, 29(6), 1194.

Palmeri, T. J., & Nosofsky, R. M. (1995). Recognition memory for exceptions to the category rule. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21, 548–568.

Posner, M. I., & Keele, S. W. (1970). Retention of abstract ideas. Journal of Experimental Psychology, 83, 304–308.

Posner, M. I., & Keele, S. W. (1968). On the genesis of abstract ideas. Journal of Experimental Psychology, 77 (3, Pt.1), 353–363.

Reed, S. K. (1972). Pattern recognition and categorization. Cognitive Psychology, 3, 382–407.

Rojahn, K., & Pettigrew, T. F. (1992). Memory for schema-relevant information: A meta-analytic resolution. British Journal of Social Psychology, 31(2), 81–109.

Sakamoto, Y., & Love, B. C. (2004). Schematic influences on category learning and recognition memory. Journal of Experimental Psychology: General, 133(4), 534–553.

Shin, H. J., & Nosofsky, R. M. (1992). Similarity-scaling studies of dot-pattern classification and recognition. Journal of Experimental Psychology: General, 121, 278–304.

Smith, J. D., & Minda, J. P. (1998). Prototypes in the mist: The early epochs of category learning. Journal of Experimental Psychology: Learning, Memory, and Cognition, 24, 1411–1436.

Zeng, T., Tompary, A., Schapiro, A. C., & Thompson-Schill, S. L. (2021). Tracking the relation between gist and item memory over the course of long-term memory consolidation. eLife, 10, e65588.

Additional information

Experiment 1 appeared in a paper in the non-archival conference proceedings of the Annual Meeting of the Cognitive Science Society in 2021. A pre-print of the manuscript is available at psyarxiv.com. This work was supported by the CV Starr Fellowship awarded to Q.Z. by Princeton Neuroscience Institute, a start-up fund awarded to Q.Z. by Rutgers University–New Brunswick, and AFOSR FA9550-18-1-0077 awarded to T.L.G. Correspondence concerning this article should be addressed to Qiong Zhang (qiong.z@rutgers.edu).

Appendix

A. Models containing additional parameters, fitted for the entire experiment per participant

We explored versions of the prototype and exemplar models that contain additional parameters used in the literature. In particular, we introduced (1) the guessing-rate parameter, g, which measures the proportion of trials on which the participant guessed randomly rather than using the model, (2) the response-scaling parameter, \(\gamma \), which describes the degree to which the participant’s choice is deterministic (Nosofsky & Zaki, 2002), and (3) each exemplar x’s memory strength, \(m_{x,j} = \alpha j^{-\beta }\), where \(\alpha \), the scaling parameter, can be assumed to be 1, j is the lag (i.e., the number of trials since exemplar x was last seen), and \(\beta \) indicates the rate of memory decay (Donkin & Nosofsky, 2012). While the guessing-rate parameter applies to both models, the response-scaling and exemplar memory-strength parameters apply only to the exemplar model. After incorporating all new parameters, we arrive at the probability that stimulus i (\(S_{i}\)) will be categorized into category 1 (\(R_{1}\)) by the prototype model as

and by the exemplar model as

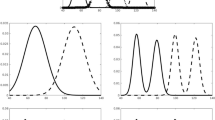

We used a model-fitting and fit-calculation approach similar to that used for the experimental conditions of Experiments 1 and 2, and considered the effect of adding each new parameter individually as well as their combined effects. To keep the model simple, we fixed the memory-strength parameter at \(\beta = 1.4025\), the value reported by Donkin and Nosofsky (2012). We also verified that the specific choice of \(\beta \) does not affect our main conclusions by additionally testing \(\beta = 0.3\) and \(\beta = 5\), as \(\beta \) values in previous studies fall in the range of 0.3 to 5 (Nosofsky, Cao, Cox, & Shiffrin, 2014a; Nosofsky, Cox, Cao, & Shiffrin, 2014b). We applied these new model versions to both the control and experimental conditions of Experiment 1 (i.e., two category exceptions). The fits of these new models, as shown in Fig. 8, follow the trends from the original models presented in this paper.
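The extended models described in this appendix can be sketched in code. The following is a minimal illustration, not the authors' implementation: the exponential similarity over a weighted city-block distance, the mixture form of the guessing rate (g/2 plus (1 - g) times the model probability), and all function names are assumptions made for the sketch. Only the power-law memory strength \(m_{x,j} = \alpha j^{-\beta }\) and the roles of g, \(\gamma \), and \(\beta \) are taken from the text.

```python
import math

def similarity(stim, ref, weights, c):
    """Similarity as exp(-c * weighted city-block distance) (assumed form)."""
    dist = sum(w * abs(s - r) for w, s, r in zip(weights, stim, ref))
    return math.exp(-c * dist)

def memory_strength(lag, beta, alpha=1.0):
    """Power-law memory strength m = alpha * lag**(-beta); lag >= 1 is the
    number of trials since the exemplar was last seen."""
    return alpha * lag ** (-beta)

def with_guessing(p_model, g):
    """Mix the model's probability with random guessing between two
    categories (assumed mixture form)."""
    return g / 2.0 + (1.0 - g) * p_model

def prototype_prob(stim, proto1, proto2, weights, c, g=0.0):
    """P(category 1) under a prototype model with a guessing rate."""
    s1 = similarity(stim, proto1, weights, c)
    s2 = similarity(stim, proto2, weights, c)
    return with_guessing(s1 / (s1 + s2), g)

def exemplar_prob(stim, cat1, cat2, weights, c, g=0.0, gamma=1.0, beta=0.0):
    """P(category 1) under an exemplar model. cat1 and cat2 are non-empty
    lists of (exemplar, lag) pairs; beta=0 turns memory decay off."""
    e1 = sum(memory_strength(lag, beta) * similarity(stim, ex, weights, c)
             for ex, lag in cat1)
    e2 = sum(memory_strength(lag, beta) * similarity(stim, ex, weights, c)
             for ex, lag in cat2)
    p = e1 ** gamma / (e1 ** gamma + e2 ** gamma)
    return with_guessing(p, g)

# Hypothetical six-dimensional binary stimuli with equal attention weights.
weights = [1.0 / 6.0] * 6
stim = (0, 0, 0, 0, 0, 1)
p_proto = prototype_prob(stim, (0,) * 6, (1,) * 6, weights, c=2.0, g=0.1)
p_exem = exemplar_prob(stim,
                       cat1=[((0, 0, 0, 0, 0, 0), 3), ((0, 0, 0, 0, 1, 1), 10)],
                       cat2=[((1, 1, 1, 1, 1, 1), 1)],
                       weights=weights, c=2.0, g=0.1, gamma=2.0, beta=1.4025)
```

In this sketch, a larger \(\gamma \) pushes the exemplar model's choice toward the category with the greater summed similarity, and a larger \(\beta \) makes exemplars that have not been seen recently contribute less to that sum.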

B. Models containing additional parameters, fitted for consecutive sets of 56 trials per participant

We also explored a model-fitting and fit-calculation approach that is intermediate between Smith and Minda’s (1998) original approach (model-fitting per participant and per trial segment, with fit plotted as the SSE over the trial segment) and our approach (model-fitting per participant over the entire experiment, with fit plotted as the MSE over the trial segment after applying the model on a trial-by-trial basis). In the intermediate approach, for both the control and experimental conditions, we fit the model to each participant’s behavior for each consecutive set of 56 trials (i.e., how trial segments are defined in the control condition), hence generating 11 different fittings per participant. We then applied the model on a trial-by-trial basis for each trial segment and computed the MSE as the measure of fit. With this approach, one can still observe the trajectories of the two models over trial segments as defined in the experimental condition (i.e., temporally non-contiguous sets of trials that correspond to similar regions along each object’s power-law function), while also taking into consideration the potential for parameter values to change over the course of the experiment, as Smith and Minda (1998) do. This means that in the experimental condition, for any given participant, the fitted parameters may differ even within a trial segment, since different trials may belong to different sets of 56 consecutive trials.
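The intermediate approach can be illustrated with a short sketch: parameters are fitted once per consecutive block of 56 trials, and the squared errors of the trial-by-trial predictions are then averaged within each (possibly non-contiguous) trial segment. The trial dictionaries, the `fit_fn` and `predict_fn` callables, and the toy constant-probability model are hypothetical placeholders, not the fitting code used in the study.

```python
from collections import defaultdict

BLOCK_SIZE = 56  # trials per fitting block, as described in the text

def block_of(trial_index, block_size=BLOCK_SIZE):
    """0-based index of the consecutive block containing a 0-based trial."""
    return trial_index // block_size

def fit_per_block(trials, fit_fn, block_size=BLOCK_SIZE):
    """Fit one parameter set per consecutive block of trials."""
    blocks = defaultdict(list)
    for t, trial in enumerate(trials):
        blocks[block_of(t, block_size)].append(trial)
    return {b: fit_fn(block_trials) for b, block_trials in blocks.items()}

def mse_by_segment(trials, segments, params_by_block, predict_fn,
                   block_size=BLOCK_SIZE):
    """Predict each trial with its own block's parameters, then average the
    squared error within each trial segment."""
    sq_errors = defaultdict(list)
    for t, (trial, seg) in enumerate(zip(trials, segments)):
        p = predict_fn(params_by_block[block_of(t, block_size)], trial)
        sq_errors[seg].append((trial["response"] - p) ** 2)
    return {seg: sum(v) / len(v) for seg, v in sq_errors.items()}

# Toy usage: 616 trials (11 blocks of 56), responses coded 1 = category 1.
trials = [{"response": t % 2} for t in range(616)]
segments = [t % 3 for t in range(616)]  # hypothetical non-contiguous segments

def fit_fn(block):
    # Toy "model": a constant choice probability equal to the block mean.
    return sum(tr["response"] for tr in block) / len(block)

def predict_fn(params, trial):
    return params

params_by_block = fit_per_block(trials, fit_fn)
fits = mse_by_segment(trials, segments, params_by_block, predict_fn)
```

Because the segment labels are independent of the block boundaries, two trials in the same segment can be predicted with different fitted parameters, which is the property noted at the end of the paragraph above.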

We applied the new models (as well as the intermediate models, derived by individually adding each of the new parameters) described in the previous section with this fitting approach and obtained the results in Fig. 9. The patterns observed with our original versions of the models still hold, with the exemplar model gaining an advantage later in category learning in the control condition and losing its advantage in the experimental condition. In fact, after introducing the response-scaling parameter, we observe the first cases in which the prototype model actually outperforms the exemplar model in the final trial segments of the experimental condition, as seen in Fig. 9F and J. With this new model-fitting approach and using the same permutation test with 1000 random simulations as in Experiment 1, the interaction between model type and trial segment in the experimental condition for the model including all the new parameters (corresponding to Fig. 9J) was still statistically significant, \(p=.007\).

Fig. 10. Categorization accuracy per stimulus for the experimental condition of Experiment 1

C. Accuracy per stimulus in the experimental condition

We more closely analyzed the behavioral results for the experimental condition by breaking down participants’ categorization accuracy over trial segments by stimulus, as shown in Fig. 10. Since the trial segment in the experimental condition captures the relative frequency of stimulus presentation, Fig. 10 shows that (1) for every stimulus, regardless of its status as an exception/non-exception, the participants’ categorization accuracy peaked early on (either in the second or third trial segment) and then declined afterwards, and (2) categorization accuracy for exceptions was generally lower throughout the experiment and also tended to decline more rapidly. These results are consistent with our hypothesis that people rationally adapt to the environmental statistics of the stimuli, and have worse representations of the stimuli when they are needed less (i.e., presented less frequently).

Fig. 12. Model fits for the experimental condition of Experiment 1 with model-generated simulated data

Fig. 13. Model fits for the experimental condition of Experiment 1 with model-generated simulated data, considering only trials after which all exemplars have already been seen

D. Comparison of model predictions to participant responses

While model fits and categorization accuracy are important metrics in our analyses, we also provide a more thorough account of the exact responses predicted by each model and how they compare to participants’ responses in critical trial segments. For both conditions of Experiment 1, we analyzed responses for the second trial segment, when categorization accuracy tends to peak in the experimental condition, and the final trial segment, in order to capture the full range of behavior in the experiment. In particular, for each distinct stimulus within each trial segment, we measured the average proportion of trials on which each model and the participants selected category 1. We generated each model response by sampling from a Bernoulli distribution parameterized by the fitted model’s predicted probability for category 1. We used the model-fitting procedure in Appendix B, which fits the models per set of 56 consecutive trials. The results in Fig. 11 show that both the prototype and exemplar models captured the qualitative trends of the participants’ responses reasonably well, across all 14 stimuli, in early/late trial segments of control/experimental conditions, though the prototype model struggled to predict responses for exceptions (Stimulus 7 and Stimulus 14). To understand quantitatively how well the prototype and exemplar models capture the participants’ responses, we turn to the model fits illustrated in Fig. 5.
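The response-generation step described above can be sketched as follows. This is a minimal illustration under stated assumptions: the function names and the coding of responses (1 for category 1, 0 for category 2) are hypothetical, and the predicted probabilities would come from whichever fitted model is being examined.

```python
import random
from collections import defaultdict

def sample_responses(probs, rng):
    """Draw one Bernoulli response per trial: 1 (category 1) with the
    model's predicted probability, otherwise 0 (category 2)."""
    return [1 if rng.random() < p else 0 for p in probs]

def proportion_category1(stimulus_ids, responses):
    """Proportion of trials on which category 1 was chosen, per stimulus."""
    counts = defaultdict(lambda: [0, 0])  # stimulus -> [n_category1, n_trials]
    for stim, resp in zip(stimulus_ids, responses):
        counts[stim][0] += resp
        counts[stim][1] += 1
    return {stim: k / n for stim, (k, n) in counts.items()}

# Hypothetical trial segment: stimulus shown on each trial and the model's
# predicted probability of a category 1 response on that trial.
rng = random.Random(0)
stimulus_ids = [1, 7, 1, 14, 7, 1]
predicted = [0.9, 0.4, 0.85, 0.2, 0.45, 0.95]
model_responses = sample_responses(predicted, rng)
model_props = proportion_category1(stimulus_ids, model_responses)
```

The same `proportion_category1` helper can be applied to participants' responses, so that the model and human proportions are computed in the same way before being compared per stimulus.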

Fig. 14. Model fits for the experimental condition of Experiment 2 with four exceptions. Each subplot (B-F) includes only trials with the specified, fixed number of stimuli seen thus far; for example, B graphs results only from the sets of trials for each participant between the introductions of the fourth and fifth stimuli. A averages these results, including the results from 1–3 stimuli seen (not shown individually)

E. Confirming the results using simulated data

To better interpret the model fits, we ran simulations in which the ground truth is known (i.e., either an exemplar model or a prototype model) and fit our models to the simulated data. For the exact stimulus sequences used in Experiment 1, we generated simulated response data using the prototype or exemplar model as the ground truth: we set each attentional weight to \(\frac{1}{6}\), fixed the remaining parameters at the median of their allowed range, and drew a Bernoulli sample on each trial from the model’s predicted probability. We then fit the models to these data and computed and graphed the model fits, as shown in Fig. 12.

Overall, our model-fitting procedure correctly identifies the ground-truth model: we observed better fits (lower MSE) for the exemplar model than for the prototype model when an exemplar model is the ground truth (Fig. 12A), and vice versa (Fig. 12B). However, the model fits stay constant over trial segments only for the prototype model and not for the exemplar model. The latter is likely affected by the total number of stimuli analyzed under a given trial segment (later trial segments in the experimental condition contain a larger number of stimuli on average than earlier segments, as more of them have been encountered over time). To eliminate the effect introduced by the number of exemplars previously encountered, we consider only trials in the simulated data after which all stimuli have been encountered. This point generally occurs around or slightly after trial 455, so the retained trials correspond to \(\sim \) 25% of the total experiment length. In this portion of the simulated data, model fits are no longer affected by the number of stimuli analyzed, as they all stay constant over trial segments under the ground truth of either an exemplar model or a prototype model (Fig. 13A, B).
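The trial restriction described above amounts to finding the first trial by which every stimulus has appeared and keeping only the later trials. A minimal sketch follows; the function names are hypothetical, and whether the trial that introduces the final stimulus should itself be retained is an assumption (it is excluded here).

```python
def first_trial_all_seen(stimulus_sequence, n_stimuli):
    """0-based index of the trial on which the last unseen stimulus first
    appears, or None if some stimulus never appears."""
    seen = set()
    for t, stim in enumerate(stimulus_sequence):
        seen.add(stim)
        if len(seen) == n_stimuli:
            return t
    return None

def trials_after_all_seen(stimulus_sequence, n_stimuli):
    """Indices of the trials that follow the introduction of the final
    stimulus (that introducing trial itself is excluded by assumption)."""
    t0 = first_trial_all_seen(stimulus_sequence, n_stimuli)
    if t0 is None:
        return []
    return list(range(t0 + 1, len(stimulus_sequence)))

# Toy sequence with three stimuli; stimulus 3 first appears on trial index 4.
sequence = [1, 1, 2, 1, 3, 2, 1]
kept = trials_after_all_seen(sequence, n_stimuli=3)
```

On these retained trials the set of previously encountered exemplars no longer grows, which is why the number of stimuli cannot drive changes in fit across trial segments.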

Finally, we examine whether the main conclusion of our analysis of the empirical data in the experimental condition still holds after controlling for the number of exemplars. In this analysis, we only consider the sequence of trials between the introductions of every two consecutive stimuli so that the number of exemplars is fixed and has no effect on the exemplar model’s predictions. A formal permutation test on the averaged data from these analyses using the F interaction statistic to test the effects of model type (prototype/exemplar) and trial segment (second, last) was significant, \(p < .001\). The results from this analysis in the experimental condition with four exceptions are shown in Fig. 14, which indicates that our previously observed results still hold after controlling for the number of exemplars: the exemplar model’s advantage over the prototype model decreases over time in the experimental condition.
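A permutation test of the model type by trial segment interaction can be sketched as below. This is a simplified stand-in, not the authors' procedure: it uses the mean per-participant interaction contrast rather than the F interaction statistic, and it assumes that permutations are generated by swapping the two segment labels within a participant, which flips the sign of that participant's contrast. The data layout and function names are hypothetical.

```python
import random

def interaction_contrast(row):
    """row = (proto_second, exem_second, proto_last, exem_last): one
    participant's MSEs. Returns the change in the exemplar model's advantage
    (prototype MSE minus exemplar MSE) from the second to the last segment."""
    proto_second, exem_second, proto_last, exem_last = row
    return (proto_last - exem_last) - (proto_second - exem_second)

def permutation_p_value(rows, n_perm=1000, seed=0):
    """Two-sided p-value for the mean interaction contrast under random
    within-participant swaps of the segment labels (sign flips)."""
    rng = random.Random(seed)
    contrasts = [interaction_contrast(r) for r in rows]
    observed = abs(sum(contrasts) / len(contrasts))
    hits = 0
    for _ in range(n_perm):
        permuted = [c if rng.random() < 0.5 else -c for c in contrasts]
        if abs(sum(permuted) / len(permuted)) >= observed:
            hits += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return (hits + 1) / (n_perm + 1)

# Hypothetical data: 20 participants whose exemplar advantage shrinks from
# 0.10 in the second segment to 0.00 in the last segment.
rows = [(0.30, 0.20, 0.25, 0.25)] * 20
p_value = permutation_p_value(rows)
```

A negative mean contrast corresponds to the pattern reported for the experimental condition, in which the exemplar model's advantage over the prototype model decreases over time.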

About this article

Cite this article

Devraj, A., Griffiths, T.L. & Zhang, Q. Reconciling categorization and memory via environmental statistics. Psychon Bull Rev (2024). https://doi.org/10.3758/s13423-023-02448-2
