Abstract

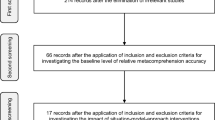

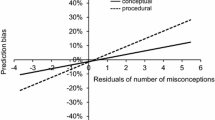

For decades, research on metacomprehension has demonstrated that many learners struggle to accurately discriminate their comprehension of texts. Although reviews of experimental studies on relative metacomprehension accuracy have found average intra-individual correlations between predictions and performance of around .27 for adult readers, even lower, near-zero accuracy levels have been reported in some contexts. One possible explanation for those strikingly low levels of accuracy is high conceptual overlap among the topics of the texts. To test this hypothesis, participants in the present work were randomly assigned to read one of two text sets that differed in their degree of conceptual overlap. Participants judged their understanding and completed an inference test for each topic. Across two studies, mean relative accuracy matched typical baseline levels for the low-overlap text sets and was significantly lower for the high-overlap text sets. The results suggest that text similarity is an important factor affecting comprehension monitoring accuracy and that it may have contributed to the variable and sometimes inconsistent results reported in the metacomprehension literature.

Data availability

The data are available via the Open Science Framework at https://osf.io/2xk6f/. Materials are available upon request from the authors. The experiments were not preregistered.

Code availability

Not applicable.

Notes

Per condition this number includes n = 8 dropped from the high-overlap condition and n = 10 dropped from the low-overlap condition.

Following the computational procedures outlined in Jarosz and Wiley (2014), the data were also examined by estimating a Bayes factor from the Bayesian Information Criterion (BIC; Wagenmakers, 2007), comparing the fit of the data under the null hypothesis and the alternative hypothesis. For JOCs, the estimated Bayes factor (null/alternative) was 10.55:1 in favor of the null hypothesis; that is, the data were at least ten times more likely to occur if there were no difference in average JOCs between the two conditions. For average test scores, the estimated Bayes factor (null/alternative) was 9.22:1 in favor of the null hypothesis; that is, the data were at least nine times more likely to occur if test performance did not differ between the two conditions.
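The BIC approximation referred to in this note can be sketched as follows. This is a minimal illustration of the general approach described by Wagenmakers (2007) for a two-group comparison, not the authors' analysis script; the function names and the example values are hypothetical. It assumes the null model fits one grand mean, the alternative model fits one mean per condition (one extra parameter), and the Bayes factor in favor of the null is approximated as exp(ΔBIC/2), where ΔBIC = n ln(SSE_alt / SSE_null) + ln(n).

```python
import math


def bic_bayes_factor_null(sse_null, sse_alt, n, extra_params=1):
    """Approximate BF01 (null/alternative) from the BIC difference.

    delta_bic = BIC(alternative) - BIC(null)
              = n * ln(SSE_alt / SSE_null) + extra_params * ln(n)
    """
    delta_bic = n * math.log(sse_alt / sse_null) + extra_params * math.log(n)
    return math.exp(delta_bic / 2)


def bf01_two_groups(group_a, group_b):
    """BF01 for a difference in means between two independent groups."""
    combined = list(group_a) + list(group_b)
    n = len(combined)
    grand_mean = sum(combined) / n
    mean_a = sum(group_a) / len(group_a)
    mean_b = sum(group_b) / len(group_b)
    # Null model: a single grand mean for everyone.
    sse_null = sum((x - grand_mean) ** 2 for x in combined)
    # Alternative model: a separate mean for each condition.
    sse_alt = (sum((x - mean_a) ** 2 for x in group_a)
               + sum((x - mean_b) ** 2 for x in group_b))
    return bic_bayes_factor_null(sse_null, sse_alt, n)


# Hypothetical scores, for illustration only.
similar = bf01_two_groups([1, 2, 3], [1, 2, 3])
different = bf01_two_groups([1, 2, 3], [11, 12, 13])
print(similar > 1, different < 1)
```

Values above 1 favor the null hypothesis and values below 1 favor the alternative. Because the alternative model can never fit worse than the null, this approximation caps the support for the null at the square root of n when one extra parameter is tested.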

Pearson scores were highly correlated with relative accuracy computed with Gamma, r = .95, p < .001. When results are analyzed using Gamma correlations, the same pattern emerges: mean relative accuracy was significantly lower for the high-overlap set than for the low-overlap set, t(158) = 2.29, p = .02, d = 0.36.
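For readers unfamiliar with the two measures, relative accuracy is an intra-individual correlation between one participant's judgments and that participant's test scores across texts. The sketch below shows one way to compute it with either Pearson's r or the Goodman-Kruskal Gamma. It is an illustrative assumption rather than the authors' code: the function names, the rating scale, and the example data are hypothetical. Both functions return None when the correlation is undefined, for example when a participant gives the same judgment for every text.

```python
import math
from itertools import combinations


def goodman_kruskal_gamma(judgments, scores):
    """Gamma = (concordant - discordant) / (concordant + discordant).

    Pairs tied on either variable are ignored.
    """
    concordant = discordant = 0
    for (j1, s1), (j2, s2) in combinations(zip(judgments, scores), 2):
        product = (j1 - j2) * (s1 - s2)
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
    if concordant + discordant == 0:
        return None  # undefined, e.g. invariant judgments
    return (concordant - discordant) / (concordant + discordant)


def pearson_r(judgments, scores):
    """Pearson correlation across one participant's texts."""
    n = len(judgments)
    mean_j = sum(judgments) / n
    mean_s = sum(scores) / n
    cov = sum((j - mean_j) * (s - mean_s) for j, s in zip(judgments, scores))
    var_j = sum((j - mean_j) ** 2 for j in judgments)
    var_s = sum((s - mean_s) ** 2 for s in scores)
    if var_j == 0 or var_s == 0:
        return None  # undefined when either variable does not vary
    return cov / math.sqrt(var_j * var_s)


# Hypothetical participant: judgments and test scores for five texts.
judgments = [4, 2, 5, 3, 1]
scores = [3, 2, 4, 4, 1]
print(goodman_kruskal_gamma(judgments, scores), pearson_r(judgments, scores))
```

Gamma uses only the ordering of judgments and scores, whereas Pearson's r also uses their magnitudes, which is one reason analyses are often reported with both.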

The estimated Bayes factor (null/alternative) was 8.39:1 in favor of the null hypothesis of no difference between conditions.

The estimated Bayes factors (null/alternative) were 17.36:1 for absolute error and 10.55:1 for confidence bias, both in favor of the null hypothesis.
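The two absolute-accuracy measures named in this note are not defined in this excerpt. The sketch below uses definitions that are common in the metacognition literature and should be read as an assumption: confidence bias as the mean signed difference between judgment and performance, and absolute error as the mean unsigned difference. The function names and example values are hypothetical, and judgments and scores are assumed to be on the same scale (here, proportion correct).

```python
def confidence_bias(judgments, scores):
    """Mean signed difference; positive values indicate overconfidence."""
    return sum(j - s for j, s in zip(judgments, scores)) / len(judgments)


def absolute_error(judgments, scores):
    """Mean unsigned difference between judgment and performance."""
    return sum(abs(j - s) for j, s in zip(judgments, scores)) / len(judgments)


# Hypothetical participant: predicted and actual proportion correct per text.
judgments = [0.8, 0.6, 0.4]
scores = [0.6, 0.6, 0.6]
print(confidence_bias(judgments, scores), absolute_error(judgments, scores))
```

In this example the over- and underestimates cancel, so bias is near zero while absolute error is not, which illustrates why the two measures are reported separately.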

Of the 13 participants who were dropped due to invariance in judgments, n = 9 were in the high-overlap condition and n = 4 were in the low-overlap condition.

This pattern was the same when relative accuracy was computed using Gamma correlations, t(201) = 3.56, p < .001, d = 0.50.

Average test performance was a significant covariate in the ANCOVA model, F(1, 199) = 5.83, MSE = 0.15, p = .02, η2p = 0.03, but average JOC was not, F(1, 199) = 0.20, MSE = 0.15, p = .71. The results were the same when the ANCOVA was run using Gamma correlations. Mean relative accuracy (Gamma) was significantly lower for the high-overlap set, F(1, 199) = 6.52, MSE = 0.24, p = .01, η2p = 0.03. The covariate for average test performance was F(1, 199) = 3.83, MSE = 0.24, p = .052, η2p = 0.02, and the covariate for JOC was F(1, 199) = 0.18, MSE = 0.24, p = .67. The results were also the same when the relative accuracy measure for the high-overlap condition was computed using the delayed JOCs collected after reading all texts instead of immediate ratings. Mean relative accuracy (Pearson) was significantly lower for the high-overlap set than for the low-overlap set, F(1, 199) = 12.25, MSE = 0.16, p < .001, η2p = 0.06. Average test performance was a significant covariate in the model, F(1, 199) = 4.95, MSE = 0.16, p = .03, η2p = 0.02, but average JOC was not, F(1, 199) = 0.21, MSE = 0.16, p = .65.

Further, the estimated Bayes factor (null/alternative) suggested that the data were at least 13 times more likely to occur if there was indeed no difference in within-participant variation in performance between conditions.

The estimated Bayes factors (null/alternative) were 11.06:1 for absolute error and 8.29:1 for confidence bias, both in favor of the null hypothesis (that is, no difference between conditions).

References

Daffin, L., & Lane, C. (2021). Principles of social psychology (2nd ed.). Washington State University. https://opentext.wsu.edu/social-psychology/

Dunlosky, J., & Lipko, A. R. (2007). Metacomprehension: A brief history and how to improve its accuracy. Current Directions in Psychological Science, 16(4), 228–232. https://doi.org/10.1111/j.1467-8721.2007.00509.x

Dunlosky, J., & Metcalfe, J. (2009). Metacognition. Sage Publications Inc.

Dunlosky, J., & Rawson, K. A. (2012). Overconfidence produces underachievement: Inaccurate self evaluations undermine students’ learning and retention. Learning and Instruction, 22(4), 271–280. https://doi.org/10.1016/j.learninstruc.2011.08.003

Flavell, J. H. (1979). Metacognition and cognitive monitoring: A new area of cognitive–developmental inquiry. American Psychologist, 34(10), 906–911. https://doi.org/10.1037/0003-066X.34.10.906

Fukaya, T. (2010). Factors affecting the accuracy of metacomprehension: A meta-analysis. Japanese Journal of Educational Psychology, 58(2), 236–251. https://doi.org/10.5926/jjep.58.236

Griffin, T. D., Wiley, J., & Thiede, K. W. (2008). Individual differences, rereading, and self-explanation: Concurrent processing and cue validity as constraints on metacomprehension accuracy. Memory & Cognition, 36, 93–103. https://doi.org/10.3758/MC.36.1.93

Griffin, T. D., Jee, B. D., & Wiley, J. (2009). The effects of domain knowledge on metacomprehension accuracy. Memory & Cognition, 37(7), 1001–1013. https://doi.org/10.3758/MC.37.7.1001

Griffin, T. D., Mielicki, M. K., & Wiley, J. (2019). Improving students' metacomprehension accuracy. In J. Dunlosky & K. A. Rawson (Eds.), The Cambridge handbook of cognition and education (pp. 619–646). Cambridge University Press. https://doi.org/10.1017/9781108235631.025

Griffin, T. D., Wiley, J., & Thiede, K. W. (2019). The effects of comprehension-test expectancies on metacomprehension accuracy. Journal of Experimental Psychology: Learning, Memory, and Cognition, 45(6), 1066–1092. https://doi.org/10.1037/xlm0000634

Guerrero, T. A., & Wiley, J. (2018). Effects of text availability and reasoning processes on test performance. Proceedings of the 40th Annual Conference of the Cognitive Science Society (pp. 1745–1750). Cognitive Science Society. https://cogsci.mindmodeling.org/2018/papers/0336/0336.pdf

Guerrero, T. A., Griffin, T. D., & Wiley, J. (2022). I think I was wrong: The effect of making experimental predictions on learning about theories from psychology textbook excerpts. Metacognition and Learning, 17, 337–373. https://doi.org/10.1007/s11409-021-09276-6

Guerrero, T. A., Griffin, T. D., & Wiley, J. (2023). The effect of generating examples on comprehension and metacomprehension. Journal of Experimental Psychology: Applied. Advance online publication. https://doi.org/10.1037/xap0000490

Hildenbrand, L., & Sanchez, C. A. (2022). Metacognitive accuracy across cognitive and physical task domains. Psychonomic Bulletin & Review, 29(4), 1524–1530. https://doi.org/10.3758/s13423-022-02066-4

Jaeger, A. J., & Wiley, J. (2014). Do illustrations help or harm metacomprehension accuracy? Learning and Instruction, 34, 58–73. https://doi.org/10.1016/j.learninstruc.2014.08.002

Jarosz, A. F., & Wiley, J. (2014). What are the odds? A practical guide to computing and reporting Bayes factors. The Journal of Problem Solving, 7(1), 2–9. https://doi.org/10.7771/1932-6246.1167

Kintsch, W. (1994). Text comprehension, memory, and learning. American Psychologist, 49(4), 294–303. https://doi.org/10.1037//0003-066X.49.4.294

Kwon, H., & Linderholm, T. (2014). Effects of self-perception of reading skill on absolute accuracy of metacomprehension judgements. Current Psychology, 33(1), 73–88. https://doi.org/10.1007/s12144-013-9198-x

Landauer, T. K., Foltz, P. W., & Laham, D. (1998). An introduction to latent semantic analysis. Discourse Processes, 25(2–3), 259–284. https://doi.org/10.1080/01638539809545028

Maki, R. H. (1998a). Predicting performance on text: Delayed versus immediate predictions and tests. Memory & Cognition, 26(5), 959–964. https://doi.org/10.3758/BF03201176

Maki, R. H. (1998b). Test predictions over text material. In D. J. Hacker, J. Dunlosky, & A. C. Graesser (Eds.), Metacognition in educational theory and practice (pp. 117–144). Lawrence Erlbaum.

Maki, R. H., & McGuire, M. J. (2002). Metacognition for text: Findings and implications for education. In T. J. Perfect & B. L. Schwartz (Eds.), Applied metacognition (pp. 39–67). Cambridge University Press. https://doi.org/10.1017/CBO9780511489976.004

Maki, R. H., Jonas, D., & Kallod, M. (1994). The relationship between comprehension and metacomprehension ability. Psychonomic Bulletin & Review, 1, 126–129. https://doi.org/10.3758/BF03200769

Nelson, T. O. (1984). A comparison of current measures of the accuracy of feeling-of-knowing predictions. Psychological Bulletin, 95(1), 109–133. https://doi.org/10.1037/0033-2909.95.1.109

Nelson, T. O., & Dunlosky, J. (1991). When people's judgments of learning (JOLs) are extremely accurate at predicting subsequent recall: The “delayed-JOL effect.” Psychological Science, 2(4), 267–271. https://doi.org/10.1111/j.1467-9280.1991.tb00147.x

Ozuru, Y., Kurby, C. A., & McNamara, D. S. (2012). The effect of metacomprehension judgment task on comprehension monitoring and metacognitive accuracy. Metacognition and Learning, 7(2), 113–131. https://doi.org/10.1007/s11409-012-9087-y

Prinz, A., Golke, S., & Wittwer, J. (2020). How accurately can learners discriminate their comprehension of texts? A comprehensive meta-analysis on relative metacomprehension accuracy and influencing factors. Educational Research Review, 31, 100358. https://doi.org/10.1016/j.edurev.2020.100358

Rawson, K. A., Dunlosky, J., & Thiede, K. W. (2000). The rereading effect: Metacomprehension accuracy improves across reading trials. Memory & Cognition, 28(6), 1004–1010. https://doi.org/10.3758/BF03209348

Rhodes, M. G., & Tauber, S. K. (2011). The influence of delaying judgments of learning on metacognitive accuracy: A meta-analytic review. Psychological Bulletin, 137(1), 131–148. https://doi.org/10.1037/a0021705

Shiu, L. P., & Chen, Q. S. (2013). Self and external monitoring of reading comprehension. Journal of Educational Psychology, 105, 78–88. https://doi.org/10.1037/a0029378

Thiede, K. W., & Anderson, M. C. M. (2003). Summarizing can improve metacomprehension accuracy. Contemporary Educational Psychology, 28(2), 129–160. https://doi.org/10.1016/S0361-476X(02)00011-5

Thiede, K. W., Anderson, M., & Therriault, D. (2003). Accuracy of metacognitive monitoring affects learning of texts. Journal of Educational Psychology, 95(1), 66–73. https://doi.org/10.1037/0022-0663.95.1.66

Thiede, K. W., Griffin, T. D., Wiley, J., & Redford, J. (2009). Metacognitive monitoring during and after reading. In D. J. Hacker, J. Dunlosky, & A. C. Graesser (Eds.), Handbook of metacognition in education (pp. 85–106). Routledge.

Thiede, K. W., Wiley, J., & Griffin, T. D. (2011). Test expectancy affects metacomprehension accuracy. British Journal of Educational Psychology, 81(2), 264–273. https://doi.org/10.1348/135910710X510494

Wagenmakers, E. J. (2007). A practical solution to the pervasive problems of p values. Psychonomic Bulletin & Review, 14(5), 779–804. https://doi.org/10.3758/BF03194105

Waldeyer, J., & Roelle, J. (2020). The keyword effect: A conceptual replication, effects on bias, and an optimization. Metacognition and Learning, 16, 37–56. https://doi.org/10.1007/s11409-020-09235-7

Wiley, J., Griffin, T. D., & Thiede, K. W. (2005). Putting the comprehension in metacomprehension. Journal of General Psychology, 132, 408–428. https://doi.org/10.3200/GENP.132.4.408-428

Wiley, J., Griffin, T. D., Jaeger, A. J., Jarosz, A. F., Cushen, P. J., & Thiede, K. W. (2016). Improving metacomprehension accuracy in an undergraduate course context. Journal of Experimental Psychology: Applied, 22, 393–405. https://doi.org/10.1037/xap0000096

Wiley, J., Thiede, K. W., & Griffin, T. D. (2016). Improving metacomprehension with the situation model approach. In K. Mokhtari (Ed.), Improving reading comprehension through metacognitive reading instruction for first and second language readers (pp. 93–110). Rowman & Littlefield. https://doi.org/10.1037/xap0000096

Yang, C., Zhao, W., Yuan, B., Luo, L., & Shanks, D. R. (2023). Mind the gap between comprehension and metacomprehension: Meta-analysis of metacomprehension accuracy and intervention effectiveness. Review of Educational Research, 93(2), 143–194. https://doi.org/10.3102/00346543221094083

Zhao, Q., & Linderholm, T. (2011). Anchoring effects on prospective and retrospective metacomprehension judgments as a function of peer performance information. Metacognition and Learning, 6(1), 25–43. https://doi.org/10.1007/s11409-010-9065-1

Acknowledgements

The authors thank Tim George, Tricia A. Guerrero, and Marta K. Mielicki for their contributions and Lamorej Roberts for assistance in data collection.

Funding

This work was supported by the Institute of Education Sciences, US Department of Education under Grant R305A160008, and by the National Science Foundation (NSF) under DUE grant 1535299, to Thomas D. Griffin and Jennifer Wiley at the University of Illinois at Chicago. The opinions expressed are those of the authors and do not represent views of the Institute, the US Department of Education, or the National Science Foundation.

Ethics declarations

Conflicts of interest/Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Ethics approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. The study was approved by the Institutional Review Board of the University of Illinois at Chicago (Protocol # 2000-0676).

Consent to participate

Informed consent was obtained from all individual participants included in the study.

Consent for publication

Not applicable.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hildenbrand, L., Sarmento, D., Griffin, T.D. et al. Conceptual overlap among texts impedes comprehension monitoring. Psychon Bull Rev 31, 750–760 (2024). https://doi.org/10.3758/s13423-023-02349-4

DOI: https://doi.org/10.3758/s13423-023-02349-4