Abstract

Diffusion models of decision making, in which successive samples of noisy evidence are accumulated to decision criteria, provide a theoretical solution to von Neumann’s (1956) problem of how to increase the reliability of neural computation in the presence of noise. I introduce and evaluate a new neurally-inspired dual diffusion model, the linear drift, linear infinitesimal variance (LDLIV) model, which embodies three features often thought to characterize neural mechanisms of decision making. The accumulating evidence is intrinsically positively-valued, saturates at high intensities, and is accumulated for each alternative separately. I present explicit integral-equation predictions for the response time distribution and choice probabilities for the LDLIV model and compare its performance on two benchmark sets of data to three other models: the standard diffusion model and two dual diffusion models composed of racing Wiener processes, one between absorbing and reflecting boundaries and one with absorbing boundaries only. The LDLIV model and the standard diffusion model performed similarly to one another, although the standard diffusion model is more parsimonious, and both performed appreciably better than the other two dual diffusion models. I argue that accumulation of noisy evidence by a diffusion process and drift rate variability are both expressions of how the cognitive system solves von Neumann’s problem, by aggregating noisy representations over time and over elements of a neural population. I also argue that models that do not solve von Neumann’s problem do not address the main theoretical question that historically motivated research in this area.

In 1956, the great applied mathematician John von Neumann published an article based on a set of lectures given at Caltech entitled “Probabilistic logics and the synthesis of reliable organisms from unreliable components” (Von Neumann, 1956). In it, he considered the effects of probabilistic variation on the fidelity with which neurons in the brain and central nervous system perform the computational task of translating perception into action. Von Neumann identified such variation, which he associated with errors in information transmission in the central nervous system, as the principal obstacle to reliable biological computation. His solution to the reliability problem was aggregation or multiplexing: A signal carried by a single neuron might be in error, but a signal carried by a bundle of neurons was more likely to be correct than not, and the bulk of his article focused on how to characterize and bound the error in automata composed of bundles of binary Pitts-McCulloch neurons (McCulloch & Pitts, 1943).

Von Neumann conceptualized neural computation in terms of elementary logic functions rather than as operations on analogue representations, but the theoretical problem he identified and his proposed solution to it remain at the heart of contemporary cognitive psychology and the way we theorize about cognitive processes and try to model them. My aim in this article is to present a new diffusion model of two-choice decision making, the linear-drift, linear infinitesimal variance (LDLIV) model, which I argue provides a more complete and satisfying solution to von Neumann’s problem than do previous models of this kind. The model has many features in common with other diffusion decision models, but its particular theoretical interest is that its architecture and process assumptions correspond more closely to what we would expect from a cognitive process synthesized from and implemented by elementary neural units.

I have used von Neumann’s problem as a focal point for this article because my larger theoretical aim, along with presenting and evaluating the new model, is to distinguish models that seek to provide a solution to von Neumann’s problem from those that do not. Most of the published work on decision models during the past few decades has focused on questions of comparative model fit and predictive adequacy rather than on the more fundamental theoretical questions of exactly what a model explains and how it explains it. By “how a model explains something” I mean the process assumptions or explanatory constructs from which its predictions are derived. In the “Discussion” section I argue that a model’s ability to provide a theoretical solution to von Neumann’s problem should be the primary criterion for judging a model’s adequacy. I have sought to situate the new model in this larger context because I believe the theoretical content of a model is at least as important as its ability to fit data and my aim is to try to restore questions of the former kind to the center of the debate.

Cognition as probabilistic neural computation

The idea of probabilistic variation in cognitive representations discussed by von Neumann was not a new one, but was discovered in psychology nearly 100 years earlier by Fechner, who found there was trial-to-trial variability in whether pairs of similar stimuli were judged to be the same or different (Fechner, 1860). The work of understanding the implications of Fechner’s discovery continued into the twentieth century and led to Thurstone’s law of comparative judgment (Thurstone, 1927) and signal detection theory (Green & Swets, 1966). But even before these developments, Fechner in 1860 already had a well-developed theory of decision making based on Gauss’s 1809 theory of errors (Sheynin, 1979), which substantially foreshadowed the modern theory (Link, 1994; Wixted, 2020). In Gauss’s theory, a measurement based on an aggregation of elements, each independently subject to error, will be normally distributed. Fechner attributed the variability in same-different decisions to aggregated measurement errors of this kind. His insight was thus much the same as von Neumann’s 100 years later: Variability in cognitive representations is a reflection of aggregation over similar elements that are independently subject to error. It follows that reliability can be improved by increasing the number of elements that compose the representation, as von Neumann proposed. The idea that cognitive representations are normally distributed, which Link (1994) attributes to Fechner, was carried over into the law of comparative judgment and signal detection theory (Luce, 1994), both of which, in their most common forms, assume normally-distributed representations.

As important as signal detection theory is to mainstream psychology, there is an important dimension missing from its account, which is time. If we assume, following Fechner and von Neumann, that variability in cognitive representations reflects noise or error in the neural elements that compose them, then we would expect this variability to be present not only on the time scale of behavioral decisions but on the time scale of neural events as well — that is, from moment to moment as well as from decision to decision. Long before it was possible to record decision-related neural activity from single cells in awake behaving monkeys, multiple-look psychophysical experiments performed in the early days of signal detection theory showed that discrimination accuracy improved in proportion to the square root of the number of times a brief stimulus was presented (Swets, Shipley, McKey, & Green, 1959). A square-root law improvement is predicted if the decision is based on the sum of successive, independent, noisy representations of the stimulus. That this is so suggests the brain solves von Neumann’s problem in time in the same way as it solves it in space: by aggregation. Von Neumann’s solution — which is also the statistician’s solution — is to improve reliability by aggregating across noisy elements that code the same sensory quantity. The results of Swets et al. further suggest that (a) those elements are noisy or variable in time, and (b) the brain improves reliability by aggregating, or summing noisy representations over time.
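The square-root law follows from a short calculation, sketched here under the simplest assumptions. The symbols \(n\), \(\mu\), \(\sigma\), \(S_n\), and \(d'_n\) are introduced only for illustration: each of \(n\) looks is assumed to yield an independent sample whose mean differs by \(\mu\) between the two stimulus classes and whose standard deviation is \(\sigma\), and the decision is assumed to be based on the sum \(S_n\) of the samples.

\[
E[S_n] = n\mu, \qquad \mathrm{sd}[S_n] = \sqrt{n}\,\sigma, \qquad d'_n = \frac{n\mu}{\sqrt{n}\,\sigma} = \sqrt{n}\,\frac{\mu}{\sigma} = \sqrt{n}\,d'_1 .
\]

The mean of the sum grows in proportion to \(n\) but its standard deviation grows only as \(\sqrt{n}\), so discriminability improves as the square root of the number of looks.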

These ideas were developed systematically during the 1960s and 1970s in psychology in the class of sequential-sampling decision models by authors such as Stone (1960), La Berge (1962), Audley & Pike (1965), Edwards (1965), Laming (1968), Link & Heath (1975), Vickers (1970, 1979), and Ratcliff (1978). These models were inspired, in part, by the sequential statistics of Abraham Wald (1947) and, while they differed from one another in points of detail (Ratcliff & Smith, 2004; Smith & Ratcliff, 2015), they shared the common assumptions that evidence entering the decision process is perturbed by noise or error on a shorter time scale than that of the individual decision and that the decision is made by aggregating or accumulating independent samples of evidence over time.

Neurally-principled models of decision making

The classical sequential-sampling models were derived from theoretical principles and the best of them successfully accounted for choice probabilities (accuracy) and response times (RT) from behavioral experiments before it was possible to make recordings of decision-related neural activity in awake, behaving animals. When such recordings became possible technically (Roitman & Shadlen, 2002; Thompson, Hanes, Bichot, & Schall, 1996), the picture they provided was in striking agreement with the account proposed by the models. Recordings from the oculomotor cortex of monkeys performing eye-movement decision tasks typically show: (a) moment-to-moment statistical variation, reminiscent of von Neumann’s “unreliable components;” (b) a progressive increase in firing rates with the time elapsing from stimulus onset, and (c) responses that are time-locked to the point at which the firing rate reaches a threshold or criterion level (Hanes & Schall, 1996). Hanes and Schall argued that these properties are consistent with the idea that the neurons implement a process of evidence accumulation like that proposed by sequential-sampling models. In the intervening years the picture has become increasingly clear and has provided further support for their original insight (Forstmann, Ratcliff, & Wagenmakers, 2016; Smith & Ratcliff, 2004).

As researchers have continued to probe the relationship between decision processes and their neural implementation, there has been a corresponding impetus to develop models that are more neurally realistic or more deeply grounded in neurocomputational principles. “Neural realism” is, of course, a relative term, as neural processes can be modeled at different levels of resolution and what is realistic at one level is an oversimplification at another (Gerstner & Kistler, 2002; Tuckwell, 1988), but, in practice, questions of neural realism have tended to focus either on the decision architecture or the decision process. By “architecture” I mean the assumptions made about how evidence for different decision alternatives is represented. By “process” I mean the stochastic process that is used to represent the accumulating evidence. The debate about architecture has mainly been about whether the evidence for different alternatives is represented by a single stochastic process or whether each alternative is represented by its own evidence state or process. The debate about process has been about whether evidence is accumulated in discrete time or continuously and whether it is continuously distributed or comes in discrete chunks (Ratcliff & Smith, 2004; Smith & Ratcliff, 2015). Most neurocomputational studies have assumed diffusion processes of some kind — that is, continuous-time, continuous-state Markov processes (Rogers & Williams, 2000) – and I likewise focus on diffusion models.

The emphasis on diffusion processes is a natural one theoretically, because diffusion representations can be derived from either bottom-up or top-down approaches to characterizing evidence accumulation neurocomputationally. Examples of the bottom-up approach are the Poisson shot-noise models of Smith (2010) and Smith and McKenzie (2011), in which a diffusion process is obtained as the limit of processes that represent the flux in postsynaptic potentials induced by a sequence of action potentials. Examples of the top-down approach are the diffusion approximations to the mean-field dynamics of the Ising decision maker of Verdonck and Tuerlinckx (2014) and the spiking neuron model of Wang (2001, 2002), and Wong & Wang (2006). The Ising decision maker is a recurrent network of Pitts-McCulloch neurons like those analyzed by Von Neumann (1956) whereas the Wang model is a recurrent network of spiking neurons. In both models the decision process is represented as a process of energy minimization in which the stable, or attractor, states of the system correspond to decision states. The properties of recurrent models can be characterized using mean-field approximations, in which the average firing rate of neurons in a network is derived from an analysis of its global dynamics (Gerstner and Kistler, 2002). Verdonck and Tuerlinckx (2014) and Roxin and Ledberg (2008) derived diffusion approximations to the mean-field equations for the Ising decision maker and the Wang model, respectively, using central-limit theorem arguments, which assumed the number of participating neurons is large (Fechner’s assumption).

To date, the most widely and successfully applied model of two-choice decisions is the diffusion decision model (Ratcliff, 1978; Ratcliff & McKoon, 2008), which represents evidence accumulation as a Wiener, or Brownian motion, process. The Wiener process is the unique member of the class of diffusion processes that is also an independent-increments process, that is, in which each new increment to the process is statistically independent of those preceding it. One of the most straightforward ways to derive the Wiener process mathematically is as the continuous-time limit of a simple random walk (Feller, 1968; Ratcliff, 1978), which is a Markov process in which the increments to the process are either +1 or −1. Neurocomputationally, the simple random walk can be thought of as arising from the difference of two processes, each composed of binary Pitts-McCulloch neurons like those analyzed by Von Neumann (1956) and assumed by Verdonck and Tuerlinckx (2014), which individually are either firing (+1) or silent (0) at any instant. The two component processes are assumed to represent the evidence for the two decision alternatives. As a model of decision making, the Wiener process has the attractive property that it implements a continuous-time version of Wald’s (1947) sequential probability ratio test (Bogacz, Brown, Moehlis, Holmes, & Cohen, 2006), which guarantees a form of statistical optimality in homogeneous environments, in which decisions are all of the same level of difficulty.
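The random-walk limit can be illustrated numerically. The following is a minimal sketch, not a construction taken from the article: the function name `scaled_random_walk`, the parameter names, and the particular scaling (step size \(\sigma\sqrt{\Delta t}\), with the up-probability tilted to produce a drift of \(\mu\)) are assumptions chosen for illustration. As the number of steps grows, the endpoint should have mean close to \(\mu T\) and variance close to \(\sigma^2 T\), as for a Wiener process.

```python
import numpy as np

def scaled_random_walk(mu, sigma, T, n_steps, n_paths, rng):
    """Endpoints at time T of a simple random walk scaled toward a Wiener process.

    Each step is +h or -h with h = sigma * sqrt(dt). The probability of an
    upward step is tilted away from 1/2 so that the expected displacement
    per unit time is mu (hypothetical parameterization, for illustration).
    """
    dt = T / n_steps
    h = sigma * np.sqrt(dt)
    p_up = 0.5 * (1.0 + mu * np.sqrt(dt) / sigma)
    # The number of upward steps is binomial; the endpoint is h * (ups - downs).
    n_up = rng.binomial(n_steps, p_up, size=n_paths)
    return h * (2 * n_up - n_steps)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = scaled_random_walk(mu=0.5, sigma=1.0, T=2.0, n_steps=10_000,
                           n_paths=20_000, rng=rng)
    print("mean:", x.mean(), "variance:", x.var())
```

With these settings the sample mean and variance should lie close to \(\mu T = 1\) and \(\sigma^2 T = 2\), up to sampling error.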

Despite the success of the diffusion model it has sometimes been seen as lacking neural realism. Its derivation, as the limit of a simple random walk implemented by populations of Pitts-McCulloch neurons, can be criticized on the grounds that such neurons radically simplify the properties of real neurons, although this has not commonly been identified as a serious problem. Indeed, most other models that propose diffusion process representations of evidence accumulation have made similar simplifying assumptions (Roxin & Ledberg, 2008; Verdonck & Tuerlinckx, 2014). In contrast, the shot-noise models of Smith (2010) and Smith and McKenzie (2011), although they also abstract away from the properties of real neurons, derive diffusion process representations from the flux of postsynaptic potentials induced by a train of action potentials. Neurally-motivated critiques of the diffusion model have tended to focus either on the model architecture or the use of the Wiener process — as opposed to some other process — to represent evidence accumulation. Three features of the neural processes involved in decision making not represented in the diffusion model have led to the claim it lacks neural realism:

1. Neural firing rates typically increase in cells responding to both selected and unselected decision alternatives, suggesting that evidence for competing alternatives is represented anatomically by separate processes rather than by a single process.

2. Neural firing rates saturate at high stimulus intensities, so any diffusion process model of evidence accumulation represented by firing rates should similarly be bounded.

3. Neural firing rates are never negative, so any diffusion process model of the evidence represented by them should similarly be nonnegative.

Some researchers have investigated alternatives to the single-process Wiener model that align more closely with these principles, although it remains the benchmark model that any alternative model must equal in order to be credible and, indeed, the importance of the principles themselves can be debated (see the “Discussion” section). Gold and Shadlen (2001), for example, in defense of single-process models, argued that a log-likelihood-ratio evidence accumulator can be obtained by taking the difference between members of pairs of mirror-image units they called “neurons and antineurons,” whose activities individually are nonnegative. That being so, the requirement that neural evidence accumulation processes must be nonnegative arguably loses some of its force. However, the anatomical locus for the hypothetical signed difference process has not been identified and it is also the case that evidence for competing decision alternatives is represented separately as far downstream as the superior colliculus, which is the last neural structure before the response in eye-movement decision tasks. The existence of these anatomically late representations adds to the plausibility of the separate accumulators account.

Three main alternatives to the process and architecture assumptions of the diffusion model have been investigated:

1. Independent accumulators. Several researchers have investigated models in which evidence for competing alternatives is modeled by racing diffusion processes (Logan, Van Zandt, Verbruggen, & Wagenmakers, 2014; Ratcliff & Smith, 2004; Ratcliff, Hasegawa, Hasegawa, Smith, & Segraves, 2007; Smith & Ratcliff, 2009; Tillman, Van Zandt, & Logan, 2020). Ratcliff et al. (2007) and Smith and Ratcliff (2009) called these models dual diffusion models, which is the terminology I adopt here. Other accumulator model architectures, like the simple accumulator or recruitment model (Audley & Pike, 1965; La Berge, 1962), the Poisson counter model (Smith & Van Zandt, 2000; Townsend & Ashby, 1983), and the Vickers accumulator (Smith & Vickers, 1988; Vickers, 1970; 1979), give poorer RT distribution predictions than models based on racing diffusion processes (Ratcliff & Smith, 2004). Usher and McClelland (2001) proposed a model with mutual inhibition between the accumulators on grounds of neural plausibility and some researchers have reported that these kinds of models give a better fit to data (Ditterich, 2006; Kirkpatrick, Turner, & Sederberg, 2021). Others have found that models with independent accumulators give good accounts of data (Ratcliff & Smith, 2004; Smith & Ratcliff, 2009; Tillman et al., 2020), including neural data. Ratcliff et al. (2007) found that a dual-diffusion model predicted choice probabilities, RT distributions, and neural firing rates in the superior colliculi of monkeys performing an eye-movement decision task. There was no evidence of suppression in the firing rates in cells associated with the non-chosen alternative at the time of the response, as predicted by mutual-inhibition models. The advantage of models with independent accumulators is that they are tractable analytically whereas models with interactive accumulators are not.

2. Bounded evidence accumulation. Instead of modeling evidence accumulation using the Wiener process, some researchers have modeled it using the Ornstein-Uhlenbeck (OU) process, which is a diffusion process with leakage or decay (Busemeyer and Townsend, 1992; 1993; Diederich, 1995; Smith, 1995; Smith & Ratcliff, 2009; Smith, Ratcliff, & Sewell, 2014; Usher & McClelland, 2001). Unlike the Wiener process, the OU process has a stationary distribution: at long times, evidence in the OU process has a Gaussian distribution with constant mean and variance (Karlin & Taylor, 1981; Smith, 2000). The stationarity property is a diffusion counterpart of the saturation property of neural firing rates. Although the OU process has sometimes been proposed on grounds of neural realism, whether it improves a model’s ability to account for data depends on its other assumptions. Ratcliff and Smith (2004) found that an OU process in a two-boundary, single-process architecture performed no better than a Wiener process and when the OU decay parameter was free to vary it went to zero, which is the Wiener process. However, Smith and Ratcliff (2009) found that in a dual-diffusion architecture a process with decay, that is, an OU process, performed better.

3. Reflecting boundaries. The state, or evidence, space of the Wiener and OU processes is the entire real line, \(\mathbb {R}\), which is inconsistent with the use of these processes to model neural firing rates, which can never be negative. The simplest way to constrain a process to the positive half line, \(\mathbb {R}^{+}\), is with a reflecting boundary. When a process reaches such a boundary it is reflected back into the state space and continues accumulating (Karlin & Taylor, 1981). Models with reflecting boundaries have been proposed by Diederich (1995), Smith and Ratcliff (2009), and Usher and McClelland (2001) (see Footnote 1). Apart from considerations of neural plausibility, in the Usher and McClelland model the reflecting boundary is needed to stabilize its dynamics. Without it, if the evidence in one of the accumulators goes negative then the mutual inhibition between them becomes mutual excitation and the model becomes unstable.
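The second and third alternatives above can be illustrated with a small simulation. This is a sketch under stated assumptions, not code from any of the cited studies: the function names, the parameterization of the OU process as \(dX = (\mu - kX)\,dt + s\,dW\) with decay rate \(k > 0\), the Euler-Maruyama time stepping, and the fold-back rule used for reflection are all choices made here for illustration. Under this parameterization the OU process settles to a Gaussian distribution with mean \(\mu/k\) and variance \(s^2/(2k)\), whereas the reflected Wiener process never falls below the reflecting boundary.

```python
import numpy as np

def simulate_ou(mu, k, s, T, dt, n_paths, rng, x0=0.0):
    """Euler-Maruyama endpoints of the OU process dX = (mu - k X) dt + s dW."""
    x = np.full(n_paths, x0, dtype=float)
    for _ in range(int(round(T / dt))):
        x += (mu - k * x) * dt + s * np.sqrt(dt) * rng.standard_normal(n_paths)
    return x

def simulate_reflected_wiener(mu, s, T, dt, n_paths, rng, x0=0.0, r=0.0):
    """Wiener process with drift mu, reflected at r by folding excursions back."""
    x = np.full(n_paths, x0, dtype=float)
    running_min = x.copy()
    for _ in range(int(round(T / dt))):
        x = x + mu * dt + s * np.sqrt(dt) * rng.standard_normal(n_paths)
        x = r + np.abs(x - r)  # reflect any excursion below r back above it
        running_min = np.minimum(running_min, x)
    return x, running_min

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    ou = simulate_ou(mu=1.0, k=2.0, s=1.0, T=5.0, dt=0.01, n_paths=20_000, rng=rng)
    print("OU mean, variance:", ou.mean(), ou.var())  # compare with 0.5 and 0.25
    x, lowest = simulate_reflected_wiener(-0.5, 1.0, 2.0, 0.01, 2_000, rng)
    print("lowest reflected state:", lowest.min())
```

The OU variance stops growing because of the decay term, which is the sense in which leakage bounds accumulation; the reflecting boundary instead constrains the state space directly.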

The linear drift, linear infinitesimal variance model

In this article, I present and evaluate a new diffusion model, the LDLIV model, which embodies the three principles above. The model is shown in Fig. 1, along with the standard diffusion model and two related models that are evaluated below, with which it may be contrasted. Specifically, the LDLIV model assumes that: (a) evidence for competing alternatives is accumulated by independent processes; (b) the processes are bounded; and (c) the processes are nonnegative. An attractive feature of the LDLIV process that recommends it as a model of decision-making is that its dynamics naturally constrain it to the positive half line, \(\mathbb {R}^{+}\), without artificial or externally-imposed bounds. This contrasts with the dual diffusion models of Ratcliff et al. (2007) and Smith and Ratcliff (2009), the leaky competing accumulator model of Usher and McClelland (2001), and the model of simple RT of Diederich (1995), in which the evidence processes are constrained by reflecting boundaries. Although the reflecting boundary endows these models with the right properties mathematically, it can be objected that the boundary is not a part of the process itself and lacks any clear neural interpretation. In contrast, the LDLIV process lives naturally, in the mathematical sense of the word, on \(\mathbb {R}^{+}\).

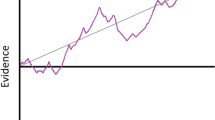

Fig. 1 Sequential-sampling decision models. (a) Single-process Wiener diffusion model (DIFF). Starting at \(z\), the process accumulates evidence between decision criteria at 0 and \(a\). There is variability in the drift rate, \(\xi\), with standard deviation \(\eta\); in the starting point, \(z\), with range \(s_z\); and in the nondecision time, \(T_{er}\), with range \(s_t\). (b) Dual diffusion models. Evidence is accumulated by racing diffusion processes starting at \(z\) with decision criteria at \(a_1\) and \(a_2\). In the linear drift, linear infinitesimal variance model (LDLIV), zero is a natural boundary that constrains the processes to the positive real line \(\mathbb {R}^{+}\), and evidence accumulates with rate \(q\) and decays with rate \(-p\). In the racing, reflecting Wiener model (WIEN) the processes are constrained below by reflecting boundaries at \(r\) and evidence accumulates with rate \(\mu\) with no decay. The racing Walds model (WLD) is unbounded below. The LDLIV and WIEN models have across-trial variability in drift rate, starting point, and nondecision time; the WLD model has only across-trial variability in starting point and nondecision time.

Another attractive property of the LDLIV process is that it becomes progressively more noisy, or variable, as the activation state increases. This property captures the general tendency for neural firing rates to become more variable as their means increase (Rieke et al., 1997). Model neurons typically embody this property as a basic design feature (Gerstner and Kistler, 2002, p. 177, Tuckwell, 1988, p. 197). The Wiener process in the standard diffusion model becomes more variable with the passage of time, but the change in variability (diffusion rate) is independent of the change in the mean (drift rate). In contrast, in the LDLIV process, the change in variability depends on the current state. This property seems to better capture the dynamics of noisy evidence growth in neural populations than does the assumption that drift and diffusion rates are independent of each other. A third attractive feature of the LDLIV model is that the distribution of evidence states in the process is positively skewed, as we would expect a diffusion approximation to neural firing rate distributions to be. This contrasts to the Wiener and OU processes, both of which predict normally distributed evidence states. The theoretical interest of the LDLIV process for decision modelers comes from the fact that it embodies the three design principles stated above and also that it is possible to derive explicit mathematical predictions for it using integral equation methods similar to those described by Smith (2000) and Smith and Ratcliff (2022). The methodological contribution of this article is to introduce an explicit integral equation representation of the first-passage time distribution for the LDLIV process and to use it to fit data.

I compare the LDLIV model to two dual-diffusion models that relax one or more of the three principles. One is a model composed of racing Wiener diffusion processes between absorbing and reflecting boundaries. This model embodies Principles 1 and 3 but relaxes Principle 2 (boundedness). I also obtain explicit mathematical predictions for this model using integral equations. The other model, which also relaxes Principle 3 (positivity), is composed of racing unconstrained Wiener diffusion processes. This model is attractive because of its conceptual and mathematical simplicity and was recently advocated as an alternative to the standard diffusion model by Tillman et al. (2020). I compare these models based on independent racing processes to the standard diffusion model.

Mathematically, a diffusion process is defined by specifying a pair of functions or coefficients: the drift rate and the diffusion rate (or diffusion coefficient), which are referred to as the infinitesimal moments of the process. The drift rate characterizes the expected change in the process in a unit interval and the diffusion rate characterizes the change in its variance (Bhattacharya & Waymire, 1990, Ch. 7; Cox & Miller, 1965, Ch. 5; Karlin & Taylor, 1981, Ch. 15). The square root of the diffusion rate is the infinitesimal standard deviation. In the most general diffusion process, the drift and diffusion rate may depend both on time, \(t\), and the position of the process in the evidence space, \(x\). We can write a stochastic differential equation (SDE) for such a process, \(X_t\), which specifies the random change in the process during a small time interval, \(dt\), as follows

\[
dX_t = A(X_t, t)\,dt + \sqrt{B(X_t, t)}\,dW_t, \tag{1}
\]

where \(A(x, t)\) and \(B(x, t)\) are, respectively, the drift and diffusion rates, and \(dW_t\) is a zero-mean Gaussian increment whose standard deviation in a small interval of duration \(\Delta t\) is of the order \(\sqrt {\Delta t}\). An alternative way to characterize such processes is via partial differential equations (the so-called Kolmogorov backward and forward equations; see Footnote 2). For first-passage time problems, which characterize the time required to pass through an absorbing boundary, and which arise in relation to RT modeling, the relevant equation is the backward equation (Ratcliff, 1978). The LDLIV process (Feller, 1951) satisfies the SDE

\[
dX_t = (pX_t + q)\,dt + \sqrt{2\sigma X_t}\,dW_t, \tag{2}
\]

with drift rate A(x, t) = px + q and diffusion rate B(x, t) = 2σx. The LDLIV process has a linear drift rate, like the OU process. When the state-dependent coefficient of the drift rate, p, is negative, it acts as a decay or leakage term, which tends to pull the process back to zero at a rate proportional to its current state, x. Like the OU process, leakage bounds the evidence accumulation process, consistent with Principle 2.

Where the LDLIV process differs from the OU process is in the diffusion rate. The OU process has a constant diffusion rate, which means it can take on both positive and negative values, like the Wiener process. In contrast, the diffusion rate of the LDLIV process is proportional to x, which constrains the process to the positive real line, consistent with Principle 3.
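The behavior of a process with drift rate \(px + q\) and diffusion rate \(2\sigma x\) can be explored by simulation. The following is a minimal sketch and not the method used in this article, which is based on integral equations: the function name `simulate_ldliv`, the Euler-Maruyama time stepping, and the clipping of the state at zero are assumptions made for illustration. The clipping is a numerical device only; it is needed because a discrete-time approximation can overshoot below zero even though the continuous process cannot. For \(p < 0\), the standard result for square-root processes of this form, as I recall it, is a gamma stationary distribution with mean \(-q/p\) and variance \(q\sigma/p^2\), which the simulation can be checked against.

```python
import numpy as np

def simulate_ldliv(p, q, sigma, x0, T, dt, n_paths, rng):
    """Euler-Maruyama endpoints of dX = (p X + q) dt + sqrt(2 sigma X) dW.

    The state is clipped at zero before it enters the drift and diffusion
    terms (an assumed numerical safeguard, not part of the process itself).
    """
    x = np.full(n_paths, x0, dtype=float)
    for _ in range(int(round(T / dt))):
        xp = np.maximum(x, 0.0)
        noise = rng.standard_normal(n_paths)
        x = x + (p * xp + q) * dt + np.sqrt(2.0 * sigma * xp * dt) * noise
    return np.maximum(x, 0.0)

if __name__ == "__main__":
    rng = np.random.default_rng(2)
    p, q, sigma = -2.0, 4.0, 0.5
    x = simulate_ldliv(p, q, sigma, x0=0.5, T=5.0, dt=0.005, n_paths=5_000, rng=rng)
    skew = np.mean((x - x.mean()) ** 3) / x.std() ** 3
    print("mean:", x.mean(), "expected:", -q / p)
    print("variance:", x.var(), "expected:", q * sigma / p**2)
    print("skewness:", skew)
```

The noise term scales with the square root of the current state, so variability grows with the level of activation, and the simulated states are nonnegative and positively skewed.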

In the remainder of this article, I first present explicit integral-equation expressions for the response time and accuracy predictions for the LDLIV model and the Wiener model between absorbing and reflecting boundaries. Predictions for the other two models are available in the literature. I then describe applications of the models to data from two experiments. One is from a numerosity discrimination study by Ratcliff (2008), which crossed stimulus discriminability with a speed-accuracy manipulation. The other is from an attention-cuing study using sinusoidal grating stimuli by Smith, Ratcliff, and Wolfgang (2004). These two studies were used recently by Smith and Ratcliff (2022) as benchmarks to compare fixed-boundary, collapsing-boundary, and urgency-gating models and allowed them to distinguish between competing models. Both studies had multiple levels of stimulus discriminability (four in the Ratcliff study and five in the Smith et al. study) crossed with other experimental manipulations that produced different, and highly constrained, patterns of correct and error RTs, which are important in model comparison.

Many recent studies of decision making in psychology have followed single-cell recording studies of motion perception in neuroscience and have investigated decisions about coherent motion in random dot kinematograms (Dutilh et al., 2019). Indeed, the random dot motion task is now sometimes seen as being the prototype of a decision-making task. Smith and Lilburn (2020) questioned this characterization and argued that, in important respects, the task may be quite atypical. They reviewed evidence from psychophysical temporal integration studies suggesting it requires an unusually long period of perceptual integration to form a representation of the motion signal. Consistent with the psychophysics, they found that the RT distributions and choice probabilities in the Dutilh et al. data were better characterized by a model in which the drift rates depended on the time-varying outputs of a linear filter with an integration time of around 400 ms than by a model in which they were constant. The tasks I considered here can both be considered “typical” in that they have previously been well-described by the standard Wiener diffusion model with constant drift and diffusion rates.

Integral equation methods for diffusion decision models

Mathematically, the predicted choice probabilities and decision times in a diffusion model are obtained by solving a first-passage time problem, which gives the probability distribution of the time when the process first crosses an absorbing boundary, which is identified in the psychological model with the time at which the accumulating evidence reaches a decision criterion. Classically, the solution of the backward equation for the Wiener process leads to a representation of the RT distributions as an infinite series (Ratcliff, 1978) that can be computed efficiently. The availability of an explicit solution, which has been implemented in several third-party software packages (Vandekerckhove & Tuerlinckx, 2008; Voss & Voss, 2007; Wiecki, Sofer, & Frank, 2013), has been important in encouraging the use of the model in basic and applied settings (Ratcliff, Smith, & McKoon, 2015; 2016). For other, more complex, diffusion processes, infinite-series solutions cannot be obtained and other methods must be sought. For models with interacting processes, like the Usher and McClelland (2001) model, Monte Carlo simulation may be the only or the most practicable way to evaluate them (although see Ditterich (2006) for a finite-state Markov chain approach) and considerable effort has recently gone into developing methods for approximating likelihoods and estimating parameters of simulated decision models (e.g., Turner and Sederberg, 2014; Turner & Van Zandt, 2018; Fengler, Govindarajan, Chen, & Frank, 2021). My own bias is towards models whose first-passage time distributions can be expressed in a mathematically explicit form, because I believe they provide the sharpest theoretical insights into the properties of the underlying processes, and I have accordingly focused my attention on models of this kind.
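The first-passage time problem can be made concrete with a Monte Carlo sketch for the simplest case, a Wiener process between absorbing boundaries at 0 and \(a\). This is an illustration under stated assumptions rather than any of the methods or software packages cited above: the function names, parameter names, and the Euler time stepping are hypothetical choices made here. The simulated probability of absorption at the upper boundary is compared with the standard closed-form expression for Brownian motion with nonzero drift.

```python
import numpy as np

def simulate_two_boundary(mu, sigma, a, z, dt, n_paths, max_steps, rng):
    """Simulate first-passage times of a Wiener process through 0 or a.

    Returns (rt, upper): rt holds the absorption time of each path (NaN if
    the path is not absorbed within max_steps) and upper flags the paths
    absorbed at the upper boundary.
    """
    x = np.full(n_paths, z, dtype=float)
    rt = np.full(n_paths, np.nan)
    upper = np.zeros(n_paths, dtype=bool)
    active = np.ones(n_paths, dtype=bool)
    for k in range(1, max_steps + 1):
        n_active = int(active.sum())
        if n_active == 0:
            break
        x[active] += mu * dt + sigma * np.sqrt(dt) * rng.standard_normal(n_active)
        hit_up = active & (x >= a)
        hit_lo = active & (x <= 0.0)
        rt[hit_up | hit_lo] = k * dt
        upper[hit_up] = True
        active &= ~(hit_up | hit_lo)
    return rt, upper

def prob_upper(mu, sigma, a, z):
    """Closed-form probability of absorption at a before 0 (requires mu != 0)."""
    return (1.0 - np.exp(-2.0 * mu * z / sigma**2)) / (1.0 - np.exp(-2.0 * mu * a / sigma**2))

if __name__ == "__main__":
    rng = np.random.default_rng(3)
    rt, upper = simulate_two_boundary(1.0, 1.0, 1.0, 0.5, 0.001, 4_000, 20_000, rng)
    print("simulated P(upper):", upper.mean(), "exact:", prob_upper(1.0, 1.0, 1.0, 0.5))
    print("mean decision time:", np.nanmean(rt))
```

Simulation of this kind is simple but carries sampling and discretization error, which is one reason explicit solutions are attractive where they are available.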

The integral-equation method was pioneered by Durbin (1971), who used it to compute the power of the Kolmogorov-Smirnov test. The method was subsequently developed by Ricciardi and colleagues (Buonocore et al., 1987; Buonocore, Giorno, Nobile, & Ricciardi, 1990; Ricciardi, 1976; Ricciardi & Sato, 1983) to characterize the firing time distributions of model integrate-and-fire neurons. Early applications to decision processes were described by Heath (1992) and Smith (1995, 1998). A detailed tutorial introduction may be found in Smith (2000) and recent applications to collapsing-boundary and urgency-gating models may be found in Voskuilen, Ratcliff, and Smith (2016) and Smith and Ratcliff (2022). Applications to models with time-varying drift and diffusion rates can be found in Smith and Ratcliff (2009), Smith et al., (2014), and Smith and Lilburn (2020).

For models based on racing diffusion processes, the theoretical quantities of interest are the functions g[a(t), t|z,0], the first-passage time densities for a diffusion process, Xt, starting at z at time 0, through an absorbing boundary a(t) at time t. As the notation suggests, the boundary may be time-varying. The integral equation method represents the first-passage time density function as a Volterra integral equation of the second kind, of the form

In this equation, g[a(t), t|z,0] is jointly a function of its values at previous times, τ < t, and of a kernel function, Ψ[a(t), t|a(τ), τ], which depends on the drift and diffusion rates of Xt and which is described in detail below. Equation 3 is derived from a general integral representation of the first-passage time for a diffusion process through an absorbing boundary, attributed to Fortet (1943). A derivation of Eq. 3 based on the Fortet representation is sketched in Appendix A.
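For reference, a second-kind Volterra equation matching this description is usually written in the integral-equation literature in the form below. This is a reconstruction from that standard form, not a verbatim copy of Eq. 3, so the sign and scaling conventions should be checked against the original.

```latex
g[a(t), t \mid z, 0] = -2\,\Psi[a(t), t \mid z, 0]
  + 2 \int_{0}^{t} g[a(\tau), \tau \mid z, 0]\,
      \Psi[a(t), t \mid a(\tau), \tau]\, d\tau
```

The first term depends only on the starting point, z. The integral carries the dependence of the density at time t on its values at earlier times, τ < t.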

A problem with the original Fortet representation, as Durbin (1971) recognized, was that the equation becomes singular as \(\tau \rightarrow t \), that is, as the interval of approximation becomes small. This is because the free transition density function of the process, f[a(t), t|a(τ), τ], which describes the position of the process in the absence of boundaries, approaches a Dirac delta function, δ(τ − t), which describes a spike of probability mass of infinite amplitude concentrated at the point t. This means that simple iterative schemes for evaluating the first-passage time density will become numerically unstable. Durbin considered some ad hoc ways to stabilize the equation but Buonocore et al., (1987) showed that for many processes of interest it is possible to choose the kernel in a way that removes the singularity entirely. With the kernel chosen in this way, the equation may be approximated on a discrete set of points and solved iteratively (Eq. A3). Derivations of the kernel functions for Wiener and OU processes with time-varying drift rates, diffusion rates, and boundaries, with an application to urgency-gating models, can be found in Smith and Ratcliff (2022).
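The iterative scheme can be illustrated for the simplest case, a Wiener process with a single upper boundary. The sketch below is not the code used for the fits reported here. It assumes the second-kind Volterra form g = −2Ψ[a(t), t|z, 0] + 2∫ g Ψ dτ and the stabilized Wiener kernel Ψ[a(t), t|y, τ] = ½ f[a(t), t|y, τ]{a′(t) − [a(t) − y]/(t − τ)}, and it uses a simple rectangle rule on an evenly spaced grid. All function names are hypothetical.

```python
import math


def wiener_density(x, t, y, tau, mu, sigma):
    """Free transition density f[x, t | y, tau] of a Wiener process."""
    dt = t - tau
    var = sigma * sigma * dt
    return math.exp(-((x - y - mu * dt) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def wiener_kernel(a, da, t, y, tau, mu, sigma):
    """Assumed stabilized kernel Psi[a(t), t | y, tau] for an upper boundary a(t)."""
    dt = t - tau
    f = wiener_density(a(t), t, y, tau, mu, sigma)
    return 0.5 * f * (da(t) - (a(t) - y) / dt)


def first_passage_density(a, da, z, mu, sigma, t_max, step):
    """Solve the discretized Volterra equation on the grid step, 2*step, ..., t_max."""
    n = int(round(t_max / step))
    times = [step * (k + 1) for k in range(n)]
    g = []
    for k, t in enumerate(times):
        # Term that depends only on the starting point z.
        value = -2.0 * wiener_kernel(a, da, t, z, 0.0, mu, sigma)
        # Rectangle-rule approximation to the integral over earlier times.
        for j in range(k):
            tau = times[j]
            value += 2.0 * step * g[j] * wiener_kernel(a, da, t, a(tau), tau, mu, sigma)
        g.append(value)
    return times, g


def wald_density(t, a, z, mu, sigma):
    """Closed-form single-boundary density, used only as a check."""
    return (a - z) / math.sqrt(2.0 * math.pi * sigma ** 2 * t ** 3) * math.exp(
        -((a - z - mu * t) ** 2) / (2.0 * sigma ** 2 * t)
    )


# Constant boundary: the kernel vanishes and the scheme returns the Wald density.
times, g = first_passage_density(lambda t: 1.5, lambda t: 0.0, 0.0, 1.75, 1.0, 3.0, 0.01)
```

With this kernel, the integral term is zero for constant and linear boundaries, so the numerical work only matters for curved boundaries such as a(t) = 1.5 − 0.5t². The kernels for the LDLIV and reflecting Wiener processes are different and are not reproduced in this sketch.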

The kernel of the integral equation for the LDLIV process

The stable form of the kernel in Eq. 3 derived by Buonocore et al., (1987), although it characterizes many of the processes of interest to decision modelers, is not applicable to either the LDLIV process or the Wiener process with reflecting boundary at r. This is because both processes have a boundary, a so-called regular boundary, at which the equation becomes singular. A regular boundary is a point that can be attained, but not exceeded, by a process starting in the interior of the state space, and conversely, a process starting on the boundary can attain a point on the interior of the space at a later time (Karlin and Taylor 1981, p. 234). For the Wiener process, r, r < z, is a regular boundary; for the LDLIV process, 0 is a regular boundary (see Footnote 3). Although the general form of the kernel function derived by Buonocore et al. does not apply to either of these processes, Giorno et al., (1989a) showed that it is nevertheless possible to derive kernel functions for Eq. 3 in which the resulting discrete approximations are stable. I draw heavily on their results throughout the remainder of this article.

For the LDLIV process, Giorno, Nobile, Ricciardi, and Sato (1989a, Eq. 6.4) showed the kernel is

In Eq. 4, Iν(⋅) is a modified Bessel function of the first kind of order ν (Abramowitz and Stegun 1965, p. 374), and k(t) is a function that is chosen to make the kernel of the integral equation nonsingular (Giorno et al., 1989a, Eq. 6.7), which is of the form

where \(a^{\prime }(t)\) is the derivative of the absorbing boundary a(t). Here I am concerned exclusively with the constant boundary case, a(t) ≡ a, in which case the terms \(a^{\prime }(t)\) in Eqs. 4 and 5 are both zero (see Footnote 4).

I assume that the decision process takes the form of a race between LDLIV processes, each of which accumulates evidence for a given response alternative. The joint decision time-accuracy distributions are then given by the race model equation, in which the joint density is the product of the first-passage time density for the first-finishing process and the survivor function for the other process (or processes, in n-alternative cases). Denoting the first-passage time density for process i by gi(t), the distribution function by Gi(t), and the survivor function by \(\bar {G}_{i}(t)\), where \(\bar {G}_{i}(t) = 1 - G_{i}(t)\), the joint densities are

Models of the form of Eq. 6 have a long history in the RT literature. An advantage of this representation is that it carries over easily to n-choice decisions, but I restrict myself here to the two-choice case. I discuss how I parameterize the model to fit it to data in a subsequent section.
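The race model equation can be sketched numerically for the two-choice case as g1(t)·[1 − G2(t)] and g2(t)·[1 − G1(t)]. The example below is an illustration only. It uses single-boundary (Wald) densities as hypothetical stand-ins for the component first-passage densities, it assumes the two processes are independent, and it accumulates the distribution functions with a rectangle rule. The function names are not from the original.

```python
import math


def wald_density(t, a, mu, sigma=1.0, z=0.0):
    """Stand-in first-passage density for one accumulator (single absorbing boundary)."""
    return (a - z) / math.sqrt(2.0 * math.pi * sigma ** 2 * t ** 3) * math.exp(
        -((a - z - mu * t) ** 2) / (2.0 * sigma ** 2 * t)
    )


def race_joint_densities(g1, g2, step):
    """Joint densities for a two-accumulator race from densities sampled on a common grid."""
    joint1, joint2 = [], []
    cdf1 = cdf2 = 0.0
    for d1, d2 in zip(g1, g2):
        # Running distribution functions G1(t) and G2(t) (rectangle rule).
        cdf1 += step * d1
        cdf2 += step * d2
        # Density of "process i finishes at t" times "the other has not yet finished".
        joint1.append(d1 * (1.0 - cdf2))
        joint2.append(d2 * (1.0 - cdf1))
    return joint1, joint2


step = 0.005
times = [step * (k + 1) for k in range(2000)]
g1 = [wald_density(t, a=1.5, mu=1.75) for t in times]
g2 = [wald_density(t, a=1.5, mu=0.5) for t in times]
joint1, joint2 = race_joint_densities(g1, g2, step)
p1 = step * sum(joint1)  # probability that accumulator 1 wins the race
p2 = step * sum(joint2)  # probability that accumulator 2 wins the race
```

The two joint densities integrate to the choice probabilities, which should sum to approximately one when the time grid is long enough for the race to finish.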

The kernel of the integral equation for the reflecting Wiener process

A race model based on LDLIV processes satisfies all three of the preceding principles for a neurally-plausible decision model. A model based on racing reflecting Wiener processes satisfies Principles 1 and 3 but relaxes Principle 2 (saturation at high intensities). Giorno et al. (1989a, Eq. 5.14) showed that it is possible to derive a stable kernel for Eq. 3 for a Wiener process with drift rate, μ, diffusion rate, σ2, and a reflecting boundary at r, r ≤ z, using arguments like those used to derive Eq. 4. For the reflecting Wiener process the kernel takes the form

where Φ(⋅) is the normal distribution function (see Footnote 5). As in Eq. 4, in the constant boundary case that I am concerned with here, a(t) ≡ a and the terms involving \(a^{\prime }(t)\) are zero.

Figure 2 shows predictions for models with racing LDLIV and reflecting Wiener processes. The top panels compare predictions for the models obtained using the integral equation method with simulations of them using the Euler method (Brown, Ratcliff, & Smith, 2006). The latter approximates the diffusion process with a discrete time, Gaussian-increments, random walk. Each of the simulations was based on 100,000 trials with a step size of 0.001 s. The predictions were computed using Eq. A3 using a time step of 0.01 s, which sufficed to provide good agreement with the simulations. The simulations show that the integral-equation method is an effective way to evaluate models of this kind. Smith and Ratcliff (2022) give further examples of simulated and integral-equation predictions for time-varying Wiener and OU processes.
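The Euler method for a single reflecting Wiener accumulator can be sketched as follows. This is a minimal illustration rather than the simulation code used for Fig. 2. It assumes the reflecting boundary is implemented by folding any excursion below r back into the state space, and the function names, the trial count, and the step size in the usage line are hypothetical choices made to keep the example fast.

```python
import math
import random


def simulate_reflecting_wiener(mu, sigma, a, z, r, step, t_max, rng):
    """Return the first time an Euler random walk reaches a, or None if it has not by t_max."""
    x = z
    sd = sigma * math.sqrt(step)  # standard deviation of one Gaussian increment
    n_steps = int(round(t_max / step))
    for k in range(1, n_steps + 1):
        x += mu * step + sd * rng.gauss(0.0, 1.0)
        if x < r:
            x = 2.0 * r - x  # reflect the excursion below r back above it
        if x >= a:
            return k * step
    return None


def mean_first_passage_time(n_trials, seed, **params):
    """Mean finishing time over trials that terminated, and the proportion that did."""
    rng = random.Random(seed)
    times = [simulate_reflecting_wiener(rng=rng, **params) for _ in range(n_trials)]
    finished = [t for t in times if t is not None]
    return sum(finished) / len(finished), len(finished) / n_trials


mean_time, proportion_finished = mean_first_passage_time(
    2000, seed=1, mu=1.75, sigma=1.0, a=1.5, z=0.0, r=-0.1, step=0.002, t_max=10.0
)
```

Because the walk is only checked at discrete times, the simulated finishing times are slightly biased upwards relative to the continuous process, which is one reason the simulations use a smaller step than the integral-equation predictions.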

Fig. 2. Simulated and predicted joint density functions for correct responses, gC(t), and errors, gE(t), for dual reflecting Wiener and LDLIV models. Each of the simulations was based on 100,000 trials using a time step of 0.001 s. (a) Dual reflecting Wiener model with parameters μ1 = 1.75, μ2 = 0.5, a1 = 1.5, a2 = 1.5, σ = 1.0, z = 0, r = − 0.1. (b) Dual LDLIV model with parameters q1 = 1.5, q2 = 0.75, a1 = 1.2, a2 = 1.2, σ = 1.0, p = − 0.01, z = 0.1. (c) Comparison of the reflecting Wiener and LDLIV models with parameters as in (a) and (b). (d) Comparison of the reflecting Wiener and LDLIV models with parameters as in (a) and (b) except with large LDLIV decay (p = − 1.0). In (c) and (d) the continuous curves are the reflecting Wiener model and the dashed curves are the LDLIV model.

The bottom panels of Fig. 2 compare the dual reflecting Wiener and LDLIV models to each other. Figure 2c compares the predictions when the LDLIV decay parameter is small (p = − 0.01) and Fig. 2d compares them when it is large (p = − 1.0). The parameters of the models in Fig. 2a and b were chosen by eye to try to make the predictions as close to each other as possible. As Fig. 2c shows, when the parameters were chosen in this way and decay was small, the models are in close agreement. When decay is increased, the mean and the variance of the distributions increase and the model predicts heavy right tails. The behavior of the model is therefore similar to the OU model, which predicts similar changes in the distribution shape as decay is increased.

The two-barrier and single-barrier Wiener processes

For consistency of implementation I used the integral-equation method to obtain predictions for the two-barrier Wiener model. Ratcliff and Smith (2004) compared the infinite-series method and the integral-equation method and found their predictions were in close agreement. Smith and Ratcliff (2022) presented integral-equation solutions for the Wiener model with time-varying drift and diffusion rates and decision boundaries. I used a version of their code with drift and diffusion rates held constant, augmented with variability in drift rates and starting points, to carry out the model fits described below.

The first-passage time density for the single-boundary Wiener process has a simple closed-form solution that is well known in the literature (Karlin & Taylor 1975, p. 363) where it is variously referred to as the Wald or the inverse-Gaussian distribution. The first-passage time density has the form

The meaning of the parameters in this model is the same as in the reflecting Wiener model of Eq. 7. Equation 8 has a long history in the psychological literature in modeling both simple and choice RT (e.g., Emerson, 1970; Heathcote, 2004; Matzke & Wagenmakers, 2009; Schwarz, 2001; 2002). It is possible to derive a closed-form expression for the marginal first-passage density for this process when the starting point, z, is uniformly distributed across trials rather than constant (Logan et al., 2014; Tillman et al., 2020) but, again, for consistency of implementation, I evaluated the effects of starting point variability numerically, as discussed below.
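For reference, the standard inverse-Gaussian (Wald) first-passage time density for a Wiener process with drift rate μ > 0, diffusion rate σ², starting point z, and a single absorbing boundary at a > z is given below. This is the textbook form, written in the notation used here. It is offered as a reconstruction, and the parameterization of Eq. 8 in the original may differ in detail.

```latex
g(t) = \frac{a - z}{\sqrt{2\pi\sigma^{2} t^{3}}}
       \exp\!\left[ -\frac{(a - z - \mu t)^{2}}{2\sigma^{2} t} \right],
\qquad t > 0 .
```

Under this form the mean first-passage time is (a − z)/μ and the variance is (a − z)σ²/μ³.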

Method

Experimental studies

I compared the dual LDLIV and reflecting Wiener models, the racing Walds model, and the standard diffusion model using the data from the numerosity discrimination study of Ratcliff (2008) and the attentional cuing study of Smith et al., (2004). Smith and Ratcliff (2022) used the data from these studies to compare urgency-gating and collapsing boundaries models to the standard diffusion model. The version of the standard diffusion model I used to fit the Ratcliff (2008) data was slightly more general than the one considered by them in that it allowed nondecision times to vary with experimental instructions, for reasons described below.

The participants in Ratcliff’s (2008) study were asked to decide whether the number of randomly-placed dots in a 10 × 10 grid was greater or less than 50. There were eight nominal discriminability conditions, in which the numbers of dots were: 31-35, 36-40, 41-45, 46-50, 51-55, 56-60, 61-65, and 66-70, crossed with speed versus accuracy instructions. On half the trials participants were instructed to respond rapidly and on the other half they were told to respond accurately and they were given feedback to encourage them to perform as instructed. Data were collected from 19 college-aged participants and 19 older participants who performed both a standard RT task, in which they responded as soon as they had sufficient evidence, and a response-signal task, in which they responded to a random external deadline. Following Smith and Ratcliff (2022), I restrict my analysis to the younger participants and the standard RT task. After eliminating fast and slow outliers, there were about 850 valid trials in each cell of the design, yielding around 13,600 valid trials per participant.

Participants in the Smith et al., (2004) study performed an attentional cuing task in which low-contrast Gabor patches were presented for 60 ms at either a cued location, which was indicated by a 60 ms, flashed peripheral cue 140 ms before the stimulus, or at one of two uncued locations. On each trial, participants decided whether the orientation of the patch was vertical or horizontal. In one condition of the experiment the stimuli were backwardly masked with high-contrast checkerboards and in the other condition they were briefly flashed and then extinguished. Data were collected from six highly-practiced participants who performed the task at five different levels of contrast, which were chosen for each participant individually during practice to span a range of performance from just above chance (≈ 55% correct) to near-perfect (≈ 95% correct). Participants were encouraged to be as accurate as possible but not to deliberate for too long and were given auditory accuracy feedback on each trial. There were 400 valid trials for each participant in each cell of the Cue × Mask × Discriminability design, yielding 8000 trials per participant. The reader is referred to Smith and Ratcliff (2022) and the original articles for more details on the two studies.

Results

In the standard diffusion model, evidence accumulation is modeled by a Wiener diffusion process between absorbing boundaries that represent decision criteria for the two responses. In addition to within-trial, diffusive variability there are three sources of across-trial variability: variability in drift rates, starting points, and nondecision times (Ratcliff & McKoon, 2008). Drift rate is normally distributed with mean ν and standard deviation η; starting point is uniformly distributed with range sz, and nondecision time, Ter, is uniformly distributed with range st. Variability in drift rate and starting point allows the model to predict the ordering of correct responses and errors; variability in nondecision time allows it to better predict the leading edges of RT distributions under speed-stress conditions when accuracy is high and RTs are short. Typically, error RTs tend to be longer than RTs for correct responses when the task is difficult and accuracy is stressed and shorter when the task is easy and speed is stressed (Luce, 1986, p. 233).
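The three sources of across-trial variability can be illustrated by drawing one set of trial-level parameters. This is a minimal sketch, assuming that the uniform distributions are centered on the mean starting point and mean nondecision time and that sz and st are full ranges. The function name and the numerical values in the usage line are hypothetical.

```python
import random


def sample_trial_parameters(nu, eta, z, sz, ter, st, rng):
    """Draw the drift rate, starting point, and nondecision time for one trial."""
    drift = rng.gauss(nu, eta)                            # normal, mean nu, SD eta
    start = rng.uniform(z - sz / 2.0, z + sz / 2.0)       # uniform with range sz
    nondecision = rng.uniform(ter - st / 2.0, ter + st / 2.0)  # uniform with range st
    return drift, start, nondecision


rng = random.Random(0)
drift, start, nondecision = sample_trial_parameters(
    nu=0.2, eta=0.1, z=0.05, sz=0.02, ter=0.27, st=0.1, rng=rng
)
```

Within a trial, the sampled drift rate and starting point are held fixed while the Wiener process runs, and the sampled nondecision time is added to the decision time to give the predicted RT.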

In the dual-diffusion models, each accumulator has its own drift rate, and the drift rates of the two accumulators may be unrelated or constrained in some way. These models raise questions about how to parameterize drift rates in a way that is theoretically principled, parsimonious, and flexible enough to account for the relationships found in empirical data. A common approach is to assume that the drift rates or the mean drift rates sum to a constant across stimulus difficulty levels (Ratcliff & Smith, 2004; Ratcliff et al., 2007; Usher & McClelland, 2001). Although it is possible to implement a dual-diffusion model in which the drift rates are normally distributed (Smith and Ratcliff (2009) proposed a dual-diffusion model with reflecting OU processes and normally-distributed, negatively-correlated drift rates), a more natural assumption is to constrain drift rates to be positive. In the LDLIV process the positivity constraint is needed to avoid the q ≤ 0 exit boundary properties described in Footnote 3.

I assumed the drift rates in the dual-diffusion models were lognormally distributed (i.e., random variables whose logarithms are normally distributed), with dispersion parameters η1 and η2 for the accumulators associated with correct and error responses, respectively. The drift rates in stimulus condition j were distributed as

where ξ1 and ξ2 are independent standard normal variates and πj, 0 ≤ πj ≤ 1, is a mixing parameter that constrains νsum to be constant across stimulus conditions. With this parameterization the scaling factors νj1 and νj2 are the medians of the lognormal distributions, so Eq. 9 constrains the sums of the median drift rates in the two accumulators to be constant. A constraint on the medians could be seen as a natural one when the drift rate distributions are positively skewed, but I adopted it for computational convenience rather than because of a theoretical preference for medians over means. Like a constraint on the means, the constraint on the medians almost halves the number of drift rate parameters that are needed to fit the model to data. Parameterized in this way, the LDLIV model requires five drift rate parameters to fit the four discriminability conditions of the Ratcliff (2008) data and six to fit the five discriminability conditions of the Smith et al., (2004) data. In the notation of the LDLIV model, μ ≡ q, the stimulus-dependent part of drift rate.
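The drift-rate parameterization can be sketched as follows. The code assumes that the medians are νj1 = πj·νsum and νj2 = (1 − πj)·νsum and that the trial-level drift rates are obtained by multiplying each median by exp(ηi·ξi). This is a reading of the description above rather than a transcription of Eq. 9, and the function names and numerical values are hypothetical.

```python
import math
import random


def median_drift_rates(nu_sum, pi_j):
    """Median drift rates for the two accumulators; they sum to nu_sum by construction."""
    return pi_j * nu_sum, (1.0 - pi_j) * nu_sum


def sample_drift_rates(nu_sum, pi_j, eta1, eta2, rng):
    """Draw one pair of lognormally distributed (strictly positive) drift rates."""
    nu1, nu2 = median_drift_rates(nu_sum, pi_j)
    xi1 = rng.gauss(0.0, 1.0)  # independent standard normal variates
    xi2 = rng.gauss(0.0, 1.0)
    return nu1 * math.exp(eta1 * xi1), nu2 * math.exp(eta2 * xi2)


rng = random.Random(0)
drift1, drift2 = sample_drift_rates(nu_sum=3.0, pi_j=0.7, eta1=0.3, eta2=0.3, rng=rng)
```

With one νsum shared across conditions and one πj per condition, four discriminability conditions need five drift rate parameters and five conditions need six, which matches the counts given above.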

In the case of the model with racing Walds (single-boundary Wiener processes with no reflecting boundaries), Tillman et al., (2020) reported the model can successfully account for slow errors without across-trial variability in drift rates because of its race-model structure, which naturally predicts slow errors. Indeed, if combined with the usual assumption of normally-distributed drift rates then the model would predict defective RT distributions because on some trials the drift rate would be away from the boundary and the probability the process would ever terminate would be less than 1.0. To evaluate the generality of Tillman et al.’s findings, I implemented the racing Walds model, as they did, without drift-rate variability. Initially, I imposed a constraint that the drift rates should sum to a constant, but found it performed poorly relative to the other dual diffusion models. I therefore allowed the drift rates for the two accumulators to vary freely and independently across conditions, which gave the model a similar number of free parameters to the other models. The fits I report are for the model with unconstrained drift rates.

There has been debate in the literature about whether speed-accuracy manipulations affect only decision criteria, as they are theoretically assumed to do, or whether they affect other processes as well. Several researchers have reported that speed-accuracy instructions affect nondecision times in addition to decision criteria (Arnold, Bröder, & Bayen, 2015; de Hollander et al., 2016; Donkin, Brown, Heathcote, & Wagenmakers, 2011; Dutilh et al., 2019; Huang et al., 2015) and others have reported they also affect mean drift rates or drift-rate variability (Donkin et al., 2011; Heathcote & Love, 2012; Ho et al., 2012; Rae, Heathcote, Donkin, Averell, & Brown, 2014; Starns, Ratcliff, & White, 2012). Smith and Lilburn (2020) suggested that these so-called violations of selective influence may reflect time-inhomogeneity in the drift and diffusion rates, and reanalyzed the random dot motion data of Dutilh et al. using a time-inhomogeneous diffusion model that captured the data well. Here I restrict myself to time-homogeneous versions of the models for the sake of computational tractability. Smith and Ratcliff (2022) reported a reanalysis of the Ratcliff (2008) numerosity data using models with a single Ter parameter but I have instead allowed Ter to vary with instructions as this produces substantial improvements in fits for the standard diffusion model. Whether these so-called violations of selective influence are best characterized as changes in the time of onset of evidence accumulation or as time-varying drift and diffusion rates is a question that is outside the scope of this article. My aim here is the narrower one of comparing the standard diffusion model and the racing diffusion models under conditions that yield the best accounts of the data for each model. The parameters of the models and their interpretations are summarized in Table 1.

Fitting method

How best to evaluate RT models empirically remains a subject of active debate, with classical and Bayesian, hierarchical and nonhierarchical methods all being widely used and advocated. The variety of methods used in the recent blinded validity study of Dutilh et al., (2019) highlights the diversity of practice among researchers in the area. Ratcliff and Childers (2015) carried out a parameter recovery study using the diffusion model in which they compared classical and hierarchical Bayesian methods and found that hierarchical Bayesian methods improved parameter recovery for small samples (i.e., small numbers of trials in each experimental condition for each participant), but when samples were large, there was little difference between them and classical methods.

I chose to use methods similar to those used in the original studies of Ratcliff (2008) and Smith et al., (2004) and in the reanalysis of their data by Smith and Ratcliff (2022). I minimized the likelihood-ratio chi-square statistic (G2) for the response proportions in the bins formed by the .1, .3, .5, .7, and .9 RT quantiles for the distributions of correct responses and errors. When bins are formed in this way there are a total of 12 bins (11 degrees of freedom) in each pair of joint distributions of correct responses and errors. The resulting G2 may then be written as a function of the observed and expected proportions in the bins as

In Eq. 10, pij and πij are, respectively, the observed and predicted response proportions in the bins bounded by the RT quantiles and “log” is the natural logarithm. The inner summation extends over the 12 bins formed by each pair of joint distributions and the outer summation extends over the M experimental conditions. For the numerosity study, M = 16 (2 Instruction conditions × 8 Dot proportions). For the cuing study, M = 5 (5 contrast conditions for each cell of the Cue × Mask experimental design). The quantity ni is the number of experimental trials on which each joint distribution pair was based. For the numerosity study ni ≈ 850 and for the cuing study ni = 400. I fit the models to the individual participants’ data by minimizing G2 using the Nelder-Mead simplex algorithm (Nelder & Mead, 1965) as implemented in Matlab (fminsearch). The fit statistics I report are the minimum G2 values obtained from six runs of simplex using randomly perturbed estimates from the preceding run as the starting point for the next run. One of my reasons for preferring fits at the individual level using classical methods to hierarchical Bayesian methods is that the latter, at least as they have been implemented in the literature to date, tend to obscure the quality of the fits and the variation in comparative model performance at the individual participant level.
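The fit statistic can be sketched in a few lines. The code assumes the usual likelihood-ratio form G² = 2 Σi ni Σj pij log(pij/πij), which is consistent with the description of Eq. 10 but is not copied from it. The helper that builds the 12 observed bin proportions and all names are hypothetical.

```python
import math

# Probability mass between the .1, .3, .5, .7, and .9 quantiles of one RT distribution.
QUANTILE_BIN_MASSES = [0.1, 0.2, 0.2, 0.2, 0.2, 0.1]


def joint_bin_proportions(p_correct):
    """Observed proportions in the 12 bins of one correct/error distribution pair."""
    correct = [p_correct * m for m in QUANTILE_BIN_MASSES]
    errors = [(1.0 - p_correct) * m for m in QUANTILE_BIN_MASSES]
    return correct + errors


def g_squared(observed, predicted, n_trials):
    """Likelihood-ratio chi-square summed over conditions (rows) and bins (columns)."""
    total = 0.0
    for p_row, pi_row, n in zip(observed, predicted, n_trials):
        for p, pi in zip(p_row, pi_row):
            if p > 0.0:  # empty observed bins contribute nothing to the sum
                total += n * p * math.log(p / pi)
    return 2.0 * total


observed = [joint_bin_proportions(0.80)]
predicted = [joint_bin_proportions(0.75)]  # stand-in for model predictions
fit = g_squared(observed, predicted, n_trials=[850])
```

In an actual fit, the predicted proportions come from evaluating the model's joint distribution functions at the observed quantile RTs, so they depend on the model parameters and G² is minimized over those parameters.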

To compare models with different numbers of parameters, I used standard model selection methods based on the Akaike information criterion (AIC, Akaike, 1974) and the Bayesian information criterion (BIC, Schwarz, 1978). The first of these statistics is derived from classical principles whereas the second is Bayesian, but I use them in the spirit in which they are typically used in the modeling literature, as penalized likelihood statistics that impose more or less severe penalties on the number of free parameters in a model. As is well known, the AIC tends to gravitate towards more complex models with increasing sample sizes more quickly than does the BIC (Kass & Raftery, 1995). For binned data, the AIC and BIC may be written as

where k is the number of free parameters in the model and \(N = {\sum }_{i} n_{i}\) is the total number of observations on which the fit statistic was based.
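For binned data these statistics are commonly computed as AIC = G² + 2k and BIC = G² + k log N. The sketch below assumes those forms, which match the definitions of k and N given here but are not copied from Eq. 11. The function names and the numerical values in the usage lines are illustrative only.

```python
import math


def aic_from_g2(g2, k):
    """Akaike information criterion for binned data: penalty of 2 per free parameter."""
    return g2 + 2.0 * k


def bic_from_g2(g2, k, n_total):
    """Bayesian information criterion for binned data: penalty of log(N) per free parameter."""
    return g2 + k * math.log(n_total)


# Illustrative values: 16 conditions of 850 trials gives N = 13,600.
n_total = 16 * 850
aic = aic_from_g2(600.0, k=12)
bic = bic_from_g2(600.0, k=12, n_total=n_total)
```

With N = 13,600, each additional parameter costs about 9.5 units of G² under the BIC but only 2 under the AIC, which is why the BIC penalizes the dual diffusion models more heavily for their extra drift rate parameters.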

Numerosity study (Ratcliff, 2008)

In the following tables, I use the identifiers “DIFF” to denote the standard diffusion model, “LDLIV” to denote the linear-drift, linear-infinitesimal variance model, “WNR” to denote the reflecting Wiener model, and “WLD” to denote the racing Walds model. Table 2 shows mean G2 statistics for each model, averaged over the 19 participants, together with the corresponding mean AIC and BIC values.

On average, the LDLIV model was the best-performing of the models by a substantial margin. The next best model was the diffusion model, followed by the reflecting Wiener model, followed by the racing Walds model. This pattern is mirrored at the individual participant level, as shown in Table 3, which shows the number of participants for whom each of the models was better than each of its competitors, as assessed by either the AIC or the BIC. The diffusion model was better than the LDLIV model for just under half the participants (9/19), but was better than either of the other dual diffusion models for the majority of participants by either criterion. The LDLIV model was better than either of the other two dual diffusion models for a substantial majority of participants. Of those two models, the reflecting Wiener process model was better than the racing Walds model for most participants.

In comparison, the G2 statistics for models with a single Ter parameter were 820.5, 621.7, 857.9, and 888.8, for the DIFF, LDLIV, WNR, and WLD models, respectively. The average improvement in fit when Ter varied with instructions ranged from 5% to 20%, with the largest improvement for the standard diffusion model. Table 4 shows the estimated parameters for the four models. Consistent with the observation that the standard diffusion model showed a large improvement in fit with variable Ter, it also showed the largest difference in the estimates of Ter, s and Ter, a (270 ms vs. 282 ms, respectively). The corresponding estimates for the LDLIV model were 264 ms and 267 ms. The estimates of Ter, a for the WNR and WLD models were shorter than those of Ter, s rather than longer, suggesting the extra parameter in these models was simply describing random variation rather than essential structure in the data.

Of the four models, the diffusion model is the most parsimonious, in the sense of requiring the fewest parameters to account for the data. Even with constraints on the drift rates, the LDLIV and the reflecting Wiener process models required three or four more parameters because of the need to parameterize the mean drift rate and the drift rate variability for the two accumulators separately. The racing Walds model is able to characterize the data without drift rate variability, as Tillman et al., (2020) pointed out, but it required a total of eight free drift rate parameters in order to do so and was clearly the worst-performing of the models. Figure 3 shows fits of the diffusion and LDLIV models to the group data; Fig. 4 shows the corresponding fits for the reflecting Wiener and racing Walds models. The fits are shown as quantile-probability plots, in which quantiles of the RT distributions for correct responses and errors are plotted against the choice probabilities for a range of stimulus difficulties. Readers who are unfamiliar with this way of representing model fits are referred to Ratcliff and Smith (2004) or Ratcliff and McKoon (2008), among other sources, for an account of how they are constructed. The data in Figs. 3 and 4 are quantile-averaged group data and the fitted values are quantile-averaged individual fits. The fits for the diffusion model in the upper part of Fig. 3 are the same as those in Fig. 3 in Smith and Ratcliff (2022).

Fig. 3. Quantile probability functions for “large” and “small” responses for speed and accuracy conditions for the diffusion model, DIFF, and the LDLIV model fitted to the Ratcliff (2008) numerosity data. The quantile RTs in order from the bottom to top are the .1, .3, .5, .7, and .9 quantiles (circles, squares, diamonds, inverted triangles, upright triangles, respectively). The dark gray symbols are the quantiles for correct responses and the light gray symbols are the quantiles for errors. The continuous curves and x’s are the predictions from the model. For the data and models the quantile RTs are plotted on the y-axis against the observed and predicted response proportions on the x-axis.

Fig. 4. Quantile probability functions for “large” and “small” responses for speed and accuracy conditions for the reflecting Wiener model, WNR, and the racing Walds model, WLD, fitted to the Ratcliff (2008) numerosity data. The quantile RTs in order from the bottom to top are the .1, .3, .5, .7, and .9 quantiles (circles, squares, diamonds, inverted triangles, upright triangles, respectively). The dark gray symbols are the quantiles for correct responses and the light gray symbols are the quantiles for errors. The continuous curves and x’s are the predictions from the model. For the data and models the quantile RTs are plotted on the y-axis against the observed and predicted response proportions on the x-axis.

The most obvious difference between the two models is in the tails of the predicted RT distributions. One of the effects of combining racing processes in a dual diffusion architecture is that the predicted RT distributions for the fastest-finishing process will be less skewed and more symmetrical than the distributions of either of the component processes in isolation. This is because slow responses occur only if both processes are slow to finish. By the race model equation (Eq. 6), the probability of an RT longer than a given t is equal to the product of the probabilities that each process takes longer than t to finish (i.e., the product of their survivor functions), which will be less than the corresponding probability for either process individually. The reduction in skewness is offset by the presence of decay, denoted p for the LDLIV model in Eq. 2, and estimated to be, on average, p = 2.428 in Table 4. This interaction between the architecture of the model and the effects of decay is likely why Ratcliff and Smith (2004) found decay to be zero in a single-process OU model but Smith and Ratcliff (2009) found decay was nonzero in a dual-diffusion OU model. The difference between single-process and dual-process models is evident in the distribution tails for the diffusion and LDLIV models in Fig. 3, as represented by the .9 quantile function (the topmost line in the plot). The diffusion model accurately predicts the .9 quantile in the speed condition and somewhat overpredicts it in the accuracy condition. The LDLIV model slightly underpredicts the .9 quantile function in the speed condition and underpredicts it more severely in the accuracy condition, especially for the error distributions. The tendency for the diffusion model to overpredict the .9 quantile function in the accuracy condition was reduced by allowing Ter to vary with instructions. Indeed, the most obvious qualitative difference between models with one and two Ter parameters was in how well the .9 quantile functions in the accuracy condition were predicted.
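The argument about the tails of the race-model distributions can be written as a one-line inequality. It assumes that the two accumulators are independent, as the race model equation implies, and it uses T for the finishing time of the race, a symbol introduced here for illustration.

```latex
P(T > t) = \bar{G}_{1}(t)\,\bar{G}_{2}(t)
  \;\le\; \min\{\bar{G}_{1}(t),\, \bar{G}_{2}(t)\},
\qquad \text{since } 0 \le \bar{G}_{i}(t) \le 1 .
```

The upper tail of the finishing-time distribution for the race is therefore never heavier than the upper tail of either component process.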

The tendency for dual diffusion models to underpredict the tails of RT distributions is particularly pronounced in the reflecting Wiener model, shown in the upper panels of Fig. 4. The reflecting Wiener process model was worse than the LDLIV model for a substantial majority of the participants (16/19 by either criterion). The reflecting Wiener model captured the shapes of the RT distributions fairly well in the speed condition but missed substantially in the accuracy condition. The pattern of misses is similar to that for the LDLIV model, but is magnified because the Wiener model does not have a decay term to offset the tendency for dual diffusion models to predict RT distributions that are more symmetrical than those predicted by the standard model.

Although the reflecting Wiener model was worse than the LDLIV model, it was nevertheless better than the racing Walds model for the majority of participants (12/19 by either criterion). Although the latter model is in principle able to predict slow errors by virtue of its race model structure, it failed to do so in the accuracy condition of this experiment. Rather, the predicted quantile-probability functions in the accuracy condition in Fig. 4 are almost symmetrical across the vertical midline, indicating the model is predicting essentially the same RT distributions for correct responses and errors. There are two reasons why the model might have failed to capture the slow error pattern in these data. The first is that it may be unable to capture the slow error pattern if the range of stimulus discriminabilities and associated accuracy varies widely. The second is that it may be unable to capture the slow error pattern when discriminability is crossed with speed versus accuracy instructions – even when Ter is allowed to vary with instructions, as was the case here.

Tillman et al. (2020) reported a simulation showing that the magnitude of the slow error effect predicted by the model increases with decision criterion and, consequently, increases monotonically with accuracy (their Fig. 3). However, they did not characterize the way in which the magnitude of the effect varies with drift rate, and it is not obvious from their results that the model can capture a slow error pattern of the complexity of the one shown in Figs. 3 and 4. They reported a fit of the racing Walds model to the data of Ratcliff and Rouder (1998), which crossed stimulus discriminability with speed versus accuracy instructions, but they fit the mean RTs only. They also reported a fit to the RT distribution data of Rae et al. (2014), which varied speed versus accuracy instructions but used only a single stimulus discriminability level, and even in this relatively unconstrained case the model appears to fail to capture the shapes of the error distributions (their Fig. 8). As a further, more constrained, test they fit the model to data from a lexical decision experiment reported by Ratcliff and Smith (2004) that collected RT and accuracy data for high-frequency, low-frequency, and very-low-frequency words and pseudowords, each of which was modeled with its own drift rate. It is difficult to infer the quality of the fit from Tillman et al.’s figure (their Fig. 6), which shows joint cumulative distributions rather than quantile probability functions (see Luce, 1986, pp. 17–20, for a relevant discussion on this point), but it suggests a significant failure to fit in some conditions, most obviously for error responses to pseudowords. Overall, the data of Ratcliff (2008) represent an appreciably greater challenge for the model than do the data sets that Tillman et al. considered and, as I have shown, on the Ratcliff data the model does not fare well. My next application of the model, to the attention cuing study of Smith et al. (2004), speaks further to the reasons for the model’s failure.

As well as differing in whether there was drift-rate variability, the reflecting Wiener and racing Walds models also differed in whether or not the process was constrained by a lower reflecting boundary. Unlike the reflecting Wiener model, the racing Walds model does not have a reflecting boundary to limit the excursions of the process away from the decision boundary. A single-boundary Wiener process, as characterized by the Wald distribution, can make arbitrarily large excursions away from the boundary with finite probability, which often leads to long finishing times. Indeed, if the drift rates are negative or zero then the probability that the process will never finish is greater than zero and the RT distributions will be defective, as noted previously.
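The defective-distribution property can be made concrete with a standard result for Brownian motion with drift: a process started at zero with strictly negative drift μ and diffusion coefficient σ reaches a criterion a > 0 with probability exp(2μa/σ²). The sketch below is a minimal illustration of that result only. The function name and the parameter values are hypothetical, and the accumulators in the race are assumed to be independent.

```python
import math

def finishing_probability(drift, criterion, sigma=1.0):
    """Probability that a Wiener process started at 0 ever reaches criterion > 0."""
    if criterion <= 0 or sigma <= 0:
        raise ValueError("criterion and sigma must be positive")
    exponent = 2.0 * drift * criterion / sigma ** 2
    # The exponent is clipped at 0 so that the result stays a valid
    # probability (and cannot overflow) when the drift is not negative.
    return math.exp(min(exponent, 0.0))

# Hypothetical race between two independent accumulators with negative drifts.
p1 = finishing_probability(drift=-0.5, criterion=1.0)
p2 = finishing_probability(drift=-1.0, criterion=1.0)
p_no_response = (1.0 - p1) * (1.0 - p2)  # neither accumulator ever finishes
```

With these values each accumulator has an appreciable probability of never terminating, so the predicted RT distributions do not integrate to one.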

To evaluate whether drift-rate variability or the reflecting boundary was the primary determinant of the difference between the two models, I refit the reflecting Wiener model with the reflecting boundary set to \(r = -1.5\max(a_{1a}, a_{2a})\), that is, as a multiple of the larger of the two accuracy criteria. I conjectured, based on the estimates in Table 4, which show r set quite far from the starting point, that the reflecting boundary was contributing relatively little to the quality of the fit. The multiple of 1.5 was arbitrary, but served to minimize the effects of the boundary while avoiding numerical instabilities in the computation of the kernel. With r constrained in this way, the performance of the model was essentially unaltered. The mean G2 was 805.5, which is virtually identical to the G2 with r freely-varying in Table 2. (The very slight improvement in fit found with r constrained is due to the constrained model’s better convergence properties.) The comparative performance of the constrained model at the individual level was identical to that of the model with r freely-estimated in Table 3.

The large negative estimates of r in Table 4 contrast with Smith and Ratcliff’s (2009) dual-diffusion model, which consists of racing OU processes with negatively correlated, normally-distributed, drift rates and reflecting lower boundaries. They estimated the reflecting boundaries to be just below the starting points (again zero). Because of the negatively correlated drift rates in their model, error processes tend to drift towards the lower reflecting boundary, which prevents large negative excursions in the accumulating evidence, allowing the model to correctly predict distributions of error RTs. In the reflecting Wiener model, the drift rates are lognormally distributed and constrained to be positive, so all of the processes drift upwards and large negative excursions in the accumulating evidence are comparatively infrequent. Consequently, the reflecting boundary in the Wiener model has relatively little effect on model performance.

From the quantile-probability plot, the reasons for the better performance of the LDLIV model compared to the diffusion model are not particularly obvious, but they appear to lie in the relative ability of the models to account for faster responses. One of the unique features of the LDLIV model not shared with the other models is in the effects of starting point and starting point variability. The Wiener process models, including the diffusion model, are spatially homogeneous. Changing the starting point simply translates the process on the real line, \(\mathbb{R}\), and is tantamount to relabeling the decision boundaries relative to the new starting point. In contrast, the LDLIV model with a nonzero starting point is qualitatively different from one with a zero starting point because the diffusion rate in Eq. 2 depends on the position of the process in the evidence space, \(\mathbb{R}^{+}\). A process with a starting point of z > 0 will begin to diffuse towards the boundary more rapidly than one with a starting point of z ≈ 0. Table 4 shows that the estimated starting point in the LDLIV model was between a half and a third of the way towards the decision boundary in the speed and accuracy conditions, respectively. Unlike the other models, the starting point in the LDLIV model affects not only the distance the process has to travel in order to reach a boundary but also the speed with which it does so. In the other models, the distance to the boundary and the speed with which the process travels are independent of each other. This dependency seems to be what gives the model an advantage under conditions in which speed is stressed.
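The dependence of the diffusion rate on position can be illustrated with a small numerical sketch. It assumes, as the model's name suggests, a stochastic differential equation of the form dX = (drift − decay·X)dt + σ√X dW, so that the infinitesimal variance is σ²x. This form, the parameter names, and the values are assumptions made for illustration and may differ in detail from Eq. 2. The truncation at zero in the Euler step is a crude device and is not the integral-equation method used for the fits.

```python
import math
import random

def wiener_noise_sd(sigma, dt):
    """Noise standard deviation in one Euler step of a Wiener process."""
    return sigma * math.sqrt(dt)

def ldliv_noise_sd(x, sigma, dt):
    """Noise sd in one Euler step when the infinitesimal variance is sigma**2 * x
    (assumed form); it shrinks to zero as x approaches zero."""
    return sigma * math.sqrt(max(x, 0.0) * dt)

def ldliv_step(x, drift, decay, sigma, dt, rng):
    """One Euler-Maruyama step of dX = (drift - decay * X) dt + sigma * sqrt(X) dW.

    The result is truncated at zero to keep the state non-negative.
    """
    noise = ldliv_noise_sd(x, sigma, dt) * rng.gauss(0.0, 1.0)
    return max(x + (drift - decay * x) * dt + noise, 0.0)

# Hypothetical criterion a = 1 with starting points near zero, a/3, and a/2.
a, sigma, dt = 1.0, 1.0, 0.001
for z in (0.01 * a, a / 3, a / 2):
    print(f"z = {z:.3f}: LDLIV noise sd = {ldliv_noise_sd(z, sigma, dt):.4f}, "
          f"Wiener noise sd = {wiener_noise_sd(sigma, dt):.4f}")
```

Under this assumed form the step-to-step noise at z = a/2 is several times larger than at z ≈ 0, whereas the Wiener noise is the same at every starting point, which is the sense in which distance and speed are coupled in the LDLIV model but not in the others.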

Spatial cuing study (Smith et al., 2004)

My treatment of the Smith et al. (2004) spatial cuing study follows the one in Smith and Ratcliff (2022), in which a separate model, with different drift rates, decision criteria, and nondecision times, was fitted to each cell in the Cue × Mask design for each participant. Smith et al. (2004) characterized the relationships among the conditions using an attention orienting model, which predicted how drift rates and nondecision times would change across the four conditions, but here I imposed no a priori theoretical constraints on the parameters because my primary concern was to compare decision models, not to test the attention model. Because there was no speed versus accuracy manipulation in the study, the starting point variability parameters could be omitted without worsening the fit, and because the speed and accuracy of decisions to vertical and horizontal gratings were sufficiently similar, they could be pooled to obtain a single distribution of correct responses and a single distribution of errors for each stimulus condition. This symmetry implies a symmetry constraint on the starting point in the diffusion model, z = a/2, and equality of the decision criteria in the dual-diffusion models, a1 = a2. I fit the same four models as for the Ratcliff et al. (2008) numerosity study. The averaged fit statistics are shown in Table 5; the comparative performance for the individual participants is shown in Table 6, and the estimated parameters are shown in Table 7. Figure 5 shows the performance of the diffusion and LDLIV models; Fig. 6 shows the performance of the reflecting Wiener and racing Walds models. I fit the models in the same way as for the Ratcliff et al. study, except I fit the models for each cell of the Cue × Mask design separately (24 fits in all). Smith and Ratcliff (2022) reported fits to the data from the Smith et al. study summed across the four cells of the design for each of the six participants, but as I have fitted independent models to each cell of the design I report them instead as 24 separate model fits, which better reflects the way they were carried out. Whether or not fit statistics are summed across cells for each participant before comparing the models has no effect on the inferences I wish to draw about them.

The performance of the models on the spatial cuing task was similar to that on the numerosity task, except that the order of the diffusion model and the LDLIV model was reversed. The diffusion model was the best model on average by G2, the AIC, and the BIC, followed by the LDLIV model, then the reflecting Wiener model, and finally the racing Walds model. The average G2 for the diffusion model was only marginally smaller than for the LDLIV model, but the average AIC and BIC were both considerably smaller because of the diffusion model’s greater parsimony. At the level of the individual fits, the picture differed, depending on whether the AIC or BIC was used. By the AIC, the performance of the LDLIV model was slightly better than that of the diffusion model (13/24), but the BIC overwhelmingly favored the diffusion model (19/24). Some researchers have reported that the BIC has poorer model recovery properties than the AIC because of its bias against complexity (Donkin, Nosofsky, Gold, & Shiffrin, 2015; Oberauer & Lin, 2017; van den Berg, Awh, & Ma, 2014), but the picture that emerges if performance on both criteria is taken into account is similar to the one obtained from the Ratcliff (2008) study, which suggests that both the diffusion and LDLIV models provide good accounts of the data. The quantile probability plots in Fig. 5 further reinforce the picture obtained from the fit statistics. Both models captured the essential structure of the data fairly well, although there are differences in the way they characterize the slow error pattern, particularly in the two masked conditions, in which the slow error pattern is most pronounced. Whereas the .9 quantile functions for the diffusion model are uniformly concave for all conditions, the corresponding functions for the LDLIV model show a tendency to become convex. These qualitative differences in the predictions reflect differences in the way in which the models parameterize drift rate variability. Both models predict slow errors via drift rate variability: In the diffusion model, drift rates are normally distributed and may take on both positive and negative values; in the LDLIV model, drift rates are lognormally distributed and constrained to be positive. The differences in the .9 quantile functions in Fig. 5 are a reflection of this difference.
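The difference between the two drift rate distributions can be sketched numerically. The example below assumes a normal and a lognormal distribution matched on mean and standard deviation; the moment matching, the function names, and the values are illustrative assumptions and do not reproduce the parameterization used in the fits.

```python
import math
import random

def prob_negative_normal_drift(mean, sd):
    """P(drift < 0) when the drift rate is Normal(mean, sd)."""
    return 0.5 * (1.0 + math.erf(-mean / (sd * math.sqrt(2.0))))

def lognormal_params(mean, sd):
    """Parameters (mu, sigma) of the underlying normal for a lognormal
    distribution with the given mean and standard deviation."""
    sigma_sq = math.log(1.0 + (sd / mean) ** 2)
    return math.log(mean) - 0.5 * sigma_sq, math.sqrt(sigma_sq)

# Hypothetical drift rate distribution with mean 1 and standard deviation 1.
p_negative = prob_negative_normal_drift(mean=1.0, sd=1.0)

rng = random.Random(1)  # fixed seed so the sketch is reproducible
mu, sigma = lognormal_params(mean=1.0, sd=1.0)
samples = [rng.lognormvariate(mu, sigma) for _ in range(10_000)]
```

With these values about 16% of trials under the normal distribution have a negative drift rate, that is, a drift towards the wrong response, whereas every lognormal sample is positive. Slow errors in the two models therefore arise from different parts of the drift rate distribution.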

The qualitative pattern of predictions for the reflecting Wiener model in Fig. 6 resembles that for the LDLIV model in Fig. 5, but the effects are magnified. In the masked conditions, the quantile probability functions are concave for correct responses and convex for errors, which may arguably capture the qualitative structure slightly better than the other models, although the quantitative fit is worse. The model is appreciably worse than the LDLIV model by the AIC (6/24) but only slightly worse by the BIC (11/24) because the LDLIV model has an additional decay parameter that is heavily penalized by the BIC. As in the fit to the Ratcliff (2008) data, the reflecting boundary was estimated to be quite far from the starting point (more than a third of the distance of the starting point from the decision boundary), so I refit the model with r = −1.5a, as I did previously. The mean G2 for the constrained model was 140.1 with associated AIC and BIC of 162.7 and 206.6, respectively. The AIC selected the model with the reflecting boundary as the better model; the BIC selected the model without it, again because the BIC penalizes free parameters more severely. At the individual participant level, the model with the freely-varying reflecting boundary is preferred for only 9 of the 24 data sets by the AIC and 5 by the BIC. Like the fits of the model to the Ratcliff (2008) data, then, these fit statistics show the performance of the model is only marginally improved by the presence of a reflecting boundary.
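The way the two criteria can disagree over a single extra parameter can be seen in a short sketch. It assumes the common forms AIC = G2 + 2m and BIC = G2 + m ln N, where m is the number of free parameters and N the number of observations. These forms and all of the numbers below are assumptions for illustration, not values from the tables.

```python
import math

def aic(g2, n_params):
    """AIC under the assumed form G2 + 2m."""
    return g2 + 2 * n_params

def bic(g2, n_params, n_obs):
    """BIC under the assumed form G2 + m * ln(N)."""
    return g2 + n_params * math.log(n_obs)

# Hypothetical nested models: the extra parameter improves G2 by 4 units.
n_obs = 400
constrained = {"g2": 100.0, "m": 10}
free = {"g2": 96.0, "m": 11}

aic_prefers_free = aic(free["g2"], free["m"]) < aic(constrained["g2"], constrained["m"])
bic_prefers_free = (bic(free["g2"], free["m"], n_obs)
                    < bic(constrained["g2"], constrained["m"], n_obs))
```

An extra parameter costs 2 units under the AIC but ln N units under the BIC (about 6 when N = 400), so an improvement in G2 of 4 is enough for the AIC to prefer the larger model but not for the BIC.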