Abstract

Despite the recent increase in second-person neuroscience research, it is still hard to understand which neurocognitive mechanisms underlie real-time social behaviours. Here, we propose that social signalling can help us understand social interactions at both the single- and two-brain level in terms of social signal exchanges between senders and receivers. First, we show how subtle manipulations of being watched provide an important tool to dissect meaningful social signals. We then focus on how social signalling can help us build testable hypotheses for second-person neuroscience with the example of imitation and gaze behaviour. Finally, we suggest that linking neural activity to specific social signals will be key to fully understanding the neurocognitive systems engaged during face-to-face interactions.

Introduction

Interest in the neuroscience of social interactions has grown rapidly in the past decade. Influential opinion papers have called for a new “second-person neuroscience” and for the study of face-to-face dynamics (De Jaegher et al., 2010; Risko et al., 2016; Schilbach et al., 2013). Building on these, researchers have begun to develop paradigms where two or more people interact (Konvalinka et al., 2010; Sebanz et al., 2006) and where brain activity is captured using hyperscanning (Babiloni & Astolfi, 2014; Montague et al., 2002). However, it is still not easy to pin down specific cognitive models of the processes engaged when people take part in dynamic, real-time interactions. That is, what kind of neurocognitive models can we use to make sense of dynamic social interactions?

Here, we propose that a social signalling framework can help us understand social interactions both at the single- and two-brain level in terms of signal exchanges between senders and receivers. Social signalling takes an incremental approach to this problem, asking what factors change between situations where one participant performs a task alone and the same situation where the participant is interacting with another person as they perform a task together. In particular, we suggest that the simple manipulation of “being watched” or not by another person provides a core test of social signalling and may be able to give us a robust and general theoretical framework in which to advance “second-person neuroscience.”

First, we briefly review evidence that “being watched” matters to participants’ behaviour and their brain activity patterns. We then outline the social signalling framework for understanding these changes, and we detail how this can be applied to understand two cases of social behaviour: imitation and eye gaze. Finally, we review emerging evidence on the neural mechanisms of social signalling and set out future directions.

Being watched as a basic test of social interactions

There is a long tradition of research into the differences in our behaviour when we are alone, versus when we are in the presence of others. A series of studies from Zajonc (1965) showed that cockroaches, rats, monkeys, and humans showed changes in behaviour when in the presence of a conspecific, a phenomenon described as social facilitation. It has been proposed that the presence of conspecifics (regardless of whether they are watching) increases arousal and facilitates dominant behaviours, in both cognitive and motor tasks (Geen, 1985; Strauss, 2002; Zajonc & Sales, 1966).

In humans, the effect of being watched by another person goes beyond mere social facilitation and has been described as an audience effect (Hamilton & Lind, 2016). Being watched is one of the most basic and simplest social interactions, first studied by Triplett more than 100 years ago (Triplett, 1898), when he showed that children wind in a fishing reel faster when with another child than when alone. Since then, several studies have shown how an audience can induce the belief in being watched and cause changes in behaviour and in underlying brain activity. The audience effect is most clearly induced by the physical presence of another person who is actively watching the participant but can also be induced by the feeling of being watched (e.g., via camera), and these different triggering conditions are reviewed below. For instance, participants tend to gaze less at the face of a live confederate when compared with the same confederate in a prerecorded video clip (Cañigueral & Hamilton, 2019a; Gobel et al., 2015; Laidlaw et al., 2011), and they smile more in the presence of a live friend or confederate (Fridlund, 1991; Hietanen et al., 2018). During economic games and social dilemmas, the belief in being watched leads to an increase in prosocial behaviour (Cañigueral & Hamilton, 2019a; Izuma et al., 2009; Izuma et al., 2011) and a decrease in risk-taking (Kumano et al., 2021) as well as more brain activity in regions linked to mentalizing and social reward processing (Izuma et al., 2009, 2010).

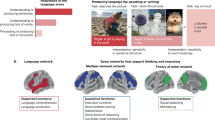

Several different cognitive mechanisms have been proposed to account for audience effects (Fig. 1). The response to being watched may draw on perceptual mentalizing (i.e., the attribution of perceptual states to other people; Teufel et al., 2010) to determine what the other person can see, and theory of mind (Tennie et al., 2010) to determine what they think. The presence of “watching eyes” could engage self-referential processing, which increases the sense of self-involvement in the interaction (Conty et al., 2016; Hazem et al., 2017). Furthermore, Bond (1982) proposed a self-presentation model of audience effects, whereby participants change their behaviour to present themselves positively to the audience. This also fits with the recent idea that being watched engages reputation management mechanisms—that is, changes in behaviour that aim to promote positive judgements in the presence of others (Cage, 2015; Izuma et al., 2009, 2010). However, there is still uncertainty about how to best interpret these findings and integrate them with other aspects of social neuroscience. Crucially, common to all these models is the idea that participants can send information about themselves to the watcher, that is, they can communicate.

Fig. 1 (a) Viewing a picture or movie of another person engages processes of social perception only. (b) Seeing another person face to face means that it is possible, and socially important, to consider what the other person can see. This may engage processes of perceptual mentalizing, self-referential thinking, reputation management and social decision-making.

From being watched to social signalling

To make sense of these very basic types of communication, we believe that it is helpful to draw on the extensive studies on signalling in animal behaviour (Stegmann, 2013). In this tradition, a signal is defined as a stimulus which sends information from one individual to another, and which is performed in order to benefit both the sender and the receiver. In contrast, a cue is a stimulus which only benefits the receiver. For example, the carbon dioxide which I breathe out is a cue to a mosquito that wants a meal but does not benefit me. In contrast, the bright colour of a butterfly’s wings is a signal to birds to avoid eating the butterfly, which benefits the butterfly in helping it to avoid predation. The concept of signalling described here is very broad, applying to all types of stimuli from wing colour (defined by evolution) to particular behaviours (which only occur in particular contexts and might be learnt). Here, we take this general idea and apply it to the much more specific case of human nonverbal behaviour. In particular, we suggest that it is helpful to use the idea of signalling to define which human actions are used as signals and what those signals mean.

The social signalling framework proposes that if a human action is a social signal, it must meet two basic criteria. First, the sender must produce the action in order to influence the receiver. Second, the action must have a beneficial impact on the receiver. Importantly, to test the first criterion, we can vary the presence of an audience who can receive the signal. If an action is performed when the sender can be seen and is suppressed when the sender cannot be seen, we have evidence that the action is being used as a signal. To test the second criterion, we must evaluate how the receiver’s behaviour or mental state changes when they perceive the action, that is, do receivers change their attitude to the sender when they receive a signal? Note that receiving a signal should benefit the receiver in the sense that they have gained information about the social world, even if that information is negatively valenced (e.g., learning that another person is hostile). However, we acknowledge that there are circumstances in which receiving additional social information via a signal may have negative consequences for the receiver (e.g., if the sender is lying and uses the signal to manipulate the receiver); such circumstances are beyond the scope of this paper.
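The first criterion lends itself to a simple within-participant comparison. The following is a minimal sketch, assuming hypothetical per-participant counts of an action (e.g., smiles or imitative movements) recorded once while watched and once while not watched. The function name, the data, and the choice of a sign-flip permutation test are illustrative assumptions, not the analysis used in any of the studies cited here.

```python
import random
from statistics import mean


def audience_effect_test(watched, unwatched, n_perm=10000, seed=0):
    """Paired sign-flip permutation test (one-sided: watched > unwatched).

    Returns (mean paired difference, approximate p-value).
    Hypothetical helper written for illustration only.
    """
    if len(watched) != len(unwatched):
        raise ValueError("watched and unwatched must be paired per participant")
    diffs = [w - u for w, u in zip(watched, unwatched)]
    observed = mean(diffs)
    rng = random.Random(seed)
    at_least_as_large = 0
    for _ in range(n_perm):
        # Under the null hypothesis the condition labels are exchangeable,
        # so each participant's difference is equally likely to be + or -.
        permuted = mean(d if rng.random() < 0.5 else -d for d in diffs)
        if permuted >= observed:
            at_least_as_large += 1
    p_value = (at_least_as_large + 1) / (n_perm + 1)
    return observed, p_value


# Hypothetical action counts for eight participants (not real data).
watched = [12, 9, 14, 11, 10, 13, 12, 15]
unwatched = [8, 9, 10, 7, 9, 10, 11, 9]

effect, p = audience_effect_test(watched, unwatched)
print(f"mean increase when watched: {effect:.3f}, p = {p:.4f}")
```

A reliable increase in the action when the sender can be seen would be consistent with the first criterion; it says nothing about the second criterion, which requires measuring the receiver.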

Overall, the social signalling framework takes an incremental approach to understanding social interactions in terms of signal exchanges between senders and receivers. Comparing cognitive processes between a solo task (e.g., one participant responding to a computer) and a dynamic social interaction (e.g., two participants in conversation) is very complex. By studying the specific processes which change between a solo task and a solo task with an audience, we hope it will be possible to incrementally specify the different cognitive processes involved in different types of social behaviour.

Social signalling builds on the basic premise of second-person neuroscience, that engaging in social interactions involves additional neurocognitive processes and social dynamics compared to not being in an interaction (Redcay & Schilbach, 2019; Schilbach et al., 2013), and sets out a concrete framework to establish testable hypotheses in the context of two-person interactions. In particular, social signalling suggests that we need to study and understand the interactive behaviour of both performers (senders) and observers (receivers). By using the simple manipulation of being watched or not being watched (i.e., varying the presence of an audience/receiver), we can test which behaviours are used as signals and define what information content the signals might carry (Bacharach & Gambetta, 2001; Skyrms, 2010). In contrast, by varying the presence of a social signal, we can test which effects, if any, this signal has on the receiver.

What counts as an audience?

To build a comprehensive account of social signalling, it is important to consider which manipulations count as being watched or not, and thus which engage the additional cognitive processes involved in audience effects. The extreme cases are most clear cut—when a participant is engaged in a face-to-face conversation with another living person, it is clear that they are being watched, while a participant who views a cartoon of a pair of eyes on a computer alone in a room (with no cameras) might see a face-like image but is not being watched. We illustrate these examples in Fig. 2, where we divide the space of possible interactions in terms of the visible stimulus features (e.g., an image of eyes) on the x-axis, and top-down contextual knowledge on the y-axis. The face-to-face conversation includes both visual cues to another person and the knowledge they are real and are watching (Fig. 2a) so an audience effect should be active. Instead, the person viewing cartoon eyes has minimal visual cues together with the knowledge they are not being watched (Fig. 2e), so the audience effect is not active.

Fig. 2 Common stimuli used in social neuroscience research represented in a 2D space, along a spectrum of top-down (y-axis) and bottom-up (x-axis) features. Studies in the top-right corner of the plot clearly involve “being watched” (a) while those in the bottom-left do not (e). Studies in the yellow zone may be more ambiguous (b–d). (Colour figure online)

However, there are many other possible contexts which are much more ambiguous, and where we might not know if an audience effect is present or not; these are illustrated on the yellow diagonal (Fig. 2b–d). These include cases where a participant sees a camera and is told “you are being watched” but sees no visual cues (Fig. 2b; e.g., Somerville et al., 2013). In other cases, a participant might see an image or video clip of a person who directly addresses them (Fig. 2d), which include rich visual cues but participants still know that this image or video clip cannot actually see them (e.g., Baltazar et al., 2014; Wang & Hamilton, 2012). Finally, a number of studies use a combination of instructions and visual stimuli to induce the belief that participants are or are not engaged in a social interaction, using fake video calls (Cañigueral & Hamilton, 2019a), fake mirrors (Hietanen et al., 2019), or virtual characters (Wilms et al., 2010; Fig. 2c).

In each of these cases, either the perceptual features of the stimulus or the instructions given by the experimenter may lead participants to feel as if they are being watched. In interpreting such studies, we make the assumption that the feeling of “I am watched by a person” is a categorical percept (Hari & Puce, 2010)—that is, in each case the participant either does feel watched or does not, with no feeling of being half watched. However, it is not easy to make a blanket rule for which stimuli in the “ambiguous zone” will be treated as “true watchers” by participants and which will not—subtle effects of context can have a large impact. Equally, it is possible that some participants interpret a particular context as “being watched” while other participants in the same study do not. Future studies that aim to define more clearly when an ambiguous stimulus is treated as an audience will be useful in understanding basic mechanisms involved in detecting humans and triggering audience effect processes.

In the following, we illustrate how a framework of social signalling can help us build testable hypotheses for second-person neuroscience by separately manipulating both the sender and the receiver in a two-person interaction. We particularly focus on the examples of imitation and gaze behaviour, although similar studies have examined other social behaviours including facial expressions (Crivelli & Fridlund, 2018), eye blinks (Hömke et al., 2017, 2018), and hand gestures (Holler et al., 2018; Mol et al., 2011).

Imitation as an example of social signalling

Imitation is a simple social behaviour in which the actions of one person match the actions of the other (Heyes, 2011). It is relatively easy to recognise (Thorndike, 1898; Whiten & Ham, 1992) but there are many theories of why people imitate (see Farmer et al., 2018, for a review). These include imitation to learn new skills (Flynn & Smith, 2012); imitation to improve our understanding of another person via simulation (Gallese & Goldman, 1998; Pickering & Garrod, 2013); imitation to affiliate with others (Over & Carpenter, 2013; Uzgiris, 1981); and imitation as a side effect of associative learning (Heyes, 2017). Here, we focus on the claim that imitation is used as a social signal in order to build affiliation with others, sometimes described as the social glue hypothesis (Dijksterhuis, 2005; Lakin et al., 2003; Wang & Hamilton, 2012). Note that other social signals need not be linked to affiliation, such as those behaviours aimed at signalling dominance or status (Burgoon & Dunbar, 2006).

The claim that imitation acts as a social glue assumes that two people engage in an imitation sequence as shown in Fig. 3a. Here, the woman performs a hand gesture and the man then imitates her action: this is represented in the figure below as a pseudo-conversation, where red bubbles are the woman’s action/cognition and blue bubbles are the man’s action/cognition. When the man imitates the woman, his action is sending a signal to her. When she senses (probably implicitly) that she is being imitated, she receives the signal and may adjust her evaluation to like him more. If this interpretation of the imitation sequence under the social signalling framework is true, we can set out two testable hypotheses. First, imitation should be produced when other people can see it (Fig. 3b), because there is no need to send a signal if the signal cannot be received. Second, being imitated should change the internal state or behaviour of the receiver, as the receiver has gained new information about the sender (Fig. 3c).

Fig. 3 The social signalling framework in the context of imitation. In a typical imitation sequence (a), one person acts, the second copies, and the first responds to being imitated; this is represented as a pseudo-conversation, where red bubbles are the woman’s action/cognition and blue bubbles are the man’s action/cognition. Two predictions must be true to classify imitation as a signal. First, the imitator (sender) produces the action more when he can be seen (b); data in Panel b confirms this (Krishnan-Barman & Hamilton, 2019). Second, the imitatee (receiver) must detect on some level that she is being copied and must respond (c); brain systems linked to this process are summarised in Panel c (IPL = inferior parietal lobule; TPJ = temporo-parietal junction; IFG = inferior frontal gyrus; vmPFC = ventromedial prefrontal cortex; Hale & Hamilton, 2016a). (Colour figure online)

In a study of dyadic interaction, we found support for the first prediction of the social signalling framework of imitation (Krishnan-Barman & Hamilton, 2019). Pairs of naïve participants in an augmented reality space were assigned roles of Leader and Follower in a cooperative game. On each trial, the Leader learnt a block-moving sequence from the computer and demonstrated it to the Follower, who was instructed to move the blocks in the same order; dyads received a score based on fast and accurate performance. Unbeknownst to the Follower, the Leader was given a secret instruction to move blocks using specific trajectories, including unusually high trajectories in some trials. In one half of the trials the Leader watched the Follower make their subsequent movements, while in the other half of the trials the Leader had their eyes closed during the Follower’s turn. We found that overall Followers tended to imitate the trajectories demonstrated by the Leaders, and critically, they imitated Leaders with greater fidelity when they knew they were being watched by the Leader (Fig. 3b).

The finding that being watched increases imitation is also seen in other contexts. A previous study (Bavelas et al., 1986) showed that observers winced more when watching an experimenter who was maintaining eye contact with them sustain a minor injury when compared with an experimenter who was looking elsewhere when experiencing a minor injury. Both toddlers (Vivanti & Dissanayake, 2014) and 4-month-old infants (de Klerk et al., 2018) show a greater propensity to imitate models in a video demonstration when cued with a direct rather than averted gaze. In a rapid reaction time study with adults, direct gaze enhances mimicry (Wang et al., 2011) and this effect is only seen if the gaze is present during the response period (Wang & Hamilton, 2014). However, in studies using direct gaze cues, it is hard to rule out arousal or alerting effects arising from the eyes (Senju & Johnson, 2009). Our recent paper (Krishnan-Barman & Hamilton, 2019) manipulated only the belief in being watched because participants stood side by side and did not directly see each other’s eyes. This suggests that the effect is not merely an epiphenomenon of arousal (Senju & Johnson, 2009) but is driven by the capacity to signal to the partner when the partner’s eyes are open. Together, all these results offer support for the hypothesis that imitation is a social signal initiated by the sender.

We now turn to the second hypothesis: if imitation is a social signal, then being imitated should (on some level) be detected by the receiver and this new information should change the internal state or behaviour of the receiver. Several detailed reviews outline the downstream impacts of being mimicked (Chartrand & Lakin, 2012; Chartrand & van Baaren, 2009; Hale & Hamilton, 2016a; but see Hale & Hamilton, 2016b). Broadly, being mimicked appears to build rapport and increase our liking for other people (Chartrand & Bargh, 1999; Lakin & Chartrand, 2003; Stel & Vonk, 2010), and this effect is present from early in childhood (Meltzoff, 2007). Interestingly, this effect may persist even when the mimicker is a computer or virtual-reality agent (Bailenson & Yee, 2005; Suzuki et al., 2003; but see Hale & Hamilton, 2016b). In addition to building rapport, mimicking has also been shown to increase prosocial behaviour such as helping others (Müller et al., 2012) or increasing the tips that restaurant patrons give waitresses (van Baaren et al., 2003). Thus, positive behavioural consequences of being imitated seem well-documented, though the precise neural and cognitive mechanisms which allow us to detect “being mimicked” are less well defined (Hale & Hamilton, 2016a). Taken together, these results support the hypothesis that imitation is a signal, produced by a sender when they are being watched, and resulting in changes in behaviour among the recipients of this signal.

One important question in a signalling account of imitation concerns the level of intentionality and awareness of the signals, in both the sender and the receiver. Like many nonverbal behaviours, people often seem to be unaware of when they imitate others and of when others imitate them. In fact, awareness of being imitated may reduce the social glue effect (Kulesza et al., 2016). Thus, the social signalling framework makes no claims that people consciously intend to send a signal or are explicitly aware of receiving a signal, and it is possible that all these sophisticated processes can occur without awareness, in the same way that a tennis player can hit a ball without awareness of their patterns of muscle activity. It will be an interesting question for future studies to explore how intentions and awareness interact with nonverbal social signalling behaviours.

Gaze as an example of social signalling

Gaze and eye movements are a particularly intriguing social behaviour, because the eyes are used to gather information about the world but can also signal information to others (Gobel et al., 2015). There is evidence that people change their gaze behaviour when they are being watched, which supports the first prediction of the social signalling framework. For instance, participants direct less gaze to the face of a live confederate than to the face of the same confederate in a prerecorded video clip (Laidlaw et al., 2011). Similarly, across two studies we recently showed that, when participants are in a live interaction or when they are (or believe they are) in a live video call, they gaze less to the other person than if they are seeing a prerecorded video clip (Cañigueral & Hamilton, 2019a; Cañigueral, Ward, et al., 2021).

Some studies have suggested that gaze avoidance found in live contexts signals compliance with social norms (e.g., it is not polite to stare at someone; Foulsham et al., 2011; Gobel et al., 2015; Goffman, 1963) or reduces arousal associated with eye contact in live interactions (Argyle & Dean, 1965; Kendon, 1967). However, other studies using tasks that involve conversation have shown that the amount of gaze directed to the confederate is greater when they are listening compared to speaking (Cañigueral, Ward, et al., 2021; Freeth et al., 2013). Moreover, one study found that in contexts involving natural conversation participants direct more gaze to the confederate when they believe they are in a live video call (Mansour & Kuhn, 2019). Altogether, these findings suggest that, beyond being in a live interaction, the communicative context and role in the interaction also modulate gaze patterns (Cañigueral & Hamilton, 2019b).

In line with the second prediction of the social signalling framework, some studies show that live direct or averted gaze has effects on the receiver. Studies using pictures and virtual agents have shown that direct gaze can engage brain systems linked to reward (Georgescu et al., 2013; Kampe et al., 2001), but can also be processed as a threat stimulus (Sato et al., 2004). In live contexts, seeing direct or averted gaze activates the approach or avoidance motivational brain system, respectively (Hietanen et al., 2008; Pönkänen et al., 2011). In conversation, eye gaze regulates turn-taking between speakers and listeners: speakers avert their gaze when they start to speak and when they hesitate to indicate that they want to say something but give direct gaze to the listener when they are finishing an utterance to indicate that they want to give the turn (Ho et al., 2015; Kendon, 1967). Thus, the key role of eye gaze as a social signal emerges from its dual function as a cue of “being watched” and as a dynamic modulator of social interactions on a moment-by-moment basis (Cañigueral & Hamilton, 2019b).

Neural mechanisms for social signalling

Within the social signalling framework, the exchange of social signals will also modulate brain mechanisms engaged by senders and receivers. At the sender’s end, brain activity should change depending on the presence or absence of an audience, and several studies have used creative paradigms to test this hypothesis inside the fMRI scanner. For instance, using mirrors it has been shown that mutual eye contact with a live partner recruits the medial prefrontal cortex, a brain area involved in mentalizing and communication (Cavallo et al., 2015). Using a fake video-call paradigm, it has also been shown that the belief in being watched during a prosocial decision-making task recruits brain regions associated with mentalizing (medial prefrontal cortex) and reward processing (ventral striatum), which are two key processes for reputation management (Izuma et al., 2009, 2010). Similarly, the belief in being watched or that an audio feed is presented in real-time (versus prerecorded) engages mentalizing brain regions (Müller-Pinzler et al., 2016; Redcay et al., 2010; Somerville et al., 2013; Warnell et al., 2018), and the belief of chatting online with another human (versus a computer) engages reward processing areas (Redcay et al., 2010; Warnell et al., 2018). These studies all point to the idea that a particular network of brain regions previously linked to mentalizing and reward are also engaged when a participant feels they can be seen by or can communicate with another person.

Commenting on patterns of brain activity related to receiving social signals from other people might seem simple, in the sense that hundreds of studies have itemized brain regions of social perception. Such studies do not typically distinguish whether a particular behaviour was intended as a signal or not but have identified brain systems which respond to emotional faces, to gestures, to actions and to observing gaze patterns (Andric & Small, 2012; Bhat et al., 2017; Diano et al., 2017; Pelphrey et al., 2004). When the finer distinction between a signal and a cue matters, it is helpful to consider studies which distinguish between ostensive and nonostensive behaviours. These have shown that ostensive communicative cues such as direct gaze, being offered an object, or hearing one’s own name recruit brain areas related to processing of communicative intent, mental states, and reward (Caruana et al., 2015; Kampe et al., 2003; Redcay et al., 2016; Schilbach et al., 2006; Schilbach et al., 2010; Tylén et al., 2012).

During social signalling, receivers also need to infer the intended message or “speaker meaning” embedded in a signal, which is strongly dependent on contextual information beyond the signal itself (e.g., based on assumptions about the senders’ beliefs and intentions; Hagoort, 2019). For instance, sustained direct gaze between participants interacting face-to-face can result in either laughter or hostility, according to the context set by a preceding cooperative or competitive task (Jarick & Kingstone, 2015). Thus, at the receiver’s end social signalling may also recruit brain systems involved in inferring such “speaker meaning.” Studies within the field of neuropragmatics have investigated this question in the context of spoken language, and have found that listening to irony, implicit answers or indirect evaluations and requests recruits the medial prefrontal cortex and temporal-parietal junction (Bašnáková et al., 2014; Jang et al., 2013; Spotorno et al., 2012; van Ackeren et al., 2012). Moreover, when participants are the receivers of the indirect message, versus just overhearers, listening to indirect replies also recruits the anterior insula and pregenual anterior cingulate cortex (Bašnáková et al., 2015). These findings suggest that mentalizing and affective brain systems are required to understand the “speaker meaning” and communicative intent of a signal (Hagoort, 2019; Hagoort & Levinson, 2014).

Although the studies presented above have advanced our understanding of how the brain implements a variety of cognitive processes when being watched or when receiving a social signal, they rely on controlled laboratory settings that require participants to be alone and stay still inside the fMRI scanner. This limits the researcher’s ability to study brain systems recruited in social interactions, where participants naturally move their face, head, and body to communicate with others. Luckily, these limitations can be overcome by techniques that allow much higher mobility (Czeszumski et al., 2020). For instance, although EEG has traditionally been highly sensitive to motion artifacts, recent developments have created robust mobile EEG systems that can be easily used in naturalistic settings (Melnik et al., 2017). To a lesser extent, MEG has also successfully been used for studies involving natural conversation (Mandel et al., 2016). Finally, functional near-infrared spectroscopy (fNIRS) is a novel neuroimaging technique that can record haemodynamic signals in the brain during face-to-face interactions (Pinti et al., 2018). Crucially, the fact that EEG and fNIRS are silent and wearable means that they can be easily combined with other methodologies that capture natural social behaviours, such as motion capture (mocap), face-tracking and eye-tracking systems.

For instance, by combining mocap and fNIRS to study imitation in a dyadic task, we have recently found that when participants are being watched as they perform an imitation task, there is a decrease in activation of the right parietal region and the right temporo-parietal junction (Krishnan-Barman, 2021). In another study (Cañigueral, Zhang, et al., 2021), we simultaneously recorded pairs of participants (who were facing each other) with eye-tracking, face-tracking, and fNIRS to test how social behaviours and brain activity are modulated when sharing biographical information. Results showed that reciprocal interactions where information was shared recruited brain regions previously linked to reputation management (Izuma, 2012), particularly to mentalizing (temporo-parietal junction [TPJ]) and strategic decision-making (dorsolateral prefrontal cortex [dlPFC]; Saxe & Kanwisher, 2003; Saxe & Wexler, 2005; Soutschek et al., 2015; Speitel et al., 2019).

Within the social signalling framework, it is necessary to link brain activity patterns to meaningful social signals to fully understand the neurocognitive systems engaged during social interactions. In an exploratory analysis, we investigated how the amount of facial displays is related to brain activity in face-to-face interactions (Cañigueral, Zhang, et al., 2021). We found that spontaneous production of facial displays (i.e., participants moving their own face) recruited the left supramarginal gyrus, whereas spontaneous observation of facial displays (i.e., participants seeing their partner move the face) recruited the right dlPFC. These brain regions have been previously linked to speech actions (Wildgruber et al., 1996) and emotion inference from faces (A. Nakamura et al., 2014; K. Nakamura et al., 1999), respectively. However, these findings also suggest that these brain regions are able to track facial displays over time (as the interaction develops), and further reveal that there may be specific brain systems involved in the dynamic processing of social signals beyond those traditionally linked to motor control and face perception.

Other studies have taken advantage of EEG and fNIRS to study how two brains synchronize when two people are interacting face-to-face and exchange specific social signals. For instance, dual brain and video recordings of hand movements show that cross-brain synchrony increases during spontaneous imitation of hand movements (Dumas et al., 2010). In combination with eye-tracking systems, it has also been shown that cross-brain synchrony between partners increases during moments of mutual eye contact (Hirsch et al., 2017; Leong et al., 2017; Piazza et al., 2020). To further test which specific aspects of social signalling are modulated by cross-brain synchrony, we combined behavioural and neural dyadic recordings with a novel analytical approach that carefully controls for task- and behaviour-related effects (cross-brain GLM; Kingsbury et al., 2019; Cañigueral, Zhang, et al., 2021). We found that, after controlling for task structure and social behaviours, cross-brain synchrony between mentalizing (right TPJ) and strategic decision-making regions (left dlPFC) increased when participants were sharing information. In line with the mutual prediction theory (Hamilton, 2021; Kingsbury et al., 2019), this finding suggests that cross-brain synchrony allows us to appropriately anticipate and react to each other’s social signals in the context of an ongoing shared interaction.
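To make the logic of this kind of control concrete, the following is a minimal sketch in Python (NumPy). It is not the cross-brain GLM used in the studies cited above: it is a simplified stand-in that regresses task and behaviour regressors out of each participant's signal with ordinary least squares and then correlates the residuals. The signals are simulated, and all names (`task`, `gaze`, `shared`, `residual_synchrony`) and numerical values are illustrative assumptions.

```python
import numpy as np


def residualize(signal, nuisance):
    """Return the part of `signal` not explained by the nuisance regressors (OLS)."""
    # Design matrix: an intercept column plus the nuisance regressors.
    design = np.column_stack([np.ones(len(signal)), nuisance])
    beta, *_ = np.linalg.lstsq(design, signal, rcond=None)
    return signal - design @ beta


def residual_synchrony(brain_a, brain_b, nuisance):
    """Correlate two signals after removing task- and behaviour-related variance."""
    res_a = residualize(brain_a, nuisance)
    res_b = residualize(brain_b, nuisance)
    return float(np.corrcoef(res_a, res_b)[0, 1])


# Simulated data (assumption: one channel per participant, 600 samples).
rng = np.random.default_rng(0)
n = 600
task = (np.arange(n) // 50 % 2).astype(float)  # alternating task blocks
gaze = rng.normal(size=n)                      # hypothetical behavioural regressor
shared = rng.normal(size=n)                    # hypothetical coupling unrelated to task or gaze

brain_a = 2.0 * task + 0.5 * gaze + 0.6 * shared + rng.normal(size=n)
brain_b = 2.0 * task + 0.5 * gaze + 0.6 * shared + rng.normal(size=n)

nuisance = np.column_stack([task, gaze])
raw_sync = float(np.corrcoef(brain_a, brain_b)[0, 1])
controlled_sync = residual_synchrony(brain_a, brain_b, nuisance)
```

In this simulation, the raw correlation between the two signals is inflated by the task structure and behaviour that both participants share, whereas the residual correlation reflects only the simulated component that neither regressor explains.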

Altogether, these studies demonstrate how a multimodal approach to social interactions is crucial to fully understand the neurocognitive systems underlying social signalling. Simple manipulations of the belief in being watched or communicative context show that mentalizing, reward and decision-making brain systems are engaged when participants take part in a live interaction. Beyond this, specific brain regions related to speech production and emotion processing track the dynamic exchange of social signals, while cross-brain synchrony might index the participants’ ability to anticipate and react to these signals. Future studies that carefully manipulate the social context and combine novel technologies to capture both brain activity and social behaviours will be critical to discern the role of each of these mechanisms in social signalling, as well as how they are all coordinated to enable real-world face-to-face social interactions.

Taking the social signalling framework further

The ideas about social signalling outlined here provide a very minimal version of this framework. We suggest that the simple manipulation of “being watched” or not by another person provides a core test of social signalling, and that the two key features required to identify a signal are that the sender intends (on some level) to send a signal and that the receiver reacts (in some way) to the signal. Researchers in animal communication often examine further criteria. For example, persistence by the sender provides evidence that a signal is important: if the sender does not see any reaction from the recipient and the signal matters, the sender will keep sending it. Such behaviour implies that the sender has a goal of “she must get the message” and will persist until the goal is achieved. Other studies of animal behaviour consider whether a particular signal is honest or deceptive (Dawkins & Guilford, 1991), and how recipients can distinguish between the two. Although our basic framework does not include these additional features, testing for them could help build a more complex and detailed account of social signalling.

Similarly, it is important to consider how social signalling might be modulated along different dimensions. For instance, while in many situations social signals are overt, in some cases there will be covert social signalling to facilitate effective cooperation: covert signals can be accurately received by their intended audience to foster affiliation, but not by others in whom they might lead to dislike (Smaldino et al., 2018). From the point of view of the audience, social signalling also differs in its directedness, that is, signals can be directed to “me,” or to a third person, or can also be undirected (e.g., face-covering tattoos; Gambetta, 2009). Importantly, depending on their directedness, social signals will recruit different brain systems (Tylén et al., 2012). Another intriguing aspect is the context in which social signalling takes place, and how we adapt (or not) social signals to each of these contexts. For example, nonverbal behaviours such as nodding or hand movements play a central role in face-to-face communication to convey the full complexity of a spoken message (Kendon, 1967, 1970), but we continue to perform them in a telephone conversation although they can no longer be seen by the receiver. Thus, social signalling should allow for the nuance present in real-world contexts when testing which behaviours do or do not count as social signals. Finally, inspired by fields like conversation analysis (Schegloff, 2007), the investigation of social interactions within a social signalling framework entails considering signals as components within sequences of interaction instead of isolated entities, where prior and subsequent signals determine the relevance and meaning of the current signal. Acknowledging these dimensions when designing and interpreting studies will be critical to avoid an oversimplified view of social signalling.

It is also helpful to consider how this signalling framework relates to other approaches to the study of social interaction, and we briefly describe two rival approaches. First, some have suggested that we should focus entirely on the interaction and the emergent features of that situation (De Jaegher et al., 2010). Such dynamical systems approaches often eschew traditional descriptions of single-brain cognition, and of individuals as “senders” and “receivers.” There may well be situations, such as understanding the dynamic coordination of pianists playing a duet, where a division of the interaction into two distinct roles does not help. However, as the examples above illustrate, there are many situations where it is useful to understand who is sending a signal, what signal that is and how the recipient responds.

Second, some theories of social behaviour draw on studies of linguistic communication to interpret actions in terms of many different levels of relevance (Sperber & Wilson, 1995), where each action is tailored to the needs of the recipient. Such careful and detailed communication may be found for verbal behaviour, but it is not clear that the same models can be imported as a framework for all types of social interaction. Our approach here deliberately draws on work from animal cognition, which makes minimal assumptions about the complexity of the cognitive processes underlying social signalling. A key challenge is to understand if signalling can be driven by simple rules or if it requires the full complexity of linguistic communication.

In the present paper, we aim to highlight a “mid-level” type of explanation as a useful framework for interpreting current studies and guiding future studies. We suggest that a social signalling framework gives us a way to understand two-person interactions at the single-brain level, in the context of all our existing cognitive neuroscience. We aim to define precisely what signals each individual sends and receives during an interaction and the cognitive processes involved. These cognitive processes happen within a single brain but only in the context of a dynamic interaction with another person.

Concluding remarks

Recent calls for second-person neuroscience have resulted in a significant body of research focused on two-person interactions. However, it is not yet clear which neurocognitive mechanisms underlie these real-time dynamic social behaviours, or how novel interactive methods can relate to findings from traditional single-brain studies of social cognition. The social signalling framework proposes that communication is embodied in social behaviours, and so must be instantiated in the physical world via signals embedded in motor actions, eye gaze or facial expressions. We propose that a social signalling framework can help us make sense of face-to-face interactions by taking step-by-step advances from traditional one-person studies to novel two-person paradigms (e.g., subtle manipulations of being watched). Key to this work is to understand the details of the signals—to identify a specific signal, link it to a context, understand when it is produced, and understand what effect it has on the receiver. We believe that, without a detailed understanding of signalling behaviours, it will be hard to make sense of the new wave of data emerging from second-person neuroscience methods.

Data availability

Not applicable.

Code availability

Not applicable.

References

Andric, M., & Small, S. L. (2012). Gesture’s neural language. Frontiers in Psychology, 3(99), 1–12.

Argyle, M., & Dean, J. (1965). Eye-Contact, Distance and Affiliation. Sociometry, 28(3), 289–304.

Babiloni, F., & Astolfi, L. (2014). Social neuroscience and hyperscanning techniques: Past, present and future. Neuroscience & Biobehavioral Reviews, 44, 76–93.

Bacharach, M., & Gambetta, D. (2001). Trust in signs. In K. S. Cook (Ed.), Trust in society (pp. 148–184). Russell Sage Foundation.

Bailenson, J. N., & Yee, N. (2005). Digital chameleons: Automatic assimilation of nonverbal gestures in immersive virtual environments. Psychological Science, 16(10), 814–819.

Baltazar, M., Hazem, N., Vilarem, E., Beaucousin, V., Picq, J. L., & Conty, L. (2014). Eye contact elicits bodily self-awareness in human adults. Cognition, 133(1), 120–127.

Bašnáková, J., van Berkum, J., Weber, K., & Hagoort, P. (2015). A job interview in the MRI scanner: How does indirectness affect addressees and overhearers? Neuropsychologia, 76, 79–91.

Bašnáková, J., Weber, K., Petersson, K. M., van Berkum, J., & Hagoort, P. (2014). Beyond the language given: The neural correlates of inferring speaker meaning. Cerebral Cortex, 24(10), 2572–2578.

Bavelas, J. B., Black, A., Lemery, C. R., & Mullett, J. (1986). “I show how you feel”: Motor mimicry as a communicative act. Journal of Personality and Social Psychology, 50(2), 322–329.

Bhat, A. N., Hoffman, M. D., Trost, S. L., Culotta, M. L., Eilbott, J., Tsuzuki, D., & Pelphrey, K. A. (2017). Cortical activation during action observation, action execution, and interpersonal synchrony in adults: A functional near-infrared spectroscopy (fNIRS) study. Frontiers in Human Neuroscience, 11(September). https://doi.org/10.3389/fnhum.2017.00431

Bond, C. F. J. (1982). Social facilitation: A self-presentational view. Journal of Personality and Social Psychology, 42(6), 1042–1050.

Burgoon, J. K., & Dunbar, N. E. (2006). Nonverbal expressions of dominance and power in human relationships. In V. Manusov & M. L. Patterson (Eds.), The SAGE handbook of nonverbal communication (pp. 279–298). SAGE Publications. https://doi.org/10.4135/9781412976152.n15

Cage, E. A. (2015). Mechanisms of social influence: Reputation management in typical and autistic individuals (Doctoral thesis). University of London.

Cañigueral, R., & Hamilton, A. F. de C. (2019a). Being watched: Effects of an audience on eye gaze and prosocial behaviour. Acta Psychologica, 195, 50–63.

Cañigueral, R., & Hamilton, A. F. de C. (2019b). The role of eye gaze during natural social interactions in typical and autistic people. Frontiers in Psychology, 10(560), 1–18.

Cañigueral, R., Ward, J. A., & Hamilton, A. F. de C. (2021). Effects of being watched on eye gaze and facial displays of typical and autistic individuals during conversation. Autism, 25(1), 210–226.

Cañigueral, R., Zhang, X., Noah, J. A., Tachtsidis, I., Hamilton, A. F. de C., & Hirsch, J. (2021). Facial and neural mechanisms during interactive disclosure of biographical information. NeuroImage, 226, 117572. https://doi.org/10.1016/j.neuroimage.2020.117572

Caruana, N., Brock, J., & Woolgar, A. (2015). A frontotemporoparietal network common to initiating and responding to joint attention bids. NeuroImage, 108, 34–46.

Cavallo, A., Lungu, O., Becchio, C., Ansuini, C., Rustichini, A., & Fadiga, L. (2015). When gaze opens the channel for communication: Integrative role of IFG and MPFC. NeuroImage, 119, 63–69.

Chartrand, T. L., & Bargh, J. A. (1999). The chameleon effect: The perception–behavior link and social interaction. Journal of Personality and Social Psychology, 76(6), 893–910.

Chartrand, T. L., & Lakin, J. L. (2012). The antecedents and consequences of human behavioral mimicry. Annual Review of Psychology, 64(1), 285–308.

Chartrand, T. L., & van Baaren, R. B. (2009). Human mimicry. In M. P. Zanna (Ed.), Advances in experimental social psychology (Vol. 41, pp. 219–274). https://doi.org/10.1016/S0065-2601(08)00405-X

Conty, L., George, N., & Hietanen, J. K. (2016). Watching Eyes effects: When others meet the self. Consciousness and Cognition, 45, 184–197.

Crivelli, C., & Fridlund, A. J. (2018). Facial displays are tools for social influence. Trends in Cognitive Sciences, 22(5), 388–399.

Czeszumski, A., Eustergerling, S., Lang, A., Menrath, D., Gerstenberger, M., Schuberth, S., Schreiber, F., Rendon, Z. Z., & König, P. (2020). Hyperscanning: A valid method to study neural inter-brain underpinnings of social interaction. Frontiers in Human Neuroscience, 14(February), 1–17.

Dawkins, M. S., & Guilford, T. (1991). The corruption of honest signalling. Animal Behaviour, 41(5), 865–873.

De Jaegher, H., Di Paolo, E., & Gallagher, S. (2010). Can social interaction constitute social cognition? Trends in Cognitive Sciences, 14(10), 441–447.

de Klerk, C. C. J. M., Hamilton, A. F. de C., & Southgate, V. (2018). Eye contact modulates facial mimicry in 4-month-old infants: An EMG and fNIRS study. Cortex, 106, 93–103.

Diano, M., Tamietto, M., Celeghin, A., Weiskrantz, L., Tatu, M. K., Bagnis, A., Duca, S., Geminiani, G., Cauda, F., & Costa, T. (2017). Dynamic changes in amygdala psychophysiological connectivity reveal distinct neural networks for facial expressions of basic emotions. Scientific Reports, 7(February), 1–13.

Dijksterhuis, A. (2005). Why are we social animals: The high road to imitation as social glue. In S. Hurley & N. Chater (Eds.), Perspectives on imitation: From neuroscience to social science (Vol. 2, pp. 207–220). MIT Press.

Dumas, G., Nadel, J., Soussignan, R., Martinerie, J., & Garnero, L. (2010). Inter-brain synchronization during social interaction. PLOS ONE, 5(8), Article e12166. https://doi.org/10.1371/journal.pone.0012166

Farmer, H., Ciaunica, A., & Hamilton, A. F. de C. (2018). The functions of imitative behaviour in humans. Mind & Language, 33(4), 378–396.

Flynn, E., & Smith, K. (2012). Investigating the mechanisms of cultural acquisition: How pervasive is overimitation in adults? Social Psychology, 43(4), 185–195.

Foulsham, T., Walker, E., & Kingstone, A. (2011). The where, what and when of gaze allocation in the lab and the natural environment. Vision Research, 51(17), 1920–1931.

Freeth, M., Foulsham, T., & Kingstone, A. (2013). What affects social attention? Social presence, eye contact and autistic traits. PLOS ONE, 8(1). https://doi.org/10.1371/journal.pone.0053286

Fridlund, A. J. (1991). Sociality of solitary smiling: Potentiation by an implicit audience. Journal of Personality and Social Psychology, 60(2), 229–240.

Gallese, V., & Goldman, A. (1998). Mirror neurons and the simulation theory of mind-reading. Trends in Cognitive Sciences, 2(12), 493–501.

Gambetta, D. (2009). Codes of the underworld: How criminals communicate. Princeton University Press.

Geen, R. G. (1985). Evaluation apprehension and response withholding in solution of anagrams. Personality and Individual Differences, 6(3), 293–298.

Georgescu, A. L., Kuzmanovic, B., Schilbach, L., Tepest, R., Kulbida, R., Bente, G., & Vogeley, K. (2013). Neural correlates of “social gaze” processing in high-functioning autism under systematic variation of gaze duration. NeuroImage: Clinical, 3, 340–351.

Gobel, M. S., Kim, H. S., & Richardson, D. C. (2015). The dual function of social gaze. Cognition, 136, 359–364.

Goffman, E. (1963). Behavior in public places. Simon & Schuster.

Hagoort, P. (2019). The neurobiology of language beyond single-word processing. Science, 366(6461), 55–58.

Hagoort, P., & Levinson, S. C. (2014). Neuropragmatics. In M. S. Gazzaniga & G. R. Mangun (Eds.), The cognitive neurosciences (5th ed., pp. 667–674). MIT Press.

Hale, J., & Hamilton, A. F. de C. (2016a). Cognitive mechanisms for responding to mimicry from others. Neuroscience and Biobehavioral Reviews, 63, 106–123.

Hale, J., & Hamilton, A. F. de C. (2016b). Testing the relationship between mimicry, trust and rapport in virtual reality conversations. Scientific Reports, 6(1), 35295.

Hamilton, A. F. de C. (2021). Hyperscanning: Beyond the Hype. Neuron, 109(3), 404–407. https://doi.org/10.1016/j.neuron.2020.11.00

Hamilton, A. F. de C., & Lind, F. (2016). Audience effects: What can they tell us about social neuroscience, theory of mind and autism? Culture and Brain, 4(2), 159–177. https://doi.org/10.1007/s40167-016-0044-5

Hari, R., & Puce, A. (2010). The tipping point of animacy: How, when, and where we perceive life in a face. Psychological Science, 21(12), 1854–1862.

Hazem, N., George, N., Baltazar, M., & Conty, L. (2017). I know you can see me: Social attention influences bodily self-awareness. Biological Psychology, 124, 21–29.

Heyes, C. (2011). Automatic imitation. Psychological Bulletin, 137(3), 463–483.

Heyes, C. (2017). When does social learning become cultural learning? Developmental Science, 20(2), 1–14.

Hietanen, J. K., Helminen, T. M., Kiilavuori, H., Kylliäinen, A., Lehtonen, H., & Peltola, M. J. (2018). Your attention makes me smile: Direct gaze elicits affiliative facial expressions. Biological Psychology, 132, 1–8.

Hietanen, J. K., Kylliäinen, A., & Peltola, M. J. (2019). The effect of being watched on facial EMG and autonomic activity in response to another individual’s facial expressions. Scientific Reports, 9(1), 14759.

Hietanen, J. K., Leppänen, J. M., Peltola, M. J., Linna-aho, K., & Ruuhiala, H. J. (2008). Seeing direct and averted gaze activates the approach-avoidance motivational brain systems. Neuropsychologia, 46(9), 2423–2430. http://linkinghub.elsevier.com/retrieve/pii/S0028393208000936

Hirsch, J., Zhang, X., Noah, J. A., & Ono, Y. (2017). Frontal temporal and parietal systems synchronize within and across brains during live eye-to-eye contact. NeuroImage, 157(January), 314–330.

Ho, S., Foulsham, T., & Kingstone, A. (2015). Speaking and listening with the eyes: Gaze signaling during dyadic interactions. PLOS ONE, 10(8), 1–18.

Holler, J., Kendrick, K. H., & Levinson, S. C. (2018). Processing language in face-to-face conversation: Questions with gestures get faster responses. Psychonomic Bulletin & Review, 25(5), 1900–1908.

Hömke, P., Holler, J., & Levinson, S. C. (2017). Eye blinking as addressee feedback in face-to-face conversation. Research on Language and Social Interaction, 50(1), 54–70.

Hömke, P., Holler, J., & Levinson, S. C. (2018). Eye blinks are perceived as communicative signals in human face-to-face interaction. PLOS ONE, 13(12), Article e0208030. https://doi.org/10.1371/journal.pone.0208030

Izuma, K. (2012). The social neuroscience of reputation. Neuroscience Research, 72(4), 283–288.

Izuma, K., Matsumoto, K., Camerer, C. F., & Adolphs, R. (2011). Insensitivity to social reputation in autism. Proceedings of the National Academy of Sciences, 108(42), 17302–17307.

Izuma, K., Saito, D. N., & Sadato, N. (2009). Processing of the incentive for social approval in the ventral striatum during charitable donation. Journal of Cognitive Neuroscience, 22(4), 621–631.

Izuma, K., Saito, D. N., & Sadato, N. (2010). The roles of the medial prefrontal cortex and striatum in reputation processing. Social Neuroscience, 5(2), 133–147.

Jang, G., Yoon, S., Lee, S.-E., Park, H., Kim, J., Ko, J. H., & Park, H.-J. (2013). Everyday conversation requires cognitive inference: Neural bases of comprehending implicated meanings in conversations. NeuroImage, 81, 61–72.

Jarick, M., & Kingstone, A. (2015). The duality of gaze: eyes extract and signal social information during sustained cooperative and competitive dyadic gaze. Frontiers in Psychology, 6(September), 1–7.

Kampe, K. K. W., Frith, C. D., Dolan, R. J., & Frith, U. (2001). Reward value of attractiveness and gaze. Nature, 413, 589.

Kampe, K. K. W., Frith, C. D., & Frith, U. (2003). “Hey John”: signals conveying communicative intention toward the self activate brain regions associated with “mentalizing”, regardless of modality. The Journal of Neuroscience, 23(12), 5258–5263.

Kendon, A. (1967). Some functions of gaze-direction in social interaction. Acta Psychologica, 26, 22–63.

Kendon, A. (1970). Movement coordination in social interaction: Some examples described. Acta Psychologica, 32, 100–125.

Kingsbury, L., Huang, S., Wang, J., Gu, K., Golshani, P., Wu, Y. E., & Hong, W. (2019). Correlated neural activity and encoding of behavior across brains of socially interacting animals. Cell, 178(2), 429–446.e16.

Konvalinka, I., Vuust, P., Roepstorff, A., & Frith, C. D. (2010). Follow you, follow me: Continuous mutual prediction and adaptation in joint tapping. Quarterly Journal of Experimental Psychology, 63(11), 2220–2230.

Krishnan-Barman, S. (2021). Adults imitate to send a social signal.

Krishnan-Barman, S., & Hamilton, A. F. de C. (2019). Adults imitate to send a social signal. Cognition, 187, 150–155.

Kulesza, W., Dolinski, D., & Wicher, P. (2016). Knowing that you mimic me: The link between mimicry, awareness and liking. Social Influence, 11(1), 68–74.

Kumano, S., Hamilton, A. F. de C., & Bahrami, B. (2021). The role of anticipated regret in choosing for others. Scientific Reports, 11(1), 12557.

Laidlaw, K. E. W., Foulsham, T., Kuhn, G., & Kingstone, A. (2011). Potential social interactions are important to social attention. Proceedings of the National Academy of Sciences, 108(14), 5548–5553.

Lakin, J. L., & Chartrand, T. L. (2003). Using nonconscious behavioral mimicry to create affiliation and rapport. Psychological Science, 14(4), 334–339.

Lakin, J. L., Jefferis, V. E., Cheng, C. M., & Chartrand, T. L. (2003). The chameleon effect as social glue: Evidence for the evolutionary significance of nonconscious mimicry. Journal of Nonverbal Behavior, 27(3), 145–161.

Leong, V., Byrne, E., Clackson, K., Georgieva, S., Lam, S., & Wass, S. (2017). Speaker gaze increases information coupling between infant and adult brains. Proceedings of the National Academy of Sciences, 114(50), 13290–13295.

Mandel, A., Bourguignon, M., Parkkonen, L., & Hari, R. (2016). Sensorimotor activation related to speaker vs. listener role during natural conversation. Neuroscience Letters, 614, 99–104.

Mansour, H., & Kuhn, G. (2019). Studying “natural” eye movements in an “unnatural” social environment: The influence of social activity, framing, and sub-clinical traits on gaze aversion. Quarterly Journal of Experimental Psychology, 72(8), 1913–1925.

Melnik, A., Legkov, P., Izdebski, K., Kärcher, S. M., Hairston, W. D., Ferris, D. P., & König, P. (2017). Systems, subjects, sessions: To what extent do these factors influence EEG data? Frontiers in Human Neuroscience, 11(March), 1–20.

Meltzoff, A. N. (2007). “Like me”: A foundation for social cognition. Developmental Science, 10(1), 126–134.

Mol, L., Krahmer, E., Maes, A., & Swerts, M. (2011). Seeing and being seen: The effects on gesture production. Journal of Computer-Mediated Communication, 17(1), 77–100.

Montague, P. R., Berns, G. S., Cohen, J. D., McClure, S. M., Pagnoni, G., Dhamala, M., Wiest, M. C., Karpov, I., King, R. D., Apple, N., & Fisher, R. E. (2002). Hyperscanning: Simultaneous fMRI during Linked Social Interactions. NeuroImage, 16(4), 1159–1164.

Müller, B. C. N., Maaskant, A. J., van Baaren, R. B., & Dijksterhuis, A. P. (2012). Prosocial consequences of imitation. Psychological Reports, 110(3), 891–898.

Müller-Pinzler, L., Gazzola, V., Keysers, C., Sommer, J., Jansen, A., Frassle, S., Einhauser, W., Paulus, F. M., & Krach, S. (2016). Neural pathways of embarrassment and their modulation by social anxiety. NeuroImage, 49(0), 252–261.

Nakamura, A., Maess, B., Knösche, T. R., & Friederici, A. D. (2014). Different hemispheric roles in recognition of happy expressions. PLOS ONE, 9(2), e88628.

Nakamura, K., Kawashima, R., Ito, K., Sugiura, M., Kato, T., Nakamura, A., Hatano, K., Nagumo, S., Kubota, K., Fukuda, H., & Kojima, S. (1999). Activation of the right inferior frontal cortex during assessment of facial emotion. Journal of Neurophysiology, 82(3), 1610–1614.

Over, H., & Carpenter, M. (2013). The social side of imitation. Child Development Perspectives, 7(1), 6–11.

Pelphrey, K. A., Viola, R. J., & McCarthy, G. (2004). When strangers pass: Processing of mutual and averted social gaze in the superior temporal sulcus. Psychological Science, 15(9), 598–603.

Piazza, E. A., Hasenfratz, L., Hasson, U., & Lew-Williams, C. (2020). Infant and adult brains are coupled to the dynamics of natural communication. Psychological Science, 31(1), 6–17.

Pickering, M. J., & Garrod, S. (2013). An integrated theory of language production and comprehension. Behavioral and Brain Sciences, 36(4), 329–347.

Pinti, P., Tachtsidis, I., Hamilton, A. F. de C., Hirsch, J., Aichelburg, C., Gilbert, S. J., & Burgess, P. W. (2018). The present and future use of functional near-infrared spectroscopy (fNIRS) for cognitive neuroscience. Annals of the New York Academy of Sciences, 1464(1), 5–29.

Pönkänen, L. M., Peltola, M. J., & Hietanen, J. K. (2011). The observer observed: Frontal EEG asymmetry and autonomic responses differentiate between another person’s direct and averted gaze when the face is seen live. International Journal of Psychophysiology, 82(2), 180–187.

Redcay, E., Dodell-Feder, D., Pearrow, M. J., Mavros, P. L., Kleiner, M., Gabrieli, J. D. E., & Saxe, R. (2010). Live face-to-face interaction during fMRI: a new tool for social cognitive neuroscience. NeuroImage, 50(4), 1639–1647.

Redcay, E., Ludlum, R. S., Velnoskey, K. R., & Kanwal, S. (2016). Communicative signals promote object recognition memory and modulate the right posterior STS. Journal of Cognitive Neuroscience, 28(1), 8–19.

Redcay, E., & Schilbach, L. (2019). Using second-person neuroscience to elucidate the mechanisms of social interaction. Nature Reviews Neuroscience, 20(8), 495–505.

Risko, E. F., Richardson, D. C., & Kingstone, A. (2016). Breaking the fourth wall of cognitive science: real-world social attention and the dual function of gaze. Current Directions in Psychological Science, 25(1), 70–74.

Sato, W., Yoshikawa, S., Kochiyama, T., & Matsumura, M. (2004). The amygdala processes the emotional significance of facial expressions: An fMRI investigation using the interaction between expression and face direction. NeuroImage, 22(2), 1006–1013.

Saxe, R., & Kanwisher, N. (2003). People thinking about thinking people: The role of the temporo-parietal junction in “theory of mind.” NeuroImage, 19, 1835–1842.

Saxe, R., & Wexler, A. (2005). Making sense of another mind: The role of the right temporo-parietal junction. Neuropsychologia, 43(10), 1391–1399.

Schegloff, E. A. (2007). Sequence organization in interaction. Cambridge University Press.

Schilbach, L., Timmermans, B., Reddy, V., Costall, A., Bente, G., Schlicht, T., & Vogeley, K. (2013). Toward a second-person neuroscience. Behavioral and Brain Sciences, 36(4), 393–414.

Schilbach, L., Wilms, M., Eickhoff, S. B., Romanzetti, S., Tepest, R., Bente, G., Shah, N. J., Fink, G. R., & Vogeley, K. (2010). Minds made for sharing: Initiating joint attention recruits reward-related neurocircuitry. Journal of Cognitive Neuroscience, 22(12), 2702–2715.

Schilbach, L., Wohlschlaeger, A. M., Kraemer, N. C., Newen, A., Shah, N. J., Fink, G. R., & Vogeley, K. (2006). Being with virtual others: Neural correlates of social interaction. Neuropsychologia, 44(5), 718–730.

Sebanz, N., Bekkering, H., & Knoblich, G. (2006). Joint action: Bodies and minds moving together. Trends in Cognitive Sciences, 10(2), 70–76.

Senju, A., & Johnson, M. H. (2009). The eye contact effect: Mechanisms and development. Trends in Cognitive Sciences, 13(3), 127–134.

Skyrms, B. (2010). Signals. Oxford University Press. https://doi.org/10.1093/acprof:oso/9780199580828.001.0001

Smaldino, P. E., Flamson, T. J., & McElreath, R. (2018). The evolution of covert signaling. Scientific Reports, 8(1), 4905.

Somerville, L. H., Jones, R. M., Ruberry, E. J., & Dyke, J. P. (2013). Medial prefrontal cortex and the emergence of self-conscious emotion in adolescence. Psychological Science, 24(8), 1554–1562.

Soutschek, A., Sauter, M., & Schubert, T. (2015). The importance of the lateral prefrontal cortex for strategic decision making in the prisoner’s dilemma. Cognitive, Affective, & Behavioral Neuroscience, 15(4), 854–860.

Speitel, C., Traut-Mattausch, E., & Jonas, E. (2019). Functions of the right DLPFC and right TPJ in proposers and responders in the ultimatum game. Social Cognitive and Affective Neuroscience, 14(3), 263–270.

Sperber, D., & Wilson, D. (1995). Relevance: Communication and cognition (2nd ed.).

Spotorno, N., Koun, E., Prado, J., Van Der Henst, J.-B., & Noveck, I. A. (2012). Neural evidence that utterance-processing entails mentalizing: The case of irony. NeuroImage, 63(1), 25–39.

Stegmann, U. E. (2013). Animal communication theory: Information and influence. Cambridge University Press.

Stel, M., & Vonk, R. (2010). Mimicry in social interaction: Benefits for mimickers, mimickees, and their interaction. British Journal of Psychology, 101(2), 311–323.

Strauss, B. (2002). Social facilitation in motor tasks: A review of research and theory. Psychology of Sport and Exercise, 3(3), 237–256.

Suzuki, N., Takeuchi, Y., Ishii, K., & Okada, M. (2003). Effects of echoic mimicry using hummed sounds on human–computer interaction. Speech Communication, 40(4), 559–573.

Tennie, C., Frith, U., & Frith, C. D. (2010). Reputation management in the age of the world-wide web. Trends in Cognitive Sciences, 14(11), 482–488.

Teufel, C., Fletcher, P. C., & Davis, G. (2010). Seeing other minds: Attributed mental states influence perception. Trends in Cognitive Sciences, 14(8), 376–382.

Thorndike, E. L. (1898). Animal intelligence: An experimental study of the associative processes in animals. The Psychological Review: Monograph Supplements, 2(4), i–109.

Triplett, N. (1898). The dynamogenic factors in pacemaking and competition. The American Journal of Psychology, 9(4), 507–533.

Tylén, K., Allen, M., Hunter, B. K., & Roepstorff, A. (2012). Interaction vs. observation: Distinctive modes of social cognition in human brain and behavior? A combined fMRI and eye-tracking study. Frontiers in Human Neuroscience, 6(331), 1–11.

Uzgiris, I. C. (1981). Two functions of imitation during infancy. International Journal of Behavioral Development, 4(1), 1–12.

van Ackeren, M. J., Casasanto, D., Bekkering, H., Hagoort, P., & Rueschemeyer, S.-A. (2012). Pragmatics in action: Indirect requests engage theory of mind areas and the cortical motor network. Journal of Cognitive Neuroscience, 24(11), 2237–2247.

van Baaren, R. B., Holland, R. W., Steenaert, B., & van Knippenberg, A. (2003). Mimicry for money: Behavioral consequences of imitation. Journal of Experimental Social Psychology, 39(4), 393–398.

Vivanti, G., & Dissanayake, C. (2014). Propensity to imitate in autism is not modulated by the model’s gaze direction: An eye-tracking study. Autism Research, 7(3), 392–399.

Wang, Y., & Hamilton, A. F. de C. (2012). Social top-down response modulation (STORM): A model of the control of mimicry in social interaction. Frontiers in Human Neuroscience, 6(June), 1–10.

Wang, Y., & Hamilton, A. F. de C. (2014). Why does gaze enhance mimicry? Placing gaze-mimicry effects in relation to other gaze phenomena. The Quarterly Journal of Experimental Psychology, 67(4), 747–762.

Wang, Y., Newport, R., & Hamilton, A. F. de C. (2011). Eye contact enhances mimicry of intransitive hand movements. Royal Society Biology Letters, 7(1), 7–10.

Warnell, K. R., Sadikova, E., & Redcay, E. (2018). Let’s chat: developmental neural bases of social motivation during real-time peer interaction. Developmental Science, 21(April), 1–14.

Whiten, A., & Ham, R. (1992). On the nature and evolution of imitation in the animal kingdom: Reappraisal of a century of research. https://doi.org/10.1016/S0065-3454(08)60146-1

Wildgruber, D., Ackermann, H., Klose, U., Kardatzki, B., & Grodd, W. (1996). Functional lateralization of speech production at primary motor cortex: An fMRI study. NeuroReport, 7, 2791–2795.

Wilms, M., Schilbach, L., Pfeiffer, U. J., Bente, G., Fink, G. R., & Vogeley, K. (2010). It’s in your eyes—Using gaze-contingent stimuli to create truly interactive paradigms for social cognitive and affective neuroscience. Social Cognitive and Affective Neuroscience, 5(1), 98–107.

Zajonc, R. B. (1965). Social facilitation. Science, 149(3681), 269–274.

Zajonc, R. B., & Sales, S. M. (1966). Social facilitation of dominant and subordinate responses. Journal of Experimental Social Psychology, 2(2), 160–168.

Funding

A.H. and R.C. were funded by the Leverhulme Trust on Grant No. RPG-2016-251 (PI: A.H.). S.K.B. was funded by an ESRC PhD studentship.

Ethics declarations

Ethics approval

Not applicable

Consent to participate

Not applicable

Consent for publication

Not applicable

Conflicts of interest/Competing interests

The authors have no financial or nonfinancial conflicts of interest to disclose.

Open practices statement

Not applicable

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cañigueral, R., Krishnan-Barman, S. & Hamilton, A.F.d.C. Social signalling as a framework for second-person neuroscience. Psychon Bull Rev 29, 2083–2095 (2022). https://doi.org/10.3758/s13423-022-02103-2

DOI: https://doi.org/10.3758/s13423-022-02103-2