Abstract

We examined the claim that the autobiographical Implicit Association Test (aIAT) can detect concealed memories. Subjects read action statements (e.g., “break the toothpick”) and either performed the action or completed math problems. They then imagined some of these actions and some new actions. Two weeks later, the subjects completed a memory test and then an aIAT in which they categorized statements as true or false (e.g., “I am in front of the computer”) and actions as ones that they had or had not performed in Session 1. For half of the subjects, the nonperformed statements were actions that they had seen but not performed; for the remaining subjects, these statements were actions that they had seen and imagined but not performed. Our results showed that the aIAT can distinguish between true autobiographical events (performed actions) and false events (nonperformed actions), but that it becomes less effective the more strongly subjects remember performing actions that they did not actually perform. Thus, the diagnosticity of the aIAT may be limited.


Distinguishing true from false memories may be “one of the biggest challenges in human memory research” (Bernstein & Loftus, 2009, p. 370). The criminal justice implications include evaluating false confessions, eyewitness reports, and claims of childhood sexual abuse. Unfortunately, current strategies for distinguishing true from false memories are fraught with problems (Bernstein & Loftus, 2009). Recently, however, Sartori and colleagues (Sartori, Agosta, Zogmaister, Ferrara, & Castiello, 2008) introduced a computerized tool, the autobiographical Implicit Association Test (aIAT; Autobiographical Implicit Association Test, 2012), that has been described as a “lie detector” and as a means of detecting concealed memories (Agosta, 2009; Hu & Rosenfeld, 2012). Indeed, recommended applications of the aIAT include detecting malingered whiplash syndrome, malingered depression, and sexual abuse or assault (see Codognotto, Agosta, Rigoni, & Sartori, 2008). Moreover, it has already been applied in court cases, for example, to identify sexual abuse victims within a family (http://aiat.psy.unipd.it; Codognotto et al., 2008). Does the aIAT have sufficient diagnostic power to hold such sway in the courtroom? Current data suggest that the aIAT can detect intentional lying, but can it detect an unintentional false belief or memory?

The 1990s were plagued by criminal cases involving (mainly) women who recovered emotionally charged memories of childhood sexual abuse that they claimed they had repressed and were only able to recall with intensive therapy. However, some of these women later came to doubt the veracity of their memories. For memory scientists, these claims (and retractions) highlighted the problem of differentiating true from false memories and sparked decades of research. Indeed, we now know that people can come to believe that part of an event, or even an entire event, really happened to them when in fact it did not. Such false memories range from remembering seeing a word that was never presented to rich false memories: detailed memories of individual events that never occurred, such as remembering committing a crime in the case of a false confession (Loftus & Bernstein, 2005).

The source-monitoring framework (SMF) helps explain these false memories (Johnson, Hashtroudi, & Lindsay, 1993; Lindsay, 2008). According to the SMF, people tend to automatically attribute mental content—thoughts, images, feelings, and memories—to a particular source using heuristics that can sometimes lead to errors. One such heuristic involves evaluating the qualitative detail associated with mental representations: More sensory information (e.g., smells, sounds, and images; Johnson, Foley, Suengas, & Raye, 1988) can imply that a memory is real, all other things being equal. However, active imagining can increase the qualitative details typical of a real memory, and the passage of time can decrease qualitative details (Dobson & Markham, 1993; Johnson et al., 1988; Sporer & Sharman, 2006). Anything that causes the characteristics of an imagined event to become more similar to a real event increases source confusion, and thus the likelihood that a false memory will be judged to be true. Since the problem has such significant consequences, researchers have looked for diagnostic tools to differentiate truth from fiction.

For example, although neuroimaging techniques have revealed possible dissociations in brain activity for true and false memories, no current technique can reliably assess a given memory’s veracity (Schacter & Slotnick, 2004). Similarly, and counterintuitively, the evidence suggests that genuine emotion does not indicate accuracy (Laney & Loftus, 2008). Moreover, attempts to detect whether certain personality characteristics might encourage false memories have produced mixed, even contradictory, findings (see Hekkanen & McEvoy, 2002; Porter, Birt, Yuille, & Lehman, 2000). Finally, several tools—based on written/verbal statements or physiological measurements—have been developed to aid practitioners in detecting deception (e.g., criteria-based content analysis). Although such tools may be applied to false memory detection, they are not infallible (Blandón-Gitlin, Pezdek, Lindsay, & Hagen, 2009). Not surprisingly then, the aIAT was heralded as a significant development (e.g., Association for Psychological Science, 2008).

The aIAT is premised on the same idea as the original Implicit Association Test (Greenwald, McGhee, & Schwartz, 1998): Associated (or congruent) concepts are stored together in memory, leading to faster responding when they are processed simultaneously. In aIAT terms, we automatically associate concepts that we know to be true (“I am reading an article”) with autobiographical events that have really happened to us (“Last year, I went to a conference”). Similarly, things that we know to be false are associated with one another (“I am sunbathing at the beach”; “Last year, I went to summer camp”). Thus, the aIAT includes logically true or false statements and, in a typical version, sentences describing real and fabricated autobiographical events that subjects report. This structure assumes that true and false events feel very different. However, we know that the line can be blurred: Sometimes a false memory can seem just like a true memory.

Experiments introducing the aIAT first appeared in Psychological Science (Sartori et al., 2008) and have since appeared in other leading journals. Several methodological issues have received scrutiny. Verschuere and De Houwer (2009) demonstrated that, when coached, people can “fake” their aIAT responses, just as they can with other lie-detection tests. However, these fakers can often be identified (Agosta, Ghirardi, Zogmaister, Castiello, & Sartori, 2011a; Cvencek, Greenwald, Brown, Gray, & Snowden, 2010). More generally, aIAT (and IAT) scores can be shifted by subtle manipulations. For example, an implicit racial preference for Whites over Blacks can be reduced by exposure to positively viewed Blacks (Dasgupta & Greenwald, 2001). Agosta, Mega, and Sartori (2011b) also highlighted the methodological importance of using affirmatively worded statements and reminder labels in the aIAT. However, we believe that at least one critical issue still requires empirical scrutiny. According to the aIAT website (http://aiat.psy.unipd.it/), the tool can “be used to establish whether an autobiographical memory trace is encoded in the respondent’s mind/brain.” Until recently, however, no research had evaluated the aIAT’s effectiveness at identifying “memories” that arise from other mental traces, such as imagination. Marini, Agosta, Mazzoni, Dalla Barba, and Sartori (2012) recently found that, compared with falsely remembered words, correctly remembered words were more strongly associated with “true” sentences. However, their research did not pit true and false memories against one another in a single aIAT.

We know that people look for qualitative characteristics of a memory when attempting to decide whether that memory is real. We also know that when people repeatedly imagine performing an action, they often falsely remember having performed it (e.g., Goff & Roediger, 1998). Thus, the degree of source confusion that people experience depends on how closely their false memories qualitatively resemble true memories. We therefore wondered: Does this variation in source confusion affect the aIAT’s ability to distinguish true from false memories?

To test this question, we adapted a standard paradigm to induce false memories for simple actions (Goff & Roediger, 1998; Lampinen, Odegard, & Bullington, 2003). We presented subjects with objects (e.g., a coin) and associated action statements (e.g., “flip the coin”), varying whether those statements were seen, imagined, or performed. Two weeks later, subjects rated their belief and memory for performing a list of actions. Finally, they completed one of two aIATs. We had three questions: (1) Can the aIAT successfully detect nonperformed versus performed actions? (2) Is the aIAT more accurate at detecting nonperformed actions when they have been imagined rather than not imagined? and (3) Does the effectiveness of the aIAT depend on the extent to which subjects believe that they have performed or remember performing nonperformed as compared to performed actions?

Method

Subjects

A group of 82 English-speaking subjects participated for course credit or £6. We excluded three subjects with unusual data patterns (see Note 1), leaving 79 subjects (85% female; 16–33 years of age, M = 19.77, SD = 3.27).

Design, materials, and procedure

Subjects individually completed two sessions in the laboratory. During the course of the experiment, they interacted with 36 action statements, categorized into six groups of six actions. Table 1 describes the six action instructions; assignment of action groups to action instruction was counterbalanced using a Latin square design.
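The Latin-square counterbalancing described above can be sketched as follows. The text does not say which Latin square was used, so this minimal sketch assumes a simple cyclic 6 × 6 square; instructions and action groups are indexed 0–5, and the function names are hypothetical.

```python
def latin_square(n):
    """Cyclic n x n Latin square: the cell in row r, column c holds (r + c) % n."""
    return [[(r + c) % n for c in range(n)] for r in range(n)]


def assign_groups(subject_index, n=6):
    """Map each action instruction (column index) to an action group for one subject.

    Successive subjects take successive rows, so across any n consecutive
    subjects every action group appears exactly once under every instruction.
    """
    row = latin_square(n)[subject_index % n]
    return {instruction: group for instruction, group in enumerate(row)}


# The second subject (index 1) uses row 1 of the square.
print(assign_groups(1))  # {0: 1, 1: 2, 2: 3, 3: 4, 4: 5, 5: 0}
```

A cyclic square guarantees that each group appears once per instruction across six subjects; it does not balance carryover between positions, which a balanced (Williams) square would.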

Session 1

Following the consent procedure and demographic questions, the remainder of Session 1 comprised two phases. Eight subjects failed to follow the instructions during Session 1; they were excluded and replaced (see Note 2).

Encoding phase

Subjects sat in front of a computer alongside objects related to the 36 action statements. Twenty-four action statements appeared on the computer screen; each statement was followed by either a “perform” or “maths” instruction, then a 10-s blank screen during which subjects either performed the action or completed maths problems. A sound signalled the next action statement. Subjects then completed a 10-min filler task.

Imagination phase

Next, subjects imagined a total of 18 action statements—six that they had performed, six that they had seen but not performed, and six new to this phase—on five separate occasions, and rated how vividly they could imagine themselves performing the action (1 = not very, 8 = extremely). The action statements appeared in a random order.

Session 2

Two weeks later, subjects returned to the laboratory for another two-phase session.

Belief/remember phase

First, they saw all 36 action statements in a randomized order. For each action, subjects first rated how much they believed that they had performed the action during Session 1 (1 = definitely did not do this, 8 = definitely did do this), and then rated how much they remembered performing the action (1 = no memory of doing this, 8 = clear and detailed memory of doing this; Nash, Wade, & Lindsay, 2009).

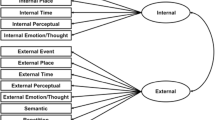

aIAT phase

Subjects’ task on the aIAT was to sort statements into four categories as quickly as possible. Subjects categorized logical statements as “true” (six statements; e.g., “I am in front of the computer”) or “false” (six statements), and action statements (e.g., “I flipped the coin”) as actions that they were “guilty” or “innocent” of performing. For all subjects, guilty actions were actions that they had seen and performed (SP), and innocent actions were actions that they had seen but not performed. Here, we introduced a between-subjects manipulation: For subjects in the imagined condition, the innocent actions were actions that they had seen and imagined during Session 1 (SI); for subjects in the not-imagined condition, the innocent actions were actions that they had only seen (S).

Subjects completed five blocks of aIAT trials. In Block 1 (20 trials), subjects pressed the “D” key for true statements and the “K” key for false statements. In Block 2 (20 trials), they pressed these same keys to sort guilty and innocent actions. In Block 3 (60 trials), they used the “D” key to sort both true and guilty statements, and the “K” key to sort false and innocent statements. Thus, in this congruent block, subjects sorted associated concepts together. In Block 4 (40 trials), we reversed the keys, such that subjects sorted guilty actions using “K” and innocent actions using “D.” Finally, in Block 5 (60 trials), subjects sorted true and innocent statements using the “D” key, and false and guilty statements using the “K” key. Here, incongruent concepts were sorted together. The category names remained onscreen throughout each block, and a red “X” appeared when subjects made an error; they were required to respond correctly before proceeding. We counterbalanced the order of Blocks 3 and 5.
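The block sequence above can be encoded as data, which makes the key assignments easy to check. This is a hypothetical sketch, not the software used in the study. It encodes only the order in which the congruent Block 3 precedes the incongruent Block 5, and the Block 2 mapping (guilty on “D,” innocent on “K”) is inferred from the statement that Block 4 reversed the action keys to guilty on “K” and innocent on “D.”

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    number: int
    trials: int
    d_key: tuple[str, ...]  # categories sorted with the "D" key
    k_key: tuple[str, ...]  # categories sorted with the "K" key


# Order with the congruent block (3) before the incongruent block (5).
BLOCKS = [
    Block(1, 20, ("true",), ("false",)),
    Block(2, 20, ("guilty",), ("innocent",)),
    Block(3, 60, ("true", "guilty"), ("false", "innocent")),  # congruent
    Block(4, 40, ("innocent",), ("guilty",)),                 # action keys reversed
    Block(5, 60, ("true", "innocent"), ("false", "guilty")),  # incongruent
]


def correct_key(block, category):
    """Return the key ("D" or "K") that correctly sorts a stimulus category in a block."""
    if category in block.d_key:
        return "D"
    if category in block.k_key:
        return "K"
    raise ValueError(f"category {category!r} is not sorted in block {block.number}")


print(sum(b.trials for b in BLOCKS))     # 200
print(correct_key(BLOCKS[4], "guilty"))  # K
```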

Finally, subjects were debriefed.

Results and discussion

Before turning to our three key research questions, we first examined subjects’ beliefs and memories for all of the actions, by action instruction. These data appear in Fig. 1. We were most interested in subjects’ overall beliefs and memories for the two groups of nonperformed actions that we used in the subsequent aIATs (see Table 1). Collapsing across conditions, we compared belief and memory ratings for seen-only (S) and seen-and-imagined (SI) actions using a 2 (action instruction: S, SI) × 2 (rating type: belief, memory) within-subjects analysis of variance (ANOVA). Subjects rated SI actions higher than S actions on both the belief (SI: M = 3.56, SD = 1.49; S: M = 2.74, SD = 1.16) and memory (SI: M = 3.03, SD = 1.38; S: M = 2.20, SD = 1.01) scales, a main effect of action instruction, F(1, 78) = 36.05, p < .01, ηp² = .32. Subjects also rated beliefs higher than memories, F(1, 78) = 36.05, p < .01, ηp² = .32. These data fit with previous work showing that imagination affects people’s beliefs and memories about simple actions.
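The reported effect sizes can be checked against their F ratios with the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). A minimal sketch; the function name is ours.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared implied by an F ratio and its degrees of freedom.

    eta_p^2 = (F * df_effect) / (F * df_effect + df_error)
    """
    return (f * df_effect) / (f * df_effect + df_error)


print(round(partial_eta_squared(36.05, 1, 78), 2))  # 0.32 (action instruction effect)
print(round(partial_eta_squared(4.74, 1, 77), 2))   # 0.06 (condition effect on source discrimination)
```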

Next, we turn to our first research question: Can the aIAT successfully detect nonperformed versus performed actions? In accordance with previous aIAT/IAT research (Greenwald, Nosek, & Banaji, 2003; Sartori et al., 2008), we removed response times (RTs) shorter than 150 ms or longer than 10,000 ms. We retained both correct and incorrect trials for analysis. Because subjects had to correct each error before proceeding, every error-trial latency included a built-in penalty (the additional time that subjects took to make the correct response); this is a property of standard IAT measures and corrects for processing time that would otherwise be attributed to incorrect responses. We calculated d (an effect size measure) by subtracting each subject’s mean response time in the congruent block from his or her mean response time in the incongruent block, and dividing this difference by the standard deviation of all trials in both blocks. The d algorithm was designed to maximize internal consistency, minimize the influence of factors that affect general speed of responding, and calibrate scores by each individual’s variance in response time (Schnabel, Asendorpf, & Greenwald, 2008).
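The scoring steps above can be sketched in Python. This is a simplified reading of the description in the text, not the full Greenwald et al. (2003) scoring procedure, and it assumes a sample (n − 1) standard deviation over the pooled trials; the function names and latencies are hypothetical.

```python
from statistics import mean, stdev

# Trimming window described in the text (ms); trials outside it are dropped.
MIN_RT_MS = 150
MAX_RT_MS = 10_000


def trim(latencies_ms):
    """Keep only latencies within the 150-10,000 ms window."""
    return [rt for rt in latencies_ms if MIN_RT_MS <= rt <= MAX_RT_MS]


def d_score(congruent_ms, incongruent_ms):
    """Incongruent mean minus congruent mean, divided by the SD of all retained trials.

    Error trials are included as recorded: because an error had to be
    corrected before the next trial, its latency already contains the
    built-in penalty (time until the correct response).
    """
    congruent = trim(congruent_ms)
    incongruent = trim(incongruent_ms)
    # Sample SD over the pooled trials from both blocks (assumed n - 1 denominator).
    pooled_sd = stdev(congruent + incongruent)
    return (mean(incongruent) - mean(congruent)) / pooled_sd


# Hypothetical latencies for one subject; 120 ms and 12,000 ms are trimmed.
congruent_block = [600, 700, 650, 120]
incongruent_block = [800, 900, 850, 12_000]
print(round(d_score(congruent_block, incongruent_block), 2))  # 1.69
```

A positive result means the subject was faster when performed (guilty) actions shared a key with true statements.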

We expected subjects to respond faster when sentences about performed actions were paired with logically true sentences (congruent) than when sentences about nonperformed actions were paired with logically true sentences (incongruent). This response pattern results in a positive d score; the higher the d score, the more effective the aIAT. We found that d scores were largely (97.5%) positive, indicating that it was possible in most cases to correctly identify the real event on the basis of whether subjects were faster in the congruent or the incongruent block. This classification rate is similar to rates reported elsewhere (e.g., Agosta et al., 2011a; Agosta, Mega, & Sartori, 2011b; Sartori et al., 2008) and was not affected by using the more general category labels “guilty” and “innocent” for action sentences, a potential limitation of our procedure. Hence, our data suggest that the aIAT can perform well under certain conditions when diagnosticity is defined as classification accuracy. However, we have reservations about placing emphasis on classification accuracy, because it implies a clear dichotomy between accurate and inaccurate classifications when in fact, as d approaches zero, there is probably no meaningful difference between incongruent and congruent blocks, and certainly not one that would be truly diagnostic in an applied forensic setting.
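Classification accuracy in this sense is simply the share of positive d scores. The sketch below reproduces the reported rate (97.5% of 79 subjects corresponds to 77 positive scores) from hypothetical d values. The optional `margin` parameter is our own illustrative addition, not a threshold proposed in the source; it shows how d values near zero could be treated as non-diagnostic rather than counted as correct.

```python
def classification_rate(d_scores, margin=0.0):
    """Proportion of subjects whose d exceeds `margin`.

    With margin=0.0 every positive d counts as a correct classification,
    as in the text. A positive margin (hypothetical) stops d values close
    to zero from counting as correct classifications.
    """
    if not d_scores:
        raise ValueError("no d scores supplied")
    return sum(1 for d in d_scores if d > margin) / len(d_scores)


# Hypothetical d values: 77 positive and 2 negative scores out of 79.
hypothetical = [0.5] * 77 + [-0.1] * 2
print(f"{classification_rate(hypothetical):.1%}")  # 97.5%
```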

Our second research question was: Is the aIAT more accurate at detecting nonperformed actions when they have been imagined rather than not imagined? We compared d scores for subjects in the imagined and not-imagined conditions and found no difference: Imagining the nonperformed actions did not appear to affect aIAT accuracy (imagined: M = 0.58, SD = 0.29; not imagined: M = 0.59, SD = 0.22), t(77) = 0.30, p = .77.

However, as Fig. 1 illustrates, subjects tended to exhibit source confusion—by experiencing some degree of belief or memory that they had performed the action—in all situations in which they were exposed to an action that they did not actually perform. Thus, we examined whether the degree of source confusion altered the aIAT’s effectiveness. That is, we tested our third research question: Does the effectiveness of the aIAT depend on the extent to which subjects believe that they performed, or remember performing, nonperformed as compared to performed actions?

To answer this question, we examined the extent to which subjects rated their beliefs and memories for nonperformed (innocent) actions similarly to, and thus indistinguishably from, their beliefs and memories for performed (guilty) actions. To create our measures of source discrimination, we classified subjects by condition (imagined vs. not imagined). For each subject, we subtracted the belief ratings for the nonperformed actions used in that subject’s aIAT (SI in the imagined condition, S in the not-imagined condition) from the belief ratings for performed actions, and then repeated the calculation for memory ratings. As Table 2 shows, the scores tended to be positive (with the exception of five cases), indicating that subjects tended to rate performed actions higher than nonperformed actions. Moreover, imagining increased subjects’ tendency to say that they believed and remembered performing actions that they had not performed: A 2 (rating type: belief, memory) × 2 (condition: imagined, not imagined) mixed ANOVA on the source discrimination scores showed a main effect of condition, F(1, 77) = 4.74, p = .03, ηp² = .06. There was neither a main effect of rating type nor an interaction between condition and rating type, Fs < 1.
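The source discrimination score described above can be sketched for a single subject. This assumes that each subject’s ratings are averaged within an action instruction before subtracting; the labels SP, S, and SI follow the text, while the function name and ratings are hypothetical.

```python
from statistics import mean


def source_discrimination(ratings, nonperformed):
    """Mean rating for performed (SP) actions minus mean rating for nonperformed actions.

    `ratings` maps an action-instruction label to one subject's 1-8 ratings
    (belief or memory) for the actions given that instruction. `nonperformed`
    is "SI" in the imagined condition and "S" in the not-imagined condition.
    Lower scores indicate more source confusion.
    """
    return mean(ratings["SP"]) - mean(ratings[nonperformed])


# Hypothetical belief ratings for one subject in the not-imagined condition.
beliefs = {"SP": [8, 8, 7, 7, 6, 6], "S": [2, 3, 2, 3, 2, 3]}
print(source_discrimination(beliefs, nonperformed="S"))  # 4.5
```

The same function applied to memory ratings gives the second score per subject, which is what the rating-type factor in the ANOVA compares.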

We next examined the relationship between source discrimination scores and d. Figure 2 illustrates that the more source confusion that subjects experienced (indicated by lower source discrimination scores), the less effectively the aIAT discriminated performed from nonperformed actions. We observed this effect in both the imagined and not-imagined conditions, and the effect was stronger for memory ratings (imagined condition: belief, r(41) = .263, p = .10; memory, r(41) = .374, p = .02; not-imagined condition: belief, r(38) = .348, p = .03; memory, r(38) = .391, p = .02). Thus, our data suggest that subjects’ degree of source confusion about the actions that they did and did not perform was related to their aIAT performance.
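The relationship above is a Pearson correlation between each subject’s source discrimination score and his or her d score. A minimal sketch with hypothetical values; a positive r means that less source confusion goes with better aIAT discrimination.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between paired scores, e.g. source discrimination and d."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two pairs")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical subjects: higher source discrimination pairs with higher d.
discrimination = [0.5, 1.5, 2.5, 3.5, 4.5]
d_values = [0.30, 0.45, 0.50, 0.70, 0.75]
print(round(pearson_r(discrimination, d_values), 3))
```

On Python 3.10 or later, `statistics.correlation` computes the same coefficient.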

To summarize, our results suggest that although imagination increases source confusion between performed and nonperformed actions, ultimately it is the extent of any source confusion, rather than its origin, that reduces the aIAT’s discrimination of true and false events. That is, subjects exhibited source confusion in both conditions, and source confusion was related to aIAT scores to a similar degree in both conditions.

Drawing on the SMF, we can infer that the passage of time and simple exposure to nonperformed actions (exacerbated for some people by imagination) may have increased the qualitative similarity between mental representations of the performed and nonperformed actions, resulting in a feeling of remembering. Of course, Fig. 1 illustrates that subjects rated actions that they had performed much higher on the belief and memory scales than actions that they had not performed, so the false memories in our study were relatively weak. Stronger false memories might produce a greater reduction in d. Thus, we may be underestimating the effect of source confusion on the aIAT’s effectiveness, particularly given that performing simple actions is not akin to the emotionally rich, detailed events that arise in forensic settings (Loftus & Bernstein, 2005). Admittedly, much of the prior aIAT research has used similarly simple stimuli and events. Future research should therefore also address the aIAT’s ability to detect rich false memories.

In practical terms, our results support other findings showing that the aIAT can detect actions that people have versus have not performed. However, its discriminative ability appears malleable, which is undesirable, considering that confidently held, detailed memories for entire emotional events can be false. Thus, when faced with a specific case in which the veracity of an event is unknown—such as recovered memories of sexual abuse—the diagnosticity of the aIAT is questionable.

To conclude, although efforts to develop techniques that improve our ability to accurately distinguish true from false statements are admirable and important, the forensic utility of the aIAT must be considered in light of factors that may influence its effectiveness. Our findings suggest that researchers should be careful about recommending the aIAT until the boundaries of its reliability are better understood.

Notes

1. At Session 2, one subject claimed to definitely remember (Ms ≥ 6.67) having performed all types of actions during Session 1; one subject’s aIAT error rate was an outlier (75% of trials were errors); and one subject’s d score was an outlier. Scores more than three SDs from the mean were defined as outliers.

2. A research assistant observed subjects throughout the experiment. We excluded subjects who performed actions ahead of the onscreen instructions, did not perform some of the actions that they were asked to perform, did not follow the imagination instructions, or were not familiar with the objects.

References

Agosta, S. (2009). The autobiographical IAT, a new technique for memory detection. Unpublished doctoral dissertation, Università degli Studi di Padova, Padova, Italy.

Agosta, S., Ghirardi, V., Zogmaister, C., Castiello, U., & Sartori, G. (2011a). Detecting fakers of the autobiographical IAT. Applied Cognitive Psychology, 25, 299–306. doi:10.1002/acp.1691

Agosta, S., Mega, A., & Sartori, G. (2011b). Accuracy and reliability of the autobiographical IAT. Acta Psychologica, 136, 268–275. doi:10.1016/j.actpsy.2010.05.011

Association for Psychological Science. (2008, August 1). New lie detecting technique [Press release]. Retrieved from www.psychologicalscience.org/index.php/news/releases/new-lie-detecting-technique.html

Autobiographical Implicit Association Test. (2012). Retrieved July 23, 2012, from http://aiat.psy.unipd.it/

Bernstein, D. M., & Loftus, E. F. (2009). How to tell if a particular memory is true or false. Perspectives on Psychological Science, 4, 370–374. doi:10.1111/j.1745-6924.2009.01140.x

Blandón-Gitlin, I., Pezdek, K., Lindsay, D. S., & Hagen, L. (2009). Criteria-based content analysis of true and suggested accounts of events. Applied Cognitive Psychology, 23, 901–917. doi:10.1002/acp.1504

Codognotto, S., Agosta, S., Rigoni, D., & Sartori, G. (2008). A novel lie detection technique in the assessment of testimony of sexual assault [Abstract]. Sexologies, 17(Suppl. 1), S98 (Abstracts of the 9th Congress of the European Federation of Sexology). Oxford, UK: Elsevier. doi:10.1016/S1158-1360(08)72783-X

Cvencek, D., Greenwald, A. G., Brown, A. S., Gray, N. S., & Snowden, R. J. (2010). Faking of the Implicit Association Test is statistically detectable and partly correctable. Basic and Applied Social Psychology, 32, 302–314. doi:10.1080/01973533.2010.519236

Dasgupta, N., & Greenwald, A. G. (2001). Exposure to admired group members reduces automatic intergroup bias. Journal of Personality and Social Psychology, 81, 800–814. doi:10.1037/0022-3514.81.5.800

Dobson, M., & Markham, R. (1993). Imagery ability and source monitoring: Implications for eyewitness memory. British Journal of Psychology, 84, 111–118. doi:10.1111/j.2044-8295.1993.tb02466.x

Goff, L. M., & Roediger, H. L., III. (1998). Imagination inflation for action events: Repeated imaginings lead to illusory recollections. Memory & Cognition, 26, 20–33. doi:10.3758/BF03211367

Greenwald, A. G., McGhee, D. E., & Schwartz, J. K. L. (1998). Measuring individual differences in implicit cognition: The Implicit Association Test. Journal of Personality and Social Psychology, 74, 1464–1480. doi:10.1037/0022-3514.74.6.1464

Greenwald, A. G., Nosek, B. A., & Banaji, M. R. (2003). “Understanding and using the Implicit Association Test: I. An improved scoring algorithm”: Correction to Greenwald et al. Journal of Personality and Social Psychology, 85, 481. doi:10.1037/h0087889

Hekkanen, S. T., & McEvoy, C. (2002). False memories and source monitoring problems: Criterion differences. Applied Cognitive Psychology, 16, 73–85. doi:10.1002/acp.753

Hu, X., & Rosenfeld, J. P. (2012). Combining the P300-complex trial-based Concealed Information Test and the reaction time-based autobiographical Implicit Association Test in concealed memory detection. Psychophysiology, 49, 1090–1100. doi:10.1111/j.1469-8986.2012.01389.x

Johnson, M. K., Foley, M. A., Suengas, A. G., & Raye, C. L. (1988). Phenomenal characteristics of memories for perceived and imagined autobiographical events. Journal of Experimental Psychology: General, 117, 371–376. doi:10.1037/0096-3445.117.4.371

Johnson, M. K., Hashtroudi, S., & Lindsay, D. S. (1993). Source monitoring. Psychological Bulletin, 114, 3–28. doi:10.1037/0033-2909.114.1.3

Lampinen, J. M., Odegard, T. N., & Bullington, J. (2003). Qualities of memories for performed and imagined actions. Applied Cognitive Psychology, 17, 881–893. doi:10.1002/acp.916

Laney, C., & Loftus, E. F. (2008). Emotional content of true and false memories. Memory, 16, 500–516. doi:10.1080/09658210802065939

Lindsay, D. S. (2008). Source monitoring. In J. H. Byrne & H. L. Roediger III (Eds.), Learning and memory: A comprehensive reference. Vol. 2: Cognitive psychology of memory (pp. 325–348). Oxford: Elsevier.

Loftus, E. F., & Bernstein, D. M. (2005). Rich false memories: The royal road to success. In A. Healy (Ed.), Experimental cognitive psychology and its applications: Festschrift in honor of Lyle Bourne, Walter Kintsch, and Thomas Landauer (pp. 101–113). Washington DC: American Psychological Association.

Marini, M., Agosta, S., Mazzoni, G., Dalla Barba, G., & Sartori, G. (2012). True and false DRM memories: Differences detected with an implicit task. Frontiers in Psychology, 3, 1–7. doi:10.3389/fpsyg.2012.00310

Nash, R. A., Wade, K. A., & Lindsay, D. S. (2009). Digitally manipulating memory: Effects of doctored videos and imagination in distorting beliefs and memories. Memory & Cognition, 37, 414–424. doi:10.3758/MC.37.4.414

Porter, S., Birt, A. R., Yuille, J. C., & Lehman, D. R. (2000). Negotiating false memories: Interviewer and rememberer characteristics relate to memory distortion. Psychological Science, 11, 507–510. doi:10.1111/1467-9280.00297

Sartori, G., Agosta, S., Zogmaister, C., Ferrara, S. D., & Castiello, U. (2008). How to accurately detect autobiographical events. Psychological Science, 19, 772–780. doi:10.1111/j.1467-9280.2008.02156.x

Schacter, D. L., & Slotnick, S. D. (2004). The cognitive neuroscience of memory distortion. Neuron, 44, 149–160. doi:10.1016/j.neuron.2004.08.017

Schnabel, K., Asendorpf, J. B., & Greenwald, A. G. (2008). Implicit Association Tests: A landmark for the assessment of implicit personality self-concept. In G. J. Boyle, G. Matthews, & D. H. Saklofske (Eds.), Handbook of personality theory and testing (pp. 508–528). London, UK: Sage.

Sporer, S. L., & Sharman, S. J. (2006). Should I believe this? Reality monitoring of accounts of self-experienced and invented recent and distant autobiographical events. Applied Cognitive Psychology, 20, 837–854. doi:10.1002/acp.1234

Verschuere, B., & De Houwer, J. (2009). Cheating the lie-detector: Faking in the autobiographical Implicit Association Test. Psychological Science, 20, 410–413. doi:10.1111/j.1467-9280.2009.02308.x

Author note

We are grateful for the support of the British Academy, Grant Award No. SG090082. We thank Robert Nash, Karen Mitchell, and two anonymous reviewers for their helpful comments on earlier drafts of this paper.


Cite this article

Takarangi, M.K.T., Strange, D., Shortland, A.E. et al. Source confusion influences the effectiveness of the autobiographical IAT. Psychon Bull Rev 20, 1232–1238 (2013). https://doi.org/10.3758/s13423-013-0430-3


DOI: https://doi.org/10.3758/s13423-013-0430-3