Abstract

A variety of perceptual correspondences between auditory and visual features have been reported, but few studies have investigated how rhythm, an auditory feature defined purely by dynamics relevant to speech and music, interacts with visual features. Here, we demonstrate a novel crossmodal association between auditory rhythm and visual clutter. Participants were shown a variety of visual scenes from diverse categories and asked to report the auditory rhythm that perceptually matched each scene by adjusting the rate of amplitude modulation (AM) of a sound. Participants matched each scene to a specific AM rate with surprising consistency. A spatial-frequency analysis showed that scenes with greater contrast energy in midrange spatial frequencies were matched to faster AM rates. Bandpass-filtering the scenes indicated that greater contrast energy in this spatial-frequency range was associated with an abundance of object boundaries and contours, suggesting that participants matched more cluttered scenes to faster AM rates. Consistent with this hypothesis, AM-rate matches were strongly correlated with perceived clutter. Additional results indicated that both AM-rate matches and perceived clutter depend on object-based (cycles per object) rather than retinal (cycles per degree of visual angle) spatial frequency. Taken together, these results suggest a systematic crossmodal association between auditory rhythm, representing density in the temporal domain, and visual clutter, representing object-based density in the spatial domain. This association may allow for the use of auditory rhythm to influence how visual clutter is perceived and attended.

Previous research has demonstrated a variety of perceptual correspondences between auditory and visual features. Most of these associations are based on auditory loudness mapping to visual brightness; auditory pitch (or pitch change) mapping to visual lightness, elevation, and size; and auditory timbre (often conveyed by speech sounds) mapping to sharpness/smoothness of visual contours or shapes (e.g., Bernstein & Edelstein, 1971; Evans & Treisman, 2010; Marks, 1987; Mossbridge, Grabowecky, & Suzuki, 2011; Ramachandran & Hubbard, 2001; Sweeny, Guzman-Martinez, Ortega, Grabowecky, & Suzuki, 2012).

Few studies have investigated how rhythm, an auditory feature defined purely by dynamics, may interact with visual features. Rhythm is a fundamental auditory feature coded in the auditory cortex; it plays an integral role in providing information about objects and scenes (Liang, Lu, & Wang, 2002; Schreiner & Urbas, 1986, 1998), and it conveys affective and linguistic information in music and speech (e.g., Bhatara, Tirovolas, Duan, Levy, & Levitin, 2011; Juslin & Laukka, 2003; Scherer, 1986). Intuitively, a faster auditory rhythm is associated with visual properties that imply rapid dynamics. Consistent with this idea, Shintel and Nusbaum (2007) showed that listening to a verbal description of an object spoken at an atypically rapid rate speeded recognition of a subsequently presented picture when the picture depicted an object in motion relative to when it depicted the same object at rest. This suggests that auditory rhythm can interact with the perception of visual dynamics.

Recently, Guzman-Martinez, Ortega, Grabowecky, Mossbridge, and Suzuki (2012) demonstrated that auditory rhythm is also associated with visual spatial frequency, a fundamental visual feature initially coded in the primary visual cortex (De Valois, Albrecht, & Thorell, 1982; Geisler & Albrecht, 1997) that is relevant for perceiving textures, objects, hierarchical structures, and scenes (Landy & Graham, 2004; Schyns & Oliva, 1994; Shulman, Sullivan, Gish, & Sakoda, 1986; Sowden & Schyns, 2006). They used a basic form of auditory rhythm conveyed by an amplitude-modulated (AM) sound (a white noise whose intensity is modulated at a fixed rate) and a basic form of visual spatial frequency conveyed by a Gabor patch (a repetitive grating-like pattern whose luminance is modulated at a fixed spatial frequency). They found that participants matched faster AM rates to higher spatial frequencies in an approximately linear relationship. This crossmodal relationship is absolute (rather than relative), in that it is equivalent whether each participant found an auditory match to only one Gabor patch or found auditory matches to multiple Gabor patches of different spatial frequencies. The relationship is perceptual, in that it is not based on general magnitude matching in an abstract numerical representation or on matching the number of “bars” in Gabor patches to AM rates. It was further shown that the relationship is functionally relevant, in that an AM sound can guide attention to a Gabor patch with the corresponding spatial frequency. These results suggest a fundamental relationship between the auditory processing of rhythm (AM rate) and the visual processing of spatial frequency.

Although it is necessary to characterize a crossmodal relationship using simplified visual stimuli, it is also important to understand how the relationship is relevant to perception in the real world. In the natural environment, we encounter complex scenes that are characterized by many spatial frequencies. It has been shown that the responses of spatial-frequency-tuned neurons to natural scenes are not readily predictable from their responses to Gabor patches (e.g., Olshausen & Field, 2006). In the present study, we thus investigated how the basic perceptual relationship between auditory rhythm and isolated visual spatial frequencies generalized to a perceptual relationship between auditory rhythm and natural scenes composed of multiple spatial-frequency components. This investigation would also elucidate how auditory rhythm may systematically influence the processing of complex visual scenes.

Experiment 1

We first determined whether people would consistently match a variety of complex visual scenes to specific auditory AM rates. Namely, we asked, does a natural scene have an implied auditory rhythm? For example, a cluttered indoor scene might be matched to a faster AM rate than would a less cluttered indoor scene, an urban scene to a faster AM rate than a beach scene, a mountain scene to a slower AM rate than a forest scene, and so on. Indeed, we found that people consistently matched each scene to a specific AM rate. We then analyzed the spatial-frequency components of the images in order to investigate the source of this crossmodal association.

Method

Participants

The participants in all of our experiments were Northwestern University undergraduate students, who gave informed consent to participate for partial course credit, had normal or corrected-to-normal visual acuity and normal hearing, and were tested individually in a dimly lit room. A group of 20 (nine female, 11 male) students participated in Experiment 1.

Stimuli and procedures

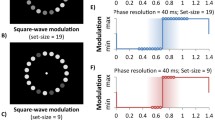

The participants determined auditory-AM-rate matches to 24 natural scenes (see the Supplementary Materials) and three Gabor patches (0.50, 2.20, and 4.50 cycles/cm in physical spatial frequency, corresponding to 0.75, 3.30, and 6.79 cycles/degree [c/deg] in retinal spatial frequency); see Fig. 1 for the trial information. All images were randomly presented three times, totaling 81 trials. Participants were given three practice trials prior to the experiment, in which they determined AM-rate matches to geometrical patterns. All images were displayed full-screen on a 22-in. color CRT monitor (1,152 × 870 pixels, 85 Hz). An integrated head-and-chin rest was used to stabilize the viewing distance at 84 cm.

Fig. 1 Trial sequence. Following 1,000 ms of central fixation, participants saw a grayscale photograph (27.7º × 20.6º of visual angle) of an outdoor nature, outdoor urban, or indoor scene, or a Gabor patch of one of three spatial frequencies. After 1,000 ms from the onset of the visual image, participants heard an amplitude-modulated white noise (62 dB SPL) through Sennheiser HD 256 headphones (10–20,000 Hz frequency response). While looking at the image, they adjusted the amplitude-modulation (AM) rate of the sound using the arrow keys (increasing or decreasing the AM rate in 1-Hz increments) over the range of 1–12 Hz. To avoid an anchoring effect, the initial AM rate was randomly set to 4, 6, or 8 Hz on each trial. When participants felt that the auditory rhythm matched the visual image, they pressed a button to register the response. The image then disappeared and the next trial began after a 1,000-ms blank screen. A similar crossmodal matching procedure was used in Guzman-Martinez et al. (2012).
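To make the auditory stimulus and the adjustment procedure concrete, the following is a minimal sketch rather than the authors' MATLAB code. It assumes sinusoidal modulation at full depth, a 44.1-kHz sample rate, and a uniform-noise carrier, none of which are specified in the text; the function names `am_white_noise` and `step_rate` are hypothetical, and the 62-dB SPL calibration is not modeled.

```python
import numpy as np


def am_white_noise(am_rate_hz, duration_s=2.0, sample_rate=44100, depth=1.0, seed=0):
    """Return white noise whose amplitude is modulated at am_rate_hz.

    Assumptions (not stated in the paper): sinusoidal envelope, full
    modulation depth by default, 44.1-kHz sampling, uniform-noise carrier.
    """
    rng = np.random.default_rng(seed)
    n = int(round(duration_s * sample_rate))
    t = np.arange(n) / sample_rate
    carrier = rng.uniform(-1.0, 1.0, n)  # white-noise carrier
    # Envelope oscillates between 0 and 1 when depth == 1.
    envelope = 0.5 * (1.0 + depth * np.sin(2.0 * np.pi * am_rate_hz * t))
    return carrier * envelope


def step_rate(rate_hz, key, lo=1, hi=12):
    """Apply one arrow-key press: 1-Hz steps, clamped to the 1-12 Hz range."""
    delta = {"up": 1, "down": -1}.get(key, 0)
    return min(hi, max(lo, rate_hz + delta))


# Example: start at one of the three initial rates and press "up" twice.
rate = 6
for key in ("up", "up"):
    rate = step_rate(rate, key)
sound = am_white_noise(rate, duration_s=1.0)
```

Clamping in `step_rate` mirrors the stated 1–12 Hz range; how the original program handled key presses at the limits is not described.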

After auditory–visual matching trials were completed, participants determined whether each scene (not including the three Gabor patches) was dense or sparse, with a forced choice response. The image was displayed slightly smaller (20.5º × 16.0º of visual angle) in order to present the choice words “dense” and “sparse” below the image. Each scene was randomly presented in two separate blocks. Left/right placement of the two words was counterbalanced (e.g., if the word “dense” appeared on the left in the first block, it appeared on the right in the second block, or vice versa). This provided a measure of perceived clutter.

The experiment was controlled using MATLAB software with Psychophysics Toolbox extensions (Brainard, 1997; Pelli, 1997).

Results

Participants matched specific auditory AM rates to the 24 scenes from diverse categories (nature, urban, and indoor) with surprising consistency (Fig. 2, black circles), F(23, 437) = 17.43, p < .0001 (main effect of images). The AM-rate matches to the intermixed Gabor patches were similar to those reported by Guzman-Martinez et al. (2012), in that no main effect of experiment emerged [F(1, 25) = 2.80, n.s.], although we did find a marginal interaction between experiment and spatial frequency [F(2, 50) = 2.81, p = .07] in which our participants assigned slightly lower AM rates to the highest-spatial-frequency Gabor patch (see Table 1 for the AM-rate matches in the two studies). We replicated a robust linear relationship between spatial frequency and AM rate [t(19) = 6.10, p < .0001, for the linear contrast]. The fact that our participants, who saw complex scenes as well as Gabor patches, and Guzman-Martinez et al.’s participants, who only saw Gabor patches, similarly matched Gabor patches of different spatial frequencies to specific AM rates suggests that people tend to use a consistent strategy to match AM rates to both simple Gabor patches and complex scenes. Because Gabor patches primarily carry single spatial frequencies, we hypothesized that our participants used spatial-frequency information to match AM rates to visual scenes.

Fig. 2 Amplitude-modulation (AM) rate matches to 24 images, ordered from those matched to the slowest AM rate to those matched to the fastest AM rate, based on the results from Experiments 1 (black circles) and 2 (gray squares). Note that the AM-rate matches are similar

To evaluate this hypothesis, we applied a two-dimensional Fourier transform to each scene and obtained its spatial-frequency profile with respect to 12 spatial-frequency bins, ranging from 0.05 to 12.8 c/deg (see Table 2).
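One way such a spatial-frequency profile could be computed is sketched below. This is an illustration under stated assumptions, not the authors' analysis: the bins are assumed to be logarithmically spaced between 0.05 and 12.8 c/deg (the actual bin edges are given in Table 2), energy is taken as summed Fourier power after removing mean luminance, and the function name `contrast_energy_profile` is hypothetical.

```python
import numpy as np


def contrast_energy_profile(image, width_deg, n_bins=12, f_min=0.05, f_max=12.8):
    """Sum 2-D Fourier power within radial spatial-frequency bins (c/deg).

    Assumptions: log-spaced bin edges, square pixels, and power summed
    over all orientations. The paper's exact binning is in its Table 2.
    """
    img = np.asarray(image, dtype=float)
    img = img - img.mean()  # remove the DC (mean-luminance) term
    power = np.abs(np.fft.fft2(img)) ** 2
    rows, cols = img.shape
    deg_per_pixel = width_deg / cols
    fy = np.fft.fftfreq(rows, d=deg_per_pixel)  # vertical frequency, c/deg
    fx = np.fft.fftfreq(cols, d=deg_per_pixel)  # horizontal frequency, c/deg
    radius = np.hypot(fx[np.newaxis, :], fy[:, np.newaxis])
    edges = np.geomspace(f_min, f_max, n_bins + 1)
    energy = np.array([
        power[(radius >= lo) & (radius < hi)].sum()
        for lo, hi in zip(edges[:-1], edges[1:])
    ])
    return edges, energy


# Example: a vertical grating at 1 c/deg on a 32-deg-wide, 256-pixel image.
x_deg = np.arange(256) * (32.0 / 256)
grating = np.tile(np.cos(2.0 * np.pi * 1.0 * x_deg), (256, 1))
edges, energy = contrast_energy_profile(grating, width_deg=32.0)
peak_bin = int(np.argmax(energy))  # index of the bin containing 1 c/deg
```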

For each participant, we computed the correlation between AM-rate matches and contrast energy for each spatial-frequency bin. For example, if AM-rate matches were slower for scenes with more energy in lower-spatial-frequency components, the correlations would be negative for lower-spatial-frequency bins. If AM-rate matches were faster for scenes with more energy in higher-spatial-frequency components, the correlations would be positive for higher-spatial-frequency bins. Outlier images were removed from each correlation (across observers) using a 95 % confidence ellipse (5 % of the images were removed, on average). The average correlation coefficient, r, is shown as a function of spatial frequency bin in Fig. 3 (black curve). The function is peaked. That is, we did not obtain a simple crossmodal relationship in which the contrast energy in higher-spatial-frequency components drove faster AM-rate matches. Instead, the results suggest that the faster AM-rate matches were driven by the energy in the specific midrange spatial frequencies (0.3–1.25 c/deg).
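The per-participant correlation step can be sketched as follows. This is a simplified illustration: it computes a Pearson correlation across images for every spatial-frequency bin and omits the confidence-ellipse outlier removal described above. The function name `per_bin_correlations` and the array layout are assumptions.

```python
import numpy as np


def per_bin_correlations(am_matches, energy):
    """Pearson r between AM-rate matches and contrast energy, per bin.

    am_matches: shape (n_images,), one participant's matched AM rates.
    energy:     shape (n_images, n_bins), contrast energy per image and bin.
    Outlier removal is intentionally not implemented in this sketch.
    """
    am = np.asarray(am_matches, dtype=float)
    en = np.asarray(energy, dtype=float)
    am_c = am - am.mean()
    en_c = en - en.mean(axis=0)
    numerator = am_c @ en_c
    denominator = np.sqrt((am_c ** 2).sum() * (en_c ** 2).sum(axis=0))
    return numerator / denominator


# Example with synthetic data for 24 images and 3 bins (not real results).
rng = np.random.default_rng(1)
am = np.arange(24, dtype=float)
energy = np.column_stack([2.0 * am + 1.0, -am, rng.normal(size=24)])
r = per_bin_correlations(am, energy)
```

Averaging the resulting vectors across participants would give a tuning function of the kind plotted in Fig. 3.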

Fig. 3 Contributions of the contrast energy in each spatial-frequency bin to the amplitude-modulation-rate (AM-rate) match and perceived clutter in Experiment 1. Each point represents the correlation (across images) between the contrast energy in each spatial-frequency bin and the matched AM rate (black symbols) or clutter rating (gray symbols). The error bars represent ±1 SEM across participants

In order to gain insight into why the midrange spatial frequencies were strongly associated with faster AM-rate matches, we filtered each image within this window of spatial frequency (0.3–1.25 c/deg). Representative examples are shown in Fig. 4. An inspection of these images suggests that scenes with stronger contrast energy in this spatial frequency bin tend to have more object boundaries and contours (e.g., the top image in Fig. 4), whereas scenes with weaker contrast energy in this spatial frequency bin tend to have fewer object boundaries (e.g., the bottom image in Fig. 4). This may suggest that AM-rate matches to visual scenes are based on the numerosity of object boundaries and contours. Consistent with this idea, we found a strong aggregate correlation between the average perceived clutter rating and the average AM-rate match across the 24 scenes (r = .62), t(22) = 3.68, p = .001. Significantly positive correlations were also attained when they were computed separately for each participant, t(19) = 3.89, p = .001. This supports the hypothesis that greater contrast energy in the midrange spatial frequencies drives a faster AM-rate match, because it makes a scene appear more cluttered.

Fig. 4 Two examples of images bandpass filtered at the critical midrange spatial frequencies strongly associated with faster amplitude-modulation-rate (AM-rate) matches. Comparison of the original and filtered versions of the two example images shows that greater contrast energy in these midrange spatial frequencies (top images) reflects more object boundaries and contours
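Bandpass filtering of this kind can be illustrated with a hard-edged filter in the Fourier domain. The sketch below is an assumption about one plausible implementation: the filter shape used in the study is not described, and the function name `bandpass_filter` is hypothetical. Only the 0.3–1.25 c/deg passband comes from the text.

```python
import numpy as np


def bandpass_filter(image, width_deg, f_lo=0.3, f_hi=1.25):
    """Keep only Fourier components with radial frequency in [f_lo, f_hi] c/deg.

    Assumption: an ideal (hard-edged) passband with square pixels. A smooth
    filter would reduce ringing, but the paper does not specify the shape.
    """
    img = np.asarray(image, dtype=float)
    rows, cols = img.shape
    deg_per_pixel = width_deg / cols
    fy = np.fft.fftfreq(rows, d=deg_per_pixel)
    fx = np.fft.fftfreq(cols, d=deg_per_pixel)
    radius = np.hypot(fx[np.newaxis, :], fy[:, np.newaxis])
    mask = (radius >= f_lo) & (radius <= f_hi)
    # The mask is symmetric in frequency, so the inverse transform is real
    # up to rounding error; np.real discards that residue.
    return np.real(np.fft.ifft2(np.fft.fft2(img) * mask))


# Example: three superimposed gratings; only the 1 c/deg one should survive.
x_deg = np.arange(256) * (32.0 / 256)
low = np.cos(2.0 * np.pi * 0.125 * x_deg)
mid = np.cos(2.0 * np.pi * 1.0 * x_deg)
high = np.cos(2.0 * np.pi * 3.0 * x_deg)
image = np.tile(low + mid + high, (256, 1))
filtered = bandpass_filter(image, width_deg=32.0)
```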

To determine whether spatial-frequency information contributed to AM-rate matches over and above perceived clutter, we computed the correlation between the clutter rating and the contrast energy in each spatial-frequency bin. If AM-rate matches were completely mediated by perceived clutter, the midrange spatial frequencies that strongly drive faster AM-rate matches should also strongly drive higher clutter ratings. As can be seen in Fig. 3 (gray curve), although the functions for perceived clutter and AM-rate matches are both broadly elevated within similar ranges of spatial frequencies, the peak (see Notes) occurs at a significantly higher spatial frequency for perceived clutter (M = 1.30 c/deg, SD = 1.93) than for AM-rate matches (M = 0.44 c/deg, SD = 4.09), t(17) = 3.62, p < .01. Thus, although spatial frequency and perceived clutter similarly contribute to AM-rate matches, perceived clutter depends on a slightly higher range of spatial frequencies than does the crossmodal association. This suggests that the spatial-frequency profiles of visual scenes contribute to AM-rate matches over and above perceived clutter.

Experiment 2

The goal of this experiment was to determine whether the spatial-frequency-mediated crossmodal association between visual scenes and auditory AM rate was based on retinal, physical, or object-based spatial frequency. In the case of single-spatial-frequency texture patches (Gabor patches), the association is based on physical spatial frequency (Guzman-Martinez et al., 2012). For texture perception, physical spatial frequency is informative because it allows for anticipation of the felt texture prior to touching a surface. For scene perception, however, object-based spatial frequency (i.e., number of cycles per object) would be particularly informative, because it conveys information about object structure and scene features irrespective of the viewing distance or scaling of photographs (e.g., Parish & Sperling, 1991; Sowden & Schyns, 2006). Thus, it is possible that AM-rate matches to natural scenes may be based on object-based (rather than physical) spatial frequency.

We tested this hypothesis by reducing the size of the images by half, thus doubling the physical/retinal spatial frequencies (physical and retinal spatial frequencies are indistinguishable at a fixed viewing distance), without affecting the object-based spatial frequencies. If the crossmodal matches depend on physical/retinal spatial frequencies, the AM-rate matches to the individual images should change, but the critical spatial frequencies (which are strongly correlated with AM-rate matches) should remain the same in cycles per degree. In contrast, if the crossmodal matches depend on object-based spatial frequencies, the AM-rate matches to the individual images should remain the same, but the critical spatial frequency should double in cycles per degree, because an identical object-based spatial frequency corresponds to a doubled physical/retinal spatial frequency when image size is halved.
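The logic of the size manipulation can be written out as a small worked example. The numbers below are illustrative only and are not data from the study; the function names `retinal_frequency` and `predicted_critical_cdeg` are hypothetical.

```python
def retinal_frequency(cycles_per_object, object_size_deg):
    """Retinal frequency (c/deg) of a component with a given object-based frequency."""
    return cycles_per_object / object_size_deg


def predicted_critical_cdeg(critical_cdeg_full_size, scale, hypothesis):
    """Predicted critical frequency (c/deg) after scaling the image linearly.

    "object":  the critical object-based frequency is fixed, so its value in
               c/deg grows as the image shrinks (doubles when scale = 0.5).
    "retinal": the critical frequency in c/deg is unchanged.
    """
    if hypothesis == "object":
        return critical_cdeg_full_size / scale
    if hypothesis == "retinal":
        return critical_cdeg_full_size
    raise ValueError("hypothesis must be 'object' or 'retinal'")


# An object spanning 4 deg with 2 cycles across it: 0.5 c/deg.
full = retinal_frequency(2.0, 4.0)
# The same object at half size spans 2 deg: 1.0 c/deg, same cycles per object.
half = retinal_frequency(2.0, 2.0)

object_based = predicted_critical_cdeg(0.5, scale=0.5, hypothesis="object")
retinal_based = predicted_critical_cdeg(0.5, scale=0.5, hypothesis="retinal")
```

Under the object-based account the tuning peak shifts to twice its value in cycles per degree while the AM-rate matches stay put; under the retinal account the peak stays put and the matches shift.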

Method

Participants

A new group of 14 undergraduate students (nine female, five male) participated.

Stimuli and procedures

The stimuli and procedures were the same as in Experiment 1, except that image size was halved (to 13.2º × 9.87º of visual angle).

Results

The auditory AM-rate matches to the 24 scenes (Fig. 2, gray squares) remained statistically equivalent to those in Experiment 1 [F(23, 736) = 1.51, n.s., for the Experiment × Picture interaction], despite the fact that halving the image size doubled the physical/retinal spatial frequencies in each image. This result is consistent with the hypothesis that AM-rate matches depend on object-based spatial frequency, which is independent of image size.

We performed the same analyses that we had in Experiment 1 to investigate how the AM-rate matches depended on spatial frequency and perceived clutter. If the relationship between spatial frequency and AM-rate match depends on physical/retinal spatial frequency, the correlation should peak at the same spatial frequency, in cycles per degree, as it did in Experiment 1. Alternatively, because we halved the linear size of each image, if the relationship depends on object-based spatial frequency, the correlation should peak at the doubled spatial frequency in cycles per degree (corresponding to the same object-based spatial frequency). As for the relationship between spatial frequency and perceived clutter, if dense/sparse judgments are based on clutter in an object-based representation, this correlation should also peak at the doubled spatial frequency.

As in Experiment 1, we found that greater contrast energy within specific ranges of spatial frequencies was strongly associated with faster AM-rate matches and with greater perceived clutter (Fig. 5). Importantly, the critical spatial-frequency ranges in cycles per degree doubled relative to those in Experiment 1, consistent with the hypothesis that the association between AM rate and spatial frequency and that between perceived clutter and spatial frequency both depend on object-based spatial frequency. Furthermore, we replicated the strong correlation between perceived clutter and AM-rate match, r = .80, t(22) = 6.35, p < .0001, in an aggregate correlation, which was also significant when computed separately for each participant, t(13) = 13.14, p < .0001, confirming our inference from Experiment 1 that AM-rate matches are partly based on the perceived clutter of a visual scene. We also replicated the result that spatial frequency makes a contribution to AM-rate matches over and above perceived clutter; as in Experiment 1, the correlation peak occurred at a higher spatial frequency for perceived clutter (M = 2.20 c/deg, SD = 2.92) than for AM-rate matches (M = 1.54 c/deg, SD = 2.82), t(11) = 2.84, p = .01 (cf. Figs. 3 and 5). The replication of Experiment 1 with respect to doubling spatial frequency in cycles per degree suggests that both AM-rate matches and perceived clutter depend on the object-based spatial frequency.

Fig. 5 Contributions of the contrast energy in each spatial-frequency bin to the amplitude-modulation-rate (AM-rate) match and perceived clutter in Experiment 2. Each point represents the correlation (across images) between the contrast energy in each spatial-frequency bin and the matched AM rate (black symbols) or clutter rating (gray symbols). The error bars represent ±1 SEM across participants

Discussion

Our results have revealed a novel relationship between auditory rhythm and visual clutter. Participants matched images to faster or slower AM rates on the basis of the strength of a specific range of object-based spatial frequencies that indicated the numerosity of the object boundaries and contours associated with perceived clutter.

Interestingly, the spatial frequencies most closely associated with faster AM-rate matches and those most closely associated with greater perceived clutter were similar but significantly different. This shows that specific spatial-frequency components contribute to AM-rate matches over and above their contributions via generating the explicit perception of visual clutter. It is possible that the object-boundary and contour information conveyed by the critical spatial-frequency range (see Fig. 4) implicitly drives faster AM-rate matches. Alternatively, it is possible that the contrast energy in this spatial-frequency range drives faster AM-rate matches by generating other characteristic perceptual experiences, such as dynamism and implied loudness. Evaluating these possibilities would be an interesting avenue for future research.

An important difference between the present results and those of Guzman-Martinez et al. (2012) is that, whereas their AM-rate matches depended on physical spatial frequency, ours depended on object-based spatial frequency. Because Gabor patches might simulate the visual perception of corrugated surfaces, Guzman-Martinez et al. suggested that the crossmodal association between physical spatial frequency and AM rate might derive from multisensory experience of manually exploring textured surfaces. That is, if we assume that the speed of manual exploration is relatively constant, the density of surface corrugation conveyed by physical spatial frequency would be positively correlated with the AM rate of the sound generated by gliding a hand over the surface. Of course, one cannot glide a hand over an entire natural scene. It is possible that the dual nature of visual information, conveyed as both a texture and a collection of visual objects, may give rise to a texture-based association with auditory AM rate in terms of physical spatial frequency and an object-based association with auditory AM rate in terms of object-based spatial frequency. Another possibility is that, because the abstraction of perceptual relationships is common in perception and cognition (e.g., Baliki, Geha, & Apkarian, 2009; Barsalou, 1999; Piazza, Pinel, Le Bihan, & Dehaene, 2007; Walsh, 2003), the crossmodal association between surface corrugation (reflected in physical spatial frequency) and AM rate that developed via the multisensory experience of manual exploration might extend to a more abstract crossmodal association between clutter (reflected in object-based spatial frequency) and AM rate. Future research will be necessary to evaluate these and other possibilities.

Overall, our results suggest that the auditory–visual association between rhythm and clutter is a fundamental synesthetic association akin to those between loudness and brightness and between pitch and lightness/elevation/size. Our results also suggest that visual clutter is a scene feature that people spontaneously associate with auditory rhythm, because our participants were not instructed to use any specific strategy to generate the auditory rhythm that would match each visual image.

Importantly, previous research investigating the behavioral effects of auditory–visual associations has suggested that the association between auditory rhythm and visual clutter may have behavioral consequences. First, it has been shown that sounds can bias the perception of associated visual features. For example, it was recently shown that hearing laughter makes a single happy face appear happier and makes a sad face in a crowd appear sadder (Sherman, Sweeny, Grabowecky, & Suzuki, 2012). Additionally, hearing a /wii/ sound, typically produced by horizontally stretching the mouth, makes a flat ellipse appear flatter, whereas a /woo/ sound, typically produced by vertically stretching the mouth, makes a flat ellipse appear taller (Sweeny et al., 2012). Likewise, an auditory rhythm may bias the overall perceived visual clutter of a scene. For example, it is possible that listening to a fast (or slow) rhythm might increase (or decrease) the perceived visual clutter of a scene.

Another potential behavioral consequence of the correspondence between rhythm and clutter is based on the finding that sounds can guide attention and eye movements to associated visual objects or features. For example, hearing a “meow” sound guides eye movements toward and facilitates the detection of a target cat in visual search (Iordanescu, Grabowecky, Franconeri, Theeuwes, & Suzuki, 2010). Additionally, hearing an AM sound guides attention to a Gabor patch carrying the associated spatial frequency (Guzman-Martinez et al., 2012). It is thus possible that a faster auditory rhythm might guide attention and/or eye movements to more cluttered regions in a visual scene. In considering these potential behavioral consequences, it will be important to determine whether the association generalizes to other ways of presenting auditory rhythm, such as music. We used amplitude-modulated white noise because amplitude modulation is the simplest way to convey auditory rhythm, and a white-noise carrier contains a broad range of auditory frequencies, so that our results would not be idiosyncratic to any specific auditory frequency.

In summary, we have demonstrated a novel perceptual association between auditory rhythm and visual clutter conveyed by a specific range of object-based spatial frequencies. This crossmodal association may allow for the use of auditory rhythms to modulate the impression of visual clutter as well as to guide attention and eye movements to more cluttered regions.

Notes

Peak locations were estimated by fitting each participant’s tuning functions with quadratic polynomials. Two participants were excluded from the analysis (also in Exp. 2, coincidentally) because their peak locations deviated from the mean by more than three standard deviations.
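The quadratic peak estimate described in this note can be sketched as follows. Whether the fit was performed on a linear or logarithmic spatial-frequency axis is not stated; the sketch assumes a log2 axis by default, and the function name `peak_location` is hypothetical.

```python
import numpy as np


def peak_location(bin_centers_cdeg, correlations, log_axis=True):
    """Estimate the peak of a tuning function from a quadratic fit.

    Fits r = a*x**2 + b*x + c and returns the vertex x = -b / (2a),
    converted back to c/deg. Returns nan if the parabola has no maximum.
    Assumption: x is log2(spatial frequency) unless log_axis is False.
    """
    centers = np.asarray(bin_centers_cdeg, dtype=float)
    x = np.log2(centers) if log_axis else centers
    a, b, _ = np.polyfit(x, np.asarray(correlations, dtype=float), 2)
    if a >= 0:
        return float("nan")  # opens upward (or is flat): no peak to report
    vertex = -b / (2.0 * a)
    return float(2.0 ** vertex) if log_axis else float(vertex)


# Example with a synthetic tuning function peaking at 0.5 c/deg (not real data).
centers = np.geomspace(0.05, 12.8, 12)
synthetic_r = 0.4 - 0.05 * (np.log2(centers) - np.log2(0.5)) ** 2
estimated_peak = peak_location(centers, synthetic_r)
```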

References

Baliki, M. N., Geha, P. Y., & Apkarian, A. V. (2009). Parsing pain perception between nociceptive representation and magnitude estimation. Journal of Neurophysiology, 101, 875–887.

Barsalou, L. W. (1999). Perceptual symbol systems. The Behavioral and Brain Sciences, 22, 577–609, discussion 609–660. doi:10.1017/S0140525X99002149

Bernstein, I. H., & Edelstein, B. A. (1971). Effects of some variations in auditory input upon visual choice reaction time. Journal of Experimental Psychology, 87, 241–247.

Bhatara, A., Tirovolas, A. K., Duan, L. M., Levy, B., & Levitin, D. J. (2011). Perception of emotional expression in musical performance. Journal of Experimental Psychology: Human Perception and Performance, 37, 921–934.

Brainard, D. H. (1997). The Psychophysics Toolbox. Spatial Vision, 10, 433–436. doi:10.1163/156856897X00357

De Valois, R. L., Albrecht, D. G., & Thorell, L. G. (1982). Spatial frequency selectivity of cells in macaque visual cortex. Vision Research, 22, 545–559.

Evans, K. K., & Treisman, A. (2010). Natural cross-modal mappings between visual and auditory features. Journal of Vision, 10(1):6, 1–12. doi:10.1167/10.1.6

Geisler, W. S., & Albrecht, D. G. (1997). Visual cortex neurons in monkeys and cats: Detection, discrimination, and identification. Visual Neuroscience, 14, 897–919.

Guzman-Martinez, E., Ortega, L., Grabowecky, M., Mossbridge, J., & Suzuki, S. (2012). Interactive coding of visual spatial frequency and auditory amplitude-modulation rate. Current Biology, 22, 383–388.

Iordanescu, L., Grabowecky, M., Franconeri, S., Theeuwes, J., & Suzuki, S. (2010). Characteristic sounds make you look at target objects more quickly. Attention, Perception, & Psychophysics, 72, 1736–1741. doi:10.3758/APP.72.7.1736

Juslin, P. N., & Laukka, P. (2003). Communication of emotions in vocal expression and music performance: Different channels, same code? Psychological Bulletin, 129, 770–814.

Landy, M. S., & Graham, N. (2004). Visual perception of texture. In L. M. Chalupa & J. S. Werner (Eds.), The visual neurosciences (pp. 1106–1118). Cambridge: MIT Press.

Liang, L., Lu, T., & Wang, X. (2002). Neural representations of sinusoidal amplitude and frequency modulations in the primary auditory cortex of awake primates. Journal of Neurophysiology, 87, 2237–2261.

Marks, L. E. (1987). On cross-modal similarity: Auditory–visual interactions in speeded discrimination. Journal of Experimental Psychology: Human Perception and Performance, 13, 384–394. doi:10.1037/0096-1523.13.3.384

Mossbridge, J., Grabowecky, M., & Suzuki, S. (2011). Changes in auditory frequency guide visual-spatial attention. Cognition, 121, 133–139.

Olshausen, B. A., & Field, D. J. (2006). What is the other 85 percent of V1 doing? In J. L. van Hemmen & T. J. Sejnowski (Eds.), 23 problems in systems neuroscience (pp. 182–221). New York: Oxford University Press.

Parish, D. H., & Sperling, G. (1991). Object spatial frequencies, retinal spatial frequencies, noise, and the efficiency of letter discrimination. Vision Research, 31, 1399–1415. doi:10.1016/0042-6989(91)90060-I

Pelli, D. G. (1997). The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision, 10, 437–442. doi:10.1163/156856897X00366

Piazza, M., Pinel, P., Le Bihan, D., & Dehaene, S. (2007). A magnitude code common to numerosities and number symbols in human intraparietal cortex. Neuron, 53, 293–305. doi:10.1016/j.neuron.2006.11.022

Ramachandran, V. S., & Hubbard, E. M. (2001). Synaesthesia—A window into perception, thought and language. Journal of Consciousness Studies, 8, 3–34.

Scherer, K. R. (1986). Vocal affect expression: A review and a model for future research. Psychological Bulletin, 99, 143–165.

Schreiner, C. E., & Urbas, J. V. (1986). Representation of amplitude modulation in the auditory cortex of the cat: I. The anterior auditory field (AAF). Hearing Research, 21, 227–241.

Schreiner, C. E., & Urbas, J. V. (1998). Representation of amplitude modulation in the auditory cortex of the cat: II. Comparison between cortical fields. Hearing Research, 32, 49–63.

Schyns, P. G., & Oliva, A. (1994). From blobs to boundary edges: Evidence for time and spatial scale dependent scene recognition. Psychological Science, 5, 195–200.

Sherman, A., Sweeny, T. D., Grabowecky, M., & Suzuki, S. (2012). Laughter exaggerates happy and sad faces depending on visual context. Psychonomic Bulletin & Review, 19, 163–169.

Shintel, H., & Nusbaum, H. C. (2007). The sound of motion in spoken language: Visual information conveyed by acoustic properties of speech. Cognition, 105, 681–690.

Shulman, G. L., Sullivan, M. A., Gish, K., & Sakoda, W. J. (1986). The role of spatial-frequency channels in the perception of local and global structure. Perception, 15, 259–273.

Sowden, P. T., & Schyns, P. G. (2006). Channel surfing in the visual brain. Trends in Cognitive Sciences, 10, 538–545.

Sweeny, T., Guzman-Martinez, E., Ortega, L., Grabowecky, M., & Suzuki, S. (2012). Sounds exaggerate visual shape. Cognition, 124, 194–200. doi:10.1016/j.cognition.2012.04.009

Walsh, V. (2003). A theory of magnitude: Common cortical metrics of time, space and quantity. Trends in Cognitive Sciences, 7, 483–488. doi:10.1016/j.tics.2003.09.002

Acknowledgments

This research was supported by NIH grants R01EY018197 and R01EY021184.

Electronic supplementary material

ESM 1 (DOCX 19.4 MB)

Sherman, A., Grabowecky, M. & Suzuki, S. Auditory rhythms are systemically associated with spatial-frequency and density information in visual scenes. Psychon Bull Rev 20, 740–746 (2013). https://doi.org/10.3758/s13423-013-0399-y

DOI: https://doi.org/10.3758/s13423-013-0399-y