Abstract

Several lines of research have shown that performing movements while learning new information aids later retention of that information, compared to learning by perception alone. For instance, articulated words are more accurately remembered than words that are silently read (the production effect). A candidate mechanism for this movement-enhanced encoding, sensorimotor prediction, assumes that acquired sensorimotor associations enable movements to prime associated percepts and hence improve encoding. Yet it is still unknown how the extent of prior sensorimotor experience influences the benefits of movement on encoding. The current study addressed this question by examining whether the production effect is modified by prior language experience. Does the production effect reduce or persist in a second language (L2) compared to a first language (L1)? Two groups of unbalanced bilinguals, German (L1) – English (L2) bilinguals (Experiment 1) and English (L1) – German (L2) bilinguals (Experiment 2), learned lists of German and English words by reading the words silently or reading the words aloud, and they subsequently performed recognition tests. Both groups showed a pronounced production effect (higher recognition accuracy for spoken compared to silently read words) in the first and second languages. Surprisingly, the production effect was greater in the second languages compared to the first languages, across both bilingual groups. We discuss interpretations based on increased phonological encoding, increased effort or attention, or both, when reading aloud in a second language.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

We constantly seek out new information when reading articles, making financial decisions, or following a course of study. How can we better retain new information, and are there specific conditions or strategies we can utilize? While we can readily learn by perceiving information, by reading the newspaper or listening to a lecture, we may improve our memory through movement, such as repeating a phone number aloud, taking notes during a lecture, or drawing (Allen et al., 2020; Damsgaard et al., 2020; Fernandes et al., 2018; MacLeod et al., 2010). The mechanisms underlying these learning effects are not yet well understood. One candidate mechanism may be motor-to-sensory predictions: performing movements is thought to elicit predictions of resulting sensory feedback (Mathias et al., 2021; Miall & Wolpert, 1996). Because accurate prediction depends on prior learning (Bar, 2007; Bubic et al., 2010), the benefit of movement on encoding may also depend on experience. When action-perception associations are in place, performing actions should prime associated percepts and may thereby aid their retention. Building on this notion, the question arises whether more extensive experience acquiring action-perception associations strengthens this cross-modal priming and thereby increases the benefit of movement on encoding. The current study examined this prediction.

Several lines of research suggest that performing movements while learning particular items improves retention of those items, compared to perceptual learning alone. One such robust phenomenon in the language domain, known as the production effect, occurs when participants first study lists of words by speaking them out loud or silently reading (or hearing) them, and subsequently show increased recognition accuracy for the spoken words compared to the silently studied words (Forrin et al., 2012; MacLeod et al., 2010; Mama & Icht, 2016; Murray, 1965; Ozubko et al., 2012). Notably, other types of production (i.e., movement) such as writing, typing, whispering, and gesturing, also appear to benefit word recognition compared to perceptual learning conditions involving either reading or hearing words (Forrin et al., 2012; Mama & Icht, 2016; Mathias et al., 2021). Movement has also been associated with memory enhancements in other contexts, involving different types of movements when learning different types of items. For instance, sets of instructions are better recalled after having acted or performed those instructions compared to having only heard or read the instructions (the enactment effect; Allen et al., 2020; Makri & Jarrold, 2021). In addition, drawing appears to benefit later word recognition and recall, compared to viewing or imagining word referents at learning (Fernandes et al., 2018; Wammes et al., 2019). In the studies described above, memory is typically tested in unimodal conditions that do not involve production, such as visual or auditory recognition. Overall this evidence points to a possible multi-modal encoding benefit: encoding may improve when more input (or output) channels are engaged (Mama & Icht, 2016; Wammes et al., 2019). Interestingly, recent evidence suggests that movement may confer a unique benefit to encoding. When particular component processes involved in drawing were isolated and compared, movement appeared to contribute relatively more to word memory than viewing or imagining the word referents. While word recognition was greatest after drawing, drawing blindly or simply tracing the outline of an image also increased recognition accuracy more than viewing or imagining images (Wammes et al., 2019). Thus, movement may make a unique contribution to multi-modal encoding.

The question remains: by what mechanisms could movement improve memory? Applying frameworks for action (movement) – perception coupling – one can assume that performing a movement should activate the corresponding percept that normally results from that movement, via motor-to-sensory predictions (Mathias et al., 2021; Miall & Wolpert, 1996). Reciprocal action-perception associations should additionally allow percepts, such as words or objects, to activate corresponding motor associations (Hommel, 2009; Koch et al., 2004; Pfister, 2019; Shin et al., 2010). Thus, movements are assumed to be tightly and reciprocally coupled to their corresponding percepts, which may help explain the effect of movement on memory. In accord with this idea, recent evidence suggests that motor regions may contribute directly to memory for items learned with movement. Participants learned novel words and their translations into their first language, with or without performing gestures that corresponded to the translations. In later memory tests, transcranial magnetic stimulation (TMS) over primary motor cortex slowed the recognition of novel word translations learned with gestures, compared to novel words learned without gestures (Mathias et al., 2021). The motor system may contribute to encoding via motor-to-sensory predictions (forward models), which may improve encoding and later retention.

From the viewpoint of a sensorimotor prediction hypothesis for movement-enhanced encoding, a necessary pre-condition for this hypothesis is that accurate predictions (for instance, accurate internal models) have been established through prior experience (Bar, 2007; Bubic et al., 2010; Guenther, 2006; Mathias et al., 2021; Miall & Wolpert, 1996). Forward models for example are assumed to be improved by learning movement-feedback contingencies, which then enable accurate motor-to-sensory predictions (Miall & Wolpert, 1996). Evidence from the music domain suggests that skilled performers with well-established audio-motor associations can use movement at encoding to enhance later recognition. Musicians showed greater recognition accuracy for melodies they had performed compared to those they had only listened to during learning (R. M. Brown & Palmer, 2012; Mathias et al., 2014). Additionally, musicians showed greater cortical responses to deviations within melodies they had performed at learning, compared to melodies they had only heard at learning (Mathias et al., 2014). Similarly, non-musicians who had practiced performing a musical sequence for 2 weeks showed greater cortical responses to sequence deviations compared to non-musicians who had learned the sequence by listening only (Lappe et al., 2008). These results suggest that when sensorimotor associations are established through experience, performing at learning may improve sensorimotor predictions, which may later aid recognition. The question is, could sensorimotor prediction help explain the production effect in the language domain? That is, do the acquired phoneme-motor associations of one’s native language play a role in the production effect?

A key question that has not yet been addressed is how much prior experience is needed for movement to benefit encoding. When participants have shown movement-related memory improvements, it could be assumed that they had prior experience to draw upon. The production effect has been demonstrated when participants studied words in their native language (Forrin et al., 2012; Kaushanskaya & Yoo, 2011; MacLeod et al., 2010; Ozubko & MacLeod, 2010; Zamuner et al., 2016), and when they learned non-words (and their referents) that followed the phonological rules of their native language (Kaushanskaya & Yoo, 2011; MacLeod et al., 2022). Notably, the production effect was not detected when participants learned non-words with an unfamiliar phonology (Kaushanskaya & Yoo, 2011). These findings raise the possibility that the production effect may rely on, or improve with, prior sensorimotor training, such as well-established phoneme-motor associations in one’s native language. The question then arises: how much experience may be necessary for movement to benefit memory? If sensorimotor prediction plays a role in movement-enhanced encoding, does the memory benefit increase with greater amounts of prior experience? In other words, does the production effect increase in one’s native language and decrease in a second language?

The current study examined the role of prior experience on the memory benefits of movement by examining the production effect in the context of bilingualism. Specifically, we examined whether the production effect increased when bilinguals encoded and recognized words in their first language compared to words in their second language. Importantly, we focused on unbalanced bilinguals whose experience in their first language was relatively greater than that of their second language. In two experiments, two independent groups of unbalanced bilinguals, German-English bilinguals (Experiment 1) and English-German bilinguals (Experiment 2), studied lists of German or English words (learning tasks) and later recognized words from the lists. Each participant performed a learning task followed by a recognition task twice: once with only German words and once with only English words. During learning, participants were presented with words on a screen one at a time, and they either read them silently or read them while also speaking them out loud. In a subsequent recognition task, participants were presented with new and old words, and responded “yes” or “no” according to whether they recognized each word or not. We predicted higher recognition accuracy for words that were spoken compared to those that were silently read (a production effect). Crucially, we also predicted a larger difference between spoken and silently read words in participants’ first language (L1) compared to their second language (L2), on the premise that the production effect may be influenced by sensorimotor predictions that are experience-dependent. Note that we here assume that “experience” can include the influence of both time (amount of exposure or practice) and age (sensitive periods for language acquisition): a second language may be practiced less and learned at a later age compared to a first language.

Experiment 1

Method

Participants

A sample size of N = 57 per experiment was estimated from an a priori power analysis. Because the forward model perspective does not make explicit assumptions about effect sizes, we instead estimated an effect size of η2p = .16 based primarily on a reported interaction between spoken versus silent encoding of linguistic items (non-words) and the familiarity of the material (familiar vs. unfamiliar phonology) (Kaushanskaya & Yoo, 2011), similar to the planned analysis for the current study. Power analysis using an alpha level of 0.05 and a power level of 0.80 suggested a sample size of N = 57. To verify participants’ knowledge of German and English we administered the LexTALE word identification task in each language (Lemhöfer & Broersma, 2012) in addition to a language background questionnaire (see below).

Sixty-six German-English bilingual volunteers from the RWTH Aachen University student community participated in the experiment and received course credits as compensation. Eight participants were excluded: two reported that they were not native German speakers, one did not confirm that they spoke English, one performed below a pre-determined 75% accuracy cutoff on the German LexTALE task, one performed below a pre-determined 50% accuracy cutoff on the English LexTALE task, and three performed more than 5% of the experiment trials incorrectly (incorrectly speaking or silently reading on a given trial). The final sample consisted of 58 participants, all of whom reported to be native German speakers with English as a second language (note that many participants spoke multiple languages, see Table 1), and they reported having normal or corrected-to-normal vision and to being neurologically healthy. The sample included 51 females and 53 right-handed participants with a mean age of 21.3 years (SD = 3.73, range 18–37 years). See Table 1 (German L1) for a summary of participants’ language backgrounds. Participants provided informed consent, and the study procedures were conducted according to the 1964 Declaration of Helsinki and its later amendments.

Materials

Stimuli consisted of words that were drawn from a pool of 120 English nouns and 120 German nouns. The English pool was the same 120 words listed in the Appendix of MacDonald and MacLeod (MacDonald & MacLeod, 1998) and later used in the experiments reported by MacLeod and colleagues (MacLeod et al., 2010). English nouns were five to ten letters long and had frequencies greater than 30 per million (see MacLeod et al., 2010). The German words were selected from the SUBTLEX-DE database (Brysbaert et al., 2011) such that they matched the English words in length and in mean log frequency, as recommended by Brysbaert and colleagues (Brysbaert et al., 2011). The words presented to each participant in each language were drawn randomly from each pool (see below).Footnote 1 In addition, items for a practice task consisted of the German words for the numbers 1 through 10.

Procedure

The experiment was conducted online using Gorilla (gorilla.sc). Participants used their own computers to complete the study and were required to use a laptop or a desktop with a microphone. All instructions were presented in German, except for the instructions for the English LexTALE and the instructions for the English learning and recognition tasks. Before beginning the experiment, participants were asked to test their microphone by making a short recording of their voice and playing it back. Participants were asked to exit the experiment if the recording did not work. All vocal responses during the experiment were recorded by the participant’s microphone.

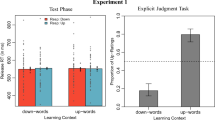

Participants first completed two word-identification tasks (LexTALE task), the first one in German and the second one in English. On each trial participants were presented with a string of letters, and they were instructed to decide whether it was an existing word in the respective language by pressing the “J” key if they thought it was an existing word (even if they did not know its meaning) and the “K” key if they thought it was not an existing word (or were not sure). Participants were given 5 seconds (s) to respond on each trial. Each task included 60 trials, half of which were words and half of which were non-words, presented in a pseudorandom order.

Participants then completed a brief practice task in which ten German number words were presented one at a time in either blue or white, in a pseudorandom order, against a grey background. Participants were instructed to speak aloud the words presented in blue, and to silently read the words presented in white. Each trial began with a blank screen shown for 500 milliseconds (ms), followed by the presentation of a word in either blue or white in the center of the screen, at which point the microphone began recording. Each word remained on-screen for 2 s, after which the recording stopped and the next trial began automatically. The aim of the practice task was to ensure that participants understood when to speak aloud and when to remain silent.

The main part of the experiment consisted of a learning task followed by a recognition task, both of which were completed once with only English words and once with only German words, the order of which was counter-balanced across participants. In other words, each participant completed the learning and recognition task first in one language, and then completed the learning and recognition task again in the other language. The procedure of the learning and recognition tasks was modelled closely after MacLeod and colleagues (MacLeod et al., 2010) in order to replicate the classical production effect and to facilitate comparison between the present and previous results. For each participant and for each language (German and English), 80 words were randomly selected from the 120-word pool. Each of those 80 words were then randomly divided into two sets of 40 words, such that 40 words were presented in blue and 40 words were presented in white during the learning task, in a random order. In addition, 20 words were then randomly selected from each set of 40 words, and an additional 20 words were randomly selected from the remaining 40 words that were not presented at learning. These 60 words were presented in yellow during the recognition task.

During the learning task, each trial began with a blank screen presented for 500 ms, followed by the presentation of a blue or white word against a grey background in the center of the screen, at which point the microphone began recording. Each word remained on-screen for 2 s, after which the recording stopped and the next trial began automatically. The learning task consisted of 80 trials. The recognition task began immediately after the learning task, after a short set of instructions. During the recognition task, each trial began with a blank screen presented for 500 ms, followed by the presentation of a yellow word against a grey background in the upper part of the screen and two response buttons, one labelled “Ja” or “Yes” and one labelled “Nein” or “No” (for the German or English recognition task, respectively), in the lower part of the screen. The word remained on-screen until the participant clicked with their mouse on one of the response buttons, after which the next trial began immediately. The recognition task consisted of 60 trials.

Participants ended the experiment by answering questions related to their language background and demographic information, and they were asked to verify whether they were neurologically healthy. The entire experiment lasted approximately 30 min.

Audio recordings from each learning task trial for each participant were coded offline by hand according to whether participants correctly remained silent or spoke aloud according to the instructions presented during the task. For any trial that did not comply with the instructions, the corresponding item for that participant was discarded from further analysis.Footnote 2

Results

Recognition

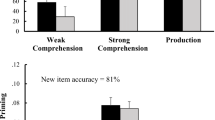

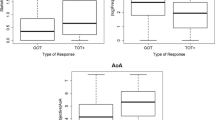

Recognition accuracy was assessed by calculating both hit rates as well as d-prime scores (hit rate minus false alarm rate, both z-normalized) in order to account for possible response bias, similar to previously reported procedures (Fernandes et al., 2018; Wammes et al., 2019). Hit rates and false alarm rates are reported in Table 2. A 2 (Production: Spoken vs. Silent) × 2 (Language: L1 (German) vs. L2 (English)) within-subjects ANOVA on hit rates showed main effects of Production (Spoken > Silent, F(1, 57) = 203.99, p < .001, η2G = .418 , η2p = .78) and Language (English (L2) > German (L1), F(1, 57) = 18.63, p < .001, η2G = .043 , η2p = .25), and no interaction (F(1, 57) = 2.02, p = .161, η2G = .005, η2p = .03). A second 2 × 2 within-subjects ANOVA (with the same fixed factor structure) on d-prime scores showed main effects of Production (Spoken > Silent, F(1, 57) = 95.70, p < .001, η2G = .187, η2p = .63) and Language (English (L2) > German (L1), F(1, 57) = 21.19, p < .001, η2G = .089, η2p = .27). In addition, a Production by Language interaction was shown (F(1, 57) = 14.86, p < .001, η2G = .025, η2p = .21) such that recognition accuracy was relatively greater when speaking English (L2) words aloud compared to speaking German (L1) words aloud (Spoken English: M = 3.28, SE = 0.275, 95%CI = [2.733 3.83], Spoken German: M = 1.99, SE = 0.157, 95%CI = [1.672 2.30], difference = 1.296, SE = 0.263), t = 4.926, p < .0001, Hedge’s g = .72). The difference between recognition accuracy for English and German words that were silently read was relatively smaller (Silent English: M = 1.53, SE = 0.146, 95%CI = [1.236 1.82], Silent German: M = 1.12, SE = 0.101, 95%CI = [0.912 1.32], difference = 0.413, SE = 0.161, t = 2.562, p = .0131, Hedge’s g = .42) (see Fig. 1a).

Production by language: Experiment 1, Experiment 2, and Combined. Note. Effects of Production (Read Aloud = Spoken, vs. Read Silently = Silent) and Language (L1 vs. L2) on recognition accuracy (d-prime scores) for each language group, and across groups. (a) German – English bilinguals (Experiment 1). (b) English – German bilinguals (Experiment 2). (c) Both groups combined (L1 = German or English, L2 = German or English). In all panels (a, b, and c) marginal means are plotted and error bars represent 95% confidence intervals

Discussion

Experiment 1 showed a robust production effect in both a first and a second language. In addition, we observed that recognition accuracy (as measured by d-prime scores) was greater after speaking words aloud in a second language (English) compared to speaking words aloud in a first language (German). This result suggests a greater production effect in a second language compared to a first language, contrary to our initial prediction.

In order to generalize this finding both to a different bilingual group and to a larger and more diverse population (not limited to a German university), a second experiment presented the same task and stimuli to unbalanced English-German bilinguals recruited via Prolific, an online recruiting service available to people around the world. Based on the findings of Experiment 1, we predicted that English-German bilinguals would show a greater production effect for German words (a second language) compared to English words (their first language).

Experiment 2

Method

Participants

Eighty English-German bilingual volunteers participated in the study via Prolific (prolific.co) and received monetary compensation (£3.75 total, approximately £7.5/h). Pre-screening criteria limited online recruitment to individuals who had upon registration with Prolific declared themselves to be between the ages of 18 to 35 years, to have normal or corrected-to-normal vision, and to be both native English speakers and fluent in German. After completing the study, participants were asked to report their age, vision correction, and if they were neurologically healthy. A total of 23 participants were excluded (29%) based on pre-determined criteria: one participant did not follow the task instructions on more than 5% of the trials, nine participants did not report that they were neurologically healthy, five participants reported that they were not native English speakers, seven participants scored below 50% on the German LexTALE, and one participant scored well below 75% on the English LexTALE (63%). Because of the high attrition rate, one participant was included who scored very close to the English LexTALE cutoff (73%) and met all other eligibility criteria. This yielded a final sample of N = 57, all of whom reported that they were native English speakers with German as a second language (note that some participants spoke multiple languages, see Table 1), and they reported having normal or corrected-to-normal vision and to be neurologically healthy. The sample included 35 females and 49 right-handed participants with a mean age of 26.4 years (SD = 4.88, range 19–35 years). See Table 1 (English L1) for a summary of participants’ language background. Participants provided informed consent, and the study procedures were conducted according to the 1964 Declaration of Helsinki and its later amendments.

Materials

The same set of 120 English and 120 German nouns were used. For the practice task, the English words for the numbers 1 through 10 were presented.

Procedure

The experiment was conducted online using Gorilla. All aspects of the procedure were identical to those of Experiment 1, with the following exceptions. All instructions were presented in English, except for the instructions for the German LexTALE and the instructions for the German learning and recognition tasks. Participants completed the English LexTALE followed by the German LexTALE.

Results

Recognition

Hit rates and false alarm rates are reported in Table 3. A 2 (Production: Spoken vs. Silent) × 2 (Language: L1 (English) vs. L2 (German)) within-subjects ANOVA on hit rates showed main effects of Production (Spoken > Silent, F(1, 56) = 313.35, p < .001, η2G = .558 , η2p = .85) and Language (German (L2) > English (L1), F(1, 56) = 4.88, p = .031, η2G = .012 , η2p = .08), and no interaction (F(1, 56) = 0.12, p = .735, η2G < .001 , η2p = .002). A second 2 × 2 within-subjects ANOVA (with the same fixed factor structure) on d-prime scores showed a main effect of Production (Spoken > Silent, F(1, 56) = 112.07, p < .001, η2G = .217, η2p = .67), and no main effect of Language (F(1, 56) = 2.22, p = .141, η2G = .013, η2p = .04). A near-significant Production by Language interaction was shown (F(1, 56) = 3.74, p = .058, η2G = .006, η2p = .06), such that recognition accuracy was marginally greater when speaking aloud in German compared to speaking aloud in English (Spoken German: M = 3.28, SE = 0.305, 95%CI = [2.671 3.89], Spoken English: M = 2.67, SE = 0.216, 95%CI = [2.239 3.10], difference = 0.611, SE = 0.339), t = 1.804, p = .0766, Hedge’s g = .30), whereas there was little difference in recognition accuracy between silently reading in German versus English (Silent German: M = 1.35, SE = 0.158, 95%CI = [1.031 1.66], Silent English: M = 1.22, SE = 0.141, 95%CI = [0.942 1.51], difference = 0.123, SE = 0.195), t = 0.628, p = .5323, Hedge’s g = .11) (see Fig. 1b).

Comparison of Experiments 1 and 2

In order to assess the production effect in a first versus a second language independently of language, we directly compared the two language groups. A 2 (Production: Spoken vs. Silent) × 2 (Language: L1 vs. L2) × 2 (Group: native German speakers vs. native English speakers) mixed ANOVA on hit rates showed main effects of Production (Spoken > Silent, F(1, 113) = 516.00, p < .001, η2G = .493 , η2p = .82) and Language (L2 > L1, F(1, 113) = 21.13, p < .001, η2G = .025, η2p = .16), and a Production by Group interaction (F(1, 113) = 11.57, p < .001, η2G = .021, η2p = .09) such that the hit rate for silently read words was greater for the German speakers (M = 63.7, SE = 1.98, 95%CI = [59.8 67.7]) than for the English speakers (M = 56.8, SE = 2.00, 95%CI = [52.9 60.8]) (difference = 6.91, SE = 2.81, t(113) = 2.456, p = .0156, Hedge’s g = .46). The Production by Language interaction did not reach significance (F(1, 113) = 1.69, p = .197, η2G = .002, η2p = .01). A second 2 × 2 × 2 mixed ANOVA (with the same fixed factor structure) on d-prime scores showed main effects of Production (Spoken > Silent, F(1, 113) = 207.90, p < .001, η2G = .202, η2p = .65) and Language (L2 > L1, F(1, 113) = 15.77, p < .001, η2G = .04, η2p = .12). In addition, a Production by Language interaction was shown (F(1, 113) = 16.21, p < .001, η2G = .013, η2p = .13), such that recognition accuracy was relatively greater after speaking aloud in one’s L2 compared to speaking aloud in one’s L1 (Spoken L2: M = 3.28, SE = .2051, 95%CI = [2.876 3.69], Spoken L1: M = 2.33, SE = .1331, 95%CI = [2.065 2.59], difference = 0.964, SE = 0.214), t = 4.455, p < .0001, Hedge’s g = .50), compared to silently reading in one’s L2 versus L1 (Silent L2: M = 1.44, SE = .1073, 95%CI = [1.225 1.65], Silent L1: M = 1.17, SE = .0867, 95%CI = [0.998 1.34], difference = 0.268, SE = 0.126, t = 2.117, p = .0364, Hedge’s g = .26) (see Fig. 1c). These results were additionally replicated using a generalized linear mixed effects model analysis (see Appendix 1).

Discussion

Experiment 2 showed a robust production effect in both a first and a second language, similar to Experiment 1. In addition, we observed that recognition accuracy (as measured by d-prime scores) was marginally greater after speaking words aloud in a second language (German) compared to doing so in a first language (English), in an independent group of unbalanced bilinguals. Thus, the English-German bilinguals showed a similar, though less pronounced, L2 advantage for production. The less-pronounced L2 production effect may have been due to greater L2 background variability (note, e.g., the standard deviations in Table 1) or to other sources of variability that were present due to sampling from a larger population. A direct comparison of the two language groups suggested a consistent pattern across the two groups: speaking aloud yielded greater recognition accuracy (d-prime) in a second language (German or English) compared to a first language (German or English). Additionally, hit rates for all silently read words were higher for the native German speakers compared to the native English speakers, possibly due to different response biases between the two groups.

General discussion

Summary of findings

We observed evidence that the production effect may be greater in a second language compared to a first language. Bilinguals showed higher recognition accuracy for words they had spoken compared to words they had silently read, replicating the production effect. Furthermore, recognition accuracy for spoken words was greater in a second language (L2) compared to a first language (L1). This pattern was generally consistent across two language groups: German-English bilinguals and English-German bilinguals (all performing the same task with the same stimuli). Below we consider how domain-specific (i.e., psycholinguistic) and domain-general processes could contribute to the findings.

Psycholinguistic processes

One way to interpret the results is to consider whether phonological processing plays a role in the production effect. Reading in a second language may have involved transforming written words (orthographic form) into the corresponding phonemes (sounds) and then into meaning (semantics), while reading in a first language may have involved associating written words directly with their meaning (Coltheart et al., 2001; Jobard et al., 2003; Seidenberg, 2005; Seidenberg & McClelland, 1989). Some evidence suggests that sound-meaning associations may be utilized less with greater reading experience (Wise Younger et al., 2017). In early childhood, phonological abilities and activation in regions implicated in phonological processing (e.g., inferior parietal regions) were associated with later orthographic abilities (Sprenger-Charolles et al., 2003) and reading improvements (Wise Younger et al., 2017). Adults learning a second language also showed inferior parietal activation, which additionally correlated with proficiency gains (Barbeau et al., 2017). Unbalanced bilinguals showed greater activation in brain regions involved in articulation and orthographic-phonological associations (inferior frontal gyrus, premotor cortex, fusiform gyrus) when reading out loud in their second language compared to their first language (Berken et al., 2015). If reading in a second language relies more on phonological processing than in a first language, articulating L2 words might prime phonological associations, which may increase the production effect. However, from this perspective one could also assume that articulation is redundant to phonological processing in a second language and thus may not offer a novel encoding process.

Contrary to the notion that phonological processing may decrease when reading in a first language, some evidence suggests that skilled readers are influenced by phonological features of words (Van Orden, 1987). If we assume then that reading in a first language could utilize phonological processing to an equal or even greater extent than a second language, we could suppose that articulation offers a unique or non-redundant form of processing to L2 reading that in turn benefits encoding. Thus, remaining questions include: (1) the degree to which a second language relies on phonological processing, (2) whether articulation complements or primes phonological codes, and (3) whether phonology contributes to the production effect. To date there is some evidence to support a role of phonological processing in the production effect. An fMRI study showed that speaking words aloud at encoding increased response in both somatosensory and auditory regions (e.g., planum temporale) implicated in phonological processing, compared to either silently reading or speaking aloud a control word (“check”), and these regions correlated with improved recollection (Bailey et al., 2021). At the same time, a recent study found little to no modulation of the production effect when phonologically similar distractors were present at recognition (Fawcett et al., 2022). Further work will be needed to answer these questions.

Domain-general processes

Another way to interpret the results is to consider the role of domain-general processes, for example the cognitive control requirements of articulating in one’s first versus second language. One possibility is that reading or remembering words in a second language is less demanding compared to a first language. Some research suggests that recognition accuracy is higher for words in a second language compared to a first language (Durgunoǧlu & Roediger, 1987; Francis et al., 2018; Francis & Gutiérrez, 2012), which we replicated as main effects in the current study. It was proposed that L2 words may be less susceptible to contextual or semantic competition during memory retrieval. A similar interpretation has been used to explain superior recognition of low-frequency compared to high-frequency words (Francis & Strobach, 2013). If we apply this interpretation to the current findings, we still need to explain the increased production effect in a second language, thus an additional mnemonic process would need to be assumed.

Additionally, speaking out loud in a second language may be more demanding than speaking out loud in a first language, which may lead to a memory advantage. For instance, the desirable difficulty perspective posits that learning outcomes should improve when greater cognitive effort is applied at encoding, provided that the learning task is not overly challenging (Bjork & Bjork, 2019, 2020; Eskenazi & Nix, 2021). Consistent with this idea, the production effect was amplified when participants spoke words out loud following a short retention delay after reading the words (Mama & Icht, 2018). Delayed vocal production at encoding resulted in greater recall accuracy compared to both silent reading and immediate vocal production at encoding. Additionally, delayed vocal production resulted in greater recall accuracy when the word was presented only once before delayed production, compared to when the word was presented again (unpredictably) at production. The authors attributed recall improvements to the increased difficulty of encoding items by both reading and maintaining items in working memory, compared to only reading (Mama & Icht, 2018). According to this perspective, speaking in a second language may have improved recognition memory due to its difficulty relative to speaking in a first language or reading silently in a second language. Further work is needed to clarify the processes (e.g., arousal) that contribute to memory-enhancing difficulty.

One possibility is that increased attention to L2 words at encoding improved later retention, particularly when they were spoken. For example, word recall improved when participants made more errors while pronouncing words at encoding, which may have led to increased attentional monitoring (Phaf & Wolters, 1993). Other work suggested that the production effect may decrease in the presence of distraction: the production effect failed to be detected when amplitude-fluctuating background noise or background speech was presented during encoding by reading aloud or silently, but the effect remained in the presence of steady-state background noise (Mama et al., 2018). In the current study, it could be that L2 words increased attention when they were spoken, compared to silently reading them or compared to speaking L1 words. Further work would be required to clarify when and how attention is engaged during the process of speaking in a second language. Speaking may increase the salience of L2 words, if, for example, articulation or auditory feedback captures (orients) attention. Speaking in a second language may additionally increase the monitoring of speech output (e.g., Phaf & Wolters, 1993). Additionally, attention may be engaged prior to speaking. Recent work examining electrophysiological signatures of the production effect showed a P3b response increase during encoding when participants saw the instruction to speak out loud (prior to speaking), compared to seeing the instruction to remain silent (Hassall et al., 2016; Zhang et al., 2023) or to say a control word (“check”) (Zhang et al., 2023). The authors suggested that speech preparation, involving attention or other preparatory processes, may play a role in the production effect (Zhang et al., 2023). It would be interesting to see if these findings extend to a second language.

Based on the available evidence, we suggest that both psycholinguistic and domain-general processes provide plausible explanations for the current results and both merit further investigation. The influence of bilingualism on the production effect raises further questions about the role of language production processes in memory. Neuroimaging evidence is consistent with a role for phonological processes in the production effect (Bailey et al., 2021) and in second-language reading (Berken et al., 2015), yet some work also calls this role into question (e.g., Fawcett et al., 2022). In addition, it is reasonable to assume that speaking in a first and second language have different cognitive demands that could in turn impact both language production and encoding. For example, phonological processing when reading in a second language could involve directing attention to phonological planning or output. A combination of attentional and phonological processes may also be helpful for explaining seemingly contradictory findings, such as a production effect increase when singing (Quinlan & Taylor, 2019), but a decrease when speaking as a popular character (Wakeham-Lewis et al., 2022). Considering multiple processes (Zhang et al., 2023) may help advance our understanding of the memory benefits of production.

The production effect and expertise

The current study suggests that the production effect does not depend on extensive lifelong sensorimotor experience, such as the experience that underlies one’s first language. Previous work suggests that some experience is still necessary (see, e.g., Lappe et al., 2008). The production effect has been shown for novel words and novel word referents after training, when those novel words used native-language phonology. English speakers more accurately recognized English-sounding non-words (MacLeod et al., 2022) or their referents (English words or images) (Kaushanskaya & Yoo, 2011; Zamuner et al., 2016) when the non-words were spoken aloud compared to read silently. When non-words were phonologically unfamiliar, no increase in referent recognition after speaking aloud was detected (Kaushanskaya & Yoo, 2011). Interestingly, performing gestures helped people learn novel words with a less-familiar (non-native) phonology. Participants more accurately recognized the novel word translations they had studied while performing gestures corresponding to the referents compared to those they studied while viewing pictures of the referents (Mathias et al., 2021). Thus, while some experience may be critical for a production effect, extensive experience does not seem to be necessary. Whether there is an optimal level of experience remains to be seen.

Limitations

It is important to note that first and second languages may be processed differently as a function of either time-dependent factors (exposure/practice) or sensitive periods for language acquisition. For example, when unbalanced bilinguals were matched on language proficiency to balanced (simultaneous) bilinguals, language processing differences were still seen between the groups (e.g., greater phonologically relevant neural activation when reading in a second language) (Berken et al., 2015). In the current study we cannot disentangle the influences of acquisition age and years of exposure or practice to the L2 production effect.

To the best of our knowledge, the current results are among the first to report a production effect outside of participants’ native language, and this potentially involves pronunciation challenges. We focused our task instructions and data inclusion on task compliance: we did not provide any specific instructions for pronunciation, and responses were included if they complied with instructions to speak or remain silent. We expected pronunciations to differ greatly across participants, which precluded adopting strict pronunciation criteria. We also reasoned that pronunciation errors should work against the production effect, as words pronounced differently might be difficult to recognize later, which would mean that our reported effect sizes may be conservative.Footnote 3

Another limitation of this study is that we cannot draw strong conclusions about how language proficiency influences the production effect at an individual level. That is, the language background information and the LexTALE scores we collected were used only to establish that the groups as a whole were relatively unbalanced in terms of average L1 and L2 proficiency. Although the LexTALE task is often used to assess proficiency, it showed low-to-modest correlations with a standardized language assessment protocol (Puig-Mayenco et al., 2023). The authors argued that the LexTALE task should not be used as a diagnostic measure of global proficiency for a given individual. Further work using comprehensive and diagnostic language testing would be needed to examine how individual proficiency would predict a single individual’s production effect.

Finally, it should be noted that the languages examined here, German and English, share phonological similarities. The extent to which an increased L2 production effect is influenced by the L1-L2 similarity is an open question. If phonological similarity decreases the L2 production effect (if speaking in an L2 was too easy here, for example), then we may have underestimated the effect size of an L2 production effect. A valuable next step would be to examine bilinguals who speak highly dissimilar languages. In addition, many participants spoke multiple languages (see Table 1), particularly Experiment 1 participants, who commonly spoke Dutch (related to German and English), French, or Spanish (both less related to German or English). Multilingualism could potentially increase or decrease the L2 production effect, for example by highlighting differences or similarities between German and English, respectively. Both possibilities are suggested by exploratory regression analyses on individual L2 production effect scores (Appendix 2): speaking one additional language related to a decreased L2 production effect in Experiment 1, but speaking three additional languages related to an increased L2 production effect in Experiment 2 (although only two participants spoke three additional languages in Experiment 2, see Appendix 2). The potential influences of multilingualism would be an interesting topic for further study.

Conclusion

We examined the role of prior experience (time- or age-dependent) on the encoding benefits of movement by examining the production effect in unbalanced bilinguals. We observed a greater production effect in a second language compared to a first language. Participants more accurately recognized words they had spoken aloud in their second language compared to their first language. This result was relatively consistent across two independent groups of unbalanced bilinguals (German-English and English-German bilinguals). We propose that psycholinguistic and/or domain-general processes may account for the findings. For example, reading in a second language may utilize phonological-motor associations, and/or increased effort or attention may be engaged in speaking in a second language. The findings here add further insight into the possible mechanisms behind encoding by movement (e.g., the production effect) by showing that less prior experience may amplify these memory benefits.

Data availability

The raw, anonymized datasets are freely available on psycharchives.org.

Code availability

The custom R code used for the current study are freely available on psycharchives.org.

Notes

A misspelling of one German word (President rather than Präsident) was corrected approximately halfway through Experiment 2 and was also removed from all analyses. There were also three German words that were plural versions of other words in the pool (e.g., “Menschen”, plural of “Mensch”). All results in Experiments 1 and 2 remained unchanged when these words were removed from the analyses.

Trials where participants correctly spoke aloud were included even if pronunciation was unclear or contained errors. We reasoned that pronunciation errors could lead to recognition errors, which should reduce the predicted production effect.

Additionally, a subset of participants in Experiment 2 (n = 51) were rated by bilingual coders as having overall “good,” “ok,” or “poor” L1 and L2 pronunciation. Visual examination showed that “good” L2 pronouncers (n = 34) showed a slightly numerically larger L2 production effect than “ok” L2 pronouncers (n = 15). Only two participants received a “poor” rating, and did so in both their L2 and their L1. Although the ratings are crude, they suggest that including a variety of pronunciations may indeed have decreased the average size of the L2 production effect.

References

Allen, R. J., Hill, L. J. B., Eddy, L. H., & Waterman, A. H. (2020). Exploring the effects of demonstration and enactment in facilitating recall of instructions in working memory. Memory and Cognition, 48(3), 400–410. https://doi.org/10.3758/s13421-019-00978-6

Bailey, L. M., Bodner, G. E., Matheson, H. E., Stewart, B. M., Roddick, K., O’Neil, K., Simmons, M., Lambert, A. M., Krigolson, O. E., Newman, A. J., & Fawcett, J. M. (2021). Neural correlates of the production effect: An fMRI study. Brain and Cognition, 152, 105757. https://doi.org/10.1016/j.bandc.2021.105757

Bar, M. (2007). The proactive brain: Using analogies and associations to generate predictions. Trends in Cognitive Sciences, 11(7), 280–289. https://doi.org/10.1016/j.tics.2007.05.005

Barbeau, E. B., Chai, X. J., Chen, J.-K., Soles, J., Berken, J., Baum, S., Watkins, K. E., & Klein, D. (2017). The role of the left inferior parietal lobule in second language learning: An intensive language training fMRI study. Neuropsychologia, 98, 169–176. https://doi.org/10.1016/j.neuropsychologia.2016.10.003

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1). https://doi.org/10.18637/jss.v067.i01

Berken, J. A., Gracco, V. L., Chen, J. K., Watkins, K. E., Baum, S., Callahan, M., & Klein, D. (2015). Neural activation in speech production and reading aloud in native and non-native languages. NeuroImage, 112, 208–217. https://doi.org/10.1016/j.neuroimage.2015.03.016

Bjork, R. A., & Bjork, E. L. (2019). Forgetting as the friend of learning: Implications for teaching and self-regulated learning. Advances in Physiology Education, 43(2), 164–167. https://doi.org/10.1152/advan.00001.2019

Bjork, R. A., & Bjork, E. L. (2020). Desirable difficulties in theory and practice. Journal of Applied Research in Memory and Cognition, 9(4), 475–479. https://doi.org/10.1016/j.jarmac.2020.09.003

Brown, R. M., & Palmer, C. (2012). Auditory-motor learning influences auditory memory for music. Memory & Cognition, 40(4), 567–578. https://doi.org/10.3758/s13421-011-0177-x

Brown, V. A. (2021). An introduction to linear mixed-effects modeling in R. Advances in Methods and Practices in Psychological Science, 4(1), 2515245920960351. https://doi.org/10.1177/2515245920960351

Brysbaert, M., Buchmeier, M., Conrad, M., Jacobs, A. M., Bölte, J., & Böhl, A. (2011). The word frequency effect. Experimental Psychology, 58(5), 412–424. https://doi.org/10.1027/1618-3169/a000123

Bubic, A., von Cramon, D. Y., & Schubotz, R. I. (2010). Prediction, cognition and the brain. Frontiers in Human Neuroscience, 4(March), 25. https://doi.org/10.3389/fnhum.2010.00025

Coltheart, M., Rastle, K., Perry, C., Langdon, R., & Ziegler, J. (2001). DRC: A dual route cascaded model of visual word recognition and reading aloud. Psychological Review, 108(1), 204–256. https://doi.org/10.1037/0033-295X.108.1.204

Damsgaard, L., Elleby, S. R., Gejl, A. K., Malling, A. S. B., Bugge, A., Lundbye-Jensen, J., Poulsen, M., Nielsen, G., & Wienecke, J. (2020). Motor-enriched encoding can improve children’s early letter recognition. Frontiers in Psychology, 11(June), 1–12. https://doi.org/10.3389/fpsyg.2020.01207

Durgunoǧlu, A. Y., & Roediger, H. L. (1987). Test differences in accessing bilingual memory. Journal of Memory and Language, 26(4), 377–391. https://doi.org/10.1016/0749-596X(87)90097-0

Elias, M., & Degani, T. (2022). Cross-language interactions during novel word learning: The contribution of form similarity and participant characteristics. Bilingualism: Language and Cognition, 25(4), 548–565. https://doi.org/10.1017/S1366728921000857

Eskenazi, M. A., & Nix, B. (2021). Individual differences in the desirable difficulty effect during lexical acquisition. Journal of Experimental Psychology: Learning, Memory, and Cognition, 47(1), 45–52. https://doi.org/10.1037/xlm0000809

Fawcett, J. M., Bodner, G. E., Paulewicz, B., Rose, J., & Wakeham-Lewis, R. (2022). Production can enhance semantic encoding: Evidence from forced-choice recognition with homophone versus synonym lures. Psychonomic Bulletin & Review, 29(6), 2256–2263. https://doi.org/10.3758/s13423-022-02140-x

Fernandes, M. A., Wammes, J. D., & Meade, M. E. (2018). The surprisingly powerful influence of drawing on memory. Current Directions in Psychological Science, 27(5), 302–308. https://doi.org/10.1177/0963721418755385

Forrin, N. D., MacLeod, C. M., & Ozubko, J. D. (2012). Widening the boundaries of the production effect. Memory and Cognition, 40(7), 1046–1055. https://doi.org/10.3758/s13421-012-0210-8

Francis, W. S., & Gutiérrez, M. (2012). Bilingual recognition memory: Stronger performance but weaker levels-of-processing effects in the less fluent language. Memory & Cognition, 40(3), 496–503. https://doi.org/10.3758/s13421-011-0163-3

Francis, W. S., & Strobach, E. N. (2013). The bilingual L2 advantage in recognition memory. Psychonomic Bulletin & Review, 20(6), 1296–1303. https://doi.org/10.3758/s13423-013-0427-y

Francis, W. S., Taylor, R. S., Gutiérrez, M., Liaño, M. K., Manzanera, D. G., & Penalver, R. M. (2018). The effects of bilingual language proficiency on recall accuracy and semantic clustering in free recall output: Evidence for shared semantic associations across languages. Memory, 26(10), 1364–1378. https://doi.org/10.1080/09658211.2018.1476551

Guenther, F. H. (2006). Cortical interactions underlying the production of speech sounds. Journal of Communication Disorders, 39(5), 350–365. https://doi.org/10.1016/j.jcomdis.2006.06.013

Hassall, C. D., Quinlan, C. K., Turk, D. J., Taylor, T. L., & Krigolson, O. E. (2016). A preliminary investigation into the neural basis of the production effect. Canadian Journal of Experimental Psychology / Revue Canadienne de Psychologie Expérimentale, 70(2), 139–146. https://doi.org/10.1037/cep0000093

Hommel, B. (2009). Action control according to TEC (theory of event coding). Psychological Research, 73(4), 512–526. https://doi.org/10.1007/s00426-009-0234-2

Jobard, G., Crivello, F., & Tzourio-Mazoyer, N. (2003). Evaluation of the dual route theory of reading: A metanalysis of 35 neuroimaging studies. NeuroImage, 20(2), 693–712. https://doi.org/10.1016/S1053-8119(03)00343-4

Kaushanskaya, M., & Yoo, J. (2011). Rehearsal effects in adult word learning. Language and Cognitive Processes, 26(1), 121–148. https://doi.org/10.1080/01690965.2010.486579

Koch, I., Keller, P., & Prinz, W. (2004). The Ideomotor approach to action control: Implications for skilled performance. International Journal of Sport and Exercise Psychology, 2(4), 362–375. https://doi.org/10.1080/1612197x.2004.9671751

Lappe, C., Herholz, S. C., Trainor, L. J., & Pantev, C. (2008). Cortical plasticity induced by short-term unimodal and multimodal musical training. The Journal of Neuroscience, 28(39), 9632–9639. https://doi.org/10.1523/JNEUROSCI.2254-08.2008

Lemhöfer, K., & Broersma, M. (2012). Introducing LexTALE: A quick and valid lexical test for advanced learners of English. Behavior Research Methods, 44(2), 325–343. https://doi.org/10.3758/s13428-011-0146-0

MacDonald, P. A., & MacLeod, C. M. (1998). The influence of attention at encoding on direct and indirect remembering. Acta Psychologica, 98(2–3), 291–310. https://doi.org/10.1016/s0001-6918(97)00047-4

MacLeod, C. M., Gopie, N., Hourihan, K. L., Neary, K. R., & Ozubko, J. D. (2010). The production effect: Delineation of a phenomenon. Journal of Experimental Psychology: Learning, Memory, and Cognition, 36(3), 671–685. https://doi.org/10.1037/a0018785

MacLeod, C. M., Ozubko, J. D., Hourihan, K. L., & Major, J. C. (2022). The production effect is consistent over material variations: Support for the distinctiveness account. Memory, 30(8), 1000–1007. https://doi.org/10.1080/09658211.2022.2069270

Makri, A., & Jarrold, C. (2021). Investigating the underlying mechanisms of the enactment effect: The role of action–object bindings in aiding immediate memory performance. Quarterly Journal of Experimental Psychology, 74(12), 2084–2096. https://doi.org/10.1177/17470218211019026

Mama, Y., Fostick, L., & Icht, M. (2018). The impact of different background noises on the production effect. Acta Psychologica, 185, 235–242. https://doi.org/10.1016/j.actpsy.2018.03.002

Mama, Y., & Icht, M. (2016). Auditioning the distinctiveness account: Expanding the production effect to the auditory modality reveals the superiority of writing over vocalising. Memory, 24(1), 98–113. https://doi.org/10.1080/09658211.2014.986135

Mama, Y., & Icht, M. (2018). Production on hold: Delaying vocal production enhances the production effect in free recall. Memory, 26(5), 589–602. https://doi.org/10.1080/09658211.2017.1384496

Mathias, B., Palmer, C., Perrin, F., & Tillmann, B. (2014). Sensorimotor learning enhances expectations during auditory perception. Cerebral Cortex, 25(March), 1–17. https://doi.org/10.1093/cercor/bhu030

Mathias, B., Waibel, A., Hartwigsen, G., Sureth, L., Macedonia, M., Mayer, K. M., & von Kriegstein, K. (2021). Motor cortex causally contributes to vocabulary translation following sensorimotor-enriched training. The Journal of Neuroscience, 41(41), 8618–8631. https://doi.org/10.1523/JNEUROSCI.2249-20.2021

Miall, R. C., & Wolpert, D. M. (1996). Forward models for physiological motor control. Neural Networks, 9(8), 1265–1279. https://doi.org/10.1016/S0893-6080(96)00035-4

Murray, D. J. (1965). Vocalization-at-presentation, auditory presentation and immediate recall. Nature, 207(5000), 1011–1012. https://doi.org/10.1038/2071011a0

Ozubko, J. D., Hourihan, K. L., & MacLeod, C. M. (2012). Production benefits learning: The production effect endures and improves memory for text. Memory, 20(7), 717–727. https://doi.org/10.1080/09658211.2012.699070

Ozubko, J. D., & MacLeod, C. M. (2010). The production effect in memory: Evidence that distinctiveness underlies the benefit. Journal of Experimental Psychology: Learning Memory and Cognition, 36(6), 1543–1547. https://doi.org/10.1037/a0020604

Pfister, R. (2019). Effect-based action control with body-related effects: Implications for empirical approaches to ideomotor action control. Psychological Review, 126(1), 153–161. https://doi.org/10.1037/rev0000140

Phaf, R. H., & Wolters, G. (1993). Attentional shifts in maintenance rehearsal. The American Journal of Psychology, 106(3), 353. https://doi.org/10.2307/1423182

Puig-Mayenco, E., Chaouch-Orozco, A., Liu, H., & Martín-Villena, F. (2023). The LexTALE as a measure of L2 global proficiency: A cautionary tale based on a partial replication of Lemhöfer and Broersma (2012). Linguistic Approaches to Bilingualism, 13(3), 299–314. https://doi.org/10.1075/lab.22048.pui

Quinlan, C. K., & Taylor, T. L. (2019). Mechanisms underlying the production effect for singing. Canadian Journal of Experimental Psychology / Revue Canadienne de Psychologie Expérimentale, 73(4), 254–264. https://doi.org/10.1037/cep0000179

R Core Team (2023). R: A language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org

Seidenberg, M. S. (2005). Connectionist models of word reading. Current Directions in Psychological Science, 14(5), 238–242. https://doi.org/10.1111/j.0963-7214.2005.00372.x

Seidenberg, M. S., & McClelland, J. L. (1989). A distributed, developmental model of word recognition and naming. Psychological Review, 96(4), 523–568. https://doi.org/10.1037/0033-295X.96.4.523

Shin, Y. K., Proctor, R. W., & Capaldi, E. J. (2010). A review of contemporary ideomotor theory. Psychological Bulletin, 136(6), 943–974. https://doi.org/10.1037/a0020541

Singmann, H., Bolker, B., Westfall, J., Aust, F., Ben-Shachar, M. (2023). afex: Analysis of factorial experiments. R package version 1.3-0. https://CRAN.R-project.org/package=afex

Sprenger-Charolles, L., Siegel, L. S., Béchennec, D., & Serniclaes, W. (2003). Development of phonological and orthographic processing in reading aloud, in silent reading, and in spelling: A four-year longitudinal study. Journal of Experimental Child Psychology, 84(3), 194–217. https://doi.org/10.1016/S0022-0965(03)00024-9

Van Orden, G. C. (1987). A ROWS is a ROSE: Spelling, sound, and reading. Memory & Cognition, 15(3), 181–198. https://doi.org/10.3758/BF03197716

Voeten, C. C. (2023). buildmer: Stepwise elimination and term reordering for mixed-effects regression. R package version 2.9. https://CRAN.R-project.org/package=buildmer

Wakeham-Lewis, R. M., Ozubko, J., & Fawcett, J. M. (2022). Characterizing production: The production effect is eliminated for unusual voices unless they are frequent at study. Memory, 30(10), 1319–1333. https://doi.org/10.1080/09658211.2022.2115075

Wammes, J. D., Jonker, T. R., & Fernandes, M. A. (2019). Drawing improves memory: The importance of multimodal encoding context. Cognition, 191(March 2018) 103955. https://doi.org/10.1016/j.cognition.2019.04.024

Wise Younger, J., Tucker-Drob, E., & Booth, J. R. (2017). Longitudinal changes in reading network connectivity related to skill improvement. NeuroImage, 158, 90–98. https://doi.org/10.1016/j.neuroimage.2017.06.044

Zamuner, T. S., Morin-Lessard, E., Strahm, S., & Page, M. P. A. (2016). Spoken word recognition of novel words, either produced or only heard during learning. Journal of Memory and Language, 89, 55–67. https://doi.org/10.1016/j.jml.2015.10.003

Zhang, B., Meng, Z., Li, Q., Chen, A., & Bodner, G. E. (2023). EEG-based univariate and multivariate analyses reveal that multiple processes contribute to the production effect in recognition. Cortex, 165, 57–69. https://doi.org/10.1016/j.cortex.2023.04.006

Acknowledgements

We thank Janina Koerner, Alexander Schnapka, Alma Paulick, and Freya Maren Lindemuth for their assistance in recruiting participants, piloting, and coding responses.

Funding

Open Access funding enabled and organized by Projekt DEAL. No funding was received for conducting this study.

Author information

Authors and Affiliations

Contributions

RMB and TCR conceptualized the study, RMB collected and analyzed the data, RMB wrote the manuscript, and TCR edited the manuscript.

Corresponding author

Ethics declarations

Conflicts of interest/competing interests

The authors have no relevant financial or non-financial interests to disclose.

Ethics approval

Because this study was non-invasive and was conducted with healthy adult volunteers with full legal capacity, no ethics approval was required. The study procedures were conducted in accordance with the ethical standards of the institutional and/or national research committee and as laid down in the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards.

Consent to participate

All individual participants gave their informed consent prior to their inclusion in this study.

Consent for publication

All individual participants gave their informed consent to the use of their data for publication.

Additional information

Open Practices Statement

The data and code for all experiments are available on psycharchives.org (data: https://doi.org/10.23668/psycharchives.14096, code: https://doi.org/10.23668/psycharchives.14097). None of the experiments were preregistered.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1

Generalized linear mixed effects modelling

In order to model both participants and stimuli (words) as random effects, we performed a generalized linear mixed effects modelling analysis on the combined data from Experiments 1 and 2. Response accuracy at recognition was modelled as a discrete outcome for each word individually (a correct or incorrect response to each item, correct = 1, incorrect = 0) using generalized linear mixed-effects modelling (glmer() function in the lme4 package, version 1.1.33 (Bates et al., 2015) in R, version 4.3.1 (R Core Team, 2023). We followed the procedure recommended by Brown (Brown, 2021).

First, the factors “Production (Spoken/Silent)” and “Language (L1/L2)” were dummy coded to facilitate interpretation of interactions (Spoken = 1, Silent = 0, L1 = 0, L2 = 1) and the factor “Group” was sum-coded such that the average across the two groups was centered on zero (“German L1 – English L2” = -0.5, “English L1 – German L2” = 0.5) in order to facilitate interpretation of an interaction averaged over levels of “Group” (see Brown, 2021).

Next, a maximal model was constructed using the maximal possible random effects structure, below, including all possible random-effect intercepts and slopes. Note that slopes that were not possible to estimate were omitted: a slope across “Group” cannot be estimated for each participant (“Group” is a between-subject factor), and a slope across “Language” cannot be estimated for each word (“Language” is a between-word factor). (Note that the code “production*language*group” below also includes all main effects and 2-way interactions.)

The above maximal model was submitted to the buildmer() function (“buildmer” package, version. 2.9 (Voeten, 2023) (as used in e.g., Elias & Degani, 2022)) in R, which finds the maximal feasible random effects structure that can be estimated by the data (that is capable of converging). Additionally, we forced the buildmer() function to include all possible fixed effects in the final model (saturated fixed effects), using the following code:

This yielded a final model (below) that included intercepts for participant and word and the slope for participant (see model summary in Table 4).

Likelihood ratio tests were then conducted on all fixed effects in the above model using the mixed() function (“afex” package in R, v. 1.3.0) (Singmann et al., 2023) using the following code:

Results showed that the Production by Language interaction contributed significantly to the model fit (see Table 5).

We additionally performed a generalized linear mixed effects model analysis for each Experiment separately, following the same procedure as above (without the fixed factor “Group”). The maximal model submitted to the buildmer() function (using a saturated fixed factor structure) was as follows:

For each Experiment, the maximal model capable of converging (which was then submitted to the mixed() function) included intercepts for participants and words:

Model summaries and likelihood ratio test results for each Experiment are reported in Tables 6, 7, 8 and 9 below.

Appendix 2

Exploratory hierarchical regression analyses of the L2 production effect

Exploratory hierarchical regression analyses were performed on the L2 (second language) production effect magnitude for each individual. L2 production effect scores for each individual were calculated by subtracting the “Silent” condition dprime score from the “Spoken” condition dprime score, both in the L2 only (Tables 10, 11 and 12). Difference scores were also calculated by subtracting L1 (first language) production effect magnitude (L1 Spoken minus L1 Silent) from the L2 production effect scores for each individual (Tables 13, 14 and 15). Regression analyses were conducted on each of the above scores separately for each Experiment (Tables 10, 11, 13, and 14) and for the two Experiments combined (Tables 12 and 15). Predictor variables included: (1) age of acquisition, years of study, and number of other languages spoken (block 1); (2) self-rated L2 speaking, understanding, reading, and writing and level (block 2); and (3) L2 LexTALE score and L1 LexTALE score (block 3). All continuous variables were zero-centered. Categorical variables were dummy-coded: “Number of other languages” (zero, one, two, or three other languages) was coded with reference to “zero” as Variable 1 (one = 1, otherwise = 0), Variable 2 (two = 1, otherwise = 0) and Variable 3 (three = 1, otherwise = 0), and “Self-rated L2 level” (A2, B1, B2, or C1) was coded with reference to “A2” as Variable 1 (B1 = 1, otherwise = 0 ), Variable 2 (B2 = 1, otherwise = 0), and Variable 3 (C1 = 1, otherwise = 0).

The regression models did not account for statistically significant proportions of the L2 production effect variance, and adding successive blocks of predictors did not significantly improve the models (Tables 10, 11, and 12). Only the first block of predictors in Experiment 2 accounted for a statistically significant proportion of the L2 minus L1 production effect variance, though adding successive blocks of predictors did not significantly improve the model (Table 14). In Experiment 1, speaking one additional language and self-rated L2 writing ability showed statistically significant negative and positive relationships, respectively, with L2 production effect magnitude (Table 10). In Experiment 2, speaking three additional languages showed a statistically significant positive relationship with L2 production effect magnitude (Table 11) and with L2 minus L1 production effect magnitude (Table 14), although this result should be interpreted with caution because only two participants in Experiment 2 spoke three additional languages.

All of the above predictor variable labels and other abbreviations apply to Tables 11, 12, 13, 14 and 15 below.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Brown, R.M., Roembke, T.C. Production benefits on encoding are modulated by language experience: Less experience may help. Mem Cogn 52, 926–943 (2024). https://doi.org/10.3758/s13421-023-01510-7

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13421-023-01510-7